Confidence in climate forecasts

Posted on 4 August 2010 by Kevin Judd

Guest post by Kevin Judd

Climate scientists are telling us that the earth's average temperature is going to rise 2 to 3 degrees over the next 50 to 100 years. How do they make this prediction? And why are they confident their prediction will be correct? Climate scientists make this prediction using a climate model. So what is a climate model?


Perhaps you have seen, or even had a ride on, one of those model steam trains. These are miniature working replicas of real steam engines. Climate models are the same: they are a working replica of the earth, only instead of being made of rock and water and other materials, they are made from mathematical equations.

These mathematical models are the basis of science and technology. There are models for how microwave ovens work; models for car engines and power stations; models for jet aircraft. Models allow scientists and engineers to build things that have never been built before, by testing how they will work before they are made. Models were used to build the rockets that took astronauts to the moon and back safely. Models allow scientists to predict complex things like the weather.

These models make correct predictions because they are based on general scientific principles, often referred to as "Laws", like the law of gravity. General scientific principles are important because they connect phenomena that are not obviously connected. For example, the principles of microwave ovens are related to the greenhouse effect. The principles of car engines and power stations are related to how the earth will warm up. The principles of aircraft are related to winds, storms, and ocean currents.

This interconnectedness gives scientific models great power. If the general principles of climate models were wrong, scientists would have known long ago: microwave ovens wouldn't work, aircraft wouldn't fly, weather couldn't be forecast.

Based on general principles alone, climate scientists have every reason to be confident in their predictions, because the principles have been well tested. Furthermore, the climate models of twenty years ago accurately forecast the temperature rise over the last 20 years. This successful prediction further validates the general principles and gives us confidence in climate models. On top of this, the climate models of today are much better than those of 20 years ago.

Of course there will always be some uncertainty about how the details of climate change will play out, but there is no doubt about the basic story: the earth's average temperature is going to rise 2 to 3 degrees over the next 50 to 100 years. Anyone who says otherwise either does not understand how science works or is being deliberately misleading.

In my next segment I'll consider what the consequences of this warming will be.

NOTE: this post is also being "climatecast" by Kevin Judd on RTR-FM 92.1 around 11.30 AM WAST today. You can listen to a streaming broadcast of RTR-FM online via http://www.rtrfm.com.au/listen.



In other words, the 2000s were actually warmer than one would predict based on the rate of warming over the preceding two decades.
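One way to check a statement like this is to fit a straight line to the annual temperature anomalies of the earlier period, extrapolate it forward, and see whether the later observations sit above or below it. The sketch below is only an illustration of that arithmetic: the function names are hypothetical, the choice of 1980 to 1999 as "the preceding two decades" is an assumption, and the numbers are synthetic placeholders rather than real measurements.

```python
from statistics import mean


def linear_trend(years, values):
    """Ordinary least-squares slope and intercept of values against years."""
    y_bar = mean(years)
    v_bar = mean(values)
    sxx = sum((y - y_bar) ** 2 for y in years)
    sxy = sum((y - y_bar) * (v - v_bar) for y, v in zip(years, values))
    slope = sxy / sxx
    return slope, v_bar - slope * y_bar


def excess_over_trend(fit_years, fit_values, test_years, test_values):
    """Mean of (observed minus extrapolated trend) over the test period."""
    slope, intercept = linear_trend(fit_years, fit_values)
    residuals = [v - (slope * y + intercept)
                 for y, v in zip(test_years, test_values)]
    return mean(residuals)


# Synthetic placeholder anomalies (NOT real data): a steady 0.02 degrees
# per year for the fitting period, then the same trend shifted up by 0.05.
fit_years = list(range(1980, 2000))
fit_values = [0.02 * (y - 1980) for y in fit_years]
test_years = list(range(2000, 2010))
test_values = [0.02 * (y - 1980) + 0.05 for y in test_years]

excess = excess_over_trend(fit_years, fit_values, test_years, test_values)
print(f"mean excess over extrapolated trend: {excess:+.3f}")
```

A positive result means the later decade was warmer than the earlier trend alone would suggest; a negative result means it was cooler. With a real temperature record substituted for the placeholders, the sign and size of the result depend on the dataset and the fitting period chosen.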

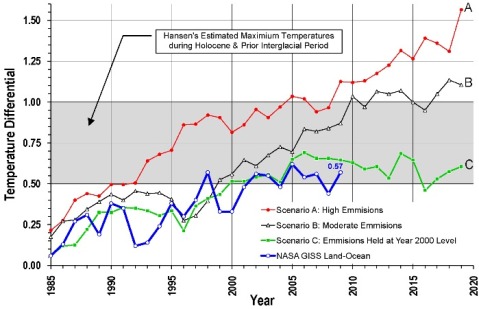

Figure 1: Scenarios A, B and C Compared with Measured NASA GISS Land-Ocean Temperature Data (after Hansen, 2006)

The blue line in Figure 1 denotes the NASA GISS Land-Ocean data, and Scenarios A, B and C describe various CO2 emission outcomes. Scenarios A and C are upper and lower bounds. Scenario A is “on the high side of reality” with an exponential increase in emissions. Scenario C has “a drastic curtailment of emissions”, with no increase in emissions after 2000. Scenario B is described as “most plausible” and is expected to be closest to reality. The original diagram can be found in Hansen (2006).

It is evident from Figure 1 that the best fit for actual temperature measurements is the emissions-held-at-year-2000-level Scenario C. This suggests that global warming has slowed down significantly when compared with the “most plausible” prediction Scenario B.
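A "best fit" judgment of this kind can be made quantitative rather than read off the figure by eye. The sketch below assumes root-mean-square error (RMSE) as the measure of fit, which is a choice made here and not something stated in the comment; the function names are hypothetical, and the scenario labels and values are synthetic placeholders, not the curves from Hansen (2006) or the GISS record.

```python
from math import sqrt


def rmse(predicted, observed):
    """Root-mean-square error between two equal-length series."""
    if len(predicted) != len(observed):
        raise ValueError("series must have the same length")
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return sqrt(sum(squared) / len(squared))


def best_fit(scenarios, observed):
    """Name of the scenario whose series has the lowest RMSE against observed."""
    return min(scenarios, key=lambda name: rmse(scenarios[name], observed))


# Synthetic placeholder series (NOT real projections or measurements).
scenarios = {
    "scenario_1": [0.30, 0.45, 0.60, 0.75],
    "scenario_2": [0.30, 0.40, 0.50, 0.60],
    "scenario_3": [0.30, 0.35, 0.40, 0.45],
}
observed = [0.31, 0.41, 0.48, 0.61]

for name, series in scenarios.items():
    print(name, round(rmse(series, observed), 3))
print("lowest RMSE:", best_fit(scenarios, observed))
```

The outcome of such a comparison is sensitive to the baseline period used to align the series and to the span of years included, so a single number should be read with those choices in mind.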

A similar study comparing HADCRUT3 with AR4 may be found here

CONCLUSIONS

It is evident that computer models over-predict global temperatures when compared with observed temperatures.

Global warming may not have stopped, but it is certainly following a trajectory that is much lower than that predicted by computer models. Indeed, it is following the zero-increase-in-emissions scenarios from the computer models.


From OSS Foundation


Comments