Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Posted on 4 October 2013 by dana1981

Earlier this week, I explained why IPCC model global warming projections have done much better than you think. Given the popularity of the Models are unreliable myth (coming in at #6 on the list of most used climate myths), it's not surprising that the post met with substantial resistance from climate contrarians, particularly in the comments on its Guardian cross-post. Many of the commenters referenced a blog post published on the same day by blogger Steve McIntyre.

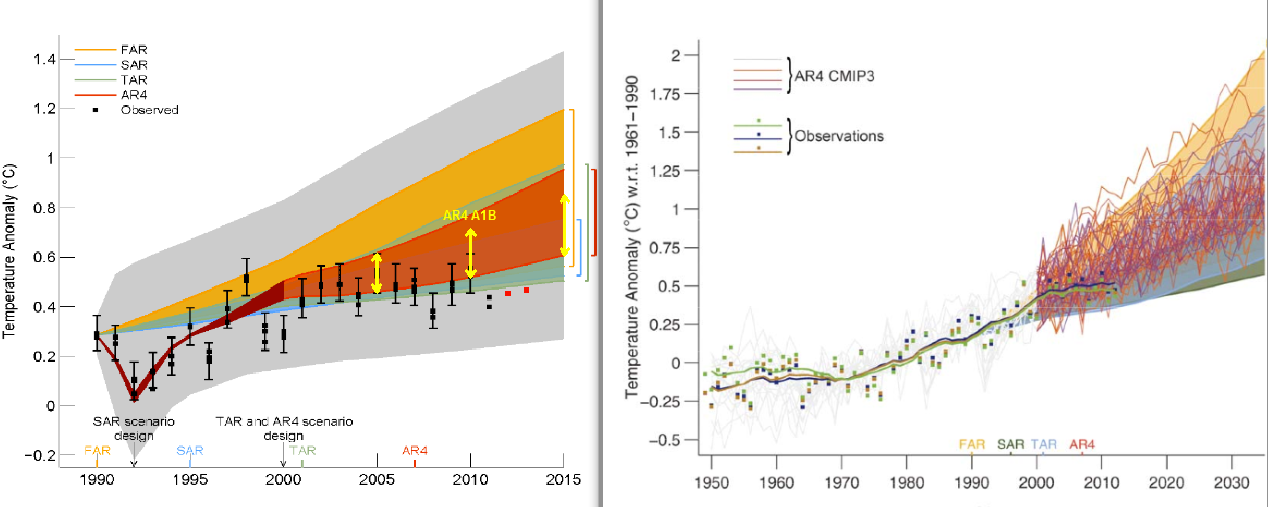

McIntyre is puzzled as to why the depiction of the climate model projections and observational data shifted between the draft and draft final versions (the AR5 report won't be final until approximately January 2014) of Figure 1.4 in the IPCC AR5 report. The draft and draft final versions are illustrated side-by-side below.

I explained the reason behind the change in my post. It's due to the fact that, as statistician and blogger Tamino noted 10 months ago when the draft was "leaked," the draft figure was improperly baselined.

IPCC AR5 Figure 1.4 draft (left) and draft final (right) versions. In the draft final version, solid lines and squares represent measured average global surface temperature changes by NASA (blue), NOAA (yellow), and the UK Hadley Centre (green). The colored shading shows the projected range of surface warming in the IPCC First Assessment Report (FAR; yellow), Second (SAR; green), Third (TAR; blue), and Fourth (AR4; red).

What's Baselining and Why is it Important?

Global mean surface temperature data are plotted not in absolute temperatures, but rather as anomalies, which are the difference between each data point and some reference temperature. That reference temperature is determined by the 'baseline' period; for example, if we want to compare today's temperatures to those during the mid to late 20th century, our baseline period might be 1961–1990. For global surface temperatures, the baseline is usually calculated over a 30-year period in order to accurately reflect any long-term trends rather than being biased by short-term noise.
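To make the idea concrete, here is a minimal Python sketch of turning absolute temperatures into anomalies relative to a baseline period. The temperature series is synthetic, and the function names (`baseline_mean`, `to_anomalies`) are illustrative assumptions, not the procedure used by NASA, NOAA, the Hadley Centre, or the IPCC.

```python
def baseline_mean(temps_by_year, start, end):
    """Mean temperature over the inclusive baseline period [start, end]."""
    values = [t for year, t in temps_by_year.items() if start <= year <= end]
    if not values:
        raise ValueError("no data inside the baseline period")
    return sum(values) / len(values)


def to_anomalies(temps_by_year, start, end):
    """Subtract the baseline-period mean from every data point."""
    reference = baseline_mean(temps_by_year, start, end)
    return {year: t - reference for year, t in temps_by_year.items()}


# Synthetic series (NOT real data): 14.0 C in 1961, rising 0.01 C per year.
temps = {year: 14.0 + 0.01 * (year - 1961) for year in range(1961, 2013)}

# Anomalies relative to a 1961-1990 baseline.
anomalies = to_anomalies(temps, 1961, 1990)
print(f"2012 anomaly vs 1961-1990: {anomalies[2012]:+.3f} C")
```

By construction, the anomalies average to zero over the baseline period itself, which is what "relative to 1961–1990" means.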

It appears that the draft version of Figure 1.4 did not use a 30-year baseline, but rather aligned the models and data to match at the year 1990. How do we know this is the case? At the time, 1990 was an especially hot year: it was the hottest year on record up to that date, and remained the hottest on record until 1995. Consequently, if the models and data were properly baselined, the 1990 data point would be located toward the high end of the range of model simulations. In the draft IPCC figure, that wasn't the case – the models and data matched exactly in 1990, suggesting that they were likely baselined using just a single year.
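A rough sketch of why single-year alignment is visually deceiving, using made-up numbers rather than the actual figure data: the "model" and "observations" below share exactly the same underlying trend, but the observations have one unusually warm year in 1990. The series, the size of the 1990 spike (0.15°C), and the helper names are all assumptions for illustration.

```python
years = list(range(1961, 2013))

# Hypothetical smooth model series and observations with a warm 1990.
model = {y: 0.015 * (y - 1961) for y in years}
obs = {y: 0.015 * (y - 1961) + (0.15 if y == 1990 else 0.0) for y in years}


def rebaseline(series, base_years):
    """Express a series as anomalies relative to its mean over base_years."""
    reference = sum(series[y] for y in base_years) / len(base_years)
    return {y: value - reference for y, value in series.items()}


def gap(base_years, year):
    """Observations minus model in a given year, after both are rebaselined."""
    return rebaseline(obs, base_years)[year] - rebaseline(model, base_years)[year]


# Aligning on 1990 alone pushes all later observations down by the full spike.
single_year_gap = gap([1990], 2012)

# A 30-year baseline dilutes the spike to one thirtieth of its size.
thirty_year_gap = gap(list(range(1961, 1991)), 2012)

print(f"2012 obs minus model, 1990-only baseline: {single_year_gap:+.3f} C")
print(f"2012 obs minus model, 1961-1990 baseline: {thirty_year_gap:+.3f} C")
```

In this toy case the two series agree everywhere except 1990, yet forcing them to match in that one year makes the observations look too cold in every other year.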

Mistakes happen, especially in draft documents, and the IPCC report contributors subsequently corrected the error, now using 1961–1990 as the baseline. But Steve McIntyre just couldn't seem to figure out why the data were shifted between the draft and draft final versions, even though Tamino had pointed out that the figure should be corrected 10 months prior. How did McIntyre explain the change?

"The scale of the Second Draft showed the discrepancy between models and observations much more clearly. I do not believe that IPCC’s decision to use a more obscure scale was accidental."

No, it wasn't accidental. It was a correction of a rather obvious error in the draft figure. It's an important correction because improper baselining can make a graph visually deceiving, as was the case in the draft version of Figure 1.4.

Curry Chimes in – 'McIntyre Said So'

The fact that McIntyre failed to identify the baselining correction is itself not a big deal, although it doesn't reflect well on his math or analytical abilities. The fact that he defaulted to an implication of a conspiracy theory rather than actually doing any data analysis doesn't reflect particularly well on his analytical mindset, but a blogger is free to say what he likes on his blog.

The problem lies in the significant number of people who continued to believe that the modeled global surface temperature projections in the IPCC reports were inaccurate – despite my having shown they have been accurate and having explained the error in the draft figure – for no other reason than 'McIntyre said so.' This appeal to McIntyre's supposed authority extended to Judith Curry on Twitter, who asserted with a link to McIntyre's blog, in response to my post,

"No the models are still wrong, in spite of IPCC attempts to mislead."

In short, Curry seems to agree with McIntyre's conspiratorial implication that the IPCC had shifted the data in the figure because they were attempting to mislead the public. What was Curry's evidence for this accusation? She expanded on her blog.

"Steve McIntyre has a post IPCC: Fixing the Facts that discusses the metamorphosis of the two versions of Figure 1.4 ... Using different choices for this can be superficially misleading, but doesn’t really obscure the underlying important point, which is summarized by Ross McKitrick on the ClimateAudit thread"

Ross McKitrick (an economist and climate contrarian), it turns out, had also offered his opinion about Figure 1.4, with the same lack of analysis as McIntyre's (emphasis added).

"Playing with the starting value only determines whether the models and observations will appear to agree best in the early, middle or late portion of the graph. It doesn’t affect the discrepancy of trends, which is the main issue here. The trend discrepancy was quite visible in the 2nd draft Figure 1.4."

In short, Curry deferred to McIntyre's and McKitrick's "gut feelings." This is perhaps not surprising, since she has previously described the duo in glowing terms:

"Mr. McIntyre, unfortunately for his opponents, happens to combine mathematical genius with a Terminator-like relentlessness. He also found a brilliant partner in Ross McKitrick, an economics professor at the University of Guelph."

Brilliant or not, neither produced a shred of analysis or evidence to support his conspiratorial hypothesis.

Do as McKitrick Says, not as he Doesn't Do – Check the Trends

In his comment, McKitrick actually touched on the solution to the problem. Look at the trends! The trend is essentially the slope of the data, which is unaffected by the choice of baseline.
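The claim that the trend does not depend on the baseline is easy to check numerically. The sketch below fits an ordinary least-squares line to a made-up anomaly series and to the same series shifted by a constant, which is all a change of baseline does. The data values and the helper name `ols_slope` are illustrative assumptions.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


years = list(range(1990, 2013))

# Hypothetical anomalies (NOT real data): a steady rise plus some wiggle.
anomalies = [0.25 + 0.016 * i + 0.05 * (-1) ** i for i in range(len(years))]

# Changing the baseline subtracts the same constant from every point.
rebaselined = [a - 0.1 for a in anomalies]

slope_original = ols_slope(years, anomalies)
slope_rebaselined = ols_slope(years, rebaselined)

print(f"original:    {10 * slope_original:.4f} C/decade")
print(f"rebaselined: {10 * slope_rebaselined:.4f} C/decade")
```

The two slopes agree to within floating-point rounding, because the constant offset cancels when the mean is subtracted inside the slope formula.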

Unfortunately, McKitrick was satisfied to try and eyeball the trends in the draft version of Figure 1.4 rather than actually calculate them. That's a big no-no. Scientists don't rely on their senses for a reason – our senses can easily deceive us.

So what happens if we actually analyze the trends in both the observational data and model simulations? That's what I did in my original blog post. Tamino has helpfully compared the modeled and observed trends in the figure below.

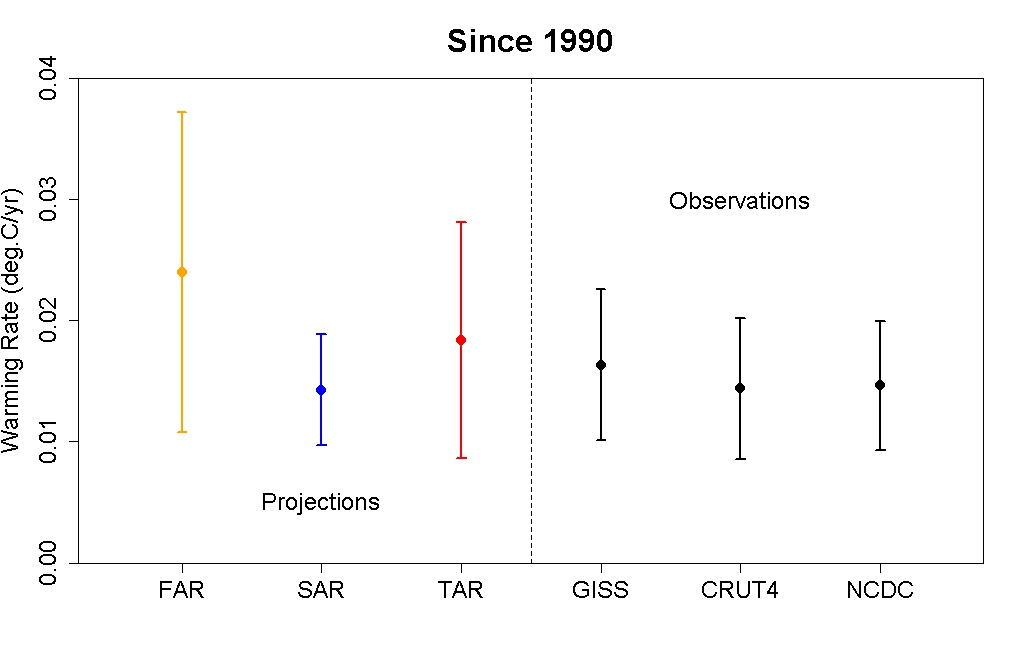

Global mean surface temperature warming rates and uncertainty ranges for 1990–2012 based on model projections used in the IPCC First Assessment Report (FAR; yellow), Second (SAR; blue), and Third (TAR; red) as compared to observational data (black). Created by Tamino.

The observed trends are entirely consistent with the projections made by the climate models in each IPCC report. Note that the warming trends are the same for both the draft and draft final versions of Figure 1.4 (I digitized the graphs and checked). The only difference in the data is the change in baselining.

This indicates that the draft final version of Figure 1.4 is more accurate: consistent with the trends, the observational data fall within the model envelope.

Asking the Wrong (Cherry Picked) Question

Unlike weather models, climate models actually do better predicting climate changes several decades into the future, during which time the short-term fluctuations average out. Curry actually acknowledges this point.

This is good news, because with human-caused climate change, it's these long-term changes we're predominantly worried about. Unfortunately, Curry has a laser-like focus on the past 15 years.

"What is wrong is the failure of the IPCC to note the failure of nearly all climate model simulations to reproduce a pause of 15+ years."

This is an odd statement, given that Curry had earlier quoted the IPCC discussing this issue prominently in its Summary for Policymakers:

"Models do not generally reproduce the observed reduction in surface warming trend over the last 10–15 years."

The observed trend for the period 1998–2012 is lower than most model simulations. But the observed trend for the period 1992–2006 is higher than most model simulations. Why weren't Curry and McIntyre decrying the models for underestimating global warming 6 years ago?

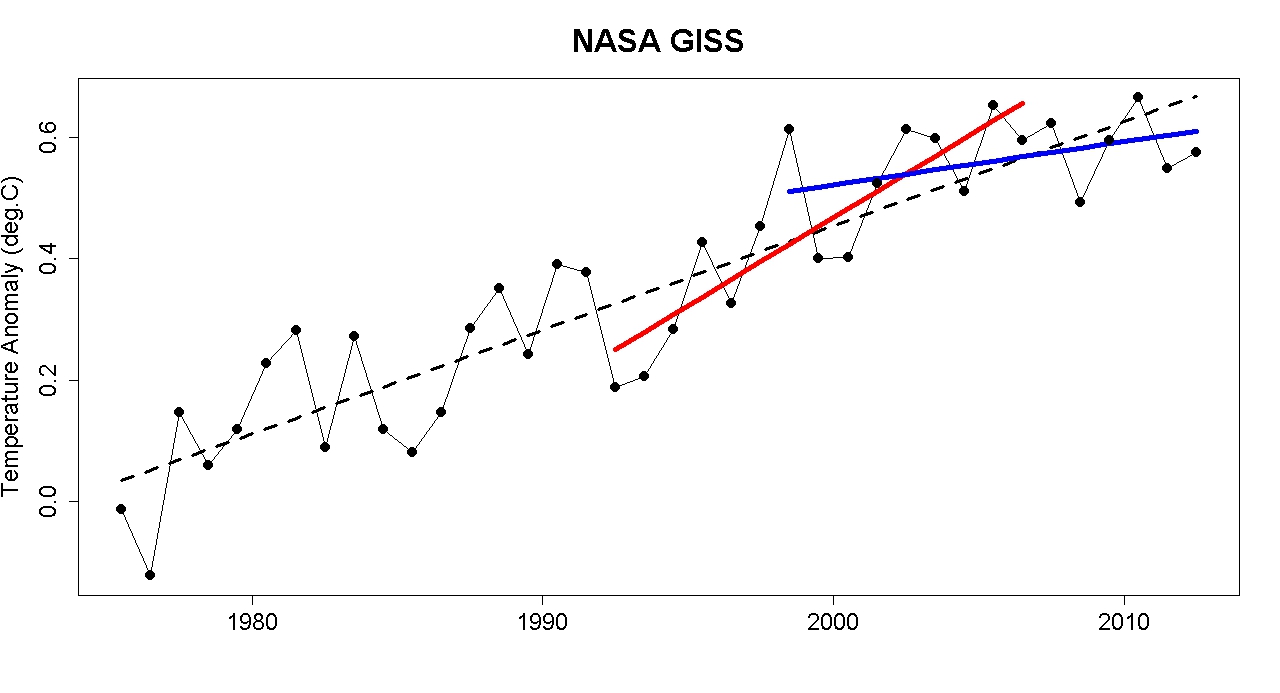

Global surface temperature data 1975–2012 from NASA with a linear trend (black), with trends for 1992–2006 (red) and 1998–2012 (blue). Created by Tamino.
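The sensitivity of 15-year trends to the choice of start year can be illustrated with a toy series. The sketch below is not the NASA data: it assumes a constant underlying warming rate plus a simple 12-year oscillation standing in for internal variability, and all names and numbers are hypothetical.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    return sxy / sxx


years = list(range(1975, 2013))
LONG_TERM_RATE = 0.017  # assumed underlying warming, C per year

# Synthetic temperatures: steady trend plus an oscillation of amplitude 0.1 C.
temps = [
    LONG_TERM_RATE * (y - 1975) + 0.1 * math.sin(2 * math.pi * (y - 1975) / 12)
    for y in years
]


def window_trend(start, length=15):
    """Trend over `length` consecutive years beginning at `start`."""
    i = years.index(start)
    return ols_slope(years[i:i + length], temps[i:i + length])


full_trend = ols_slope(years, temps)
window_trends = {start: window_trend(start) for start in range(1975, 1999)}

steepest = max(window_trends, key=window_trends.get)
flattest = min(window_trends, key=window_trends.get)
print(f"full period: {10 * full_trend:.3f} C/decade")
print(f"steepest 15-year window starts {steepest}: {10 * window_trends[steepest]:.3f} C/decade")
print(f"flattest 15-year window starts {flattest}: {10 * window_trends[flattest]:.3f} C/decade")
```

Even though the underlying rate never changes in this toy series, some 15-year windows run well above the full-period trend and others well below it, depending only on where the window starts.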

This suggests that perhaps climate models underestimate the magnitude of the climate's short-term internal variability. Curry believes this is a critical point that justifies her conclusion "climate models are just as bad as we thought." But as the IPCC notes, the internal variability on which Curry focuses averages out over time.

"The contribution [to the 1951–2010 global surface warming trend] ... from internal variability is likely to be in the range of −0.1°C to 0.1°C."

While it would be nice to be able to predict ocean cycles in advance and better reproduce short-term climate changes, we're much more interested in long-term changes, which are dominated by human greenhouse gas emissions. And which, as Curry admits, climate models do a good job simulating.

It's also worth looking back at what climate scientists were saying about the rapid short-term warming trend in 2007. Rahmstorf et al. (2007), for example, said (emphasis added):

"The global mean surface temperature increase (land and ocean combined) in both the NASA GISS data set and the Hadley Centre/Climatic Research Unit data set is 0.33°C for the 16 years since 1990, which is in the upper part of the range projected by the IPCC ... The first candidate reason is intrinsic variability within the climate system."

Data vs. Guts

Curry and Co. deferred to McIntyre and McKitrick's gut feelings about Figure 1.4, and both of their guts were wrong. Curry has also defaulted to her gut feeling on issues like global warming attribution, climate risk management, and climate science uncertainties. Despite her lack of expertise on these subjects, she is often interviewed about them by journalists seeking to "balance" their articles with a "skeptic" perspective.

Here at Skeptical Science, we don't ask our readers to rely on our gut feelings. We strive to base all of our blog posts and myth rebuttals on peer-reviewed research and/or empirical data analysis. Curry, McIntyre, and McKitrick have failed to do the same.

When there's a conflict between two sides where one is based on empirical data, and the other is based on "Curry and McIntyre say so," the correct answer should be clear. The global climate models used by the IPCC have done a good job projecting the global mean surface temperature change since 1990.

Arguments

Klapper:

In post 45 you say:

"I don't think a negative forcing of 0.1W/m2 over 4 or so years at the end of an analysis period is enough to explain the large errors in the model trends over the last 20 to 30 years compared to observations."

The Tamino graph in the opening post shows that in 2007 the models were statistically much too low. Did you complain then that the models should be raised to compensate for their low errors? Scientists at the time said that it was probably due to natural variation. More data has shown this to be the most likely explanation. What is your response to the extreme warming from 1992-2006? Your claim of 20-30 years is bullshit and should be withdrawn. Over the past 20-30 years the models have been accurate. Read the opening post so that you stop making such wild, demonstrably inaccurate claims.

[JH] Please cease and desist from using the word, "bullshit" in future posts. It is inflammatory and uncivil to do so.

This is warning #1.

Rob Painting @#50

McIntyre analyzed trends in the models from 1979 to 2013, a period over which the theoretical forcing from increasing CO2 is about 0.8 W/m2. If you believe stratospheric aerosols have played a role in suppressing warming in the observations, then ask yourself: What is the secular trend in aerosols over that period? (if any). Looking at Solomon et al 2011's Mauna Loa charts there is no secular trend in background transmission values from the late 70's to now. If there is no secular trend in background stratospheric aerosols over the analysis comparison period, then that can't be the reason for the difference between models and observations, no matter how strong the effect of aerosols.

The other point is that the observations are less responsive than the models to stratospheric aerosols if we compare the response of the observations to El Chichon and Pinatubo to the models, global SAT-wise. So not only is there no secular trend in background stratospheric aerosols, but they don't seem to have any more leverage over SAT in the real world than the model world.

@ michael sweet #50

I'm not sure which Tamino graph you are referring to but the second Tamino graph does not compare observations to the models, which is the point of discussion here. The second Tamino graph compares one observation trend vs a second observation trend.

Tamino's choice of a random trend starting in 1992 and going to 2006 (the red trend line) is a very poor one if you want to demonstrate the validity of the model predictions against the observations. His intent was to show a "hot" trend in the observations, hence he started the trend in the Pinatubo global temperature slump.

So he got a very warm 15 year trend in the GISS dataset, 0.29C/decade to be exact. However the SAT trend in the latest model experiments, the CMIP5 ensemble, is even warmer, substantially so at 0.43C/decade over the same period. I think the reason for the extreme warming trend in the models 1992 to 2006 is mostly that they overcool in the episodes of major vulcanism, and in this particular interval, starting as it does in one, the temperatures at the start of the model run are too low accentuating the warming rate.

As for your claim that my numbers are "bullshit", be specific as to which of them are wrong. McIntyre's choice of a trend period 1979 to 2013 to compare model trends vs observations should be above reproach since the period is 34 years, above the defined 30 year length needed to capture the climate signal. My analysis of the 1990 to 2013 period using CMIP5 (5AR) was just to compare to the above FAR, SAR, TAR graph of 1990 to now.

Tamino got a high warming when he cherry picked the start date. Deniers currently pick the strongest El Nino of the 20th century as their start date, obviously as much a cherry pick as Tamino's. It is up to you to provide data to support your cherry pick.

Please provide a citation to your bullshit number of 0.43C/decade. Your claim of 30 years of errors that I previously cited is also bullshit. Provide a peer reviewed reference to this wild claim or withdraw it. Provide a link to McIntyre's peer reviewed analysis. The OP shows using peer reviewed data that the IPCC has been accurate; you must provide links that support your wild claims in the face of this peer reviewed data.

When you make claims that are directly contradicted by the peer reviewed data in the OP and you provide no citations you are comparing your unsupported opinion against peer reviewed data. That has no place in a scientific discussion. Although the denier blogs encourage unsupported argumentation, this is a scientific blog. You must provide links to peer reviewed data or you are dealing in bullshit. If you link to a non-peer reviewed blog it will carry no weight in a scientific argument.

[JH] Please cease and desist from using the word, "bullshit" in future posts. It is inflammatory and uncivil to do so.

This is warning #2.

Klapper - you misunderstand, this is not a matter of belief, that is simply what the evidence is - stratospheric aerosols blocked more sunlight from reaching the Earth in recent times. Neely et al (2013) show that since the year 2000 these sunlight-blocking sulfate particles have offset some 25% of the effect of the extra greenhouse gases. In their study abstract they write:

"Observations suggest that the optical depth of the stratospheric aerosol layer between 20 and 30 km has increased 4–10% per year since 2000, which is significant for Earth's climate. Contributions to this increase both from moderate volcanic eruptions and from enhanced coal burning in Asia have been suggested. Current observations are insufficient to attribute the contribution of the different sources. Here we use a global climate model coupled to an aerosol microphysical model to partition the contribution of each. We employ model runs that include the increases in anthropogenic sulfur dioxide (SO2) over Asia and the moderate volcanic explosive injections of SO2 observed from 2000 to 2010. Comparison of the model results to observations reveals that moderate volcanic eruptions, rather than anthropogenic influences, are the primary source of the observed increases in stratospheric aerosol."

Rather than rely on some climate science contrarian blogger, as you do, do you have any peer-reviewed literature to support your claims? As you know anyone can write anything they like on the internet, but we here at SkS rely upon the work of actual experts in the field of climate science whose ideas and research are subject to the scrutiny of other experts. That would be the peer reviewed literature.

@ michael sweet #54

None of the graphs in the OP are peer-reviewed. Tamino is cited in this blog and comments and none of that analysis is peer-reviewed. The CMIP5 data methods/review are in fact not peer-reviewed although they form the basis for much comment and many graphs in the AR5 IPCC report.

The surface warming rate of 0.43C/decade from 1992 to 2006 is correct for the CMIP5 ensemble of experiments from different models for the RCP4.5 scenario. These data are freely available at the KNMI data explorer website. I'll withdraw the number if you can tell me the correct one.

Klapper... The difference here is that McIntyre/Tisdale are doing a lot of hand waving and Tamino is actually testing the claims with analysis.

The point is quite clear that 1990 is an anomalous warm year in the trend. Anyone honestly interpreting the data would look at that simple explanation and conclude that it would be better to use a longer term baseline.

It's just fascinating to me how people like McIntyre and Tisdale will scream at the top of their blogger lungs about tiny nuances in climate science they find objectionable, but they completely ignore when someone shows them missing such simple points.

@ Rob Painting #55:

We are not comparing observation trends vs. model trends since 2000 are we? The data since 2000 are only relevant to longer trends starting in 1979 or 1990 if they represent a longer term secular trend in the impact of aerosols. We know from the Solomon et al 2011 paper the impact might be -0.1W/m2. That's not significant for the longer term trends that McIntyre compared where the CO2 forcing was 8 times that magnitude.

If you can make a point there is a long term trend in the background of the impact of aerosols, that the models don't capture, then put it forward. If the background aerosols in the '80s were no different than the last decade, then the net impact on an observations trend spanning the last 30 years is probably nil.

@ Rob Honeycutt #57:

My references to the trends calculated by McIntyre are from his "2 Minutes to Midnight" post, not the one on Figure 1.4. He calculates the SAT warming trend for multiple runs of different CMIP5 models and then all 109 runs and compares them against the actual warming trend in a "box and whiskers" plot. It seems a reasonable approach to comparing the models to observations to me.

Klapper @36,

Following your posts is like playing a game of find the pea under the thimble. Talk about obfuscation ;)

You agree though that the observations lie within the envelope of possible model outcomes. Good. So I'm not sure why you wish to keep arguing moot points.

That said, there is obviously something wrong with your calculated trend in your post. The maximum rate of warming for TAR for 1990-2012 comes in at near 0.29C/decade. But that rate is for CMIP3 not CMIP5. Anyhow, your rate is clearly way too high. If one's calculation is an outlier it is time to consider that your result is the one that is most likely in error. You also say you have calculated the rate through 2013, a little odd given that the year is not done yet ;)

Regardless, you and McIntyre are not evaluating the model output correctly. First and foremost, you should be only comparing those gridpoints at which one has both observations and model output. Then one should be using a common baseline; a term and concept that McIntyre does not appear to understand except when attacking scientists Marcott et al.; ironically, the choice of baseline period was then central to his whole uncertainty argument ;) Also, ideally you evaluate the models when they have been driven using the best estimates of the observed forcings.

Last, but not least, the ensemble mean model estimate can be misleading and is not necessarily the best metric to use for evaluating the models.

Oh well, at least while Steve McIntyre is very busy trying to figure out what a baseline is (allegedly) he is not attacking, smearing and stalking climate scientists :) Small blessings.

Just a point of clarification. Of course, my reference to the baseline is with respect to McIntyre's and McKitrick's confusion about the draft figure 1.4 in AR5.

Hey that reminds me, since you are such a fan of Steve and Ross, maybe you could ask them if they know who leaked the draft report, and if they do, who that person was. Thanks.

Klapper,

Looking back through this thread I see that you have provided not a single citation or reference, not even to a blog post. You provide only your unsupported assertions with numbers that are wildly different from those posted on scientific blog posts. It is impossible for me to continue an argument with you since you refuse to support your data.

Moderator: I am sorry for the strong language, I was tired of Klapper's unsupported claims. I will not use such language in the future.

[JH] Thank you.

Klapper said... "It seems a reasonable approach to comparing the models to observations to me."

That's because you're looking for a specific conclusion. Confirmation bias.

@Albatross #60:

"...But that rate is for CMIP3 not CMIP5..."

I checked my work on the CMIP5 SAT warming from 1990 to mid 2013 for the model ensemble for the RCP4.5 scenario and the rate is 0.29C/decade. The data I downloaded the first pass were the "One run per model tas rcp45". I also checked the "all runs per model tas rcp45" but the number is the same at 0.29C/decade. Of course 2013 is not done yet and I wanted to compare apples to apples so my comment references the mid year since both CMIP5 and HadCRUT4 are available monthly.

I think CMIP3 is for 4AR not TAR as you suggest. My point in #36 was to check the most recent data (5AR) against what Tamino had done for FAR, SAR and TAR. As for your comment on "...Then one should be using a common baseline.." that is not applicable to the comparison of linear regression trends which only compare the rate of change not the absolute averages.

Both you and Rob Honeycutt have confused my references to McIntyre's work as something to do with his recent post on the 1990 baseline issue. My references to McIntyre relate to his earlier "2 minutes to midnight" post and the analysis comparing CMIP5 model experiments to the observational trend. If you think there is something wrong with his comparison of 1979 to 2013 trends, models to observations, then what is it?

@ michael sweet #62:

"...numbers that are wildly different from those posted on scientific blog posts"

The numbers I've posted are correct. If they are not then will someone here please tell me what the correct ones are. If the CMIP5 model ensemble warming trend between 1990 and 2013 is not 0.29C per decade then what is it?

Klapper:

Do you have a point to make, or do you just enjoy engaging in a never-ending discourse with people like Rob Honeycutt, Michael Sweet, and others?

Klapper,

You admit then that your posts are essentially off topic, especially those referring to McIntyre's blog posts. Let us focus on the actual post above.

1) Do you agree that Curry's claims quoted above were incorrect for the reasons stated?

2) Do you agree that the initial problem with the draft figure 1.4 for AR5 has now been corrected?

3) Do you agree that McIntyre's and Mckitrick's accusations of foul play regarding figure 1.4 are incorrect?

We'll take it from there. Right now I have to be somewhere for the evening with friends and family.

Michael Sweet & Albatross, the mean trend for the CMIP5 81 member ensemble from July 1992-June 2007 is 0.413 C per decade (as calculated by me, today, from data downloaded from KNMI). The observed trends lie within 1.1 (HadCRUT4, NOAA) or 0.91 (GISS) standard deviations of that value, but below that value in each case. In contrast, for the 54 member ensemble of CMIP3 (AR4), the mean trend is 0.297 C per decade over that period, with observations falling within 0.1 standard deviations (HadCRUT4 and NOAA below the ensemble mean, GISS above it).

This is largely irrelevant to the discussion. Tamino compared a trend resulting from variability that is modelled to one that is not. Therefore we expect the former divergence to also show up in the computer models, while the latter does not. It does not alter the fact that we have two significantly different trends from the long term trend as a result of short term variability in the climate system. It would be inappropriate to use the 1992-2006 interval to suggest warming is much greater than we expect from the long term ensemble mean trend (ie, the one that is actually projected into the future). Likewise it would be inappropriate to use the latter to suggest it will be much less.

Nor is it particularly illuminating to compare the 1992-2006 observed trend to the modelled trend for that period. It does illustrate that the computer models over predict global cooling due to volcanoes, but that was something already well known. To take that fact and try and finesse it into a claim that the models are wrong on long term trends is dubious at best. If, however, Klapper does want to make that argument, he ought to do so explicitly rather than taking short term trends impacted by that known flaw and pretending that they demonstrate some other flaw by the expedient of not mentioning the impact of the known flaw.

As a follow on from my post @68, below is the inverted, lagged Southern Oscillation Index (SOI):

For the purposes of the discussion in 68, the important thing is that the Pinatubo eruption coincided with an El Nino event that was almost as strong as, but more sustained than, that in 1997/98. The prior El Chichon eruption also coincided with a strong El Nino event (in fact the strongest on record based on the SOI). These ENSO events may explain part of the weak response to those volcanoes in the observational record relative to the models.

In respect to the 1992-2006 trend, it is particularly important to note the relative strength of the 1992-95 El Nino event to the conditions in 2006, which exhibit weakly positive SOI conditions (ie, neutral to a mild La Nina). Thus, to the extent that the 1992-95 ENSO event is not consistent across all models, we would expect modelled trends over that interval to be significantly greater than observed trends. On that basis, the CMIP3 ensemble mean shows too little trend over that period, even though it gets the prediction almost perfect. CMIP5, with a greater apparent divergence is more likely to better model the ENSO neutral (because not consistently timed across all ensemble members) conditions.

Of course, there is a possibility that the ENSO conditions were induced by the volcanoes; and that this behaviour shows up in the models. Therefore this analysis should be treated with caution.

@ John Hartz #66:

Yes, I do have a point to make: the models run too hot.

[DB] As others have already pointed out to you, the actual evidence is to the contrary. Thus, you merely express your opinion. Please characterize it as such in the future. Or else moderation for sloganeering will ensue.

@Klapper #70:

Is that why the Arctic sea ice is disappearing? Is that why alpine glaciers are melting? Is that why the Greenland ice sheet is losing mass?

Klapper @70, yes. The models currently run hotter than observations. Specifically, taking the trend since 1975, the observations run 16.5% (HadCRUT4, GISS) to 21.5% (NOAA) cool compared to CMIP3, and 27.6% (HadCRUT4, GISS) to 31.9% (NOAA) cool compared to CMIP5. Alternatively expressed, the observations run 0.64 (HadCRUT4, GISS) to 0.84 (NOAA) standard deviations cool compared to CMIP3, and 1.29 (HadCRUT4, GISS) to 1.49 (NOAA) standard deviations cool compared to CMIP5.
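The two ways of expressing the gap used above (percent cool, and standard deviations below the ensemble mean) can be sketched as follows. The ensemble values are invented for illustration, the function name `compare_to_ensemble` is hypothetical, and the use of the sample standard deviation is an assumption, since the comment does not say which estimator was used.

```python
from statistics import mean, stdev


def compare_to_ensemble(observed_trend, ensemble_trends):
    """Express an observed trend relative to an ensemble of model trends."""
    ensemble_mean = mean(ensemble_trends)
    ensemble_sd = stdev(ensemble_trends)  # sample standard deviation (assumed)
    shortfall = ensemble_mean - observed_trend
    return {
        "percent_cool": 100 * shortfall / ensemble_mean,
        "sd_below_mean": shortfall / ensemble_sd,
        "within_2_sd": abs(shortfall) <= 2 * ensemble_sd,
    }


# Hypothetical model trends and observed trend, in C/decade (NOT real data).
toy_ensemble = [0.15, 0.20, 0.25]
result = compare_to_ensemble(0.17, toy_ensemble)
print(result)
```

The point of reporting both numbers is that an observed trend can be noticeably cooler than the ensemble mean in percentage terms while still sitting comfortably inside the spread of the individual model runs.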

Do you think these facts falsify the models? Even though observations are running within 2 Standard Deviations of the ensemble means?

Alternatively, do you think that if temperatures run 15% cooler than AR4 projections for BAU, that is sufficient leeway to not have to do anything about global warming (the effective current policy setting in most of the world)? That the difference between 3.65 C and 4 C at the end of the century makes all the difference in the world?

Or more to the point (ie, the actual topic under discussion), do you think the final draft version of figure 1.4 inaccurately represents the relationship of these trends? Or do you think it is more accurate to show the 1990-2015 trend with observations lying below the model envelope (as shown by Fig 1.4 in the 2nd order draft), even though with the same baseline the observations (and trends) lie well within the two sigma envelope for AR4 (as the final draft shows)?

John Hartz @71, are you trying to draw attention to the fact that the models are underestimating some effects of global warming; and that assessing the validity of the models based on just one number (GMST) rather than on their total output is rather facile? If so, I agree with you. Personally I think there is little doubt that the CMIP5 models perform worse than the CMIP3 models with regard to GMST. It does not follow that the models are worse. To make that assessment we must consider the whole range of model data.

Further to the discussion above, here are the observed trends from 1990 to current relative to the CMIP 3 (AR4) trends from 1990-2015:

On the left hand side, the number of models in each 0.025 C/decade bin is shown, with values shown being the upper limit of each bin. It should be noted that observed trends are also assigned to a bin. The trend of the GISS record is 0.152 C/decade, resulting in it being assigned to the 0.15-0.175 bin. It is not shown as having a 0.17 C per decade trend as might be assumed from casual inspection of the graph. In a similar manner, the HadCRUT4 and NOAA records are shown in a bin, rather than having trends as low as might be assumed from a casual inspection.

On the right hand side, the 54 member ensemble mean, maximum, minimum and mean plus or minus two standard deviations are shown with the actual observed trends. Observed trends were placed by hand, so are only approximately accurate.
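The binning described for the left hand side can be sketched in a few lines. This is only an illustration of the idea: the model trend values are invented, the function name `bin_for` is hypothetical, and treating each bin as closed at its upper limit is an assumption. Trends are converted to thousandths of a degree so the bin edges can be computed with exact integer arithmetic.

```python
from collections import Counter

BIN_WIDTH_MILLI = 25  # 0.025 C/decade, expressed in thousandths


def bin_for(trend):
    """Return (lower, upper) edges in C/decade of the bin holding `trend`.

    Bins are assumed to be half-open intervals (lower, upper].
    """
    milli = round(trend * 1000)
    upper = -(-milli // BIN_WIDTH_MILLI) * BIN_WIDTH_MILLI  # ceiling division
    return ((upper - BIN_WIDTH_MILLI) / 1000, upper / 1000)


# The GISS trend quoted above lands in the 0.15-0.175 bin,
# which the histogram labels by its upper limit.
giss_bin = bin_for(0.152)
print(giss_bin)

# Hypothetical model trends in C/decade (NOT the CMIP3 values).
toy_trends = [0.131, 0.152, 0.168, 0.204, 0.219, 0.221, 0.263]
histogram = Counter(bin_for(t)[1] for t in toy_trends)
for upper_limit in sorted(histogram):
    print(f"bin ending {upper_limit:.3f}: {histogram[upper_limit]} model(s)")
```

Because a bar is labelled by its upper limit, a trend of 0.152 C/decade appears under the 0.175 label, which is why reading trends directly off the histogram axis can mislead.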

The key point for this discussion is that the 2nd order draft version of Fig 1.4 shows the observed data falling below the AR4 envelope, suggesting visually that the observed trends would fall below the minimum value in the ensemble (green line), whereas in fact they lie well above that value. That is shown much better in the final draft version of 1.4.

Obfuscate as much as they like, the deniers cannot alter the fact that the 2nd order draft version of figure 1.4 was a misleading graphic for comparing models and data for AR4, due primarily to poor baselining. The final draft version corrects the baseline issues and is far superior.

@Tom Curtis #73:

Not exactly. I'm trying to draw attention to the fact that manmade climate change is real and is happening now.

Unless we have a time machine, we'll never know how well the models perform their primary task, i.e., long-range forecasts, say to the year 2100.

In the meantime, we're wasting valuable time by arguing over minutiae.

@Tom Curtis #72:

You pre-empted my next post somewhat since I was going to agree the 1990-1992 to 2006/now trends didn't prove much other than the models overcool during major volcanic episodes and we should instead focus on McIntyre's 1979 to 2013 model vs observations analysis. Since you've basically done the analysis from 1975 showing the same thing as McIntyre we can skip the argument of whether the models run too hot or not.

Now we can discuss the significance of the models running too hot. I think the models run too hot since they have tuned their feedbacks to radiative forcing during only the warm phase of ocean cycles. (-snip-).

(-snip-).

I'll leave the discussion of Figure 1.4, the politics and personalities of global warming to others (for today anyway).

[DB] Sloganeering snipped.

Moderator [DB] @ 39: I read the OP again and it doesn’t address my question @39 at all. The discussion in the OP is about the baseline, which is the location of zero on the Y-axis scale. I want to know why the IPCC zoomed out so far and covered the actual temperature with messy spaghetti lines. So, I have gone off and gathered raw data from original sources and plotted it. This is my first time trying to post an image -- hopefully it works.

I have downloaded HADCRUT4 (baselined 1961 to 1990) and plotted the data in Excel. I have also plotted a center weighted 5 year moving average. I then have digitized the original IPCC AR3 and AR4 model projections from the IPCC’s website and plotted those in the chart. Note that both the AR3 and AR4 projection are aligned to the year they were projected – 1980 for AR3 and 1990 for AR4 – and at the location of the 5 year moving average for those years. This aligns the trend projections of AR3 and AR4 to the center of the HADCRUT data for their respective years. While the chart speaks for itself, it is clear that the actual temperatures generally run a little cooler than model projections. In 2005 the actual temperatures have clearly departed from model projections.

[DB] Please constrain image widths to 450.

As this thread concerns the AR5 projections, please take your course of inquiry to this discussion of the TAR and the AR4 IPCC projections.

StealthAircraftSoftwareModeler... There's a big problem with the Excel graph you've created. You're only looking at the trend. Modelers know they can't project short term (10-15 year) surface temperature changes.

I keep stating this over and over, but I'll say it again. Climate modeling is a boundary conditions experiment. You're treating it like an initial conditions experiment.

Stealth, what you've done with your chart is to completely remove the boundaries established by the modeling. Thus, you've removed the most relevant aspect of the climate models.

Modeling is not about the mean. It's not about the trend (except >30 years). It's about the boundaries that are established by the range of model runs.

SAM @77, I believe what you were trying to produce was an honest comparison between observations and the CMIP 3 (AR4) model ensemble. Is that right?

Is that not fair? Can't you pick out the observations from the model runs? Isn't that rather the point! If you cannot easily pick out the observations from the model runs, then the model runs have predicted the observations within the limits possible for stochastic processes (such as short term climate variations).

Of course, despite Rob Honeycutt's sage advice, you may be more interested in short term trends. Nothing in science dictates what you are interested in, regardless of how futile for advancing knowledge. Of course, if you are interested in trends, compare with trends:

Doing otherwise is an apples and oranges comparison. The only justification I can think of for doing so is a deliberate intent to mislead.

You will now find a number of CMIP3 - observation comparisons produced by me above. All are based on sound methods of comparison, and none show a significant divergence between models and observations. It is only by doing illegitimate comparisons - by poor baselining, by comparing individual observations to trends, etc - that you can create the impression that something is radically wrong with the models. The effort various deniers are expending on justifying such illegitimate comparisons is, IMO, a fair mark of how little regard we should have for their opinion.

@Tom Curtis #79:

What is your rationale for continuing to use the CMIP3 data instead of the more recent CMIP5 as the model reference?

Tom @ 79 - that's quite brilliant. I hadn't thought of expressing it in that way, but the image you provided gets the point across very clearly. Nice work!

Klapper @80, the rationale is that the topic of the post is the treatment of the comparison between CMIP3 and observations in AR5. Specifically, the difference in treatment of figure 1.4 between the 2nd order draft and the final draft. I am happy to discuss the CMIP 5 results, but this is probably not the correct thread to do so.

SAM @ 39,

Furthermore, what does the spaghetti add to the information? It doesn’t appear to add much, but it certainly clutters up the chart, making it nearly impossible to see the actual temperature plot. The simple banded range of model projections in the draft image make it easier to read the chart.

The point of the spaghetti rather than the simple banded model is to compare like-with-like — individual climate model realisations with the actual temperature record, which is also an individual climate realisation. "Skeptics" love to compare an actual temperature record with the ensemble mean — which, as predicted by the Central Limit Theorem, is a lot less noisy and fails to show important but unpredictable short-term effects like ENSO because of differences in timing from one realisation to the next — and then use the difference between them to claim that the models do not do a good job, ignoring the true spread of the model realisations and even adjusting the baseline.
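The effect of averaging on noise can be illustrated with a toy simulation. Everything in this sketch is hypothetical: the trend, the noise level and the assumption that each run's variability is independent white noise (real ENSO variability is autocorrelated). Only the ensemble size of 54 is borrowed from the CMIP3 discussion earlier in the thread.

```python
import random
import statistics

random.seed(0)  # fixed seed so the sketch is repeatable

N_RUNS = 54      # ensemble size mentioned earlier in the thread
N_YEARS = 30
TREND = 0.02     # C per year, HYPOTHETICAL
NOISE_SD = 0.1   # C, HYPOTHETICAL year-to-year variability

# Each run is the same underlying trend plus its own independent noise
runs = [
    [TREND * year + random.gauss(0.0, NOISE_SD) for year in range(N_YEARS)]
    for _ in range(N_RUNS)
]

# Ensemble mean: average across runs for each year
ensemble_mean = [
    statistics.mean(run[year] for run in runs) for year in range(N_YEARS)
]


def residual_sd(series):
    """Standard deviation of a series about the known underlying trend."""
    return statistics.pstdev(
        value - TREND * year for year, value in enumerate(series)
    )


single_run_sd = residual_sd(runs[0])
ensemble_sd = residual_sd(ensemble_mean)
print(f"single run noise:    {single_run_sd:.3f} C")
print(f"ensemble mean noise: {ensemble_sd:.3f} C")
```

Under these assumptions the ensemble mean is several times smoother than any single run, which is why a single observed record looks "noisy" next to the mean but not next to individual runs.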

If the models truly did not do a good job, then the actual temperature record would still stand out when plotted with the individual model runs, as Tom did @ 79. If it went outside the banded range, it would also go outside the spaghetti, since the former is merely an indication of the spread of the latter.

If a "Skeptic" cannot pick out the actual temperature record from one of those spaghetti charts, then how can they claim the models are not doing a good job?

The magnitude of the apparent random variations of the individual realisations is also valuable to illustrate, because it shows that climate models also have wild swings and plateaux when you carefully cherry-pick a high point for the starting point of a trend calculation.

As for the OP:

Suppose the draft version of Figure 1.4 had used 1992 as the starting point rather than 1990. I can guarantee that all those "skeptics" who are so confused about baselining now would instead be instant experts on the subject and endlessly pointing out how the IPCC had tried to mislead readers by using an inappropriate baseline that exaggerated the temperature record compared to the projections.

In an earlier post McIntyre also showed a box plot for 1979-2013 that suggests that the trends in the AR5 models run significantly 'hotter' than the observed temperature trend (HADCRUT4) over the same period.

The box-plot:

I notice this box plot doesn't include the uncertainty in the HADCRUT4 trend, which, when included (using the SkS trend calculator), shows that the uncertainties overlap (0.158 ±0.044 °C/decade (2σ)); thus they may in fact be the same trend after all. Is there more to critique? Thanks.
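The overlap check described above amounts to comparing two intervals. In this sketch the HadCRUT4 trend and its 2σ uncertainty are the values quoted in the comment, while the model interval is a purely hypothetical placeholder, since the comment does not give the model numbers.

```python
def interval(center, half_width):
    """Return (low, high) for a value with a symmetric uncertainty."""
    return (center - half_width, center + half_width)


def overlaps(a, b):
    """True if two (low, high) intervals share at least one point."""
    return a[0] <= b[1] and b[0] <= a[1]


hadcrut4 = interval(0.158, 0.044)  # C/decade, 2-sigma, from the comment
model = interval(0.23, 0.05)       # HYPOTHETICAL model trend and spread

print("HadCRUT4 range:", hadcrut4)
print("overlap with hypothetical model range:", overlaps(hadcrut4, model))
```

Overlapping intervals are only a rough screen. They show that the two trends are not obviously inconsistent, but they are not a formal test of whether the trends differ.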

JasonB's comment and Tom Curtis's top image and the paragraph immediately underneath it, I think should be part of the new post that mammal_E is working on, about prediction intervals versus confidence intervals (I hope).

Cynicus @84a, how has he fudged it? Let me count the ways:

1) To start with, only 42 models were used to explore the RCP 4.5 scenario. With 13 named models in the box plot, that leaves 29 singletons. In turn that means that there are only 80 model runs by those 13 models, or an average of 6.15 model runs each. If you're thinking it's a bit of a statistical stretch doing a box plot on just six data points, you are right. It is worse than that, for while some models such as the CSIRO Mk3 have all of 10 runs, others such as the CESM-CAM5 have only 3 runs. The CESM-CAM5 still gets its own little box plot, with median, 25th and 75th percentiles, and the 90% range whiskers all of its own, all from just three runs. That is a bit of a joke statistically. In fact, even the CSIRO Mk 3, with its box plot and whiskers based on 8 runs, plus two outliers (I'm cracking up here) is essentially meaningless statistically. McIntyre had too few samples to make any statistically meaningful claims about individual models, and he knew it. More importantly, the restricted range of the 90% range reflects only the very few samples rather than being a real indication of the variability to be expected from the model.

That means the only meaningful statistic in the entire figure is the box plot coloured gold on the right, ie, the full ensemble.

2) McIntyre compares with only HadCRUT4. HadCRUT4 excludes some of the fastest warming regions in the world. Most notably the Arctic, but also large sections of north Africa, the Middle East and areas north of India. Curiously those latter areas are where most of the 19 nations that set new national temperature records in 2010 are located. Therefore we know that HadCRUT4 understates the actual trend in GMST, although we don't know exactly by how much. (GISS, in contrast, may either overstate or understate it.) Therefore, absent the use of a HadCRUT4 mask (almost impossible to set up on the KNMI explorer), we know the HadCRUT4 record understates the trend in that period. A reasonable estimate of how much it understates it by is 0.1 C/decade.

3) As can be seen in the graph @69 above, using a 1979 start point introduces a significant negative trend to observed temperatures due to ENSO fluctuation. This is exaggerated in the HadCRUT4 record because it includes most areas affected by ENSO, but excludes many areas that are not. Absent this effect, the observed record would be about 0.1 C higher.

If we ignore the nonsense about doing box plots for models with just three samples, the comparison is interesting. There is nothing wrong with making such comparisons, provided you are aware, and make your readers aware, of potentially misleading aspects of the comparison (as in points (2) and (3) above). Further, even using GISTEMP, or an ENSO adjusted GISTEMP, it is likely the observed trend would still have fallen between the 75th and 90th percentile of model trends. The CMIP5 models do run hot relative to observations. Just not as hot, perhaps, as is suggested by the comparisons with HadCRUT4 (unless it is ENSO adjusted, and the models have a HadCRUT4 mask applied).
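The run-count arithmetic in point (1) is easy to check, and a small experiment shows why quartiles from three runs carry so little information. The three trend values below are hypothetical placeholders, not the CESM-CAM5 results, and the quartile method is the default of Python's `statistics.quantiles`, which may differ from whatever the box plot used.

```python
import statistics

# Counts quoted in point (1)
models_total = 42
named_models = 13
runs_by_named_models = 80

singletons = models_total - named_models
mean_runs_per_named_model = runs_by_named_models / named_models
print(singletons)                           # models with a single run
print(round(mean_runs_per_named_model, 2))  # average runs per named model

# HYPOTHETICAL trends (C/decade) for a model with only three runs
three_runs = [0.20, 0.25, 0.30]
quartiles = statistics.quantiles(three_runs, n=4)
print(quartiles)
```

With only three runs, the computed quartiles simply echo the three data points, so the "box" describes the sample itself rather than the model's underlying variability.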

Franklefkin @85, thank you for your inquiry. Following it up I discovered that I had mistakenly used trends from 1975 rather than the trends from 1990. Here is the graph reproduced with the correct trends:

From 1990, the minimum trend is 0.08 C per decade. That is literally the minimum trend from any member of the ensemble over that period. There are 6 (out of 54) ensemble members with a lower trend than HadCRUT4, and 8 with a lower trend than GISS. I cannot comment on your quote from AR4 unless you actually cite it.
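The counts above translate directly into an empirical rank within the ensemble. The helper `fraction_below` is an illustrative name, and the small list passed to it is made up; the 6, 8 and 54 are the counts given in the comment.

```python
def fraction_below(ensemble_trends, observed):
    """Fraction of ensemble members whose trend is lower than `observed`."""
    return sum(t < observed for t in ensemble_trends) / len(ensemble_trends)


# Using the counts quoted in the comment directly
members = 54
below_hadcrut4 = 6
below_giss = 8
print(f"HadCRUT4 sits above {below_hadcrut4 / members:.1%} of ensemble members")
print(f"GISS sits above {below_giss / members:.1%} of ensemble members")

# HYPOTHETICAL ensemble, just to show the helper in use
print(fraction_below([0.1, 0.2, 0.3, 0.4], 0.25))
```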

Tom Curtis - Very clear reply to SAM, thank you.

Cynicus - As Tom Curtis pointed out, McIntyre is only using the HadCRUT4 data, which is notably missing polar areas with higher temperature trends. A comparison between global model trends and not-global observations is inaccurate unless the model data is masked to the same extent - and I see no sign that McIntyre has done so.

McIntyre has not shown the real distributions over the model runs, has de-emphasized natural variability, has compared masked observations with unmasked models, and so on. He has not made his case. Contrary to his claims, observations continue to validate the models.

Tom Curtis, the following is from Climate Change 2007: Working Group I: The Physical Science Basis, SPM, Projections of Future Changes in Climate: A major advance of this assessment of climate change projections compared with the TAR is the large number of simulations available from a broader range of models. Taken together with additional information from observations, these provide a quantitative basis for estimating likelihoods for many aspects of future climate change. Model simulations cover a range of possible futures including idealised emission or concentration assumptions. These include SRES[14] illustrative marker scenarios for the 2000 to 2100 period and model experiments with greenhouse gases and aerosol concentrations held constant after year 2000 or 2100. For the next two decades, a warming of about 0.2°C per decade is projected for a range of SRES emission scenarios. Even if the concentrations of all greenhouse gases and aerosols had been kept constant at year 2000 levels, a further warming of about 0.1°C per decade would be expected. {10.3, 10.7} Since IPCC’s first report in 1990, assessed projections have suggested global average temperature increases between about 0.15°C and 0.3°C per decade for 1990 to 2005. This can now be compared with observed values of about 0.2°C per decade, strengthening confidence in near-term projections. {1.2, 3.2} Model experiments show that even if all radiative forcing agents were held constant at year 2000 levels, a further warming trend would occur in the next two decades at a rate of about 0.1°C per decade, due mainly to the slow response of the oceans. About twice as much warming (0.2°C per decade) would be expected if emissions are within the range of the SRES scenarios.
Best-estimate projections from models indicate that decadal average warming over each inhabited continent by 2030 is insensitive to the choice among SRES scenarios and is very likely to be at least twice as large as the corresponding model-estimated natural variability during the 20th century. {9.4, 10.3, 10.5, 11.2–11.7, Figure TS.29} http://www.ipcc.ch/publications_and_data/ar4/wg1/en/spmsspm-projections-of.html

In the above, taken from AR4, the predictions clearly state warming will be between 0.15 and 0.30 C/decade. In fact, they state that if GHGs (and aerosols) were to remain at 2000 levels - with no increases - warming would proceed at 0.1 C/decade.

[JH] The readability of your posts would be greatly enhanced if you were to avoid composing lengthy paragraphs such as the first one above. If you group your thoughts into shorter paragraphs, readers will be better able to understand what your points are.

franklefkin, the range of 0.15 to 0.3 C/decade seems only to be mentioned in the sentence "Since IPCC’s first report in 1990, assessed projections have suggested global average temperature increases between about 0.15°C and 0.3°C per decade for 1990 to 2005."

The word "since" implies that projections in this range were made after the first assessment report (FAR), but that doesn't mean that they are AR4 projections as it would include projections made during the period covered by the second and third reports as well. If you want to know what the AR4 projections actually say, the best thing to do is to download the CMIP3 archive and find out, as the AR4 projections are based on those model runs.

I don't know if previous IPCC reports made the same baselining error, but frankly it doesn't matter. This report baselines properly while comparing warming projections from all previous reports. If the contrarians are arguing that it's okay to improperly baseline because the IPCC has previously improperly baselined, that's a really absurd argument.

Dana @92, in the TAR (at least), I could not find a direct comparison between projections and temperatures in this manner. Rather they compared them using the nifty diagram shown in fig 8.4:

I assume the temperature series are given a common thirty-year baseline (as is standard), but that is not specified.

On the other hand, in displaying their projections, they set 1990 = 0 degrees C on the multimodel mean projections. Again, not the same thing, but something that can be misinterpreted as the same.

franklefkin @90, here are the percentiles for various trend periods from CMIP3:

| Trend period (values in C/decade) | 5% | 25% | 50% | 75% | 95% |
|---|---|---|---|---|---|
| 1975-2015 | 0.126 | 0.164 | 0.195 | 0.243 | 0.284 |
| 1990-2015 | 0.100 | 0.176 | 0.233 | 0.278 | 0.389 |
| 1992-2006 | 0.075 | 0.167 | 0.308 | 0.411 | 0.527 |
| 1990-2005 | 0.080 | 0.177 | 0.280 | 0.374 | 0.487 |

As can be seen, the 90% range for 1975-2015 trends approximates to the values given in the quote, and may be the basis for those values. Alternatively they may have been referring to prior assessment reports as suggested by Dikran Marsupial. Regardless, the quote is insufficiently clear to say, and certainly not clear enough to contradict the data from the CMIP3 database.
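The percentile figures can be put into a small lookup to check where a given trend falls. The dictionary reproduces the CMIP3 values listed in this comment; the function name `within_90_percent_range` is an illustrative assumption, and the GISS trend of 0.152 C/decade is the value quoted earlier in the thread for 1990 to present.

```python
# CMIP3 trend percentiles (C/decade) as listed in the comment
CMIP3_TREND_PERCENTILES = {
    "1975-2015": {5: 0.126, 25: 0.164, 50: 0.195, 75: 0.243, 95: 0.284},
    "1990-2015": {5: 0.100, 25: 0.176, 50: 0.233, 75: 0.278, 95: 0.389},
    "1992-2006": {5: 0.075, 25: 0.167, 50: 0.308, 75: 0.411, 95: 0.527},
    "1990-2005": {5: 0.080, 25: 0.177, 50: 0.280, 75: 0.374, 95: 0.487},
}


def within_90_percent_range(period, trend):
    """True if `trend` lies between the 5th and 95th percentiles."""
    p = CMIP3_TREND_PERCENTILES[period]
    return p[5] <= trend <= p[95]


def range_width(period):
    """Width of the 5%-95% range for a period, in C/decade."""
    p = CMIP3_TREND_PERCENTILES[period]
    return round(p[95] - p[5], 3)


print(within_90_percent_range("1990-2015", 0.152))  # GISS trend from the thread
for period in CMIP3_TREND_PERCENTILES:
    print(period, range_width(period))
```

The widths make the pattern in the list explicit: the shorter the trend period, the wider the spread of model trends.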

This is from AR4.

Table 3.1. Projected global average surface warming and sea level rise at the end of the 21st century. {WGI 10.5, 10.6, Table 10.7, Table SPM.3}

| Case | Temperature change, best estimate (°C at 2090-2099 relative to 1980-1999) a, d | Temperature change, likely range (°C at 2090-2099 relative to 1980-1999) a, d | Sea level rise, model-based range excluding future rapid dynamical changes in ice flow (m at 2090-2099 relative to 1980-1999) |
|---|---|---|---|
| Constant year 2000 concentrations b | 0.6 | 0.3 – 0.9 | Not available |
| B1 scenario | 1.8 | 1.1 – 2.9 | 0.18 – 0.38 |
| A1T scenario | 2.4 | 1.4 – 3.8 | 0.20 – 0.45 |
| B2 scenario | 2.4 | 1.4 – 3.8 | 0.20 – 0.43 |
| A1B scenario | 2.8 | 1.7 – 4.4 | 0.21 – 0.48 |
| A2 scenario | 3.4 | 2.0 – 5.4 | 0.23 – 0.51 |
| A1FI scenario | 4.0 | 2.4 – 6.4 | 0.26 – 0.59 |

Notes:

a) These estimates are assessed from a hierarchy of models that encompass a simple climate model, several Earth Models of Intermediate Complexity, and a large number of Atmosphere-Ocean General Circulation Models (AOGCMs) as well as observational constraints.

b) Year 2000 constant composition is derived from AOGCMs only.

c) All scenarios above are six SRES marker scenarios. Approximate CO2-eq concentrations corresponding to the computed radiative forcing due to anthropogenic GHGs and aerosols in 2100 (see p. 823 of the WGI TAR) for the SRES B1, A1T, B2, A1B, A2 and A1FI illustrative marker scenarios are about 600, 700, 800, 850, 1250 and 1550 ppm, respectively.

d) Temperature changes are expressed as the difference from the period 1980-1999. To express the change relative to the period 1850-1899 add 0.5°C.

(table 3.1 AR4 pg 45)

(hopefully a moderator can fix the formatting)

Anyway, only the first 3 scenarios have projected warming less than 0.15 C/decade, with the other 3 well above that. Bottom line, AR4 predicted temperature increases greater than the 0.1 C/decade used in your trend graph.

Again, if Tom Curtis used the min projected temp increase from AR4 of 0.15 C/decade instead of the 0.1 or 0.08 C/decade that he did use, the graph would be significantly different. It would show that observed temps are right at the bottom limit of projections.
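The comment does not show how the per-decade figures were derived from Table 3.1. One way to reproduce them, offered purely as an assumption about the method, is to divide each end-of-century change by the span between the midpoints of the two reference periods (1989.5 and 2094.5, or 10.5 decades). The values are those quoted from Table 3.1; the names `TABLE_3_1`, `DECADES` and `mean_rate` are illustrative.

```python
# (best estimate, likely low, likely high) in C, as quoted from Table 3.1
TABLE_3_1 = {
    "Constant year 2000": (0.6, 0.3, 0.9),
    "B1": (1.8, 1.1, 2.9),
    "A1T": (2.4, 1.4, 3.8),
    "B2": (2.4, 1.4, 3.8),
    "A1B": (2.8, 1.7, 4.4),
    "A2": (3.4, 2.0, 5.4),
    "A1FI": (4.0, 2.4, 6.4),
}

# ASSUMED averaging span: midpoint of 1980-1999 to midpoint of 2090-2099
DECADES = (2094.5 - 1989.5) / 10  # 10.5 decades


def mean_rate(change_c):
    """Century-average warming rate in C/decade for a total change in C."""
    return change_c / DECADES


for case, (best, low, high) in TABLE_3_1.items():
    print(f"{case:20s} best {mean_rate(best):.3f}  "
          f"likely {mean_rate(low):.3f} to {mean_rate(high):.3f} C/decade")

sres_cases = [case for case in TABLE_3_1 if case != "Constant year 2000"]
low_end_below_015 = [
    case for case in sres_cases if mean_rate(TABLE_3_1[case][1]) < 0.15
]
print(low_end_below_015)
```

Under this assumption, it is the lower end of the likely range that falls below 0.15 C/decade for the first three SRES scenarios. These are averages over roughly a century, so they describe the whole period to 2090-2099 rather than any particular shorter interval within it.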

franklefkin, the AR4 WG1 report is available online in HTML format here:

http://www.ipcc.ch/publications_and_data/ar4/wg1/en/contents.html

It would be far easier if you were to just post a link to the relevant page. I looked up page 45 and it doesn't have any of that in my paper copy.

An important point to make here is that if inspection of the CMIP3 models says X and you think the report says Y, then do bear in mind the possibility that there isn't an error in the IPCC report (written by climatologists who generally know what they are talking about) but instead there is some point that you do not understand and that you are misinterpreting the report.

Forgetting for a moment the text of the report, do you accept that the CMIP3 model trends are as Tom presents in his diagrams?

Dikran, he is quoting from the Synthesis Report.

I am not sure why he imagines quoting "likely" trends (ie, the 66% confidence interval) for mean trends to the end of the century would contradict 90% confidence intervals for trends to 2015.

franklefkin, projected temperature increase is not linear across the twenty-first century, so quoting mean trends to the end of the century has no bearing on trends from 1990-2015 (which may be much lower, and have a wider spread to boot given that they are short term trends).

Thanks Tom, it appears that it is indeed the case that franklefkin has missed a few important points. One should be wary of stating a "bottom line" unless you have first made sure that you really understand all of the details. True skepticism needs to start with self-skepticism.

The two posts of mine above used the following links:

LINK:

and

LINK:

Both of these are obviously from the IPCC, and both address their projections.

A further quote from that report is as follows:

"Since the IPCC’s first report in 1990, assessed projections have suggested global averaged temperature increases between about 0.15 and 0.3°C per decade from 1990 to 2005. This can now be compared with observed values of about 0.2°C per decade, strengthening confidence in near-term projections. {WGI 1.2, 3.2}

3.2.1 21st century global changes

Continued GHG emissions at or above current rates would cause further warming and induce many changes in the global climate system during the 21st century that would very likely be larger than those observed during the 20th century. {WGI 10.3}"

This is saying that past reports had projected increases of between 0.15 and 0.30 C/decade, and that observations had increases of around 0.2, which bolstered their confidence in their ability to make these projections. Furthermore, they went on to state that further GHG emissions make it very likely that warming would be even greater in the coming decades than what had been observed in the 20th century.

So how do we go from there, to saying that the projection had been for between 0.10 and 0.30 C/decade? That is obviously lower than 0.15, not greater.

Please point out where in the AR4 the minimum projection of 0.10 C/decade warming is.

[RH] Shortened links that were breaking page format.

franklefkin, the request was for you to give a link to the source along with the quote so that we could easily check up on the context of the quote (and see the table itself). We ought to be able to do that without having to go back through your previous posts to try and find out where it is from.

Now, please could you answer my question: "Forgetting for a moment the text of the report, do you accept that the CMIP3 model trends are as Tom presents in his diagrams?"