Frauenfeld, Knappenberger, and Michaels 2011: Obsolescence by Design?

Posted on 2 May 2011 by Daniel Bailey

We know the planet is warming from surface temperature stations and satellites measuring the temperature of the Earth's surface and lower atmosphere. We also have various tools which have measured the warming of the Earth's oceans. Satellites have measured an energy imbalance at the top of the Earth's atmosphere. Glaciers, sea ice, and ice sheets are all receding. Sea levels are rising. Spring is arriving sooner each year. There's simply no doubt - the planet is warming.

And yes, the warming is continuing. The 2000s were hotter than the 1990s, which were hotter than the 1980s, which were hotter than the 1970s. In fact, according to NASA GISS data, the 12-month running average global temperature broke the record 3 times in 2010 (2010 is tied with 2005 for the hottest year on record for GISS and tied with 1998 using HadCRUT). Sea levels are still rising, ice is still receding, spring is still coming earlier, and there's still a planetary energy imbalance. Contrary to what some would like us to believe, the planet has not magically stopped warming.

Humans are causing this warming

There is overwhelming evidence that humans are the dominant cause of this warming, mainly due to our greenhouse gas emissions. Based on fundamental physics and math, we can quantify the amount of warming human activity is causing, and verify that we're responsible for essentially all of the global warming over the past 3 decades. In fact we expect human greenhouse gas emissions to cause more warming than we've thus far seen, due to the thermal inertia of the oceans (the time it takes to heat them). Human aerosol emissions are also offsetting a significant amount of the warming by causing global dimming.

The Original Frozen Tundra

In October of 2010, the National Oceanic and Atmospheric Administration (NOAA) released the Arctic Report Card. The report contains a wealth of (mostly disturbing) information about the state of climate in the Arctic Circle. Especially noteworthy is the news that in 2010, Greenland temperatures were the hottest on record. Greenland also experienced record-setting ice loss by melting. This ice loss is reflected in the latest data from the GRACE satellites, which measure the change in gravity around the Greenland ice sheet (H/T to Tenney Naumer from Climate Change: The Next Generation and Dr John Wahr for granting permission to repost the latest data).

Figure 1: Greenland ice mass anomaly - deviation from the average ice mass over the 2002 to 2010 period. Note: this doesn't mean the ice sheet was gaining ice before 2006 but that ice mass was above the 2002 to 2010 average.

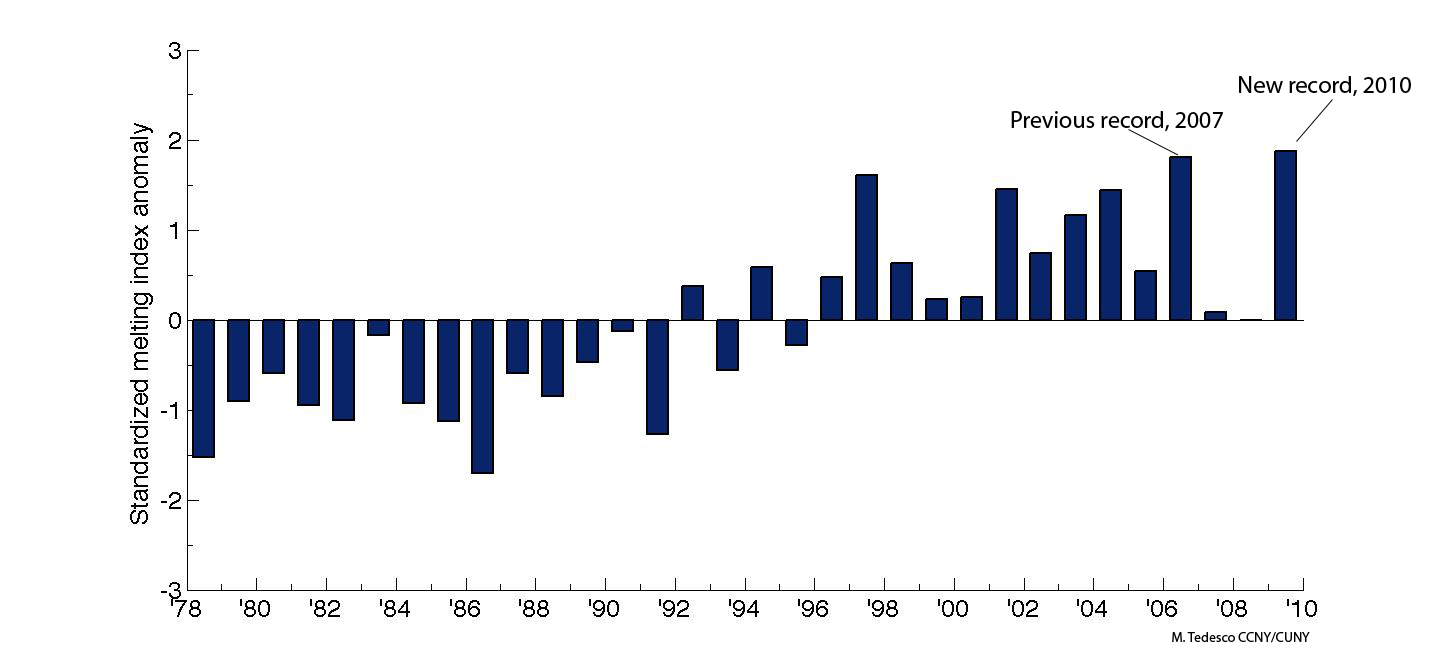
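The note in the Figure 1 caption can be illustrated with a small sketch. The mass values below are invented for illustration (they are not GRACE data), and `anomalies` is a hypothetical helper. The point is only that a series which loses mass every single year still shows positive anomalies in its early years, because those years sit above the mean of the whole period.

```python
def anomalies(values):
    """Return each value minus the mean of the whole series."""
    baseline = sum(values) / len(values)
    return [v - baseline for v in values]


# Made-up ice mass values in arbitrary units, falling every year.
# These are NOT measurements; they only illustrate the arithmetic.
years = list(range(2002, 2011))
mass = [1000, 950, 900, 850, 800, 750, 700, 650, 600]

mass_anomalies = anomalies(mass)

for year, anom in zip(years, mass_anomalies):
    # Early years print positive anomalies even though mass never increases.
    print(year, anom)
```

A positive anomaly therefore says nothing about whether the ice sheet was growing in that year, only where that year sits relative to the chosen baseline.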

Additionally, Tedesco and Fettweis (2011) show that the mass loss experienced in southern Greenland in 2010 was the greatest in the past 20 years (Figure 2 below).

Figure 2: Greenland melting index anomaly (Tedesco and Fettweis (2011))

The figure above shows the standardized melting index anomaly for the period 1979 – 2010. In simple terms, each bar tells us by how many standard deviations melting in a particular year was above the average. For example, a value of ~ 2 for 2010 means that melting was above the average by two times the ‘variability’ of the melting signal over the period of observation. The previous record was set in 2007 and a new one was set in 2010. Negative values mean that melting was below the average. Note that the highest anomaly values (high melting) occurred over the last 12 years, with the 8 highest values within the period 1998 – 2010. The increasing melting trend over Greenland can be seen in the figure. Over the past 30 years, the area subject to melting in Greenland has been increasing at a rate of ~ 17,000 km²/year.

This is equivalent to adding a melt region the size of Washington State every ten years. Alternatively, it means that an area the size of France melted in 2010 which was not melting in 1979.
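For readers who want to check the arithmetic, here is a minimal sketch of the two calculations described above. The melting index values are made up (they are not the Tedesco and Fettweis data), the use of the population standard deviation is an assumption, and the areas of Washington State and metropolitan France are approximate reference figures that do not come from the paper. Only the 17,000 km²/year rate is taken from the text.

```python
from statistics import mean, pstdev


def standardized_anomalies(values):
    """Return (value - mean) / standard deviation for every value."""
    mu = mean(values)
    sigma = pstdev(values)  # population standard deviation (an assumption)
    return [(v - mu) / sigma for v in values]


# Made-up melting index series, for illustration only.
melt_index = [2.0, 4.0, 4.0, 4.0, 5.0, 5.0, 7.0, 9.0]
z_scores = standardized_anomalies(melt_index)
# The last value sits two standard deviations above the series mean,
# which is what a bar of height ~2 in Figure 2 means.
print(z_scores[-1])

# Growth of the area subject to melting.
RATE_KM2_PER_YEAR = 17_000   # rate quoted in the article
WASHINGTON_KM2 = 184_800     # approximate total area (assumption)
FRANCE_KM2 = 551_000         # approximate metropolitan France (assumption)

per_decade = RATE_KM2_PER_YEAR * 10               # 170,000 km²
since_1979 = RATE_KM2_PER_YEAR * (2010 - 1979)    # 527,000 km²

print(per_decade / WASHINGTON_KM2)   # fraction of Washington State per decade
print(since_1979 / FRANCE_KM2)       # fraction of France added since 1979
```

Both ratios come out close to, but slightly under, one, so the Washington State and France comparisons are reasonable round-number approximations.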

Selective Science = Pseudo-Science

Into this established landscape comes a new paper which presents a selective Greenland melt reconstruction. During the review process the paper’s authors were urged to include the record-setting warm temperatures of 2010, yet chose not to. Had the authors considered all available data, their conclusion that ‘Greenland climate has not changed significantly’ would have been simply unsupportable.

They write:

“We find that the recent period of high-melt extent is similar in magnitude but, thus far, shorter in duration, than a period of high melt lasting from the early 1920s through the early 1960s. The greatest melt extent over the last 2 1/4 centuries occurred in 2007; however, this value is not statistically significantly different from the reconstructed melt extent during 20 other melt seasons, primarily during 1923–1961.”

Designed Obsolescence?

Their selective ‘findings’ were obsolete at the time the paper was submitted for publication in December of 2010. In the review process, the authors and journal editors were made aware that important new data were available that would change the conclusions of the study. Unfortunately, the paper represents not only a failure of the review process, but an intentional exclusion of data that would, if included, undermine the paper’s thesis.

Dr. Jason Box has chosen to share for the record a timeline of important events associated with this article’s publication:

- 26 August, 2010, I was invited by Dr. Guosheng Liu – Associate Editor – Journal of Geophysical Research (JGR) – Atmospheres to review the article. Sara Pryor was the JGR chief editor ultimately responsible for this paper’s review.

- 27 August, 2010, I accepted the review assignment.

- 22 September, 2010, I submitted my review, in which I wrote: “The paper may already be obsolete without considering the extreme melting in 2010. I would therefore not recommend accepting the paper without a revision that included 2010.” I post my review verbatim here. At this time, I indicated to the editors that I did not wish to re-review the paper if the authors chose not to include 2010 temperatures. It was clear by this date, from the readily-available instrumental temperature records from the Danish Meteorological Institute and other sources such as the US National Climatic Data Center and NASA GISS, that the previous melt season months were exceptionally warm.

- 16 October, 2010, a NOAA press release publicized record-setting Greenland temperatures. The press release was linked to this Greenland climate of 2010 article, live beginning 21 October, 2010.

- 27 December, 2010, I was invited to re-review the paper. I again stated that I did not wish to re-review the paper if the authors chose not to include 2010 temperatures. By this date, it was more clear that 2010 temperatures were exceptionally warm.

Another very important point: the excuse that the data were not available is simply not reasonable, given that both the Tedesco and Fettweis 2011 and Mernild et al 2011 papers managed to reference the 2010 data in publications that came out prior to that of Frauenfeld, Knappenberger, and Michaels.

Dr. Box:

"The Editor’s decision whether or not to accept the paper would have been made sometime in early 2011. This paper should not have been accepted for publication without taking into account important new data."

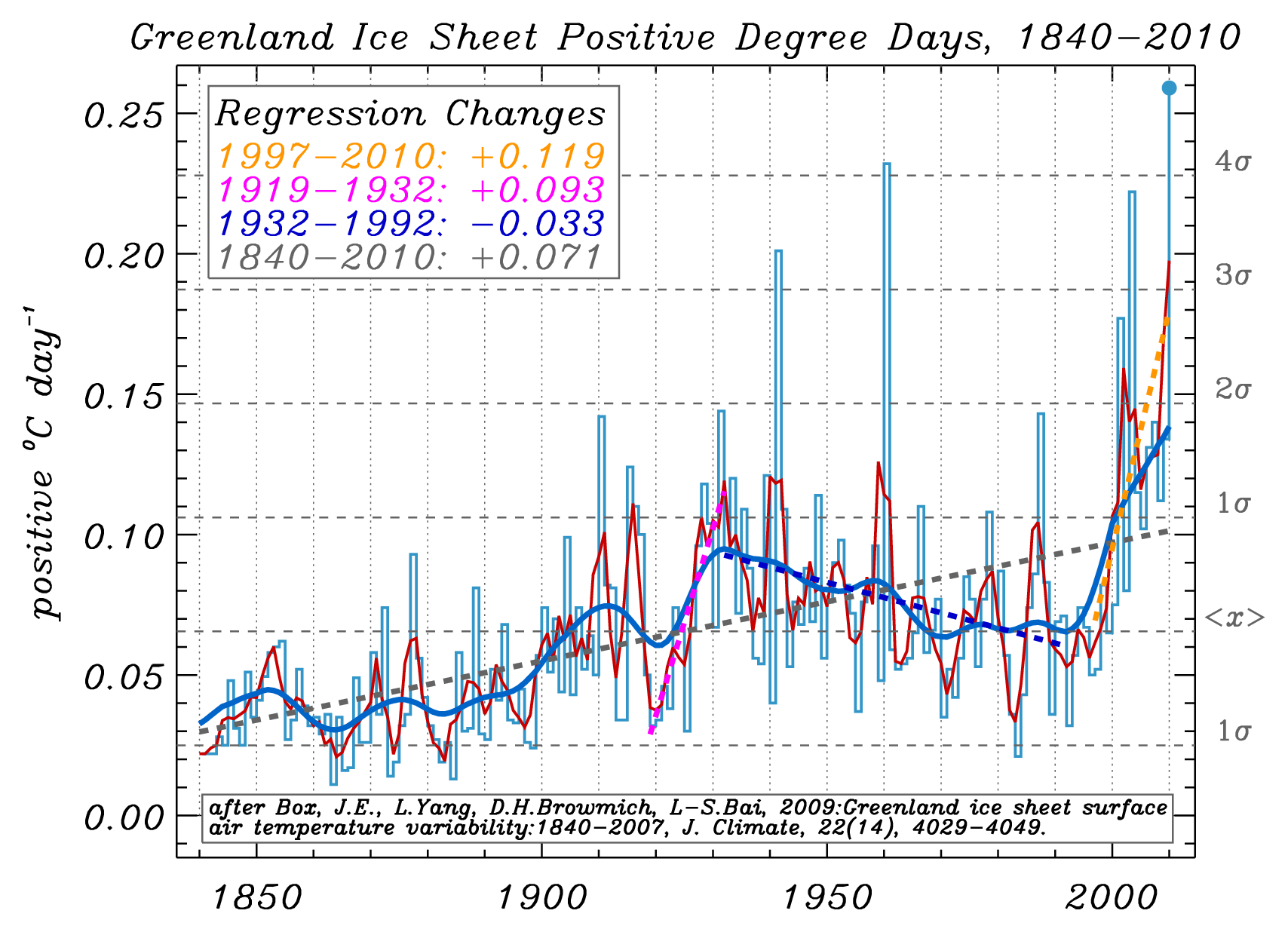

Figure 3: Positive Degree Day (PDD) reconstruction for the Greenland ice sheet after Box et al. (2009). The "regression changes" presented here are equal to the value of the linear fit (dashed lines in the graphic) at the end of the period minus its value at the beginning of the period; for example, the 14-year change is the 2010 value minus the 1997 value. The blue Gaussian smoothing line is for a 29-year interval. The dark red smoothing line is for a 3-year interval. PDDs are the sum of positive temperatures. A PDD sum of 10 has twice the melt potential of a PDD sum of 5. Note that not only is the recent melting convincingly distinguishable from that of the 20th Century, but that summer and annual average temperatures in recent years are increasingly above values in the 1920s-1930s. (Courtesy Dr. Jason Box)
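The two quantities defined in the Figure 3 caption can be sketched in a few lines. This is an illustration under stated assumptions, not Dr. Box's actual method: the function names are hypothetical, the temperatures and the PDD series are made up, PDDs are assumed to be summed over daily mean temperatures in °C, and the linear fit is assumed to be ordinary least squares.

```python
def positive_degree_days(daily_temps_c):
    """Sum the temperatures above 0 °C; days at or below freezing add nothing."""
    return sum(t for t in daily_temps_c if t > 0)


def regression_change(xs, ys):
    """Fit a least-squares line to (xs, ys) and return fit(last x) - fit(first x)."""
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx
    # The intercept cancels when the two fitted end values are subtracted.
    return slope * (xs[-1] - xs[0])


# Made-up daily mean temperatures (°C) for one week.
temps = [-3.0, -1.0, 0.5, 2.0, 4.5, 3.0, -0.5]
pdd = positive_degree_days(temps)
print(pdd)  # only the four days above freezing contribute

# Made-up annual PDD series for 1997 through 2010, rising 2 units per year.
years = list(range(1997, 2011))
pdd_series = [100 + 2 * (y - 1997) for y in years]
change = regression_change(years, pdd_series)
print(change)  # fitted 2010 value minus fitted 1997 value
```

Note that the window from 1997 to 2010 contains 14 annual values but spans 13 year-to-year steps, so the change equals the fitted slope multiplied by 13.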

Greenland’s past temperatures

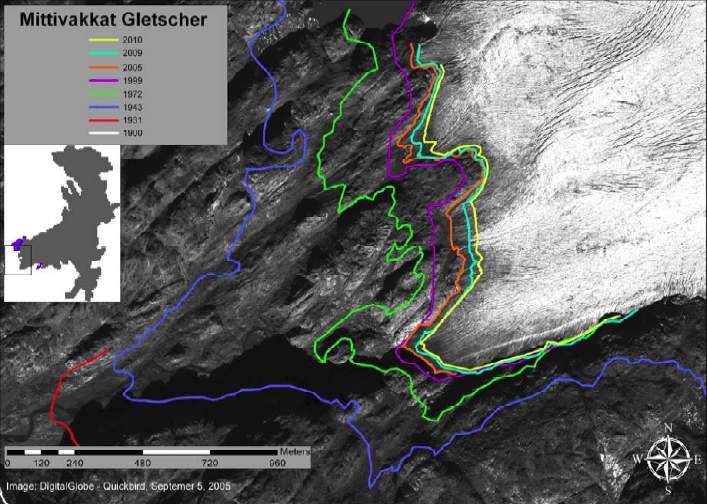

In contrast to the message of the Frauenfeld, Knappenberger, and Michaels paper, including the 2010 data reveals (as seen in Figure 5 at bottom) that recent Greenland temperatures are warmer than at any time during the 20th Century for the summer, autumn, and annual periods. Spring was warmer in 1930, within the 1925-1935 period, than in 2010, but not by enough to lift the corresponding annual average above that of recent times. Importantly for a melt reconstruction, Frauenfeld, Knappenberger, and Michaels neglected to mention that recent summer temperatures exceed those of any time during the past century. As a result, glaciers in southern Greenland have retreated far behind their meltlines from the early 20th Century. Evidence of this can be seen at Mittivakkat Glacier (Figure 4 below):

Figure 4: Mittivakkat Glacier in Southern Greenland. Note the red line indicating the 1931 extent of the glacier relative to the yellow line depicting its position in 2010 (Mernild et al, 2011)

One thing to remember is that the regional warming that Greenland experienced in the early 20th Century came at a time when the world overall was colder than it is today. The warming then was also a result of multiple forcings (in which GHG warming played a role) and is thus fundamentally different from the anthropogenic global warming of the most recent 30 years (in which GHG warming plays by far the predominant role). Additionally, the global cryosphere (the parts of the world covered in ice) has experienced much greater warming (in terms of volume and global extent) in the most recent period than in the time of supposedly similar warming (the early 20th Century).

Given the thermal lags of oceans and ice, it is clear that Greenland has yet to fully respond to the warming forced upon it, so a reasonable approximation is that another 1-2 °C is still in the pipeline. This will translate into yet greater mass losses to come, which evidence indicates may occur in non-linear fashion.

Figure 5: Where 2010 ranks relative to the warm period observed from 1923-1961 by Frauenfeld, Knappenberger and Michaels (Source)

Two lingering questions remain:

- Why did Frauenfeld, Knappenberger, and Michaels not include year 2010 data when they were asked to and when the data were readily available, given that other papers published before theirs managed to include them?

- Why did the journal publish this paper without the requested revisions?

Climate Warming is Real

Dr. Box:

"Multiple lines of evidence indicate climate warming for which there is no credible dispute. No scientific body of national or international standing has maintained a dissenting opinion. I personally have found no credible science that disproves that human activity significantly influences climate.

An enormous and overwhelming body of science leads rational thinkers to the conclusion that humans influence climate in important ways. For decades, the science has indicated that human activity has become the single most influential climate forcing agent."

National and international science academies and scientific societies have assessed the current scientific opinion, in particular on recent global warming. These assessments have largely followed or endorsed the Intergovernmental Panel on Climate Change (IPCC) position of January 2001 which states:

“An increasing body of observations gives a collective picture of a warming world and other changes in the climate system… There is new and stronger evidence that most of the warming observed over the last 50 years is attributable to human activities.”

Acknowledgements

- Dr. Jason Box, Assoc. Prof., Department of Geography, Byrd Polar Research Center, The Ohio State University, Columbus, Ohio, USA for his invaluable assistance, advice, knowledge and patience

- Dr. Mauri Pelto, Professor of Environmental Science, Science Program Chair; Director, North Cascade Glacier Climate Project, Nichols College, Dudley, MA, USA for his timely insights and suggestions

Without the expertise of these two fine climate scientists this article could not have come to pass.
