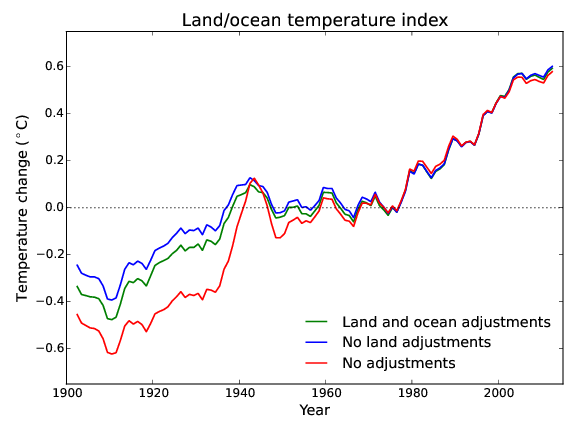

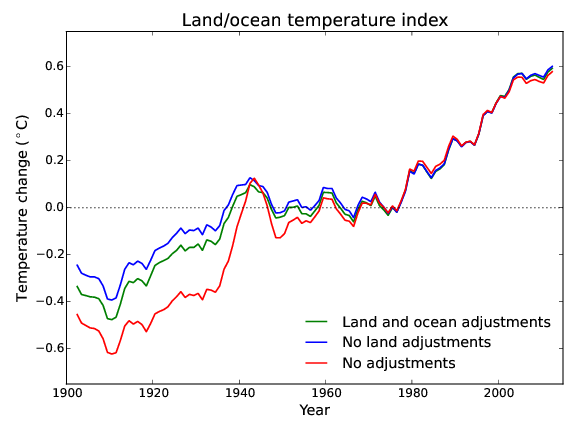

Figure 1: The global temperature record (smoothed) with different combinations of land and ocean adjustments.

The homogenization of climate data is a process of calibrating old meteorological records, to remove spurious factors which have nothing to do with actual temperature change. It has been suggested that there might be a bias in the homogenization process, so I set out to reproduce the science for myself, from scratch. The results are presented in a new report: "Homogenization of Temperature Data: An Assessment".

Historical weather station records are a key source of information about temperature change over the last century. However, the records were originally collected to track the big changes in weather from day to day, rather than small and gradual changes in climate over decades. Changes to the instruments and measurement practices introduce shifts in the records which have nothing to do with climate.

On the whole these changes have only a modest impact on global temperature estimates. However if accurate local records or the best possible global record are required then the non-climate artefacts should be removed from the weather station records. This process is called homogenization.
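To make the idea concrete, here is a minimal sketch of a single homogenization step. It is not the method from the report or from any existing package. It assumes one candidate station with a single step change, one trustworthy neighbour, and made-up temperatures. The function names `find_break` and `homogenize` are hypothetical.

```python
from statistics import mean

def find_break(diff):
    """Return (index, step) for the split that best divides the difference
    series into two segments with different means."""
    n = len(diff)
    best_index, best_score, best_step = None, 0.0, 0.0
    for k in range(2, n - 1):  # keep at least 2 points in each segment
        before, after = diff[:k], diff[k:]
        step = mean(after) - mean(before)
        # Reduction in squared error from fitting two means instead of one.
        score = step * step * len(before) * len(after) / n
        if score > best_score:
            best_index, best_score, best_step = k, score, step
    return best_index, best_step

def homogenize(candidate, neighbour):
    """Remove one step change from `candidate`, using `neighbour` as reference."""
    # The shared climate signal cancels in the difference series,
    # leaving mostly the non-climate artefact.
    diff = [c - nb for c, nb in zip(candidate, neighbour)]
    k, step = find_break(diff)
    # Shift the earlier segment so that it matches the most recent one.
    adjusted = [c + step if i < k else c for i, c in enumerate(candidate)]
    return adjusted, k, step

# Synthetic example (illustrative values only, not real station data).
climate = [0.02 * i for i in range(12)]
neighbour = [t + 0.01 * ((i % 3) - 1) for i, t in enumerate(climate)]
candidate = [t + (0.5 if i >= 7 else 0.0) for i, t in enumerate(climate)]

adjusted, k, step = homogenize(candidate, neighbour)
print(k, round(step, 2))
```

Working on the difference between two nearby stations is the key design choice: both stations see nearly the same climate, so a sudden jump in the difference points to an instrument or siting change rather than to the weather.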

The validity of this process has been questioned in the public discourse on climate change, on the basis that the adjustments increase the warming trend in the data. This question is surprising in that sea surface temperatures play a larger role in determining global temperature than the weather station records, and are subject to larger adjustments in the opposite direction (Figure 1). Furthermore, the adjustments have the biggest effect prior to 1980, and don't have much impact on recent warming trends.


I set out to test the assumptions underlying temperature homogenization from scratch. I have documented the steps in this report and released all of the computer code, so that others with different perspectives can continue the project. I was able to test the underlying assumptions and reproduce many of the results of existing homogenization methods. I was also able to write a rudimentary homogenization package from scratch using just 150 lines of computer code. A few of the tests in the report are described in the following video.

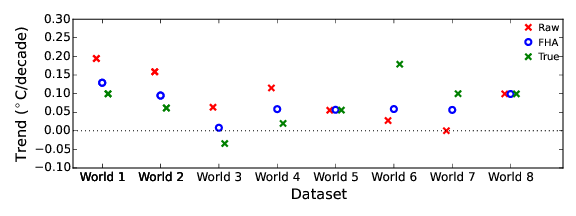

While it is fairly easy to establish that there are inhomogeneities in the record, that they can be corrected, and that correcting them increases the warming trend, there is one question which is harder to answer: how large is the effect of inhomogeneities in the data on the global temperature record? The tests I conducted on the NOAA data and code showed no sign of bias. However, the simple homogenization method presented in the report underestimates the impact on global temperatures, both in synthetic benchmark data for which we know the right answer (Figure 2), and in the real-world data. Why is this?

Figure 2: Trends for raw, homogenized and true data for 8 simulated 'worlds', consisting of artificial temperature data to which simulated inhomogeneities have been added. In each case the simple homogenization method (FHA) recovers only a part of the trend in the adjustments. Benchmark data are from Williams et al. (2012).
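One way to put a number on "recovers only a part of the trend" is to compare least-squares trends for the raw, homogenized and true series. The sketch below is an assumption about how such a comparison could be set up, not the calculation behind Figure 2. The series are invented for illustration and the function names are hypothetical.

```python
def ols_trend(values):
    """Least-squares slope of `values` against their index (units per step)."""
    n = len(values)
    x_mean = (n - 1) / 2
    y_mean = sum(values) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(values))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den

def fraction_recovered(raw, homogenized, true):
    """Share of the trend in the required adjustments that homogenization found."""
    raw_t, hom_t, true_t = (ols_trend(s) for s in (raw, homogenized, true))
    return (hom_t - raw_t) / (true_t - raw_t)

# Invented series: the true world warms faster than the raw record,
# and the homogenized record closes only part of the gap.
true = [0.020 * i for i in range(10)]
raw = [0.010 * i for i in range(10)]
homogenized = [0.016 * i for i in range(10)]

frac = fraction_recovered(raw, homogenized, true)
print(round(frac, 2))
```

A value of 1 would mean the full trend in the adjustments was recovered; a value between 0 and 1 corresponds to the partial recovery described in the caption.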

Since writing the report I have been investigating this question using benchmark data for which we know the dates of the inhomogeneities. For the benchmark data the primary reason why the simple method only captures part of the trend in the adjustments is a problem called 'confounding'. When comparing a pair of stations we can determine a list of all the breaks occurring in either record, but we can't determine which breaks belong to which record. If the breaks are correctly allocated to the individual records, the rest of the trend in the adjustments is recovered. Existing homogenization packages include unconfounding steps, and I am now working on developing my own simple version.
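The confounding problem can be illustrated with a simple voting sketch. This is not the unconfounding step used by existing packages, nor the version under development. It assumes that breaks have already been detected in each pairwise difference series and that break dates match exactly across pairs. The station names and years are made up.

```python
from collections import Counter

def attribute_breaks(pair_breaks):
    """Assign each break year to the station implicated by the most pairs.

    `pair_breaks` maps (station_a, station_b) to the set of break years
    seen in that pair's difference series. Returns {year: station}, with
    None where the break cannot be attributed.
    """
    votes = {}
    for (a, b), years in pair_breaks.items():
        for year in years:
            # A break in the pair could belong to either station.
            votes.setdefault(year, Counter()).update([a, b])
    attributed = {}
    for year, counter in votes.items():
        ranked = counter.most_common()
        # Unambiguous only if one station appears in strictly more pairs.
        if ranked[0][1] > ranked[1][1]:
            attributed[year] = ranked[0][0]
        else:
            attributed[year] = None
    return attributed

# With one pair, the break is confounded between the two stations.
single = attribute_breaks({("A", "B"): {1950}})

# With three stations, each break shows up in exactly the two pairs
# that contain the affected station.
triple = attribute_breaks({
    ("A", "B"): {1950, 1972},
    ("A", "C"): {1950, 1985},
    ("B", "C"): {1972, 1985},
})
print(single, triple)
```

A single pair leaves every break ambiguous, which is the situation described above. Adding a third station resolves it, because a genuine break in station A appears in A-B and A-C but not in B-C.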

The report asks and attempts to answer a number of questions about temperature homogenization, summarized below (but note the new results described above).

Further Reading

Cowtan, K. D. (2015) Homogenization of Temperature Data: An Assessment.

Williams, C. N., Menne, M. J., & Thorne, P. W. (2012). Benchmarking the performance of pairwise homogenization of surface temperatures in the United States. Journal of Geophysical Research: Atmospheres (1984–2012), 117(D5).


Posted by Kevin C on Monday, 2 November, 2015


The Skeptical Science website by Skeptical Science is licensed under a Creative Commons Attribution 3.0 Unported License.