The journal Remote Sensing has published a Commentary by Kevin Trenberth, John Fasullo, and John Abraham, which mainly responds to Spencer & Braswell (2011) [SB11], whose publication led the journal's editor to resign. The paper also comments on Lindzen and Choi (2009), as both of these papers attempted to constrain climate sensitivity with recent observational data.

Trenberth et al. reach a conclusion similar to that of Santer et al. (2011), namely that short-term noise can disguise the long-term warming trend over periods on the order of a decade:

"deviations between trends in global mean surface temperature and TOA radiation on decadal timescales can be considerable, and associated uncertainty surrounds the observational record. The recent work suggests that 20 years or longer is needed to begin to resolve a significant global warming signal in the context of natural variations."
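A toy calculation shows why a decade is too short. The sketch below uses purely synthetic numbers, not real temperature data. It assumes a hypothetical linear warming of 0.02 °C per year plus AR(1) ("red") noise with hypothetical persistence and amplitude. It fits an ordinary least-squares trend to each non-overlapping window and reports the share of windows whose trend is flat or negative.

```python
import random


def ols_slope(y):
    """Least-squares slope of y against its index (units of y per step)."""
    n = len(y)
    x_mean = (n - 1) / 2
    y_mean = sum(y) / n
    num = sum((i - x_mean) * (v - y_mean) for i, v in enumerate(y))
    den = sum((i - x_mean) ** 2 for i in range(n))
    return num / den


def synthetic_series(years, trend_per_year=0.02, phi=0.6, sigma=0.1, seed=0):
    """Hypothetical annual series: linear trend plus AR(1) noise.

    All parameter values are illustrative assumptions, not fitted to observations.
    """
    rng = random.Random(seed)
    noise, series = 0.0, []
    for t in range(years):
        noise = phi * noise + rng.gauss(0.0, sigma)  # persistent "weather" noise
        series.append(trend_per_year * t + noise)
    return series


def fraction_nonpositive_trends(series, window):
    """Share of non-overlapping windows whose fitted trend is <= 0."""
    starts = range(0, len(series) - window + 1, window)
    slopes = [ols_slope(series[i:i + window]) for i in starts]
    return sum(s <= 0 for s in slopes) / len(slopes)


if __name__ == "__main__":
    series = synthetic_series(years=2000)
    for window in (10, 20, 30):
        print(window, "years:", fraction_nonpositive_trends(series, window))
```

With these assumed parameters, 10-year windows show flat or negative trends far more often than 30-year windows, even though the underlying warming never stops. The exact fractions depend on the noise settings, which are illustrative only.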

They also comment on some deficiencies in SB11:

"SB11 fail to provide any meaningful error analysis in their recent paper and fail to explore even rudimentary questions regarding the robustness of their derived ENSO-regression in the context of natural variability. Addressing these questions in even a cursory manner would have avoided some of the study’s major mistakes. Moreover, the description of their method was incomplete, making it impossible to fully reproduce their analysis. Such reproducibility and openness should be a benchmark of any serious study."

Some of these criticisms are very similar to those made in Dessler (2011). Readers may recall from our discussion of Dessler's paper that SB11 performed a regression analysis on just 6 of the 14 models they examined: the 3 with the highest and the 3 with the lowest equilibrium climate sensitivities. Dessler noted that SB11 excluded some of the models that best simulate the El Niño Southern Oscillation (ENSO), and that these were also the models that best matched the data in his regression analysis. What SB11 were really testing, therefore, was the models' ability to replicate ENSO. Trenberth et al. arrive at the same conclusion:

"Our results suggest instead that it is merely an indicator of a model’s ability to replicate the global-scale TOA response to ENSO. Since ENSO represents the main variations during a ten-year period, this is of course not surprising...what is driving all of the changes are the associations with ENSO."

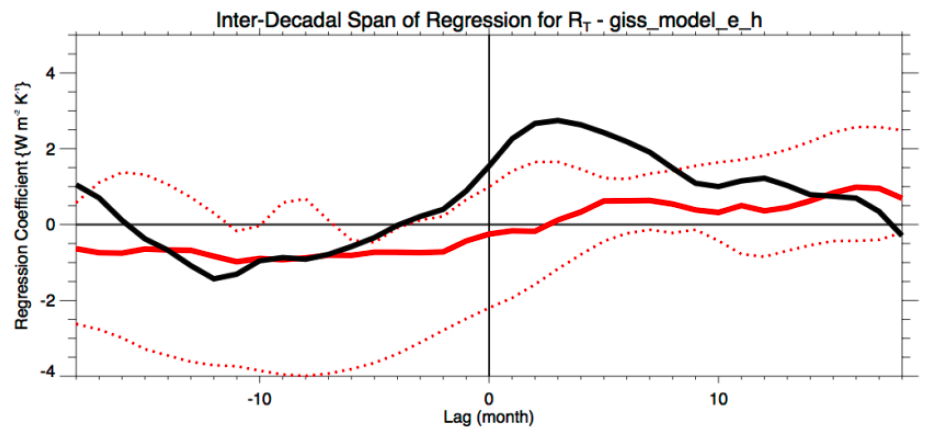

Trenberth et al. performed an analysis similar to SB11's, but rather than treating each model's output as a single 100-year run as SB11 did, they divided it into ten decade-long samples, each the same length as the observational record. Trenberth et al. show their results for a model that doesn't replicate ENSO well (Figure 1), a model that does (Figure 2), and all model runs (Figure 3).
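The resampling step can be sketched in code. SB11's comparison is, broadly, a lagged regression between monthly temperature anomalies and top-of-atmosphere (TOA) radiation. The sketch below assumes both series are plain Python lists of equal length. It splits them into decade-long (120-month) samples, regresses radiation on temperature at each lag within each sample, and summarises the slopes at each lag as a mean, minimum and maximum, loosely mirroring the red line and dashed range in the figures. The function names, the 18-month lag window, and the synthetic data generator are hypothetical illustrations, not code from either paper.

```python
import random


def regression_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx


def lagged_slope(temp, rad, lag):
    """Slope of radiation on temperature with radiation shifted by `lag` months.

    Positive lag: radiation lags temperature. Negative lag: radiation leads.
    """
    if lag >= 0:
        x, y = temp[:len(temp) - lag], rad[lag:]
    else:
        x, y = temp[-lag:], rad[:len(rad) + lag]
    return regression_slope(x, y)


def decadal_lag_regressions(temp, rad, max_lag=18, months_per_sample=120):
    """Return {lag: (mean, min, max)} of slopes across decade-long samples."""
    n_samples = len(temp) // months_per_sample
    results = {}
    for lag in range(-max_lag, max_lag + 1):
        slopes = []
        for k in range(n_samples):
            lo, hi = k * months_per_sample, (k + 1) * months_per_sample
            slopes.append(lagged_slope(temp[lo:hi], rad[lo:hi], lag))
        results[lag] = (sum(slopes) / len(slopes), min(slopes), max(slopes))
    return results


def make_synthetic_pair(months, feedback=-1.5, phi=0.9, seed=0):
    """Hypothetical test data: AR(1) temperature, radiation = feedback * T + noise."""
    rng = random.Random(seed)
    temp, rad, t = [], [], 0.0
    for _ in range(months):
        t = phi * t + rng.gauss(0.0, 0.1)
        temp.append(t)
        rad.append(feedback * t + rng.gauss(0.0, 0.3))
    return temp, rad


if __name__ == "__main__":
    temp, rad = make_synthetic_pair(months=1200)  # 100 "years" of monthly data
    summary = decadal_lag_regressions(temp, rad)
    print("lag 0 (mean, min, max):", summary[0])
```

Treating each decade separately exposes how much the regression slope varies from one sample to the next, a spread that a single regression over the whole 100-year run would not show.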

Figure 1: Regression coefficients between monthly temperature anomalies and TOA radiation, from observations and from a model that does not accurately reproduce ENSO. This model has an equilibrium climate sensitivity of 2.4°C for doubled CO2 (relatively low sensitivity). The black line is from observations, the red line is the model result averaged over its decade-long samples, and the red dashed line indicates the range of model results.

Figure 2: Regression coefficients between monthly temperature anomalies and TOA radiation, from observations and from a model that does accurately reproduce ENSO. This model has an equilibrium climate sensitivity of 3.4°C for doubled CO2 (relatively high sensitivity). The black line is from observations, the red line is the model result averaged over its decade-long samples, and the red dashed line indicates the range of model results.

Figure 3: Regression coefficients between monthly temperature anomalies and TOA radiation, from observations and from all CMIP3 climate models. The black line is from observations, the red line is the model results averaged over their decade-long samples, and the red dashed line indicates the range of model results.

Contrary to SB11's conclusion, Trenberth et al. find that models with higher equilibrium climate sensitivities do a better job of replicating the observed relationship, although the correlation between model sensitivity and regression strength is only marginally statistically significant. They therefore conclude that SB11's approach is fundamentally wrong:

"Consequently, bounding the response of models by selection of those with large and small sensitivities is inappropriate for these model-observation comparisons."

Trenberth et al. also repeat a common criticism of Roy Spencer's research: the climate model he uses is too simple and does not accurately represent a number of important climate processes:

"Because the exchange of heat between the ocean and atmosphere is a key part of the ENSO cycle, SB11’s simple model, which has no realistic ocean, no El Niño, and no hydrological cycle, and an inappropriate observational baseline, is unsuitable. Use of a reasonable heat capacity for the ocean is also crucial. Importantly, SB11 treated non-radiative energy exchange between the ocean and atmosphere as a series of random numbers, which neglects the non-random variations of this energy flow associated with the ENSO cycle...None of those processes are included in the SB11 model and its relevance to nature is thus highly suspect."
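The points about heat capacity and random non-radiative flux can be made concrete with a one-box energy-balance model, C dT/dt = N(t) - λT. The sketch below belongs to that general class of model but does not reproduce SB11's exact equations. It steps the model forward month by month, with N(t) drawn as independent random numbers, which is the treatment the quote criticises. The feedback value λ = 3 W m⁻² K⁻¹, the 1 W m⁻² noise amplitude, and the mixed-layer depths are hypothetical choices. Like the model described in the quote, it has no ENSO, no hydrological cycle and no realistic ocean.

```python
import random
import statistics

SECONDS_PER_MONTH = 30.44 * 24 * 3600  # average month length in seconds
RHO_CP_WATER = 4.18e6  # J m^-3 K^-1, approximate volumetric heat capacity of seawater


def one_box_model(months, mixed_layer_depth_m, feedback_w_m2_k=3.0,
                  noise_w_m2=1.0, seed=0):
    """Integrate C dT/dt = N(t) - lambda * T with explicit Euler monthly steps.

    N(t) is independent Gaussian noise each month (the "random numbers"
    treatment of non-radiative flux). All parameter values are hypothetical.
    Returns the temperature anomaly series in K.
    """
    rng = random.Random(seed)
    heat_capacity = RHO_CP_WATER * mixed_layer_depth_m  # J m^-2 K^-1
    temp, series = 0.0, []
    for _ in range(months):
        forcing = rng.gauss(0.0, noise_w_m2)  # W m^-2
        temp += SECONDS_PER_MONTH * (forcing - feedback_w_m2_k * temp) / heat_capacity
        series.append(temp)
    return series


def relaxation_time_months(mixed_layer_depth_m, feedback_w_m2_k=3.0):
    """E-folding time C / lambda of the one-box model, in months."""
    heat_capacity = RHO_CP_WATER * mixed_layer_depth_m
    return heat_capacity / feedback_w_m2_k / SECONDS_PER_MONTH


if __name__ == "__main__":
    for depth in (25.0, 100.0):
        temps = one_box_model(6000, mixed_layer_depth_m=depth)
        print(f"{depth:>5} m: std(T) = {statistics.pstdev(temps):.3f} K, "
              f"relaxation time = {relaxation_time_months(depth):.1f} months")
```

Driven by the same random flux, the deeper mixed layer responds with smaller and slower temperature swings, so any conclusions drawn from such a model are sensitive to the assumed heat capacity.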

This paper has been incorporated into the Intermediate-level rebuttal to "Roy Spencer finds negative feedback".

Posted by dana1981 on Tuesday, 20 September, 2011


The Skeptical Science website by Skeptical Science is licensed under a Creative Commons Attribution 3.0 Unported License.