In 2007, Mark Hoofnagle suggested on his Science Blog Denialism that denialists across a range of topics such as climate change, evolution, and HIV/AIDS all employed the same rhetorical tactics to sow confusion. The five general tactics were conspiracy, selectivity (cherry-picking), fake experts, impossible expectations (also known as moving goalposts), and general fallacies of logic.

Two years later, Pascal Diethelm and Martin McKee published an article in the scientific journal European Journal of Public Health titled Denialism: what is it and how should scientists respond? They further fleshed out Hoofnagle’s five denialist tactics and argued that we should expose to public scrutiny the tactics of denial, identifying them for what they are. I took this advice to heart and began including the five denialist tactics in my own talks about climate misinformation.

In 2013, the Australian Youth Climate Coalition invited me to give a workshop about climate misinformation at their annual summit. As I prepared my presentation, I mused on whether the five denial techniques could be adapted into a sticky, easy-to-remember acronym. I vividly remember my first attempt: beginning with Fake Experts, Unrealistic Expectations, Cherry Picking… realizing I was going in a problematic direction for a workshop for young participants. I started over and settled on FLICC: Fake experts, Logical fallacies, Impossible expectations, Cherry picking, and Conspiracy theories.

When I led a 2015 collaboration between the University of Queensland and Skeptical Science to develop the free online course Denial101x: Making Sense of Climate Science Denial, we made FLICC the underlying framework of the entire course. An important component of our debunking of the most common myths about climate change was identifying the denial techniques in each myth. A common comment we received from students was how much they appreciated learning about FLICC.

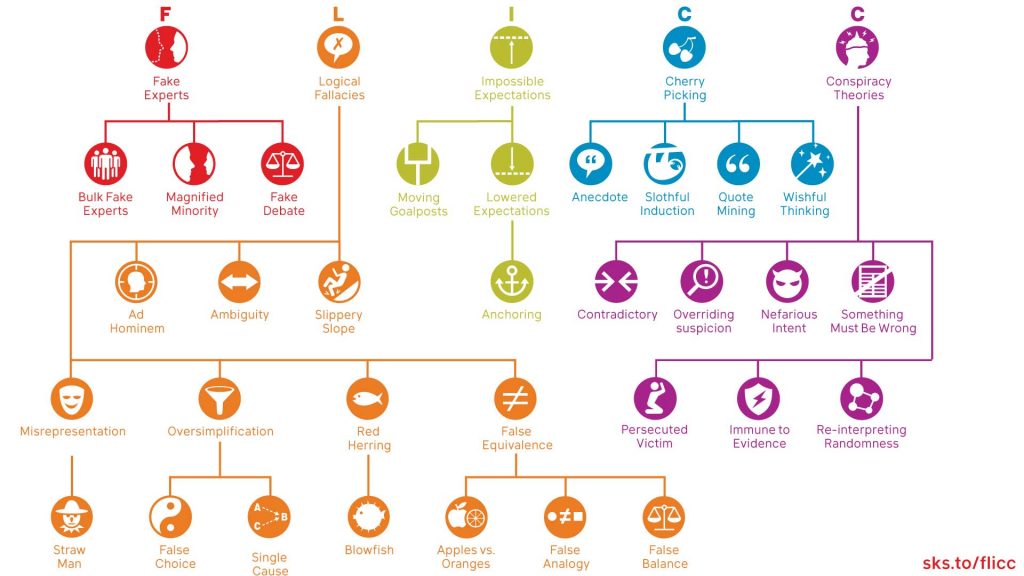

Since moving to the Center for Climate Change Communication at George Mason University, I’ve continued to build the FLICC taxonomy. In my collaboration with critical thinking philosophers Peter Ellerton and David Kinkead, I was introduced to reasoning fallacies that we hadn’t included in Denial101x. As I began developing the Cranky Uncle game, I started a series of fallacy quizzes in which I gradually built up the taxonomy while introducing people to an ever-growing collection of denial techniques (note the differing difficulty levels between quiz #1 and quiz #8). When Stephan Lewandowsky and I published The Conspiracy Theory Handbook, we added seven traits of conspiratorial thinking. Here is the latest version of the FLICC taxonomy (with all the icons freely available and shareable on Wikimedia):

When I visited Brisbane in December 2019, I asked the University of Queensland if I could record a video explaining the updated FLICC taxonomy. They agreed but once they saw my script, including explanations and definitions of each denial technique, they suggested I divide the video into a three-parter. Always a sucker for a trilogy, I agreed – here are the three videos:

As well as the videos, this post includes written definitions and examples of each denial technique. I will continue to update this table as the taxonomy evolves.

| TECHNIQUE | DEFINITION | EXAMPLE |
| --- | --- | --- |
| Ad Hominem | Attacking a person/group instead of addressing their arguments. | “Climate science can’t be trusted because climate scientists are biased.” |
| Ambiguity | Using ambiguous language in order to lead to a misleading conclusion. | “Thermometer readings have uncertainty which means we don’t know whether global warming is happening.” |
| Anecdote | Using personal experience or isolated examples instead of sound arguments or compelling evidence. | “The weather is cold today—whatever happened to global warming?” |
| Blowfish | Focusing on an inconsequential aspect of scientific research, blowing it out of proportion in order to distract from or cast doubt on the main conclusions of the research. | “The hockey stick graph is invalid because it contains statistical errors.” |
| Bulk Fake Experts | Citing large numbers of seeming experts to argue that there is no scientific consensus on a topic. | “There is no expert consensus because 31,487 Americans with a science degree signed a petition saying humans aren’t disrupting climate.” |
| Cherry Picking | Carefully selecting data that appear to confirm one position while ignoring other data that contradict that position. | “Global warming stopped in 1998.” |
| Contradictory | Simultaneously believing in ideas that are mutually contradictory. | “The temperature record is fabricated by scientists… the temperature record shows cooling.” |
| Conspiracy Theory | Proposing that a secret plan exists to implement a nefarious scheme such as hiding a truth. | “The climategate emails prove that climate scientists have engaged in a conspiracy to deceive the public.” |
| Fake Debate | Presenting science and pseudoscience in an adversarial format to give the false impression of an ongoing scientific debate. | “Climate deniers should get equal coverage with climate scientists, providing a more balanced presentation of views.” |
| Fake Experts | Presenting an unqualified person or institution as a source of credible information. | “A retired physicist argues against the climate consensus, claiming the current weather change is just a natural occurrence.” |
| False Analogy | Assuming that because two things are alike in some ways, they are alike in some other respect. | “Climate skeptics are like Galileo who overturned the scientific consensus about geocentrism.” |
| False Choice | Presenting two options as the only possibilities, when other possibilities exist. | “CO2 lags temperature in the ice core record, proving that temperature drives CO2, not the other way around.” |
| False Equivalence (apples vs. oranges) | Incorrectly claiming that two things are equivalent, despite the fact that there are notable differences between them. | “Why all the fuss about COVID when thousands die from the flu every year?” |
| Immune to evidence | Re-interpreting any evidence that counters a conspiracy theory as originating from the conspiracy. | “Those investigations finding climate scientists aren’t conspiring were part of the conspiracy.” |
| Impossible Expectations | Demanding unrealistic standards of certainty before acting on the science. | “Scientists can’t even predict the weather next week. How can they predict the climate in 100 years?” |
| Logical Fallacies | Arguments where the conclusion doesn’t logically follow from the premises. Also known as a non sequitur. | “Climate has changed naturally in the past so what’s happening now must be natural.” |
| Magnified Minority | Magnifying the significance of a handful of dissenting scientists to cast doubt on an overwhelming scientific consensus. | “Sure, there’s 97% consensus but Professor Smith disagrees with the consensus position.” |
| Misrepresentation | Misrepresenting a situation or an opponent’s position in such a way as to distort understanding. | “They changed the name from ‘global warming’ to ‘climate change’ because global warming stopped happening.” |
| Moving Goalposts | Demanding higher levels of evidence after receiving requested evidence. | “Sea levels may be rising but they’re not accelerating.” |
| Nefarious intent | Assuming that the motivations behind any presumed conspiracy are nefarious. | “Climate scientists promote the climate hoax because they’re in it for the money.” |
| Overriding suspicion | Having a nihilistic degree of skepticism towards the official account, preventing belief in anything that doesn’t fit into the conspiracy theory. | “Show me one line of evidence for climate change… oh, that evidence is faked!” |
| Oversimplification | Simplifying a situation in such a way as to distort understanding, leading to erroneous conclusions. | “CO2 is plant food so burning fossil fuels will be good for plants.” |
| Persecuted victim | Perceiving and presenting themselves as the victim of organized persecution. | “Climate scientists are trying to take away our freedom.” |
| Quote Mining | Taking a person’s words out-of-context in order to misrepresent their position. | “Mike’s trick… to hide the decline.” |
| Re-interpreting randomness | Believing that nothing occurs by accident, so that random events are re-interpreted as being caused by the conspiracy. | “NASA’s satellite exploded? They must be trying to hide inconvenient data!” |
| Red Herring | Deliberately diverting attention to an irrelevant point to distract from a more important point. | “CO2 is a trace gas so its warming effect is minimal.” |
| Single Cause | Assuming a single cause or reason when there might be multiple causes or reasons. | “Climate has changed naturally in the past so what’s happening now must be natural.” |
| Slippery Slope | Suggesting that taking a minor action will inevitably lead to major consequences. | “If we implement even a modest climate policy, it will start us down the slippery slope to socialism and taking away our freedom.” |
| Slothful Induction | Ignoring relevant evidence when coming to a conclusion. | “There is no empirical evidence that humans are causing global warming.” |
| Something must be wrong | Maintaining that “something must be wrong” and the official account is based on deception, even when specific parts of a conspiracy theory become untenable. | “Ok, fine, 97% of climate scientists agree that humans are causing global warming, but that’s just because they’re toeing the party line.” |
| Straw Man | Misrepresenting or exaggerating an opponent’s position to make it easier to attack. | “In the 1970s, climate scientists were predicting an ice age.” |

And lastly, a bit of fun. Every year, Inside the Greenhouse hold a competition inviting people to submit climate comedy videos. In 2019, I submitted Giving Climate Denial the FLICC, which received an honorable mention.

This post is reposted from crankyuncle.com.

Posted by John Cook on Tuesday, 31 March, 2020


The Skeptical Science website by Skeptical Science is licensed under a Creative Commons Attribution 3.0 Unported License.