How we discovered the 97% scientific consensus on man-made global warming

Posted on 1 November 2013 by MarkR

As part of our work on "The Consensus Project" study, I said:

"We want our scientists to answer questions for us, and there are lots of exciting questions in climate science. One of them is: are we causing global warming? We found over 4000 studies written by 10 000 scientists that stated a position on this, and 97 per cent said that recent warming is mostly man made."

The journal we published in, Environmental Research Letters, has a word limit, and despite publishing loads of extra info since then, we haven't talked through some of the conversations we had on the way. There have been lots of comments asking why we considered the scientists we did, and how we judged whether they endorsed the idea that most of the recent global warming was man-made.

A brain surgeon for brain surgery, a climate scientist for climate science

Our first thought was: who should count? We decided that researchers who work and publish on climate science are the right group to ask. Just like you wouldn't ask a dentist to do brain surgery, we thought that it was better to ask those who research climate change rather than those who research the Big Bang.

These are the guys and girls who spend all their working lives studying the climate and who stay up-to-date with new research. If something is discovered then they will read about it, put it in context, and judge how strong the evidence is.

To us, these were the experts who could spend enough time, and would know enough, to judge all of the evidence for themselves. Not everyone has done new research showing that the world ain't flat, but scientists who need to know about the shape of the Earth have checked all the evidence and they're convinced that it ain't.

Second: how does a piece of research show whether it endorses or rejects man-made global warming? We found that many studies don't include in their summary an explicit statement that they've found that Earth is round rather than flat, that the atmosphere is made of air rather than cheese, that they determined that 1+1=2 or that they've calculated that climate change is man-made.

Let's say that some scientists are working on extreme weather in Japanese cities, and they say that the number of hot days is changing, and that they are interested in looking at the 'probable changes' in future due to 'predicted global warming due to an increase in atmospheric greenhouse gases'.

We reckoned that scientists like this were implying that man-made global warming is real when they wrote that they expect future warming from greenhouse gases to have an impact.

This isn't as 'explicit' as saying something like 'we find that since the nineteenth century, greenhouse gases, not solar irradiance variations, have been the dominant contributor to the observed temperature changes'. This is why we had different categories for how strong the endorsement or rejection was.

The atmosphere isn't made of cheese

One argument we've noticed is that the opinions of those Japanese scientists should be ignored and shouldn't be counted when trying to work out whether there's a scientific consensus on man-made global warming, because they don't explicitly calculate the cause.

But what if you apply that to other ideas, like whether the world is round or flat, or whether the atmosphere is made of air rather than cheese? A quick search through Google Scholar for studies on the atmosphere finds nothing on the first page where the authors explicitly say that they measured that the atmosphere isn't made of cheese. But they implicitly reject that idea when they work as if it's made of air. We reckoned that if scientists judge the evidence on a subject to be very strong (if there is a consensus), then they will work as if it's true, and their work should be counted as an implicit endorsement.

Among those who want to try to hide the consensus, one trick is to try to rubbish or hide away as many studies as possible. They claim to make the consensus disappear by saying that scientists who say that climate has warmed and greenhouse gases will cause future warming do not think that recent global warming is man-made. This is an example of impossible expectations, one of five common features of denial movements as explained by Dana Nuccitelli.

The same tricks could be used to 'disappear' any scientific consensus: using their techniques, the consensus that the atmosphere isn't made of cheese could also be disappeared.

Who knows better about a study: the scientist who did the study, or Joe Blogger?

We only looked at the summaries (or 'abstracts', in journal-speak) because we had thousands of them to look at. They have limited space, so they don't always report everything that a study has found, but there was no way we had time to read thousands of full papers.

So we asked the scientists who wrote them to tell us what their full paper said about the cause of global warming. 1,189 scientists were kind enough to answer for 2,142 of their papers.

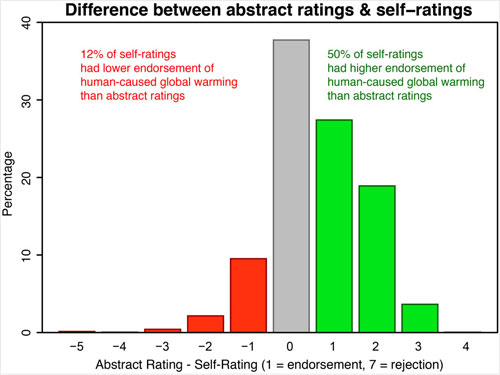

It turns out that our study of the summaries was very conservative. From the summaries, we had rated just 18 of these papers, or 0.8%, as showing the most explicit endorsement of man-made global warming, while 36% had weaker endorsements. Once you went beyond the summaries we looked at, the authors reckoned that more like 10% of their papers represented the most explicit type of endorsement possible: more than 10 times as many as we reported.
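As a quick check on the "more than 10 times" comparison, here is the arithmetic using the figures in the paragraph above (2,142 self-rated papers, 18 rated as most explicit from their summaries, and roughly 10% rated that way by their authors). Because the 10% is approximate, the derived count and ratio are approximate too; this is an illustrative sketch, not part of the study's analysis.

```python
# Figures quoted in the post. The 10% author figure is approximate ("more like 10%"),
# so the derived count and ratio are only rough.
papers = 2142                 # papers self-rated by their authors
abstract_explicit = 18        # rated most explicit from the summaries alone
author_explicit_share = 0.10  # share the authors rated most explicit

abstract_share = abstract_explicit / papers
author_explicit = author_explicit_share * papers
ratio = author_explicit / abstract_explicit

print(f"share rated most explicit from summaries: {abstract_share:.1%}")
print(f"papers rated most explicit by authors: about {author_explicit:.0f}")
print(f"ratio: about {ratio:.0f} times")
```

With these inputs the summaries put about 0.8% of the papers in the most explicit category, against roughly 214 papers (about 12 times as many) from the authors' own ratings.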

The overall consensus remained about the same: 97% whether you look at just the summaries or at the whole paper, but often the scientists said that the full paper was a stronger endorsement than we'd found in the summary.

Commentators who've said that we should ignore the 'implicit' endorsements like those Japanese scientists have been curiously quiet on what the scientists themselves said.

Do you think that gravity is real, or is it 7% real?

Finally, where did we get the 10,000 scientists from? Our recipe was simple: if a scientist published a paper that endorsed or rejected man-made global warming, and hadn't published any paper saying the opposite, then they were added to the endorsement or rejection pile.
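The recipe can be sketched as a short function. This is a hypothetical illustration, not the code used for the study: the labels "endorse", "reject" and "none" are assumptions, and so is the handling of authors whose papers include both endorsements and rejections, which the recipe does not cover.

```python
from collections import Counter


def classify_author(ratings: list[str]) -> str:
    """Place an author on a pile based on the positions of their rated papers.

    ratings: one entry per paper, each "endorse", "reject" or "none" (hypothetical labels).
    """
    counts = Counter(ratings)
    endorses = counts["endorse"] > 0
    rejects = counts["reject"] > 0
    if endorses and not rejects:
        return "endorsing"
    if rejects and not endorses:
        return "rejecting"
    # Assumption: authors with only 'no position' papers, or with papers on both
    # sides, are left unclassified in this sketch.
    return "unclassified"


# Counts the post gives for Professor Trenberth and Dr Spencer.
trenberth = ["endorse"] * 7 + ["none"] * 7
spencer = ["none"] * 11 + ["reject"]
print(classify_author(trenberth))  # endorsing
print(classify_author(spencer))    # rejecting
```

Note that 'no position' papers never move an author off a pile; only a paper taking the opposite position would.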

Let's take two examples: Professor Kevin Trenberth and Dr Roy Spencer.

Professor Kevin Trenberth has published over 200 papers in climate science, and our search terms found 14 studies, of which one is the highest level of explicit endorsement, and a total of 7 are endorsements of any level. The other 7 are 'no position'. In our ratings, that puts him as an endorsing scientist, and he's added to the pile of 10,188 endorsing authors.

There is an argument that he is only 50% endorsing, or if you believe that only the most explicit endorsements count, then only 7% of him should count as an endorsing scientist. We think that's the wrong way of going about it. Just because he wrote a paper saying that satellites were underestimating global warming but didn't talk about whether it was man-made doesn't mean that 7% of him is convinced that we don't know whether man-made global warming is real!
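To make the "partial author" argument concrete, here is the arithmetic it relies on, using the counts for Professor Trenberth given above (14 papers found, 7 endorsing at any level, 1 at the most explicit level). This sketches the view the post argues against, not the method the study used.

```python
# Counts for Professor Trenberth's papers, as given in the post.
total_papers = 14
any_endorsement = 7       # endorsements at any level
explicit_endorsement = 1  # the most explicit level only

# The "fractional author" view: count only the share of papers in a category.
any_share = any_endorsement / total_papers
explicit_share = explicit_endorsement / total_papers

print(f"share endorsing at any level: {any_share:.0%}")
print(f"share endorsing explicitly:   {explicit_share:.0%}")
```

This reproduces the 50% and 7% figures, and shows that both simply reflect how many of his papers happened to discuss the cause of warming.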

The example of Dr Spencer is the same, but in the opposite direction. We found 12 studies by him, including 11 'no position' and 1 'rejection'. This meant we added him to the pile of 128 rejecting authors, even though only about 8% of his papers (1 of 12) were involved in that judgment. This is the opposite of what he said in testimony to the US Congress.

Conclusions

Testing scientific opinion isn't as easy as you'd think, but we're convinced we found a solid way of doing it. If a study assumes that man-made global warming is true, then the authors have obviously judged the evidence and should be counted as an implicit endorsement. Their judgment shouldn't just be thrown out: if we did that then we'd end up with claims that there is no consensus over whether Earth is round or the atmosphere is made of air rather than cheese!

If a scientist has published work saying that man-made global warming is real, but they also published a study that says nothing about the cause of global warming, then we should only count the bits where they talk about the cause, whether endorsing or rejecting. Otherwise you end up with the idea that someone is only 8% or 50% endorsing of an idea, which probably doesn't reflect them very well.

Anyway, we didn't want you to just trust us: in the paper and our data we showed what the scientists who wrote the papers said. According to them, we were too conservative. We reckon there is a 97% consensus and the scientists themselves reckon there is a 97% consensus, but their endorsements were on average stronger and more explicit than we estimated.

Arguments


Hi Mark,

Though the sampling, methods and questions were different, and though the presentation was not officially published (it has been cited since), our survey in 2007-8 reached the following conclusion (verbatim):

4. Almost all respondents (at least 97%) conclude that the human addition of CO2 into the atmosphere is an important component of the climate system and has contributed to some extent in recent observed global average warming.

Since it was me who constructed the sample list and since my co-authors are not trivial figures in the field, I know that it was a decent stab at getting to the reality, at the time, of Climate Scientists' opinion. What is remarkable is that the 'bottom line' is so similar, though the approach was so different.

Perhaps the time has come to reconsider the original survey and attempt a more rigorous follow-up...

Best wishes, F.

Just in case anyone cares: http://www.jamstec.go.jp/frsgc/research/d5/jdannan/survey.pdf

Fergus Brown @2, the article is not peer reviewed, or more probably was peer reviewed and rejected. The reason it could not pass peer review, if the authors even had the gumption to risk that sort of rejection, is plain to see. In the methodology, they state that:

In fact, a complex compound question is asked, which differs from response to response. That is because each potential "response" introduces new, and not necessarily compatible, elements, so that ordering responses on a numerical scale is meaningless. Thus response one includes five distinct sub-hypotheses, including a claim of no warming, a claim of scientific fraud by the IPCC, and a claim that the physics of the greenhouse effect is "a false hypothesis". Agreement with response one requires agreement with all five distinct sub-hypotheses, and therefore is not a simple measure of the primary question. Response 6, which among other things agrees that the IPCC understates the problem, also requires agreement that the IPCC has been politically compromised.

The standard practise in surveys is to ask simple, distinct questions and have respondents rate their agreement with each. On that basis, agreement with the basic physics should be separated out as a distinct question. Likewise, agreement that the IPCC has been politically compromised should be separated out as a distinct question. Indeed, ideally, it should be two questions: "To what extent do you agree that the IPCC has been politically compromised so as to understate the effects of global warming?" and "To what extent do you agree that the IPCC has been politically compromised so as to overstate the effects of global warming?" Together with a distinct question, "Indicate on a scale of 1 to 7 the extent to which the IPCC underestimates (1), accurately reflects, or overestimates (7) the risks of global warming", this would allow you to tease out the level of disagreement with the IPCC which is simply scientific disagreement.

When faced with such complex, compound responses, respondents must either not respond, respond in a bin that does not truly reflect their opinion to avoid more seriously compromising their position, or treat the scale as a simple scale in response to the main question. The researcher can have no idea which strategy was taken, nor, since diverse strategies are likely, what proportion of respondents took each. The data therefore become uninterpretable except at the grossest level.

This problem is compounded by the clear bias in the responses. Out of seven numbered responses, four indicate the IPCC has overestimated the problem, either drastically and fraudulently, or through excessive confidence. This means scientists who agree with the IPCC or believe it understates the problem are pigeonholed into just three positions, and are less likely to find a response that matches their actual opinion. They are, therefore, less likely to respond. This bias would explain the significant disagreement between these results and those of what still remains the best and most comprehensive survey of climate scientists, Bray and von Storch (2010) (despite certain problems I have with it on some questions).

All in all, the survey you link to is almost a complete waste of time. I am, therefore, unsurprised to see Roger Pielke Snr's name attached to it, but disappointed to see James Annan's.

I agree with Tom. This just doesn't look like a serious poll. And the conclusion about the significance of the rejection side is, I would think, not supportable at all. Starting out with such a small sample, I don't see why you would even begin writing the paper! You'd be better off just scrapping the results and starting over. Spend time trying to find ways to get a larger sampling of respondents and improving the questions.

Heck, if we were able to get thousands of respondents for Cook et al, it can't really be that difficult a task. (Though, I'll admit to contributing a considerable number of hours collecting email addresses off the internet for Cook et al.)

Rob, your remarks seem unduly harsh.

The sample size isn't necessarily a huge problem in itself, depending on what's being tested.

As Tom highlights in his remarks on the methods of elicitation employed for the effort, more concerted assistance from a social scientist familiar with the nuances of survey technique would have benefited Fergus' paper. Unfortunately, social scientists seem to be at a discount among so-called hard science types, one of the reasons physical scientists are flailing so badly in attempting to communicate with the public at large. Fergus' paper is an example of what may happen when a substantial body of expertise is ignored.

What's interesting to me is that despite its limitations, Fergus' experiment produced a result broadly in agreement with Cook et al. "97%" seems to be a point of convergence. :-)

Doug @5, the authors did in fact enlist the aid of David Jepson, who is described as a "poll specialist" and as having a BSc. What branch of study for a BSc would qualify you as a "poll specialist" is beyond me.

More importantly, the agreement on a 97% consensus is entirely superficial and depends on incorrectly interpreting the Cook et al (2013) consensus as being that "human activities contribute a net positive forcing over the twentieth century", or something equivalent. In fact, the Cook et al "consensus position" is that "humans have caused greater than 50% of recent global warming", where "recent" is undefined, but certainly includes the last 40 years, and probably not more than 130 years. Only 82% of respondents to Fergus' survey could reasonably be interpreted as agreeing to that proposition.

Given the poor quality of the survey, however, I believe that to be an irrelevant data point.

Doug... Maybe so. I'm certainly not an expert on polling, but it would seem to me that with only 140 data points, and the low end consisting of figures of 1's and 3's, those are not very robust figures when only a few additional data points could alter the conclusions fairly significantly. The method of collection could also have a significant effect on the results at this

The Doran figures are usually critiqued for similar reasons, even though they start with well over 1000 respondents and whittle that down to a small figure representing researchers who have specific expertise in climate work.

But then again, the conclusion of Doran was that the greater the expertise in climate research, the more likely respondents were to believe AGW was a problem.

It seems to me if you really wanted to get a true read on what they seem to be setting out to find, don't you think you'd want to do a bit more work? You'd want a larger sampling. You'd want some way to better test that your phrasing wasn't influencing your results.

I'm not an expert either, Rob, but as a bystander to properly constructed surveys I've been amazed at how much useful information can be extracted from what looks like not only a terrible response rate but one coming on top of a "small" sample size. My skepticism over these counterintuitive scenarios has been nullified after being treated to detailed explanations. Not to say it's easy. The process reminds me somewhat of people traversing glaciers with crevasses covered with snow, or something like that. Looks simple, turns out to be quite perilous, can be done pretty reliably given enough expertise.

Given that survey research methodology in detail and as it's used for conducting scientific inquiry is taught at the graduate level, a BSc doesn't seem very predictive of a person's qualifications for assisting Fergus' work, not even if it's a degree in a directly related field. Not to say that Fergus' assistant couldn't have been helpful, just that the BSc isn't sufficient or even very relevant.

I get what Tom is saying, but defocusing a little bit it's fairly clear that all the darts on this board are falling in the same general vicinity; latterday climate change is significant and is mostly thanks to us. However the tea leaves sink to the bottom of the cup, the message is the same. It would be nice if we were permitted to stop trying to evade this conclusion and concentrate on fixing the problem.

doug @8, Cook et al (2013) surveyed 8,547 authors. Allowing that those surveyed were not all climate scientists, and that not all climate scientists were surveyed, this still suggests that there are around 10 thousand actively researching climate scientists worldwide. Dummies.com have a simple formula for confidence intervals relative to sample size. Of the three surveys under discussion, there are 140 responses for Brown, Pielke and Annan; 373 for Bray and von Storch; and 1,189 for Cook et al. Using a population size of 10,000 and a 95% confidence interval, this yields margins of error of, respectively, 1.95%, 1.92%, and 1.84%. Hardly any difference at all, but this assumes that the sample is random, that the survey was not biased, and that it had a normal distribution. All three suppositions are false for all three surveys.
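For readers who want to check margins like these, here is a minimal sketch of the usual textbook formula for the margin of error of a proportion, with a finite population correction. It assumes a simple random sample, the population of 10,000 and the 95% confidence level (z = 1.96) used in the comment, plus p = 0.5, the most conservative choice; which inputs the Dummies.com formula used is not known here. Under these assumptions the sketch gives roughly ±8.2%, ±5.0% and ±2.7%, shrinking with the square root of the sample size, which suggests the figures quoted in the comment rest on different inputs or a different formula.

```python
import math


def margin_of_error(n: int, population: int, p: float = 0.5, z: float = 1.96) -> float:
    """Margin of error for a sample proportion, with finite population correction.

    Assumes a simple random sample; p = 0.5 gives the widest (most conservative) margin.
    """
    standard_error = math.sqrt(p * (1 - p) / n)
    fpc = math.sqrt((population - n) / (population - 1))  # finite population correction
    return z * standard_error * fpc


responses = [
    ("Brown, Pielke and Annan", 140),
    ("Bray and von Storch", 373),
    ("Cook et al", 1189),
]
for label, n in responses:
    print(f"{label}: +/-{margin_of_error(n, 10_000):.1%}")
```

As the comment stresses, none of this fixes non-random samples or biased questions; it only bounds random sampling error.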

The sample is not random in any of the three cases, because responses depend on factors which may bias the results. In particular, people with stronger opinions are more likely to respond. In addition, the method of selecting the sample population in Cook et al introduces biases. Further, knowing the names of the people conducting the survey may also bias responses.

The actual survey instrument for Brown et al was definitely biased, as discussed above. So also was the question in Bray and von Storch most closely matching that in Cook et al, although not as much as that in Brown et al. The question in Cook et al is not biased, but definitely open to misinterpretation; although, tellingly, most of the misinterpretations have come after the event, and after "skeptics" initially based their criticisms on a correct interpretation of the survey, which leads me to believe misinterpretation may not be such a large factor.

Finally, the distribution of the sample in Brown et al is tri-modal rather than normal, and it is at least plausible that the distributions of opinions in the population are not normal.

We had a terrific discussion tonight over dinner thanks to this article. Fascinating. Short of having very favorable extenuating circumstances, Fergus' response was deemed too small to be of much use. Not to say there could be nothing there, just very unlikely to be worth publishing. Useful impressions but short of a finding, so to speak.

Tom's absolutely correct about the difficulty of avoiding bias both in the way elicitations are crafted and via self-selection. It's very hard. However, I understand that neither problem is necessarily fatal or an insurmountable obstacle to further learning. So much to learn about this.

The really interesting part of the conversation was about Google's sampling system. I think it's going to open a world of possibilities that were previously too expensive and too cumbersome to explore.

In my opinion, the "implicit endorsements" are the strongest endorsements. An explicit statement that "we find" or "we conclude" suggests that the question is open (at least to some extent) before the research was done and the results were examined. An implicit endorsement implies that the question is closed.

It might be interesting to obtain and plot the ratios of implicit endorsements to explicit endorsements of "Continuous Creation" and "Big Bang" in the cosmology/astrophysical literature as a function of time from the 1950s to the present. If this were done, then I suspect it would support my opinion about the relative strength of an implicit versus an explicit endorsement in a scientific field.

Thanks to all for their comments and observations about the original survey. I agree that there were many parts of it which were insufficiently rigorous, but in fairness we do point out in the paper that it is not being presented as any more than a preliminary study, and we also take pains to point out that it is not sufficiently robust for the results to meet the criteria of statistical significance.

It was always our intention really to present a 'first view' of opinion, post-Oreskes, and avoiding that particular methodology. At the time, we hoped to be able to follow up with something more scientific; unfortunately we couldn't, but others have.

The questions were worked hard on, but not with sufficient expertise to meet proper survey criteria; the other criticisms are also fair. But I will stick by the work we did, in that it was prepared, including the sample, with good intention, in search of a fair and representative sample, and no unreasonable claims were made on the back of the work.

The original point of mentioning it (apart from showing off :) ), was to point out the 'coincidence' of the result. That it has given some of you some entertainment is an added pleasure. Lastly, I'd say that anyone interested in doing a similar undertaking should recognise in advance that even a smallish and provisional survey requires a vast amount of work, so kudos to John and the team for their efforts.

Fergus Brown @12, well spoken!

By happy chance, this month's AAPOR Journal of Survey Statistics and Methodology is largely dedicated to some important problems brought up by Tom. The current issue is built around an extensive and fascinating "task force" report on non-probability sampling, followed by illuminating comments and then a rejoinder by members of the group producing the report.

Thanks to the necessary background information provided throughout the whole discussion, in sum the November issue of JSSM provides a rich cornucopia of references to both non-probability sampling methods as well as more traditional methods.

J Surv Stat Methodol (2013) 1 (2): 89.

doi: 10.1093/jssam/smt018

Thumbs upped FB's comment. :-)

I missed the original SkS forum discussion on this blog post. A very enjoyable read it was.

I'll apologise in advance if the reference to John Howard skirts too closely to the issue of being political, but today he's come out as a denier of the need to act urgently (and indeed at all, in all likelihood) in response to human-caused climate change:

http://www.smh.com.au/federal-politics/political-news/the-claims-are-exaggerated-john-howard-rejects-predictions-of-global-warming-catastrophe-20131106-2wzza.html

It seems that the conservative arm of Australian politics is determined to go with the meme that the science is wrong, and just as determined to drag the country and the planet down a course of perpetual inaction based on this ideology.

We have a profound problem in our country when even 100% agreement amongst professional scientists would be insufficient to sway the people who have their hands on the steering wheel of the nation. Something is profoundly broken in our government (whether current or recently former) when an untrained lay person associated with vested interests would rather trust his ideologically based "instinct" than experts who have a far better understanding of the subject.

I'm not sure that anything could convince these people until the country and the planet are rendered essentially ruined for habitation by Western human society, and even then it might be a Hy-Brazil scenario.

Question:

Of the scientists that were surveyed to rate their own papers, did you include Alan Carlin, Craig D. Idso, Nicola Scafetta, Nils-Axel Morner, Nir J. Shaviv, Richard S.J. Tol, and Wei-Hock "Willie" Soon?

I ask because Anthony Watts, referring to a PopTech article regarding those scientists' comments on the paper, says that they were not contacted. But the scientists themselves say nothing about that.

Do you have a list of the scientists you attempted to contact, perhaps in supplementary material?

Any leads appreciated.

Barry.

barry @18, Richard Tol was certainly included, as he has himself confirmed. Unfortunately, self-rating authors are entitled to anonymity, and Cook and his co-authors have done their best to ensure it. Therefore they cannot answer with regard to the others unless those authors voluntarily permit their names and self-ratings to be released, or themselves volunteer whether or not they responded, and if so how they responded. I suspect they will not volunteer that information because, if they do, it will be obvious that their disagreement is unusual among respondents. They are angling to be considered representative when they know full well from the self-rating survey that they are not.

I will add that their claims about their papers being incorrectly rated in the abstract ratings are not, in all cases, what they are cracked up to be.

I’ve been having an exchange over the Cook et al paper and would like some information concerning Willie Soon and also Craig D. Idso’s claim that they were mischaracterized in the survey as being neutral instead of showing that they were in opposition. I’ve looked for a response to the claim but I’ve been unable to find it. Can someone steer me to an explanation?

Stranger... In terms of the big picture, that hardly matters. Cook et al took the extra step of allowing researchers to self-rate their papers, and the results were nearly identical to the SkS raters' results.

If Soon and Idso self-rated their papers, then their ratings were recorded there.

Stranger: If you look at the rated abstracts, and search on "Soon" and "Idso", you will see that of the sampled abstracts Soon's (2 abstracts) were rated 3 (implicit endorsement) and 4 (neutral) respectively, while Craig Idso's abstracts (I found 2) also were rated 3 and 4.

This was a sampling protocol - not an exhaustive search of every paper published - but of the particular Soon and Idso fish/papers in the net the rankings were neutral or higher in endorsement of AGW.

Stranger @20, searching The Consensus Project database, I find just two papers with Willie Soon as a coauthor. The first, on polar bears, was rated neutral because the abstract does not include any discussion germane to the attribution of recent global warming. The abstract of the second reads as follows:

The first thing you will notice is that it says nothing to dismiss the attribution of at least 50% of recent global warming to anthropogenic factors. On the contrary, it several times mentions CO2 forcing (an anthropogenic factor) as a relevant forcing, and as a cause of recent warming. Specifically, it is stated:

Given reasonable background information about the relative strengths of anthropogenic and solar forcing, that represents an implicit endorsement that >50% of recent warming was anthropogenic. However, we don't need to dig that far in. The paper uses climate models which are known, given historical forcings, to show humans as responsible for >50% of recent warming. Absent an explicit disclaimer indicating that the authors are not using standard historical forcings, that again represents an implicit endorsement. The paper was in fact rated as explicitly endorsing but not quantifying, ie, a 2, and that is arguably a mistake. (I would rate it as 3, implicitly endorsing.) It is, however, a mistake that makes zero difference to the headline result of Cook et al.

Now it is possible that Soon and his coauthors did clearly indicate the use of radically a-historical forcings in the depths of the paper. The raters did not get to see the depths of the paper, however. They rated on the abstract, and therefore a rating justified by the abstract, though contradicted within the paper, merely shows that abstracts often poorly communicate the contents of papers, not that the raters made a mistake. Further, raters clearly rated abstracts, not authors. If Willie Soon is really saying that he (rather than an abstract of one of his papers) was rated as endorsing the consensus, then he either completely misunderstands the study he is criticizing (nothing new there) or completely misrepresents it.

Turning to Craig Idso, he also has two papers rated, one of which was rated as neutral. The second, which was rated as implicitly endorsing the consensus, had the following abstract:

Cutting to the chase, the authors are suggesting an alternative explanation for the fact that spring is coming earlier than it did in the past. The standard explanation is that it is warmer earlier. Craig Idso's alternative explanation in terms of the CO2 fertilization effect is found to be a viable hypothesis that "... might possibly account for 2 of the 7 days by which the spring drawdown of the air’s CO2 concentration has advanced over the past few decades." The "might possibly" indicates not only uncertainty, but the upper range of the potential effect. That is, it might account for 28.6% of the botanical effect of an early spring (and zero of the effect on animals). That leaves around 70% still attributable to the traditional explanation, ie, the increased warmth.
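The percentages in the previous paragraph follow directly from the quoted "2 of the 7 days"; here is the arithmetic, treating 2 days as the upper end of the fertilization effect, as the comment does. It is a check on the comment's numbers, not an analysis of the paper.

```python
days_advanced = 7            # advance in the spring CO2 drawdown, per the quoted abstract
days_from_fertilization = 2  # upper end of what CO2 fertilization "might possibly" explain

fertilization_share = days_from_fertilization / days_advanced
remaining_share = 1 - fertilization_share  # left for the warming explanation

print(f"fertilization, at most: {fertilization_share:.1%}")
print(f"remaining, at least:    {remaining_share:.1%}")
```

This gives 28.6% and 71.4%, so "around 70%" is a fair rounding of the remainder.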

To my mind, that is not enough to rate the paper as implicitly endorsing the consensus; though only because the consensus is implicitly defined as relating to attribution on which the abstract says nothing. Therefore this is a case of an abstract that was rated (3), but should have been rated, IMO, (4).

Note again that the ratings are not rating authors, and not rating papers. However, Cook et al did include a rating of papers by the authors. Comparison between it and the abstract ratings showed that by far the most common "error" was rating papers that endorsed the consensus as not endorsing the consensus. Again, if Craig Idso understood Cook et al, he would know that to be the case. He would know that pointing out one or two potential errors without pointing to the overall error statistics, as shown by comparison of the abstract and author self-ratings, is a blatant cherry pick. Indeed, that is probably why he claims the error, but does not draw attention to the results of the author self-ratings.

Thanks Tom. The 97% controversy has been raging at our newspaper blog since the moment it was published. The Soon and Idso claims about your Cook et al paper were just the latest "skeptic" point meant to show how unreliable the study is. I would think the 1200 authors representing 2000 papers should be a large enough number, as you point out.

I read a blog exchange between Dana and Professor Mike Hulme. It's left me a bit confused, or should I say very confused. I'm not sure what Hulme's point is or where he's coming from. His statement "...'97% consensus' article is poorly conceived, poorly designed and poorly executed. It obscures the complexities of the climate issue..." has been embraced by the deniers to claim Hulme is shooting down the paper. It seems that he's down on the process, not claiming that there isn't a consensus, but unfortunately all he's accomplished is to confuse. Is he doing this to obfuscate? The fact that he thinks we're "beyond it" concerning consensus seems counterproductive at this time.

Stranger... What most people miss about the Cook paper is that the main point is not the actual "97%" number. It's about the difference between the public's incorrect perception of the level of consensus and the actual level of consensus.

What "skeptics" always fail to do, whilst they twist and writhe over the data, is attempt to apply their own methods for evaluating the level of consensus. Or, perhaps they have and found out that something close to 97% is actually correct... and they don't want to report that.

The importance of the research is this: showing the public the difference between their perception and the reality of the level of acceptance of AGW tends to move people toward acceptance of the science.

The people at your newspaper blog ranting about the research are going to do that no matter what. That's fine. Those people will never be moved and they needn't be moved. In fact, I would hold that it's better they aren't because they become a foil against which to communicate the stark reality of AGW.

When I encounter these people I usually say one of two things:

1) Okay, you don't like the research. Do your own. Show us what you believe the level of consensus is, but you need to do what Cook et al did and go through the tough process of getting your results peer reviewed. (And FYI, that ain't easy.)

2) Since Cook et al took the self-skeptical approach of getting researchers to rate their own papers, the results are likely to be very robust. And that's not to even mention the other research papers that show similar results.

So, we now have numerous peer reviewed papers showing a very high level of scientific consensus on AGW. We have no papers showing otherwise.

"Skeptics" are batting a big fat zero right now on this topic.

Thanks Rob.

I know that the deniers using newspaper blogs will never be convinced. I know there are people who read the blogs who might not have strong opinions. We have a university biochemist and a philosopher of science taking part in some of our discussions. I think that people who read the blog but have no strong opinion would find the AGW side on our blog much more credible just by the way they conduct their arguments. I think that might be a benefit, but I have to say I've no way of knowing. I'm probably wasting time.

I have asked, even on this website, why deniers wouldn't fund their own survey, since it would only cost the Koch brothers or some other organization chump change to do it. I figured they were afraid of the Richard Muller effect.

I want to avoid being repetitious, but I'm still bothered by the Mike Hulme thing. As a layman I just don't know what to think about his comments concerning the survey. It may be something I should let go of, but I'd like to get an inkling of where he's coming from, because if he's so concerned about the huge amounts of carbon going into the atmosphere he sure doesn't seem to be helping the cause. Just the opposite.

Stranger @26, Mike Hulme gives every appearance of having become a postmodernist. Do not expect too much in the way of rational behaviour from him.