Recent Comments

Comments 86601 to 86650:

-

arch stanton at 07:49 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

Les (8), I agree with you. I hope the doctor returns to inform us of its relevance. -

mspelto at 07:07 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

Dr Jay: We have three lines of measurement for glaciers. The WGMS reports glaciers where people with boots on the ground actually measure glacier change annually. Typically this covers the mass balance of about 100 glaciers and terminus change on 400-500 glaciers. I measure 10 glaciers for mass balance and report all of these. This does not include glaciers where retreat is measured periodically. I measure terminus change on 60 glaciers, but only report the roughly 10 that I examine annually. Most, like Ptarmigan Ridge Glacier, I do not report because I visit them less frequently. Today we can also map changes in area, and hence terminus change, as well as thickness using satellite imagery; these are not reported to the WGMS yet. The number of glaciers tracked in this way is in the many thousands; take a look at the Colonia Glacier or Hariot Glacier, for example, with the detailed terminus maps from satellite images. As we have done this, it has confirmed that the WGMS sample is quite representative. -

scaddenp at 06:21 AM on 18 May 2011

Lindzen Illusion #2: Lindzen vs. Hansen - the Sleek Veneer of the 1980s

Actually, is angusmac trying to imply that since he thinks scenario C is a good fit for actual temperature then the forcings are actually close to scenario C??? -

KR at 05:56 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

Gah - *lots of data* in the previous post -

KR at 05:55 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

les - It's a bit surprising. I started to get the answer to that halfway through typing "average ocean depth" into Google. Jay - The information about sea rise versus ice melt is also a Google answer. Lots data is out there, you just have to look a bit. -

les at 05:51 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

7 - Jay "what is the global average sea level, in meters please, if you could." I'm scoring that as the oddest question ever asked on SkS.

Moderator Response: (DB) My initial thought was it was a snipe hunt; regardless of units, sea level is normally a base referent and is set as zero. -

dana1981 at 04:51 AM on 18 May 2011

Is the CRU the 'principal source' of climate change projections?

nanjo - actually you called it "Lau". Funny, I had a typo in typing your typo. As for the rest of your comment, as DB suggests, you really ought to learn more about models. First of all, hindcasting is an important part of gauging the accuracy of a model (if it can't match the past, then it's not accurate). Secondly, the data matches the linear extrapolation of the model after 2003 pretty darn well, on average. As for "theorizes", that's the word I want to use because it's the correct term ("assume" is wrong). -

Dr. Jay Cadbury, phd. at 04:25 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

@KR This is a good answer. KR, what is the global average sea level, in meters please, if you could.

Response: [DB] Google is your friend. You can look this stuff up yourself, you know, rather than asking.

-

nanjo at 04:22 AM on 18 May 2011

Is the CRU the 'principal source' of climate change projections?

dana1981 at 06:44 AM on 17 May, 2011 My profound apologies for transposing "o" and "a". If I have offended anybody by my unforgivable typo..... I AM VERY VERY SORRY. The graph you have shown was published in 2005. It uses data up until 2003. All of the graph BEFORE 2003 is not a forecast by any model. Those were the data used in coming up with the model(s) in that Hansen paper. So, that part of the graph DOES NOT speak about the quality of the model. You don't get a gold star for matching the past. SO, look at the graph again, how wonderfully the model performs after 2003, particularly after 2005. Nothing to do with any cherry picking. If you have used data for creating a model, you don't get to use it to crow about how great your model is. The linear extrapolation shown is not based on any model based on scientific inquiry. That is the kind of thing you can have a 9th grader do with graph paper, a ruler and a No. 2 pencil. How well that linear extrapolation fits is irrelevant, and Dana, I beg you to forgive me for any other typos. As for "theorizes"..... I guess if that is the word you want to use, you should go for it.

Response: [DB] Please, no all-caps. And you really ought to learn more about models.

-

KR at 04:11 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

Fully melted Antarctic and Greenland ice caps would raise sea levels by 80 meters, according to the USGS. That would be, um, bad. Melt of all glaciers besides those would only add an additional half meter. West Antarctic shelf and Greenland melt as per previous interglacials (with temperatures 2°C above present values, well within possible anthropogenic change levels) would be ~10 meters or more, flooding ~25% of the US population, primarily on the East and Gulf Coasts. -

Manwichstick at 04:02 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

RE: 1. Dr. Jay, The experts I consult with have a lot to say about the glaciers melting. What they are much more cautious about is how to model future melt rates. They point to a lack of good on-the-ground data from the glaciers themselves. This uncertainty about how to model future melt does not make one blind to the already observed rate of glacial melt. Perhaps you are confusing the two. -

Dr. Jay Cadbury, phd. at 04:01 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

And before anybody goes after me for the question of the empirical evidence for the melting ice caps, I'm just trying to figure out if anyone knows how much the sea level will rise because that is the danger to humans, correct? So I just don't want people to think I'm sounding like a snob, obviously I wouldn't be asking for empirical evidence assessing the risk of the sun if it moved much closer to the earth. I know a fully melted ice cap is probably bad.

Response: [DB] You are far, far off-topic here. Many posts exist at SkS (Search thingy will find them). SLR about 7 meters from Greenland & Canadian Archipelago, 7 meters or so from WAIS, another 60-65 meters from the EAIS. Even a partially melted ice cap is a very bad thing for people.

No human civilization we are aware of was around the last time sea levels were at that level.

Please focus on the topic of this thread from this point on.

-
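The figures in DB's response can be added up as a quick consistency check against the roughly 80 meters that KR attributes to the USGS. What follows is only a minimal sketch using the rounded numbers quoted in the response; the dictionary and variable names are illustrative, not from any source.

```python
# Rounded sea level rise contributions (meters) as quoted in DB's response.
# Each entry is a (low, high) range; single figures repeat the same value.
contributions_m = {
    "Greenland & Canadian Archipelago": (7, 7),
    "WAIS": (7, 7),
    "EAIS": (60, 65),
}

low_total = sum(low for low, _ in contributions_m.values())
high_total = sum(high for _, high in contributions_m.values())

print(f"Total if fully melted: {low_total}-{high_total} m")
```

The sum lands a little under 80 meters, which is about what one would expect from rounded inputs.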

Dr. Jay Cadbury, phd. at 03:58 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

That is helpful but I cannot tell from the graphic the total number of glaciers. I am still sorting through the link Jimbo provided.

Response: [DB] Then go to Mauri's page I linked to. Or better yet, the primary literature in addition to the WGMS site Jimbo gave you.

-

Jim Powell at 03:47 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

Dr. Jay: World Glacier Monitoring Service -

Dr. Jay Cadbury, phd. at 03:41 AM on 18 May 2011

Another animated version of the Warming Indicators Powerpoint

I've said this before, I don't think anyone can make a statement on glaciers. I think we need a world index of all glaciers. The problems we have with glaciers are numerous. Firstly, I don't know if melting starting points are accurately documented. Furthermore, if a melting glacier resumes growing, could we say this is a sign of global cooling? So in summary, more information is needed, I say.

Response: [DB] Glacial mass-balance is a function of deposition in the glacier's accumulation zone (where it "packs on weight"), and its losses in the ablation zone (everywhere else). Additionally, slope and terrain play a role as well.

If the glacier is getting substantially more deposition through enhanced snowfall in its accumulation zone, it may begin to advance further downslope (that gravity naughtiness). However, increased meltwater pulses due to a warmer environment downslope may actually cause even greater acceleration and calving at the output end of the glacier due to basal lubrication and subsequent retreat upslope of the glacier.

Measurements of glacier thickness, accumulation and ablation worldwide are reflected in the enclosed graphic. In addition to the graphic I provide, see Jimbo's comment at #2 and glaciologist Mauri Pelto's site here:

[Source]

The reality is that hard-working glaciologists have been all over this for many decades. And this is all very well-documented in the literature. And at Skeptical Science (that Search function thingy).

-
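DB's description of mass balance reduces to simple bookkeeping: what a glacier gains in its accumulation zone minus what it loses in its ablation zone. Here is a minimal sketch with made-up numbers; the function name and all values are hypothetical, expressed in meters of water equivalent per year.

```python
def net_mass_balance(accumulation_mwe, ablation_mwe):
    """Net annual balance: gains minus losses, in meters water equivalent."""
    return accumulation_mwe - ablation_mwe

# Hypothetical glacier: snowfall increases, but melt increases by more.
before = net_mass_balance(accumulation_mwe=2.0, ablation_mwe=2.5)
after = net_mass_balance(accumulation_mwe=2.5, ablation_mwe=3.5)

print(f"before: {before:+.1f} m w.e./yr, after: {after:+.1f} m w.e./yr")
```

In this illustrative case the glacier receives more snow yet loses mass faster, which is why enhanced deposition alone says little about the overall trend.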

les at 02:24 AM on 18 May 2011

Lindzen Illusion #5: Internal Variability

108 - Chris. The lecture by Santer is excellent in that it's always good to see some good science being discussed (I'd say the same for your posts); but also upsetting when you see the appalling work of the "skeptics" laid bare. It should really give "sceptics" pause for a little soul searching. -

Albatross at 02:22 AM on 18 May 2011

Drought in the Amazon: A death spiral? (part 1:seasons)

Re #30, Really, it is not clear what you are trying to say. But you seem to think that more than doubling CO2 and associated climate disruption will more than be compensated for by CO2 enrichment. Recent research has found that the ITCZ can migrate up to 5 degrees latitude when the planet warms-- think of the consequences that would have. You ignored the results from field studies by Feeley. And you forget that this is not so much about what has happened but about where we are heading. And really, citing cherry-picked studies from ideologues like Idso does not help your case. And what has below ground productivity got to do with things, or how is that related to this post? Oh, it is a strawman, of course. We are talking about the canopy and transpiration, and die back of the canopy. And talking of strawmen, you are also arguing a strawman about fires. Making the argument that there have been periods of greater fire activity in the southern hemisphere before so there is nothing to worry about in the future is nonsense. All you have demonstrated is that large variations in the degree of biomass burning in the Southern Hemisphere have occurred (not the Amazon per se, the authors do not mention specifically the Amazon that I could see), and that it is possible to burn a lot more biomass than has been burned of late. Hardly reassuring. So your long post ultimately does not support Solomon's propaganda. In fact, it is just a fine example of the lengths people will go to to delude themselves. -

Albatross at 01:48 AM on 18 May 2011

Lindzen Illusion #5: Internal Variability

Ken @104, This is what you originally said: "There are a few great minds who don't subscribe to the AGW theory" When challenged that has now changed to this: "He definitely does not subscribe to AGW - 'alarmist global warming'" You are going to have to do better than that re Dyson, you cannot even concede that you misrepresented Dyson, so you move the goal posts as noted by JMurphy @105. That is, IMO, dishonest and disingenuous. Now onto the subject of this post. You are also going to have to do much better than this when asked about Lindzen's inane comments on natural variability: "Lindzen maybe, maybe not - right. It depends on how widely the limits of natural variability are drawn." Come on. So we simply adjust the time frame to fit Lindzen's ideology? That is poor, Ken. And since when does Lindzen get to choose how widely the limits of natural variability are drawn and over which time? Given that you and BP are so well informed, and seem to understand what Lindzen is implying, please specify for us what these limits are. Also, please clearly describe for all of us how your missives here and the data support Lindzen's ideology? As for what is going on between 700 m and 2000 m. Really, you have to ask that? Trenberth (2010) has looked at this-- for the much debated 2003-2010 period, the positive slope of the OHC trace increases when one includes data below 700 m-- did you miss the first figure in Dana's post? Trenberth says: "However, independent analysis of the full-depth Argo floats for 2003 to 2008 suggests that the 6-year heat-content increase is 0.77 ± 0.11 W m−2 for the global ocean or 0.54 W m−2 for the entire Earth, indicating that substantial warming may be taking place below the upper 700 m." Also from the Figure's caption: "The differences between the black and blue plots after 2003 suggest that there has been significant warming below 700 m, and that rates of warming have slowed in recent years" -
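The two numbers in the Trenberth quote (0.77 W m−2 for the global ocean, 0.54 W m−2 for the entire Earth) appear to differ only by the area they are averaged over. A rough sketch of that conversion follows, assuming an ocean fraction of about 0.70; that fraction is an assumption made here for illustration, and the quote does not say which value was used.

```python
# Assumed share of Earth's surface covered by ocean (illustrative value;
# the exact fraction behind the quoted figures is not given in the quote).
OCEAN_FRACTION = 0.70

def ocean_flux_to_global(flux_ocean_w_m2, ocean_fraction=OCEAN_FRACTION):
    """Re-express a flux averaged over the ocean as a whole-Earth average."""
    return flux_ocean_w_m2 * ocean_fraction

global_flux = ocean_flux_to_global(0.77)
print(f"{global_flux:.2f} W/m^2 averaged over the entire Earth")
```

Under that assumption the result rounds to the 0.54 W m−2 given in the quote.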

Charlie A at 00:25 AM on 18 May 2011

Is the CRU the 'principal source' of climate change projections?

Another small question about the graph you posted. It is labeled "Smoothed (simple 5-year averages)", but it is unlike any such graph that I can calculate. How did you manage to plot a simple 5 year average for 2011? Normally, when I plot a "simple 5-year average" I plot a 5 year moving average for every year (with the last 2 years not being plotted). When I do the simple moving average plot, there is a very obvious flattening in 2003. That doesn't appear in your graph, hence my question as to how you calculated it. If I use the IPCC recommended 5 year gaussian filter, the flattening is even more pronounced.

Response: [DB] Apologies for not being more clear. Being tired (and no doubt lazy) at that moment, I wrote the descriptive verbiage from memory. The 5-year graphic is from this post by Tamino. He clarifies the graphics here:

Tom Curtis

-

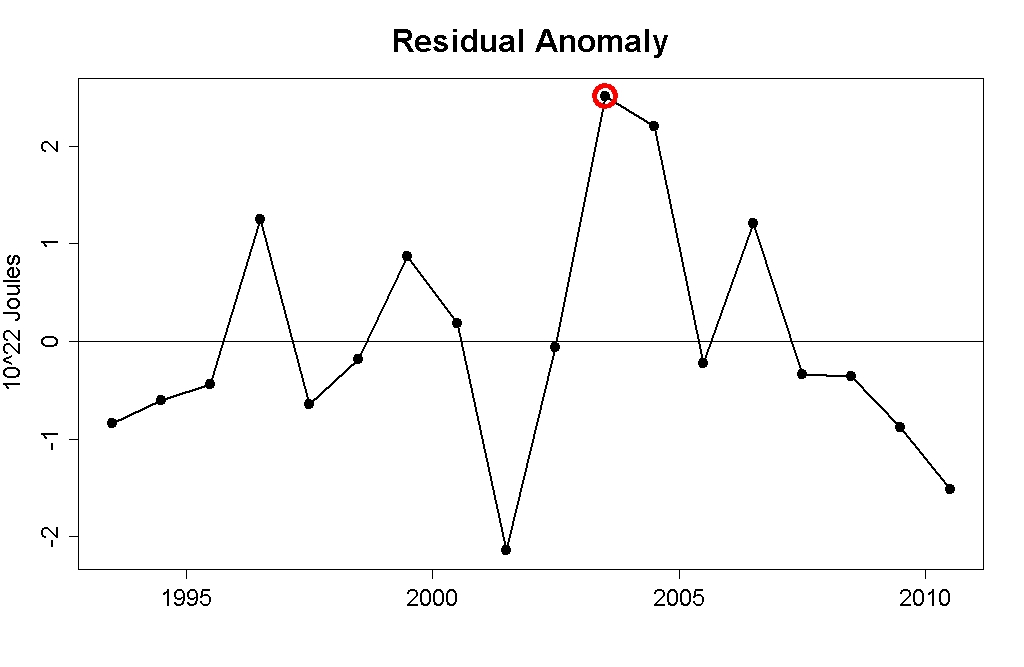

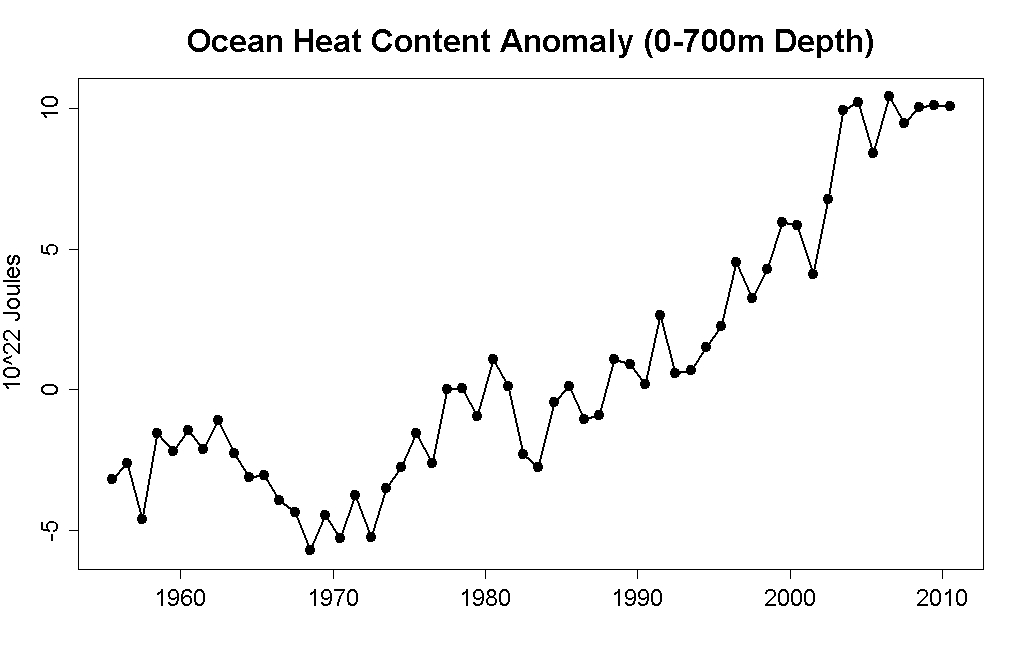

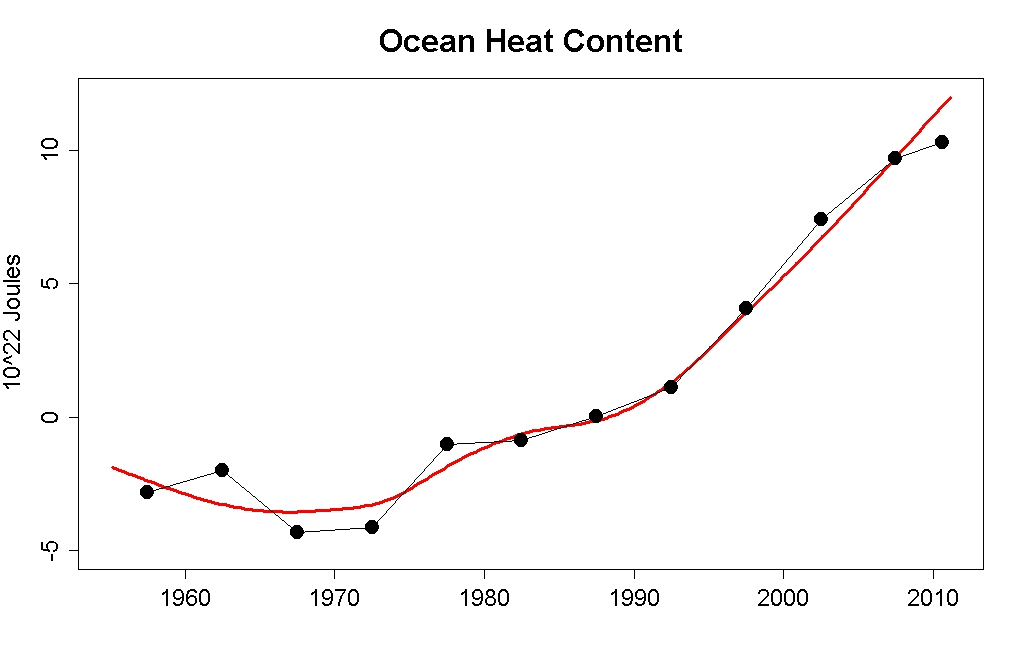
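Charlie A's description of a "simple 5-year average" as a centered moving average, where the first and last two years cannot be plotted, can be made concrete. The sketch below uses made-up annual values rather than real OHC data, and the function name is hypothetical; it is not the method used for any graph in this thread.

```python
def centered_moving_average(values, window=5):
    """Centered moving average; positions lacking a full window are None."""
    if window < 1 or window % 2 == 0:
        raise ValueError("window must be a positive odd integer")
    half = window // 2
    smoothed = [None] * len(values)
    for i in range(half, len(values) - half):
        smoothed[i] = sum(values[i - half:i + half + 1]) / window
    return smoothed

# Made-up annual values for 2003-2011 (not real ocean heat content data).
years = list(range(2003, 2012))
series = [1.0, 2.0, 3.0, 4.0, 5.0, 6.0, 7.0, 8.0, 9.0]
smoothed = centered_moving_average(series)

for year, value in zip(years, smoothed):
    print(year, value)
```

With a 5-year window, 2010 and 2011 have no value, which is the basis of the question about how a 2011 point could appear on a simple 5-year average plot.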

Charlie A at 00:11 AM on 18 May 2011

Is the CRU the 'principal source' of climate change projections?

DB inline comments: "Tamino shows clearly the nature of the "Cherry-pick" that is 2003". and "Smoothed (simple 5-year averages), one gets this:" When Roger Pielke started posting the OHC graphs, 2004, not 2003, was the highest point. At that time, several years ago, he explained his choice of 2003 as being the point where the Argo data dominated the record. It is only in the 2010 revisions that 2003 became the highest point. Splicing together datasets of different types of measurements is difficult, and when a newer system has much better coverage and accuracy than an older system it is quite common to do analysis starting from the introduction of the new system. Some common examples: Sea Ice measurements from beginning of satellite coverage, ignoring earlier surface based observations. GRACE mass loss measurements. Lower tropospheric temperatures from satellites vs earlier radiosonde measurements. In post #12 Ken Lambert refers to an article on XBT-Argo transition. A key element of that explanation is Schuckmann 2009, "Global hydrographic variability patterns during 2003–2008" Was Schuckmann cherry picking when he started his analysis in 2003? Much more likely is that he chose 2003 because that is when the reliable, comprehensive dataset started. Regarding the 5 year smoothed plot: The beauty of OHC is that one does not need to average over a long period. The change in ocean heat content over a 3 month period is a direct integration of the radiative imbalance over that 3 month period. It reflects variations (over that 3 month period) of such things as the average albedo due to variations in cloud cover or aerosols. -
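The integration Charlie A refers to can be sketched numerically: a steady imbalance F (in W/m²) acting over the Earth's surface area A for a time Δt accumulates energy F × A × Δt. The example below is an idealization that assumes all of the imbalance ends up as ocean heat; the 0.5 W/m² value, the rounded surface area and the function names are illustrative assumptions, not measurements.

```python
EARTH_SURFACE_AREA_M2 = 5.1e14          # approximate, rounded
SECONDS_PER_YEAR = 365.25 * 24 * 3600

def energy_from_imbalance_j(flux_w_m2, months):
    """Energy (J) accumulated by a constant global imbalance over `months`."""
    seconds = SECONDS_PER_YEAR * months / 12
    return flux_w_m2 * EARTH_SURFACE_AREA_M2 * seconds

def imbalance_from_energy_w_m2(energy_j, months):
    """Inverse: mean global imbalance implied by an energy change (J)."""
    seconds = SECONDS_PER_YEAR * months / 12
    return energy_j / (EARTH_SURFACE_AREA_M2 * seconds)

# Illustrative case: 0.5 W/m^2 sustained for 3 months.
energy = energy_from_imbalance_j(0.5, 3)
print(f"{energy:.2e} J")
```

For these inputs the accumulated energy is on the order of 2 × 10^21 J, which gives a sense of the precision a quarterly OHC estimate would need in order to resolve changes in the imbalance.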

chris at 23:48 PM on 17 May 2011

Lindzen Illusion #5: Internal Variability

Ken Lambert at 22:08 PM on 17 May, 2011 That’s poor, Ken. You’ve done a little bait and switch. The point is that your mate D.H. Douglass butchered an attempt at statistical analysis of comparison of model and empirical data to attempt to draw a false conclusion about the reliability of the models (they wanted to pretend that the empirical data was incompatible with the range of model variability). The laughable nature of their analysis is described in a lecture by Dr. Santer which is worth an hour of anyone’s time – very enlightening and instructive indeed (if you only want the relevant bit, start at around 30 mins in). I can see why you love D/K’s style of “research” since it’s a little similar to your researches; i.e. look for areas of uncertainties in sub-disciplines of a subject, assume that the most extreme end of the uncertainty that matches your preconceived idea is the true description of the reality bounded by the variance of the uncertainty (e.g. by putting your faith in D/K's "outputs")…..and then pretend that that calls into question all levels of greater certainty above. As your bait-n-switch quote shows, whereas Douglass was determined that the uncertainty could be repackaged to assert that models were incompatible with empirical data on a rather small element of atmospheric response to radiative forcing (strength of a hotspot), Santer et al are rather more relaxed and honest about the nature of uncertainty. This nice paragraph of yours is apposite: ” The geothermal effect should be easily eliminated from measurements. A negative 0.1W/sq.m TOA imbalance at equilibrium is required to eliminate this effect, otherwise the biosphere would gain energy indefinitely.” I wasn’t going to be too hard on Douglass/Knox but since you brought that up (and them up!), it’s worth mentioning another analysis that D/K butchered. They proposed that the warming in Iceland between 1979-1996 is due to the geothermal effect that you mention. H. Bjornsson, T. Jonsson, and T. Johannesson from the Icelandic Meteorological Office, in their comment linked under “butchered” just above, showed that the map of volcanic activity (where geothermal effects should dominate) is incompatible with the expectation from geothermal contributions to surface temperatures, that there is no evidence for an enhancement of the geothermal flux during the period of interest, and that the geothermal flux is anyway approaching 3 orders of magnitude too low. D/K are obviously having great fun with their very late second careers as climate contrarians; but your reliance on them for info on climate-related matters is misplaced… [P.S. Ben Santer has just been elected to the National Academy of Sciences for his outstanding work on climate science - good work, yes?!] -

Bob Lacatena at 23:24 PM on 17 May 2011

Lindzen Illusion #2: Lindzen vs. Hansen - the Sleek Veneer of the 1980s

I'd like to suggest to any casual readers that they look at angusmac's posts, and those in response, and note how he quite simply refuses to see the point of the entire argument. He is so intent on proving a point that he desperately wants to be true that he is ignoring the simple and obvious... even after it has been explained to him over and over again. This is an important behavior to watch for on these threads, the habit of some to make their case by mere repetition, often through the inclusion of as many numbers and graphs and equations as possible, while simply and blatantly ignoring even the most basic and irrefutable arguments that knock the supports right out from under their case. Merely repeating a flawed argument over and over, with more and more intricate (if invalid) numbers, does not make it any more true, or convincing, and forcing others to repeat the same salient point over and over does not make that point any less true. Be wary. -

Berényi Péter at 23:21 PM on 17 May 2011

Lindzen Illusion #5: Internal Variability

#106 Ken Lambert at 22:08 PM on 17 May, 2011 This whole ocean heating story is fascinating. We have an open bathtub holding vast amounts of water which is heated from above, the sides and below. Above from radiation and air transfer, the sides from ice shelf effects where they occur, and below from geothermal (0.1W/sq.m globally). Fascinating, indeed. What you are not told often, Ken, is that the deep oceanic circulation (aka thermohaline circulation) is not a heat engine, that is, the mechanical energy needed to push huge water masses around is not derived from entropy (that is, temperature and/or salinity) differences inside the hydrosphere. A heat reservoir heated from above in a gravitational field simply can't do that. Downwelling of high salinity cold water in subpolar regions could not go on forever, because sooner or later the abyss would get saturated, deep water would be as cold and salty as possible, so no more could go under in any way. There should be other processes operating elsewhere that pump heat down and remove salts from deep layers, to make room for more. Geothermal heating plays a small part in it (it warms water a bit at the proper level), as short wave solar radiation (light) also does when it penetrates into the water to a depth of several hundred meters. But the main player is turbulent mixing, driven by mechanical energy like wind and deep tidal breaking. Most of it is concentrated in small areas along coastlines and mid ocean ridges, where bottom topography is sufficiently rugged. BTW, about 80% of deep turbulent mixing happens in the Southern Ocean, so the true driver of ocean currents is located there. Thermohaline downwelling itself does not transfer any heat into the abyss, it removes heat from there. -

JMurphy at 22:43 PM on 17 May 2011

National Academy of Sciences on Climate Risk Management

I hope someone will soon be reviewing another paper (Fall et al), which not only confirms the temperature trend (in the US), despite the belief of certain individuals; but also shows that peer-review works (as long as the paper is eventually accepted and published, of course) and that so-called skeptics are not prevented from publishing such work by an imaginary conspiracy. One paper, three proofs - what more could anyone want?

Response: [DB] A review is planned.

-

Ken Lambert at 22:40 PM on 17 May 2011

Is the CRU the 'principal source' of climate change projections?

Charlie A #11 Have a look here for an explanation of the XBT-Argo transition: http://www.skepticalscience.com/news.php?p=2&t=78&&n=202 -

Ken Lambert at 22:36 PM on 17 May 2011

Lindzen Illusion #7: The Anti-Galileo

chris #113 How about we agree to disagree about 0.1 +/-0.1W/sq.m -

Bern at 22:26 PM on 17 May 2011

Special Parliament Edition of Climate Change Denial

[DB] response to #52: no, it's not like scientists are speaking in a different language, no matter how Tolkienesque. It's like the scientists write a journal article saying "It would appear that the sky is predominantly blue in colour, except at dawn & dusk when preferential scattering and refraction may produce a pinkish hue at times", whereupon the skeptics immediately start jumping up and down and screaming to their media contacts "Those scientists are saying the sky is pink! Everyone knows it's blue! It's never pink! They're corrupt liars, don't believe a word they say!" The press, of course, immediately write their headlines along the lines of "Breaking News: Scientists Say Sky is Pink!", followed by opinion columns by prominent skeptics about how the sky is blue, has always been blue, what are those scientists talking about, they obviously don't know anything about atmospheric physics, they must be either incompetent or conspiring to defraud the public, etc. The problem, of course, is deliberate misconstruing of scientific statements by deniers, aided by 'reporting' by the press that consists of little more than writing about or even just reprinting press releases from various thinktanks. If a little more effort went into understanding what the scientists were saying, and reporting it accurately, then I think the message would get out quite easily. -

Ken Lambert at 22:08 PM on 17 May 2011

Lindzen Illusion #5: Internal Variability

Chris #103 "He butchered an analysis of comparison of model and empirical tropical tropospheric temperature." Here is the conclusion from the butcher busters (Schmidt, Santer, Wigley et al) "We may never completely reconcile the divergent observational estimates of temperature changes in the tropical troposphere. We lack the unimpeachable observational records necessary for this task. The large structural uncertainties in observations hamper our ability to determine how well models simulate the tropospheric temperature changes that actually occurred over the satellite era. A truly definitive answer to this question may be difficult to obtain. Nevertheless, if structural uncertainties in observations and models are fully accounted for, a partial resolution of the long-standing ‘differential warming’ problem has now been achieved. The lessons learned from studying this problem can and should be applied towards the improvement of existing climate monitoring systems, so that future model evaluation studies are less sensitive to observational ambiguity." Seems like a pretty equivocal conclusion to me. "Has there been very little ocean heat uptake into the upper 700m of the ocean in recent years? Maybe, maybe not. When we're confident about that we can ask the questions of whether the radiative imbalance has reduced quite a bit for a time or not, and why or why not. You can stick for D/K-style show-and-tell; I'm plumping for the science "in the round"!" In other words - you don't know until further data is obtained. The upshot is that if the radiative imbalance has reduced quite a bit, then cooling forcings are in play which need to be identified, OR the warming forcings are not according to the theory. Hansen has a go with extra Aerosols, and the unbelievable 'delayed Pinatubo bounce decay effect'. This whole ocean heating story is fascinating. We have an open bathtub holding vast amounts of water which is heated from above, the sides and below. Above from radiation and air transfer, the sides from ice shelf effects where they occur, and below from geothermal (0.1W/sq.m globally). The geothermal effect should be easily eliminated from measurements. A negative 0.1W/sq.m TOA imbalance at equilibrium is required to eliminate this effect, otherwise the biosphere would gain energy indefinitely. The top and sides heating need plausible mechanisms for getting heat in and out, down from the top layers to the depths and into the abyss. -

JMurphy at 21:57 PM on 17 May 2011

Lindzen Illusion #5: Internal Variability

Ken Lambert wrote : "RE: Dyson: He definitely does not subscribe to AGW - 'alarmist global warming'" I wish you so-called skeptics would try and agree on your terminology or at least (à la Poptech) stop using different versions of terms whenever you feel like it - and without even bothering to tell anyone ! And do you have your own version of "alarmist" too ? If so, please explain what it means. -

Arkadiusz Semczyszak at 21:43 PM on 17 May 2011

Drought in the Amazon: A death spiral? (part 1:seasons)

Solomon is essentially a professional misinformer. Maybe ... Sometimes he is too biased - use "cherry picking". Only that this case shows that - here - he's right. Like Idso who cites many articles of the late twentieth century, for example: “In the relatively short-term study of Lovelock et al. (1999a), for example, seedlings of the tropical tree Copaifera aromatica that were grown for two months at an atmospheric CO2 concentration of 860 ppm exhibited photosynthetic rates that were consistently 50-100% greater than those displayed by control seedlings fumigated with air containing 390 ppm CO2.” Co2 favors the development of tropical forests and these buffering - the hydrological cycle - is less periods of extreme drought. And what says - cited by Solomon - Phillips et al., 2008., in: The changing Amazon forest : “Because growth on average exceeded mortality, intact Amazonian forests have been a carbon sink. In the late twentieth century, biomass of trees of more than 10 cm diameter increased by 0.62 +/- 0.23 t C ha-1 yr-1 averaged across the basin. This implies a carbon sink in Neotropical old-growth forest of at least 0.49 +/- 18 Pg C yr-1. If other biomass and necromass components are also increased proportionally, then the old-growth forest sink here has been 0.79 +/- 29 Pg C yr-1, even before allowing for any gains in soil carbon stocks. This is approximately equal to the carbon emissions to the atmosphere by Amazon deforestation.” And what speaks - on this topic - Greenpeace?: “ Rafael Cruz, a Greenpeace activist in Manaus who has been monitoring the drought, said that while the rise and fall of the Amazon's rivers was a normal process, recent years had seen both extreme droughts and flooding become worryingly frequent. Although it was too early to directly link the droughts to global warming, Cruz said such events were an alert about what could happen if action was not taken.” Of course it is. 
Historically, droughts have been even greater, and the population of Amazonia smaller. So we must, firstly, increase the capacity for water retention in periods of drought - of course ... It is always useful. Did the Amazon drought significantly affect photosynthesis?: “ We found no big differences in the greenness level [!] of these forests between drought and non-drought years, which suggests that these forests may be more tolerant of droughts than we previously thought,” said Arindam Samanta, the study's lead author from Boston University. The comprehensive study published in the current issue of the scientific journal Geophysical Research Letters used the latest version of the NASA MODIS satellite data to measure the greenness of these vast pristine forests over the past decade. A study published in the journal Science in 2007 claimed that these forests actually thrive from drought because of more sunshine under cloud-less skies typical of drought conditions. The new study found that those results were flawed and not reproducible. “This new study brings some clarity to our muddled understanding of how these forests, with their rich source of biodiversity, would fare in the future in the face of twin pressures from logging and changing climate,” said Boston University Prof. Ranga Myneni, senior author of the new study.” This paper speaks of a significant mistake in catastrophic forecasts (the resistance of global ecosystems to extreme phenomena was found to be greater, with a significant reduction of "tipping points"): The ecological role of climate extremes: current understanding and future prospects, Smith, 2011.: “For example, above- and below-ground productivity remained unchanged across all years of the study ...” Meanwhile, in The 2010 Amazon Drought, Lewis et al., 2011, they are frightening us ... Lewis also threatens us with fire. But here (SH - Southern Hemisphere) something quite different has recently been seen - a surprising ... 
Large Variations in Southern Hemisphere Biomass Burning During the Last 650 Years, Wang et al. 2010.: “ These observations and isotope mass balance model results imply that large variations in the degree of biomass burning in the Southern Hemisphere occurred during the last 650 years, with a decrease by about 50% in the 1600s, an increase of about 100% by the late 1800s, and another decrease by about 70% from the late 1800s to present day.” And so it looks like ... I also recommend the comments (not the same "very incomplete" text post) - here - to this post. -

Ken Lambert at 21:42 PM on 17 May 2011

Lindzen Illusion #5: Internal Variability

Albatross #102 RE: Dyson: He definitely does not subscribe to AGW - 'alarmist global warming'. "And, you seem to be blissfully unaware of the huge difference in measuring OHC in the top 700 m versus the top 1500-2000 m. Please stop confusing the two." Well you can tell us all what is happening between 700m and 2000m. Peer reviewed Argo analysis please. "What we have is data that says that maybe [warming] occurs, but it's within the noise....The point we have to keep in mind is that without any of this at all our climate would wander--at least within limits." Lindzen maybe, maybe not - right. It depends on how widely the limits of natural variability are drawn. -

CBDunkerson at 21:38 PM on 17 May 2011Increasing CO2 has little to no effect

This is one of the most bizarre things about the AGW 'skeptic' movement. After Arrhenius first suggested the possibility of AGW in 1896 real skeptics countered with arguments like 'the CO2 effect is saturated' and 'oceans would absorb all the extra CO2'... which based on the limited knowledge of the time were compelling enough that the vast majority of scientists rejected AGW. It was only after these objections were disproved by other advancements, around the 1960s, that science started looking at the possibility of AGW again... and found that it was already underway. Yet here we are half a century later and the modern 'skeptics' are recycling these ancient arguments as if they were new and valid... rather than long since proven false. -

Dikran Marsupial at 20:43 PM on 17 May 2011Increasing CO2 has little to no effect

trunkmonkey@40 The upper atmosphere, where the Earth's energy budget is decided, is very cold and hence very dry. There isn't a great deal of water vapour there to absorb the photons. There is a good overview of most of this from Spencer Weart and Ray Pierrehumbert at Realclimate here. The summary is here, emphasis mine: So, if a skeptical friend hits you with the "saturation argument" against global warming, here’s all you need to say: (a) You’d still get an increase in greenhouse warming even if the atmosphere were saturated, because it’s the absorption in the thin upper atmosphere (which is unsaturated) that counts (b) It’s not even true that the atmosphere is actually saturated with respect to absorption by CO2, (c) Water vapor doesn’t overwhelm the effects of CO2 because there’s little water vapor in the high, cold regions from which infrared escapes, and at the low pressures there water vapor absorption is like a leaky sieve, which would let a lot more radiation through were it not for CO2, and (d) These issues were satisfactorily addressed by physicists 50 years ago, and the necessary physics is included in all climate models. There is also a follow-up post here where Ray Pierrehumbert goes into the physics in more detail. Hopefully those two posts answer your questions; in short, physics has been able to solve this problem for about sixty years. -

JMurphy at 20:29 PM on 17 May 2011Special Parliament Edition of Climate Change Denial

Response: [DB] You mean like this? To make one last very off-topic comment : Yes, a bit like that but more like this last line from a classic Carry On film - if you like that sort of thing (which I do). -

Stephen Baines at 14:39 PM on 17 May 2011Lindzen Illusion #2: Lindzen vs. Hansen - the Sleek Veneer of the 1980s

Angusmac: Pretty elaborate table. You could summarize it in two sentences though. 1. Hansen's early model had too high a sensitivity. 2. Scenario B ended up with the forcing that best matched reality out of the three. Why not just say that? Response: [dana1981] Because he's trying to exaggerate how far off Hansen was by using this "business as usual" quote, even though it's been explained to him several times that Hansen is not in the business of predicting future CO2 changes.

-

scaddenp at 14:38 PM on 17 May 2011Increasing CO2 has little to no effect

Numerous articles about this, but try Schmidt et al, 2010 for a serious crack at it. -

trunkmonkey at 14:23 PM on 17 May 2011Increasing CO2 has little to no effect

The absorption bands of CO2 and water vapor overlap, making it difficult to parse out the purely absorptive greenhouse contribution of incremental CO2. How are we so sure water vapor would not absorb the photons if CO2 were removed, and for that matter, sure water vapor is not absorbing the photons INSTEAD of CO2? I realize both signatures are visible from space, but it seems the parsing problem should apply here as well. -

adelady at 14:16 PM on 17 May 2011National Academy of Sciences on Climate Risk Management

Bern, you're right. I think the biggest use of the book among politicians will be quite specific. Those who are interested _will_ read it. They know better than any of us which particular politician has which particular misconception(s). They'll be able to say "Hey, you don't need to read the whole thing. Just have a look at page/ chapter/ paragraph ... and tell me what you think." Very useful. And when the yesbuts come in reply, the references to the real science will be easily accessible. (I wouldn't mind betting a few of them get the phone apps to help out.) -

Charlie A at 14:06 PM on 17 May 2011Is the CRU the 'principal source' of climate change projections?

2003 is the first year that we had truly global coverage of ocean heat content measurement. The Argo network was started in the early 2000s and expanded dramatically in 2003. If you look at the ocean heat content plots, you will see a very large discontinuity as the main measurement method changed over from XBTs to Argo floats. The recent adjustment of data reduced this non-physical step change, but it is still quite evident. Response: [DB] Tamino shows clearly the nature of the "Cherry-pick" that is 2003:

Looking at the totality of the data:

Smoothed (5-year averages), one gets this:

-

Albatross at 13:57 PM on 17 May 2011Hockey stick is broken

All, Bud seems to be cherry-picking papers from the NIPCC's "Prudent Path" misinformation document. If so, he can keep at this for some time.... Also, in the face of evidence to the contrary of his beliefs the "skeptic" just keeps forging ahead, mostly ignoring the inconvenient evidence. At this point one has to wonder whether the person is a "skeptic" or someone in denial about AGW. There are more Hockey Sticks out there than can be used by an NHL team, some generated using independent data not used in the original HS graph. e @85, good catch! Response: [dana1981] I suggest we follow Daniel's sage advice. DNFTT. Until Bud can address the fact that the lone hemispheric reconstruction he has referenced is flawed and outdated, there's little point in feeding him further.

-

angusmac at 13:57 PM on 17 May 2011Lindzen Illusion #2: Lindzen vs. Hansen - the Sleek Veneer of the 1980s

Dana, to summarise our discussion, I enclose the timeline and narrative showing the reduction in the estimate for the 2019 temperature anomaly from Hansen's initial estimate of 1.57°C in 1988 to your estimate of 0.69°C in 2011. Links: Hansen (1988a), Hansen (1988b), Hansen (2005), Hansen (2006), Schmidt (2007) & Dana (2011)

-

Bern at 13:42 PM on 17 May 2011Is the CRU the 'principal source' of climate change projections?

Charlie A: why pick 2003 as the start date for your comparison? Is it because 2003 was an abnormally high data point for OHC, perhaps? Suggest you look at the link in my post #7. And the manuscript that goes along with the chart of OHC, on the NOAA page you linked? It says this: "Here we update these estimates for the upper 700 m of the world ocean (OHC700) with additional historical and modern data [Levitus et al., 2005b; Boyer et al., 2006] including Argo profiling float data that have been corrected for systematic errors." Note: the upper 700m of the world ocean - that's the top 20% or so. There's another 2,500 metres of water below that, and recent work suggests there's a lot more deep mixing going on than previously thought. Actually, a quick search reveals this nice SkS article about the energy balance problem. You should read that, and comment there, as this is getting seriously off-topic. -

Charlie A at 13:42 PM on 17 May 2011Is the CRU the 'principal source' of climate change projections?

Just to clarify something in the above post .... The numbers 0.7 x 10^22 joules expected versus 0.08 x 10^22 joules observed are the annual increases in OHC. (These are sometimes expressed in zettajoules or 10^21 joules as 7 zettajoules/year expected vs. 0.8 zettajoules/year observed.) As these are the annual changes, the zero points and the intercepts are not relevant. The change in the earth's heat content each year is a direct measure of the average radiative imbalance over that year. All the different ways of adjusting intercept points are not relevant to the annual radiative imbalance. If you prefer to use units related back to the watts/meter-squared forcings, the conversion is 1 x 10^22 joules/year (or 10 zettajoules/year) of heat content increase results from a forcing of 0.62 watts/meter-squared over the entire globe. -
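
As a rough check on the conversion quoted above, the arithmetic can be sketched in Python. This is only an illustration: the Earth surface area (about 5.1 x 10^14 m^2) and the length of a year (about 3.156 x 10^7 s) are assumed values that the comment does not state, and the function names are hypothetical.

```python
# Assumed constants (not given in the comment above).
EARTH_SURFACE_AREA_M2 = 5.1e14           # approximate total surface area of the Earth
SECONDS_PER_YEAR = 365.25 * 24 * 3600    # about 3.156e7 seconds


def joules_per_year_to_w_per_m2(joules_per_year):
    """Convert an annual heat-content change (J/yr) to a global-mean flux (W/m^2)."""
    return joules_per_year / (EARTH_SURFACE_AREA_M2 * SECONDS_PER_YEAR)


def w_per_m2_to_joules_per_year(flux_w_per_m2):
    """Inverse conversion: global-mean flux (W/m^2) to annual heat-content change (J/yr)."""
    return flux_w_per_m2 * EARTH_SURFACE_AREA_M2 * SECONDS_PER_YEAR


if __name__ == "__main__":
    # Figures taken from the comment: the reference conversion, the expected
    # annual OHC increase, and the observed annual OHC increase.
    cases = [("reference", 1.0e22), ("expected", 0.7e22), ("observed", 0.08e22)]
    for label, joules in cases:
        flux = joules_per_year_to_w_per_m2(joules)
        print(f"{label}: {joules:.2e} J/yr = {flux:.2f} W/m^2")
```

With these assumed constants, 1 x 10^22 joules/year works out to roughly 0.62 watts/meter-squared, in line with the figure given in the comment.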

Charlie A at 13:12 PM on 17 May 2011Is the CRU the 'principal source' of climate change projections?

There is a radiative imbalance between the total energy going into the earth system vs. the total energy leaving the earth system. The forcings from CO2 and various other things produce a net incoming radiation (mostly shortwave) that is greater than the outgoing radiation (mostly longwave). That results in an increase in the heat content of the earth. About 95% of that increase in the earth's heat content appears in the upper 700 meters of the ocean. We have had fairly accurate measurement of this ocean heat content (OHC) since 2003 with the widespread deployment of the ARGO network. The expected radiative imbalance is around 0.7 * 10^22 joules. (See the SLOPE of the extrapolated line in comment 5, above). The observed rise in OHC since 2003 is about 0.08 * 10^22 joules. That is the missing energy that Trenberth referred to. OHC is a useful metric in that a snapshot of the delta of the OHC over a 3 month or annual period shows the net radiative imbalance of the earth over that period. No further adjustments needed. NOAA OHC page: -

Daniel Bailey at 12:28 PM on 17 May 2011Hockey stick is broken

To piggyback on the prevailing sentiment, regionalized warming/cooling is just that: regional. For your examples given, the warming experienced regionally during the MWP was just that: regional periods of warming interspersed with bouts of regional cooling. So for every "dog" study showing a certain region was "warm" at a certain time period therein, another "pony" study showing cooling during the period can be rolled out. As an example, Martín-Chivelet et al 2011 showed that the 20th century was the time with highest surface temperatures in Northern Spain in the last 4000 years (more robust discussion here), which includes the MWP. Whoopee. But the difference between the warming/cooling of the past and the warming experienced in the last century and this one is two-fold: 1. This warming is truly global. 2. It is driven largely (especially since 1975 or so) by us with our GHG emissions. Please take the time to read the literally hundreds of posts at this site going over this in exquisite detail. Whichever sources of information you've been learning from so far have done you a disservice. -

Jerry at 12:15 PM on 17 May 2011Special Parliament Edition of Climate Change Denial

Not a Bud comment. In response to scientific certainty you say: That humans are very likely (>90%) responsible for most of the temperature rise post-1970 due to fossil-fuel GHG emissions (primarily CO2). I recognize the need for scientific accuracy, but shouldn't it really be >99%, or even >99.99%? Someone could easily take >90% to mean there is a 1 in 10 chance--not horrible odds--when the reality seems more like 1 in a million. Jerry Response: [DB] You speak to the issue of scientific reticence (the over-arching need to "be right") vs colloquially-used language in the real world. In my response earlier, I phrased the expressions of certainty as used by both the National Academies of Sciences and the IPCC.

In the terms you reference, in the common tongue, you may well be correct. After all, unless the physics of human-produced GHGs differ entirely from those of GHG of natural origin (in worlds populated with Iris effects, cloud-causing ENSO and low-flying bacon), what other surmise can be drawn?

In order to effectively communicate with the outside world, scientists must learn to speak comfortably in the common tongue. As things now stand, scientists may as well be speaking in the high tongue of the Noldor.

-

Bern at 11:22 AM on 17 May 2011National Academy of Sciences on Climate Risk Management

Marcus: yes, hypocrisy and politicians seem to often go hand-in-hand. However, I think that we should be careful not to tar all politicians with the same brush, when many are acting without a full appreciation of the situation. Hopefully, though, John's book, and publications like this NAS report, will open the eyes of the more honest amongst the political ranks, who still endeavour to do what they think is best for their electorate. All it takes are a few prominent 'conversions' to the science, and some media coverage, for the average joe sixpack to think "hey, there might be something in this global warming thing after all...". As an example - I was talking to my mother recently, and she was surprised to hear that there was some substance to global warming - she thought it was just another non-issue the politicians were getting upset about. So there is a lot of ignorance (in the 'lack of knowledge & understanding' sense of the word) amongst the electorate. That's what we need to work to correct. I gave a presentation at my work a few weeks ago on global warming, and the response was along the lines of "Wow, I didn't know that!"... -

Marcus at 11:12 AM on 17 May 2011National Academy of Sciences on Climate Risk Management

@ Bern. Yes, thank you again for exposing the Rank Hypocrisy of Tony Abbott. Unless I lived as a total hermit, there is no way that I can avoid the full impact of a GST in virtually *every* facet of my life. By contrast, my use of public transport & green electricity means that my exposure to a carbon tax will be incredibly minimal-& can be reduced further still via some very sensible actions on my part. It's also interesting how Abbott is demanding that Gillard seek a mandate for the Carbon Tax, because she failed to announce it before the last election-yet again he was instrumental in putting together Work Choices, a policy which was brought in *without* a mandate from the electorate! His hypocrisy knows no bounds! -

Bern at 10:43 AM on 17 May 2011National Academy of Sciences on Climate Risk Management

"impose massive costs without meaningful benefits." Australian readers might be more familiar with this phrased in slightly different language: "It's a great big new tax on everything!" Which is particularly ironic, given that "it" (being a carbon price) only taxes carbon emissions, and that the promulgator of the above soundbite was instrumental in imposing the only "great big new tax on everything" (i.e. the GST) that Australia has seen for the last few decades... -

sailrick at 10:24 AM on 17 May 2011Drought in the Amazon: A death spiral? (part 1:seasons)

On the California coast, La Nina brought us a wet winter and huge snowpack in the Sierra mountains this year. Not a typical La Nina year here; those are usually drier than normal. Last year, we saw a fairly typical El Nino winter, with plenty of rain, but an unusually cool summer followed. Any ideas as to why?
