Recent Comments


Comments 23201 to 23250:

-

One Planet Only Forever at 04:08 AM on 6 September 2016
Range anxiety? Today's electric cars can cover vast majority of daily U.S. driving needs

Alongside the range of electric vehicles there is a more important need: electricity generation has to be cleaner than burning natural gas, and plug-in infrastructure has to be built as a public utility (rather than expecting a system motivated by popularity and profitability to rapidly deliver the required result).

Electric cars do make sense as long as the electricity generation to power the vehicle produces less CO2 than the burning of natural gas to generate electricity.

An EIA presentation indicates natural gas generation produces about 0.55 kg of CO2 per kWh, but the total amount of CO2 would be higher due to electrical delivery losses and CO2 or equivalents, like fugitive methane emissions, generated in the production and delivery of the natural gas.

I chose to buy a hybrid because I live in Alberta. In Alberta in 2015 more than 50% of the electricity generation was coal fired (Alberta Government Report for 2015).

Electric vehicle efficiency ranges from 20 to 25 kWh/100 km. If the generation was from natural gas, that would be a minimum of 11 to 14 kg CO2/100 km (higher when other CO2 impacts are added). Alberta's average would be poorer than that. At 50% coal (0.95 kg CO2 / kWh) and 50% natural gas the result would be a minimum of approximately 0.75 kg of CO2 / kWh. That means a minimum of 15 to 19 kg of CO2 / 100 km (actual amount higher due to distribution losses and other considerations).

Burning gasoline generates 2.3 kg CO2 per litre. With a 40% bump of emissions for extraction, refining and transportation of the fuel (what seems to be a reasonable value based on many different values provided by many different sources) there would be 3.3 kg of CO2 per litre. And my hybrid is running 4.7 L/100 km combined city and highway use (in the city I am getting close to 4.2 L/100 km). So my hybrid use generates a total of 15.5 kg CO2/100 km (only 14 kg/100 km in the city).
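The arithmetic in the last two paragraphs can be checked with a short sketch. It uses only the figures quoted above (0.55 and 0.95 kg CO2/kWh, 20 to 25 kWh/100 km, 2.3 kg CO2 per litre, a 40% upstream bump); the function names are made up for illustration, and the grid is assumed to be coal and gas only. Note that 2.3 × 1.4 is about 3.22 kg per litre, so the unrounded hybrid figure comes out slightly below the 15.5 kg quoted from the rounded 3.3 kg per litre.

```python
# Emission factors quoted in the comment above (kg CO2 per kWh generated).
GAS_KG_PER_KWH = 0.55
COAL_KG_PER_KWH = 0.95

# Gasoline figures from the comment: tailpipe CO2 and the assumed upstream bump.
PETROL_KG_PER_LITRE = 2.3
UPSTREAM_BUMP = 0.40


def grid_intensity(coal_share):
    """kg CO2/kWh for a grid that is coal_share coal and the rest natural gas."""
    return coal_share * COAL_KG_PER_KWH + (1.0 - coal_share) * GAS_KG_PER_KWH


def ev_kg_per_100km(kwh_per_100km, kg_per_kwh):
    """Generation-only emissions for an electric vehicle (ignores line losses)."""
    return kwh_per_100km * kg_per_kwh


def petrol_kg_per_100km(litres_per_100km):
    """Emissions for a petrol or hybrid car, including the upstream bump."""
    return litres_per_100km * PETROL_KG_PER_LITRE * (1.0 + UPSTREAM_BUMP)


for label, intensity in [("all gas", grid_intensity(0.0)),
                         ("50% coal / 50% gas", grid_intensity(0.5))]:
    low = ev_kg_per_100km(20, intensity)
    high = ev_kg_per_100km(25, intensity)
    print(f"EV on {label}: {low:.1f} to {high:.1f} kg CO2/100 km")

print(f"Hybrid at 4.7 L/100 km: {petrol_kg_per_100km(4.7):.1f} kg CO2/100 km")
print(f"Hybrid at 4.2 L/100 km: {petrol_kg_per_100km(4.2):.1f} kg CO2/100 km")
```

Distribution losses and fugitive methane are deliberately left out, as in the comment, so the electric vehicle numbers are lower bounds.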

Another important consideration I had to make was that Alberta currently lacks decent electric vehicle plug-in locations for long distance travel (or for in-city travel). And in Alberta most day-trips to destinations near the cities of Calgary or Edmonton would be a round-trip that is well beyond 100 miles total distance (many local day-trips would be longer than 200 miles round-trip).

So the focus needs to be on vastly improving the electricity generation in many regions. And vastly improving the infrastructure for plugging in when travelling outside of cities in many regions. That will take leadership that many regions are unlikely to have a clear majority vote to support. That may require external motivation on those regions to "Do Better than their population would prefer to do".

-

chriskoz at 23:45 PM on 5 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

Haze@7,

I may have misunderstood a series of your suggestions posted @3 as thoughtful propositions. In such event, I apologise.

Giving you the benefit of the doubt, the suggestions posted @3 can be read as the questions of a person ignorant of the subject. Which is fine: everyone can be ignorant about certain aspects of reality until they learn the facts. Now that you've learned the facts, and understood how far off the mark the suggestions @3 are, you should not ask such questions ever again, unless you want to be called a pseudoscientist or, more trivially, a denier of reality. Deniers do not accept the evidence but keep recycling old myths.

Your last question:

why pursuing the lowering of CO2 emissions is not going to lead to penury for the workers

can have many elaborate answers. The simplest one is: the biggest penury will affect not "workers" but those who burn the most fossil fuels and who have the most vested interest in the burning. The classic example is a carbon tax and dividend policy. Whoever produces the most CO2 pays big. If dividends are paid back in equal shares to all citizens, those citizens who produce less CO2 than average must receive back more than they paid in carbon tax, ergo they are better off under such a policy.
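A minimal sketch of that tax-and-dividend arithmetic, under stated assumptions: the household emissions and the tax rate below are hypothetical numbers chosen for illustration, and all revenue is assumed to be returned in equal shares with no administrative cost.

```python
def net_position(emissions_tonnes, tax_per_tonne):
    """Return each household's equal dividend minus the carbon tax it paid."""
    paid = [e * tax_per_tonne for e in emissions_tonnes]
    dividend = sum(paid) / len(paid)  # all revenue split equally
    return [dividend - p for p in paid]


# Hypothetical annual emissions (tonnes CO2) for four households.
households = [2.0, 5.0, 8.0, 25.0]
net = net_position(households, tax_per_tonne=30.0)

for tonnes, gain in zip(households, net):
    status = "better off" if gain > 0 else "worse off"
    print(f"{tonnes:5.1f} t CO2: net {gain:+8.2f} ({status})")
```

The average here is 10 tonnes, so the three households below it come out ahead and the one heavy emitter pays for it; the net positions always sum to zero.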

-

Paul W at 20:37 PM on 5 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

Haze @7, the pseudoscience in your post @3 is the simple giving of reality to the popular misconceptions of those poorly educated in climate science, as if cultural dominance (in the MSM pseudoscience is the dominant culture, where frequent articles from climate scientists are dismissed) makes it real.

Making pseudoscience real, as if it holds a rational position that resembles reality, is the standpoint of climate denial. While political popularity is a kind of reality, wanting to give it physical reality outside of political polemics is what makes the claims false.

Given the right-wing populism at work in the English-speaking world, you have to expect a challenge to your views on this site.

Science is about data and not about being kind or accessible to those holding popular ideology that denies the changes happening in almost all habitats on our planet currently.

If your daughter had a diagnosis of gangrene and 97% of doctors agreed, would treating the condition as nothing to be concerned about help make decisions about her wellbeing?

The current government claims to have dropped the carbon tax to save money yet it has led to a crisis in the federal budget worse than what they claimed they wanted to fix. So much politics is about appearances that are simply false. Giving falsehoods reality to be nice and accessible gains you what?

-

RedBaron at 15:42 PM on 5 September 2016
Breathing contributes to CO2 buildup

@ Tom Curtis # 70

You said, "All in all, this means we need an increase in food production per hectare by about a factor of two for current populations (assuming 70% loss of agricultural land to allow for a wild nature), and near three for projected future populations."

I am nearly 100% certain we could do exactly that and very likely more on 1/2 the land currently under agriculture. Probably not England. It is an island. But in North America, most of Asia and Africa? I would bet my bottom dollar we can. And I am not just saying that without evidence either. The current industrialized models of production in agriculture are that inefficient in land use. The models are designed to be efficient in other things, not quantity of food produced sustainably per hectare. In fact in some things like the CAFO business model, it was specifically designed to be inefficient on purpose as a buffer stock scheme. This from Wiki:

Most buffer stock schemes work along the same rough lines: first, two prices are determined, a floor and a ceiling (minimum and maximum price). When the price drops close to the floor price (after a new rich vein of silver is found, for example), the scheme operator (usually government) will start buying up the stock, ensuring that the price does not fall further. Likewise, when the price rises close to the ceiling, the operator depresses the price by selling off its holding. In the meantime, it must either store the commodity or otherwise keep it out of the market (for example, by destroying it)
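The buy/sell rule in that quoted passage can be sketched as a small decision function. This is only an illustration of the general idea: the `margin` parameter, which stands in for "close to" the floor or ceiling, and the example prices are assumptions, not part of any real scheme.

```python
def operator_action(price, floor, ceiling, margin=0.05):
    """Decide what a buffer stock operator does at a given market price.

    'margin' is a hypothetical fraction of the floor-to-ceiling band that
    counts as "close to" either limit.
    """
    band = ceiling - floor
    if price <= floor + margin * band:
        return "buy"   # support the price by taking stock off the market
    if price >= ceiling - margin * band:
        return "sell"  # depress the price by releasing stored stock
    return "hold"


for price in (101, 150, 198):
    print(price, operator_action(price, floor=100, ceiling=200))
```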

The more inefficient the better. Biofuels have the same purpose. The idea is to purposely overproduce grain because, although an inefficient use of land, it can be stored. Then unlike many buffer stock schemes, instead of destroying the surplus, you waste it as inefficiently as possible on livestock or biofuels. The system does work in what it was designed to do. But in no way can you estimate the land needed to feed the world's population based on that type of system. The system was designed for a world where land was seen as practically limitless. Now within that system, production is incredibly efficient. But the system itself was designed to be an inefficient use of land, to fit the buffer stock scheme instead.

The very first thing you could do to approximately double the food produced per hectare is reintegrate animal production back on the crop farmers' land. Then of course they must be properly managed, but there are countless ways to do that without losing any crop yields at all, and sometimes increasing yields. Remember in industrialised countries like the USA over 1/2 the arable cropland goes for producing animal feeds and biofuels. Just by going to a forage based system integrated into the arable cropland you reach that food production goal of "factor of two for current populations" right away. In fact that would probably be too fast. We might have to first switch to forage based regenerative systems, to repair the non-arable grazing land first, and gradually remove livestock as wild populations of animals rebuild their numbers in the newly restored habitat. Those removed domestic animals would be placed gradually into the integrated arable cropland as they are removed from rewilded land. If you took them away too fast the non-arable land would be undergrazed and either recover too slowly or even sometimes degrade even faster.

It is a bit hard to really explain it all on a forum like this. But I can say that IMHO we could do exactly what you asked with our current technology and at the same time actually sequester 5-20 tonnes CO2 per hectare per year into the long term stable soil carbon pool. And there are lots of case studies that show this from all over the world.

-

Haze at 14:34 PM on 5 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

Tom Curtis@4, thanks for a very detailed comment and for enlightening me about the procedures for government funding in America. Because the MSM refer primarily to the President I assumed, incorrectly, that he has more power than he actually does have. Your point "educated to the required standard, if they are prepared to put in the effort." certainly applies to me in this instance, for although I have a science based PhD, my knowledge of the workings of the American government is clearly inadequate.

chriskoz@6. You state "pseudoscience, represented by Haze@3, is far less difficult than the science." Could you take the time to tell me in which part of my comment I represented pseudoscience?

scaddenp@5. I doubt very much that I have better ideas to communicate than the climate scientists, but perhaps being a little less scientific and a little more, for want of a better word, chatty might help.

For example, at some stage, the comment might be made that "there is a fear amongst many that cutting emissions of CO2 is going to cause economic pain, often to those who are least able to bear it. As renewables become increasingly both less expensive and more efficient, costs associated with the means of power production, e.g. wind turbines and solar panels, are continually falling. Clearly that has a lowering effect on power prices. More importantly perhaps, research into the storage of the energy from renewables is continuing apace and is getting to the point where battery storage is coming within the financial reach of the average home owner. If the government subsidies on the machinery of renewables can be increasingly directed toward subsidising storage research and development then renewables will, without doubt, lead to significant falls in the costs of domestic power. The petrol/battery hybrid car is a practical example of how advances in renewables cut costs, as the fuel bill for these vehicles is a lot less than for conventionally powered cars. That's why they're very popular with taxi drivers."

I don't regard this as deathless prose and wrote it straight off the top, so I'd imagine someone with more literary skill than I would be able to provide a shorter and punchier piece on why pursuing the lowering of CO2 emissions is not going to lead to penury for the workers.

-

Tom Curtis at 12:29 PM on 5 September 2016
2016 SkS Weekly Digest #36

With regard to the poster, it may technically be true, in that we do not have any monthly records or proxies for temperature prior to the 17th century; but there were probably warmer months 6-8 thousand years ago, almost certainly warmer months about 110 thousand years ago, and certainly warmer months multiple millions of years ago. Indeed, in the very distant (pre-human) past, for certain periods, average annual temperatures would have been warmer than July 2016. So, the hottest month ever recorded, but that is more a fact about the shortness of the records and the lack of resolution in the proxies than about the temperature. And while calling attention to the fact that it was the warmest July since 1880 (or 1850 for less reliable records) is worthwhile, the second "Ever", in orange type, by emphasizing the time element makes the whole misleading.

-

chriskoz at 09:51 AM on 5 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

Tom@4,

Your response to Haze@3 required far more knowledge (e.g. about the workings of the US political system, even though you do not live within it) and far more research (e.g. finding the Young Earth Creationist example) than the original, essentially random suggestions.

Not to mention the time you had to spend to type your response & structure it into clear bullet points. Thank you.

That comparison further reaffirms your point that the pseudoscience, represented by Haze@3, is far less difficult than the science.

I think the same applies to every aspect of life and every skill: it's far easier to promote random but convenient nonsense than a logical understanding of the facts. Also in political life. A stark example is the current US presidential campaign: a random, completely ignorant candidate came in promoting ideas so absurd that they contradict the basics of that political system, and yet he still enjoys enormous popularity with his electorate and has beaten all of his reality-abiding, professional opponents.

-

chriskoz at 08:51 AM on 5 September 2016
2016 SkS Weekly News Roundup #36

Follow-up to my post from last week:

David Karoly and Clive Hamilton: why we can't sign the latest Climate Change Authority report

You can read a full report at www.climatecouncil.org.au

Mind-boggling that, despite the best efforts of the founders of the CCA that it be "free from politics", the political agenda has infiltrated it!

-

Tom Curtis at 08:04 AM on 5 September 2016
Breathing contributes to CO2 buildup

RedBaron @69, I understand from your comment that it was my second paragraph @66 that you disagreed with, not the first. In particular you disagreed with my assessment that:

"More interesting is the sustainable population at current, or likely near future technologies. I think the evidence is that we have already exceeded it, though primarily due to the proportion of land committed to food intake, plus the overfishing of the oceans."

That may, in part, be due to a disagreement about what is meant by "sustainable". I have no doubt that we can increase food production into the future sufficiently to support the most likely peak world population of about 11 billion people:

(See also this)

I do not doubt we can do so and maintain ecosystem integrity in the limited sense that O2 production and soil health will not be impaired, and a new stable ecosystem will develop. But it will be a stable ecosystem similar to that of Britain's, in which all of nature is shaped by man, and there is no room left for most of the native mega-fauna - particularly predators (such as the bears and wolves that used to be native to Britain).

It may be that is what the world population really desires. It is certainly true that absence makes the heart grow fonder when it comes to large predators; and that humans in the end will have no place for lions, tigers etc, except in zoos. The same is true for large grazers, other than those dedicated for human consumption. But I hope we have more space for nature than that, and that would require that we produce the food for the 11 billion on less land than we currently have under agriculture, not more.

What is more, with rising economic expectations in the third world, we need to factor in an increase in food consumption per capita of about 30-50%, and an increase in food quality (i.e., more protein and fresh fruit and vegetables). This is particularly the case in Africa, where most of the population increase is expected to come.

All in all, this means we need an increase in food production per hectare by about a factor of two for current populations (assuming 70% loss of agricultural land to allow for a wild nature), and near three for projected future populations.

-

scaddenp at 07:33 AM on 5 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

Haze, I am not quite sure what your point is. That people are stupid? Don't want to believe unwelcome facts?

"Or are Real Climate and Skeptical Science seen as being run by elitists who are not receptive to and dismissive of the views of "ordinary" Americans and Australians?"

If by that you mean that RC and SkS are not into deceptions, unphysical theories, misinformation, ideological claptrap, conspiracy theories, and accusations of fraud, then you are correct. If these are the concerns and views of "ordinary" citizens, then we have serious problems with education that are not going to be fixed overnight.

If you have better ideas about how we could communicate the facts and counteract the fiction, then we are all ears. I read WUWT comments at times and despair. Somehow, people need to understand that reality is not a consumer choice and that ideological positions need to conform to reality and not the other way round. For many, I think that the question "what data would change your mind on climate change" is viewed as essentially the same as "what argument would convince you to vote for the enemy party". Neither is conceivable and so reality is shut out by participation in an echo chamber of reassuring lies.

-

RedBaron at 03:43 AM on 5 September 2016
Breathing contributes to CO2 buildup

@ 68 Tom Curtis,

Exactly correct, Tom. Industrialization has improved some things dramatically. No farmer wants to give up his tractor etc., except maybe a few Amish who do without. Industrialization has given us tools. It's how we use those tools that makes all the difference. When we treat the biological systems on which we use those tools to harvest food and fiber as a net sum zero product (ignoring how fundamentally differently biological systems function), that's when we have problems with unsustainability. Which is just the flip side of the overpopulation coin.

At lower population levels we didn't have this problem because we could always move on to new untapped areas when the areas we were harvesting from collapsed, like you mentioned with fishing. The collapsed areas would recover over time and we could come back to them later in many cases.

However, now we must fundamentally change the production models. We no longer have the option of overuse, abandonment/fallow, and returning decades or centuries later. Instead we must change the production models to methods using holistic systems science and modern technology appropriately applied.

If we take current productive land/fisheries etc. and change the production models, we can regenerate the ecosystems services on that land/fisheries. We also can return to currently abandoned areas and apply these new system science based regenerative models to them as well, returning them to productivity.

So actually when you add the current productive land/fisheries to the abandoned land/fisheries that collapsed earlier due to over use, we can actually increase the total population they can support sustainably long into the distant future.

A great working example of this is China's Loess Plateau Project. This land was destroyed by agriculture and could no longer support much population at all. Since beginning to restore the land, the amount of food and fiber produced has increased every year. The amount of carbon sequestration has increased every year. The runoff water quality has increased every year. The wildlife and biodiversity has increased every year. When you change the production models you see profound differences.

In the USA a similar revolution in thinking is also being taught and is in its infancy. Best exemplified by this quote from the USDA-NRCS.

"When farmers view soil health not as an abstract virtue, but as a real asset, it revolutionizes the way they farm and radically reduces their dependence on inputs to produce food and fiber." -USDA (Author Unknown)

I am an organic research farmer. I am not afraid of change. I am the change.

-

Tom Curtis at 23:59 PM on 4 September 2016
Breathing contributes to CO2 buildup

RedBaron @67, the current population is approximately 7.4 Billion, so that my estimate of up to a quarter of that sustained using preindustrial (ie, pre 1750) technology represents a population of up to 1.85 billion. That is 2.64 times the 0.7 billion population in 1750, so I am certainly allowing for some advances in non-industrial technologies.
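The arithmetic in that estimate is easy to verify. The sketch below uses only the three figures stated in the paragraph above (7.4 billion today, a quarter of that as the upper estimate, 0.7 billion in 1750); the variable names are illustrative.

```python
current_population = 7.4e9   # approximate 2016 world population
population_1750 = 0.7e9      # approximate world population in 1750

# Upper estimate: a quarter of today's population on preindustrial technology.
preindustrial_capacity = current_population / 4
ratio_to_1750 = preindustrial_capacity / population_1750

print(f"Upper estimate: {preindustrial_capacity / 1e9:.2f} billion")
print(f"Ratio to 1750 population: {ratio_to_1750:.2f}")
```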

Having said that, I do not think you are giving serious consideration to the difficulties involved. A preindustrial fishing technology is restricted to small (because of limited work force) wooden, sail powered vessels. Such vessels cannot fish with long lines, nor trawl, nor drift net. Nor can they fish the deep ocean, an increasingly important source of modern fish. Further, they cannot operate more than about a week's distance from port, and typically will operate within a few hours' sailing from port. Given those limitations, catchable fish will be a very small fraction of those currently available, even with a rebound of fish stocks.

Or consider grain growing, with no combine harvesters; with plows being of wood construction with (at best) a cast iron plate to restrict wear on the blade, and drawn by oxen or (hopefully) horses. Harvesting will probably be by scythe. These factors required something in the order of 60-80% of the population to be agricultural workers, just to provide enough food for all.

Or consider that such heating fuel as we use will be in the form of charcoal, requiring extensive forests over much that is now agricultural land.

And that leaves aside questions of spoilage, famine and drought.

There is often a ridiculous optimism by some people who, urban dwellers nearly all, and with no knowledge of history, suppose that we can get rid of industrialization to advantage. But life before industrialization was nasty, brutish and short. In general, excepting the upper middle class and above, hunter gatherers lived better than the vast majority of even 19th century populations, but only by dint of a very low population per unit area.

-

Tom Curtis at 17:49 PM on 4 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

Haze @3, first, and rather trivially, in the US it is Congress, not the President, who controls the purse strings. Consequently, without the approval of the Republican (and ergo AGW denier) dominated House and the Republican (and ergo AGW denier) Senate, no major advertising campaign promoting acceptance of the science on climate could have been funded by the President. Indeed, the President does not even control the education system, which devolves to a state and local level such that it is a running battle to keep young Earth Creationism out of the schools in blue states, let alone pseudosciences not so widely acknowledged as such (such as AGW denial).

Second, the pseudoscientific side of the argument has the advantage that they do not need to be, nor appreciably strive to be, correct. As a result they can shape their arguments to be persuasive without worrying too much whether they are valid. And there is no question that they do that. They quote out of context, cherry pick, use deceitful graphs, manufacture data from thin air. Worse, when arguments are refuted, they just wait a bit then recycle them again. While doing this, they are appealing to people's selfish interest in a fairly direct way. I was a rev head when I was younger. Still would be if it were not for global warming. I would love for WUWT's arguments to be true. So, there is a very direct interest for every American who would rather drive a Hummer than an Accord to not believe in AGW. Likewise there is a very direct interest for any older person who does not want their legacy to be tarnished by the fact that their lifestyle created a very major problem for their children. There is even a direct interest for anybody with political leanings towards not trusting the government, in that AGW denial gives a superficial reason for not trusting the government.

In contrast the AGW side has its arguments constrained not by the need to be persuasive, but by the need to be sound. And to know whether or not an argument in science is sound is often hard work. To truly understand the science you need to put in six years of tertiary education just to get to the start point. That is not elitism, any more than it is elitism to think you require six or more years of experience to become a decent plumber. I personally believe that virtually anybody can become educated to the required standard, if they are prepared to put in the effort. But AGW denial tells you that not only do you not need that effort, but that you understand the situation better than those who have put in the effort (because, purportedly, you can refute their arguments with trivial points). No effort plus flattery plus justifying what you wanted to do already vs effort expected, plus an expectation that you actually understand, plus an expectation that you modify your behaviour in significant ways for future generations. Why on earth would you think these are both equally easy to sell?

Third, the reporting of science in the MSM is woeful. This is the case even outside of AGW, as shown humorously but correctly by John Oliver:

It becomes worse in the reporting of AGW because of false balance - the lazy, irresponsible approach of the MSM to reporting on all topics, where they consider their job consists simply of getting a sound bite from "each side" with no attempt to require the sound bites to be cogent, relevant, or well supported. As a result nearly all MSM reports on climate change are accompanied by a denier spouting some irrelevancy that purports to refute the evidence.

On top of that, there is a "man bites dog" effect. When the IPCC gets something wrong, that is in fact a big news story because it happens so rarely. So it gets reported. If an AGW denier is wrong, well, they are right less frequently than a stopped clock, so that is not a story at all - and gets no coverage.

With these impediments, even the friendly mainstream media on balance disinforms about AGW.

Finally, here are the rankings of the primary pro-biological science website (the Panda's Thumb) vs the two most popular pseudoscience websites on evolution, the "intelligent design" Discovery Institute and the Young Earth Creationist Answers in Genesis:

Panda's Thumb: 105,377

Answers In Genesis: 11,865

Discovery Institute: 64,437

Clearly the popularity of pseudoscience on the web is not confined to AGW. This is for reasons already given (under the second point) above. Now, unless you want to start arguing, based on the above data, that the belief that the Universe is only 10,000 years old, and that all species were restricted to just a few breeding pairs (six for clean, and 1 for unclean) just 6,000 years ago, at which time a global flood covered the earth to a depth of 9 km, is more scientific than standard biological and geological science, you are committed to the fact that pseudoscience sells more easily than science.

And once again, that is because, not being based on fact, they can be shaped to tell you what you want to hear rather than what is true.

-

Glenn Tamblyn at 17:48 PM on 4 September 2016
As nuclear power plants close, states need to bet big on energy storage

Paul D

From the Isentropic website:

"Isentropic's facilities and operations are currently in the process of being taken over by the Sir Joseph Swan Centre for Energy Research based at Newcastle University."

Hopefully the technology will get rebirthed, we need it.

-

Haze at 14:45 PM on 4 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

It seems that, despite the 97% consensus, scientists, with a Democrat as President for the last 8 years, are unable to convince the American Republican voter that AGW is of serious concern. Perhaps instead of saying it is due to advertising from the anti-AGW side, concentrating on why their own advertising is having less effect might be more profitable. No matter how much the Koch brothers and Rupert Murdoch et al. can spend, it is nowhere near the amount the American government can spend if it so desired. Perhaps the swing away from AGW by Republican voters reflects failure in the approach of AGW proponents rather than success of the approach taken by the anti-AGW factions. It is also perhaps relevant that, for example, WUWT and JoNova attract far more respondents than do Skeptical Science and Real Climate. Why is that? Because Watts and Nova are better funded? Better publicists? More in tune with "ordinary" Americans and Australians? Or are Real Climate and Skeptical Science seen as being run by elitists who are not receptive to and dismissive of the views of "ordinary" Americans and Australians?

-

Paul D at 23:15 PM on 3 September 2016
As nuclear power plants close, states need to bet big on energy storage

Just discovered some bad news...

According to Isentropic's website (a cutting-edge energy storage company), they were put into administration in January.

In the months up to that month their published accounts indicate that they were running out of money, and although there was some interest in more funding, none came forward.

I wonder what effect the political change in the UK had on their fortunes? Normally such a company would have plenty of financial support until it had a marketable product. However, after the election and a collapse in the Labour vote, the government made significant changes to its energy policy, putting doubt in the minds of energy investors.

More info here:

https://beta.companieshouse.gov.uk/company/05077488/filing-history

-

ubrew12 at 21:41 PM on 3 September 2016
Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

When you 'save the World', as America arguably did in WWII, your Nationalists tend to think they can walk on water. This is a problem for America that Europe doesn't have. American Nationalists were furious at the Vietnam War protestors because the 'shining city on a hill' doesn't do mistakes. American conservatives have since been given a number of issues upon which to 'circle the wagons' and punish the Anti-War hippies: abortion, guns, the 'War on Drugs' and, to some extent, the 'War on Terror'. But once you build the moat, information doesn't easily penetrate the castle walls: the 'War on Taxes' and the 'War on Global Warming treehuggers' have been inserted into the conservative push-button issues list by deep-pocketed interests that, in many cases, aren't even American.

-

Paul D at 18:41 PM on 3 September 2016
Coordinator of UK Ocean Acidification Research Attacks The Spectator for 'Willfully Misleading' James Delingpole Column

The likes of Delingpole continually present confusing and contradicting arguments to support a political ideology.

Political ideologies will always lie and contradict their own arguments to support their flawed ideologies (this includes the left and unions).

In Delingpole's case, he is contradicting the long-held view that 'global warming' science cannot possibly make predictions because the system being analysed is too complicated, whilst here he is claiming a few hours of science will do the job (to satisfy his ideology).

-

RedBaron at 17:05 PM on 3 September 2016Breathing contributes to CO2 buildup

@ 66 Tom Curtis,

You seem to be mixing science and technology with industrialization. Not all technology is industrial in nature. This is important to remember in agriculture.

The other important thing to remember is that, counterintuitively, biological systems yield more when not overused. For example, a fishery that is overfished yields fewer tons of fish per year than a fishery that is not overfished. Grassland that is overgrazed yields less meat per acre per year than grassland that is properly grazed. Forests that are over-timbered yield less wood per acre per year than forests properly managed. We are so wired into net sum zero thinking that people often miss that.

So yes, agriculture (including fisheries) could sustain populations much higher than we currently have, even though the current population already exceeds carrying capacity. We simply need to use holistic systems models of production instead of net sum zero industrial models of production.

Don't get me wrong. Industrial models are very good at some things. But when it comes to biological systems, far from ideal.

-

nigelj at 08:53 AM on 2 September 2016Americans Now More Politically Polarized On Climate Change Than Ever Before, Analysis Finds

The Republicans seem to be taking a very fixed attitude of climate change denial. American conservatives appear to be retreating into very fixed beliefs, and appear afraid of a world that is changing outside of America.

European conservatives are more flexible and receptive to climate change science, probably because two wars on their continent have led to people seeing the need to compromise and cooperate.

Americans emphasise individuality more, and constitutional freedoms. Peoples are shaped by their past histories and America and Europe differ in many regards.

-

ubrew12 at 06:52 AM on 2 September 2016Coordinator of UK Ocean Acidification Research Attacks The Spectator for 'Willfully Misleading' James Delingpole Column

Delingpole: "Does this prove that global warming is not a problem? No it doesn’t. What it does do is lend credence to... sceptics..." Translation: "I have no idea if AGW is a problem or not. However, if you want to know what to do about it, you should listen to me and not those Scientists." It used to be rare to see such public displays of 'the superiority of ignorance', but this is Trump's World, now.

-

Tom Curtis at 10:24 AM on 1 September 2016Breathing contributes to CO2 buildup

MDMonty @65, IMO it is dubious that a preindustrial revolution technology could sustain population levels at even a quarter of current levels. Primarily that is because it would not be able to sustain the vast energy inputs into food production; but also because the much slower transport speeds would necessitate the majority of food consumption to be from local or close (over sea) distances. The UK may be able to source wheat from the US, for instance, but not most vegetables or fruit, and not much in the way of meat; simply due to spoilage. A sustainable population level at those industrial levels would be in the order of half to a billion people.

More interesting is the sustainable population at current, or likely near future technologies. I think the evidence is that we have already exceeded it, though primarily due to the proportion of land committed to food intake, plus the overfishing of the oceans. Simply as regards global warming, we can sustain the current population on a greenhouse free energy and transport system, possibly at a higher standard of living than is currently common in Western Countries. That, however, requires a major effort to transition, as the current population with the current energy mix is clearly unsustainable.

-

MDMonty at 09:43 AM on 1 September 2016Breathing contributes to CO2 buildup

For clarity's sake: it appears the role of human respiration ("Does breathing contribute to CO2 buildup in the atmosphere?") wrt CO2 buildup amounts to a zero sum when including photosynthesis. In essence this describes a natural sort of equilibrium.

I completely agree insofar as the science itself goes (and the provided formulas-thanks)

Pragmatically -somewhat referring to #64 response (Pointfisha) above- the population of humans is increasing alongside deforestation, excluding deforestation where it is a consequence of industrialization.

This seems to indicate that mass consumption of meat (specifically beef & lamb) has a significant impact on climate.

I guess I am asking what an acceptable human population would be, assuming pre-1750 (pre-Industrial Revolution) technology, in keeping with keeping CO2 levels static or reducing them from current levels. Another way of asking this: at what population will human respiration outstrip the capacity of photosynthesis?

I do realize my query is flawed in that it is purely theoretical, and perhaps even dumb: it excludes other natural sources of CO2 (vulcanism, animal respiration), but those variables seem outside the scope of this article.

-

chriskoz at 08:50 AM on 1 September 20162016 SkS Weekly Digest #35

On the mitigation front in OZ, Turnbull gov seems to be continuing discredited policy of his predecessor:

Climate Change Authority's key report 'neglects to join the dots', critic says

In an unprecedented move, two of the authority's 10 members – climate scientist David Karoly and public ethics professor Clive Hamilton – plan to release a dissent report within days to highlight their disagreements with the final study.

Thank you David & Clive. I'm looking forward to it.

-

bozzza at 20:01 PM on 31 August 2016Global warming is melting the Greenland Ice Sheet, fast

Rates of change is what matters: good question and good answer.

(Shape forms in time....)

-

Bob Loblaw at 10:44 AM on 31 August 2016Temp record is unreliable

I will also mainly let Tom and Glenn respond to DarkMath, only interjecting when I can add a little bit of information.

For the moment, I will comment on Glenn's description of the US cooperative volunteer network and comparisons between manual and automatic measurements.

I am more familiar with the Canadian networks, which have a similar history to the US one that Glenn describes. A long time ago, in a galaxy far, far away, all measurements were manual. That isn't to say that the only temperature data that was available was max/min though: human observers - especially at aviation stations (read "airports") - often took much more detailed observations several times per day.

A primary focus has been what are called "synoptic observations", suitable for weather forecasting. There are four main times each day for synoptic observations - 00, 06, 12, and 18 UTC. There are also "intermediate" synoptic times at (you guessed it!) 03, 09, 15, and 21 UTC. The World Meteorological Organization tries to coordinate such readings and encourages nations to maintain specific networks of stations to support both synoptic and climatological measurements. See this WMO web page. Readings typically include temperature, humidity, precipitation, wind, perhaps snow, and sky conditions.

In Canada, the manual readings can be from their volunteer Cooperative Climate Network (CCN), where readings are typically once or twice per day and are usually restricted to temperature and precipitation, or from other manual systems that form part of the synoptic network. The observation manuals for each network can be found on this web page (MANOBS for synoptic, MANCLIM for CCN). Although the CCN is "volunteer" in terms of readings, the equipment and maintenance are provided to standards set by the Meteorological Service of Canada (MSC). MSC also brings in data from many partner stations, too - of varying sophistication.

Currently, many locations that used to depend on manual readings are now automated. Although automatic systems can read much more frequently, efforts are made to preserve functionality similar to the old manual systems. Archived readings are an average over the last minute or two of the hour, daily "averages" in the archives are still (max+min)/2. Canada also standardizes all time zones (usually) to a "climatological day" of 06-06 UTC. (Canada has 6 time zones, spanning 4.5 hours.) It is well understood that data processing methods can affect climatological analysis, and controlling these system changes is important.
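To illustrate why the archives keep the (max+min)/2 definition rather than switching to a true mean of frequent readings, here is a minimal Python sketch. The hourly temperatures are made up for illustration (they are not from any real station), and the function names are hypothetical. It only shows that the two definitions of a daily "average" can give different numbers for the same day, so changing the definition partway through a record would itself introduce a shift.

```python
def hourly_mean(temps):
    """Arithmetic mean of all readings taken during the day."""
    return sum(temps) / len(temps)


def max_min_average(temps):
    """Traditional daily 'average': midpoint of the daily max and min."""
    return (max(temps) + min(temps)) / 2


# Hypothetical 24 hourly readings (deg C): a long cool night and a short warm peak.
hourly = [10] * 12 + [12, 14, 16, 18, 20, 18, 16, 14, 12, 10, 10, 10]

mean_of_hours = hourly_mean(hourly)
mid_of_extremes = max_min_average(hourly)

print(f"mean of hourly readings: {mean_of_hours:.2f} C")
print(f"(max+min)/2:             {mid_of_extremes:.2f} C")
```

For this made-up day the (max+min)/2 value is higher than the hourly mean, because the warm peak is brief. Real days differ, but the point about keeping one consistent definition is the same.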

-

DarkMath at 09:42 AM on 31 August 2016Temp record is unreliable

Thanks for all the responses everyone, they're very helpful. Michael is correct, I'm still working through the data. Actually I haven't even started as I was travelling today for work and only got back just now.

Moderator Response:[RH] Just a quick reminder: When you post images you need to limit the width to 500px. That can be accomplished on the second tab of the image insertion tool. Thx!

-

Dcrickett at 06:01 AM on 31 August 2016Katharine Hayhoe on Climate and our Choices

Using an individual's hereditary factors and lifestyle choices as an analogy to climate's natural factors and greenhouse gas emissions is a brilliant approach to communicating climate matters. I plan to use it.

-

jja at 02:25 AM on 31 August 2016California has urged President Obama and Congress to tax carbon pollution

I like to quote James Hansen during his interview with Amy Goodman of DemocracyNow! at the Paris COP21 regarding their efforts.

Well, we have to decide: Are these people stupid, or are they just uninformed? Are they badly advised? I think that he really believes he’s doing something. You know, he wants to have a legacy, a legacy having done something in the climate problem. But what he’s proposing is totally ineffectual. I mean, there are some small things that are talked about here, you know, the fact that they may have a fund for investment, invest more in clean energies, but these are minor things. As long as fossil fuels are dirt cheap, people will keep burning them.

AMY GOODMAN: So, why don’t you talk, Dr. James Hansen, about what you’re endorsing—a carbon tax?

JAMES HANSEN: Yeah.

AMY GOODMAN: What does it mean? What does it look like?

JAMES HANSEN: Yeah. It should be an across-the-board carbon fee. And in a democracy, it’s going to—it should—the money should be given to the public. Just give an equal amount to every—you collect the money from the fossil fuel companies. The rate would go up over time, but the money should be distributed 100 percent to the public, an equal amount to every legal resident.

I completely agree, falsified carbon credits in a trading scheme designed by wall street to increase revenues will be a disaster for future generations. Carbon Tax not Cap and Trade!

-

michael sweet at 00:06 AM on 31 August 2016Temp record is unreliable

I think that Darkmath is working through the data. I think Glenn and Tom will provide better answers to his questions than I will. I think Darkmath is having difficulty responding to all the answers they get. That is dogpiling and is against the comments policy. I would like to withdraw to simplify the discussion.

As a point of interest, earlier this year, Chris Burt at Weather Underground had a picture on his blog of the min/max thermometer at Death Valley, California, showing the hottest reliably measured temperature ever measured world-wide. (copy of photo). This thermometer is still in use. Apparently the enclosure is only opened twice a day (to read the thermometer). Min/max thermometers are still in use, even at important weather stations.

-

Tom Curtis at 23:19 PM on 30 August 2016Temp record is unreliable

DarkMath @365, as indicated elsewhere, the graph you show exaggerates the apparent difference between versions of the temperature series by not using a common baseline. A better way to show the respective differences is to use a common baseline, and to show the calculated differences. Further, as on this thread the discussion is on global temperatures, there is no basis to use Meteorological Station only data, as you appear to have done. Hence the appropriate comparison is this one, from GISTEMP:

Note that the Hansen (1981) and Hansen (1987) temperature series did not include ocean data, and so do not in this either.

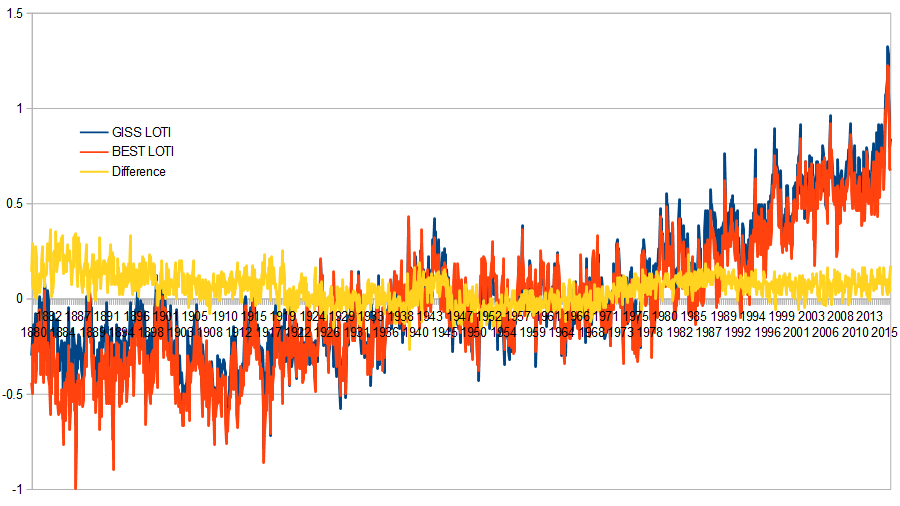

For comparison, here are the current GISTEMP LOTI and BEST LOTI using monthly values, with the difference (GISS-BEST) also shown:

On first appearance, all GISTEMP variants prior to 2016 are reasonable approximations of the BEST values. That may be misleading, as the major change between GISTEMP 2013 and 2016 is the change in SST data from ERSST v3b to ERSST 4. Berkeley Earth uses a HadSST (version unspecified), and it is likely that the difference lies in the fact that Berkeley Earth uses an SST database that does not account for recent detailed knowledge about the proportion of measurement types (wood or canvas bucket, or intake manifold) used at different times in the past.

Regardless of that, the difference in values lies well within error, and the difference in trend from 1966 onwards amounts to just 0.01 C per decade, or about 6% of the total trend (again well within error). Ergo, any argument on climate that depends on GISS being in error and BEST being accurate (or vice versa) is not supported by the evidence. The same is also true of the NOAA and HadCRUT4 timeseries.

-

Glenn Tamblyn at 16:24 PM on 30 August 2016Temp record is unreliable

Darkmath

I don't know for certain but I expect so. There was no other technology available that was cheap enough to be used in a lot of remote and often unpowered stations. Otherwise you need somebody to go out and read the thermometer many times a day to try and capture the max & min.

The US had what was known as the Cooperative Reference network. Volunteer (unpaid) amateur weather reporters, often farmers and people like that who would have a simple weather station set up on their property, take daily readings and send them off to the US weather service (or its predecessors).

Although there isn't an extensive scientific literature on the potential problems TObs can cause when using M/M thermometers, there are enough papers to show that it was known, even before consideration of climate change arose. People like the US Dept of Agriculture did/do produce local climatic data tables for farmers - number of degree days etc. - for use in determining growing seasons, planting times etc. TObs bias was identified as a possible inaccuracy in those tables. The earliest paper I have heard of discussing the possible impact of M/M thermometers on the temperature record was published in England in 1890.

As to BEST vs NOAA, yes the BEST method does address this as well. Essentially the BEST method uses rawer (is that a real word?) data but applies statistical methods to detect any bias changes in data sets and correct for them. So NOAA target a specific bias change with a specific adjustment, while BEST have a general tool box for finding bias changes generally.
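As a rough illustration of the "treat the record after a change as a new station" idea discussed here, the following Python sketch uses entirely made-up numbers and a known change point. It is not the BEST algorithm (which detects change points statistically and combines many stations); the series, the step size and the `slope` helper are assumptions for illustration only. It shows that a step change in bias distorts the trend fitted to the whole record, while each segment on its own still carries the underlying trend.

```python
def slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


years = list(range(20))
true_temps = [0.25 * y for y in years]  # hypothetical steady warming

# Hypothetical bias change at year 10 (e.g. a switch in observation practice)
# that makes every later reading 1.0 cooler than it should be.
change_year = 10
recorded = [t - 1.0 if y >= change_year else t for y, t in zip(years, true_temps)]

whole_record_trend = slope(years, recorded)
early_trend = slope(years[:change_year], recorded[:change_year])
late_trend = slope(years[change_year:], recorded[change_year:])

print(f"trend fitted to whole record: {whole_record_trend:.3f} per year")
print(f"trend in early segment:       {early_trend:.3f} per year")
print(f"trend in late segment:        {late_trend:.3f} per year")
```

In this toy case the whole-record trend understates the warming, while both segments recover the 0.25 per year that was built in.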

That both approaches converge on the same result is a good indicator to me that they are on the right track, and that there is no 'dubious intent' as some folks think.

-

Bob Loblaw at 13:18 PM on 30 August 2016Temp record is unreliable

Darkmath:

I don't think it has been linked to yet, but there is also a post here at Skeptical Science titled "Of Averages and Anomalies". It is in several parts. There are links at the end of each pointing to the next post. Glenn Tamblyn is the author. Read his posts and comments carefully - they will tell you a lot.

Moderator Response:[PS] Getting too close to the point of dog-piling here. Before anyone else responds to Darkmath, please consider carefully whether the existing responders (Tom, Michael, Glenn, Bob) have it in hand.

-

DarkMath at 11:50 AM on 30 August 2016Temp record is unreliable

Thanks Glenn. Was the Min/Max thermometer used for all U.S. weather stations early in the 20th century? What percent used Min/Max? Where could I find that data?

One other thing regarding the technique I suggested where a change in measurement means the same weather station is treated as an entirely new one. That's the technique the BEST data is using and they got the same results as NASA/NOAA did with adjustments. Does that address the problems you raise with the "simpler" non-adjustment technique?

-

DarkMath at 11:38 AM on 30 August 2016Temp record is unreliable

Thanks Michael for referencing the BEST data, they seem to be employing the simpler technique where any change in temperature recording is treated as an entirely new weather station. I'll look into it further.

Regarding the change in adjustments/estimates over time being due to computing power, that seems like a stretch to me. Given Moore's Law, in 30 years they could look back to the techniques employed today and say the same thing. Who knows what new insights Quantum Computers will yield when they come online.

Actually my real question is about the BEST data matching up with results NASA/NOAA got using adjustments/estimates. Which iteration does it match up with: Hansen 1981, GISS 2001 or GISS 2016?

Moderator Response:[PS] The simplest way to find what the adjustment process was, and why it changed, is simply to read the papers associated with each change. GISS provide this conveniently here http://data.giss.nasa.gov/gistemp/history/ (The same couldn't be said for UAH data, which denier sites accept uncritically).

(Also fixed graphic size. See the HTML tips in the comment policy for how to do this yourself)

[TD] You have yet to acknowledge Tom Curtis's explanation of the BEST data. Indeed, you seem in general to not be reading many of the thorough replies to you, instead Gish Galloping off to new topics when faced with concrete evidence contrary to your assertions. Please try sticking to one narrow topic per comment, actually engaging with the people responding to you, before jumping to another topic.

-

Glenn Tamblyn at 11:38 AM on 30 August 2016Satellite record is more reliable than thermometers

Darkmath

Since the satellite and ground series have different baseline periods, they can't easily be compared directly. Generally the satellite data sets are around 5 times less accurate than the ground datasets - stitching together a coherent record from multiple satellites, with lots of technical issues, is quite hard. One transition that occurred around 2000 was the switch from Microwave Sounding Units (MSU) to the Advanced Microwave Sounding Units (AMSU) on later satellites. Although the basic concept of how temperatures are measured is the same, there are potentially important differences that may have had an impact.
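The baseline point can be made concrete with a small Python sketch. The two series below are invented for illustration (they are not real satellite or surface data) and the `rebaseline` helper is a hypothetical name. The sketch shows how two anomaly series that look offset from each other become directly comparable once both are expressed relative to the mean of the same reference period.

```python
def rebaseline(series, start_year, end_year):
    """Return anomalies relative to the series' own mean over [start_year, end_year].

    series: dict mapping year -> anomaly on whatever baseline the source used.
    """
    reference = [v for y, v in series.items() if start_year <= y <= end_year]
    reference_mean = sum(reference) / len(reference)
    return {y: v - reference_mean for y, v in series.items()}


# Two made-up series with identical year-to-year changes but different baselines,
# so one sits 0.5 above the other on a naive plot.
series_a = {1979: 0.0, 1980: 0.25, 1981: 0.5, 1982: 0.75}
series_b = {1979: 0.5, 1980: 0.75, 1981: 1.0, 1982: 1.25}

common_a = rebaseline(series_a, 1979, 1982)
common_b = rebaseline(series_b, 1979, 1982)

for year in sorted(common_a):
    print(year, common_a[year], common_b[year])
```

After rebaselining, the apparent 0.5 offset disappears. Any difference that remained would be a real difference between the series rather than an artefact of the baseline choice.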

-

Glenn Tamblyn at 11:28 AM on 30 August 2016Temp record is unreliable

Darkmath

Just to clarify one point, regarding time of observation. Until the introduction of electronic measurement devices, the main instruments for measuring surface temperatures were maximum/minimum thermometers. These thermometers measure the maximum and minimum temperatures recorded since the last time the thermometers were reset. Typically they were read every 24 hours. Importantly, they don't tell you when the maximum and minimum were reached, just what the values were.

If one reads a M/M thermometer in the evening, presumably you get the maximum and minimum temperatures for that day. However they can introduce a bias. Here is an example.

Imagine on Day 1 the maximum at 3:00 pm was 35 C and the temperature drops to 32 C at 6:00 pm. So the thermometers are read at 6:00 pm and the recorded maximum is 35 C. Then they are reset. The next day is milder. The maximum is only 28 C at 3:00 pm, dropping to 25 C at 6:00 pm when the thermometers are read again. We would expect them to read 28 C, since that was the maximum on day 2.

However, the thermometer actually reports 32 C! This was the temperature at 6:00 pm the day before, just moments after the thermometer was reset. We have double counted day 1 and added a spurious 4 C. This situation doesn't occur when a cooler day is followed by a warmer day. And an analogous problem can occur with the minimum thermometer if we take readings in the early morning: there is a double counting of colder days.

The problem is not with when the thermometer was read, the problem is that we aren't sure when the temperature was read because of the time lag between the thermometer reading the temperature, and a human reading the thermometer. This is particularly an issue in the US temperature record and is referred to as the Time of Observation Bias. The 'time of observation' referred to isn't the time of measuring the temperature, it is the later time of observing the thermometer.
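The worked example above can be reproduced with a short Python simulation. This is only a sketch: the hourly temperature profile is a stylised assumption (a steady rise to a 3:00 pm peak, then a fall of 1 C per hour), chosen so that the numbers match the example, and the function names are hypothetical.

```python
def day_profile(peak):
    """Stylised 24 hourly temperatures: peak at 15:00, changing 1 C per hour."""
    return [peak - abs(hour - 15) for hour in range(24)]


def max_thermometer_readings(temps, read_hours):
    """Simulate a maximum thermometer read and reset at the given hour indices."""
    readings = []
    marker = temps[0]
    for hour, temp in enumerate(temps):
        marker = max(marker, temp)
        if hour in read_hours:
            readings.append(marker)
            # Resetting returns the marker to the temperature at reset time,
            # which is how a warm evening leaks into the next day's reading.
            marker = temp
    return readings


day1 = day_profile(35)  # 35 C at 3:00 pm, 32 C at 6:00 pm
day2 = day_profile(28)  # 28 C at 3:00 pm, 25 C at 6:00 pm
temps = day1 + day2

true_maxima = [max(day1), max(day2)]
recorded_maxima = max_thermometer_readings(temps, read_hours={18, 42})  # 6:00 pm each day

print("true daily maxima:    ", true_maxima)
print("recorded at 6:00 pm:  ", recorded_maxima)
print("spurious warmth day 2:", recorded_maxima[1] - true_maxima[1], "C")
```

The second recorded maximum is the 6:00 pm temperature from day 1, not anything that happened on day 2, which is the double counting described above.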

The real issue isn't the presence of a bias. Since all the datasets are of temperature anomalies, differences from baseline averages, any bias in readings tends to cancel out when you subtract the baseline from individual readings;

(Reading + Bias A) - (Baseline + Bias A) = (Reading - Baseline).

The issue is when biases change over time. Then the subtraction doesn't remove the time varying bias:

(Reading + Bias B) - (Baseline + Bias A) = (Reading - Baseline) + (Bias B - Bias A)

The problem in the US record is that the time of day when the M/M thermometers were read and reset changed over the 20th century. From mainly evening readings (with a warm bias) early in the century to mainly morning readings (with a cool bias) later. So this introduced a bias change that made the early 20th century look warmer in the US, and the later 20th century cooler.
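The two cases can be checked numerically with a minimal Python sketch. All values here are hypothetical: six "years" of made-up temperatures with 1 C of real warming, a baseline taken from the first three years, and biases of +0.5 C (evening readings) and -0.5 C (morning readings) chosen purely for illustration.

```python
def anomalies(readings, baseline_count):
    """Anomalies relative to the mean of the first baseline_count readings."""
    baseline = sum(readings[:baseline_count]) / baseline_count
    return [r - baseline for r in readings]


# Hypothetical true temperatures: 1 C of warming between the two halves.
true_temps = [10.0, 10.0, 10.0, 11.0, 11.0, 11.0]

# Case 1: the same bias (Bias A = +0.5) applies throughout the record.
constant_bias = [t + 0.5 for t in true_temps]

# Case 2: the bias changes from +0.5 (Bias A) to -0.5 (Bias B) halfway through.
changing_bias = [t + 0.5 for t in true_temps[:3]] + [t - 0.5 for t in true_temps[3:]]

true_anoms = anomalies(true_temps, 3)
constant_anoms = anomalies(constant_bias, 3)
changing_anoms = anomalies(changing_bias, 3)

print("true anomalies:         ", true_anoms)
print("constant-bias anomalies:", constant_anoms)
print("changing-bias anomalies:", changing_anoms)
```

With a constant bias the anomalies match the true ones exactly. With the changing bias, the later anomalies are off by Bias B - Bias A = -1.0 C, which in this toy case hides all of the real warming.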

Additionally, like any instrument, the thermometers will also have a bias in how well they actually measure temperature. But this isn't a bias that is affected by the time the reading is taken. So this, as a fixed bias, tends to cancel out.

So these instruments introduce biases into the temperature record that we can't simply remove by the sort of approach you suggest. In addition, since most of the temperature record is trying to measure the daily maximum and minimum and then averages them, your suggested approach, even if viable would simply introduce a different bias - what would the average of the readings from your method actually mean?

In order to create a long term record of temperature anomalies, the key issue is how to relate different time periods together and different measurement techniques to produce a consistent historical record. Your suggested approach would produce something like 'during this period we measured an average of one set of temperatures of some sort, A, and during a later period we measured an average of a different set of temperatures of some sort, B. How do A and B relate to each other? Dunno'.

More recent electronic instruments now typically measure every hour so they aren't prone to this time of observation bias. They can have other biases, and we also then need to compensate for the difference in bias between the M/M thermometers, and the electronic instruments.

-

ubrew12 at 10:46 AM on 30 August 2016Report Shows Whopping $8.8 Trillion Climate Tab Being Left for Next Generation

Joel_Huberman@2: As long as people realize that the actual bill presented to millennials has as much probability of being $18 trillion as being $0 trillion. The fact that economics cannot 'show' a solid number is already 'estimated' in the statistics.

-

michael sweet at 10:42 AM on 30 August 20161934 - hottest year on record

DarkMath,

I responded to you here.

Most experienced readers follow the comments page here where all your posts, and the responses, will show up.

-

michael sweet at 10:39 AM on 30 August 2016Temp record is unreliable

Darkmath,

Responding to Darkmath here. Tom will give you better answers than I will.

It appears to me that you agree with me that your original post here where you apparently have a graph of the completely unadjusted data from the USA is incorrect. The data require adjusting for time of day, number of stations etc. We can agree to ignore your original graph since it has not been cleaned up at all.

I will try to respond to your new claim that you do not like the NASA/NOAA adjustments. As Tom Curtis pointed out here, BEST (funded by the Koch brothers) used your preferred method of cleaning up the data and got the same result as NASA/NOAA. I do not know why NASA/NOAA decided on their corrections but I think it is historical. When they were making these adjustments in the 1980's computer time was extremely limited. They corrected for one issue at a time. The BEST way is interesting, but that does not mean that the BEST system would have worked with the computers available in 1980. In any case, since it has been done both ways, it has been shown that both ways work well.

It strikes me that you have made strong claims, supported with data from denier sites. Perhaps if you tried to ask questions without making the strong claims you would sound more reasonable. Many people here will respond to fair questions; strong claims tend to get much stronger responses.

My experience is that the denier sites you got your data from often massage the data (for example by using data that is completely uncorrected to falsely suggest it has not warmed) to support false claims. Try to keep an open mind when you look at the data.

-

nigelj at 09:23 AM on 30 August 2016California has urged President Obama and Congress to tax carbon pollution

In my opinion carbon taxes have plenty of merit. Consider that electric vehicles have good potential to reduce emissions, but uptake is slow because they have about a $5,000 price premium (or more) and a lack of recharging stations, and people are understandably hesitant to make the change.

It just seems that a carbon tax on oil or petrol, or alternatively more directly on fossil fuel companies, may be enough to encourage electric cars, and perhaps would get them across the line without the tax having to be too high. The tax collected could also subsidise the purchase price of the vehicles.

I have been reading about emissions trading schemes, and these things are enormously complicated, and the link between the schemes and encouraging electric cars seems very tenuous to me. The European Union emissions trading scheme has not produced particularly spectacular results. There is very slow uptake of electric vehicles and while wind farms have increased, the windfarms seem to be from subsidies, rather than the emissions trading scheme.

Some of these carbon trading units originate in countries that do not rank terribly high in freedom from corruption surveys, yet the units or credits can be globally traded. I honestly wonder what value they would really have, and how you could even determine their integrity. A carbon tax would be inherently more transparent.

Emissions trading units are also linked to planting of forests. This is nice in theory as forests are a carbon sink, but we are not really too sure how good this carbon sink is, and it relies on a very strict system of planting and felling trees. There is a risk that over reliance is put on tree planting, rather than reducing emissions at source. In comparison a carbon tax hits the source of emissions, and tree planting could be simply subsidised with the tax collected as a backup plan.

-

Tom Curtis at 08:42 AM on 30 August 20161934 - hottest year on record

DarkMath @53, first, when comparing temperature anomalies (as shown in the two graphs above), you need to provide them with a common baseline - ie, the interval with a mean temperature of 0. Failure to do so can create a strong apparent visual discrepancy even between temperature series which are isomorphic. It is very evident in your first graph that no such common baseline is calculated, with not even a single data point having common values, let alone a 30 year period with a common mean. If you employ a common mean, the comparison looks like this:

Second, there are several differences between the 1981 and the 2016 product. Of these, the most important are:

1) An increase in the number of reporting stations from around 1000 (1981) to around 2200 (1987), to around 7,200 (1999 to 2015), to 26,000 (2016 but possibly not yet implemented). The differences in station numbers reporting at a given time between 1987 and now are shown below. In 1981, the number of reporting stations is half that of 1987, though no doubt following a similar pattern over time;

2) The introduction of adjustments for station moves, instrument changes, etc from the 1990s (detailed in a 1999 publication);

3) The introduction of adjustments for the urban heat island effect (1999), and the switch of classification of urban areas from a classification based on population to one based on the intensity of night lights as observed from satellite (2010);

In addition, for the full global Land Ocean Temperature Index, the use of Sea Surface Temperature data started in 1995, and the way temperatures over sea ice are handled was changed in 2006 to better reflect the fact that sea ice insulates the overlying air layer from the SST. These changes do not affect the above graph, which is based on the Meteorological Station only data.

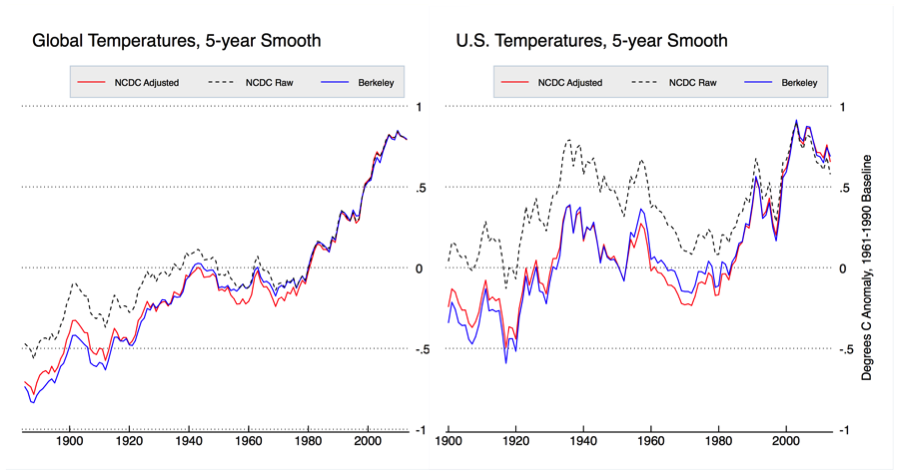

IMO, it is thoroughly unrealistic to expect such changes to have no impact on the estimate of global temperature. Nor is it realistic to expect that, because no adjustments were made for station moves etc. in 1981 (Hansen did not have access to the station metadata needed to make such adjustments, nor had he undertaken the research that would provide the theoretical justification for how those adjustments are made), no such adjustments should be made now, or that more data should not be incorporated as it becomes available. And now that you are on record as endorsing the methodology of the BEST temperature series (@54), here is a comparison of the BEST temperature series with that from the NCDC, which uses the same data as does GISTEMP:

Clearly, if we are to trust the BEST temperature series, we should conclude that the adjustments by NOAA, and ipso facto by GISS, have improved the data. Further, if we adopt the logic that we should automatically distrust measurements which purportedly improve over time, we should not accept modern determinations of the speed of light, which have inflated by 50% over the original measure in 1675.

With regard to the satellite data:

1) The satellite TLT data measures a weighted average of atmospheric temperatures from 0 to 12,000 meters, whereas the surface temperature data measures a hybrid of the 2 meter air temperature over land, and the SST over sea. These are not the same thing, nor are they expected to change in lock step.

2) The satellite TLT data is far more greatly affected by ENSO and volcanic temperature fluctuations, making it much noisier. As a result of this, the strong El Nino in 1997/98 along with the strong La Ninas in 2008 and 2011/2012 have a much larger effect on the short term trend post 1998.

3) The satellite TLT data has four or five major versions from different teams, all using precisely the same data but with much larger differences in trend etc than the different land surface series (most using different data, and all using different methodologies). Prima facie, that indicates the TLT temperature series is less well known than is the surface temperature series.

4) The particular satellite temperature series you use (RSS) has just had a major revision increasing the post 1998 trend in its TMT dataset. That revision will have a similar impact on the as yet unrevised TLT dataset once the revision is made, so we know the data shown is not currently accurate.

The use of satellite data to construct a temperature series requires far more adjustments than is required for the surface temperature series; and there is no consensus among those working in that field as to the correct way to make those adjustments. Further, as noted above, the different way of making those adjustments has a significant impact on the final product (unlike the case with the surface products, where different methods come up with essentially the same result). Given that, in a case where surface and satellite data disagree, there is no question that the surface data should be considered a more reliable indication of the surface trend.

Moderator Response:[TD] Thank you, Tom, for carefully reading DarkMath's comment, for responding specifically to his/her points, for responding in detail and thoroughly, and for responding with referenced evidence rather than handwaving, personal incredulity, and implications or even accusations of conspiracies. DarkMath, please follow Tom's example in your commenting style.

(Tom, we are trying to move this discussion to the appropriate threads, so in future please respond to DarkMath on those other threads.)

-

DarkMath at 07:48 AM on 30 August 2016 Satellite record is more reliable than thermometers

I'm hoping someone could explain the following chart showing a clear divergence between satellite and land temps starting around the year 2000:

The satellite and land measurements roughly agree from 1980-2000, so there must be some validity to satellite measurement. I read there isn't consensus on whether there has been a pause in global warming from 2000-2016. Many climate scientists like Michael Mann agree there was no warming. Others say there was warming. Who's right? How does that affect this chart? Why did satellite measurements become unreliable starting in 2000? I'm not trying to be inflammatory, I just don't know who to believe.

Thanks in advance.

Moderator Response:[TD] There are several possibilities, and probably all of them are contributing. See Tamino's post on RSS's remaining divergence even after RSS started publishing a new version (4.0) that partly corrected for satellite drift. Be sure to read the comments on that post. Also read Tamino's earlier post that used RSS's earlier version (3.3) that did not correct for drift, and be sure to read the comments there too.

For more background, read Eli Rabett's post "UAH TLT Series Not Trustworthy." Then read his post "Ups and Downs." Then read his post "Mind Bending," and click the links within that post. You should read the comments in all three posts.

You are incorrect about "the pause." Start by reading the post "Aerosol emissions key to the surface warming ‘slowdown’, study says." Click the links in that post to read about why a short period of surface warming being slower than the periods immediately before and after it, is not the same as a pause even in surface warming, and is wholly inadequate evidence of a pause or even a slowdown in the underlying trend even in the surface warming let alone of the whole system warming.

[RH] Fixed image size.

-

John Hartz at 07:44 AM on 30 August 2016 1934 - hottest year on record

DarkMath:

In comment #53, you state:

I don't have any skin in the game here. I have a strong science background but only deal with medical data all day long.

In comment #54, you state:

If I've learned one thing in my engineering career it's always go with the simplest option first.

Does your "engineering career" include dealing with medical data all day long?

-

DarkMath at 06:34 AM on 30 August 2016 1934 - hottest year on record

michael sweet: "without adjusting for differences in number of sites, location of sites or time of day of measurements"

There are many different ways to clean up data. Take, for example, adjusting for changes in the time of day. Say a weather station's data from 1900 - 1950 was taken at 12:00pm and from 1950 - 2000 it was taken at 1:00pm.

NASA/NOAA's approach to cleaning the data is by far the most complicated. It didn't have to be. You could start out in this case without making any adjustments at all. You simply treat them as two separate data sets. Voila. All that matters is that weather station's change in recorded temperature at a specific time. You reduce it to a rate of change, one set for 12:00pm and another for 1:00pm. It no longer matters what time the temperature was taken, because using two datasets instead of one removes time of measurement as a variable. The same would hold true for an elevation change or change in location.

NASA/NOAA didn't even attempt this simple option. Instead they went with some hefty calculations to make that one station's 100 years of data appear as if it were all taken at the same time, the same location and the same elevation. That's great, it's worth doing, but shucks, that is a lot of work. If anything, the much simpler technique of treating a change in time/elevation/location as if it were another weather station could be used to validate the more complicated approach. If I've learned one thing in my engineering career it's always go with the simplest option first.
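The "two separate data sets" idea described above can be sketched as follows. This is only an illustration on synthetic numbers, not NASA/NOAA's or any other team's actual code. The station record, the 1950 break year, the +0.3 °C step and the `segment_trends` helper are all assumptions made up for this example.

```python
import numpy as np

def segment_trends(years, temps, break_year):
    """Fit a separate linear trend (degC/yr) before and after break_year."""
    years = np.asarray(years, dtype=float)
    temps = np.asarray(temps, dtype=float)
    before = years < break_year
    slopes = []
    for mask in (before, ~before):
        slope, _intercept = np.polyfit(years[mask], temps[mask], 1)
        slopes.append(slope)
    return slopes

# Synthetic station (not real data): 0.01 degC/yr of warming, plus a +0.3 degC
# step in 1950 standing in for a change in the time of observation.
years = np.arange(1900, 2000)
temps = 0.01 * (years - 1900) + np.where(years >= 1950, 0.3, 0.0)

# Treating the record as one series folds the artificial step into the trend.
naive_slope = np.polyfit(years, temps, 1)[0]

# Treating it as two records recovers the underlying rate in each segment.
split_slopes = segment_trends(years, temps, 1950)

print(f"single record:  {naive_slope:.4f} degC/yr")
print(f"split at 1950:  {split_slopes[0]:.4f} and {split_slopes[1]:.4f} degC/yr")
```

In this toy case the single-record fit overstates the trend because it absorbs the step, while each segment on its own returns the underlying 0.01 °C/yr. The sketch assumes the break year is already known; finding breaks in real records is a separate problem.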

Moderator Response:[PS] This is getting off-topic here. Please post any responses to Darkmath about how the temperature record is adjusted to "Temp record is unreliable".

Darkmath - put any further responses you wish to make over adjustment there too please.

[TD] Darkmath, read the Advanced tabbed pane on that thread before commenting there. You also must actually read Tom Curtis's response to you, in which he already explained the BEST team's approach of not making any adjustments. Then you must respond to his comment explicitly (on the thread that moderator PS has pointed you to). I write "must," because SkS comments are for discussion, not sloganeering. "Sloganeering" includes failing to engage with respondents substantively.

[JH] I flagged DarkMath for sloganeering upstream on post #48. I also advised him to read the SkS Comments Policy and to adhere to it.

-

Dcrickett at 02:36 AM on 30 August 2016 California has urged President Obama and Congress to tax carbon pollution

“You can lead a horse to water…” may apply to the US Congress, neither of whose houses is of good repute these days. (Here in Illinois, our legislature is the stuff of which legends are made.) Nevertheless, climate action is a matter of global concern; serious action at state and local levels offers minimal benefits and possible serious economic drawbacks.

The US, as a major economic power, can impose a Carbon Tax along with tariffs on imports and rebates on carbon fees paid, to nullify any advantage non-taxing foreign economic entities might have by avoiding an equivalent Carbon Tax. Even better, the tariffs paid on imports from such entities would accrue to the coffers of the US treasury, with nothing going to the non-taxing (or under-taxing) foreign governments. There is wisdom in the slogan “Carbon Taxation in One Country,” a paraphrase of the slogan associated with Iosif Dzhugashvili, although in this case with an international intent more commonly associated with Lev Bronstein.

The US Congress can impose such a tax, together with the corresponding tariffs and rebates. However, no individual State among these United States can do so.

We Americans should do all we can to get our Congress to do so. No other country has the global economic power to do so, save perhaps China.

-

DarkMath at 00:17 AM on 30 August 2016 1934 - hottest year on record

Tom Curtis, you have valid points about why the temperature needs to be adjusted and/or estimated. But the problem is those adjustments and estimates change over time:

The more the temperature record changes the less confidence I have in it.

Then there is the discrepancy between NASA's land and satellite temperature data. They don't always match up. For example here:

I don't have any skin in the game here. I have a strong science background but only deal with medical data all day long. I'm an objective observer of climate science. But I've got to tell you, the more I read, the more I think the science definitely doesn't appear "settled". And given I've become an expert in observing human scientific endeavor over the past 30 years, :-), I always expect the worst.

Moderator Response:[TD] All the adjustments, both procedures and individual raw and adjusted data, are publicly available--along with the rationales for the repeatedly improved adjustment methods. You are welcome to state on SkS your specific objections to any of those procedures, rationales, or data. But you are not welcome to simply state, without referring to any of that evidence, that you just don't trust the adjustments, because that implies that you don't trust the scientists no matter how publicly and thoroughly they document their work, which implies that you are unwilling to discuss evidence. The SkS comments are for evidence-based discussions. All that I've just told you to address, must appear in the thread that moderator PS pointed you to, not this thread.

Regarding satellite measurements of temperature, read the post "Which is a more reliable measure of global temperature: thermometers or satellites?" Comment further on that topic on that thread, not this one. I strongly suggest that before commenting there you also read the post "Satellite measurements of warming in the troposphere"--all three tabbed panes, but especially the Advanced one. You should hesitate to assume the satellite temperature indices are superior to surface and balloon indices especially because those satellite indices started to diverge from both surface and balloon indices around the year 2000 when the satellite instruments were switched.

[RH] Please limit images to 500px.

-

Glenn Tamblyn at 18:52 PM on 29 August 2016 2016 SkS Weekly News Roundup #35

chriskoz

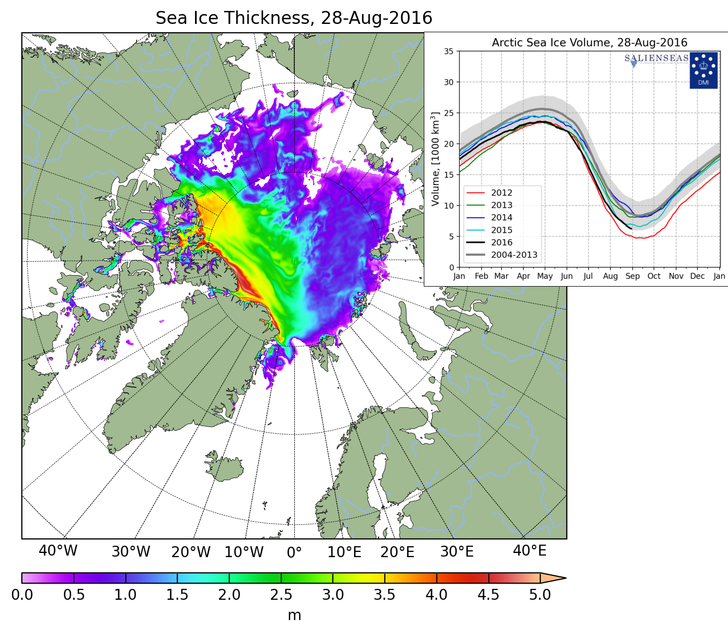

The Uni Bremen chart shows concentration differences across the pack more clearly than the NSIDC chart HK showed:

More interesting, although it is more work, is following the evolution of the ice pack over the season through direct imagery from Lance-MODIS. There have been major cracking events north of the Canadian Archipelago, often close to the Pole, and lots of broken-up floes across not just the peripheral seas but into the central Arctic basin, on and off over months. They move apart slightly, compact, and refreeze together a bit. If 3-4 meter thick multi-year ice breaks up into floes that refreeze, the 'joints' are thinner and weaker. You can often see this in the Lance-MODIS images. These are all indications of an ice pack that has less structural integrity. So concentration maps such as the one above are an end-of-season consequence of a far more broken-up ice pack. A series of strong storms in the last few weeks has also contributed.

So by poor I mean mechanical integrity.

As to thickness, PIOMAS only updates every month. DMI updates its volume product almost daily, and it is suggesting a significant drop over August. There are some concerns that the model DMI uses isn't as robust as PIOMAS, but it is probably still useful as an indication of relative changes until PIOMAS updates. And we all wish Cryosat 2 was operational during the melting season :-(

If you haven't been there, Neven's Arctic Sea Ice Blog is good value. His graphs page here links to all sorts of sources. Check out the Regional Graphs pages for basin-level graphs produced by one of his regular users, Wipneus, from raw satellite feeds. Then visit his forum. Lots of knowledgeable people who live and breathe the Arctic.

-

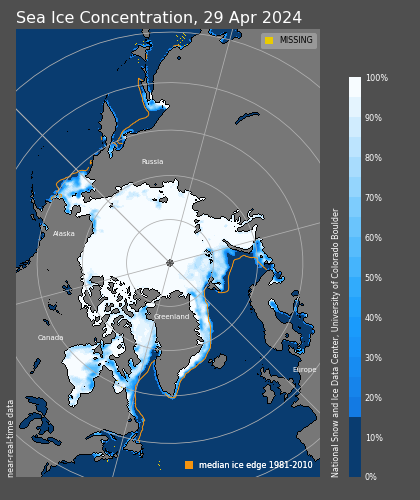

2016 SkS Weekly News Roundup #35

Chriskoz:

Glenn is probably referring to this chart of the sea ice concentration. Note the large regions on the Siberian side with less than 50% concentration. With some care I guess it would be possible to maneuver a small boat between the ice floes in much of that region. After this coming winter, the so-called multiyear ice will contain lots of thin first year ice.

-

One Planet Only Forever at 14:22 PM on 29 August 2016 2016 SkS Weekly News Roundup #35

I have noticed that in the NSIDC records for Arctic Ice Extent (Charctic Interactive Sea Ice Graph) the extent appears to be noticeably lower one or two years after significant El Nino events (using the NOAA ONI values to identify significant El Nino events).

- 1999 and 2000 had significantly lower extents than 1998

- 1984 and 1985 had significantly lower extents than 1983

- 1990 and 1991 had significantly lower extents than 1987/88 (the warm ONI values started in 1986 and continued through 1988)

- 1993 and 1995 had significantly lower extents than 1992

- 2005 had a significantly lower extent than 2003

- 2012 had a significantly lower extent than 2010

If there is a reason for the minimums to drop in the years immediately following an El Nino, then 2017 or 2018 would likely have a minimum that is clearly lower than 2012.
