Recent Comments


Comments 26101 to 26150:

-

Ian Forrester at 05:26 AM on 17 March 2016
Lots of global warming since 1998

I have to start off by saying that I am no statistician. However, I do get upset when people start quibbling about “statistical significance” when I'm not sure they know what it means. Firstly, failing to meet statistical significance criteria does not mean that there is no trend. After all, 95% for statistical significance is just an arbitrary decision. I much prefer p values, since they represent chance. Thus if data have a p value of <0.05 we can claim statistical significance. However, what is the real difference between p=0.04 and p=0.06? In real terms there is really no difference, just that in the former case we can claim “statistical significance” and in the second case we can’t. Remember that p=0.05 is just an arbitrary choice; there is no real meaning to it in terms of the data.
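To put numbers on how close p=0.04 and p=0.06 are, here is a minimal sketch. It assumes a two-sided test with a normally distributed test statistic, which is an assumption for illustration and not something stated in the comment; the function name is hypothetical.

```python
from statistics import NormalDist


def z_for_two_sided_p(p: float) -> float:
    """Return the |z| score whose two-sided normal tail probability equals p."""
    return NormalDist().inv_cdf(1.0 - p / 2.0)


# Compare the test statistic needed for p values on either side of 0.05.
for p in (0.04, 0.05, 0.06):
    print(f"p = {p:.2f}  ->  |z| = {z_for_two_sided_p(p):.3f}")
```

Under that assumption the required |z| changes by less than 0.2 between p=0.04 and p=0.06, which is the sense in which the 0.05 cut-off is a convention and not a property of the data.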

-

barry1487 at 01:47 AM on 17 March 2016
Lots of global warming since 1998

OPOF,

Though you have tried to explain your preference for phrasing that implies no warming since 1998, you are relying on having identified a few extremes in all of the available information as the basis for the claim

I have no preference for such language. Only accuracy.

I didn't choose the data set/s or the time period. I only commented on how it was described in the OP.

What I am asking you to do is to honestly answer what your preferred trend value would have predicted for the February 2016 temperature data set values relative to February 1998.

No analysis of such chaotic data could predict a single month's value, but one could give a probabilistic estimate. For the satellite data on such a short time frame, the estimate would be pretty wide. Looking at the whole system, warming has continued, and physics tells us it will continue. A spike like Feb 2016 was bound to happen sometime.

...go back month by month and identify how many previous months you have to be looking into to get a statistically significant number of months where there has been 'no warming between the recent monthly value and the monthly value 18 years prior'.

It's not possible, as far as I'm aware, to determine 'no trend' to statistical significance - at least just by applying a linear regression; they're not made to detect 'no trend'. But one can make a reasonable assumption of no trend after a long time with a small trend. Eg, 100 years of temps may be 0.0002C/decade +/- 0.0004. The trend is negligible, as is the uncertainty, so you'd be on solid ground saying there was no trend.

Jan 1998 - Feb 2016 has a trend of 0.002C/decade +/- 0.185 in the RSS data set. Claiming this is a warming, flat or cooling trend is statistically illegitimate. With the uncertainty, the trend could be anywhere from -0.183 to 0.187C/decade (95% confidence level).

That's exactly why I commented in the first place.

Somewhat similar to your suggestion, I checked the longest period for a non-statistically significant trend (UAH/RSS) in a post above.

Add one more year to those results and you have statistically significant warming derived from the ARMA (1,1) model used by the SkS app. So, for the satellite data sets, you need to start a regression in the early '90s before you can say there is a legitimate warming trend. Not from 1998.

My criticism is of the 'analysis' (or lack) of the satellite data sets in this post (and there's more to it, like blurring the line between recently revised mid-tropospheric trends and the lower tropospheric data), not of the bigger picture. I don't think it serves the message well to fudge these details.

Reckon I've said my piece long enough. Have a good one.

-

One Planet Only Forever at 00:28 AM on 17 March 2016
Lots of global warming since 1998

barry,

Please comment on the predictive accuracy of the 'statistical' trend you have been preferring to promote (no significant warming since 1998) regarding the expected values of the temperature data in the past months.

What I am asking you to do is to honestly answer what your preferred trend value would have predicted for the February 2016 temperature data set values relative to February 1998. And then go back month by month and identify how many previous months you have to be looking into to get a statistically significant number of months where there has been 'no warming between the recent monthly value and the monthly value 18 years prior'. You can even go the other way and investigate how many years you need to reduce your range to in order to find a statistically significant number of prior year months that are warmer than the current monthly values.

And while you are at it, please comment on the likelihood that the 'no warming trend' will correctly predict that March 2016 will not be warmer than March 1998 (or any other March value).

-

One Planet Only Forever at 00:00 AM on 17 March 2016
Lots of global warming since 1998

barry@12,

I understand that when you push the data values at the beginning of a time period up to the maximum of the uncertainty band and push the data values at the end of the time period down to the bottom of the uncertainty band you may be able to claim that the result is a trend line that is not increasing.

However, understanding that those potential 'no increase' trend values are 'less likely among the total range of possible trend values' means it would be inappropriate for a person who actually understands things to declare that finding an extreme value of 'no warming' means that it is more correct to state that the data set does not indicate (with statistical significance or whatever other term one wishes to use) that 'warming has occurred during that period'.

Of course there will always be some people who will resist better understanding something when they sense that understanding it better will be contrary to their personal interest (that type of sense is clearly far too common in much of the USA and other places in the so called 'advanced or developed nations' like Alberta). And it is highly likely that such minds will look hard to find extreme examples that justify what they prefer to believe and desire to have others believe. But any success of that type of extremely irrational, unreasonable thinking is clearly a political marketing problem, not a scientific presentation issue.

Though you have tried to explain your preference for phrasing that implies no warming since 1998, you are relying on having identified a few extremes in all of the available information as the basis for the claim, or said another way, you are wanting to make a statement that you actually could understand is highly unlikely to be correct.

-

Tristan at 23:23 PM on 16 March 2016
Lots of global warming since 1998

"The satellites show warming since 1998 too."

They do not. Not to statistical significance.

Depends what you're controlling for and what published data you're using. The language should be more precise. It isn't, however, merely wrong.

-

SirCharles at 22:37 PM on 16 March 2016
Lots of global warming since 1998

Gary and Keihm 1991 showed that natural variability in only 10 years of UAH data was so large that the UAH temperature trend was statistically indistinguishable from that predicted by climate models.

http://science.sciencemag.org/content/251/4991/316.short

Hurrell and Trenberth 1997 found that UAH merged different satellite records incorrectly, which resulted in a spurious cooling trend.

http://www.cgd.ucar.edu/cas/abstracts/files/Hurrell1997_1.html

Wentz and Schabel 1998 found that UAH didn’t account for orbital decay of the satellites, which resulted in a spurious cooling trend.

http://www.nature.com/nature/journal/v394/n6694/abs/394661a0.html

Fu et al. 2004 found that stratospheric cooling (which is also a result of greenhouse gas forcing) had contaminated the UAH analysis, which resulted in a spurious cooling trend.

http://www.atmos.washington.edu/~qfu/Publications/nature.fu.2004a.pdf

Mears and Wentz 2005 found that UAH didn’t account for drifts in the time of measurement each day, which resulted in a spurious cooling trend.

http://science.sciencemag.org/content/309/5740/1548.abstract

-

SirCharles at 22:29 PM on 16 March 2016
Lots of global warming since 1998

An eye-opening article by Dan Satterfield at the American Geophysical Union blog:

=> NASA- February Temperatures Hottest Ever

It's not only the 5th month in a row that is topping temperature records by a big margin. Dan is also cleaning up some myths.

-

barry1487 at 22:14 PM on 16 March 2016
Lots of global warming since 1998

Fair points, but not addressing the criticism. The unqualified claim is:

"The satellites show warming since 1998 too."

They do not. Not to statistical significance.

We wouldn't give this a free pass if a skeptic used the same period to say no warming "because it's not statistically significant," or if they claimed a 'pause.' We frequently remind them of this omission in their 'analysis'. I'm doing the same here. Neither of those claims is justified. Neither is a claim of warming for that period for the satellite data.

It's about integrity, whether in 'messaging' or in accurate science.

-

Cedders at 22:11 PM on 16 March 2016
Oceans are cooling

Thanks Tom & Rob for replies. The map is indeed impressive and worrying for coral bleaching, mangrove swamp etc (what's the source & baseline period, please?).

I would still be interested in any simplified model, calculus or references that describe the coupling between the surface and the ocean layers: is the deep ocean warming rate expected to accelerate with further SST warming? How long will the 700-2000m layers take to reach equilibrium? If 88% of the heat imbalance is going into oceans, doesn't that mean the oceans are providing a huge air-con service, but one that will eventually disappear? It has policy implications, eg if Earth system sensitivity is 6 °C, but thermal inertia meant 'only' 60% of that surface warming even a century after reaching 550ppm, we might want to think seriously what human capabilities are going to be in the 22nd century.

I still would like to suggest if I may that this contrarian meme may have become sufficiently prevalent for SkS to examine thoroughly to produce a "basic" clarification for lay people like me. (I think UKIP in UK may have picked it up via WUWT.) Thanks again.

-

Tristan at 21:17 PM on 16 March 2016
Lots of global warming since 1998

We can say 'there has, unambiguously, been warming'. We just can't say it if we know nothing more than certain temp records. That would be a pointless condition to hold ourselves to.

-

Kevin C at 20:52 PM on 16 March 2016
Lots of global warming since 1998

A few details:

Neither of the Karl 2015 trends runs to the present; both omit 2015. Include 2015 and both would be statistically significant.

The period from 1998 has presented several problems:

- The ship/buoy transition which has created a bias. The correction has not been totally nailed down yet. There is more work on this in the pipeline.

- Rapid Arctic warming, shown by IR satellites, weather models and buoys. This again leads to a bias particularly in the NOAA, UKMO and JMA records. NOAA and UKMO are both working on global records.

- The changing ice-edge anomaly bias. This is a new result from our recent model comparison paper and is not fully understood, although I know NOAA are looking at it.

I expect the picture to become a lot clearer over the next 12 months, at least on the first 2 points, with new versions from NOAA, UKMO and JMA. We've identified the issues, they are being addressed as we speak, and we have a good idea what the changes are going to look like. In the meantime I would be cautious about drawing conclusions from records with known biases.

-

barry1487 at 19:36 PM on 16 March 2016
Lots of global warming since 1998

Tom,

"RSS is not explicitly out of date..."

V4 TLT is supposedly coming out in about 6 months. I strongly doubt it will change my point for trends run to Dec 2015. I also doubt we'll have statistical significance in the v4 TLT 1998-2016 (inclusive) trend. The relatively large variability will still be there, which is why the satellite data sets need longer time periods to reach this threshold than the surface records, as you know.

-

barry1487 at 19:30 PM on 16 March 2016
Lots of global warming since 1998

OPOF,

You can also run the trends/uncertainty there to check for 1997/99 (run it for full years to avoid interference from the annual cycle). Last time I looked, the longest time period lacking statistical significance was RSS - 23 years. Not that that means too much. Failing to disprove the null doesn't mean that there is no trend, just that you can't demonstrate it statistically.

I'll check just now for the longest period without statistical significance in the sat records - just for curiosity's sake...

RSS - No stat sig warming since 1992: 24 yrs

UAH - No stat sig warming since 1995: 21 yrs

This doesn't 'prove' no warming, of course. That's not how the null hypothesis works. But it does mean you cannot say there has definitely been warming. Not according to those data sets. A broader look at the climate system tells a different story.
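The search described above (how far back you can start a regression and still not get significant warming) can be sketched as follows. This is only an illustration under loud assumptions: it uses plain ordinary least squares with a 2-sigma rule and independent residuals, not the ARMA (1,1) model of the SkS app, so real intervals would be wider; the function names are hypothetical and the series is synthetic, not RSS or UAH data.

```python
import math


def ols_trend(times, values):
    """Ordinary least-squares slope and its standard error.

    Assumes independent residuals. Monthly temperature data violate this,
    so an autocorrelation correction would widen the interval.
    """
    n = len(times)
    t_mean = sum(times) / n
    v_mean = sum(values) / n
    sxx = sum((t - t_mean) ** 2 for t in times)
    sxy = sum((t - t_mean) * (v - v_mean) for t, v in zip(times, values))
    slope = sxy / sxx
    intercept = v_mean - slope * t_mean
    rss = sum((v - (intercept + slope * t)) ** 2 for t, v in zip(times, values))
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope, std_err


def earliest_nonsignificant_start(times, values, min_points=3):
    """Index of the earliest start from which the trend to the end of the
    record is NOT significantly positive (slope <= 2 standard errors).
    Returns None if every start gives significant warming."""
    for start in range(len(times) - min_points + 1):
        slope, std_err = ols_trend(times[start:], values[start:])
        if slope <= 2 * std_err:
            return start
    return None


# Synthetic example only: a steady rise plus an alternating wiggle.
years = list(range(1979, 2016))
anoms = [0.015 * (y - 1979) + (0.1 if y % 2 else -0.1) for y in years]
start = earliest_nonsignificant_start(years, anoms)
print("no significant warming since:", years[start] if start is not None else "n/a")
```

As in the comment, a result from this kind of search only says where the trend can no longer be distinguished from zero; it does not show that the trend is zero.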

-

barry1487 at 19:29 PM on 16 March 2016
Lots of global warming since 1998

@ nigelj,

you can use the SkS trend calculator to check the trends/uncertainty yourself if you'd like.

www.skepticalscience.com/trend.php

Simply, if the uncertainty (+/-) is larger than the trend, then the trend fails statistical significance (is not statistically distinguishable from 0).
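That rule of thumb can be written as a two-line check. The sketch below is illustrative only: the function names are hypothetical, and the two example values are the RSS and GISTEMP trends quoted by Tom Curtis elsewhere in this thread (C/decade, 95% level).

```python
def is_significant(trend: float, uncertainty: float) -> bool:
    """True if the interval trend +/- uncertainty excludes zero."""
    return abs(trend) > uncertainty


def interval(trend: float, uncertainty: float) -> tuple:
    """Lower and upper bounds of the trend, rounded to 3 decimals."""
    return (round(trend - uncertainty, 3), round(trend + uncertainty, 3))


# Values as quoted in this thread, in C/decade.
for name, trend, unc in [("RSS", 0.002, 0.185), ("GISTEMP", 0.154, 0.113)]:
    print(name, interval(trend, unc), "significant:", is_significant(trend, unc))
```

With those inputs the RSS interval straddles zero and the GISTEMP interval does not, which matches the way the two records are described in the discussion.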

-

barry1487 at 19:27 PM on 16 March 2016
Lots of global warming since 1998

Hi Tom,

I didn't mention models or IPCC predictions.

When the skeptics talk about short-term trends, their critics - rightly - scold them for ignoring statistical uncertainty. When Tamino criticises papers on temp trends that lack uncertainty estimates he is right to do so (as he did recently with a paper Mann co-authored, while commending other parts of the paper).

The statement in the OP was clear. "The satellites show warming, too." Not to statistical significance. It's inconsistent to correct 'skeptics' for this oversight and then do the same thing.

"...unless the trend shows a statistically significant difference from 0.165 C/decade (for GISTEMP), the correct assumption is that the trend is continuing..."

As I said to begin with, it would have been better to lay the case out this way. "You'd be on firmer ground showing that the temps since 1998 have not deviated from prior warming to statistical significance."

"I do not know if the UAH data used in the trend calculator is vs 5.4"

More likely v5.6. Strongly doubt it's beta6.

-

Tom Curtis at 15:44 PM on 16 March 2016
Lots of global warming since 1998

barry @5 & 6, the trends are:

HadCRUT4: 0.107 +/- 0.111 C/decade

NOAA (Karl 2015): 0.107 +/- 0.123 C/decade

GISTEMP: 0.154 +/- 0.113 C/decade

BEST: 0.125 +/- 0.104 C/decade

HadCRUT4 krig: 0.142 +/- 0.117 C/decade

Karl 2015 Global: 0.134 +/- 0.141 C/decade

RSS*: 0.002 +/- 0.185 C/decade

UAH: 0.106 +/- 0.186 C/decade

RSS is not explicitly out of date, with a recent improvement of the diurnal drift adjustment, which significantly increased the 1998-2016 trend, having been applied to the TMT channel but not yet carried through to the TLT channel. I do not know if the UAH data used in the trend calculator is vs 5.4 or the as yet un-peer-reviewed vs 6.

The key thing to note is that the only dataset in which the approx 0.2 C per decade model predicted lies outside the uncertainty interval is RSS, which is explicitly acknowledged by its author to be less accurate than surface thermometer records, and in its current version, to be biased low by inaccurate correction for diurnal drift. That means there are better statistical grounds to say that recent temperature trends are following the models than there are to say that they have paused, or are in a hiatus. Insisting that not showing a statistically significant difference from zero is important, but not showing a statistically significant difference from model trends is irrelevant, amounts to special pleading. It amounts to forcing your preferred story on the data because you like the narrative.

Deniers try to avoid the charge of special pleading by insisting that a trend of zero is the null hypothesis. The correct response to that claim is neither polite, nor in keeping with the comments policy.

Being more polite, the null hypothesis depends on the theory you are testing. If you are testing the theory that the temperatures are following model trends, the null hypothesis (ie, that which you are trying to falsify) is that the trend is that of the models, and only trends showing a statistically significant difference from the model trends are relevant in that only they can contribute towards falsifying the model.

More naively, if we do not have a specific model in mind, the null hypothesis is that nothing interesting will happen, ie, that the trend will continue as it was doing before. More precisely, the trend will remain the same as it has been since the last statistically determined 'breakpoint' in the trend (which happens to be about 1970). In other words, unless the trend shows a statistically significant difference from 0.165 C/decade (for GISTEMP), the correct assumption is that the trend is continuing at 0.165 C per decade. As it happens, the GISTEMP trend differs by about a tenth of the error from the ongoing trend.
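The point about choosing the null hypothesis can be checked directly against the trends listed at the top of this comment. The sketch below is illustrative: the helper names are hypothetical, the numbers are copied from that list (C/decade), and the test is the simple one used in this thread (a null trend is rejected when it lies outside trend +/- uncertainty).

```python
# (trend, uncertainty) in C/decade, as listed in the comment above.
TRENDS = {
    "HadCRUT4": (0.107, 0.111),
    "NOAA (Karl 2015)": (0.107, 0.123),
    "GISTEMP": (0.154, 0.113),
    "BEST": (0.125, 0.104),
    "HadCRUT4 krig": (0.142, 0.117),
    "Karl 2015 Global": (0.134, 0.141),
    "RSS": (0.002, 0.185),
    "UAH": (0.106, 0.186),
}


def rejects(trend: float, uncertainty: float, null_trend: float) -> bool:
    """True if null_trend lies outside the interval trend +/- uncertainty."""
    return abs(trend - null_trend) > uncertainty


def datasets_rejecting(null_trend: float) -> list:
    """Names of datasets whose interval excludes the given null trend."""
    return [name for name, (t, u) in TRENDS.items() if rejects(t, u, null_trend)]


print("reject zero trend:       ", datasets_rejecting(0.0))
print("reject 0.2 C/decade:     ", datasets_rejecting(0.2))

# GISTEMP against the ongoing 0.165 C/decade trend, as a fraction of the error.
g_trend, g_unc = TRENDS["GISTEMP"]
print("GISTEMP offset / error:  ", round(abs(g_trend - 0.165) / g_unc, 3))
```

Run against the listed values, only RSS excludes the 0.2 C/decade model trend, and the GISTEMP offset from 0.165 C/decade is roughly a tenth of its uncertainty, consistent with the statements above.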

-

One Planet Only Forever at 14:33 PM on 16 March 2016
Lots of global warming since 1998

barry,

What do 'your' statistics say about the data since 1997 or 1999?

-

bozzza at 14:06 PM on 16 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

OPOF,

The market place is made of profiteers: that includes the Governments that regulate it.

Ultimately anarchy is not what Governments want, but until the tax base stops growing there is little impetus for changing the rules. I suppose a point of diminishing returns comes into play somewhere, however...

-

nigelj at 13:06 PM on 16 March 2016
Lots of global warming since 1998

Barry @ 6. I will take your word that the satellite record does not have statistically significant warming since 1998. I suppose it gives you climate change sceptics some straw to hold onto.

I think my mind is pretty made up. There is now a mountain of evidence that we are altering the climate, and at a significant rate.

The surface is warming strongly according to NASA GISS. The satellites measure the atmosphere, and this may be heating more slowly, but it's the surface that dominates weather patterns and rates of ice melt.

-

barry1487 at 10:42 AM on 16 March 2016
Lots of global warming since 1998

Should have checked before I spoke. NOAA and GISS have statistically significant warming since 1998 (to year-end 2015). But not HadCRUT4, and definitely not the RSS and UAH satellite records, no matter which version you use.

-

barry1487 at 10:39 AM on 16 March 2016
Lots of global warming since 1998

The satellites show warming since 1998 too.

This comment - and the article - lacks uncertainty estimates. Red flag. None of the global temperature datasets have statistically significant warming since 1998. You'd be on firmer ground showing that the temps since 1998 have not deviated from prior warming to statistical significance.

-

nigelj at 08:09 AM on 16 March 2016
Lots of global warming since 1998

The main reason given for the slight slowdown in surface temperatures since about 1998 until recently was more heat energy going into the oceans. Last year's El Niño seems to have released a lot of this, and caused a large temperature record. Doesn't this validate the research?

-

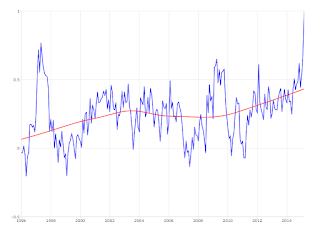

Ronsch at 05:31 AM on 16 March 2016
Lots of global warming since 1998

I applied a LOESS regression to the RSS data from 1998 on to both the old and new RSS data. LOESS regression is a better type of analysis than a moving average. Both analyses show temperatures climbing with a slight slowing from 2003 to 2009.
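For readers unfamiliar with LOESS, here is a minimal pure-Python sketch of the technique: a locally weighted straight-line fit with tricube weights at each point. It is not a reproduction of the analysis described above; the function name, the `frac` default and the demo series are assumptions for illustration, and no RSS data are used.

```python
import math


def loess(xs, ys, frac=0.5):
    """Locally weighted linear regression (LOESS) with tricube weights.

    For each x0 in xs, fit a weighted straight line to the nearest
    ceil(frac * n) points and evaluate that line at x0.
    """
    n = len(xs)
    k = min(max(3, math.ceil(frac * n)), n)
    smoothed = []
    for x0 in xs:
        dists = sorted(abs(x - x0) for x in xs)
        h = dists[k - 1] or 1.0  # bandwidth: distance to the k-th nearest point
        w = [(1 - min(abs(x - x0) / h, 1.0) ** 3) ** 3 for x in xs]
        sw = sum(w)
        xm = sum(wi * x for wi, x in zip(w, xs)) / sw
        ym = sum(wi * y for wi, y in zip(w, ys)) / sw
        sxx = sum(wi * (x - xm) ** 2 for wi, x in zip(w, xs))
        sxy = sum(wi * (x - xm) * (y - ym) for wi, x, y in zip(w, xs, ys))
        slope = sxy / sxx if sxx > 0 else 0.0
        smoothed.append(ym + slope * (x0 - xm))
    return smoothed


# Synthetic demo series only: a rising line with a periodic wiggle.
xs = [float(i) for i in range(40)]
ys = [0.01 * x + 0.1 * math.sin(x) for x in xs]
fitted = loess(xs, ys, frac=0.4)
print(len(fitted), "smoothed values, including both endpoints")
```

One practical difference from a centred moving average is visible here: the local fit produces a value at every point, including the first and last, so the smoothed curve runs to the end of the record.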

-

michael sweet at 02:45 AM on 16 March 2016
Lots of global warming since 1998

Typo: first paragraph 2004--> 2005. Please delete this note.

-

John Hartz at 01:52 AM on 16 March 2016
Lots of global warming since 1998

Dana: Thank you for updating this important set of rebuttal articles.

PS: One down, 175 more to go. :)

-

John Hartz at 01:20 AM on 16 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

Suggested supplemental reading:

Climate Change and Conservative Brain Death by Jonathan Chait, New York Magazine, Mar 14, 2016

-

BBHY at 22:21 PM on 15 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

Seems like only one candidate has a serious plan to address climate change.

The problem with American politics is we have 1) people who do not believe in climate change and are very likely to vote, 2) people who feel that half-hearted measures to address climate change are good enough and are moderately likely to vote, and 3) people who agree that it is a serious problem that needs serious solutions but are not likely to actually vote.

-

Tristan at 21:09 PM on 15 March 2016
After 116 days, MIT fossil fuel divestment sit-in ends in student-administration deal for climate action

A few things:

I'd suggest that individuals are far more likely to go beyond their legal responsibilities than corporations are. I don't have any shareholders, and I don't have to account for my time to anyone. The opportunity costs of 'benevolent' behaviour are different.

We are not waiting on technology. We can already achieve a huge chunk of the transition with existing, proven, cost-competitive industry. However, those industries are presently disadvantaged by a combination of massive FF subsidies and the lack of carbon pricing.

Which statements from the MIT activists suggest that they are not "thinking outside the blaming box"?

-

Elmwood at 16:52 PM on 15 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

sounded like hillary supports "clean coal" as a bridge or something like that. everything i've read about clean coal shows it's either not clean or it's completely uneconomic.

hillary is status quo, much like obama, which is probably worse than just saying you are not going to do anything about it like rubio.

-

One Planet Only Forever at 14:54 PM on 15 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

bozzza,

When a 'Marketplace' deliberately fails to act on the development of better understanding of what is really going on to advance humanity to a lasting better future for all, what should 'That Marketplace' expect? To be left alone? To be encouraged to be 'Even more Daring'? To be able to drum up support through appeals for the defense of 'Its Freedom to do more of what it pleases'?

-

denisaf at 13:46 PM on 15 March 2016
Why We Need to Keep 80 Percent of Fossil Fuels in the Ground

This discusses policies that should be adopted to reduce fossil fuel usage. It is aimed at the decisions that the coal, oil, and gas industries should make. It does not take into account the fact that there is a vast range of infrastructure (machines, cars, planes, ships, etc) that irrevocably uses fossil fuels. These cannot possibly be shut down rapidly. The most that is possible is that policies be adopted to reduce the demand of the infrastructure while encouraging the industry to power down. This process can only happen slowly despite the policy decisions by those aiming to reduce the impact of climate change. 90000 ships, thousands of airliners and millions of cars will not be scrapped rapidly.

-

bozzza at 12:42 PM on 15 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

..talk about government intervention into the marketplace!

-

Tom Curtis at 08:23 AM on 15 March 2016
Volcanoes emit more CO2 than humans

Ybnvs @266, it is ironic that somebody trumpeting that "science is based on fact" provides no evidence of the CO2 vents "just recently" found off New Guinea - something I can find no evidence of either by searching Google or Google Scholar.

However, science is not founded on 'fact' as you put it, but on fact and reasoning. It follows that if you are to criticize a scientific finding, you must be at least aware of the scientific reasoning behind the result. In this case, the total volcanic CO2 flux is determined not just by adding up sources, but by detecting atmospheric (or sea water) concentration of tracer gases from volcanoes, such as He-3 (helium-3) - determining the total flux from that concentration, and from that and knowledge of the ratio of CO2 to the tracer gas from volcanic emissions, determining the total CO2 flux.

A third approach is to determine the rate at which CO2 is naturally sequestered. Given that CO2 concentrations have been stable for 10 thousand years (and, once temperature fluctuations are accounted for, over millions of years), the total geological flux of CO2 must be very close to the rate at which CO2 is sequestered, giving a third method of determining the total geological CO2 flux.

As the rate of geological CO2 flux has been determined by two methods in addition to the simple inventory method you assume, we have good reason to think that changes in that inventory will not substantially revise the current estimates, and certainly not by the two orders of magnitude required for geological flux to equal anthropogenic flux. That is particularly the case given that your uncited new source consists of a CO2 vent, ie, a type with a much lower overall flux than is typical of direct volcanic sources.

-

Ybnvs at 05:19 AM on 15 March 2016
Volcanoes emit more CO2 than humans

"Counter claims that volcanoes, especially submarine volcanoes, produce vastly greater amounts of CO2 than these estimates are not supported by any papers published by the scientists who study the subject" ... this statement implies that the scientists who study the subject know everything there is to know about the subject. The inconvenient truth is that the ocean occupies two-thirds of the planet and the ocean floor isn't mapped as nicely as the streets of Manhattan. Just recently a vast area of underwater vents emitting CO2 like a glass of champagne was accidentally found near sea coral off New Guinea. Science is not based on consensus, it is based on fact. Before we can determine cause and effect as it pertains to global warming we must identify all of the CO2 emitting sources then measure their variance against the change in global temperature. We are a long way from knowing how many CO2 emitting sources are under the sea.

-

Sharon Krushel at 04:39 AM on 15 March 2016
After 116 days, MIT fossil fuel divestment sit-in ends in student-administration deal for climate action

#12 - Tristan

It seems to me most of us take as much "responsibility" as we are required by law. But some individuals and companies do indeed go above and beyond.

I agree with the carbon tax because it is being paid by industry and by consumers, which is fair. We do need to pay what it truly costs.

I do admire the passion and perseverance of the MIT students. They certainly have brought attention to the global warming problem and the need to take action. And they have sparked some very important conversation.

I would challenge them to think outside the blaming box and focus energy on innovation that would facilitate the transition to renewables. They are probably already doing that, but wouldn’t it be cool if one of those rich people Philippe mentioned could respond to their enthusiasm by funding research at MIT so they could come up with better storage and management of energy developed by renewables, or contribute some other technical innovation to this quest for a healthier planet.

Someone here commented on a Facebook post about Alberta's carbon tax, saying that there should be X-prizes for defined tech advances in renewable energy.

-

shoyemore at 02:03 AM on 15 March 2016
Sanders, Clinton, Rubio, and Kasich answer climate debate questions

An anecdote from journalist Michael Tomasky in this month's New York Review of Books:

Not long ago, I talked with Democratic Senator Al Franken of Minnesota, who explained how the Republicans’ fear of facing a Koch-financed primary from the right, should they cast a suspicious vote on climate change, prevented them from acknowledging the scientific facts.

And what percentage of them, I asked, do you think really accept those facts deep down? “Oh,” Franken said, “Ninety.”

He explained that in committee hearings, for example, witnesses from the Department of Energy come to discuss the department’s renewable energy strategy, “and none of them challenge the need for this stuff.”

This fear of losing a primary from the right is the third factor that has created today’s GOP, and it is frequently overlooked in the political media.

-

Tristan at 01:33 AM on 15 March 2016
After 116 days, MIT fossil fuel divestment sit-in ends in student-administration deal for climate action

I appreciate your response, Sharon.

Corporations of all stripes tend to take as much 'responsibility' as they are required to by law. I don't think Oil/Coal/Gas companies are meaningfully different to the other members of the Fortune 500* - The ramifications of the actions different industries take may be different however. It is these ramifications which are stimulating the divestment movements.

The intention of divestment of any sort is to generate greater corporate responsibility via societal pressure on either the legislators or the industry itself. In the instance of FF energy generation, one response trumps all others - the adoption of an appropriately implemented carbon tax, such that the deadweight loss incurred from fossil fuel use is offset.

---

*rent-seekers who do whatever they can to pay less tax, reduce regulation and otherwise gain competitive advantage over their competitors while delivering the majority of their profits to people who already have significant wealth.

-

Sharon Krushel at 16:11 PM on 14 March 2016
After 116 days, MIT fossil fuel divestment sit-in ends in student-administration deal for climate action

#9 - Hello Philippe,

I'm sure you would not be prejudiced toward any individuals. What I'm suggesting is that we need to offer the same justice to companies that we grant to individual people, and refrain from basing our decisions on stereotypes.

You say, "The overall behavior in the fossil fuel world is of the sort that has already proven so many times to lead to catastrophic failure. The same mode of operation chosen by tobacco. The attitude of utilities spreading cancer-causing chemicals in the water. The denial and irresponsible handling that caused a more recent water quality crisis in Flint. The same mind set that led VW to cheat. The attitude that consists of acknowledging that something is wrong but going on with it, developing all sorts of methods to cover, protect, hide, avoid."

This isn't much different from saying, "The overall behaviour of (this ethnic group) is bad; therefore, (this person) is bad. They're all the same."

I totally agree with the most important of your points. You're right about the dishonesty of Exxon and the damage this causes. I am not suggesting we continue to invest in companies that have been proven to be guilty of willful wrongdoing (but then we need to apply this standard to every company we invest in, not just fossil fuel companies).

What we must recognize is that Exxon is not Syncrude nor Shell nor any of the other oil companies. They may all be in the same "ocean" or industry, but they are each responsible for their own boat.

It is also unjust to project the behaviour and attitude of Volkswagen onto companies that have not in fact displayed such dishonesty and deceit.

Contrary to your accusation that "the overall behavior in the fossil fuel world" is to acknowledge that something is wrong but go on with it, there are companies that respond responsibly. In fact, some aim to exceed environmental laws and champion excellent work (e.g., see the above referenced report regarding reclamation of tar sands land).

I agree that coal is a big problem, but even in regard to coal, the Boundary Dam CCS project in Saskatchewan demonstrates a significant positive response from government and industry to the truth about global warming. The CCS project at the Scotford Complex in Fort Saskatchewan, Alberta is another responsible response to the truth. And information will be shared online about the project's design, processes and lessons learned to help make CCS technologies more accessible and drive down costs of future projects.

Managers, engineers and others who have poured their heart and soul and ingenuity into projects like this, who work for the sake of the environment within the industry, are shaking their heads at the demonization and divestment craze, especially when they see miles of vehicles lined up on multiple-lane highways in Montreal, and other cities where the criticism originates.

The extraction and production of fossil fuel is not, in itself, wrong while we're still driving our cars, tractors are still working farmland, diesel trucks are still bringing food to us, and various forms of energy are keeping us warm in the winter, etc. And I too travel to visit family, which requires a seven-hour drive south. I use the fuel carefully and gratefully. I’ve had to stop speeding to increase fuel efficiency, and for me that’s a big sacrifice. Northern highways are so beautiful and open. :)

Considering all of the above, this is what I believe: We need to recognize that we are in transition and work together to reduce consumption and move toward renewable energy and a low carbon economy without resorting to scapegoating.

Indiscriminate divestment from fossil fuel companies is, in my opinion, a form of scapegoating that distracts us from the changes we need to make as consumers.

I agree we need public policies, but they must not be based on half truths, hypocrisy or prejudice. Dishonesty or tunnel vision on anyone’s part will be equally damaging.

Moderator Response: [RH] Reduced image size that was breaking page formatting. Please keep your image width down to 500px.

-

Christopher Gyles at 15:39 PM on 14 March 2016
2016 SkS Weekly News Roundup #11

The Skeptical Science Facebook page link above is not working for me - it results in a Not Found message. Ditto with the one in the Weekly Roundup email.

Moderator Response: [JH] Links are working properly.

-

Sharon Krushel at 15:20 PM on 14 March 2016
After 116 days, MIT fossil fuel divestment sit-in ends in student-administration deal for climate action

#6 - Tristan, I appreciate the way you worded your response. "...willing to sacrifice some measure of profits for one's beliefs." That makes sense to me, and I admire that. I appreciate that you're open to conversation about "the nature of a given industry and whether or not we should accept the way it currently operates."

My contribution to the conversation, from my perspective and experience in northern Alberta, is that not all companies and people within the industry are the same, any more than all scientists are the same. (More on that in my response to Philippe below.) At the risk of monopolizing the conversation on this part of the page, I'd like to relay some stories from the north that might not be on the news.

As I see it, the current environmentalist trend is to demonize the fossil fuel industry as the culprits of global warming - greedy villains who don't care about the environment. People don’t like having their beliefs challenged, especially when their cause has become a meme and, in some cases, developed black-and-white religious fervour. Some of the reporting by the media has been biased toward feeding this fervour. Even celebrities (who may not know what a chinook is) are getting on the bandwagon.

A more balanced diet of information on the way the industry operates would include, for example, photos of reclaimed areas, not just mined areas, in the Canadian tar sands. A very critical tourist was shown a beautiful area near Fort McMurray and was asked, “What would you think if this area were to be mined?” He replied that it would be a terrible tragedy. The tourist was then told, “It already has been mined.”

Personally, I don’t remember ever seeing a photo of tar sands reclaimed land shown in the news. Apparently this tourist hadn’t either.

If you would like to get some first-hand information on the tar sands and the way the industry currently operates in regard to the environment and First Nations people, Ross Whitelaw ross.w@telus.net is a very knowledgeable man of integrity who used to work in environment and safety. He's open to questions, and if he doesn't know the answer, he could probably put you in touch with someone who does.

This is the kind of conversation that needs to take place - going to the source for information and exchange of ideas and questions. I've been working with Ross and others as members of the Anglican Church addressing the divestment issue.

Ross recently took a tour of Smoky Lake Tree Nursery, which currently grows all of the reclamation stock for the five major oil sands operators: Syncrude, Suncor, Imperial Oil, Shell Albian and CNRL. They also grow seedlings for conventional oil and gas reclamation. Here is an excerpt from his report, which outlines the lengths to which they go to restore the land with the biodiversity of 61 native species.

Tristan, I like the honesty of your comment, "I am not comfortable deriving income from this source." I understand that, considering the connection between fossil fuels and global warming.

Another view to consider is that, at this point in time, no matter what companies we invest in, the burning of fossil fuels is likely involved. Burning fossil fuels creates far more CO2 emissions than extracting them (e.g., "Final combustion of the oil – mostly emerging from vehicle tailpipes – accounts for 70 to 80 per cent of lifecycle emissions"). And if consumers weren't using them, the companies wouldn't be extracting them. If we’re driving our cars to work or if our work involves operating vehicles or machinery, or selling products that were produced in a factory, we’re still "deriving income from this source."

I recently read a story of a First Nations man in Fort McMurray who lost his job in the oil industry due to low oil prices, so he sought help to set up a small tourist company, which is admirable. However, if you look at this from a global warming perspective, in order for this man's new business to succeed, people have to burn fossil fuels to get there and burn more fossil fuels once they arrive in order to see the sights.

Would it benefit the environment for MIT or another institution to divest from the oil company this man worked for and invest instead in this man’s tourist industry, which would be burning the fuel produced by his former employer?

In light of this, I accept that I am "deriving income from this source" (directly - with investment in reputable fossil fuel companies, or indirectly) because that's the current reality, and I will invest what I can in renewable energy as well, to help speed the transition along.

Managing the transition to renewables is complicated. Alberta is phasing out its older coal plants first. And renewables are on the increase. The goal is to be at zero dependence on coal by 2030 - a very ambitious and expensive goal. In the meantime, for the fossil fuels I still have to use, I'd be much more comfortable getting them from the Canadian tar sands or from a well-managed coal plant (especially if it has CCS) than from Saudi Arabia or other countries where we have no control over the environmental or ethical implications or how the royalties are used.

I know it is not my mandate to change people's minds. I am simply grateful for this website and the opportunity to participate in the conversation.

-

chriskoz at 11:02 AM on 14 March 2016
2016 SkS Weekly Digest #11

Stefan from RC is visiting UNSW, and surely he gave us the first-hand comment about the unprecedented spike in Feb global temps (that are felt especially nasty in SE Australia):

Emergency is the right subtitle for this article. When are the politicians going to wake up?

-

John Hartz at 04:14 AM on 14 March 2016
2016 SkS Weekly News Roundup #11

richardPauli: Your concerns about the article, Climate change could cause 500,000 more deaths by 2050 by Raveena Aulakh, Toronto Star, Mar 05 2016, seem a tad overblown and misdirected.

The teaser line (sub-headline) to the article is:

Over half a million more people could die in 2050 as climate change affects diet, says a new study in the medical journal the Lancet.

It should be quite obvious to anyone reading the above sub-headline that the article is a summary of a new study published in the medical journal the Lancet.

The text of the article includes a link to the Lancet article, Global and regional health effects of future food production under climate change: a modelling study.

If you want to know how the numbers reported in a newspaper article were derived, you need to carefully read and digest the study that generated those numbers.

-

richardPauli at 03:19 AM on 14 March 2016
2016 SkS Weekly News Roundup #11

It was in the Sunday section above:

Climate change could cause 500,000 more deaths by 2050 by Raveena Aulakh, Toronto Star, Mar 05 2016

http://www.thestar.com/news/world/2016/03/05/climate-change-could-cause-500000-more-deaths-in-2050.html

-

richardPauli at 03:17 AM on 14 March 2016
2016 SkS Weekly News Roundup #11

The prediction "Climate change could cause 500,000 more deaths by 2050" is the worst case of optimism bias I have ever seen.

We should suspect the source. Everyone from the UN to Oxfam to the Catholic Church is researching those numbers. Even the B&M Gates Foundation fought with the World Health Organization about what the numbers are and how to evaluate the death rate. It is a very difficult task, but when I scanned the estimates a few years ago, they were all over the range from 50,000 to 400,000 deaths per year (currently). The data reports take a little digging, but they can all be found with a search engine (just ask "how many die from climate change").

Part of the problem is how to categorize. For instance, are malaria deaths part of the increase? Famine from salt water inundation by sea level rise? Which floods are counted, all storms or only some storms? Then Syrian climate refugees - all of them? Or some? Is the increase in wildfires all categorized as global warming associated?

For a newspaper to say 500,000 by the year 2050, some 34 years hence, seems dangerously misinformed. What are they trying to promote? I have no idea why they would post that; it shows a very shallow understanding of climate impacts globally. I will be sure to write the publisher at http://www.thestar.com/about/contactus.html Perhaps they can publish a cursory overview of how to evaluate future impacts. Their number of 500,000 may have been reached in 2015. Maybe this year. Certainly in the next few. Irresponsible publishing.

-

One Planet Only Forever at 02:42 AM on 14 March 2016
Sea level rise is accelerating; how much it costs is up to us

richardPauli,

An elaboration of your point that is very important to understand:

"Observations that are not learned from, or better understanding from evaluation of observations and experimentation being deliberately denied ... is far too common in our 'socio-economic-political system that is based on popularity and profitability' because of the power of deliberately misleading marketing in the hands of callous, greedy and intolerant people (who hope to keep their clearly unacceptable handiwork as the most powerful invisible hand in the voting and market place)."

Anyone paying attention can understand that the system, and in particular marketing in the system, is the problem. It can clearly be observed that it encourages the development of people who do not care about advancing humanity to a lasting better future. It encourages people to focus on getting the best possible present for themselves any way they can get away with (often marketed as it being fundamentally essential for everybody to have the "Freedom to do as they please", without any reasoning being allowed to restrict their preferred chosen pursuits).

-

Jose_X at 01:14 AM on 14 March 2016
New Video: Why Scientists Trust the Surface Thermometers More than Satellites

Satellite data now show February 2016 as the highest ever.

-

BBHY at 11:48 AM on 13 March 2016
New Video: Why Scientists Trust the Surface Thermometers More than Satellites

Multiple independent lines of scientific evidence all point to the same thing: global warming caused by increased CO2 in the atmosphere from burning fossil fuels.

Anecdotal evidence is not terribly valuable from a scientific standpoint, but is still valid from a human perspective. My family used to go skiing in the mountains of Pennsylvania in the 1960s. Back then, it would be impossible to drive through the Pennsylvania mountains in the middle of winter and not see snow on the ground, but for at least the past decade I have often gone there in February and not a bit of snow was evident until I reached the ski resort, where they make their own. I fear that in another decade or so all the Penn ski resorts will have closed down as the season keeps getting shorter and shorter.

-

richardPauli at 03:18 AM on 13 March 2016
Sea level rise is accelerating; how much it costs is up to us

"Lessons not learned, will be repeated."

-

richardPauli at 15:01 PM on 12 March 2016
Why We Need to Keep 80 Percent of Fossil Fuels in the Ground

We are stuck. Since optimism can be misdirecting, we must be active and positive while retaining a ruthlessly realistic view. I can only speak for myself, but I think this is what our children must face, what they and we must do:

Suffer, Adapt, Mitigate

To suffer is to accept and endure; we make an active choice to hunker-down and face a painful, inevitable situation.

To adapt is to tap into resilience as we take real action to survive and co-exist with all beings.

To mitigate is to work on real processes to make the problem less severe in the future.

We do this with tools of Palliation, Civilization, and Revision:

We help with suffering by easing pain with palliative care to ourselves and others.

We best adapt when we band together in shared community effort to service and build a global human civilization.

We make tomorrow better as we revise our failed systems by radical reformation and innovation.

There may be lots of other tasks, but I think that is a general outline.

-

Tom Curtis at 10:18 AM on 12 March 2016
Greenhouse Gas Concentrations in Atmosphere Reach New Record

Romulan01 @6, I just had a closer look at the video linked to by Michael Sweet. I noticed that:

1) The CO2 was produced by an endothermic reaction, i.e., one which cools the products and hence the surrounding environment;

2) The bottle with enhanced CO2 was open when the CO2 was fed in, thereby preventing pressure build up and a resulting increase in temperature. This is possible because CO2 is heavier than air so the CO2 fed in would displace normal air out of the bottle; and

3) The experiment was conducted indoors (avoiding high convective heat loss), and with only two lamps (providing symmetry in overlap heating).

In short, it avoids all of the problems mentioned in my prior post, and is in fact a very good experiment.
