Recent Comments

Comments 41401 to 41450:

-

chriskoz at 12:05 PM on 16 October 2013Two degrees: how we imagine climate change

JH@5,

I think panzerboy's privileges should be revoked at this point.

Not only did he badly distort the meaning of my post in a very careless manner, but he also distorted my name to cap it off. Not that I particularly care about the latter, but others might. SkS standards should not tolerate such poor quality comments.

Moderator Response:[JH] I suspect that panzerboy is nothing more than a drive-by denier drone. If I had the authority to do so, I would immediately revoke his posting privileges. Alas, I do not have such authority.

-

Bert from Eltham at 10:31 AM on 16 October 2013Consensus study most downloaded paper in all Institute of Physics journals

What is abundantly clear is that a refereed paper that measures or evaluates the reality of twenty years of thousands of refereed papers is a major counter to the false campaign of the deniers, who attempt to throw doubt on real science with a pathetic litany of half truths and a very poorly argued, inconsistent, delusional world view. Bert

-

Tom Curtis at 09:02 AM on 16 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

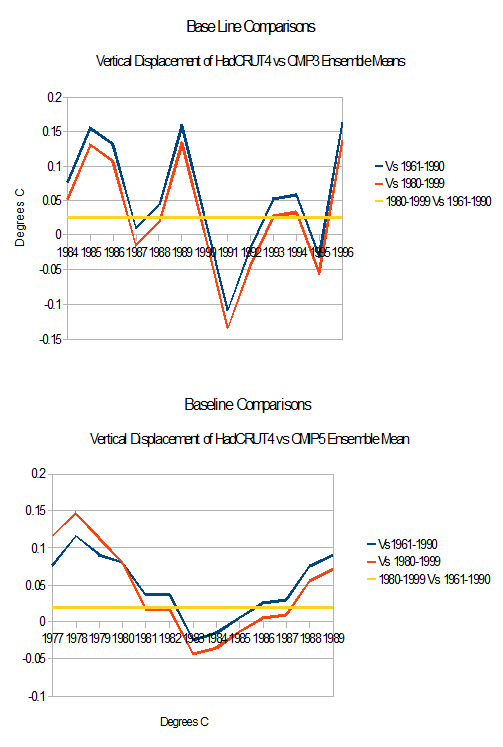

SASM @160, I have to disagree with your claim that the charts discussed "are all basically the same". For a start, your chart, Spencer's chart, and the 2nd order draft chart from the IPCC all use single years, or very short periods, to baseline observations relative to the model ensemble mean. In your case and Spencer's, you baseline off a five year moving average, effectively making the baseline 1988-1992 (you) or 1979-1983 (Spencer). The 2nd Order draft, of course, baselines the observations to the models on the single year of 1990.

The first, and most important, problem with such short period baselines is that they are erratic. If a 1979-1983 baseline is acceptable, then a 1985-1989 baseline should be equally acceptable. The latter baseline, however, raises the observations relative to the ensemble mean by 0.11 C, or from the 1.5th to the 2.5th percentile. Should considerations of whether a model has been falsified or not depend on such erratic baselining decisions?

Use of a single year baseline means offsets can vary by 0.25 C over just a few years (CMIP3 comparison), while with the five year mean it can vary by 0.15 C (CMIP5 comparison). That is, choice of baselining interval with short baselines can make a difference equal to or greater than the projected decadal warming in the relative positions of observations to model ensemble. When you are only comparing trends or envelope over a decade or two, that is a large difference. It means the conclusion as to whether a model is falsified or not comes down largely to your choice of baseline, ie, a matter of convention.
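The sensitivity to baseline length can be sketched in a few lines of Python. This is only an illustration on synthetic data, not the HadCRUT4 or CMIP series discussed here: the 0.2 C/decade trend, the 0.1 C noise level, and the function names `baseline_offsets` and `offset_spread` are all assumptions made for the example. It computes the observation-minus-model offset for every possible baseline window of a given length and reports how far that offset can move depending on which window is chosen.

```python
import random


def baseline_offsets(obs, model, window):
    """Offset (mean obs minus mean model) for every contiguous baseline of `window` years."""
    offsets = []
    for start in range(len(obs) - window + 1):
        obs_mean = sum(obs[start:start + window]) / window
        model_mean = sum(model[start:start + window]) / window
        offsets.append(obs_mean - model_mean)
    return offsets


def offset_spread(obs, model, window):
    """Range of offsets across all possible baselines of the given length."""
    offsets = baseline_offsets(obs, model, window)
    return max(offsets) - min(offsets)


# Synthetic, hypothetical data: a smooth "ensemble mean" and noisy "observations".
rng = random.Random(0)
years = list(range(1961, 2001))
model = [0.02 * (y - 1961) for y in years]      # assumed 0.2 C/decade trend
obs = [m + rng.gauss(0.0, 0.1) for m in model]  # assumed 0.1 C year-to-year noise

for window in (1, 5, 20, 30):
    print(window, "year baseline, offset spread:", round(offset_spread(obs, model, window), 3))
```

Because every multi-year offset is an average of single-year offsets, the spread for a long window can never exceed the single-year spread, which is the arithmetic reason long baselines are less erratic.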

Given this, the only acceptable baselines are ones encompassing multiple decades. Ideally that would be a thirty year period (or more), but the 1980-1999 baseline is certainly acceptable, and necessary if you wish to compare to satellite data.

Spencer's graph is worse than the others in this regard. It picks out as its baseline period the five year interval which generates the largest negative offset of the observations at the start of his interval of interest (1979-2012); and the third largest in the entire interval. Given the frequency with which his charts have this feature, it is as if he were intentionally biasing the display of data. In contrast, the 1990 baseline used by you (as the central value in a five year average) and the 2nd Order draft generates a positive offset relative to the 1961-1990 baseline, and near zero offset relative to the 1980-1999 baseline. Although 1990 is a locally high year in the observational record, it is not as high relative to the ensemble mean as its neighbours. Consequently the 2nd Order draft generates a more favourable comparison than the longer baselines. It is an error, nevertheless, because that is just happenstance.

More later.

-

John Cook at 08:01 AM on 16 October 2013Consensus study most downloaded paper in all Institute of Physics journals

MichaelM, apologies for the video quality but as Kevin C points out, our resources are limited. However, at this blog post, I go into more detail, including making the Powerpoint slides fully available.

There are many different gauges of impact - downloads, tweets, citations, etc. I've read one fascinating paper that found that the number of initial tweets about a paper predicts the eventual number of scholarly citations, so they provide an early indication of the long-term impact of a paper. There is similar research regarding the number of downloads of a paper and citations. The point is that the contribution that SkS readers made to make our consensus paper available in a high impact, open-access journal has had a strong, measurable effect by all available metrics.

-

Leto at 07:14 AM on 16 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Tom, and others familiar with the data:

Is it possible to take the model ensemble and purge all those runs that are clearly ENSO-incompatible with the real-world sequence of el ninos and la ninas? (Obviously this begs the question of what counts as incompatible, but reasonable definitions could be devised.) In other words, do the runs have easily extractable SOI-vs-time data?

-

Dikran Marsupial at 04:00 AM on 16 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

SASM wrote "inaccurate and falsified"

As GEP Box (who knew a thing or two about models, to say the least) said "all models are wrong, but some are useful". We all know the models are wrong, just look at their projections of Arctic sea ice extent. The fact they get that obviously wrong doesn't mean that the models are not useful, even though they are wrong (as all models are). Of course the reason skeptics don't latch on to this is because it is an area where the models underpredict the warming, so they don't want to draw attention to that.

It seems to me that SASM could do with re-evaluating his/her skepticism, and perhaps ask why it is he/she is drawing attention to the fact that the observations are running close to the bottom of model projections now, but appears to have missed the fact that they exceeded the top of model projections in 1983 (see Tom's nice diagram). Did that mean the models were falsified in that year and proven to be under-predicting global warming? Please, let's have some consistency! ;o)

-

A Rough Guide to the Jet Stream: what it is, how it works and how it is responding to enhanced Arctic warming

Jubble - The paper is available at Barnes 2013 (h/t to Google Scholar).

It's only been out a month - it will be interesting to see if her analysis of atmospheric wave patterns holds up.

-

Doug Bostrom at 03:11 AM on 16 October 2013Consensus study most downloaded paper in all Institute of Physics journals

Per Kevin, a casual check w/google scholar shows 20 cites as of today. Not bad, considering how slowly the publication sausage factory generally operates.

This item from the list of work citing Cook et al is particularly interesting for those of us who frequent climate science blogs (the somewhat awkward title isn't reflective of the paper's utility):

Mapping the climate skeptical blogosphere

Figure 1 is fascinating.

-

Jubble at 01:47 AM on 16 October 2013A Rough Guide to the Jet Stream: what it is, how it works and how it is responding to enhanced Arctic warming

I read the article with interest, thank you.

I've just come across this recent paper (Revisiting the evidence linking Arctic Amplification to extreme weather in midlatitudes Elizabeth A. Barnes DOI: 10.1002/grl.50880) that states that "it is demonstrated that previously reported positive trends are an artifact of the methodology".

http://onlinelibrary.wiley.com/doi/10.1002/grl.50880/abstract

I don't have access to the full paper or the background to be able to verify the conclusion in the abstract. What do you think?

-

StealthAircraftSoftwareModeler at 01:37 AM on 16 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Tom Curtis @159: Thanks for the quality posts. If we’re ever in the same town I’d like to buy you a beer or three. I might disagree with you on a few things, but I do appreciate your cogent and respectful posts.

You have drawn another nice chart. I think all of the charts discussed, including your current one, the one I’ve drawn @117, the one drawn by Spencer (http://www.drroyspencer.com/2013/10/maybe-that-ipcc-95-certainty-was-correct-after-all/), McIntyre’s (http://climateaudit.org/2013/10/08/fixing-the-facts-2/) and the two at the top of this page are all basically the same. They show model projections and the temperature data. Dana asserts that IPCC AR5 Figure 1.4 draft (his left chart at the top) was a mistake or has errors, but it is now clear to me that it does not have any errors. The draft version (Dana’s left chart) from the IPCC matches the final draft version (Dana’s right chart), it’s just that the IPCC’s final version is zoomed out and harder to see.

This thread has discussed that initial conditions and boundary conditions are important, and using trend lines is important, but I disagree. Plotting model projection lines along with temperature data (not trend lines) much the way I have done, Spencer has done, McIntyre has done, is completely reasonable and meaningful. After all, this is the way the IPCC has drawn the chart in its final draft version (Dana’s right chart).

No matter how you slice it, the global temperature data is running at the very bottom of model projections. I think that falsification of model projections is near. If temperature continues not to warm for another 15 years, as theorized by Wyatt’s and Curry’s Stadium Wave paper (http://www.news.gatech.edu/2013/10/10/%E2%80%98stadium-waves%E2%80%99-could-explain-lull-global-warming), then it will be obvious that climate models are inaccurate and falsified.

-

panzerboy at 01:36 AM on 16 October 2013Two degrees: how we imagine climate change

Chriskov @ 3

The 'hundreds of years' and '10,000 times the rate' confused me.

Ten thousand times the rate of 200 years would be a little over a week.

So we're going to have 2 degrees C higher average temperatures after a week?!

Perhaps it's a typo, perhaps it's pure alarmism.

Moderator Response:[JH] Lose the snark, or lose your posting privilege.

-

PhilBMorris at 01:19 AM on 16 October 2013Two degrees: how we imagine climate change

Claude @ 1

2°C is a political target, not one based on science. Nor is it very realistic, given that there's little sign of anything other than BAU. I’d rather assume that Hansen is right – that 2°C is a prescription for disaster – than blindly accept a politically convenient target created simply so that all governments can ‘get on board’. But keeping below 2°C is going to be politically and economically very difficult. Although some people are encouraged by the increase in renewables, the impact of that increase is really very small and will not affect the path we're heading down. Decarbonizing the grid, in itself a major undertaking, will reduce CO2 emissions by just 30%, and would merely delay the inevitable by a few years. Entire manufacturing processes need to be changed to reduce industrial CO2 emissions. But, for example, how do you produce concrete without releasing CO2? And who's going to stop impoverished people in undeveloped nations from continuing to clear land for agriculture, a practice that not only releases CO2 but reduces the ability of the biomass to act as a global sink for CO2? The 2°C 'mantra' is almost as bad as the 'tokenism' individuals are asked to perform (changing out incandescent light bulbs for CFLs or LEDs; turn down the thermostat at night; drive less etc. etc.) as if any of that will make any real difference. Nothing short of massive and rapid investment in both non-fossil fuel sources such as Thorium based nuclear power, and in effective carbon sequestration, will undo what we have done over the past 100 years – and get us back to safe CO2 levels. i.e. 350 ppm.

-

Paul D at 00:43 AM on 16 October 2013Consensus study most downloaded paper in all Institute of Physics journals

I think the Morse telegraph was available in Tyndall's time, the original internet.

So it would have been possible to send messages about Tyndall.

Also 'Telegraph' shopping predates internet shopping.

-

Tom Curtis at 23:01 PM on 15 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Further to my graph @126, here is a similar graph for CMIP5:

The most important difference is that I have shown trends from 1983, to match the chart shown by Roy Spencer in a recent blog.

There are some important differences between my chart, and that by Spencer. First, I have used the full ensemble for RCP 8.5 (80 realizations, including multiple realizations from some individual models); whereas Spencer has used 90 realizations with just one realization per model, but not all realizations from the same scenario. Second, I have used thirty years of annual average data. Spencer has used the five year running, terminal mean. That is, his data for 1983 is the mean of the values from 1979-1983, and so on.
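For readers unfamiliar with a running terminal (trailing) mean, here is a minimal sketch of the calculation as I understand the description above. The function name `trailing_mean` and the anomaly values are hypothetical and chosen only for illustration; this is not Spencer's code or data.

```python
def trailing_mean(values, window=5):
    """Trailing mean: entry i averages values[i-window+1 .. i].

    The first window-1 entries have too little history and are returned as None.
    """
    result = []
    for i in range(len(values)):
        if i + 1 < window:
            result.append(None)
        else:
            chunk = values[i + 1 - window:i + 1]
            result.append(sum(chunk) / window)
    return result


# Hypothetical annual anomalies (C) for 1979-1984, for illustration only.
years = [1979, 1980, 1981, 1982, 1983, 1984]
anomalies = [0.0, 0.5, 1.0, 0.5, 0.5, 1.0]

for year, smoothed in zip(years, trailing_mean(anomalies)):
    print(year, smoothed)
```

With a five year window, the first usable value is the one for 1983, and it is the mean of 1979-1983, so whatever happened in those five years is baked into the starting point of the smoothed series.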

Even with these differences, the results are very similar (unsurprisingly). Specifically, where he has the percentile rank of the HadCRUT4 trend vs the Ensemble as less than 3.3% of the ensemble realizations, I have it at 3.43 %ile of the ensemble realizations. For UAH, the 30 year trend is at the 3.32 %ile of ensemble realizations. Taking trends from 1979 for a more direct comparison, then we have:

UAH: 2.29 [0, 32.2] %ile

HadCRUT4: 3.34 [0.96, 23.16] %ile

GISS: 3.45 [0.92, 28.66] %ile.

For those unfamiliar with the convention, the numbers in the square brackets are the upper and lower confidence bounds of the observed trends.
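As a rough illustration of what a percentile rank against an ensemble means, the sketch below counts the share of ensemble trends that fall below a given observed trend. The function name `percentile_rank`, the "strictly below" convention, and the ten trend values are all assumptions made for the example; they are not the CMIP5 realizations or the exact method used for the numbers above.

```python
def percentile_rank(value, ensemble):
    """Percentage of ensemble members strictly below `value`."""
    if not ensemble:
        raise ValueError("ensemble must not be empty")
    below = sum(1 for member in ensemble if member < value)
    return 100.0 * below / len(ensemble)


# Hypothetical ensemble trends in C/decade, for illustration only.
ensemble_trends = [0.15, 0.18, 0.20, 0.22, 0.24, 0.25, 0.27, 0.30, 0.33, 0.36]

# Hypothetical observed trend with lower and upper confidence bounds.
observed, lower, upper = 0.16, 0.10, 0.23

print("trend:", percentile_rank(observed, ensemble_trends))
print("bounds:", [percentile_rank(lower, ensemble_trends),
                  percentile_rank(upper, ensemble_trends)])
```

The same function applied to the lower and upper bounds of the observed trend gives the bracketed pair, which is how a trend near the bottom of the ensemble can still have an upper bound well inside it.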

Mention of confidence intervals raises interesting points. Despite all Spencer's song and dance, the 30 year trends for HadCRUT4 and UAH do not fall outside the 2.5 to 97.5 %ile range, ie, the confidence intervals as defined by the ensemble. More importantly, the confidence intervals of the observed trends have upper bounds in the second quartile of ensemble trends. Consequently, observations do not falsify the models.

This is especially the case as there are known biases on the observed trends which Spencer knows about, but chooses not to mention. The first of these is the reduced coverage of HadCRUT4, which gives it a cool bias relative to truly global surface temperature measures. The second is the ENSO record (see inset), which takes us from the strongest El Nino in the SOI record (which dates back to 1873) to the strongest La Nina in the SOI record. This strong cooling trend in the physical world is not reflected in the models, which may show ENSO fluctuations, but do not coordinate those fluctuations with the observed record.

Indeed, not only does Spencer not mention the relevance of ENSO, he ensured that his average taken from 1979 included 1983, ie, the record El Nino, thereby ensuring that his effective baseline introduced a bias between models and observations. Unsurprisingly (inevitably from past experience), it is a bias which lowers the observed data relative to the model data, ie, it makes the observations look cooler than they in fact were.

-

Kevin C at 21:51 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

Actually, I'll agree with MartinG to the extent that I don't think downloads are a good measure of the contribution of the paper to human knowledge. For that the long term citation counts are probably a better measure (but not much better).

However there is another way in which scientific work is gauged: Impact (apologies to those of you in UK academia, who will be cringing right now). Impact is the current fad among UK funding bodies (and largely hated by scientists), and measures the effect of a paper on public policy, culture or the economy. The download statistics may well be a better indicator of impact, and the media coverage certainly is.

-

chriskoz at 21:28 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

MartinG@2,

Your conception that "most downloaded" can equal "most controversial, the most flawed, or the most criticised" applies to the world of sensationalism, false balance and loud contrarians.

In the world of science, the junk work is simply not interesting: honestly, the scientists simply don't have time to bother about it. The sensationalism is not interesting to experts - check out e.g. what Gavin has to say about it on RealClimate. So, if John is talking about this world, rather than the world of denialism you imply, then his "bragging" is fully justified: scientists usually download the stuff that is valuable and interesting: be it a valuable reference material, interesting new results or new, even controversial, skeptical (in a true sense) approach.

I'm confident (although I haven't measured) that the scientific interest around the Cook 2013 work outweighs the interests of the contrarians who want to obfuscate its results.

-

Kevin C at 21:20 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

MichaelM: I'm going to let you into a secret. Almost all science is done on the cheap. I've had quite a few of my lectures webcast, including a number from major international meetings, and mostly they are not much better than the one above.

Only one was edited as you suggest, and that was from a small workshop at a national centre which hosts several Nobel prizewinners.

-

MichaelM at 20:33 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

That talk is a great example of cheap video production. Did somebody just plant a laptop and angle the screen camera upwards? The sound is mediocre (it did pick up some audience coughs perfectly), I can't hear any questions asked and I can't read what's on the screen. Would it have been so difficult to cut the slides into the video? I gave up after a few minutes.

-

John Cook at 20:32 PM on 15 October 2013SkS social experiment: using comment ratings to help moderation

Pluvial, what you're describing sounds a lot like PageRank, the system that Google use to rank websites. How it works is each time a person gets a thumbs up, they gain some "reputation". A thumbs up from someone with higher reputation is worth more than a thumbs up from someone with low reputation. Reputation needs to be calculated iteratively - you work out an initial reputation based on simple thumbs up, then recalculate reputation with ratings weighted by the reputation of each rater, and repeat until the reputation values converge on final values.
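To make the iteration concrete, here is a minimal sketch of one way it could work. It is not an SkS implementation and it is not Google's actual PageRank (there is no damping factor or link normalisation). The function name `compute_reputation`, the `(rater, author)` input layout, the `base` weight that keeps every thumbs up worth something, and the normalisation to a total of 1 are all assumptions made for the example.

```python
def compute_reputation(thumbs_up, base=1.0, tol=1e-9, max_iter=1000):
    """Iteratively weight each thumbs up by the reputation of the person giving it.

    thumbs_up: list of (rater, author) pairs, one per thumbs up.
    Returns a dict mapping user -> reputation, normalised to sum to 1.
    """
    if not thumbs_up:
        return {}
    users = sorted({user for pair in thumbs_up for user in pair})

    # Step 1: initial reputation from a simple count of thumbs up received.
    rep = {user: 0.0 for user in users}
    for _, author in thumbs_up:
        rep[author] += 1.0
    total = sum(rep.values())
    rep = {user: value / total for user, value in rep.items()}

    # Step 2: recalculate with each rating weighted by the rater's reputation,
    # and repeat until the values stop changing (or max_iter is reached).
    for _ in range(max_iter):
        raw = {user: 0.0 for user in users}
        for rater, author in thumbs_up:
            raw[author] += base + rep[rater]
        total = sum(raw.values())
        new_rep = {user: value / total for user, value in raw.items()}
        change = max(abs(new_rep[user] - rep[user]) for user in users)
        rep = new_rep
        if change < tol:
            break
    return rep


# Hypothetical ratings: "c" is popular; "x" is rated by "c", "y" by newcomer "e".
example = [("a", "c"), ("b", "c"), ("d", "c"), ("c", "x"), ("e", "y")]
reputation = compute_reputation(example)
for user in sorted(reputation, key=reputation.get, reverse=True):
    print(user, round(reputation[user], 4))
```

In this toy example "x" and "y" each received a single thumbs up, but "x" ends up with the higher reputation because the rating came from the well-regarded "c". The `max_iter` cap is there because an iteration like this is not guaranteed to settle for every possible rating pattern.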

I do plan to explore that as a possible feature to integrate into SkS but I need lots of data first. So everyone, do be sure to rate comments and blog posts in order to give me lots of data to examine.

-

John Cook at 18:42 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

Plus the talk I gave at the University of Queensland where I address 5 of the major criticisms of our paper: http://sks.to/tcptalk

-

ajki at 15:50 PM on 15 October 2013Temp record is unreliable

dvaytw@274:

Your opponent makes this extreme claim:

1. No average temperature of any part of the earth's surface, over any period, has ever been made. How can you derive a "global average" when you do not even have a single "local" average?

What they actually use is the procedure used from 1850, which is to make one measurement a day at the weather station from a maximum/minimum thermometer. The mean of these two is taken to be the average. No statistician could agree that a plausible average can be obtained this way. The potential bias is more than the claimed "global warming". [emphasis added]

This "argument" already breaks if it could be shown that there is at least one location where measurements over a long time span have been made with high quality. In fact many institutes or harbours all over the world are sentimentally proud (up to and including irrationally proud) of having exactly those measurement series.

Invite your opponent to a search throughout the internet and s/he might find them. But you could even help her/him. For just one example, the "Long-Term Meteorological Station Potsdam Telegraphenberg" has a very nice and explanatory website. And s/he could even find some series there that are very frustrating to her/his worldview. As anyone could clearly see from the "annual mean" graph, there is a clear trend over time and this trend is far above the often cited 0.8/9°C worldwide. This difference is due to one of the predicted outcomes of the enhanced greenhouse effect - higher latitudes will warm up more than the global mean.

But do not hope to convince deniers by facts - here you'll encounter (perhaps) something like "but it is NOT since 1850!" or, if somebody else shows another time series beginning at around 1840, the goalpost will shift. Or anything else ;-)

-

gpwayne at 15:38 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

MartinG: to produce a logical rebuttal, a logical argument is required. Most of the arguments I've seen attempt to find error in the methodology where there was none, build straw-men only to set them on fire, or attack the authors in crude ad-hominems.

Outside of that, and with the patience of a saint, the team has responded repeatedly to criticisms, here and elsewhere. If you would really 'rather read some logical rebuttals', and given that it took me all of five minutes to find, list and link to the following, I'm moved to ask - what's stopping you?

Debunking 97% Climate Consensus Denial

Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

-

MartinG at 15:23 PM on 15 October 2013Consensus study most downloaded paper in all Institute of Physics journals

Most downloaded? Hmmmm - I wouldn't brag about that - it may be the most downloaded because it is the most interesting, or most exciting, or most informative - or that it is the most controversial, the most flawed, or the most criticised. I think I would rather read some logical rebuttals to the massive criticism that it has induced please.

Moderator Response:[JH] Lose the snark, or lose your posting privilege.

-

Consensus study most downloaded paper in all Institute of Physics journals

My compliments to all authors. IMO well written and well supported.

And considering the ongoing and multiple exploding heads over the paper in denier-ville, very effective at addressing the consensus gap.

-

scaddenp at 12:40 PM on 15 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

"have to respectfully disagree with you about testing climate model on past data. First, we really do not have enough solid and reliable measurements of the past to really determine the various components of the climate. "

Excuse me but have you read any paper on the topic at all? Looked at the paleoclimate section of WG1? You have measurements of various climatic parameters, obviously with error bounds on them. You have estimates of forcings with similar error bounds. You run the models and see whether range of results for forcings are within the error limits for forcings. Are the forcings well enough known to constrain the models for testing? Well no, in some cases, yes in others. You can certainly make the statement that observations of past climates are explicable from modern climate theory.

Start at Chap 6 AR4. Read the papers.

Moderator Response:(Rob P) - Please note that Stealth's last few comments have been deleted due to violation of the comments policy. Replies to these deleted comments have likewise been erased.

If you wish to continue, limit yourselves to either one or two commenters - in order to avoid 'dogpiling' on Stealth. And request that Stealth provide peer-reviewed literature to support his claims. Claims made without the backing of the peer-reviewed scientific literature are nothing more than non-expert personal opinion.

Further 'dogpiling' and/or scientifically unsupported claims will result in comments being deleted.

-

chriskoz at 12:29 PM on 15 October 2013Two degrees: how we imagine climate change

Third paragraph under the graphic says:

But these events (Laurentide icesheet) happened 18,000 years ago, over a timeframe of hundreds of years, as a result of changes in the earth’s orbit and other natural forces.

That does not make sense. If you're talking about Heinrich events, you may say they lasted for a few hundred years. But if you are talking about Milankovitch cycles, this is a typo, you probably meant "hundreds of thousands of years".

If my assumption about the typo is correct, then in the next paragraph you state: "human-forced climate change is happening at 10,000 times the rate". That statement is not in the right ballpark (rate too fast), because in reality AGW is happening over hundreds of years, so ~1000 times faster than Milankovitch forcings.

-

Leto at 11:57 AM on 15 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Stealth wrote:

As a decent poker player myself, it is not a foregone conclusion that an expert will beat a complete novice.

I am afraid you appear determined to resist enlightenment. Try to understand the conceptual point behind the analogies, rather than getting side-tracked into a discussion of the analogy itself. The odds of my mother beating the world expert in poker over several hours of play are close to zero, despite the unpredictable short-term possibility of her getting an unbeatable hand at any one moment, but that is not the point. The point is, the long-term predictability of an expert-vs-novice poker session is obviously much higher than its short-term predictability. The long-term rise in a swimming pool's level during filling is much more predictable than the moment-to-moment splashes. The physics of heat accumulation on the planet are much more amenable to modelling than short-term cycles and weather.

Don't argue the analogies unless you put the effort into understanding the concept behind them. The analogies were raised because you seemed to take it as axiomatic that long-term predictability could never exceed short-term predictability, a view that is trivially easy to falsify.

I chose this name because it is what I do, and I think I know what I’m talking about when it comes to software and modeling. This doesn’t mean all modeling and all software, but it is easier for me to extrapolate my knowledge and experience from my domain than, say, someone in the mortuary business. (Emphasis added).

I think you have amply demonstrated that your experience in the aviation domain is actually preventing you from understanding. I see too much extrapolation from an entirely unrelated domain, which has a different dependence on small-scale and short-term phenomena completely unlike climatology, coupled with preconceived ideas about what conclusions you want to reach.

Perhaps this is what some of you were trying to explain, but it didn’t come across very clear.

See above. The problem is with the receiver. The points were very clear to anyone prepared to be educated.

-

Tom Curtis at 11:41 AM on 15 October 2013Ocean In Critical State from Cumulative Impacts

-

Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Stealth - "...the fact remains that sea surface temps and global air temps have been very steady for a long time, contrary to the models projections."

Did you actually read the opening post??? It doesn't appear so... See the third figure; recent (and by that I mean the 15-17 years discussed by deniers) trends are but short term, statistically insignificant noise, cherry-picked from an extreme El Nino to a series of La Ninas. It's noteworthy that similar length trends (1992-2006) can be shown to have trends just as extreme in the other direction - a clear sign that you are not looking at enough data.

I've said this before, discussing recent trends and statistically significant trends. Examining any time-span starting in the instrumental record and ending in the present:

- Over no period is warming statistically excluded.

- Over no period is the hypothesis of "no warming" statistically supported WRT a null hypothesis of the longer term trends.

- And over any period with enough data to actually separate the two hypotheses – there is warming.

-

bibasir at 10:05 AM on 15 October 2013Ocean In Critical State from Cumulative Impacts

The scariest piece of information to me was published in Nature July 29, 2010. I'm not good at links, but use Google Scholar and search "Boris Worm phytoplankton." His team reported a 40% drop in phytoplankton since 1950. Phytoplankton are crucial to much of life on Earth. They are the foundation of the bountiful marine food web, produce half the world's oxygen and suck up carbon dioxide. Half the world's oxygen! I don't know why that fact alone does not send shudders through the population. It may take a long time before oxygen levels are depleted enough to cause harm, but what then?

-

scaddenp at 09:58 AM on 15 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Hmm, weather is chaotic. Whether climate is chaotic is an open question. Heat a large pan of water. The convection currents and surface temperatures are a challenge to model, but one thing for sure - while the heat at bottom exceeds heat loss from pan, then pan will keep on heating.

By the way, if you want to discuss modelling directly with the climate modellers then ask away at RealClimate (read their FAQ first) - or on Isaac Held's blog when the US government reopens.

-

Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

Stealth - Boundary value solutions can indeed deal with changes in forcings that were not in the original projection scenarios, by simply running on actual values. If the LA (or Detroit) freeway model accurately reflected those boundaries and limits, running it with a different set of economics would indeed give the average traffic for that scenario. That is the essence of running multiple projections with different emissions scenarios (RCPs) - projecting what might happen over a range of economic scenarios in order to understand the consequences of different actions.

And a projection is indeed testable - as Tom Curtis points out, simply running a physically based boundary value model on past forcings should (if correct) reproduce historic temperatures, or perhaps using hold-out cross validation. And models can (and will) be tested against future developments, see the discussion of Hansen's 1988 model and actual (as opposed to projected) emissions.

Finally, as to your statement "...in a chaotic and non linear system like the climate", I would have to completely disagree. Yes, there are aspects of chaos and non-linearity in the weather. But (and this is very important) even the most chaotic system orbits on an attractor, which centers the long term averages, the climate. And the location of that attractor is driven by the boundary conditions, by thermodynamics and conservation of energy. Weather will vary around the boundary conditions, but the further it goes from balance the stronger the correcting forcing.

Claiming that we cannot understand how the climate behaves under forcing changes is really an Appeal to Complexity fallacy - given that our models actually do very well.

-

scaddenp at 09:45 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

I picked on Luxembourg because they come in at a whopping 300kWh/p/d.

-

MA Rodger at 09:20 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

scaddenp @78.

I'm not entirely sure why you pick on Luxembourg but they actually do have the largest per capita accumulative CO2 emissions (since 1750). There is a bit of geography here. Second on such a list is UK & Belgium is fourth (only recently overhauled by USA) as this is the region where the industrial revolution first grew big. Luxembourg continues to have a big steel industry and is a very small country, which is why it manages to remain out in front. (Thus I didn't feel they should feature on this graphic of national & per capita accumulative emissions because I considered Luxembourg with its steel industry a sort of special case. And they are a small country.)

-

Tom Curtis at 09:01 AM on 15 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

SAM @152, I have to disagree with you on the falsifiability of models based on projections, on two points:

1) Although models can only make projections of future climate changes, they can be run with historical forcings to make retrodictions of past climate changes. Because the historical forcings are not the quantities being retrodicted, this represents a true test of the validity of the models. This has been extended to testing whether models reproduce reconstructed past climates (including transitions between glacials and interglacials) with appropriate changes in forcings.

2) Even projections are falsifiable. To do so simply requires that the evolution of forcings over a given period be sufficiently close to match those of a model projection; and that the temperatures in the period of the projection run consistently outside the outer bounds of the projected temperature range. It should be noted that, because the outer bounds are large relative to the projected change in temperature in the short term, that is almost impossible in the short term. In the longer term (30+ years), however, the projected (and possible) temperature changes are large relative to the outer bounds and falsification becomes easy. In other words, the experiment takes a long time to run. There is nothing wrong with that. It is simply the case that some experiments do take a long time to run. That does not make them any less scientific.
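The test described in point 2 can be sketched in a few lines. Everything here is hypothetical: the function name, the 0.02 C/yr central trend, the ±0.3 C envelope, and the flat "observations" are made-up numbers chosen only to show why a short window cannot falsify a projection while a long one can. They are not taken from any real model run.

```python
def fraction_outside(observed, lower, upper):
    """Share of years in which the observation lies outside [lower, upper]."""
    outside = sum(
        1 for obs, lo, hi in zip(observed, lower, upper) if obs < lo or obs > hi
    )
    return outside / len(observed)


years = list(range(1, 41))                # years since the projection started
central = [0.02 * t for t in years]       # made-up projected warming (deg C)
lower = [c - 0.3 for c in central]        # made-up lower bound of the envelope
upper = [c + 0.3 for c in central]        # made-up upper bound of the envelope
observed = [0.0 for _ in years]           # made-up case: no warming at all

# First decade: flat temperatures are still inside the wide envelope.
early = fraction_outside(observed[:10], lower[:10], upper[:10])

# Fourth decade: the same flat temperatures sit below the envelope every year.
late = fraction_outside(observed[30:], lower[30:], upper[30:])

print(early, late)
```

With these invented numbers the first decade gives no grounds for rejection, while the fourth decade is outside the bounds in every year, which is the "long experiment" behaviour described above.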

-

StealthAircraftSoftwareModeler at 08:29 AM on 15 October 2013Why Curry, McIntyre, and Co. are Still Wrong about IPCC Climate Model Accuracy

I’ve done a little more research, and I’ve stumbled upon something from the Met Office. This clears up some of the discussion, perhaps, and it is against some of the points I have made, so please give me credit for being honest and forthcoming in the discussion:

“We should not confuse climate prediction with climate change projection. Climate prediction is about saying what the state of the climate will be in the next few years, and it depends absolutely on knowing what the state of the climate is today. And that requires a vast number of high quality observations, of the atmosphere and especially of the ocean.”

“The IPCC model simulations are projections and not predictions; in other words the models do not start from the state of the climate system today or even 10 years ago. There is no mileage in a story about models being ‘flawed’ because they did not predict the pause; it’s merely a misunderstanding of the science and the difference between a prediction and a projection.”

I think this clears up some of the discussion. Perhaps this is what some of you were trying to explain, but it didn’t come across very clearly. I was thinking that GCMs are making predictions instead of projections. Nevertheless, I don’t like projections because they are not falsifiable. Without known inputs and projected outputs, and truth data to compare against, how are these models tested and validated? I can’t think of a single way to verify them, and only one way to falsify them, and that is for the climate to cool when it is projected to warm. So, if the current global temperature pause extends out further or turns into cooling, only then will we have a falsification of the models.

And here a few select replies to some points made above:

Tom Dayton @144: I’ll re-read the SkS post on “How Reliable are the Models” again. I had some specific issues with it, but I’ll keep them to that post. As for Steve Easterbrook’s explanation… did you read it and understand it? I don’t think so. One sentence there sums it all up: “This layered approach does not attempt to quantify model validity”. Nuf’said.

Leto @145: As for my username and background -- I am not pulling rank or appealing to authority as I understand those logical fallacies. I chose this name because it is what I do, and I think I know what I’m talking about when it comes to software and modeling. This doesn’t mean all modeling and all software, but it is easier for me to extrapolate my knowledge and experience from my domain than, say, someone in the mortuary business.

KR @146: yes, you are correct in that I was misunderstanding boundary and initial value problems. I’ve read up on them a little, and I am not sure how useful they are in the longer term. In your traffic projection example, yes they work well, but only when all of your underlying assumptions are met. For example, 10 years ago Detroit assumed much about the economy and economic growth and built a lot of stuff. Then the broader economy turned down, people left, and the city has gone bankrupt. The future is really hard to predict.

I think models are at the very low end of their boundary conditions with the current pause. Whether all the heat is stealthily sinking into the deep ocean is a separate issue, but the fact remains that sea surface temps and global air temps have been very steady for a long time, contrary to the models' projections. Even all the big climate houses are admitting the pause, so you guys might as well admit it too.

And for your statement of “as we have a pretty good handle on the physics”, I disagree. Sure we have a good understanding of a lot of physics, but in a chaotic and non linear system like the climate, I think there is much that is not known. Take ENSO, PDO, and ADO: no one has a good physics model describing these, and they are major drivers of the climate. Unless you believe there is almost nothing unknown in the climate, then your statement on having a good handle on the physics is a red herring. Donald Rumsfeld said it best: “There are known knowns; there are things we know that we know. There are known unknowns; that is to say, there are things that we now know we don't know. But there are also unknown unknowns – there are things we do not know we don't know.” I am pretty confident that there will be some unknown unknowns that will turn current climate science on its head -- and the pause is starting to back it up. If Wyatt’s and Curry’s peer reviewed paper on “Stadium Waves” (see: http://www.news.gatech.edu/2013/10/10/%E2%80%98stadium-waves%E2%80%99-could-explain-lull-global-warming) published in Climate Dynamics is correct, the pause could last much longer and would clearly falsify the climate models and most of AGW. I think we have time to wait another decade or two before taking any drastic action on curbing CO2 emissions to see if the IPCC’s projections are correct or not.

Barry @149: coin flipping is a false analogy and not applicable to forecasting climate or any chaotic system.

JasonB @150 (and Leto @145): As a decent poker player myself, it is not a foregone conclusion that an expert will beat a complete novice. On average it is much more likely an expert will win, but in any given instance the claim is not true at all. The entire casino industry is built upon the Central Limit Theorem, which states “the arithmetic mean of a sufficiently large number of iterates of independent random variables, each with a well-defined expected value and well-defined variance, will be approximately normally distributed.” If a roulette wheel pays 35-to-1 on selecting the correct number on the wheel but there are 38 numbers (counting 0 and 00), assuming a fair wheel that is random, then the house has a 5.3% advantage over the player. In the long run, they keep $0.053 for every dollar bet on the wheel. This gaming theory with a well defined mean does not apply to the climate – the climate is chaotic, non linear and does not have a well defined mean.
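For reference, the 5.3% figure can be checked with a short calculation. This is a sketch that assumes a fair 38-pocket wheel and the standard single-number payout of 35 to 1 (36 units returned including the stake); the function name and its parameters are hypothetical.

```python
from fractions import Fraction


def house_edge(pockets=38, payout_to_one=35):
    """Expected loss per unit staked on a single-number bet on a fair wheel."""
    p_win = Fraction(1, pockets)
    # Win `payout_to_one` units with probability p_win, lose 1 unit otherwise.
    expected_return = p_win * payout_to_one - (1 - p_win) * 1
    return -expected_return


edge = house_edge()
print(edge, float(edge))
```

The exact result is 2/38 = 1/19, or roughly 5.3 cents kept by the house per dollar bet.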

-

scaddenp at 08:08 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

What I would really like to see is an American (or Luxembourg) version of the document. How do you actually manage to use 250kWh/p/d? That's more than double NZ or UK but I wouldn't have thought the lifestyle that much more energy intensive. I suspect the figures for consumer energy use have some issues.

-

scaddenp at 06:40 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

vroomie - the original is linked from here at Hot Topic. Oliver Bruce did an update last year, serialised at Hot Topic, starting here. The final document can be downloaded from here.

-

GRLCowan at 06:23 AM on 15 October 2013SkS social experiment: using comment ratings to help moderation

... and so over-quick posts seeking to be first, and over-persistent seekers of the last word, will be demotivated.

-

GRLCowan at 06:19 AM on 15 October 2013SkS social experiment: using comment ratings to help moderation

Magma (#17) -- "early posts will receive more views and more opportunities for votes, up or down, than later ones, regardless of 'quality'" -- that leads to seeking to be first and/or last.

A solution that should be possible is for all comments, or all comments that are replies to the above-the-line article rather than to any other BTL comment, to be presented in pseudorandom order. That way, everyone gets an equal share of the first/last visibility advantage.
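A minimal sketch of what that could look like, assuming comments are identified by simple ids and that each viewer gets a seed (both hypothetical; nothing here reflects how SkS actually stores or serves comments):

```python
import random


def display_order(comment_ids, viewer_seed):
    """Return the comment ids shuffled, in an order that is stable per seed."""
    rng = random.Random(viewer_seed)   # seeded, so a reload keeps the same order
    shuffled = list(comment_ids)       # copy; the caller's list is left untouched
    rng.shuffle(shuffled)
    return shuffled


top_level = [101, 102, 103, 104, 105]  # hypothetical ids of replies to the article
order_for_viewer = display_order(top_level, viewer_seed=42)
print(order_for_viewer)
```

Seeding per viewer means different readers see different orders, while any one reader sees a consistent page.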

-

grindupBaker at 05:50 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

@History reader #66, DSL #70. I think History's questions are better answered by locating those bell curves of weather events and discussions of them (I recall lectures by, perhaps, Dr. Wasdell, Dr. Hansen, Prof. Muller posted as videos that included explanations of the bell curve of weather events both widening at the base and moving towards the severe events). My understanding is that a weather event is not "caused by global warming", which is simplistic. The additional energy in the system (in the oceans) makes more events more energetic, but by greatly varying amounts, so that it is incorrect to say "this is a global warming one, that's a regular one like we had before". It's important to note that "global warming" is in its early years so we should not expect every weather event to be more powerful than any prior weather event.

"powerful east coast storms", the east coast of which country or continent ? (is my so-subtle way of saying the peoples of Land Incognita really notice the navel-gazing south of the Sumas border crossing here. I mean we really really notice it and a commenter would gain great creds by searching for a similar size weather event in some country over there, study and add to their comments to show cosmopolitanism in the global topic of global warming).

-

vrooomie at 05:23 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

scaddenp, would you be so kind as to provide a link to your analysis? I'd like to share it with my Kiwi friends.

-

scaddenp at 05:04 AM on 15 October 2013Levitus et al. Find Global Warming Continues to Heat the Oceans

Don't forget in your analysis that deep ocean heat transport is still constrained by steric sea level rise.

-

scaddenp at 05:01 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

jdixon - It's a very interesting book. It inspired me to make and publish the same analysis for my country (NZ) though with a slightly different emphasis. His approach of looking at things in terms of kWh/p/d is very useful.

-

JasonB at 04:26 AM on 15 October 2013Temp record is unreliable

dvaytw,

Addressing those points in reverse order:

Figure 5 in the advanced version of this post compares raw data with corrected data, putting the lie to the last claim.

The idea that the average cannot be determined accurately due to sparse samples is disproven by the fact that the same temperature trend can be derived by using approximately 60 rural-only stations (e.g. Nick Stokes' effort referred to in the OP; caerbannog also posts regularly about his downloadable toolkit e.g. comment #8 on this post, which itself is about Kevin C's tool). Anybody attempting to cast doubt on the basis of point 2 really has to explain how the reconstructed record is so robust and insensitive to the particular stations used.

The average = (min+max)/2 temperature issue is irrelevant; all that matters is whether it creates a bias. In the US, where temperatures were recorded by volunteers and the time of day of observation (TOBS) changed over time, it actually does create a bias (a step change to cooler readings at a given station when the change occurs, which caused a reduction in trend over time as the change rolled out), but that can be corrected for, and if it's not, it demonstrably doesn't make much difference. BEST's approach, of simply splitting the station when a step change is detected, deals with this without any correction required.

Finally, a global average is not difficult to work out, but it's also not necessary to compute in order to detect global warming — the issue is the change in temperature not the temperature itself, which is why "temperature anomaly" is always used, and the change is easy to detect. One of the reasons why so few stations are required is that anomalies are strongly correlated over large distances (demonstrated empirically by Hansen et al way back in the 80s) even while the actual temperatures between nearby stations can vary widely (e.g. with altitude and surrounding environment).
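To make the anomaly point concrete, here is a small sketch with made-up numbers (the two station series, the three-year baseline, and the function name are all assumptions for illustration, not real data). The two stations differ by 10 C in absolute temperature, yet their anomalies relative to their own baselines agree.

```python
def anomalies(series, baseline_years):
    """Subtract the station's own mean over its first `baseline_years` values."""
    base = sum(series[:baseline_years]) / baseline_years
    return [t - base for t in series]


# Made-up annual means (deg C): a valley station and a nearby mountain station.
valley = [15.0, 15.1, 15.2, 15.3, 15.4, 15.5]
mountain = [5.0, 5.1, 5.2, 5.3, 5.4, 5.5]

valley_anom = anomalies(valley, baseline_years=3)
mountain_anom = anomalies(mountain, baseline_years=3)

# The absolute readings differ by 10 C, but the changes are the same.
largest_mismatch = max(abs(v - m) for v, m in zip(valley_anom, mountain_anom))
print(valley_anom[-1], mountain_anom[-1], largest_mismatch)
```

Averaging anomalies rather than raw temperatures is what lets a sparse network, with stations at very different altitudes, still recover the change.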

I should probably also point out that the "global warming claim" isn't based on "a graph" that shows that "mean annual global temperature" has been increasing. For a start, it goes back over 100 years, with the calculations of Arrhenius that showed increasing atmospheric CO2 concentrations would increase global temperatures, coupled with the fact that we have increased atmospheric CO2 concentrations by about 40% and continue to do so; the graph merely provides evidence to support the theory. Secondly, there's an awful lot more evidence out there than just "a graph". Tell them to look at what's happening in the Arctic sometime.

-

MA Rodger at 04:16 AM on 15 October 2013Temp record is unreliable

dvaytw @274.

The first criticism, that there is no "global average" temperature, is hardly "fundamental." The word "average" has many meanings. That it is used in a way of which the critic disapproves is of no fundamental importance, except perhaps to the critic himself who is evidently "no statistician."

Given what was said, I guess the criticism is confined to land temperature measurements.

It is true that on land the daily maximum and minimum temperature is all that is recorded. It is the standard practice and dates back to 1772 with the CET. The average of these two readings would then be "the mean recorded temperature" which makes it an average.

The critic appears to be suggesting that an average minute-by-minute daily temperature would yield a result with no global temperature rise. Quite how that could be so is unclear. Both the maximum and the minimum averages have been rising in recent decades. And that the minimums have been rising more steeply than the maximums is symptomatic of increased atmospheric insulation - or an enhanced greenhouse effect.

As for the second criticism, it is pure nonsense. As DSL reminds us, the assertion that urban heat islands have significantly distorted the temperature record is difficult to maintain when the satellite record provides essentially the same result.

-

jdixon1980 at 03:55 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

I made a similar comment under the relevant "Climate Myth" post (#65, by the way), and looking back, I see that scaddenp has offered some insights there (thought I recognized your ID). I have not read MacKay's Sustainable Energy - Without the Hot Air, but I see he has a free downloadable pdf here: http://www.withouthotair.com/download.html, and I will take your suggestion of reading it.

-

jdixon1980 at 03:42 AM on 15 October 2013Why climate change contrarians owe us a (scientific) explanation

scaddenp @60:

"MacKay estimates UK consumption at 125kWh/p/d while USA manages 250kWh/p/d. By comparison Hong Kong manages on 80kWh/p/d and India on less than 20. I'd say there was scope to redefine "need" somewhat."

I'd say there is too, but I don't think we can say that energy "needs" are necessarily met by a per-capita distribution as low as India's, let alone that of many sub-Saharan African countries. I would like to see more discussion of this, as it is the only argument against rapid decarbonization of industry that I have heard from climate contrarians that seems to me like it could even potentially be valid. With (hundreds of millions?) of people the world over truly living with what we could only honestly call "energy poverty" (I'm thinking at least that a kids-huddled-under-streetlights-doing-homework-in-the-evening type of situation qualifies), there is undoubtedly a serious problem. I also understand that international treaties like Kyoto/Copenhagen/UNFCCC don't place mandatory emissions reductions or even emissions growth limits on poor (or "non-Annex 1"?) countries, so it's not like international climate policy is overtly snubbing the world's energy-poor. But on the other hand, is it possible that staying within, say, a 2-degrees-C global emissions budget could be irreconcilable with the goal of lifting X number of people out of energy poverty? Or, on the other hand, if the goals are reconcilable, how much longer will it take for the quality of life of that X number of people to be improved on a responsible (in terms of maintaining or restoring climate stability) emissions budget, compared to how long it would take by simply throwing up coal and gas power plants willy-nilly, emissions budget be damned (entertaining for the moment the idea that we have enough readily available fossil fuel on Earth to eradicate energy poverty by burning it)? If the difference is several decades, then adequately addressing the AGW problem could be imposing a continued miserable existence (or at least one that would seem so to me if I had to live it) on an entire generation of the world's energy-poor.

This bothers me a lot (I live in the US, where we overconsume and can't agree on a fiscal budget, let alone a CO2 one), especially when I consider it also in light of the concept of "global overshoot day," defined as the day of a given year on which our cumulative consumption of natural resources exceeds that which the Earth has the capacity to restore in a year, which according to the Global Footprint Network (an organization that I admittedly don't know much about, so I can't speak to the robustness or credibility of the science behind its pronouncements) occurred sometime in August this year, if I remember correctly. Meaning that, if per capita energy consumption correlates to consumption of other resources, as I assume it must to some degree, then raising India's or China's per-capita energy consumption up to say even UK's level is a scary thought for global resource conservation in general, whether or not it involves blowing past any global emissions budget that might avoid climate mayhem.

I realize I'm touching on a lot of highly specialized subjects in one rambling comment. The main point that I would like to make is that I would like to see either a thoughtful SkS post that addresses the subject of energy poverty head-on, or more discussion under the Climate Myth "CO2 Limits Will Hurt the Poor," where the main post (as of now) simply takes an end-around route by observing that unmitigated climate change itself will disproportionately hurt the poor. I don't doubt that that is true, but I would like to see some kind of comparative analysis of whether climate change will hurt the poor more or less than the differential amount of energy poverty (if any) that the poor will be made to endure by being subject to a global emissions budget that will safely avoid climate catastrophe (even if there are no limits being imposed on poorer countries now, surely limits would have to be imposed as soon as the kind of rapid emissions growth ensued that would be necessary to lift people from energy poverty - by burning fossil fuels - to anything resembling what would feel like energy prosperity to an American or Brit, e.g.).

-

tmbtx at 03:03 AM on 15 October 2013Two degrees: how we imagine climate change

I believe the current status is we've already warmed nearly 1 degree with another degree already in the pipeline. So, essentially, we're already at 2. Is it fair to say that, even with the urgency that people are trying to invoke, it's still being understated?

-

DSL at 02:41 AM on 15 October 2013Temp record is unreliable

dvaytw, I suggest you ask the person how s/he would, ideally, determine whether or not global energy storage was increasing via the enhanced greenhouse effect. That will either push the person toward an evasive rejection of the greenhouse effect (which you can counter with directly measured surface data that confirm model expectations) or push the person into giving you their answer to the question. If you get that answer, then you can compare it with what scientists are actually doing.

It's an odd complaint anyway, since satellite data--even the raw data--confirm the surface station trend, and stratospheric cooling can only be partially attributed to other causes. Then there's ocean heat content data (an invitation to weasel via Pielke and Tisdale, though), global ice mass loss data (harder to deal with, but the move will probably be "it's happened before."), changes in biosphere, thermosteric sea level rise, and the host of other fingerprints.
