Recent Comments

Comments 112851 to 112900:

-

Berényi Péter at 01:27 AM on 12 August 2010 | On Statistical Significance and Confidence

#13 CBDunkerson at 00:09 AM on 12 August, 2010: "We must see rising temperatures SOMEWHERE within the climate system. In the oceans for instance." Nah. Recently it's been coming out, not going in. -

barry1487 at 01:01 AM on 12 August 2010 | On Statistical Significance and Confidence

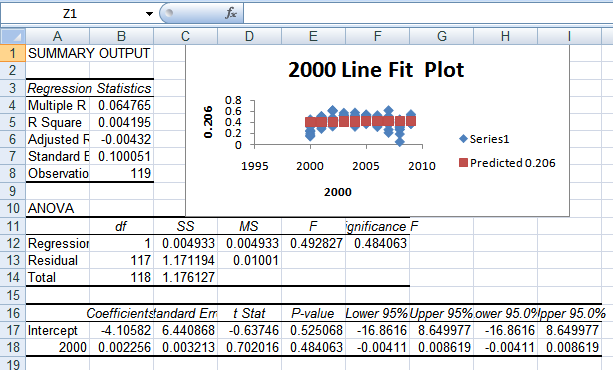

Discussing trends and statistical significance is something that I attempt to do - with no training in statistics. All I have learned from various websites over the last few years is conceptual, not mathematical. I would appreciate anyone with sufficient qualifications straightening out any misconceptions re the following:

1) Generally speaking, the greater the variance in the data, the more data you need (in a time series) to achieve statistical significance on any trend.
2) With too-short samples, the resulting trend may be more an expression of the variability than of any underlying trend.
3) The number of years required to achieve statistical significance in temperature data will vary slightly depending on how 'noisy' the data is in different periods.
4) If I wanted to assess the climate trend of the last ten years, a good way of doing it would be to calculate the trend from 1980 - 1999, then the trend from 1980 - 2009, and compare the results. In this analysis, I am using a minimum of 20 years of data for the first trend (statistically significant), and then 30 years of data for the second, which includes the data from the first. (With Hadley data, the 30-year trend is slightly higher than the 20-year trend.)

Aside from asking these questions for my own satisfaction, I'm hoping they might give some insight into how a complete novice interprets statistics from blogs, and provide some calibration for future posts by people who know what they're talking about. :-)

If it's not too bothersome, I'd be grateful if anyone can point me to the thing to look for in the Excel regression analysis that tells you what the statistical significance is - and how to interpret it if it's not described in the post above. I've included a snapshot of what I see - no amount of googling helps me know which box(es) to look at and how to interpret them.
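On the Excel question: as far as I know, Excel's regression tool (Analysis ToolPak) reports the slope in the row for the X variable (e.g. "X Variable 1"), and the columns to read are "Coefficients", "Standard Error", "t Stat" and "P-value". The sketch below recomputes those four numbers from scratch so the connections are visible: slope divided by its standard error gives t, and the P-value is the two-sided probability of a |t| at least that large if the true slope were zero. It assumes independent, normally distributed errors (no autocorrelation), which is the standard assumption behind that table; the function names and the five-point data set are made up for illustration. It also speaks to points 1 to 3: the standard error grows with the scatter about the line and shrinks as more years are added.

```python
import math


def t_two_sided_p(t, df, steps=2000):
    """Two-sided p-value for a Student's t statistic with `df` degrees of freedom.

    Substituting u = sqrt(df) * tan(theta) turns the t density into the smooth
    integrand cos(theta) ** (df - 1) on [0, pi/2), which Simpson's rule handles well.
    """
    theta_max = math.atan(abs(t) / math.sqrt(df))
    norm = math.exp(math.lgamma((df + 1) / 2) - math.lgamma(df / 2)) / math.sqrt(math.pi)
    h = theta_max / steps  # `steps` must be even for Simpson's rule
    total = 1.0 + math.cos(theta_max) ** (df - 1)
    for i in range(1, steps):
        total += (4 if i % 2 else 2) * math.cos(i * h) ** (df - 1)
    half_mass = norm * total * h / 3  # P(0 < T < |t|)
    return max(0.0, 1.0 - 2.0 * half_mass)


def ols_trend(x, y):
    """Least-squares slope, its standard error, t statistic and two-sided p-value.

    These correspond to the slope row of a typical regression table
    (Coefficients, Standard Error, t Stat, P-value). Assumes independent errors.
    """
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    sxx = sum((xi - xbar) ** 2 for xi in x)
    sxy = sum((xi - xbar) * (yi - ybar) for xi, yi in zip(x, y))
    slope = sxy / sxx
    intercept = ybar - slope * xbar
    sse = sum((yi - intercept - slope * xi) ** 2 for xi, yi in zip(x, y))
    se = math.sqrt(sse / (n - 2) / sxx)  # residual variance divided by Sxx
    t = slope / se
    return slope, se, t, t_two_sided_p(t, n - 2)


slope, se, t, p = ols_trend([1, 2, 3, 4, 5], [1, 3, 2, 5, 4])
print(f"slope={slope:.3f} se={se:.3f} t={t:.3f} p={p:.3f}")
```

A P-value below 0.05 is what "significant at the 95% level" conventionally means; with autocorrelated temperature data the real uncertainty is larger than this table suggests.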

-

Alden Griffith at 00:59 AM on 12 August 2010 | On Statistical Significance and Confidence

Stephan Lewandowsky: I used the Bayesian regression script in Systat with a diffuse prior. In this case I did not specifically deal with autocorrelation. We might expect that over such a short time period there would be little autocorrelation through time, which does appear to be the case. You are right that this certainly can be an issue with time-series data, though; if you look at longer temperature periods there is strong autocorrelation. apeescape: I'm definitely not a Bayesian authority, but I assume you're asking whether I examined this in more of a hypothesis-testing framework? No, in this case I just examined the credible interval of the slope. Ken Lambert: please read my previous post. -Alden -
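One way to check Alden's "little autocorrelation" point is to compute the lag-1 autocorrelation r1 of the trend residuals and the effective sample size n_eff = n(1 - r1)/(1 + r1), the adjustment used, for example, by Santer et al. (2008). This is a minimal sketch assuming an AR(1)-like noise model; the helper names are my own, and no real temperature values are included.

```python
def ols_residuals(x, y):
    """Residuals from an ordinary least-squares straight-line fit."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    slope = (sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
             / sum((a - xbar) ** 2 for a in x))
    intercept = ybar - slope * xbar
    return [b - (intercept + slope * a) for a, b in zip(x, y)]


def lag1_autocorrelation(series):
    """Sample lag-1 autocorrelation coefficient r1."""
    n = len(series)
    mean = sum(series) / n
    num = sum((series[i] - mean) * (series[i + 1] - mean) for i in range(n - 1))
    den = sum((s - mean) ** 2 for s in series)
    return num / den


def effective_sample_size(n, r1):
    """AR(1)-style adjustment n_eff = n (1 - r1) / (1 + r1).

    With r1 > 0 the trend's standard error should be based on n_eff < n.
    A negative r1 gives n_eff > n; a conservative choice is to cap it at n.
    """
    return n * (1 - r1) / (1 + r1)
```

With yearly anomalies `temps` for `years`, the usage would be `r1 = lag1_autocorrelation(ols_residuals(years, temps))`, then `effective_sample_size(len(years), r1)`. With only 15 points r1 itself is poorly estimated, so this is a rough check rather than a full treatment.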

Arkadiusz Semczyszak at 00:12 AM on 12 August 2010 | On Statistical Significance and Confidence

Since we are at the basics of statistics: I studied statistics in ecology and agriculture for a "long three years". Why exactly 15 years? I have written repeatedly that the period over which a trend is counted should not be chosen in round decimal numbers, because noise-type variability such as EN(LN)SO does not run on a decimal cycle. For example, 100- and 150-year AMO trends combined with the negative phase of the AMO give positive, "improving" results. The period over which we calculate a trend must have a deep reason. While in the above-mentioned cases (100, 150 years) the error is small, in this particular case (the "flat" phase of the AMO after a period of growth, and 1998, an extreme El Nino), the trend should be calculated from the same phase of EN(LN)SO after the rebound from the extreme El Nino, i.e. after 2001, or else the "noise" should be removed: the extreme El Nino and the "leap" from the cold to the warm phase of the AMO. Either way, it may not matter much whether or not it is currently getting warmer; once again I (very much) regret the tropical fingerprint of CO2 (McKitrick et al., unfortunately published in Atmos Sci Lett; here, too, it came down to statistics, including the selection of data). -

CBDunkerson at 00:09 AM on 12 August 2010 | On Statistical Significance and Confidence

Ken Lambert #12 wrote: "The answer is that the temperatures look like they have flattened over the last 10-12 years and this does not fit the AGW script!" This is fiction. Temperatures have not "flattened out"... they have continued to rise. Can you cherry pick years over a short time frame to find flat (or declining!) temperatures? Sure. But that's just nonsense. When you look at any significant span of time, even just the 10-12 years you cite, what you've got is an increasing temperature trend. Not flat. "With an increasing energy imbalance applied to a finite Earth system (land, atmosphere and oceans) we must see rising temperatures." We must see rising temperatures SOMEWHERE within the climate system. In the oceans for instance. The atmospheric temperature on the other hand can and does vary significantly from year to year. -

Ken Lambert at 00:00 AM on 12 August 2010 | On Statistical Significance and Confidence

Alden # Original Post We can massage all sorts of linear curve fits and play with confidence limits to the temperature data - and then we can ask: why are we doing this? The answer is that the temperatures look like they have flattened over the last 10-12 years and this does not fit the AGW script! AGW believers must keep explaining the temperature record in terms of linear rise of some kind - or the theory starts looking more uncertain and explanations more difficult. It is highly likely that the temperature curves will be non-linear in any case - because the forcings which produce these temperature curves are non-linear - some are logarithmic, some are exponential, some are sinusoidal and some we do not know. The AGW theory prescribes that a warming imbalance is there all the time and it is increasing with CO2GHG concentration. With an increasing energy imbalance applied to a finite Earth system (land, atmosphere and oceans) we must see rising temperatures. If not, the energy imbalance must be falling - which either means that radiative cooling and other cooling forcings (aerosols and clouds) are offsetting the CO2GHG warming effects faster than they can grow, and faster than AGW theory predicts. -

Dikran Marsupial at 23:36 PM on 11 August 2010 | On Statistical Significance and Confidence

I'm going to have a go at explaining why 1 minus the p-value is not the confidence that the alternative hypothesis is true, in (only) slightly more mathematical terms. The basic idea of a frequentist test is to see how likely it is that we should observe a result assuming the null hypothesis is true (in this case, that there is no positive trend and the upward tilt is just due to random variation). The less likely the data under the null hypothesis, the more likely it is that the alternative hypothesis is true. Sound reasonable? I certainly think so. However, imagine a function that transforms the likelihood under the null hypothesis into the "probability" that the alternative hypothesis is true. It is reasonable to assume that this function is strictly decreasing (the more likely the null hypothesis, the less likely the alternative hypothesis) and gives a value between 0 and 1 (which are traditionally used to mean "impossible" and "certain"). The problem is that, other than the fact that it is decreasing and bounded by 0 and 1, we don't know what that function actually is. As a result there is no direct calibration between the probability of the data under the null hypothesis and the "probability" that the alternative hypothesis is true. This is why scientists like Phil Jones say things like "at the 95% level of significance" rather than "with 95% confidence". He can't make the latter statement (although that is what we actually want to know) simply because we don't know this function. As a minor caveat, I have used lots of "" in this post because under the frequentist definition of a probability (long-run frequency) it is meaningless to talk about the probability that a hypothesis is true. That means in the above I have been mixing Bayesian and frequentist definitions, but I have used the "" to show where the dodginess lies. As to simplifications: we should make things as simple as possible, but not more so (as noted earlier).
But also we should only make a simplification if the statement remains correct after the simplification, and in the specific case of "we have 92% confidence that the HadCRU temperature trend from 1995 to 2009 is positive" that simply was not correct (at least for the traditional frequentist test). -
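In Bayesian terms, the unknown "function" Dikran describes is Bayes' theorem, and it needs two ingredients a p-value does not supply: the prior probabilities of the two hypotheses and how probable the data are under the alternative. (Strictly, the p-value is a tail probability, P(T >= t_obs | H_0), not P(D | H_0) itself.)

```latex
P(H_1 \mid D) = \frac{P(D \mid H_1)\,P(H_1)}{P(D \mid H_1)\,P(H_1) + P(D \mid H_0)\,P(H_0)}
```

The same p-value can therefore correspond to very different values of P(H_1 | D), depending on P(H_1) and P(D | H_1).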

andrewcodd at 23:29 PM on 11 August 2010 | On Statistical Significance and Confidence

"While this whole discussion comes from one specific issue involving one specific dataset, I believe that it really stems from the larger issue of how to effectively communicate science to the public. Can we get around our jargon? Should we embrace it? Should we avoid it when it doesn’t matter? All thoughts are welcome…" More research projects should have meta-analysis as a goal. The outcomes should be distilled à la John's one-line responses to denialist arguments, and these simplifications should be subject to peer review: firstly by scientists, but also by sociologists, advertising executives, politicians, school teachers, etc. As messages become condensed, the scope for rhetorical interpretation increases. Science should limit its responsibility to science but should structure itself in a way that facilitates simplification. I think this is why we have political parties, or any committee. I hope the blogosphere can keep these mechanics in check. The story of the Tower of Babel is perhaps worth remembering. It describes a situation where we reach for the stars and end up not being able to communicate with one another. -

Dikran Marsupial at 23:20 PM on 11 August 2010 | On Statistical Significance and Confidence

John Russell If it is any consolation, I don't think it is overly controversial to suggest that there are many (I almost wrote majority ;o) active scientists who use tests of statistical significance every day but don't fully grasp the subtleties of the underlying statistical framework. I know from my experience of reviewing papers that it is not unknown for a statistician to make errors of this nature. It is a much more subtle concept than it sounds. chriscanaris I would suggest that the definition of an outlier is another difficult area. IMHO there is no such thing as an outlier independent of assumptions made regarding the process generating the data (in this case, the "outliers" are perfectly consistent with climate physics, so they are "unusual" but not, strictly speaking, outliers). The best definition of an outlier is an observation that cannot be reconciled with a model that otherwise provides satisfactory generalisation. ABG Randomisation/permutation tests are a really good place to start in learning about statistical testing, especially for anyone with a computing background. I can recommend "Understanding Probability" by Henk Tijms for anyone wanting to learn about probability and stats, as it uses a lot of simulations to reinforce the key ideas, rather than just maths. -

Alden Griffith at 23:05 PM on 11 August 2010 | On Statistical Significance and Confidence

John Brooks: yes, this is definitely one way to test significance. It's called a "randomization test" and really makes a whole lot of sense. Also, there are fewer assumptions that need to be made about the data. However, the reason that you are getting lower probabilities is that you are conducting the test in a "one-tailed" manner; that is, you are asking whether the slope is greater, instead of whether it is simply different (i.e. it could be negative too). Most tests should be two-tailed unless you have specified your alternative hypothesis (positive slope) before you collect the data. -Alden p.s. I'll respond to others soon, I just don't have time right now. -
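Written out, the two versions Alden contrasts differ only in which resampled slopes count as "at least as extreme" as the observed one. Here b* denotes a slope generated under the null (e.g. from a shuffled series) and b_obs the observed slope; the notation is mine, not Alden's.

```latex
p_{\text{one}} = \Pr\left(b^{*} \ge b_{\text{obs}} \mid H_0\right),
\qquad
p_{\text{two}} = \Pr\left(\lvert b^{*} \rvert \ge \lvert b_{\text{obs}} \rvert \mid H_0\right)
```

If the null distribution of b* is symmetric about zero and b_obs > 0, then p_two = 2 p_one, which is roughly why a one-tailed test gives about half the probability.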

adelady at 22:44 PM on 11 August 2010 | Models are unreliable

rcglinski. Not precipitation and not a century, but this item gives a really neat alignment of humidity over the last 40 years. I've not followed the references through, but you might find some leads to what you're after if you do. http://tamino.wordpress.com/2010/08/08/urban-wet-island/#comments -

Bern at 22:41 PM on 11 August 2010 | On Statistical Significance and Confidence

As has been mentioned elsewhere by others, given that the data prior to this period showed a statistically significant temperature increase, with a calculated slope, then surely the null hypothesis should be that the trend continues, rather than there is no increase? I guess it depends on whether you take any given interval as independent of all other data points... stats was never my strong point - we had the most uninspiring lecturer when I did it at uni, it was a genuine struggle to stay awake! -
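Bern's suggestion can be written down directly. A slope test compares the estimated slope with a hypothesised value; the usual choice is zero, but nothing prevents using the earlier trend instead. This sketch ignores autocorrelation and assumes the usual least-squares conditions; the symbol for the hypothesised slope is mine.

```latex
t = \frac{\hat\beta_1 - \beta_{1,0}}{\operatorname{SE}(\hat\beta_1)},
\qquad \text{compared against a Student } t \text{ distribution with } n-2 \text{ degrees of freedom}
```

Setting the hypothesised slope to 0 asks "is there any trend?", while setting it to the earlier slope asks "has the trend changed?". These are different questions, and with 15 years of data neither test may have the power to reject its null.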

chris1204 at 22:38 PM on 11 August 2010 | On Statistical Significance and Confidence

The data set contains two points which are major 'outliers' - 1996 (low) and 1998 (high). I appreciate 1998 is attributable to a very strong El Nino. Very likely, the effect of the two outliers is to cancel one another out. Nevertheless, it would be an interesting exercise to know the probability of a positive slope if either or both outliers were removed (a single and double cherry pick if you like) given the 'anomalous' nature of the gap between two temperatures in such a short space of time. -
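chris1204's experiment is easy to try: refit the trend with 1996 and/or 1998 dropped. The sketch below is a minimal version; `slope_without` is a hypothetical helper of my own, and the yearly values would have to come from the HadCRU series itself (none are reproduced here). As Dikran points out, removing points only because they look unusual is hard to justify, so treat this as a sensitivity check rather than a better estimate.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    return (sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
            / sum((a - xbar) ** 2 for a in x))


def slope_without(years, temps, exclude=()):
    """Refit the linear trend after dropping the listed years."""
    dropped = set(exclude)
    kept = [(yr, t) for yr, t in zip(years, temps) if yr not in dropped]
    xs, ys = zip(*kept)
    return ols_slope(xs, ys)
```

For example, `slope_without(years, temps, exclude=[1996, 1998])` gives the double cherry pick; its significance can then be assessed with a t-test or a permutation test, just as for the full series.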

Dikran Marsupial at 22:28 PM on 11 August 2010 | Has Global Warming Stopped?

fydijkstra A few points: (i) just because a flattening curve gives a better fit to the calibration data than a linear function does not imply that it is a better model. If it did, then there would be no such thing as over-fitting. (ii) it is irrelevant that most real-world functions saturate at some point if the current operating point is nowhere near saturation. (iii) there is indeed no physical basis to the flattening model; however, the models used to produce the IPCC projections are based on our understanding of physical processes. They are not just models fit to the training data. That is one very good reason to have more confidence in their projections as predictions of future climate (although they are called "projections" to make it clear that they shouldn't be treated as predictions without the appropriate caveats). (iv) while low-order polynomials are indeed useful, being a low-order polynomial does not mean that there is no over-fitting. A model can be over-fit without exactly interpolating the calibration data, and you have given no real evidence that your model is not over-fit. (v) your plot of the MDO is interesting, as not only is there an oscillation, but it is superimposed on a linear function of time, so it too goes off to infinity. (vi) as there are only 2 cycles of data shown in the graph, there isn't really enough evidence that it really is an oscillation; if nothing else, it (implicitly) assumes that the warming from the last part of the 20th century is not caused by anthropogenic GHG emissions. If you take that slope away, then there is very little evidence to support the existence of an oscillation. (vii) it would be interesting to see the error bars on your flattening model. I suspect there are not enough observations to greatly constrain the behaviour of the model beyond the calibration period, in which case the model would not give useful predictions. -
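Points (i) and (iv) can be illustrated with a small experiment: fit polynomials of increasing degree to a calibration period and compare their in-sample and held-out residuals. The sketch below uses NumPy's `polyfit`/`polyval` on synthetic data (a straight line plus noise, not a temperature record), and `fit_errors` is a hypothetical helper. In-sample error can only go down as the degree rises, so a better calibration fit on its own says nothing about which model is right.

```python
import numpy as np


def fit_errors(x, y, n_train, degrees=(1, 2, 3)):
    """In-sample and held-out residual sums of squares for polynomial fits.

    The first `n_train` points are the calibration period; the rest are held out.
    """
    x_tr, y_tr, x_te, y_te = x[:n_train], y[:n_train], x[n_train:], y[n_train:]
    out = {}
    for deg in degrees:
        coeffs = np.polyfit(x_tr, y_tr, deg)
        rss_in = float(np.sum((y_tr - np.polyval(coeffs, x_tr)) ** 2))
        rss_out = float(np.sum((y_te - np.polyval(coeffs, x_te)) ** 2))
        out[deg] = (rss_in, rss_out)
    return out


# Synthetic illustration only: a linear trend plus Gaussian noise.
rng = np.random.default_rng(0)
x = np.arange(40, dtype=float)
y = 0.02 * x + rng.normal(0.0, 0.1, size=x.size)
for deg, (rss_in, rss_out) in fit_errors(x, y, n_train=30).items():
    print(f"degree {deg}: in-sample RSS {rss_in:.3f}, held-out RSS {rss_out:.3f}")
```

The held-out column is the one that speaks to predictive value; the in-sample column always favours the more flexible curve.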

John Russell at 22:25 PM on 11 August 2010 | On Statistical Significance and Confidence

I hate to admit this -- I'm very aware some will snort in derision -- but as a reasonably intelligent member of the public, I don't really understand this post and some of the comments that follow. My knowledge of trends in graphs is limited to roughly (visually) estimating the area contained below the trend line and that above the trend line, and if they are equal over any particular period then the slope of that line appears to me to be a correct interpretation of the trend. That's why, to me, the red line seems more accurate than the blue line on the graph above. And this brings me to the problem we're up against in explaining climate science to the general public: only a tiny percentage (and yes, it's probably no more than 1 or 2 percent of the population) will manage to wade through the jargon and presumed base knowledge that scientists assume the reader can follow. Some of the principles of climate science I've managed to work out by reading between the lines and googling -- turning my back immediately on anything that smacks just of opinion and lacks links to the science. But it still leaves huge areas that I just have to take on trust, because I can't find anyone who can explain them in words I can understand. This probably should make me prime Monckton-fodder, except that even I can see that he and his ilk are politically motivated to twist the facts to suit their agenda. Unfortunately, the way real climate science is put across provides massive opportunities for the obfuscation that we so often complain about. Please don't take this personally, Alden; I'm sure you're doing your best to simplify -- it's just that even your simplest is not simple enough for those without the necessary background. -
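John's "equal areas above and below" rule of thumb is close to a real property of the best-fit line: the vertical gaps between the points and the line (the residuals) add up to zero. The catch is that any line through the average point has that property, whatever its slope; least squares picks, among those lines, the one whose squared gaps add up to the smallest total. A tiny made-up example, with helper names of my own:

```python
def ols_line(x, y):
    """Least-squares slope and intercept."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    slope = (sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
             / sum((a - xbar) ** 2 for a in x))
    return slope, ybar - slope * xbar


def residual_sums(x, y, slope, intercept):
    """Return (signed sum, sum of squares) of the gaps between points and a line."""
    res = [b - (intercept + slope * a) for a, b in zip(x, y)]
    return sum(res), sum(r * r for r in res)


x, y = [1, 2, 3, 4, 5], [1, 3, 2, 5, 4]
slope, intercept = ols_line(x, y)
print(residual_sums(x, y, slope, intercept))      # best-fit line
print(residual_sums(x, y, 0.0, sum(y) / len(y)))  # flat line through the average
```

Both lines balance the points above and below them, but the flat line leaves much bigger squared gaps, which is why the sloped one is preferred.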

Berényi Péter at 20:55 PM on 11 August 2010 | Temp record is unreliable

#109 kdkd at 19:37 PM on 11 August, 2010: "Your approach still gives the appearance of cherry picking stations"

You are kidding. I have cherry picked all Canadian stations north of the Arctic Circle that are reporting; is that what you mean? Should I include stations with no data, or what? How would you take a random sample of the seven (7) stations in that region still reporting to GHCN every now and then?

ID     Name             Lat     Lon
71081  HALL BEACH,N.    68.78   -81.25
71090  CLYDE,N.W.T.     70.48   -68.52
71917  EUREKA,N.W.T.    79.98   -85.93
71924  RESOLUTE,N.W.    74.72   -94.98
71925  CAMBRIDGE BAY    69.10  -105.12
71938  COPPERMINE,N.    67.82  -115.13
71957  INUVIK,N.W.T.    68.30  -133.48

BTW, here is the easy way to cherry pick the Canadian Arctic. Hint: follow the red patch. -

fydijkstra at 20:43 PM on 11 August 2010 | Has Global Warming Stopped?

In my comment #20 I showed that the data fit better to a flattening curve than to a straight line. This is true for the last 15 years, but also for the last 50 years. I also suggested a reason why a flattening curve could be more appropriate than a straight line: most processes in nature follow saturation patterns instead of continuing ad infinitum. Several comments criticized the polynomial function that I used. ‘There is no physical basis for that!’ could be the shortest and most friendly summary of these comments. Well, that’s true! There is no physical basis for using a polynomial function to describe climatic processes, regardless of which order the function is: first (linear), second (quadratic) or higher. Such functions cannot be used for predictions, as Alden also states: we are only speaking about the trend ‘to the present’. Alden did not use any physical argument in his trend analysis, and neither did I, apart from the suggestion about ‘saturation.’ A polynomial function of low order can be very convenient to reduce the noise and show a smoothed development. Nothing more than that. It has nothing to do with ‘manipulating [as a] substitute of knowing what one is doing’ (GeorgeSP, #61). A polynomial function should not be extrapolated. So much for the statistical arguments. Is there really no physical argument why global warming could slow down or stop? Yes, there are such arguments. As Akasofu has shown, the development of the global temperature after 1800 can be explained as a combination of the multi-decadal oscillation and a recovery from the Little Ice Age. See the following figure. The MDO has been discussed in several peer-reviewed papers, and they tend toward the conclusion that we could expect a cooling phase of this oscillation for the coming decades. So, the phrase ‘global warming has stopped’ could be true for the time being. The facts do not contradict this.

What causes this recovery from the Little Ice Age, and how long will this recovery proceed? That could be a multi-century oscillation. When we look at Roy Spencer's ‘2000 years of global temperatures’ we see an oscillation with a wavelength of about 1400 years: minima in 200 and 1600, maximum in 800. The next maximum could be in 2200.

-

John Brookes at 19:43 PM on 11 August 2010 | On Statistical Significance and Confidence

Another interesting way to look at it is to look at the actual slope of the line of best fit, which I get to be 0.01086. Now take the actual yearly temperatures and randomly assign them to years. Do this (say) a thousand times. Then fit a line to each of the shuffled data sets and look at what fraction of the time the shuffled data produces a slope of greater than 0.01086 (the slope the actual data produced). So for my first trial of 1000 I get 3.5% as the percentage of times random re-arrangement of the temperature data produces a greater slope than the actual data. The next trial of 1000 gives 3.5% again, and the next gave 4.9%. I don't know exactly how to phrase this as a statistical conclusion, but you get the idea. If the data were purely random with no trend, you'd be expecting ~50%. -
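John's shuffling procedure is a permutation (randomization) test, and it only takes a few lines. The sketch below returns both the one-sided fraction he computed and the two-sided version Alden recommends. Assumptions, all mine: the function names; counting shuffled slopes "at least as large" rather than strictly greater; and the common +1 correction, which counts the observed ordering as one of the permutations so the p-value is never exactly zero. Shuffling also treats the years as interchangeable under the null, which ignores any autocorrelation.

```python
import random


def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    return (sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
            / sum((a - xbar) ** 2 for a in x))


def permutation_trend_test(years, temps, n_perm=1000, seed=0):
    """Shuffle temperatures across years and count slopes at least as extreme.

    Returns (observed slope, one-sided p, two-sided p).
    """
    rng = random.Random(seed)
    observed = ols_slope(years, temps)
    shuffled = list(temps)
    n_greater = n_abs_greater = 0
    for _ in range(n_perm):
        rng.shuffle(shuffled)
        s = ols_slope(years, shuffled)
        n_greater += int(s >= observed)
        n_abs_greater += int(abs(s) >= abs(observed))
    return (observed,
            (n_greater + 1) / (n_perm + 1),
            (n_abs_greater + 1) / (n_perm + 1))
```

On this framing, John's 3.5% is a one-sided p-value of about 0.035; the two-sided value would be roughly double. Note that under no trend the one-sided fraction is not fixed at 50%: it can land anywhere between 0 and 1, with 0.5 only as its middle value.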

kdkd at 19:37 PM on 11 August 2010 | Temp record is unreliable

BP #108 Your approach still gives the appearance of cherry picking stations. As I said previously, you need to make a random sample of stations to examine. Individual stations on a global grid are not informative, except as curiosities :) -

Berényi Péter at 18:59 PM on 11 August 2010 | Temp record is unreliable

This one is related to the figure above. It shows the adjustments to GHCN raw data relative to the Environment Canada Arctic dataset (that is, the difference between the red and blue curves). The adjustment history is particularly interesting. It introduces an additional +0.15°C/decade trend after 1964, and none before.
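The calculation described here, the trend of the difference between two series before and after a chosen year, can be sketched as follows. `adjustment_trends` is a hypothetical helper, and the breakpoint (1964 in the comment) is just a parameter. Nothing in this calculation says whether the adjustments are justified; it only quantifies them.

```python
def ols_slope(x, y):
    """Ordinary least-squares slope of y on x."""
    n = len(x)
    xbar, ybar = sum(x) / n, sum(y) / n
    return (sum((a - xbar) * (b - ybar) for a, b in zip(x, y))
            / sum((a - xbar) ** 2 for a in x))


def adjustment_trends(years, adjusted, reference, breakpoint):
    """Per-decade trend of (adjusted - reference) before and after `breakpoint`."""
    diff = [a - r for a, r in zip(adjusted, reference)]
    before = [(yr, d) for yr, d in zip(years, diff) if yr < breakpoint]
    after = [(yr, d) for yr, d in zip(years, diff) if yr >= breakpoint]
    trends = []
    for part in (before, after):
        xs, ys = zip(*part)
        trends.append(10.0 * ols_slope(xs, ys))  # per year -> per decade
    return tuple(trends)
```

Called as `adjustment_trends(years, ghcn_adjusted, env_canada, 1964)`, where both series are yearly means aligned to the same `years` (the variable names are illustrative only).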

-

gallopingcamel at 16:30 PM on 11 August 2010 | Why I care about climate change

Some great posts! Here are a few comments: macoles (#123 & #124), The irony was unintended. For me the establishment/consensus is often wrong whether it be based on religion or science. It is in my nature to question authority whether it is based on church, ideology or science. muoncounter (#125), Like you, I care about the teaching of science in K-12 as well as at college level. In my state, there are 370 high schools but fewer than 40 teachers with physics degrees teaching science. The quality of science text books is critical when so few teachers have an adequate background in the subject. I hope you will want to support John Hubisz in his efforts to improve science text books: http://www.science-house.org/middleschool/ doug_bostrom (#126) In Newton's day they used to talk about "Laws" but modern physicists understand that they are always wrong even though their theories often have great predictive power. The perihelion of Mercury does precess as Einstein predicted, GPS systems need relativistic corrections and the energy released from nuclear reactions appears to follow the E=mc² relationship. In spite of all this success, Einstein understood the limitations of his theories better than the folks at Conservapedia. muoncounter (#127), Loved the cartoon (how did I miss it?). At least one more pane needed for evolution vs. creationism. -

rcglinski at 16:12 PM on 11 August 2010 | Models are unreliable

Do any climate models have substantial agreement with the last century of precipitation data? -

rcglinski at 15:42 PM on 11 August 2010 | CO2 was higher in the past

Thanks Doug. -

Doug Bostrom at 14:10 PM on 11 August 2010 | Models are unreliable

Fun! Schmidt and Knappenberger are found at Annan's blog, discussing M&M 2010. Minor celebrities For extra credits in "Climate Science Arcana" coursework, follow the "old dark smear" links at the top of Annan's post. Those have a bit of useful background material to the M&M 2010 treatment of Santer 2008, to do with RPjr. If you have a clue what that's all about, you spend too much time on climate blogs. -

Doug Bostrom at 12:00 PM on 11 August 2010 | Grappling With Change: London and the River Thames

Further to HR's remarks, I see that it's actually quite easy to find publications indicating some changes in storm behavior and frequency in the North Atlantic. I should not so easily conclude that I can't contribute a little further information here:

Increasing destructiveness of tropical cyclones over the past 30 years
A shift of the NAO and increasing storm track activity over Europe due to anthropogenic greenhouse gas forcing
Heightened tropical cyclone activity in the North Atlantic: natural variability or climate trend?
Trends in Northern Hemisphere Surface Cyclone Frequency and Intensity

As the London folks noted, this information is in keeping with predictions. As they also noted, while no particular storm can be linked to climate forcing, it would not be prudent to ignore an emerging pattern of observed evidence of a predicted trend. HR, this exercise leads me to suggest you ask yourself, "Why did I talk about sea level change over the past 30 years when our topic is about sea level rise over the next 100+ years? Why am I trying so hard to ignore what's in front of me?" -

Don Gisselbeck at 11:58 AM on 11 August 2010 | More evidence than you can shake a hockey stick at

The record low in Guinea is interesting. I was a Peace Corps volunteer in neighboring Sierra Leone in the late 70s. Several of my students were from Guinea and had seen ice form on open water during the Harmattan. -

Doug Bostrom at 11:39 AM on 11 August 2010 | CO2 was higher in the past

Robert I don't see anything unusual there. WUWT folks are angry because some poor scientist found out something boxing them in a little bit more. -

apeescape at 11:33 AM on 11 August 2010 | On Statistical Significance and Confidence

Thanks for this, you have a great website. btw, did you check out the Bayes factor relative to the "null"? -

robert test at 11:02 AM on 11 August 2010 | CO2 was higher in the past

Watts has just posted a new article http://wattsupwiththat.com/2010/08/10/study-climate-460-mya-was-like-today-but-thought-to-have-co2-levels-20-times-as-high/ It refers to a new study in PNAS http://www.pnas.org/content/early/2010/08/02/1003220107.abstract?sid=08063fb7-c9e9-48d7-a515-b3db8907505c Hope you can comment on this soon. -

Doug Bostrom at 10:52 AM on 11 August 2010 | Grappling With Change: London and the River Thames

I'm not the person to deal with your points, HR, I'm the wrong person to challenge. You're in disagreement with experts having more knowledge of this topic than either of us. What I can surmise based on what I've read of our processes of cognition is that your disagreement with people knowing more of the topic of operating the Thames Barrier than the both of us suggests you're unwilling to confront information that makes you uncomfortable. I can't think of any other explanation. By the way, you're by no means unique or even at fault for having a hard time dealing with risk. As far as researchers can tell so far it's a universal trait of humans. -

HumanityRules at 10:42 AM on 11 August 2010 | Grappling With Change: London and the River Thames

30.doug_bostrom at 11:45 AM on 5 August, 2010 Doug, it's a little weak to suggest the data I presented is just an aspect of a psychological problem I have. There is no significant trend for storminess in the North Sea that I can find published. The list of Thames Barrier closures shows a downward trend in the surge and High Water Level readings during closure events, suggesting the barrier is being closed for less severe events. Ocean levels have risen how much in the last 3 decades? 10cm? There is no justification to suggest the very large increase in barrier closures has anything to do with real changes in climate. Deal with the points rather than my mental state. -

Berényi Péter at 10:38 AM on 11 August 2010 | Temp record is unreliable

#102 Ned at 06:50 AM on 11 August, 2010: "I thought it would be worth putting up a quick example to illustrate the necessity of using some kind of spatial weighting when analyzing spatially heterogeneous temperature data"

OK, you have convinced me. This time I have chosen just the Canadian stations north of the Arctic Circle from both GHCN and the Environment Canada dataset. The divergence is still huge. Environment Canada shows no trend whatsoever during this 70-year period, just a cooling event centered on the early 1970s, while the GHCN raw dataset gets gradually warmer than that, by more than 0.5°C at the end, creating a trend this way.

No amount of gridding can explain this fact away.
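For readers unfamiliar with the "gridding" Ned refers to, here is one simple scheme: average the stations inside each latitude-longitude box, so that a cluster of nearby stations counts once, then weight each box by the cosine of its central latitude (roughly proportional to its area). This is an illustrative sketch with a hypothetical function name and a 5-degree box size I chose; it is not the exact procedure used by GHCN, GISS or HadCRU.

```python
import math
from collections import defaultdict


def gridded_mean(stations, cell_deg=5.0):
    """Area-weighted mean anomaly from (lat, lon, anomaly) station tuples.

    Stations are first averaged within each cell_deg x cell_deg box; boxes are
    then weighted by the cosine of the latitude at the box centre.
    """
    cells = defaultdict(list)
    for lat, lon, anomaly in stations:
        key = (math.floor(lat / cell_deg), math.floor(lon / cell_deg))
        cells[key].append(anomaly)
    weighted_sum = total_weight = 0.0
    for (i, _j), values in cells.items():
        weight = math.cos(math.radians((i + 0.5) * cell_deg))
        weighted_sum += weight * sum(values) / len(values)
        total_weight += weight
    return weighted_sum / total_weight
```

With two neighbouring stations reading +1.0 and a distant one reading 0.0 at the same latitude, the plain station average is about 0.67, while the gridded mean is 0.5. Gridding matters when station density changes, but as the comment says, it cannot by itself turn a flat series into a warming one.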

-

Doug Bostrom at 10:18 AM on 11 August 2010 | CO2 was higher in the past

Here's an excellent writeup on main sequence stars, rcglinski. -

rcglinski at 10:10 AM on 11 August 2010 | CO2 was higher in the past

How is solar heat output determined for periods before direct measurement? I ask because the article says solar output was 4% lower during the Ordovician but I can't tell how the number was arrived at. -
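On how such a number can be arrived at: solar models predict that the Sun brightens slowly over its main-sequence life, and a widely used approximation is Gough's (1981) formula, L(t)/L_now = 1 / (1 + 0.4 (1 - t/t_now)), with t the Sun's age. I don't know whether the article used this formula. The sketch below assumes a present solar age of 4.57 billion years and uses the roughly 460-million-year date from the study linked in this thread; the function name is mine.

```python
def relative_solar_luminosity(gyr_ago, sun_age_gyr=4.57):
    """Gough (1981) approximation: L(t)/L_now = 1 / (1 + 0.4 * (1 - t/t_now))."""
    t_over_t_now = (sun_age_gyr - gyr_ago) / sun_age_gyr
    return 1.0 / (1.0 + 0.4 * (1.0 - t_over_t_now))


dimming = 1.0 - relative_solar_luminosity(0.46)
print(f"{dimming:.1%} dimmer about 460 million years ago")
```

That comes out at just under 4%, consistent with the figure quoted for the Ordovician; the same formula gives about 29% dimmer for the young Sun 4.57 billion years ago.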

Chris Winter at 09:41 AM on 11 August 2010 | Antarctica is gaining ice

Thanks for pointing this out over at CP. There, I made the assumption that "sea ice" meant floating icebergs. My bad. -

andrewcodd at 09:38 AM on 11 August 2010 | Models are unreliable

There is a trade-off between concern for the most vulnerable and mistrust of governments. I am not a confirmed believer in the network of socialists doctoring results for their Trotskyite masters. That said, there will inevitably be instances where the responsibility of stewardship weighs heavy on scientific rigour. The code should be available so we can move on. We all agree models will be better in the future. Not to heed what they are currently delivering is an imprudence beyond recall. -

Daniel Bailey at 09:32 AM on 11 August 2010 | On Statistical Significance and Confidence

Good post, Alden. Communicating anything to the public does indeed require a minimal usage of jargon; but as we all know, there exist those who live to be contrarians, for whom no level of clear explanations exist that cannot be obfuscated. Thanks again! The Yooper -

Stephan Lewandowsky at 09:31 AM on 11 August 2010 | On Statistical Significance and Confidence

I would appreciate some more background on how you computed the Bayesian credible interval. For example, what exactly do you mean by a non-informative prior? Uniform? And how did you deal with autocorrelation, if at all? (I realize that I am asking for the complexity you seek to simplify--fair enough, but a 'technical appendix' might be helpful for those more conversant with statistics.) -
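For the textbook case, it is known what a diffuse prior does here. In simple linear regression with independent normal errors and the standard non-informative prior p(β0, β1, σ²) ∝ σ⁻², the marginal posterior of the slope is a Student t centred on the least-squares estimate, so the 95% credible interval coincides numerically with the classical 95% confidence interval. Whether Systat's default diffuse prior is exactly this one, I don't know.

```latex
p(\beta_0, \beta_1, \sigma^2) \propto \sigma^{-2}
\;\Longrightarrow\;
\frac{\beta_1 - \hat\beta_1}{s_{\hat\beta_1}} \,\Big|\, y \;\sim\; t_{n-2},
\qquad
\beta_1 \in \hat\beta_1 \pm t_{n-2,\,0.975}\, s_{\hat\beta_1}
```

Here s with subscript beta-hat-1 is the usual standard error of the slope. What changes is the interpretation (a probability statement about the slope itself), not the numbers; accounting for positive autocorrelation would widen both intervals.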

Peter Hogarth at 08:55 AM on 11 August 2010Models are unreliable

Pete Ridley at 07:12 AM on 11 August, 2010 I'm guessing you read the comments and not the details of the paper? The paper itself is interesting, as M,M&H confirm that tropical Lower Troposphere temperature trends from 1979 to end of 2009 are significantly positive, and to an extent reflect the earlier views in Santer 2008. See my comment on tropospheric hot-spot for some background on this. At the time of writing that comment I suggested that the inclusion of the 2010 data would allow the trends to more closely approach statistical “robustness”, so confirmation is a useful step. They also confirm the known issues with the earlier models used by Santer, and also confirm that the differences between the UAH and RSS MSU datasets are now statistically significant. For the Tropical Lower Troposphere temperature data they quote “In this case the 1979-2009 interval is a 31-year span during which the upward trend in surface data strongly suggests a climate-scale warming process”. That the original model was flawed in this case is old news, and this has been discussed here previously. I note once again that some of your sources (and the comments on this new paper) lack context and scientific objectivity. -

Berényi Péter at 08:54 AM on 11 August 2010Temp record is unreliable

#105 Peter Hogarth at 07:58 AM on 11 August, 2010 Bekryaev lists all sources (some of them available for the first time), the majority with links, though I admit I haven't followed them all through. Show us the links, please. I am surprised you make comments without even looking at the paper. Anyway, I genuinely thought you might be interested. I am. However, I would prefer not to pay $60 just to have a peek at what they've done. I am used to the free software development cycle, where everything happens in plain public view. #104 Ned at 07:11 AM on 11 August, 2010 Obviously, stations in northern Canada are mostly warming faster than those further south I see that. However, that does not explain the fact that the bulk of the divergence between the three datasets occurred in just a few years around 1997, while the sharp drop in Canadian GHCN station numbers happened in July 1990. Anyway, I have all the station coordinates as well, so a regional analysis (with clusters of stations less than 1200 km apart) can be done too. But I am afraid we will have to wait for that, as I have some deadlines, then holidays as well. -
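A regional analysis like this starts from great-circle distances between station coordinates. Below is a minimal sketch using the haversine formula, assuming a spherical Earth of radius 6371 km. The function names and the three-station example (approximate city coordinates, not GHCN metadata) are hypothetical, and the code only lists neighbour pairs; grouping those pairs into clusters is left open.

```python
import math

EARTH_RADIUS_KM = 6371.0  # mean Earth radius, spherical approximation


def great_circle_km(lat1, lon1, lat2, lon2):
    """Haversine distance between two points given in decimal degrees."""
    p1, p2 = math.radians(lat1), math.radians(lat2)
    dp = p2 - p1
    dl = math.radians(lon2 - lon1)
    h = math.sin(dp / 2) ** 2 + math.cos(p1) * math.cos(p2) * math.sin(dl / 2) ** 2
    return 2 * EARTH_RADIUS_KM * math.asin(math.sqrt(h))


def neighbour_pairs(stations, max_km=1200.0):
    """All station pairs closer than max_km; stations maps name -> (lat, lon)."""
    names = sorted(stations)
    pairs = []
    for i, a in enumerate(names):
        for b in names[i + 1:]:
            if great_circle_km(*stations[a], *stations[b]) < max_km:
                pairs.append((a, b))
    return pairs


# Hypothetical example with approximate coordinates, not GHCN metadata.
stations = {
    "Toronto": (43.65, -79.38),
    "Montreal": (45.50, -73.57),
    "Winnipeg": (49.90, -97.14),
}
print(neighbour_pairs(stations))
```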

dorlomin at 08:32 AM on 11 August 2010More evidence than you can shake a hockey stick at

Does anyone know the cause of the dip in CO2 concentrations at about 1500 AD, on the slide featured in post 15? -

Doug Bostrom at 08:27 AM on 11 August 2010Greenland's ice mass loss has spread to the northwest

Fascinating article about Greenland here in the UK's Daily Mail. A reporter visits scientists working this summer on the ice sheet, investigating drainage. Some great photos. -

Doug Bostrom at 08:03 AM on 11 August 2010Models are unreliable

Pete, worth noting also that you won't find a refutation of M&M 2010 coming from here; you'll find it reported if and when such a refutation appears. The sites you mention are in full celebration, but of course they're not adding any information of their own; the actual information is all in the paper itself. Hopefully for M&M, the party won't be over before their work actually appears in print. :-) -

Peter Hogarth at 07:58 AM on 11 August 2010Temp record is unreliable

Berényi Péter at 07:07 AM on 11 August, 2010 Thanks for fixing the links, though I think Ned has actually answered one question I had quite efficiently. I'm not sure what it is I still don't get? (why so defensive?) Bekryaev lists all sources (some of them available for the first time), the majority with links, though I admit I haven't followed them all through. I am surprised you make comments without even looking at the paper. Anyway, I genuinely thought you might be interested. -

Doug Bostrom at 07:55 AM on 11 August 2010Models are unreliable

I might add, as a gratuitous fling, that the amount of back-slapping and rejoicing around M&M's first accepted comment in years is indicative of the general poverty of their camp. Looking at the comment threads erupting around this, I'm reminded of meat being thrown into a kennel full of emaciated dogs. Folks outside the kennel have more to eat than they care to look at; frankly, they are amply fed with dismal facts. Less gratuitously, this publication immediately moves me to point out that not everybody can feed from the meal on offer. Those who've committed themselves to trying to show that the temperature observations under discussion are meaningless will have to go hungry unless they disagree with M&M. Those saying there's no trend will also have to keep listening to their stomachs rumbling, because again M&M's results depend on observing a trend. -

Pete Ridley at 07:46 AM on 11 August 2010Confidence in climate forecasts

For some reason my earlier post of this comment was removed, so I’ve modified it slightly. I can’t see any violation of the comments policy.

Moderator Response: Putting duplicate comments into multiple threads leads to incoherent discussion. -

Doug Bostrom at 07:38 AM on 11 August 2010Models are unreliable

James Annan comments on M&M's comment as published in ASL: A commenter pointed me towards this which has apparently been accepted for publication in ASL. It's the same sorry old tale of someone comparing an ensemble of models to data, but doing so by checking whether the observations match the ensemble mean. Well, duh. Of course the obs don't match the ensemble mean. Even the models don't match the ensemble mean - and this difference will frequently be statistically significant (depending on how much data you use). Is anyone seriously going to argue on the basis of this that the models don't predict their own behaviour? If not, why on Earth should it be considered a meaningful test of how well the models simulate reality? Of course the IPCC Experts did effectively endorse this type of analysis in their recent "expert guidance" note, where they remark (entirely uncritically) that statistical methods may assume that "each ensemble member is sampled from a distribution centered around the truth". But it's utterly bogus nevertheless, as there is no plausible situation in which that can occur, for any ensemble prediction system, ever. Having said that, IMO a correct comparison of the models with these obs does show the consistency to be somewhat tenuous, as we demonstrated in that (in)famous Heartland presentation. It is quite possible that they will diverge more conclusively in the future. Or they may not. They haven't yet. (Annan)

There should be quite a stir out of this, papers of this sort being few and far between. Worth noting that Annan is an unflinching critic of whatever he sees wrong with the IPCC, etc. Probably a useful snapshot metric of the significance of M&M's output here. -
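Annan's point that even individual model runs fail a "matches the ensemble mean" test can be illustrated with a toy simulation. Everything here is hypothetical: each member is a straight-line trend drawn around a common value plus white noise, and each member is tested against the ensemble mean with an ordinary t-test on the slope of the difference series. This is neither the MMH panel method nor Annan's own analysis.

```python
import math
import random


def slope_t_stat(x, y):
    """OLS slope of y on x and its t statistic (white-noise residuals assumed)."""
    n = len(x)
    x_bar, y_bar = sum(x) / n, sum(y) / n
    sxx = sum((xi - x_bar) ** 2 for xi in x)
    b = sum((xi - x_bar) * (yi - y_bar) for xi, yi in zip(x, y)) / sxx
    a = y_bar - b * x_bar
    sse = sum((yi - a - b * xi) ** 2 for xi, yi in zip(x, y))
    return b, b / math.sqrt(sse / (n - 2) / sxx)


def fraction_rejected(n_years, n_members=20, trend_spread=0.005, noise=0.1, seed=1):
    """Share of ensemble members whose trend 'differs significantly' from the ensemble mean."""
    rng = random.Random(seed)
    years = list(range(n_years))
    # Each member gets its own underlying trend (deg C per year) around a common value.
    trends = [rng.gauss(0.02, trend_spread) for _ in range(n_members)]
    runs = [[b * t + rng.gauss(0.0, noise) for t in years] for b in trends]
    ens_mean = [sum(vals) / n_members for vals in zip(*runs)]
    rejected = 0
    for run in runs:
        diff = [r - m for r, m in zip(run, ens_mean)]
        _, t_stat = slope_t_stat(years, diff)
        if abs(t_stat) > 2.0:  # roughly a two-sided 5% test
            rejected += 1
    return rejected / n_members


for n in (10, 30, 100):
    print(n, "years:", fraction_rejected(n))
```

As the series lengthens, the share of members "rejected" climbs toward one, even though every member belongs to the ensemble by construction, which is the sense in which the test depends on how much data you use.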

Pete Ridley at 07:12 AM on 11 August 2010Models are unreliable

Jo Nova’s blog has an interesting new article “The models are wrong (but only by 400%) ” (Note 1) which you should have a look at, along with the comments. It covers the recent paper “Panel and Multivariate Methods for Tests of Trend Equivalence in Climate Data Series” (Note 2) co-authored by those well-known and respected expert statisticians, McIntyre and McKitrick, along with Chad Herman. David Stockwell sums up the importance of this new paper with “This represents a basic validation test of climate models over a 30 year period, a validation test which SHOULD be fundamental to any belief in the models, and their usefulness for projections of global warming in the future”. David provides a more detailed comment on his Niche Modeling blog “How Bad are Climate Models? Temperature” thread (Note 3) in which he concludes “But you can rest assured. The models, in important ways that were once claimed to be proof of “… a discernible human influence on global climate”, are now shown to be FUBAR. Wouldn’t it have been better if they had just done the validation tests and rejected the models before trying to rule the world with them?”. Come on you model worshipers, let’s have your refutation of the McIntyre et al. paper. NOTES: 1) see http://joannenova.com.au/2010/08/the-models-are-wrong-but-only-by-400/#more-9813 2) see http://rossmckitrick.weebly.com/uploads/4/8/0/8/4808045/mmh_asl2010.pdf 3) see http://landshape.org/enm/how-bad-are-climate-models/ Best regards, Pete Ridley -

Ned at 07:11 AM on 11 August 2010Temp record is unreliable

That map from my previous comment also nicely illustrates the conceptual flaw in the claim (by Anthony Watts, Joe D'Aleo, etc.) that the observed warming trend is an artifact of a decline in numbers of high-latitude stations. Obviously, stations in northern Canada are mostly warming faster than those further south. So, if you did use a non-spatial averaging method, dropping high-latitude stations would create an artificial cooling trend, not warming. Using gridding or another spatial method, the decline in station numbers is pretty much irrelevant (though more stations is of course preferable to fewer). -
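The argument can be checked with a toy example: two equal-area latitude boxes with ten stations each, the northern box warming faster, and eight northern stations dropping out in year 20. The warming rates, station counts, dropout year and function names are all hypothetical, and the data are noise-free anomalies relative to year 0.

```python
SOUTH_RATE = 0.01  # hypothetical warming rate of southern stations, deg C per year
NORTH_RATE = 0.04  # hypothetical (faster) warming rate of northern stations


def station_anomalies(year):
    """Anomalies (relative to year 0) of all stations reporting in a given year."""
    n_north = 10 if year < 20 else 2  # most northern stations stop reporting in year 20
    south = [SOUTH_RATE * year] * 10
    north = [NORTH_RATE * year] * n_north
    return south, north


def simple_mean(year):
    """Non-spatial average: every reporting station gets equal weight."""
    south, north = station_anomalies(year)
    everyone = south + north
    return sum(everyone) / len(everyone)


def gridded_mean(year):
    """Spatial average: average within each box first, then weight the two
    (assumed equal-area) boxes equally."""
    south, north = station_anomalies(year)
    return 0.5 * (sum(south) / len(south) + sum(north) / len(north))


for year in (18, 19, 20, 21):
    print(year, round(simple_mean(year), 3), round(gridded_mean(year), 3))
```

In year 20 the simple average drops even though no station cooled, while the gridded average keeps rising at its usual pace.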

Berényi Péter at 07:07 AM on 11 August 2010Temp record is unreliable

#101 Peter Hogarth at 04:25 AM on 11 August, 2010 some of the links seems to be broken? Yes, two of them, sorry.

- GHCN data

- March, 1840 file at Environment Canada - this one only contains a single record for Toronto, but shows the general form of the link and structure of records

