Recent Comments


Comments 44651 to 44700:

-

michael sweet at 21:03 PM on 18 July 2013Patrick Michaels: Cato's Climate Expert Has History Of Getting It Wrong

Tom,

I am not much of a betting man. I see your point that the IPCC projections are that you should measure a temperature increase at the 95% level after 25 years. If you like to bet, it would be a good bet to make.

On the other hand, Michaels publicly claims that the temperature rise has stopped. In this bet he is claiming the temperature has not risen when there is a 90% or greater (as long as it is less than 95%) probability that the temperature has risen. If you just look at the data the slope is always positive. In the peer-reviewed literature, scientists prefer 95% confidence before making a claim. In common speech, when there is a 90% chance of something not being chance, you would say it is happening. Michaels is making the opposite claim, i.e. that if it is not 95% significant there is no rise. That is a false claim.

To me, if Michaels is only willing to bet on a cherry picked date and he wins at 93% confidence, that means he is convinced the temperature is rising and will continue to rise.

-

MA Rodger at 20:47 PM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

old sage.

You have yet to explain how you obtained the 56 W/m^2 @223. It is a trivial calculation using Stefan's Law, which is the formula you say @231 you used. You show yourself here as somebody who cannot even honestly perform simple arithmetical calculations but would rather talk nonsense about holding a hand out on a high mountain. Is a hand an appropriate measurement device? Especially given all the radiation whizzing about in all directions? But then you deny the existence of that radiation!

And, old sage, while you are about re-calculating the radiation from a body at 300K, perhaps you could also do another trivial back-of-the-fag-packet calculation. You are so wedded to conductivity as a means of energy distribution. So if the globe with a temperature 300K were surrounded by a solid with the same thermal conductivity as air (at STP), how large will the energy flux of conduction be? Consider this 'solid' atmosphere has constant properties with altitude. Call it 8km deep. Call it 1km deep if you like. Can you provide an honest answer here?
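For readers following along, the two back-of-the-fag-packet calculations asked for above can be sketched as follows. This is only an illustration under stated assumptions: the conductivity of air (about 0.025 W/m/K) and the choice to hold the far side of the 'solid' atmosphere at 0 K (a deliberately generous upper bound on the temperature difference) are not taken from the thread, and the surface is treated as a perfect black body.

```python
# Stefan-Boltzmann constant in W m^-2 K^-4 (CODATA value).
SIGMA = 5.670374419e-8


def blackbody_flux(temp_k, emissivity=1.0):
    """Radiated flux (W/m^2) from a surface at temp_k, via Stefan's Law."""
    return emissivity * SIGMA * temp_k ** 4


def conductive_flux(conductivity, delta_t, thickness_m):
    """Steady-state conducted flux (W/m^2) through a uniform slab (Fourier's law)."""
    return conductivity * delta_t / thickness_m


# Assumed inputs, not taken from the thread:
K_AIR = 0.025    # W m^-1 K^-1, approximate thermal conductivity of air near STP
DELTA_T = 300.0  # K, generous upper bound: far side of the slab held at 0 K

radiated = blackbody_flux(300.0)
conducted_8km = conductive_flux(K_AIR, DELTA_T, 8000.0)
conducted_1km = conductive_flux(K_AIR, DELTA_T, 1000.0)

print(f"radiation at 300 K:    {radiated:.0f} W/m^2")
print(f"conduction, 8 km slab: {conducted_8km:.5f} W/m^2")
print(f"conduction, 1 km slab: {conducted_1km:.5f} W/m^2")
```

Under these assumptions the radiated flux is several hundred W/m^2, while conduction through even the 1 km slab stays well below 0.01 W/m^2.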

-

John Brookes at 20:41 PM on 18 July 2013Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

But Neil has repeated the "skeptic" mantra of climate science being "discredited". The people who want to believe this will accept it uncritically. Some members of the public will be influenced as well.

But the "skeptics'" continued bleating will in the end discredit them.

-

sylas at 20:17 PM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Tom, thanks very much. I've learned something. Muzz, sorry for introducing confusion to the thread.

Given your lead I've looked further and indeed (for example) the Australian carbon pricing system does include emissions of Nitrous Oxide (N2O); this is also an important secondary greenhouse gas emission from conventional fuels, and has no carbon involved.

-

Dikran Marsupial at 18:54 PM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

funglestrumpet - my post wasn't a detailed explanation, just an appeal for the discussion not to be distracted by quibbles over terminology that obstruct the discussion. The mere fact that molecules such as CO2 can absorb outbound IR radiation is only one element of the explanation of the greenhouse effect, it is the way this affects the radiation of IR into space from the upper atmosphere that is the key to understanding the mechanism.

-

old sage at 18:46 PM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

C99 - look next but one comment above yours, it pretty well claims just that.

Also, for the benefit of others, whether something is true or not very often depends on other factors. The emission spectrum of CO2 for example - and my authorities are impeccable - will differ when it is in solution in a raindrop, as in a cloud.

Some of you I expect have invested a lot of time and energy in mapping radiation but meanwhile all the heat generated by man is getting dumped in the ice and waters courtesy of kinetic transfer. When I see formula one engineers changing their cooling arrangements depending on cloud cover I might believe it were otherwise but in the meantime, imv heat transfer by radiation is pretty feeble under atmospheric conditions compared with kinetic.

Fresher level physics(?), you're right there someone above - moving charged particles in a magnetic field generate e/m radiation.

-

funglestrumpet at 18:39 PM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Dikran Marsupial @5

Thanks for the detailed explanation. I notice no mention of something called dipoles, which I thought had something to do with how CO2 traps, sorry, 'absorbs' (or whatever) the longwave (re)radiation. I suppose it is around that dipole - whatever it is - where my ignorance about how global warming happens actually resides. (Perhaps it is the engineer in me that feeds my desire to know the nuts and bolts of the issue. I doubt I am alone.)

-

Aristarchus at 16:52 PM on 18 July 20132013 SkS Weekly News Roundup #29A

The link to the article "Models point to rapid sea-level rise from climate change" contains errors.

Moderator Response:[JH] Link fixed. Thank you for bringing this glitch to our attention.

-

robert_13 at 15:37 PM on 18 July 2013It's not us

@Julian Flood

Suppose we have a sink into which the faucet is pouring water. We have control of the faucet and can increase or decrease its rate of flow. We may not have the political will to do that, but that's a side issue. The point here is that we can. Now let's say that some natural forces are pouring vinegar, pee, alcohol, orange juice, and milk into the sink at the same time our water is pouring in from the faucet.

If the water level in the sink is rising at only 45% of the rate we would expect from the rate of our input from the faucet, why do you think our lack of knowledge of exactly how much of each of the other inputs there is, and our resulting inability to calculate the sum of their effects, is important for understanding whether our faucet is causing the water level to rise?

If the rate of increase in the level of water is 45% of the rate we would expect from our input from the faucet, we know with absolute certainty that an amount equal to 55% of our water is going down the drain, and all the other inputs with it as well. What is so hard to understand about that? Why do you think there is any need for detailed knowledge about the rest of what's going down the drain?

Now, there is a very significant implication in this. If we know how much CO2 we're putting out and how much is showing up in the atmosphere (~45% of our input), where is the rest of that CO2 going? The only reasonable explanation is that the ocean is sequestering the vast majority of it. We do know that the CO2 level in solution in the ocean has been increasing and ocean water is becoming more acidic. We are also seeing the effects of this on coral reefs and other marine life. As the globe becomes warmer, CO2 becomes less soluble in water, so the 45% figure is bound to go up and accelerate an already undesirable situation.
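The bookkeeping behind the sink analogy can be sketched in a few lines. The units are arbitrary and the emission figure of 100 is purely illustrative; only the ~45% airborne fraction comes from the comment. The point of the sketch is that the net effect of all the other inputs and outputs follows from two known numbers, without itemising any of them.

```python
def net_other_flux(our_input, observed_rise):
    """Net contribution of everything except our input (negative means a net sink)."""
    return observed_rise - our_input


# Illustrative units only: our emissions normalised to 100 per year.
our_input = 100.0
airborne_fraction = 0.45  # figure quoted in the comment
observed_rise = airborne_fraction * our_input

other = net_other_flux(our_input, observed_rise)
print(f"observed rise: {observed_rise:.0f}")
print(f"net of all other sources and sinks: {other:.0f}")
```

A negative result means that everything else, taken together, is removing more than it adds, so it cannot be the cause of the rise.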

So I don't see where your doubts are coming from unless you just don't want to see and are willing to pick at any single little corner you can find, one at a time and out of context with the bigger picture, simply to avoid seeing.

-

Tom Curtis at 14:03 PM on 18 July 2013Patrick Michaels: Cato's Climate Expert Has History Of Getting It Wrong

michael sweet @47,

First, I have just noticed that I accidentally dropped a decimal place from the standard errors of the 25 year trends in my preceding post, which should have been 0.069 C and 0.07 C.

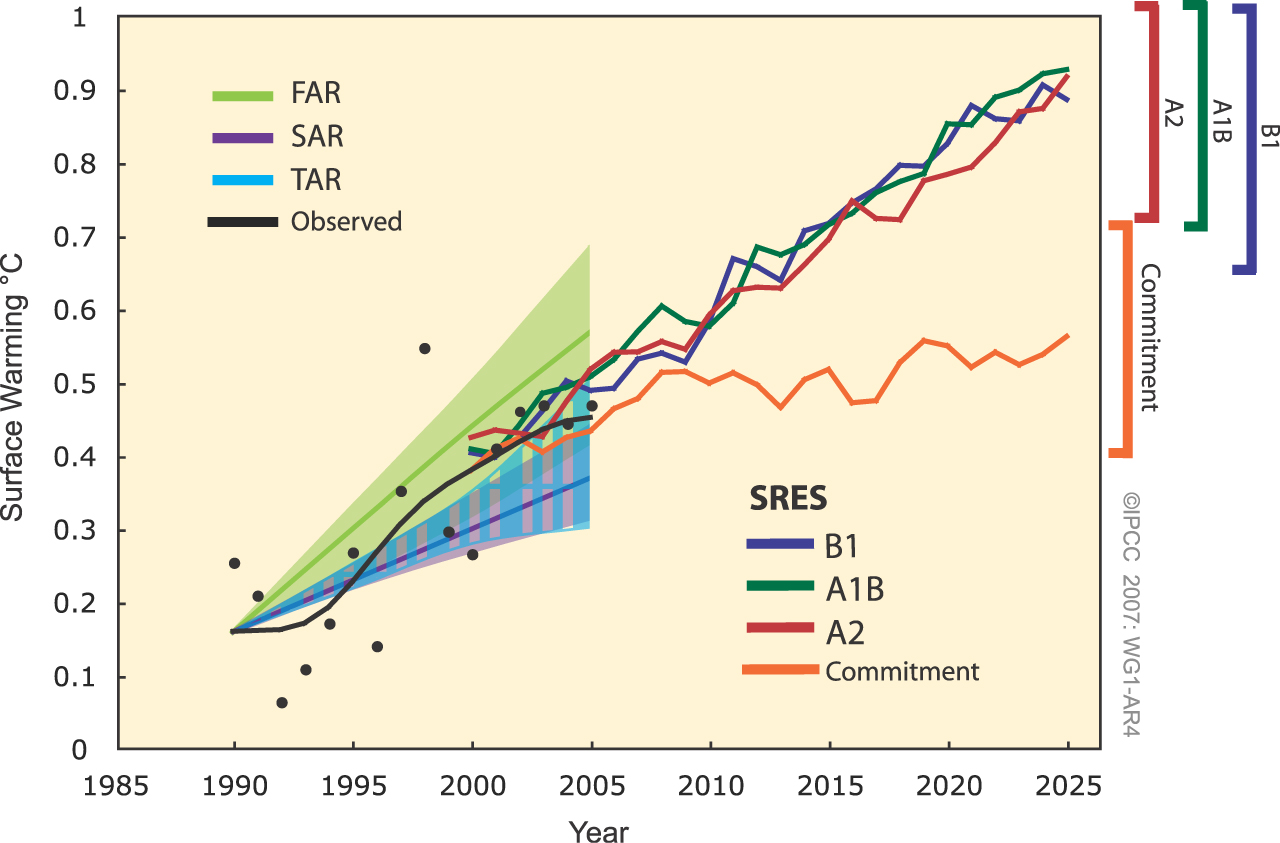

Second, here are the AR4 short term predictions:

You will notice that the "commitment" prediction matches the 1998 observations, but that all other predictions are for temperatures 0.3 C above 1998 values, or higher. If the IPCC is anywhere near accurate, 1998 values should be routinely exceeded by 2020, and I suspect will be routinely exceeded from 2015 forward. By 2023, the termination of the bet, even very strong La Ninas will be hotter than the 1998 El Nino.

Put another way, if the IPCC is correct, then the trend to 2023 has only a 2.5% chance of being less than 0.13 C per decade. Using the 1997 El Nino as a start point increases that chance, but not enough to bring the lower 95% confidence interval below 0.07 C per decade. Michaels' bet accurately represents the claim of 25 years without warming (if we ignore the cherry picked start point). Not taking it if you are prone to bet would reflect a lack of confidence in the IPCC predictions.

-

Tom Curtis at 13:44 PM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Muzz @10, you missed my main point. Of course, I framed that point as a rhetorical question so I must accept blame for that.

The fact is that "carbon tax" as a short hand for "a tax on carbon dioxide equivalents" is perfectly reasonable nomenclature. That does not mean there are not other reasonable names for the same thing. Nor does it mean people cannot, for rhetorical purposes, deliberately misunderstand the name. Australia's Fringe Benefits Tax, for example, is not a tax on the length of people's hair hanging over their forehead, but could be so misconstrued if somebody wanted to make a political point from it.

These defects will afflict almost any name chosen.

Given that, arguing about mere naming is rather silly.

(As an aside, why would I want to take a cane to a TLA, and what is a TLA in the first place?)

-

Tom Curtis at 13:37 PM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Sylas @10, from the law for Australia's Carbon Tax:

"The following is a simplified outline of this Act:

• This Act sets up a mechanism to deal with climate change by encouraging the use of clean energy.

• The mechanism begins on 1 July 2012, and operates on a financial year basis.

• The mechanism is administered by the Clean Energy Regulator.

• If a person is responsible for covered emissions of greenhouse gas from the operation of a facility, the facility’s annual emissions are above a threshold, and the person does not surrender one eligible emissions unit for each tonne of carbon dioxide equivalence of the gas, the person is liable to pay unit shortfall charge.

• If a natural gas supplier supplies natural gas, and does not surrender one eligible emissions unit for each tonne of carbon dioxide equivalence of the potential greenhouse gas emissions embodied in the natural gas, the supplier is liable to pay unit shortfall charge.

• If a person opts in to the mechanism, the person acquires, manufactures or imports taxable fuel in specified circumstances, and does not surrender one eligible emissions unit for each tonne of carbon dioxide equivalence of the potential greenhouse gas emissions embodied in the fuel, the person is liable to pay unit shortfall charge."

"14 Carbon pollution cap

(1) The regulations may declare that:

(a) a quantity of greenhouse gas that has a carbon dioxide equivalence of a specified number of tonnes is the carbon pollution cap for a specified flexible charge year; and

(b) that number is the carbon pollution cap number for that flexible charge year."(My emphasis)

Such laws must be in terms of carbon dioxide equivalents (or some similar unit) or else they will not affect emissions of any controlled substance under the Kyoto protocols other than CO2.

Carbon dioxide equivalents is the standard unit for measuring global warming potential, defined by the IPCC as follows:

"Global Warming Potential (GWP) An index, based upon radiative properties of well-mixed greenhouse gases, measuring the radiative forcing of a unit mass of a given well-mixed greenhouse gas in the present-day atmosphere integrated over a chosen time horizon, relative to that of carbon dioxide. The GWP represents the combined effect of the differing times these gases remain in the atmosphere and their relative effectiveness in absorbing outgoing thermal infrared radiation. The Kyoto Protocol is based on GWPs from pulse emissions over a 100-year time frame."

The idea is that a substance with a strong greenhouse effect measured in forcing, but a very short lifetime before decomposing, is not as dangerous as a substance with a weaker forcing but which endures (effectively) forever. Necessarily the approach is crude, but duration in the atmosphere is a factor in how dangerous the emissions are, so some such measure is required. I am all for anybody who can propose and defend a better unit than CO2 eq, but absent such a proposal we must use the units we have or, for example, not tax fugitive emissions of methane from garbage dumps, or the production of halocarbons etc.
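As a rough illustration of how a carbon dioxide equivalent is computed, the sketch below multiplies the mass of each gas by its 100-year GWP and sums the results. The GWP values are the 100-year figures from the IPCC AR4 tables; this is an assumption for the example, since the Act and its regulations may prescribe different values. The facility and its emissions are hypothetical.

```python
# 100-year GWP values as listed in IPCC AR4 (2007); other reports and
# regulations use different figures, so treat these as an assumption.
GWP_100 = {"CO2": 1, "CH4": 25, "N2O": 298}


def co2_equivalent(emissions_tonnes):
    """Convert {gas: tonnes emitted} into total tonnes of CO2 equivalent."""
    return sum(GWP_100[gas] * tonnes for gas, tonnes in emissions_tonnes.items())


# Hypothetical facility, made up for illustration.
facility = {"CO2": 20000, "CH4": 100, "N2O": 10}
total = co2_equivalent(facility)
print(f"{total} t CO2-e")
```

In this made-up case the small masses of methane and nitrous oxide add thousands of tonnes of CO2-e, which is why a law framed only in tonnes of CO2 would miss them.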

-

sylas at 12:59 PM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Muzz, you still have this wrong.

"Carbon" is NOT a short hand for "carbon dioxide equivalent". Carbon is simply the substance which, when burned, produces carbon dioxide. Carbon based fuels come in many forms. But it is the carbon content of what is burned that counts, because this is the major factor that determines how much carbon dioxide is produced.

The carbon tax, or price, applies for carbon based fuels. It is a tax on carbon. Not on equivalents; on carbon specifically.

Carbon dioxide equivalence is actually a (fairly crude) unit of measure for the impact of other greenhouse gases. I am not entirely sure why Tom mentions this; it is not, as far as I am aware, used as a basis for taxes on other gases. (Is it?) This might be a reasonable basis for fixing appropriate prices on other substances as a proportion of the carbon price; though I have not heard of such a thing.

In the meantime, we put a price on carbon because it is the burning of carbon based fuels which is by far the largest driver of the enhanced greenhouse effect through the emissions of carbon dioxide, and the consequent global warming.

-

michael sweet at 12:07 PM on 18 July 2013Patrick Michaels: Cato's Climate Expert Has History Of Getting It Wrong

Supak,

So if there is a 93% chance of a temperature rise, Michaels wins and can claim there is no temperature rise? Perhaps if p < .05 you win, if .05 < p < .20 there is no winner, and if p > .20 Michaels wins.

If Michaels wants to win on a 90% probability of temperature rising (with a cherry picked start date), he certainly thinks the temperature will go up. The question is how much it will go up.

-

Muzz at 11:43 AM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Tom Curtis @8, all correct points I'm sure. Thank you. "Carbon" no doubt is a convenient short hand reference for "carbon dioxide equivalents", but IMO is very misleading and I would suggest is one that scientists would or should shy away from using. A name for a tax? How about a greenhouse gas tax (GGT)? It's hard to beat a TLA.

-

Tom Curtis at 11:04 AM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Muzz @7, well actually it's carbon dioxide and carbon monoxide, and Methane (CH4), and fluorocarbons. Are we noticing a common theme here? Of course, there is also ozone and nitrous oxide, but in general the most potent well mixed greenhouse gases are carbon based, and most are produced by the use of carbon based fuels. Further, the greenhouse potential of all well mixed greenhouse gases is measured in terms of "carbon dioxide equivalents". I wonder what a convenient short hand reference for a tax on "carbon dioxide equivalents" of emissions would be?

-

Muzz at 10:32 AM on 18 July 2013Carbon Dioxide's invisibility is what causes global warming

Finally some scientific clarity. It's CO2 not carbon that causes global warming. Please inform the politicians and mainstream media.

-

Tom Curtis at 10:17 AM on 18 July 2013Patrick Michaels: Cato's Climate Expert Has History Of Getting It Wrong

Supak @45, notice how he offers a coward's bet by choosing the largest (or second largest depending on index) El Nino of the twentieth century as a start year. He also reinforces that by using the thermometer based index which is most affected by ENSO fluctuations due to lack of coverage in the Arctic and North Africa. Nevertheless the bet is safe for you, IMO, provided you have a clause calling the bet off if there is a major volcanic eruption (equivalent to Pinatubo or larger) in the last five years of the bet period. You may also want a clause calling the bet off if carbon sequestration schemes reduce CO2 concentration below 400 ppmv by the 25th year, but that is sufficiently unlikely to not be a necessary clause.

More technically, the current 25 year HadCRUT4 trend is 0.147 +/- 0.69 C. The current trend since 1997 is 0.045 +/- 0.12 C. The error for twenty five year trends tends to be just below 0.7 C, so you are betting that the trend will increase by more than 0.35 C to about half the current 25 year trend and to about two thirds of the IPCC predicted trend over that period. Failing a major volcano, if you do not win the bet the IPCC have got it wrong in a big way.

Finally, you may want a clause switching the bet from HadCRUT4 to later HadCRU products which supersede it if they have more extensive coverage, or if the HadCRUT4 product is no longer produced.

-

Composer99 at 10:16 AM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

old sage:

Anyway, I take it you folks all believe there is absolutely no radiation from earth other than i/r.

Please provide direct quotes from other commenters explicitly making this claim. Otherwise it is a blatant misrepresentation of the responses directed to you.

What us "folks" have been doing is pointing out that physics theory, experiment, and empirical observation contradict your claims against the fact of longwave infrared absorption and emission properties of greenhouse gases in the atmosphere, a contradiction you have so far failed to effectively deal with.

Largely, it seems to me, by simply ignoring what people are actually saying in favour of resorting to unsubstantiated misrepresentations such as the above.

-

Tom Curtis at 09:55 AM on 18 July 2013Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

Zen @4, Hans von Storch is a German climate scientist who has an unfortunate tendency to portray differences of opinion with other scientists as to just how harmful global warming will be in terms of the other scientists "over selling" the dangers. Along with Dennis Bray, he has published the most interesting and detailed survey of climate scientists' opinions on climate science.

Unfortunately, the survey has (at least) one major technical flaw. Many of the questions are phrased as "How convinced are you that ...", with choices listed from "not at all convinced" (1) to "very much convinced". Technically, anybody who is a little convinced that the statement is not true, or has no opinion on the matter, would be "not at all convinced" under this scheme. Thus, anybody responding "2" or higher at least thinks the statement is more likely true than not. Certainly somebody responding "4", i.e., that they are moderately convinced, is not epistemically neutral on that issue.

Bray and von Storch, and likely most respondents, have interpreted questions with that format as representing a range of opinion from complete disagreement to complete agreement, with "4" representing a neutral response. They have done this based on normal conventions in survey responses, which tend to override strict interpretation of questions. The result is that the survey response is, IMO, biased against agreement on various issues.

Despite this, on the crucial questions, "20. How convinced are you that climate change, whether natural or anthropogenic, is occurring now?", and "21. How convinced are you that most of recent or near future climate change is, or will be, a result of anthropogenic causes?" (both on page 46), climate scientists overwhelmingly support the consensus responses. In the first case, the mean response is 6.4 with a standard deviation of approximately 1, and a total of 93.8% "agreeing", ie, having a response of "5" or higher. In the second case, the mean response is 5.7 (st dev = 1.4), with 83.5% "agreeing".

The discrepancy between the 83.5% figure from Bray and von Storch (2010) and Cook et al 2013 is partly due to the bias discussed above, and partly because they survey different things. Specifically, Cook et al survey the proportion of scientific papers endorsing the consensus while Bray and von Storch directly survey the opinions of scientists. From Anderegg et al, we know that scientists "convinced by the evidence" in favour of the consensus have, on average, published twice as many peer reviewed articles as those who are "unconvinced by the evidence". On that basis alone, even accepting Bray and von Storch as accurate, we would expect 91% of papers to endorse the consensus. Further, the evidence simply does support the consensus. Consequently those who are less convinced, if accurately reporting the evidence, will less frequently be able to find results that actually endorse their beliefs. There will be a tension between their beliefs, and the evidence that will be reflected in the literature.
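The 91% figure can be reproduced with a short weighting calculation. The sketch assumes, as the paragraph does, that every "convinced" scientist publishes exactly twice as many papers as every "unconvinced" one and that each paper reflects its author's position; the function name is made up for the example.

```python
def expected_paper_share(convinced_fraction, papers_ratio):
    """Share of papers written by 'convinced' scientists, if each of them
    publishes papers_ratio times as many papers as an unconvinced scientist."""
    convinced_papers = convinced_fraction * papers_ratio
    unconvinced_papers = (1.0 - convinced_fraction) * 1.0
    return convinced_papers / (convinced_papers + unconvinced_papers)


# 83.5% agreement (Bray and von Storch), 2:1 publication ratio (Anderegg et al).
share = expected_paper_share(0.835, 2.0)
print(f"{share:.1%}")
```

With equal publication rates (a ratio of 1) the function simply returns the 83.5% it was given, so the whole shift to about 91% comes from the publication-rate weighting.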

Given this, Bray and von Storch (2010) does not rebut, or call into question, the results of Cook et al (2013). What it does do, or should do, is put a brake on the misinterpretation of Cook et al as showing that 97% of climate scientists endorse the consensus. Cook et al does not show that, and cannot show that because it is a survey of the literature, not of scientists' opinions.

-

scaddenp at 09:45 AM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

9 years to retirement here - I'm flattered to be a young know-all. However, I still take the time to study what is actually being claimed before criticizing it, especially when out of my field. What I find irritating is your criticism of imaginary claims of climate science, and as far as I can see, a flat out refusal to actually look at what the science really says. Virtually nothing you say makes sense because of this level of misunderstanding.

-

tmac57 at 09:15 AM on 18 July 2013Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

Being from the U.S. I am unfamiliar with Andrew Neil, but I have to say that I am very put off by his type of interview style, which has become so common these days, where he asks a question, and then proceeds to step on the answer given, in mid-sentence, and then refuses to let the interviewee complete their thought. Also the aggressive bulldozing assertion of 'facts' without giving the other side a chance to rebut without, again, interrupting is both rude and frustrating for those who actually want to hear what the person has to say.

The whole process is clearly being proffered more as a sort of boxing match to be won, rather than a forum to enlighten, educate, and discuss one of the most important issues that mankind has ever faced. That is truly shameful, cynical, and disgusting!

By the way, Ed Davey did remarkably well under the circumstances, and he seemed spot on with his facts, so good job to him!

-

chriskoz at 09:03 AM on 18 July 2013Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

It's amazing how many times in this interview Ed points out in simple words that climate science is not only about land temperatures, but Andrew goes back to his imaginary "plateau" in the XXI century because it's very "intriguing". Every time, he denies every point he hears.

Ed would have done a better job saying that we know the physical mechanisms behind "plateaus" such as the XXI century's one (recall SkS's escalator, which shows there were many of those), with El Nino/La Nina episodes acting as heat exchangers between the Ocean (the main heat energy reservoir - 90%) and the Atmosphere (which holds only a small fraction of the heat energy - therefore the exchange results in wider swings of temperature). But I don't think that argument would make any impression on Andrew: like all other arguments, he would simply ignore it.

-

michael sweet at 08:58 AM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

Old Sage,

You have a lot of chutzpah for someone who has been demonstrated wrong several times. You need to break out your freshman physics textbook and review it again.

You keep making comments about the ionosphere. Everyone else knows that the ionosphere has no relationship to the surface temperature. This is a comment that is usually called "not even wrong" because it is so far off base. That is why no-one has responded to your points. The surface heat budget is all radiated from the top of the stratosphere in the IR spectrum. This is basic black body physics. If you do not understand why the ionosphere does not affect the surface either read your textbook or ask a question to clear up your misconception.

You will find that people are less cutting in their responses to you if you stop being so condescending to others. It is especially irritating since you are so often completely wrong and then you are demeaning to others who are in fact correct. For example, you frequently suggest others should read their textbook because you do not understand basic atmospheric physics. Tom can read his textbook all day and you will still be wrong. If you start asking questions about what you obviously do not understand you will come across much better. Your basic physics of the atmosphere is lower than a freshman physics student is expected to understand.

-

supak at 08:46 AM on 18 July 2013Patrick Michaels: Cato's Climate Expert Has History Of Getting It Wrong

Tom Curtis @ 17

<<

First, you should insist on using HadCRUT4 rather than HadCRUT3.

Second, find out the start year for Michael's predicted 25 years with no warming.

Third, from existing HadCRUT4 data, find the standard error on 25 year trends.

Fourth, bet that on the 25th year, the warming trend will be greater than two times the standard error for HadCRUT4 trends.

>>

Here's his offer:

<<

statistically significant (p= .05) warming trend for 25 years based on annual data beginning in 1997, using HadCRU4

>>
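To make the bet criterion concrete, here is a minimal sketch of the test described in the quoted steps: fit an ordinary least squares trend to 25 annual values and check whether it exceeds two standard errors. The data are synthetic, generated with an assumed trend and noise level, and are not HadCRUT4 values. The standard error here also ignores autocorrelation, which real temperature series have and which widens the true uncertainty.

```python
import math
import random


def ols_trend(years, temps):
    """Return (slope, standard error of the slope) from ordinary least squares."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((y - (intercept + slope * x)) ** 2
                      for x, y in zip(years, temps))
    std_err = math.sqrt(residual_ss / (n - 2) / sxx)
    return slope, std_err


def is_significant_warming(slope, std_err, k=2.0):
    """True if the trend exceeds k standard errors (k=2 is roughly p=0.05)."""
    return slope > k * std_err


# Synthetic annual anomalies, NOT HadCRUT4 data: assumed 0.015 C/yr plus noise.
random.seed(0)
years = list(range(1997, 2022))  # 25 annual values starting in 1997
temps = [0.015 * (y - 1997) + random.gauss(0.0, 0.1) for y in years]

slope, std_err = ols_trend(years, temps)
print(f"trend: {slope * 10:.3f} +/- {2 * std_err * 10:.3f} C per decade (2 sigma)")
print("significant warming:", is_significant_warming(slope, std_err))
```

Swapping in real annual anomalies for `years` and `temps` would give the actual trend, though a proper assessment would also correct the standard error for autocorrelation.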

-

Glenn Tamblyn at 08:21 AM on 18 July 2013Empirical evidence that humans are causing global warming

Matthew L

"Is anything an indicator "unique to human-caused global warming" other than the TOA energy balance and its link to greenhouse gases? "

Several things are strong indicators that narrow the possibilities for the cause of the observed warming radically.

Cooling of the Stratosphere at the same time as the Troposphere is warming is a powerful piece of evidence. If the warming was due to some other source such as increased sunlight we wouldn't see this pattern, we would see a general increase in the atmospheric temperature at all altitudes. Stratospheric cooling is caused by the fact that more energy is being radiated to space from higher in the atmosphere than from lower down, shifting the balance of where in the atmosphere radiation to space is occurring. Only a change in the optical properties of the atmosphere could cause this.

Heat accumulation in the oceans is at least 5 times greater than the largest available heat source here on Earth. Therefore, that additional heat cannot be coming from anywhere here on Earth. That leaves a change in the Earth's radiative balance with space as the only possible source.

That nighttime and winter temperatures are warming as fast, in fact somewhat faster, than daytime or summer temperatures rules out heating from the sun - directly or indirectly due to changes in cloud cover for example - as heating from the sun would cause more heating when the sun shines.

With terrestrial heat sources, the sun and cloud changes ruled out, that only leaves a change in the GH Effect as the remaining possible cause. And the primary change that is expected from a change in the GH Effect - Stratospheric cooling - is being observed.

Moderator Response:[TD] For more see the counterargument "It's Not Us," including the Basic, Intermediate, and Advanced tabbed panes.

-

old sage at 07:37 AM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

Bit of a joke that - ageism - didn't go down well!

Anyway, I take it you folks all believe there is absolutely no radiation from earth other than i/r. Please allow me to beg to differ, and we will leave it at that. There are some solid physical reasons for believing alternative mechanisms not only can but must exist. There is obviously a lot of sense in studying the detailed influences of all the man made pollutants on radiative transfers in the atmosphere but I worry that it offers a distraction from a serious problem consuming the time of good men. An eye 2 cms from liquid helium at 1deg hardly increases the boil off - fail to keep a liquid oxygen cold trap several meters away down a room temperature tube topped up and the results soon get catastrophic. I would suggest more time be spent looking at kinetic transport and the cold trap. I might take another look at the nitty gritty but I doubt it.

-

John Hartz at 07:08 AM on 18 July 2013Models are unreliable

Many of the issues being discussed on this thread are addressed in the article,

Seeking Clues to Climate Change: computer models provide insights to Earth's climate future, Science & Technology Review, June 2012, Lawrence Livermore National Laboratory

-

John Hartz at 06:42 AM on 18 July 2013Empirical evidence that humans are causing global warming

Matthew L @89:

Your assertion that modelers don't know how to model clouds is totally unfounded.

See SkS post, New tool clears the air on cloud simulations

-

Phil at 06:13 AM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

old_sage @238

Incidentally your biology is not too sharp either: there is no such thing as "physics DNA". What could you possibly have meant by that?

-

Zen at 06:03 AM on 18 July 2013Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

Neil refers to a study by Hans Von Storch and also quotes him. Can anyone enlighten me as to who Hans Von Storch is? Thanks.

-

Phil at 05:59 AM on 18 July 2013Trenberth on Tracking Earth’s energy: A key to climate variability and change

old_sage @238

There seems to be a lot of misunderstanding here due to a little knowledge being a bad thing.

Thank you for conceding that; the basics of Rotational and Vibrational absorption spectra can be learnt from any standard undergraduate text on Physical Chemistry. I learnt mine from P.W. Atkins (aged 73). "Physical Chemistry" (OUP, 1st Edition, 1978) has an introduction to the subject in chapter 17, including the Boltzmann distribution mentioned by gws. He goes into more detail in Molecular Quantum Mechanics Part III (OUP, 1970), in Chapter 10. For exhaustive detail on Rotational Spectroscopy (not strictly relevant to the GHE, but my "bible" during my Ph.D) see Microwave Spectroscopy by Townes and Schawlow (Dover Press, first published in 1955).

-

Composer99 at 05:47 AM on 18 July 2013Empirical evidence that humans are causing global warming

Finally, your final paragraph in #88, which states:

I would be more impressed with the scientists if they accepted the evidence on face value and tried to hypothesise why the hot spot is not there rather than trying to find excuses as to why it might be there just, somehow, hidden.

is, quite simply, uncalled for. Frankly it is at best very close to accusing scientists of either incompetence or malfeasance, either way without any good evidence of your own to support such a claim. What is more, it is directly contradicted by the discussion in the tropospheric hot spot thread.

In keeping with the comments policy here if you wish to go into detail in discussing the tropospheric hot spot I recommend posting in the thread linked by the moderator in response to your post #85. I also recommend desisting from any insinuations of ill intent on the part of scientists in future; persevering in such behaviour will very quickly see you ousted from participating at this site.

Moderator Response: [TD] Thank you for supporting my frustrating (for me) attempts to keep discussions on the right threads. Everybody, continue discussion of the tropospheric hot spot on the tropospheric hot spot thread, not here. I will delete anybody's further discussion of the hot spot from this thread. Sorry to all you well-meaning responders, but you are just encouraging Matthew L to continue misbehaving.

-

Rob Painting at 05:40 AM on 18 July 2013 They didn't change the name from 'global warming' to 'climate change'

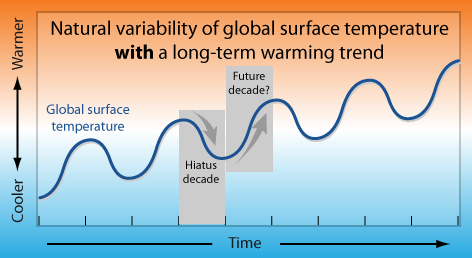

Nichol - the heat being transported downward and poleward by the currently intensified wind-driven ocean circulation may be shielding us from more warming of global surface temperatures but, based on past observations and modelling, it is unlikely to persist. The global weather tends to oscillate between periods where heat is stored in the deeper ocean layers (negative Interdecadal Pacific Oscillation), and periods where it remains in the surface layers (postive IPO). A long-term warming background climate does not cause La Nina or El Nino (which are largely responsible for this natural variation) to disappear.

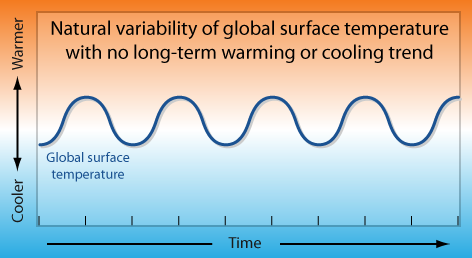

The following images should make this clearer - the variation is unrealistically smooth, but it's just for illustrative purposes.

-

Composer99 at 05:38 AM on 18 July 2013 Empirical evidence that humans are causing global warming

Matthew L:

Is anything an indicator "unique to human-caused global warming" other than the TOA energy balance and its link to greenhouse gases? Everything else is an indicator of "warming".

Quite the contrary:

- By itself, the energy imbalance at TOA tells us only that the globe is warming. We need additional evidence to tie it to an enhanced greenhouse effect (such evidence exists, as it happens).

- There are multiple lines of evidence showing that the enhanced greenhouse effect causing the present warming is itself human-caused, as well as evidence excluding other sources. These lines of evidence are discussed here (and elsewhere) on Skeptical Science (and, from there, in the primary literature).

In addition, you state:

I have to say the argument regarding the lack of a troposphere hot spot being likely due to "data errors" sounds mighty like a cop-out to me. There are plenty of measurements, both Radiosonde and Satellite, none of which show evidence of the hot spot. I would be very surprised if they were all wrong.

Your claim here appears to be directly contradicted by the papers discussed in the link you were provided by a moderator. Some choice quotes (emphasis mine in all cases):

Observing the hot spot would tell us we have a good understanding of how the lapse rate changes. As the hot spot is well observed over short timescales (Trenberth 2006, Santer 2005), this increases our confidence that we're on track. That leaves the question of the long-term trend.

The three satellite records from UAH, RSS and UWA give varied results. UAH show tropospheric trends less than surface warming, RSS are roughly the same and UWA show a hot spot.

Weather balloon measurements are influenced by effects like the daytime heating of the balloons. When these effects are adjusted for, the weather balloon data is broadly consistent with models (Titchner 2009, Sherwood 2008, Haimberger 2008). Lastly, there is measurements of wind strength from weather balloons. The direct relationship between temperature and wind shear allows us to empirically obtain a temperature profile of the atmosphere. This method finds a hot spot (Allen 2008).

Weather balloons and satellites do a good job of measuring short-term changes and indeed find a hot spot over monthly timescales. There is some evidence of a hot spot over timeframes of decades but there's still much work to be done in this department. Conversely, the data isn't conclusive enough to unequivocally say there is no hot spot.

I should like to see what sources, and in particular what papers in the literature, form the basis of your claim that "There are plenty of measurements, both Radiosonde and Satellite, none of which show evidence of the hot spot."

Specifically with regard to the accusations of "cop-out[s]", there have been two important cases in the past where apparent data contradicted model predictions - and the data were found to be wrong (vindicating the models):

- That enhancing the atmospheric greenhouse effect would indeed cause warming. After Svante Arrhenius suggested this, Knut Angstrom ran an experiment which appeared to contradict Arrhenius. Subsequent experiments and observations eventually vindicated Arrhenius' model against the data Angstrom's experiment turned up.

- For quite some time, UAH satellite data appeared to contradict models showing warming; in the event significant errors were found with the UAH data, such that it ceased to conflict with models.

(See discussions here, here, or perhaps elsewhere on Skeptical Science.)

-

KR at 05:12 AM on 18 July 2013 Empirical evidence that humans are causing global warming

Matthew L - "It has turned out to be difficult to measure or model the overall net effect, but that very difficulty is evidence that the net effect is not strongly one way or the other..."

Simply put, strong signals are easier to detect. If cloud feedbacks were (positive or negative) on the order of magnitude of observed direct forcings or the observed water vapor response, we would have significant evidence for it. And... we don't.

Personally, I consider cloud forcing primarily an issue for transient climate sensitivity, the speed with which the climate responds - longer term responses are fairly well constrained by the paleo data to the 2-4.5C range, and that includes any cloud response.
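To make the 2-4.5C range concrete: it is a sensitivity per doubling of CO2, so a common first approximation scales equilibrium warming with the base-2 logarithm of the concentration ratio. The sketch below assumes that logarithmic relation, a 280 ppm baseline, and a 400 ppm example concentration; those values and the function name are illustrative assumptions, and the result ignores other forcings and the time lag of the oceans.

```python
import math


def equilibrium_warming_c(co2_ppm: float, baseline_ppm: float,
                          sensitivity_c_per_doubling: float) -> float:
    """Approximate equilibrium warming (C) for a CO2 change, assuming
    warming scales with log2 of the concentration ratio."""
    return sensitivity_c_per_doubling * math.log2(co2_ppm / baseline_ppm)


if __name__ == "__main__":
    baseline = 280.0  # assumed pre-industrial concentration, ppm
    current = 400.0   # assumed example concentration, ppm
    for sensitivity in (2.0, 3.0, 4.5):
        warming = equilibrium_warming_c(current, baseline, sensitivity)
        print(f"S = {sensitivity} C/doubling -> about {warming:.1f} C at equilibrium")
```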

-

Composer99 at 05:00 AM on 18 July 2013 Trenberth on Tracking Earth’s energy: A key to climate variability and change

There seems to me to be a bit of ageism creeping in here from young know-alls!

Snippy, sarcastic answer: I can't imagine why that would be.

Actual answer: Age has essentially nothing to do with the objections raised against your claims.

The objections are:

- You have provided no basis to accept your claims save for your own authority and a good deal of handwaving.

- What is more, your claims are contradicted by directly measurable phenomena which are consistent with the physics of CO2 molecules and their interactions with electromagnetic radiation at various wavelengths, for which references have been given in the OP and comments.

- Your sole line of counterargument to the above, to date, has been little more than a wordy equivalent of "nuh-uh!"

-

r.pauli at 04:58 AM on 18 July 2013 Carbon Dioxide's invisibility is what causes global warming

Carbon dioxide is just the by-product of human blunder. Global warming is caused by humans who ignore, deny or permit carbon emissions. CO2 is just part of the chemical mechanism we discovered.

When a plane crashes, we don't blame gravity.

-

Matthew L at 04:53 AM on 18 July 2013 Empirical evidence that humans are causing global warming

Moderator:

"but that very difficulty is evidence that the net effect is not strongly one way or the other"

How so? My reading of "that very difficulty" is that the modellers don't know how to model clouds. Until they know how to model them they won't know whether the net effect is strong, weak, negative or positive.

The fact that they only tentatively suggest that it might be slightly positive or slightly negative suggests that they simply don't know and don't want to get it too wrong (or is that just me being cynical?).

In the absence of decent results from the models it might be useful to measure the clouds' albedo / greenhouse effect directly. Is anybody doing that? Surely it is necessary anyway in order to find out if the models are right or wrong.

In my (very humble) opinion, this is the least convincing aspect of the current crop of climate models. The two fundamental forces in our weather are the wind and the clouds. To not be able to accurately model the effect from clouds has to be a prime suspect at the root of the current divergence between the model outputs and the measured temperatures.

Moderator Response: [TD] As I already instructed, please put this further discussion of clouds on the thread I pointed you to. I will give you a little while to copy this comment over there, then I will delete this comment here.

Sigh... I don't want to orphan the excellent replies to your comment here, so I will let this comment stay. But no more off-topic comments; I will delete them.

-

Matthew L at 04:38 AM on 18 July 2013 Empirical evidence that humans are causing global warming

Thanks for the responses. Enough reading to keep me going for a while there! Shame there does not seem to be any direct measurement of the energy balance at the Top of the Atmosphere (TOA), rather than it being inferred indirectly from ocean heat content numbers - but you obviously just have to work with the tools available.

No comment on my points about the divergence between the models and the tropics - which (as you will know) is a hot topic on many blogs at the moment.

Re your comment:

"The tropical hot spot is not an indicator unique to human-caused global warming"

Is anything an indicator "unique to human-caused global warming" other than the TOA energy balance and its link to greenhouse gases? Everything else is an indicator of "warming". I have to say the argument regarding the lack of a troposphere hot spot being likely due to "data errors" sounds mighty like a cop-out to me. There are plenty of measurements, both Radiosonde and Satellite, none of which show evidence of the hot spot. I would be very surprised if they were all wrong.

I would be more impressed with the scientists if they accepted the evidence on face value and tried to hypothesise why the hot spot is not there rather than trying to find excuses as to why it might be there just, somehow, hidden.

-

old sage at 04:25 AM on 18 July 2013 Trenberth on Tracking Earth’s energy: A key to climate variability and change

There seems to me to be a bit of ageism creeping in here from young know-alls!

The minutiae of trying to model everything everywhere is an heroic task and good luck to those doing it. We could all be toast by the time it's finished.

There are two false premises upon which this GG quantum depends.

One is that the net energy from the sun all escapes within the envelope of the infra red. Is that reasonable? I do not believe that anyone holding their hand up to earth from a satellite would feel anything like the amount of heat radiating from it which these radiation models of i/r require. You would even notice this from a high snowy mountain pointing your hand at the valley.

Number two, the same really, is that all that kinetic energy absorbed by gases can only find its way out by direct radiation at i/r. A molecule highly excited can make a multistage transition, say from rotational to vibrational, as it relaxes, giving rise to two or more lines, but as its energy ultimately decays into thermal as a result of an inelastic collision the question is: can this higher translational energy be handed back? It cannot in the absence of a background of i/r radiation because the gap is equivalent to several thousands of degrees. Can one then imagine an already excited molecule changing up a gear in an inelastic collision to a nearby level by the amount available from translational movement? The odds are stacked highly against this. Decay is available to a continuum of kinetic levels; recharging needs a precise collision into discrete levels with large, in kinetic terms, separation.

That means by reducing outgoing i/r as the only sink for energy generated - either by man or sun - you have to conclude there is another major activity going on taking up kinetic energy and radiating it.

That energy can only be found in the largely unexplored frequency ranges - and they might be spiky and intermittent (e.g. huge numbers of wireless energy radiators (10^-17 ergs) such as are found in the ionosphere).

There is an analogy. Just as the underlying thermal oscillations prompt radiation from a solid's electron covering in BB radiation, the electrically charged ionosphere covers a pulsating, swirling and mobile atmosphere. This has to stimulate radiation and, being at the top of the earth, it will escape unhindered. The earth's response to heating up has to be that robust a mechanism and not too sensitive to the gaseous constituents, as it has supported life for millennia. That is not to deny the impact of man's activity; mankind is just an additional burden.

There is clear confusion, and not mine, between the emissions in gases - in which the spectral lines are far fewer than those observed in the absorption spectrum. But at bottom, the absolute determination to avoid any concept of heat transfer by conduction, convection and mass transfer within the atmospheric envelope to a cooler upper layer is fatal to the argument from my viewpoint. I don't dispute what Ramanathan and Coakley may have said, I simply don't wish to wade through it. It involves what is probably second, maybe third or perhaps even fourth order argument involving the shuttling around of photons by CO2 before their final resting place as kinetic energy.

Moderator Response: [TD] Your "ageism" remark is inappropriate. And unless you are 100 years old, you are not older than all the people responding to you, let alone all the people who have written the peer-reviewed scientific papers being cited by your responders and the original post above.

-

KR at 04:18 AM on 18 July 2013 Empirical evidence that humans are causing global warming

Matthew L - My apologies, the reference I gave on OHC was for shallow waters only. More appropriate references would be to Meehl et al 2013 on short and medium term variability, Levitus et al 2012 on increasing total OHC, and the SkS discussion on heat sequestration here.

The current period appears to be one of increased deep sequestration of ocean heat, resulting in rather slower warming of the atmosphere - but when that sequestration returns to normal (or lesser) rates we can expect rather fast atmospheric warming in response.

-

philipm at 04:13 AM on 18 July 2013 Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

MA Rodger: tell him about URL shorteners.

-

KR at 03:32 AM on 18 July 2013 Empirical evidence that humans are causing global warming

Matthew L - One of the most robust measures of energy imbalance is the rise in ocean heat content, which integrates/averages that TOA imbalance, and those measures indicate ongoing TOA imbalances of ~0.5-0.6 W/m2. There are multiple threads here on SkS regarding ocean heat content, and I would also point to Loeb et al 2012, 'Observed changes in top-of-the-atmosphere radiation and upper-ocean heating consistent within uncertainty'.
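For a sense of scale, a global-mean imbalance in W/m2 can be converted to energy accumulated per year by multiplying by the Earth's surface area and the number of seconds in a year. The sketch below is a back-of-the-envelope calculation only; the approximate surface area, the year length, and the function name are assumptions made for illustration and are not taken from the cited papers.

```python
# Rough conversion of a global-mean TOA imbalance (W/m^2) to joules per year.
# Both constants are approximate values assumed for illustration.
EARTH_SURFACE_AREA_M2 = 5.1e14  # approximate total surface area of the Earth
SECONDS_PER_YEAR = 3.156e7      # approximately 365.25 days


def annual_energy_gain_joules(imbalance_w_per_m2: float) -> float:
    """Return the energy accumulated in one year for a global-mean imbalance."""
    return imbalance_w_per_m2 * EARTH_SURFACE_AREA_M2 * SECONDS_PER_YEAR


if __name__ == "__main__":
    for imbalance in (0.5, 0.6):
        energy = annual_energy_gain_joules(imbalance)
        print(f"{imbalance} W/m^2 -> {energy:.2e} J per year")
```

With these assumed constants, an imbalance of 0.5-0.6 W/m2 corresponds to the order of 10^22 J per year, which is the scale at which ocean heat content changes are usually reported.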

"I have always struggled with the lack of negative feedbacks in the models" - Observations on climate sensitivity are in the 2-4.5 C/CO2 doubling range, indicating an overall positive feedback to forcings. Current data on cloud feedback indicates it has a small and probably positive value (Dessler 2010).

"...then it could simply be that there is currently a (temporary?) balance at the TOA that means that there is no extra heat being accumulated...?" - GHG forcings have certainly not halted, OHC is still rising, particularly in the deep ocean, and observations are consistent both with models of ocean circulation (Meehl et al 2011) and measured short term climate variability (Foster and Rahmstorf 2011). Currently there is no evidence whatsoever of a TOA rebalancing.

-

Dikran Marsupial at 03:27 AM on 18 July 2013 Carbon Dioxide's invisibility is what causes global warming

In public discussion of science there will always be a compromise between using language that will be understood and remaining completely accurate to the finest detail. Saying that the energy is "trapped" is reasonable as a place to start. If one were to be really pedantic, one could take Fraser's statement

"No, the atmosphere absorbs radiation emitted by the Earth. But, upon being absorbed, the radiation has ceased to exist by having been transformed into the kinetic and potential energy of the molecules. The atmosphere cannot be said to have succeeded in trapping something that has ceased to exist."

and say that matter and energy can't be created or destroyed, only transformed. Therefore Fraser's statement is inaccurate, as the radiation emitted by the surface has not ceased to exist, but has merely been transformed into kinetic and potential energy of the molecules, exchanged a bit and then re-transformed to come back to us as "backradiation". However, there comes a point where attention to detail becomes pedantry and obstructs communication of the central point, rather than assisting it. The point at which this happens is not fixed, but depends on the audience.

The "intactness" is irrelevant to the issue, essentially energy is energy is energy - the thing that matters is the rate at which it enters the system and the rate at which it leaves the system.

-

empirical_bayes at 03:05 AM on 18 July 2013 Carbon Dioxide's invisibility is what causes global warming

Yeah, except that I object to the use of the term "trapped" with respect to energy and greenhouse gases, for the same reason Professor Fraser does here ... http://www.ems.psu.edu/~fraser/Bad/BadGreenhouse.html It's misleading. It assumes the energy radiating from Earth remains intact somehow.

-

grindupBaker at 02:56 AM on 18 July 2013 They changed the name from 'global warming' to 'climate change'

Maybe typo "'climate change' is now". I want "...refers to the long-term trend of a rising average global temperature" to be "...increasing global heat content" (I mean everywhere, not just this article). Would it cause a foofaraw if I got it changed?

-

John Hartz at 02:47 AM on 18 July 2013 They didn't change the name from 'global warming' to 'climate change'

Nichol: Generally speaking, when scientists use the term "climate change," they mean changes to the "climate system." I suggest that you review the IPCC definitions of "climate", "climate change", and "climate system" presented in the SkS Glossary.

-

funglestrumpet at 02:39 AM on 18 July 2013 Carbon Dioxide's invisibility is what causes global warming

As a non-chemist, I would appreciate an 'intermediate' version which explains the actual mechanism that CO2 uses to trap the infra-red photons and then release them in all directions, only some of which are spacebound.

-

MA Rodger at 02:37 AM on 18 July 2013 Debunking New Myths about the 97% Expert Consensus on Human-Caused Global Warming

"Neil has requested that people provide him with examples of the factual errors in this interview." How very polite of him. Yet his request is hosted on twitter which is perhaps indicative of the level of detail at which he deals with climate change. And due to the restriction to 140 characters or less, I'm unable to link to a graphical example of his "factual errors" because of the infeasibly long URLs used by google.
