Recent Comments

Comments 25801 to 25850:

-

Kiwiiano at 05:00 AM on 4 April 2016: 2016 SkS Weekly News Roundup #14

"A chronological listing of the news articles posted on the Skeptical Science Facebook page during the past week."

The link just loops around back to this page, not to Facebook.

Moderator Response: [JH] Link fixed. Thanks for bringing this to our attention.

-

barry1487 at 22:34 PM on 3 April 2016: Why is 2016 smashing heat records?

Tsk, reading that back it's so garbled. I wish I'd been drunk when I wrote it so I'd have some kind of excuse. Now with added clarity...

Transient sensitivity is the response of a modeled climate system to a doubling of CO2 at the time of doubling, with a 1% increase in atmospheric CO2 concentrations year to year. Expressed as the resulting change in global surface temperature, the estimated Transient Climate Response (TCR) value is 1.8 C for a doubling of CO2, at the time of doubling.

Equilibrium Climate Sensitivity is the response to forcing after the system has equilibrated, which can take several decades. A GCM may be run with a starting climate that is steady, and then the atmospheric CO2 content in the model is doubled instantly. The Equilibrium Climate Sensitivity (ECS) is expressed as the resulting change in surface temperature after the system has equilibrated. This is the canonical 3 C per doubling CO2.

Both sensitivity estimates are useful for different applications. TCR is a more proximate estimate for 'real time' snapshots of climate response to forcing. ECS estimates include the feedbacks that take longer to play out (several decades at least).
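As a rough illustration of the 1% per year scenario used for the transient estimate, the time it takes CO2 to double follows from simple compound growth. The sketch below is only arithmetic, not a climate model, and the function name `years_to_double` is made up for this example.

```python
import math


def years_to_double(annual_growth=0.01):
    """Years for a quantity to double under compound growth.

    annual_growth is the fractional year-to-year increase (0.01 = 1%).
    Solves (1 + g)**n = 2 for n.
    """
    if annual_growth <= 0:
        raise ValueError("annual_growth must be positive")
    return math.log(2) / math.log(1 + annual_growth)


if __name__ == "__main__":
    # With a 1% increase per year, doubling takes roughly 70 years.
    print(round(years_to_double(0.01), 1))
```

So "at the time of doubling" in the transient definition means about 70 years into such a run, which is short compared with the time the system needs to equilibrate.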

-

chriskoz at 19:40 PM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

Tom@41,

I understand your points better now, thanks for the clarification.

As you mention, your 'free market' is "an idealized but never realized condition that is defined by, among other conditions, the lack of negative externalities". Then, in practice: "a key role of government is to regulate the market so that it more closely approximates to a 'free market'".

It would seem that practice can come to meet that ideal model. Examples of successful gov regulations from the past, such as those against CFC emissions and SO2 emissions, eliminated the externalities of the ozone hole & acid rain.

However, AGW is a unique type of problem, never encountered before, on at least three grounds:

1) its international character and its unequal consequences. I mean fossil fuels burned in some (predominantly Western) countries result in the largest and most unsuitable climate changes in other (predominantly African, low-island) countries.

2) its length of time to develop: the climate consequences we experience today are results of coal burning by our fathers, what we burn today will influence the climate of our children

3) the economies of almost all countries are so dependent on FF energy that any action to curb FF usage, taken by those countries, would result (as they say) in their economy slowing down and being overtaken by the neighbouring countries.

Due to 1), the model of CO2 externalities at international scale is not as simple as your 'prisoner's dilemma' model, or Hardin's TOC model. In fact the PD model is not realistic at all here. Polluting countries who don't face the direst consequences of climate change (e.g. Canada re tar sand exploits) do not have the slightest incentive to take cooperative action, unlike the players in your PD model.

Due to 2) (and also partially due to 1), the AGW problem is often seen not as an environmental but as an inter-generational ethical problem. Governments rarely try to set their goals beyond a dozen or so years. In fact gov lifecycles are 3-4 years in most democratic countries. To date, I don't know of any policies that would be taken with such long foresight as AGW policy demands.

Due to 3) we have a virtual lockdown, in that no country wants to engage in a binding agreement, for fear that their economy will be "ruined". The Paris COP agreement is just wishful thinking, nothing that anyone wants to take responsibility for.

These are just examples showing that your ideal 'free market' is currently unachievable in today's world. I cannot see how such a market would create the forces/incentives able to overcome the perversive current trends to burn even more FF as of today.

-

barry1487 at 19:23 PM on 3 April 2016: Why is 2016 smashing heat records?

TomR,

The IPCC only used a Transient Climate Sensitivity of 1.8C instead of the more scientific estimate of 3.0C+

Transient Climate Response is roughly the response to forcing at the present (it's more technical than that*, but this explanation will suffice to make the point). The value is currently around 1.8C as you said. Equilibrium Climate Sensitivity is the response to forcing after the system has equilibrated to the forcing, which can take several decades. This is the canonical 3C per doubling CO2 you refer to.

Policy makers are interested in short and long term response, which is one reason for the two values. TCR is also a handy metric for testing sensitivity against recent observations (eg, since 1850 or 1900 or more recently). Instrumental record is a little short to test ECS.

* Transient sensitivity is the response of the climate system to a doubling of CO2 at the time of doubling, assuming a 1% increase in atmos CO2 concentrations year to year. It ignores feedbacks that will happen after the time the climate system is measured for any change.

Some GCMs are run with a steady starting climate state, and then 2X atmos CO2 is introduced immediately. The model is run to see the change from that large initiating pulse, equilibrating decades later. Equilibrium Climate Sensitivity is deduced from the model response after equilibrium is reached. That kind of model can't be used to compare with observations for any given time inside the equilibrating process.

Both sensitivity estimates are useful for different applications.

-

Tom Curtis at 15:37 PM on 3 April 2016: Great Barrier Reef is in good shape

The Queensland Government has just approved the Carmichael Coal Mine. As James Hansen has long pointed out, continuing mining of coal will push us well beyond the 2 C target. On a related note, the Great Barrier Reef this year is suffering just its eighth ever mass coral bleaching, the first having been in 1981. This is part of just the third global mass coral bleaching, the first having been in 1998.

This is unquestionably the result of global warming. The three global events have all occurred in years when Global Mean Surface Temperature has approximated 1 C above preindustrial levels, yet as a best case we will allow global temperatures to rise by another 0.5 C above that level, and the international target is another 1 C above that level. To keep temperatures below 2 C we need to phase out the use of coal. Phasing out the use of coal means approving no more coal fired power plants, and above all, no more coal mines.

That means the Queensland Government have just, in effect, approved the destruction of the Great Barrier Reef. I ask that you, as I did earlier, contact the Queensland Premier to register your disgust at that short-sighted decision.

-

Tom Curtis at 09:52 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

OPOF @48, until you specify the limits you will not transcend in the pursuit of your goals, there is no point in conversation between us. And if you will not specify those limits, you are as much an enemy of humanity as the Koch brothers.

I will leave you with a thought:

"So far is it not true that the means are justified by the ends, that rather ends are only ever justified by the means used to pursue them."

-

mark bofill at 09:26 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

Tom Curtis,

When someone I disagree with strongly on an issue stands up for the civilized principles our country rests on that defend us all, I believe I incur the obligation to let that person know I agree with them, support them, and appreciate their effort in that regard. So, there you have it. Thank you.

-

One Planet Only Forever at 08:51 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

Tom,

You appear to be deliberately unable to grasp that I distinguish between acceptable and unacceptable attitudes and actions based on the evaluation of the simple rule 'does it advance or impede the advancement of humanity to a lasting better future for all'. I have been very clear that 'specific people' need to be targeted and for good reason. You have chosen to misunderstand and misrepresent that.

As a final point of fact I will remind you that global wealth and global GDP and many other global perceptions of prosperity have grown faster than the global population and yet a significant percentage of humanity continues to suffer brutish short existences (not lives, just an existence). That is not because rich people are kept from helping others, it is because some will try to get as much personal reward as they can no matter what damage their actions can be shown to have created. Those are the people who can be identified and should be removed from positions of influence and wealth accumulation, until they change their minds and choose to behave better.

By the way, there is plenty of evidence that most of the most horrific violence that is going on is due to fighting to get away with acquiring the most possible reward from known to be unsustainable and damaging actions. That violence would fade away if the specific type of people I am referring to could not succeed. Sure, they would still be angry mean-spirited people, but at least they could no longer be national leaders or significantly influencing leaders.

-

Tom Curtis at 08:10 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

OPOF @46:

1) That future generations can deal with 2.5 C is (or should be) beyond question. It will not lift the temperature of any area of the planet to unlivable conditions, nor for the majority of the planet will it lift temperature and climate levels beyond those already experienced and lived in today in some part of the world. This is not a great comfort. London becoming a new Manila will not be a pleasant experience for the British. But it means that 2.5 C is still in the realm of cost/benefit analysis in how we deal with it.

Further, while costs will likely increment exponentially (or some near approximation), the cost differential we should be looking at is not the full cost of 2.5 C, but the cost difference between the Global Mean Surface Temperature we can no longer avoid, and 2.5 C. As 1 C is now locked in, even if we end all net GHG emissions instantaneously, and 2 C is probably locked in for any realistic pathway to emissions reductions, the cost we should compare when choosing between two strategies to achieve zero emissions is the difference between 2 C and (assuming that is the likely outcome for the democratic/constitutional approach) 2.5 C. And abandoning the rule of law and constitutional government is far too high a cost to achieve the cost differential between 2 and 2.5 C.

2) The analysis at point (1) above takes your program at face value. In fact, your program is disastrous in itself. The biggest threat of global warming for small to medium increases in GMST (<7 C) will come from the threat of famine, of war, and of the break-down of civil society. Your program makes all three certainties. It is analogous to proposing the amputation of an arm with an infected wound on the hand because it may, if neglected, require the amputation of the arm.

This is made very clear by your use of such phrases as "making it clear that a rich and popular person can be declared to be absolutely unacceptable and need to be dealt with accordingly". Granted you quote that phrase (from where?) but certainly appear to endorse it; but its implication is that all means to rid the world of "rich and popular" people are appropriate, including murder. That is what 'absolutely unacceptable' means. Specifically it means that no condition can make their situation (or them, it is not clear) acceptable, which in turn means that, as a last resort, even murder can be resorted to to make sure that no person is both rich and popular.

More generally, your program means eliminating the market economy, which in turn means implementing a command economy, with all of the corruption and inefficiency thereby implied. It means, as a matter of practical fact, the imposition of the command economy by force over much of the world (who will not accept it voluntarily). It means the institution of a secret police to maintain the forcibly emplaced government that will implement the program.

3) I am glad in one way that I continued this conversation with you. I have made a number of (to my mind) obvious inferences about the level of violence that your program will (and certainly may) require and you have not objected, nor placed any limit on what you would do to get rid of the "unacceptable people" that you "will not condone" (which apparently now includes me). That refusal to place a limit, to reject the use of force, even of lethal force, reveals your true colours to anybody interested.

It also, to my mind, puts you beyond the pale of rational conversation.

-

Tom Curtis at 07:07 AM on 3 April 2016: Heat from the Earth’s interior does not control climate

KR @69, it always stuns me when climate change deniers make statements such as:

"All the above is true and accepted by all, but embarrassingly, what has been forgotten is that radiation is but one method of transferring energy, the other two being conduction and convection, and it is principally using these processes that Earth heats its atmosphere." (From pjcarson's Chapter 1B, emphasis in original)

That was a fair criticism of climate science prior to 1964. In 1964, however, Manabe and Strickler published their landmark paper, Thermal Equilibrium of the Atmosphere with a Convective Adjustment. We are less than a fortnight from the 52nd anniversary of the acceptance of that paper, and 3 months from the 52nd anniversary of its publication - so to say that climate science has "forgotten is that radiation is but one method of transferring energy" is to forget nearly all the history of climate science. Manabe and Strickler used a specified, convection induced lapse rate, but certainly by 1981 models were determining the lapse rate by analyzing the combined radiative and convective energy flux. Modern models also include latent heat, and have for decades. All of that science is just scrubbed from the record for deniers, at best, because they are too lazy to read the actual science.

From the earliest such analysis it was shown that the effect of convection was to cool the atmosphere relative to the temperature it would have been with radiative heat transfers alone:

Thus while convection dominates in determining the thermal structure of the lower atmosphere (troposphere), it is untrue to say that "conduction and convection" are the principal means of heating the atmosphere.

PJCarson goes on to say:

"Radiation does transfer energy by far the quickest – equilibrium is achieved in tiny fractions of a second – compared to convection and conduction which vary considerably depending on the circumstances. "

Contrary to pjcarson's supposition, convective equilibrium is achieved much more rapidly (within hours) than radiative equilibrium, which as can be seen above takes almost a year to achieve. That is why convection dominates the troposphere's thermal structure.

Of course, fundamentally, the greenhouse effect does not depend on how the thermal gradient in the atmosphere is established, only that it exist.

In the end, that pjcarson and his like get the minor details wrong is immaterial. The fundamental claim is what is extraordinary. It is comparable to criticizing Newton's theory of gravity for not taking into account the inverse square law.

-

One Planet Only Forever at 06:24 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

Tom, on a separate point, your faith that future generations will be able to deal with the challenges of a 2.5 C increase is something I cannot share or condone.

I have shared my opinion on this point many times. Common Sense says that it is not acceptable for any already very fortunate person of this current generation to continue to obtain any additional personal benefit from the burning of fossil fuels.

I understand the reluctance to 'making it clear that a rich and popular person can be declared to be absolutely unacceptable and need to be dealt with accordingly'. But there are no excuses for defending the ability of such people to continue to have influence. They need to become 'spectators unable to influence anything' until they choose to become decent participants in the advancement of humanity.

Until they are effectively kept from getting away with making bigger problems that decent people try to deal with, the problems will continue to become unacceptably bigger, just like the current climate challenge is unacceptably bigger because of what some rich and powerful people were able to get away with through the past 30 years, and continue to be excused and allowed to make even bigger challenges that 'others will have to deal with'.

Making problems others have to deal with is indeed an easier and potentially very popular way to develop personal perceptions of prosperity and success that are ultimately damaging and unsustainable (for those others who have no power to stop the challenge they will face that is being created by people who are confident, or at least declare, that the amount of trouble they are making is no big deal).

1.5 C increase is achievable. It will be hard work. And some people will need to be very disappointed. But that is what leadership is all about, deciding who deserves to be disappointed and seeing to it that they are. Anyone in a position of social, political or business leadership who is unwilling to do that hard work deserves to become a spectator (even against their will or the will of their supporters, no matter what type of hooliganism they might potentially resort to).

-

Tom Curtis at 06:09 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

OPOF @44:

1) While I agree that in the end the solution to climate change will devolve down to the actions of individuals, institutional settings (such as a carbon tax) can greatly influence those actions. Further, people's choices are such, as is illustrated by the variant of the prisoner's dilemma you describe, that without those institutional settings, and without some measure of transparency of action, they will act in a way that is not in their own long term interest because it will give a slightly better outcome than if they act in the best interest of all while others default.

2) While I agree that greedy people acting in their own interests at the expense of others create a major problem, we are restricted in how we can ethically gain compliance to methods that strengthen democratic approaches including persuasion and regulation achieved by democratic (or at least constitutional) means. People fighting wars, including civil wars, do not restrict CO2 emissions. They have higher priorities. And if we step outside democratic and constitutional means that is what we invite - particularly if we do so as external powers.

3) Further, the "by any means necessary" doctrine for resolving climate issues is counter productive. Climate change deniers have implausibly cloaked themselves in a mantle as defenders of freedom. The "by any means necessary" doctrine gives them rhetorical teeth for that charade, without which they would be revealed as defending naked self interest. By not being emphatic defenders of democratic and constitutional means only, we feed the ranks of the tea partiers and other right wing groups opposed to action on climate change. Even were I not committed to democratic and constitutional means only as a matter of principle, this would be sufficient for me to oppose your approach.

4) Within the strictures of democratic and constitutional means only, I am in favour of pushing for action on global warming as strongly as possible, through individual action, through state/provincial and national regulations (including carbon prices) and through international agreements.

-

KR at 04:43 AM on 3 April 2016: Heat from the Earth’s interior does not control climate

I took a quick look at pjcarson2015's blog - in his screed on CO2 there is no mention of the lapse rate, the tropopause, 'top of the atmosphere', pressure, effective radiating altitude, etc. A word search of his 'Chapter 1' fails to find any of those terms.

As a result it's clear that pjcarson doesn't understand how increasing CO2 raises the altitude where emitted IR can escape, the altitude of effective emission to space, which due to the lapse rate means radiating from a cooler parcel of gas. This reduces the rate of radiation with respect to the ground temperature, requiring a warmer surface to balance energy flows - the very core of the radiative greenhouse effect. Failing to understand the basics, pjcarson's blog is simply D-K nonsense, and a waste of time and mental energy.

Pjcarson - educate yourself. Until you learn some of the basics you have no chance of relevance.

Moderator Response: [JH] You and other responders to pjcarson2015 are now skating on the thin ice of dogpiling, which is prohibited by the SkS Comments Policy.

Since most of what pjcarson2015 has posted to date is unsubstantiated personal opinion, there is no need for more than one or two people to respond to his/her future posts.

-

One Planet Only Forever at 01:42 AM on 3 April 2016: Dangerous global warming will happen sooner than thought – study

Tom,

The focus needs to be on individuals, not nations or even corporations. The trouble-makers need to be identified and be kept from success. And the measure of acceptability needs to be that “a person's attitude and resulting actions must not impede the advancement of humanity to a lasting better future for all as sustainable parts of the robust diversity of life on this or any other planet”. That means surgically removing the appropriate people from positions of influence, including removing wealth from them if they would abuse that wealth to promote or prolong actions that impede the advancement of humanity. I do not care how it is accomplished. My point is that 'it must be accomplished'. I would be thrilled to see global freedom for all to do as they please as long as no trouble-maker gets away with spoiling things for everyone else.

Interesting discussion about which Game Theory example best explains what is going on in global politics and economics related to climate change (and many other issues where damaging ultimately unsustainable activities develop popularity and profitability).

The obvious answer is that, like any specialized field of science, each Game Theory is only evaluating a small part of the bigger picture. That detailed understanding of each part of the big picture is important, but it is the understanding of the much more complex integrated bigger picture that is needed.

There actually is a Game Theory example that I learned about in my MBA course in the early 1980s that is quite relevant. I will describe the game, even if not exactly the way it was “officially presented by the game maker”, rather than try to find the proper name for it.

- The participants are divided into 5 groups (an uneven number of sub-groups is important for the game to more clearly lead to understanding of the fundamental controllable human choice behaviour it is meant to identify).

- The game is played through several rounds, with each round having discussion between the groups about how they plan to vote (Yes or No) followed by privately cast votes by each group that are then shared each round to determine how many points each team gets as a result of that round of private votes.

- The points obtained by each group are structured in the following way and this is fully understood by all participants in the game at the start of the game: If all teams vote the same way then each team gets 25 points (125 total points); If one team votes differently from the other 4 teams, that team gets 30 points and the other teams get 20 points (110 total points); If two teams vote one way and three the other way then the two teams each get 20 points and the three teams each get 15 points (85 total points).

It is obvious to all participants that the best play of the game is for all teams to vote the same in every round. However, there is bound to be a competitor who sees an opportunity to 'win compared to the others' by being deceptive.
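For anyone who wants to check the arithmetic, the scoring rules described above can be sketched in a few lines of Python. This is only my reading of the rules as stated here, not the game maker's official version, and the function name `round_payoffs` is invented for the example.

```python
def round_payoffs(votes):
    """Points for one round of the 5-team voting game.

    votes: sequence of 5 truthy/falsy values (True = Yes, False = No).
    Returns a list of points, one entry per team, in the same order.
    """
    if len(votes) != 5:
        raise ValueError("the game as described has exactly 5 teams")

    yes_count = sum(1 for v in votes if v)
    no_count = len(votes) - yes_count
    minority_size = min(yes_count, no_count)

    # Everyone voted the same way: 25 points each (125 in total).
    if minority_size == 0:
        return [25] * 5

    # Which vote (Yes = True, No = False) was cast by the smaller group.
    minority_vote = yes_count < no_count

    if minority_size == 1:
        # One lone defector: 30 for that team, 20 for the rest (110 in total).
        minority_points, majority_points = 30, 20
    else:
        # A 2-3 split: 20 for the two, 15 for the three (85 in total).
        minority_points, majority_points = 20, 15

    return [
        minority_points if bool(v) == minority_vote else majority_points
        for v in votes
    ]


if __name__ == "__main__":
    for votes in ([True] * 5,
                  [True, False, False, False, False],
                  [True, True, False, False, False]):
        points = round_payoffs(votes)
        print(points, sum(points))
```

The totals (125, 110, 85) make the structure plain: a lone defector gains 5 points over full cooperation, but every defection shrinks the pot for the group as a whole.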

Of course the point of the game is to show the potential appeal of getting a competitive advantage by being deliberately deceptive. The facilitator wraps up the session by pointing out how the total points of all teams was far below the potential. They are also almost certain to be able to point out that the total number of points gotten by the team that 'won the most points' was invariably less than that team would have gotten if none of the teams had ever 'chosen to deliberately deceive the other teams'. And they can also have people connect with their 'new-found distrust of others' because of the game they were in.

What the game cannot represent is the accumulating damage done by people who choose to pursue personal reward in ways that can be understood to not advance humanity. One of my favourite small scale examples of that behaviour is the driver who deliberately chooses to go as far up a line of 'other drivers who have politely accepted their position at the back of the line' then expects to be able to force their way into the line delaying everyone else that they passed - some I have talked to about this even try to defend that they 'must be let in because of a rule of the road' - they are referring to rules for merging traffic which do not apply to the case they try to defend. What they are really doing is preying on the polite helpfulness of most others to the detriment of all others, just like the competitive predator spoils the Game Theory example I have shared.

That Game Theory example nicely highlights the real issue of the way that those among humanity who choose to try to get away with obtaining personal reward in ways they can understand are less acceptable, chosen actions that can be understood to not be part of the advancement of humanity to a lasting better future for all, actions that clearly only provide more personal reward for a person in their lifetime. That problem was very well described in the quote from the 1987 UN report “Our Common Future” that I shared in my comment @13.

What that example Game and the astute observation of reality it highlights point out is that humanity is unlikely to be advanced by the total freedom of all people to do as they please (and certainly cannot be expected to be obtained by discussion that ends with what sounds like everyone understanding the problem and what is required collectively to address it), unless those who would choose to 'win unacceptably' are effectively kept from 'winning undeserving', even having them removed from positions of influence they got away with getting into.

Some people will clearly need to have their ability to influence things restricted. And the current game based on popularity and profitability clearly fails to properly identify and effectively limit the trouble-makers who would choose to try to get away with actions that clearly cannot be part of the future of humanity and actions that would be impediments to the advancement of humanity to a lasting better future for all.

And other things I learned from my MBA education were:

- misleading marketing can be a very powerful and damaging weapon.

- case studies of truly ethical business success are very difficult to find (the ones getting away with behaving less acceptably are more likely to be the 'winners').

Putting it all together it is clear that the way the US (and a few others) played the Kyoto game, 'refusing to reduce the global trade competitive benefit that could be gotten from continuing to keep costs down by burning massive amounts of coal and oil', was to the temporary advantage of the portion of humanity who benefited from that being gotten away with. It was the expected behaviour from the group that had also deliberately delayed reducing sulphur in diesel fuel to gain a global trade cost advantage while also keeping newer better diesel technology that needed the better fuel quality from 'gaining any traction within the US mass consumption economy'. I know that US total impacts are not as bad as they could have been, but that is because of the efforts of a portion of Americans who tried to behave better. One sad reality I am aware of is that even California is not a total leader in behaving better. They may lead in some areas but they also have some of the worst oil extraction processes still going on, hidden by the truly commendable efforts of others in California who strive to behave better.

What is going on is easy to understand, as long as you understand that the only valid measure of acceptability is that 'an attitude or action will not impede the advancement of humanity to a lasting better future for all as sustainable parts of the robust diversity of life on this or any other planet', and you are honestly skeptical of the belief that the freedom of all to do as they please will advance humanity to that better future.

-

MA Rodger at 21:00 PM on 2 April 2016: Heat from the Earth’s interior does not control climate

The publication date of pjcarson's latest efforts, his Chapter 1B, was April Fool's Day. If it had any other author, it would be treated as an April Fool wheeze. But for somebody who has exhibited such egregious stupidity as pjcarson, I feel this is for real.

pjcarson's latest contribution to the blog-o-sphere turns on its head his previous assertion that there is a greenhouse effect from CO2 (but no AGW) and makes the following incredible pronouncements. (Excuse me while I stifle a guffaw.)

(1) CO2 can only contribute to the usually accepted GHG effect of +33°C in proportion to its concentration within the atmosphere, that is 0.04% of it. (2) The thermal mass of air tells us that N2 & O2 are "the real Greenhouse molecules" . (3) "The size of Greenhouse heating (blanketing) is constant" and determined by the number of molecules within the atmosphere. So burning FF which converts O2 into CO2 has zero effect. (4) The greenhouse effect is constant over 100,000s of years and is "degrees less" than +33°C.

All this remains off-topic on this thread. I do not see pjcarson clearing the bar suggested by Tom Curtis @68. Moderators please moderate away to restore the sanity.

Moderator Response: [DB] Inflammatory snipped. Please, all participants, keep it clean.

-

Tom Curtis at 20:42 PM on 2 April 2016: Heat from the Earth’s interior does not control climate

I note that pjcarson having been comprehensively proved wrong on one lie (that I could not derive his simplistic equation) immediately launches another. IMO, his latest comment, as many before it, contains no substantive argument and should be considered sloganeering only. Given the absurd nature of his claims, and his repeated failure to present substantive argument in favour of his views, I further suggest a high bar be set to establish that he is not sloganeering.

-

RedBaron at 20:24 PM on 2 April 2016: Global food production threatens to overwhelm efforts to combat climate change

Ger,

Don't confuse the active fraction with the stable fraction of soil carbon. Your claim that soil CO2 is at most 100 years old and that it is in almost perfect balance is a bit simplistic and factually incorrect. Those stats you are addressing refer to the active fraction, not the stable fraction of soil carbon.

The rest of your post is true insofar as it applies to the majority of agricultural systems today, but not necessarily so, because there are alternative agricultural systems that function quite differently with respect to the carbon and nitrogen cycles.

-

pjcarson2015 at 20:20 PM on 2 April 2016Heat from the Earth’s interior does not control climate

And you spent all that time getting everything wrong!?

My simple equation is correct, as shown by such diverse atmospheres as Earth, Venus and Mars. The equation simply shows how much energy is re-radiated from any planet’s surface to a point at a height h in its sky. It’s independent of sensors as it only deals with energy flows. Each satellite has different sensors.

The dip is supposed by St P to represent an extra amount that CO2 can absorb (because it is “unsaturated”). But one can easily see, for a species such as CO2 that’s already absorbed all Earth’s re-radiated IR in its wavelength and then re-radiates it in all directions, that there must be a dip of at least 50% (because at least 50% is directed away from the satellite).

Your analysis of the derivation of my equation fails. You worry about the Earth surface bending the wrong way, ie bends towards the satellite, however, from the satellite’s perspective, it makes no difference which way the surface bends as the area “cut out” from the sphere centred on the satellite is the same.

I’d be interested in seeing what you get wrong with Chapter 1B newly on my site.

Moderator Response:[DB] Multiple instances of sloganeering snipped. Simply declaring a dog's tail to be a 5th leg does not make it so. Similarly, handwaving away the explicit analysis of another that demonstrates your specific errors as being "wrong" without any subsequent analysis of your own to rebut it is also wrong and is sloganeering.

Either step up, do the hard work and analysis to support your position, replete with citations to credible sources, or cede the point and move on.

That is what an actual skeptic would do.

-

Tom Curtis at 20:18 PM on 2 April 2016Heat from the Earth’s interior does not control climate

Correction to my post @65.

I made an error in my spreadsheet that resulted in a significant error in calculating the ratio (planet sector area exposed to satellite) / (satellite-centred sphere area). Correcting that error gives a correct value of approximately 0.214. That is much greater than the incorrect value of 0.035 cited above, and means pjcarson's incorrect formula overstates the value by about 66%.

In double checking the formula, I noticed that, counter-intuitively, the ratio approaches 0.25 as you approach 0 km altitude. That is not a mistake, and is a consequence of the fact that the visible area of the surface approaches the shape of a circle (area = pi*r^2) rather than a sphere (area = 4*pi*r^2). It does mean that pjcarson would probably prefer to state his ratio in terms of the area of the occluded part of the sphere with radius d centered on the satellite relative to the area of the full sphere. So calculated, the value for the satellite bearing the AIRS instrument is approximately 0.283. Even thus reduced, pjcarson's formula overstates the value by about 26%.
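For readers who want to check the corrected figures, the three ratios can be reproduced with a short script. This is only a sketch under stated assumptions: the mean Earth radius of 6371 km is my assumption (it is not given in the comment), the 705.3 km altitude is taken from the AIRS description quoted elsewhere in this thread, and the function names are illustrative.

```python
import math

# Assumed inputs: the Earth radius is not stated in the comment; the altitude
# is the AIRS orbit height quoted elsewhere in this thread.
R = 6371.0  # km, mean Earth radius (assumption)
h = 705.3   # km, satellite altitude


def angle_ratio(R, h):
    """The quoted formula: 2 * asin(R / (R + h)) / 360, with the angle in degrees."""
    return 2 * math.degrees(math.asin(R / (R + h))) / 360


def cap_ratio(R, h):
    """Visible spherical cap of the planet over the area of a sphere of radius d."""
    d_squared = (R + h) ** 2 - R ** 2                    # d = satellite-to-limb distance
    cap_area = 2 * math.pi * R ** 2 * (1 - R / (R + h))  # cap visible from altitude h
    return cap_area / (4 * math.pi * d_squared)


def occluded_ratio(R, h):
    """Fraction of the satellite-centred sphere of radius d hidden behind the planet."""
    half_angle = math.asin(R / (R + h))  # nadir-to-limb angle at the satellite
    return (1 - math.cos(half_angle)) / 2


print("angle ratio:   ", round(angle_ratio(R, h), 3))
print("cap ratio:     ", round(cap_ratio(R, h), 3))
print("occluded ratio:", round(occluded_ratio(R, h), 3))
print("cap ratio near zero altitude:", round(cap_ratio(R, 0.001), 4))
```

With these inputs the cap ratio and occluded ratio should land close to the 0.214 and 0.283 quoted above, and the cap ratio should tend towards 0.25 as the altitude shrinks; small differences come from the assumed radius.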

-

chriskoz at 20:14 PM on 2 April 2016Global food production threatens to overwhelm efforts to combat climate change

Tom & michael, thanks.

Now I understand the red bars are CO2 "balance sheet" and they all add up to 0.

-

Ger at 18:32 PM on 2 April 2016Global food production threatens to overwhelm efforts to combat climate change

1. Fossil fuel CO2 is additive, unlike the CO2 in soil, which is at most 100 years old. 1+2 is the amount actually added, but the land sink in the figure is taking in just about the same amount of CO2-eq from LUC, meaning that the current area of (food-crop) land is in perfect balance. If there were no slash and burn and primary forest were conserved, all would be fine. Of course it is not, as the current area is depleted and has released CO2 over the years, and artificial fertilizers are now being added, releasing even more potent greenhouse gases.

2. CH4 from animals and manure comes from very recently stored carbon and cannot influence the balance. Though CH4 is 7 times more potent over 100 years than CO2, capturing CH4 from dairy farms, waste water treatment and landfills (which should be phased out) is already done and could be extended. CH4 from permafrost is far older and does pose a problem, as it is carbon that is thousands of years 'old'.

3. From https://en.wikipedia.org/wiki/Nitrous_oxide the total amount of N2O is 5.7 teragrams, of which 3.5 teragrams is from natural activities. Being 285 to 310 times more potent than CO2, that would result in 1.76 petagrams CO2-eq. yr-1, not 4 as the drawing suggests.

4. Furthermore, the nitrogen cycle in soil is quite complex and hardly any N2O gets away. More problematic is the escape of N2O from the production of nylons and the use of N2O as an inhibiting gas/driver gas. My guess is that N2O production from industry is considerably higher, not 20% as suggested but more in the range of 50%.

5. Nitrogen loss in soil can be controlled by using the correct type of fertilizers. Although only 17.1% of the nitrogen ends up in the food (part), the food part represents only 20% of the plant. For some fertilizer types 40% ends up in the air (as ammonia); this can be reduced to a mere 5% by applying N fertiliser in the soil and not only on top.

"Importantly, CO₂ emissions from deforestation together with methane and nitrous oxide emissions are mainly associated with the process of making land available for food production and the growing of food in croplands and rangelands."

CO2 emissions and N2O emissions are mainly due to preparing existing, intensively used and depleted crop lands, which are therefore provided with high amounts of artificial N fertilisers. Deforested land is not capturing any CO2 anymore, but it is not releasing vast amounts of CO2. De-watering and other mechanical interventions do break down soil life and will release CO2 (and equivalent GHG).


-

Tom Curtis at 17:19 PM on 2 April 2016Heat from the Earth’s interior does not control climate

pjcarson @63:

"Perhaps you can help Tom as he seems unable to do so?"

As you insist on niggling...

The formula being discussed is the one found in pjcarson's addendum to his 'chapter one'. He attempts to explain the CO2 notch seen in IR spectra for Venus, Earth and Mars, as shown in Pierrehumbert (2011):

pjcarson follows Pierrehumbert in calling it a 'dip'.

pjcarson conjectures that:

"The experimental relative dip (eg from figure 6 or 8) is the ratio (measured IR) / (hypothetical directly transmitted IR, ie black body curve)."

(My emphasis)

Note carefully that it is the measured IR, not the IR that strikes the satellite. Measured IR can only be that observed by the instrument itself. It follows that in calculating the ratio of the area above the limb of the planet (approx: horizon) for the satellite to a sphere with a radius equal to the satellite limb distance, he is calculating an irrelevant quantity. Much of that radiation falls on the outer shell of the satellite, or other instruments, and not the AIRS instrument that did the observing (for Earth).

If we look at the description of the AIRS instrument we read:

"AIRS looks toward the ground through a cross-track rotary scan mirror which provides +/- 49.5 degrees (from nadir) ground coverage along with views to cold space and to on-board spectral and radiometric calibration sources every scan cycle. The scan cycle repeats every 8/3 seconds. Ninety ground footprints are observed each scan. One spectrum with all 2378 spectral samples is obtained for each footprint. A ground footprint every 22.4 ms. The AIRS IR spatial resolution is 13.5 km at nadir from the 705.3 km orbit."

Plus or minus 49.5 degrees turns out to be approximately 77% of the limb to limb angle, meaning pjcarson's formula (if it were the correct formula) would significantly overestimate the ratio of "(measured IR) / (hypothetical directly transmitted IR)". Worse, that arc is not what is observed in a single spectrum. Rather, there is one spectrum per footprint, with a resolution of 13.5 km, so each spectrum as in panel a in the figure above represents the IR radiation from an area just 13.5 km wide (unless explicitly stated to be a composite spectrum). That is, pjcarson's formula (if it were correct) would overestimate incoming radiation by a factor of 116 or so.
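The 77% figure can be checked in a few lines. This is a rough sketch only: the mean Earth radius of 6371 km is an assumption on my part, while the scan half-angle and orbit altitude are the values in the AIRS description quoted above. The variable names are illustrative.

```python
import math

R = 6371.0              # km, mean Earth radius (assumed, not from the AIRS text)
h = 705.3               # km, orbit altitude from the quoted AIRS description
scan_half_angle = 49.5  # degrees from nadir, from the quoted AIRS description

# Angle at the satellite between nadir and the limb (horizon) of the planet
limb_half_angle = math.degrees(math.asin(R / (R + h)))

# Share of the limb-to-limb angle actually covered by the cross-track scan
scan_fraction = scan_half_angle / limb_half_angle

print("limb half-angle (deg):", round(limb_half_angle, 1))
print("scan fraction:", round(scan_fraction, 2))
```

The script only covers the angular comparison; it does not attempt to reproduce the factor of 116, which depends on how the single-footprint area is compared with the full visible area.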

You can see this mismatch of pjcarson's concept of the resolution of the instrument and the actual resolution from some of the AIRS products:

The images on the right show a 13km resolution within a single scan path. Were pjcarson's model of how the instrument operated correct, there could be no resolution of details across the width of the scan path, and the width of the pass would be wider.

When confronted with this essential fact above, pjcarson responded that:

"The instrument doesn’t appear in the equation and so the results are independent of the sensor."

As he is attempting to explain properties of the measured results (ie, the actual IR spectrum), his claim that the results are independent of the sensor is absurd. Apparently, in his world, the mission planners could have greatly reduced the budget by simply leaving the instrument on the ground, given that the "measured results" are "independent of the sensor".

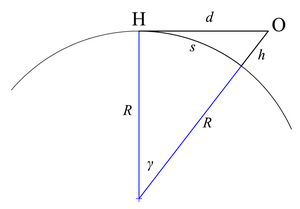

Pressing on, pjcarson then shows a diagram essentially similar to this one from Wikipedia:

The only significant differences are some difference in the labelling and the fact that he shows the reflection around the R,h axis. He then states:

" Then the calculated relative dip is the ratio ≈ (planet sector area exposed to satellite) / (satellite-centred sphere area) = 2* sin-1 (R/(R+h)) / 360".

sin^-1(R/(R+h)) is the inverse sine of the opposite (R) over the hypotenuse of the included angle for line segments d and h. It returns the value of that angle. Multiplying by 2 gives the included angle from limb to limb from the satellite. Dividing by 360 gives the ratio of that included angle to the number of degrees in a circle. At this point, pjcarson simply assumes that that ratio is also the ratio of area between the "planet sector area exposed to satellite" and the "satellite-centred sphere area". If the angles were dividing the sphere in a manner similar to the quarters of an orange, it would be. But the "planet sector area exposed to satellite" is not analogous to a quarter of an orange. Rather it is a spherical cap. Therefore the formula pjcarson should in fact be using if his premises were sound (though we know they are not), is the ratio of the area of a spherical cap of the earth for the included angle γ, relative to 4*pi*d^2 (ie, the area of the satellite centered sphere, with radius equal to the satellite to limb distance).

As it turns out, for a satellite altitude of 700 km, for the Earth, that ratio is 0.035. pjcarson, by using the wrong formula, has overestimated the value he purports to find by a factor of approximately 10.

That it was the wrong formula, that the principles used to invoke the formula were unsound, and that the formula even as conceived cannot explain the selective dip only at certain wavelengths, given that the formula contains no variable for wavelength, can all be seen at a glance by somebody who knows the topic. It takes rather longer to explain it to people who are less familiar, and it cannot be effectively explained to somebody who will not learn.

-

pjcarson2015 at 12:38 PM on 2 April 2016Heat from the Earth’s interior does not control climate

MA Rodger.

1. #38 You do a calculation to show how much lava is required to heat the whole ocean. OK, so why do you not also consider the same when dealing with the “Greenhouse” effect which you reckon is so much larger?

Simply, the whole of the oceans are NOT warmed. As it’s the air near the surface that’s measured (WMO), it is only necessary that the top of the oceans (and the land) are warmed to change measured Global Warming.

2. I started here with comment #48 concerning geothermal heat. I responded (#50) to Tom Curtis’ #49 comment about Greenhouse gases’ relative size, but later comments, until #57, were “moderated”.

However, I’m glad you took the time to derive the equation. Perhaps you can help Tom as he seems unable to do so?

Moderator Response:[DB] Please note that posting comments here at SkS is a privilege, not a right. This privilege can and will be rescinded if the posting individual continues to treat adherence to the Comments Policy as optional, rather than the mandatory condition of participating in this online forum.

Moderating this site is a tiresome chore, particularly when commentators repeatedly submit offensive, off-topic posts or intentionally misleading comments and graphics or simply make things up. We really appreciate people's cooperation in abiding by the Comments Policy, which is largely responsible for the quality of this site.

Finally, please understand that moderation policies are not open for discussion. If you find yourself incapable of abiding by this common set of rules that everyone else observes, then a change of venues is in the offing. Please take the time to review the policy and ensure future comments are in full compliance with it. Thanks for your understanding and compliance in this matter, as no further warnings shall be given.

-

Tom Curtis at 09:57 AM on 2 April 2016Dangerous global warming will happen sooner than thought – study

OPOF @21:

"International Policy is only words if effective means of enforcement do not exist (that would be effective methods of dismissing sovereignty when required, something you called totalitarian which was a rather gross misrepresentation of my position, but I did not choose to claim it was)."

Your specific words that I responded to were:

"Essentially, the International Community would have to be able to 'remove from power' any elected representatives in a nation like the USA (or leaders of businesses) who would claim that already fortunate people should still be allowed to benefit as much as they can from actvity that produces CO2 from the burning of fossil fuels (or be able to convince a bunch of voters who are easily tempted to be greedy or intolerant to change their minds and choose not to try to get away with getting what they want even though they probably could collectively get away with it, for a little while, perhaps long enough that they enjoy the undeserved benefits in 'their lifetime'.)."

I take it as obvious that a government that will not implement a specific policy will not agree to be removed from power by unconstitutional means so that that policy can be implemented. Ergo, any attempt to remove governments from power to implement anti-global warming policies will be resisted by force. For the only countries with large enough emissions for this to make a substantive difference, their armed forces are sufficiently large and advanced that only a sizable coalition of the world's major powers could hope to remove them from power by conventional force. Even then, all such countries are nuclear powers and there is every reason to believe they would resort to nuclear weapons rather than be forcibly removed from power.

Even if they could be removed from power, you would then need to sustain your government against a guaranteed hostile population. That in turn will require all of the mechanisms of a police state. Hence a totalitarian option.

You may not see the implications of what you suggested, but it does not mean we are blind to it.

At its best, you may have suggested a change to the international order such that the UN has the unvetoable constitutional power to remove such governments, where said governments have agreed to that power and altered their constitution so that it is permissible. We might just as well wish for the solution to the world's energy problems by the invention of a perpetual motion machine.

"And I return a question about your timelime. How is your timeline affected if the current cast of Republicans continue to control the House and Senate after the upcoming election? And what if the Republicans also win the Presidency? And worst of all, what if the Republican President is Ted Cruz (a known deliberate misrepresenter of information hoping to win more success for those who do not care about advancing humanity)? If you would claim such events would have little effect on your timeline then there really is nothing more to discuss, no other way for me to understand your position."

In that event, it would be put back, but given the increasing tendency of US states to go it alone, not by as much as you might think. However, the difference between 2 C and 2.5 C GMST that that might imply is not as great a harm as would be caused by war with the US, the only method of changing their government.

-

Tom Curtis at 09:38 AM on 2 April 2016Dangerous global warming will happen sooner than thought – study

nigelj @23, Naomi Klein may well want to implement the reforms in parallel, but she ties the reforms together. That serves only to increase resistance to the measures we can take against global warming now. It is counterproductive in the short term, and probably in the long term as well.

-

Tom Curtis at 09:35 AM on 2 April 2016Dangerous global warming will happen sooner than thought – study

chriskoz @28, on the more substantial issues.

First, I apologize if I was insufficiently clear. I do not accept a "free market with no negative externalities within the set of sovereign nations being the best available playground for sustainable enonomy". Rather, a 'free market' is an idealized but never realized condition that is defined by, among other conditions, the lack of negative externalities. It serves an analogous role in economic thought to that served by frictionless surfaces in physics. We know they can never actually exist, but it is often helpful to assume that they can.

In practice, a key role of government is to regulate the market so that it more closely approximates to a 'free market', including most especially for this discussion, regulations to prevent avoidable negative externalities and to price in unavoidable negative externalities with the income raised being used to mitigate the externality or being returned as a dividend to those affected by the externality. Importantly, it should do that with a light hand because one of the assumptions of a 'free market' is no transaction costs, and government intervention tends to increase transaction costs.

With regard to greenhouse gases, this means governments should implement a carbon price (carbon tax or emissions trading scheme) with the money received being returned as an (ideally) per capita dividend. Some other regulations may have a greater impact in reducing negative externalities than their impact on transaction costs, but the case is not obvious and will not be the same across all circumstances.

(As an aside, this also means the government should implement fees to cover the externalities on road transport, cigarette smoking, alcohol consumption, etc. If you have a socialized medical system, those externalities include the cost of that system.)

Of course, you are quite correct that such action does not go beyond national borders, while the externalities of fossil fuel use certainly do. That must be dealt with by negotiated agreements to, ideally, set up an international emissions trading scheme; but failing that as rigorous an agreement as can be negotiated. Such an agreement should ideally include enforcement measures such as tariffs applied to the goods of non-compliant nations (including those not in the agreement) to recover the cost of the externalities of those nations' GHG production.

Even without an international agreement, national governments should (IMO) consider imposing carbon taxes at a rate equivalent to the domestic carbon tax (or current mean cost of emissions permits) less the value of any carbon tax (or emissions permits) paid at the source nation. Unfortunately, the relative strength of economies creates a major problem with this, as potentially does the General Agreement on Tariffs and Trade and in particular the enforcement provisions under the WTO.

However, what is not acceptable is the attempt to impose such an agreement by force. Still less acceptable is any thought of replacing governments of foreign nations with ones more amenable to the agreement, which can only be done by force. These options are unacceptable simply based on transaction cost considerations; but far more importantly, they are unacceptable violations of democratic principles except where the foreign government is so undemocratic that it ought to be replaced on those grounds alone. In the latter case, the replacement of the foreign government should have as its objective democratic government, and should not impose policy positions on that government, including regarding global warming.

I will note that an agreed international ETS or Carbon Tax does not restrict sovereignty because it is mutually agreed by sovereign governments. Nor does an ETS or Carbon Tax imposed at point of entry, as it applies only to goods within sovereign borders.

I will also note that restricting ourselves to negotiated agreements (and acts only carried out within sovereign borders) may not be as efficient, to one way of looking at things, as imposition of appropriate policy by force (as suggested by OPOF). That, however, is only the case if we only consider the costs of global warming.

-

MA Rodger at 09:30 AM on 2 April 2016Heat from the Earth’s interior does not control climate

pjcarson @60/61.

So you tell me @60 that if an equation happens to give the hoped-for answer, then the equation can be considered to be correct. Are you serious? (I'm not sure here how the IPCC fit into the issue. Do they use a different equation? Or is it that they obtain a different answer, one you disagree with?)

And if that is not enough to provide a measure of the lunacy you spout, do note that if, as you argue, all the IR that leaves the surface of a planet emerges after a few adventures at the top of the atmosphere and then shoots off into space; if this were true, how can CO2 (or for that matter, any other gas in the atmosphere) be acting as a greenhouse gas? The very notion that GHGs can operate without creating back-radiation is pure thermodynamical nonsense!!

And you may be glad to learn that a quick back-of-fag-packet calculation of your geometric explanation for a change in 'fantasy-total-globe' satellite radiation measurement due to different heights of emission suggests the % of the Earth visible from the satellite drops by 1.5% for an increase of 10km in height (~65K temperature drop) but due to the inverse square law having twice the opposite effect, the radiation measurement at the satellite would actually rise with an increase in height. So you also supply a goodly quantity of geometrical nonsense.

(Note - I would attempt to find a more appropriate thread for this comment but the scope of argument being addressed defies easy categorisation.)

-

pjcarson2015 at 08:48 AM on 2 April 2016Heat from the Earth’s interior does not control climate

MA Rodger.

1. #38 You do a calculation to show how much lava is required to heat the whole ocean. OK, so why do you not also consider the same when dealing with the “Greenhouse” effect which you reckon is so much larger?

Simply, the whole of the oceans are NOT warmed. As it’s the air near the surface that’s measured (WMO), it is only necessary that the top of the oceans (and the land) are warmed to change measured Global Warming.

2. I started here with comment #48 concerning geothermal heat. I responded (#50) to Tom Curtis’ #49 comment about Greenhouse gases’ relative size, but later comments, until #57, were “moderated”.

However, I’m glad you took the time to derive the equation. Perhaps you can help Tom as he seems unable to do so?

Moderator Response:[DB] Inflammatory snipped.

-

Tom Curtis at 08:46 AM on 2 April 2016A methane mystery: Scientists probe unanswered questions about methane and climate change

RedBaron @26:

"That would take additional studies of course. However since a very high % of intensively managed cropland is used to produce food for livestock, and that change in management to a forage based system was the change in management showing the highest increase in methanotroph numbers and activity, it leands me to believe all that is needed in confirmation studies."

From the final study to which you linked, and partly quoted by you above:

"Soil from a calcareous site (pH 7.4) under deciduous woodland (Broadbalk Wilderness wooded section) oxidized CH4 6 times faster than the arable plot (pH 7.8) with the highest activity in the adjacent Broadbalk Wheat Experiment (with uptake rates of −80 and −13 nl CH4 l−1 h−1, respectively). The CH4 uptake rate was only 20% of that in the woodland in an adjacent area that had been uncultivated for the same period but kept as rough grassland by the annual removal of trees and shrubs and, since 1960, grazed during the summer by sheep. It is suggested that the continuous input of urea through animal excreta was mainly responsible for this difference."

Doing the maths, the CH4 uptake in the woodland is 6 times that on the arable plot, and 5 times that in the grazed grassland. It follows that the CH4 uptake in the grazed grassland is 1.2 times that in the arable plot. In other words, switching from cropping to grazing does not result in the 400% increase in methane uptake you desire, but only a 20% increase.
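The arithmetic can be laid out explicitly. The sketch below uses only the figures in the quoted abstract (woodland uptake 6 times the arable plot, grassland uptake 20% of woodland, and the two quoted rates of 80 and 13); the variable names are mine and purely illustrative.

```python
# Ratios taken from the quoted abstract
woodland_vs_arable = 6.0       # woodland oxidized CH4 6 times faster than the arable plot
grassland_vs_woodland = 0.20   # grassland uptake was only 20% of that in the woodland

# Grassland relative to arable follows by multiplying the two ratios
grassland_vs_arable = woodland_vs_arable * grassland_vs_woodland
increase_percent = (grassland_vs_arable - 1) * 100

# Cross-check against the quoted uptake magnitudes (80 for woodland, 13 for arable)
woodland_rate = 80.0
arable_rate = 13.0
grassland_rate = grassland_vs_woodland * woodland_rate
rate_based_ratio = grassland_rate / arable_rate

print("grassland vs arable (from ratios):", round(grassland_vs_arable, 2))
print("increase over arable (%):", round(increase_percent, 1))
print("grassland vs arable (from quoted rates):", round(rate_based_ratio, 2))
```

Both routes give a grassland uptake only modestly above the arable plot, nowhere near a fivefold increase; the rate-based figure differs slightly because 80/13 is a little more than 6.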

Meanwhile your second last link indicates that increasing soil carbon content (which increases water retention) will increase methane production, as will increasing water content (as by irrigating improved pasture), or fertilizing. Ergo, increasing net methane oxidization in soil is at the expense of increasing CO2 emissions from LUC; while intensive pasturing which does increase soil organic content (just not enough to reverse the atmospheric effects of the industrial revolution) will increase methane generation, and therefore decrease net methane oxidation in soils.

Your linked studies do not support the idea that methane oxidation can be increased by a factor of 5 in agricultural land, let alone in all upland soils by any definition.

-

pjcarson2015 at 06:57 AM on 2 April 2016Heat from the Earth’s interior does not control climate

#59 MA Rodger.

1. If my simple equation is incorrect, why does it give the correct answer!? [The equation is simple, isn't it!] It does show why I regard IPCC's IR badly.

2. Please give quantitative answers to show my lunacy.

3. No ad hominem! It only degrades its user.

Moderator Response:[DB] Argumentative, baiting and inflammatory snipped.

-

TomR at 05:15 AM on 2 April 2016Why is 2016 smashing heat records?

According to the University of Maine's daily global temperatures on the "Climate Reanalyzer" website, March has definitely blown away February's record as the hottest month in modern Earth history. About 93% of global warming goes into the ocean, which is why El Nino years are tending to get hotter as the oceans give back some of that warmth.

There is some evidence that this El Nino might not totally go away but rather make a small comeback after September. This makes it very likely that 2016 will be even warmer than 2015, as projected several months ago by the UK Met. All major global warming gases are at record levels and are still increasing.

Many world governments are still not being honest about their emissions. The IPCC only used a Transient Climate Sensitivity of 1.8C instead of the more scientific estimate of 3.0C+. The Arctic is already starting to release large amounts of methane 70 years ahead of schedule. Arctic sea ice is at a record low and looks very likely to set a record low maximum this year.

We need to do all we can to reduce the scale of the coming catastrophe. It's going to be huge and sooner than you think. Ban new fossil fuel vehicles, new fossil fuel power plants, cattle except in zoos, deforestation for any reason. Pass a global carbon tax of at least $200/ton of CO2 with money returned to the people and to help finance the shift to renewable energy. We are in for a hell of a ride. Billions are certain to die this century.

-

RedBaron at 04:45 AM on 2 April 2016A methane mystery: Scientists probe unanswered questions about methane and climate change

Tom,

No, you misunderstand. I am not talking about increasing the upland soils so that they cover 5 times their current area. I am talking about changing the agricultural practices to those that increase the effectiveness of the methane sink by 5X or more. That's why I provided those additional studies and quotes from them. Of all the CH4 sources and sinks, the biotic sink strength is the most responsive to variation in human management, and the examples I showed prove that increases of 5X or more are at least possible. Although I am fully aware that one published study is not vetted enough to proclaim it is necessarily possible on all oxic cropland. That would take additional studies of course. However since a very high % of intensively managed cropland is used to produce food for livestock, and that change in management to a forage based system was the change in management showing the highest increase in methanotroph numbers and activity, it leads me to believe all that is needed is confirmation studies. The principle itself seems sound.

BTW we are also using two different definitions of "upland soils". The definition I am using (and that of the citation I provided) is land above the level where water flows (i.e., well-drained, oxic soils), to differentiate from swamp, delta or paddy soils. That means most agricultural land excepting rice production.

-

PluviAL at 02:52 AM on 2 April 2016Global food production threatens to overwhelm efforts to combat climate change

RedBaron, Awesome. I am not familiar with your figures, but sense this is true from my house vegetable gardening, and from my studies for other solutions. We can do it, we must be a positive contributor to a healthy ecosystem, and we will be one with nature soon.

Imagine walking through San Diego Zoo, and that this is the way the whole world is, but instead of enclosures to keep nature in, we live in exclosures, to keep civilization in designed balance with nature. Yes we can!

-

KR at 02:49 AM on 2 April 2016Dangerous global warming will happen sooner than thought – study

Tom Curtis - I had considered the 'prisoner's dilemma' as an alternative term myself, but that has the implication that all actors in the decision are fully aware of the tradeoffs and potential costs of their actions. That's not the case in the situation of unpaid external costs from fossil fuels, or even in many cases industrial pollution.

An interesting discussion of semantics - now, back to the original discussion...

-

MA Rodger at 01:09 AM on 2 April 2016Heat from the Earth’s interior does not control climate

Tom Curtis @58.

The link to pjcarson's 'grand work' has been moderated away and it should be reinstated to allow understanding of this interchange.

I do sympathise with you in trying to correct his thesis. And we are off topic on this thread, but where do you start on something so heavily loaded with nonsensical assertions? For instance, that equation he presents is simply the diameter in degrees of the planetary disc visible from the satellite divided by 360 degrees. It is obviously wrongly applied but his description of what he thinks it represents is simply nonsensical.

I think myself I would make a start at unravelling his lunacy at the beginning by asking for clarification of his opening statement:-

"IR properties have been somewhat neglected, eg in the thousands of pages churned out by IPCC, very few refer to IR – and even these are of dubious scientific character."

I am not familiar with any parts of IPCC ARs that could be described as being "of dubious scientific character." I consider it quite outrageous for someone to make such a statement entirely unsupported.

-

Tom Curtis at 23:39 PM on 1 April 2016Heat from the Earth’s interior does not control climate

pjcarson @57, I was in no way intrigued by your 'analysis'. That sort of flat earther level denialism is a dime a dozen. Because it is premised on not understanding the science it criticizes, its criticisms cannot be interesting. I made the comment only because I thought my 8 minute comment meant I owed you that much. So, I have delivered. You have responded with flat denial and more nonsense. So, you won't learn, hence there is no point in my trying to teach you. End of conversation.

(If any readers want more details on why pjcarson's website is nonsense, I will be happy to provide them on a more appropriate thread, but he himself at this stage can only escape the charge of dishonesty by pleading idiocy, given his response. Horse, water, etc.)

-

Tom Curtis at 23:33 PM on 1 April 2016Dangerous global warming will happen sooner than thought – study

chriskoz @38, as noted in my original comment, I was just flagging my dislike of the Hardin sense of the phrase. I did not think I was making a substantial point on the issues being discussed before my comment. I think flagging my dislike and the reasons is useful because sometimes terms are designed to set us up to accept certain positions without due scrutiny. "Tragedy of the commons" in Hardin's sense and use was certainly designed with that purpose. Noting the Orwellian nature of that term pulls its rhetorical teeth.

-

michael sweet at 23:32 PM on 1 April 2016Global food production threatens to overwhelm efforts to combat climate change

Chriskoz,

I think you have misunderstood the figure. It accounts for all the emitted CO2. As you know, the atmospheric CO2 is increasing (3 ppm last year); that is a sink. Since about half the emitted CO2 stays in the atmosphere, it is the largest sink.

-

Tom Curtis at 23:29 PM on 1 April 2016Global food production threatens to overwhelm efforts to combat climate change

chriskoz @2, it may be better to think of it as a partition. The total value of fossil fuels plus net LUC should equal the total value of atmospheric growth, land sink and ocean sink, with the relative ratios showing how the increased CO2 in the total reservoir is partitioned. Atmospheric growth is then just the growth in atmospheric CO2 concentration.
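The partition can be sketched as simple bookkeeping. The snippet below is only an illustration: it uses the rough magnitudes quoted elsewhere in this thread (atmospheric growth of about 5 PgC per year, and about half of emitted CO2 staying in the atmosphere), and the variable names are hypothetical. It does not attempt to split the remainder between land and ocean, since no such split is given here.

```python
def combined_land_ocean_sink(total_emissions: float, atmospheric_growth: float) -> float:
    """Budget identity: sources = atmospheric growth + land sink + ocean sink,
    so the combined land and ocean sink is what remains after atmospheric growth."""
    return total_emissions - atmospheric_growth


# Rough magnitudes taken from comments in this thread (PgC per year).
atmospheric_growth = 5.0   # "ca -5" on the figure, shown there as a negative bar
airborne_fraction = 0.5    # "about half the emitted CO2 stays in the atmosphere"

# Total sources (fossil fuels plus net land use change) implied by those two numbers.
total_emissions = atmospheric_growth / airborne_fraction
land_plus_ocean = combined_land_ocean_sink(total_emissions, atmospheric_growth)

print(f"total sources:        {total_emissions:.1f} PgC/yr")
print(f"atmospheric growth:   {atmospheric_growth:.1f} PgC/yr")
print(f"land + ocean sinks:   {land_plus_ocean:.1f} PgC/yr")
```

Seen this way, "atmospheric growth" is simply the share of the emitted carbon that ends up in the atmosphere rather than in the land or ocean reservoirs.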

-

Tom Curtis at 23:26 PM on 1 April 2016A methane mystery: Scientists probe unanswered questions about methane and climate change

RedBaron @24, yes, all we need to do is increase the upland soils (dark blue on the map below) so that they cover 5 times their current area:

(Source)

How could there be any problem with that at all? /sarc

And please note that in the US, nearly all cropland is in lowland (ie, net methanogenic) soils, while in the rest of the world for the most part (by area), crop intensity is very low. The exceptions are in countries with very high population densities where a loss of cropland would result in a severe risk of famine.

-

chriskoz at 20:04 PM on 1 April 2016Global food production threatens to overwhelm efforts to combat climate change

Unexplained on the figure is the huge CO2 sink (ca. -5 PgC/y) called "Atmospheric Growth". What is it??? I don't recall seeing such a sink on any carbon cycle picture in any scientific publication. Is it some mistake/misnomer? A sink (however mysterious its origin) should not be called "Growth"...

-

RedBaron at 19:55 PM on 1 April 2016A methane mystery: Scientists probe unanswered questions about methane and climate change

Tom,

Let's try and discuss the relevant parts only please.

I happen to agree with this part of your statement, right up until your conclusion:

"3) In fact, with regard to upland soils Conrad et al cite Holmes et al (1999), who conclude that globally, acidic soils are a net sink of 20-60 million tonnes of CH4 annually. Even at 60 million tonnes per annum, that is substantially less than CH4 emissions from cattle. That, of itself, makes it unlikely that the complete biome (upland grasslands plus grazers) is a net CH4 sink, and if it is, it is certainly a small one. More importantly it means that even without grazers, the loss of grassland cannot account for even a very small fraction of total anthropogenic emissions (just short of 500 million tonnes per annum in 2012)."

How is it that we can look at exactly the same evidence and draw the exact opposite conclusion? I agree that now the cropland that supports animal husbandry is not nearly large enough to offset emissions. It functions quite differently with regard to methanotroph activity. So why would citing that the sink is only 20-60 million tonnes of CH4 annually somehow refute what I am saying? That's actually my point. Increase that sink by 5x or more and you get 100-300 million tonnes of CH4 annually. That actually puts a big dent in the just short of 500 million tonnes per annum total anthropogenic emissions from all sources. By the time you add in abiotic oxidation and improvements in rice production, it appears as if we might be able to remove the "mystery CH4" in the OP, as well as actually reducing atmospheric CH4.
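The arithmetic behind that claim can be checked in a few lines. This is only a sketch of the scaling, using the figures quoted above (a 20-60 million tonne sink and roughly 500 million tonnes of anthropogenic emissions); the 5x factor is the assumption being argued about, not an established result, and the variable names are hypothetical.

```python
# Figures quoted in the discussion above (million tonnes of CH4 per year).
SINK_RANGE_MT = (20.0, 60.0)        # current upland soil sink, low and high estimates
ANTHROPOGENIC_EMISSIONS_MT = 500.0  # "just short of 500" in 2012, rounded up here

# Assumed scale-up of the soil sink; this is the contested premise.
SCALE_FACTOR = 5.0

scaled_sink = tuple(SCALE_FACTOR * s for s in SINK_RANGE_MT)
share_of_emissions = tuple(s / ANTHROPOGENIC_EMISSIONS_MT for s in scaled_sink)

print(f"scaled sink: {scaled_sink[0]:.0f}-{scaled_sink[1]:.0f} million tonnes CH4 per year")
print(f"share of anthropogenic emissions: {share_of_emissions[0]:.0%}-{share_of_emissions[1]:.0%}")
```

The calculation says nothing about whether a fivefold increase in the sink is achievable; it only shows what such an increase would amount to relative to the emissions figure.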

-

chriskoz at 19:10 PM on 1 April 2016Dangerous global warming will happen sooner than thought – study

Tom Curtis@30,

Yes, the term "tragedy of the commons" can be ambiguous. But @28 I use the term in Hardin's sense. It's obvious in the context of the topic at hand, as KR@31 & yourself@32 confirmed.

Therefore, I don't understand why you're trying to explain the other, Orwellian sense of it, a discussion that goes off the topic here, which is rapidly increasing CO2 emissions and the difficulty of stopping them. As you did not provide any reason for your Orwellian deliberations about TOC ambiguity, I consider your comment off topic trolling, unless you clarify it.

-

bozzza at 18:48 PM on 1 April 2016Dangerous global warming will happen sooner than thought – study

Of course, Dcrickett, I hope you are feeling well!

-

bozzza at 18:47 PM on 1 April 2016Dangerous global warming will happen sooner than thought – study

@ 34,

You just seemed to be having a mindless dig at so-called 'socialism' without realising all 'legitimate' markets are governed. Year 11 economics starts with this as truth.

You can say everyone prefers market forces but they are just as imaginary as the fabled invisible hand! Ronald Reagan is famous for saying, "If it moves tax it; if it keeps moving regulate it; if it stops moving subsidise it!"

-

bozzza at 18:36 PM on 1 April 2016Why is 2016 smashing heat records?

Simon,

Is Argo confirming any trends?

-

Simon Johnson at 17:53 PM on 1 April 2016Why is 2016 smashing heat records?

Jim Hansen has said that the best measure of the Earth's energy budget is the heat content of the oceans. That has been measured for about a decade by the Argo 'floats/buoys' project.

See http://skepticalscience.com/Ocean-Warming-has-been-Greatly-Underestimated.html

See http://www.skepticalscience.com/cooling-oceans.htm and

http://www.skepticalscience.com/measuring-ocean-heating-key-to-track-gw.html and

http://www.skepticalscience.com/graphics.php?g=12

-

pjcarson2015 at 17:34 PM on 1 April 2016Heat from the Earth’s interior does not control climate

Tom. I’m glad you are intrigued. You write, you read my site casually. Consequently you haven’t got anything correct. Try to read it without bias; it’s simply a scientific investigation, not a manifesto. It’s there to be corrected if it’s wrong, but you haven’t done so.

1. No

2. No

3. No. I don’t assume anything about the AIRS instrumentation. You’ve misread again. My diagram actually shows the section of Earth’s surface that is able to direct energy to the satellite. The instrument doesn’t appear in the equation and so the results are independent of the sensor.

4. The equation is independent of wavelength. Apart from the equation itself, you really should have tweaked IF you really did read my Conclusions …

“ALL satellite spectra will need to be adjusted with regard to their measured magnitudes”.

Your “Without further need to check the maths” suggests you haven’t been able to work out my equation. Although it’s simple, it does require a little trick – changing one’s perspective from Earth’s (first), then to what the satellite sees. Take it as an exercise.

Anyway, if it’s wrong, how does it get the correct answer?

5. Try not to make “ad hominem” remarks. You have not shown any errors at all.

To cap it all off, I’m trying to add another section which explains much more simply why the Greenhouse Effect is minuscule.

[By trying, I mean working with Wordpress can often be difficult! I’ll get there.]

However, its essence is

“All the above is true and accepted by all, but embarrassingly, what has been forgotten is that radiation is but one method of transferring energy, the other two being conduction and convection, and it is principally using these processes that Earth heats its atmosphere.”

You say you left a message. My site hasn’t registered any comments from you.

Moderator Response:[DB] Inflammatory and argumentative snipped.

-

Tom Curtis at 14:54 PM on 1 April 2016Heat from the Earth’s interior does not control climate

For whatever it is worth, I have just posted this comment at pjcarson's website:

"pjcarson:

A casual read of your chapter 1 plus annex reveals:

1) That you have taken purported evidence that CO2 emissions of IR to space come from high in the troposphere (an essential feature of the theory of the greenhouse effect, see https://www.skepticalscience.com/basics_one.html) as a disproof of that theory, thereby showing you do not understand even the basics of the theory you purport to disprove;

2) That you misread Pierrehumbert (2011)'s explanation of the maths of line by line radiation models as itself an explanation of the greenhouse effect (it is not), thereby showing you have misunderstood even basic level explanations of the evidence;

3) That you assume in your equation that the AIRS instrument scans the entire visible globe in each frame, whereas it in fact scans an area 1600 km wide, thereby destroying the logical justification of your equation (see http://airs.jpl.nasa.gov/mission_and_instrument/instrument);

4) That your equation contains no variable for the wavelength or frequency of light, thereby applying equally to all wavelengths. From that it follows that it cannot explain changes in the brightness temperatures at specific wavelengths as is observed in the satellite instrument. Without further need to check the maths, this is sufficient to show your equation does not explain what you claim it to explain.

5) These very basic errors, discoverable with a very superficial reading, show that you do not bother with basic fact checking, and are out of your depth in logical analysis. That gives sufficient reason to check no further.

From this, an 8 minute turn around on your submission merely shows the sub-editor had a basic knowledge of climate science, and therefore sufficient knowledge to reject the paper on such a superficial reading."

-

Tom Curtis at 14:36 PM on 1 April 2016A methane mystery: Scientists probe unanswered questions about methane and climate change

RedBaron @22, what I clearly refuted was the claim that largescale return to intensive grazing will solve greenhouse gas problems into the future. Your claims about methane above are of the same nature. However, seeing you challenge the point:

1) You say "Extraordinary claims require extraordinary evidences", but provide no significant evidence. A list of unexplained links coupled to out of context quotes is not evidence. It is a smokescreen. You need to explain what the papers (and blogs) show in detail and how they support your views.

2) You claim @18 that "According to the following studies those biomes actually reduce atmospheric methane" but Conrad et al (1996) studies only the trace gas emissions and sinks of soils. It is not a study of the entire biome, which of course includes the animals on them. Ergo, your extraordinary evidence consists of exaggeration. (This is no surprise as the papers are available to the IPCC, who take them into account. It follows that if you come to significantly different conclusions to the IPCC from those papers, you are likely misreading or exaggerating them.)

3) In fact, with regard to upland soils Conrad et al cite Holmes et al (1999), who conclude that globally, acidic soils are a net sink of 20-60 million tonnes of CH4 annually. Even at 60 million tonnes per annum, that is substantially less than CH4 emissions from cattle. That, of itself, makes it unlikely that the complete biome (upland grasslands plus grazers) is a net CH4 sink, and if it is, it is certainly a small one. More importantly it means that even without grazers, the loss of grassland cannot account for even a very small fraction of total anthropogenic emissions (just short of 500 million tonnes per annum in 2012).

These arguments just echo in form the arguments with regard to CO2 that refuted your positions on that gas. There is no substantial difference between the cases, and no reason to consider your claims of any more interest with regard to CH4 than there was with CO2 unless you actually unpack all the numbers, including peer reviewed estimates of loss of upland grassland to horticulture, CH4 sink per km^2 for grassland relative to CH4 emissions per km^2 for grazing cattle at expected herd densities, etc.
