Recent Comments

Comments 25851 to 25900:

-

Simon Johnson at 17:53 PM on 1 April 2016

Why is 2016 smashing heat records?

Jim Hansen has said that the best measure of the Earth's energy budget is the heat content of the oceans. That has been measured for about a decade by the Argo 'floats/buoys' project.

See http://skepticalscience.com/Ocean-Warming-has-been-Greatly-Underestimated.html

See http://www.skepticalscience.com/cooling-oceans.htm and

http://www.skepticalscience.com/measuring-ocean-heating-key-to-track-gw.html and

http://www.skepticalscience.com/graphics.php?g=12

-

pjcarson2015 at 17:34 PM on 1 April 2016

Heat from the Earth’s interior does not control climate

Tom. I’m glad you are intrigued. You write, you read my site casually. Consequently you haven’t got anything correct. Try to read it without bias; it’s simply a scientific investigation, not a manifesto. It’s there to be corrected if it’s wrong, but you haven’t done so.

1. No

2. No

3. No. I don’t assume anything about the AIRS instrumentation. You’ve misread again. My diagram actually shows the section of Earth’s surface that is able to direct energy to the satellite. The instrument doesn’t appear in the equation and so the results are independent of the sensor.

4. The equation is independent of wavelength. Apart from the equation itself, you really should have tweaked IF you really did read my Conclusions …

“ ALL satellite spectra will need to be adjusted with regard to their measured magnitudes” .

Your “Without further need to check the maths” suggests you haven’t been able to work out my equation. Although it’s simple, it does require a little trick – changing one’s perspective from Earth’s (first), then to what the satellite sees. Take it as an exercise.

Anyway, if it’s wrong, how does it get the correct answer?

5. Try not to make “ad hominem” remarks. You have not shown any errors at all.

To cap it all off, I’m trying to add another section which explains much more simply why the Greenhouse Effect is minuscule.

[By trying, I mean working with Wordpress can often be difficult! I’ll get there.]

However, its essence is

“All the above is true and accepted by all, but embarrassingly, what has been forgotten is that radiation is but one method of transferring energy, the other two being conduction and convection, and it is principally using these processes that Earth heats its atmosphere.”

You say you left a message. My site hasn’t registered any comments from you.

Moderator Response:[DB] Inflammatory and argumentative snipped.

-

Tom Curtis at 14:54 PM on 1 April 2016

Heat from the Earth’s interior does not control climate

For whatever it is worth, I have just posted this comment at pjcarson's website:

"pjcarson:

A casual read of your chapter 1 plus annex reveals:

1) That you have taken purported evidence that CO2 emissions of IR to space come from high in the troposphere (an essential feature of the theory of the greenhouse effect, see https://www.skepticalscience.com/basics_one.html) as a disproof of that theory, thereby showing you do not understand even the basics of the theory you purport to disprove;

2) That you misread Pierrehumbert (2011)'s explanation of the maths of line by line radiation models as itself an explanation of the greenhouse effect (it is not), thereby showing you have misunderstood even basic level explanations of the evidence;

3) That you assume in your equation that the AIRS instrument scans the entire visible globe in each frame, whereas it in fact scans an area 1600 km wide, thereby destroying the logical justification of your equation (see http://airs.jpl.nasa.gov/mission_and_instrument/instrument);

4) That your equation contains no variable for the wavelength or frequency of light, thereby applying equally to all wavelengths. From that it follows that it cannot explain changes in the brightness temperatures at specific wavelengths as is observed in the satellite instrument. Without further need to check the maths, this is sufficient to show your equation does not explain what you claim it to explain.

5) These very basic errors, discoverable with a very superficial reading, show that you do not bother with basic fact checking, and are out of your depth in logical analysis. That gives sufficient reason to check no further.

From this, an 8 minute turn around on your submission merely shows the sub-editor had a basic knowledge of climate science, and therefore sufficient knowledge to reject the paper on such a superficial reading."

-

Tom Curtis at 14:36 PM on 1 April 2016

A methane mystery: Scientists probe unanswered questions about methane and climate change

RedBaron @22, what I clearly refuted was the claim that a large-scale return to intensive grazing will solve greenhouse gas problems into the future. Your claims about methane above are of the same nature. However, seeing you challenge the point:

1) You say "Extraordinary claims require extraordinary evidences", but provide no significant evidence. A list of unexplained links coupled to out of context quotes is not evidence. It is a smokescreen. You need to explain what the papers (and blogs) show in detail and how they support your views.

2) You claim @18 that "According to the following studies those biomes actually reduce atmospheric methane" but Conrad et al (1996) studies only the trace gas emissions and sinks of soils. It is not a study of the entire biome, which of course includes the animals on them. Ergo, your extraordinary evidence consists of exaggeration. (This is no surprise, as the papers are available to the IPCC, who take them into account. It follows that if you come to significantly different conclusions to the IPCC from those papers, you are likely misreading or exaggerating them.)

3) In fact, with regard to upland soils Conrad et al cite Holmes et al (1999), who conclude that globally, acidic soils are a net sink of 20-60 million tonnes of CH4 annually. Even at 60 million tonnes per annum, that is substantially less than CH4 emissions from cattle. That, of itself, makes it unlikely that the complete biome (upland grasslands plus grazers) is a net CH4 sink, and if it is, it is certainly a small one. More importantly it means that even without grazers, the loss of grassland cannot account for even a very small fraction of total anthropogenic emissions (just short of 500 million tonnes per annum in 2012).
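As a rough check on the scale argument in point 3, the short Python sketch below compares the quoted soil sink range with the quoted anthropogenic emissions. It uses only the two figures given in the comment; rounding "just short of 500" up to 500 is an assumption made here for illustration, and the variable and function names are hypothetical.

```python
# Figures as quoted in the comment, in million tonnes of CH4 per year.
SOIL_SINK_RANGE_MT = (20.0, 60.0)    # net sink of acidic soils, as attributed to Holmes et al (1999)
ANTHROPOGENIC_EMISSIONS_MT = 500.0   # "just short of 500" in 2012; rounded up here (assumption)


def sink_share(sink_mt, emissions_mt):
    """Return the sink expressed as a fraction of emissions."""
    return sink_mt / emissions_mt


low, high = (sink_share(s, ANTHROPOGENIC_EMISSIONS_MT) for s in SOIL_SINK_RANGE_MT)
print(f"Soil sink is roughly {low:.0%} to {high:.0%} of anthropogenic CH4 emissions")
```

Because the emissions figure is rounded up, the true shares would be slightly higher than printed. The point of the comparison is only that the whole soil sink is a modest fraction of emissions, so the loss of part of that sink is smaller still.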

These arguments just echo in form the arguments with regard to CO2 that refuted your positions on that gas. There is no substantial difference between the cases, and no reason to consider your claims of any more interest with regard to CH4 than there was with CO2 unless you actually unpack all the numbers, including peer reviewed estimates of loss of upland grassland to horticulture, CH4 sink per km^2 for grassland relative to CH4 emissions per km^2 for grazing cattle at expected herd densities, etc.

-

RedBaron at 12:57 PM on 1 April 2016

A methane mystery: Scientists probe unanswered questions about methane and climate change

Rebuttal of Ruddiman is not exactly the same as actually taking a minority view of methanotrophs and claiming you refuted their function in controlling atmospheric gases, is it? Do you honestly believe anything you posted on that other thread refutes the work of Dr Ralf Conrad? Really? Extraordinary claims require extraordinary evidences. You provided none at all regarding methanotrophs. Your post of biomass that includes human biomass has little relevance regarding herbivores existing on a grassland/grazer biome either. Except as the prairie was plowed at about the same time as the industrial revolution, the curve could support either hypothesis.

-

tcflood at 12:33 PM on 1 April 2016

2016 SkS Weekly News Roundup #13

The news article "Peru Tries to Adapt to Dangerous Levels of UV Radiation Brought on by Climate Change" by Lucas Iberico Lozada, Newsweek, Mar 15, 2016, in the Sunday, March 20 list badly confuses climate change with depletion of the ozone layer. This could be very confusing to those who don't understand the difference.

-

Tom Curtis at 10:30 AM on 1 April 2016

A methane mystery: Scientists probe unanswered questions about methane and climate change

RedBaron @20, I was merely pointing out that your claim about megafauna was false. I am not interested in rediscussing your other views, which have already been sufficiently refuted here.

-

Tom Curtis at 09:09 AM on 1 April 2016

Dangerous global warming will happen sooner than thought – study

KR @33, the term 'prisoner's dilemma' is the general term for such situations in the discipline that actually studies strategies for dealing with them.

-

Dcrickett at 08:01 AM on 1 April 2016

Dangerous global warming will happen sooner than thought – study

@29 Bozzza — I see no conflict in what I wrote. Maybe I am merely in a drug-induced stupor; I had major surgery. But I would be glad to attempt an explanation, if you would explain what you see as conflict.

What I do see as not entirely clear is my point that there is little time for taking proper and adequate action to address the CO₂ crisis. I fear the point of no return (more like a span of time than a point in time, but the phrase stands) could pass before the paradigm of inaction gives way. Maybe Ragnarök would be a better analogy than updated French Revolution. Either way, not a good time to be a one percenter. Or a human of any kind.

-

KR at 04:32 AM on 1 April 2016

Dangerous global warming will happen sooner than thought – study

Tom Curtis - Do you have a preferred alternative term for the tendency of individual self-interests to drive actions contrary to the common good? Such as in pollution of the air, water, etc, the externalities that are currently not accounted for in fossil fuel prices?

-

RedBaron at 02:56 AM on 1 April 2016

Global food production threatens to overwhelm efforts to combat climate change

There is just one tiny point I disagree with slightly. You said, "The greenhouse gas footprint of food is growing while the role of the food system in climate mitigation is not receiving the attention that it urgently needs." In my opinion food production has the attention. It even has well vetted solutions that are simply huge due to the very size of agriculture worldwide. BUT the problem is that there is tremendous backlash and opposition to actually making those changes. Almost insanely high levels of backlash.

This should help a whole lot.

The System of Rice Intensification (SRI)… … is climate-smart rice production

This also has great promise, although not quite as vetted.

Pasture Cropping: A Regenerative Solution from Down Under

And of course one thing that keeps popping up in agriculture all over everywhere one looks:

Holistic management (agriculture)

One reason I believe the resistance is so huge for these changes is the results are so huge and so beneficial it literally is embarrassing to those who implemented their destructive agricultural practices we have now. For example take a look at the results from pasture cropping.

"Jones calculates that 171 tons of CO2 per hectare has been sequestered to a depth of half a meter on Winona."

Any climate scientist who starts plugging numbers like that into worldwide agricultural acreage gets numbers so huge that they proclaim it is just too good to be true.

171 tons CO2 per hectare × 224.2 million hectares = over 38,000 million tons CO2 sequestered just by changing one crop, wheat, to regenerative agriculture. Start applying similar practices to all agriculture and the numbers get ridiculously huge. People just can't accept numbers so huge. They refuse to believe it really is as simple as restoring our soils to fertility.
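For anyone who wants to check the multiplication above, here is a minimal Python sketch. It assumes, as the comment does, that the single per-hectare figure quoted for Winona applies uniformly to the whole wheat area; that is an extrapolation from one farm, not something the sketch tests. The names are hypothetical.

```python
# Inputs exactly as quoted in the comment above.
CO2_PER_HECTARE_T = 171.0   # tons of CO2 per hectare, the cumulative figure quoted for Winona
WHEAT_AREA_MHA = 224.2      # million hectares, the wheat area used in the comment


def total_sequestered_mt(tons_per_ha, area_mha):
    """tons/ha multiplied by million ha gives million tons."""
    return tons_per_ha * area_mha


total = total_sequestered_mt(CO2_PER_HECTARE_T, WHEAT_AREA_MHA)
print(f"{total:,.1f} million tons CO2")  # prints 38,338.2 million tons CO2
```

The arithmetic matches the "over 38,000 million tons" in the comment. Note that the quoted 171 tons is a total accumulated to half a meter of depth, so the result is a one-off total under the uniformity assumption, not an annual rate.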

"I am an organic farmer. I am not afraid of change, I am the change."

-

PhilippeChantreau at 02:41 AM on 1 April 2016

Global Warming Basics: What Has Changed?

I think Tom Curtis has it right. Billev has been provided with more reading than he even had time to do before responding with again the same objections, indicating that he has either not attempted to understand the material, failed to do so, or has no interest in anything that deviates from his already held belief, regardless how invalid that belief is. This seems to be a waste of time. Perhaps there should be a quick test to pass on suggested reading before one can post again...

-

Tom Curtis at 02:36 AM on 1 April 2016

Dangerous global warming will happen sooner than thought – study

KR @31, I am quite aware of the standard definition of the "tragedy of the commons". I am pointing out that that definition is Orwellian in nature, and motivated by a desire to supplant customary tenure held by (primarily) indigenous peoples with fee simple tenure held by corporations.

For what it is worth, the term "tragedy of the commons" was coined by Hardin in 1968. It was not coined by Lloyd, although he discusses a model of the commons, and draws an analogy from that to the labour market, arguing that because the labour market is a commons, labourers will essentially breed without restraint. (pp 30-33).

-

KR at 01:19 AM on 1 April 2016

Dangerous global warming will happen sooner than thought – study

Tom Curtis - While that abuse may be part of the history of the term "Tragedy of the Commons", originally and in particular when describing climate change it follows a different definition, that put forth by William Forster Lloyd in 1833:

The tragedy of the commons is an economic theory of a situation within a shared-resource system where individual users acting independently and rationally according to their own self-interest behave contrary to the common good of all users by depleting that resource.

The term may have been misused, but that doesn't invalidate the original meaning.

-

Nick Palmer at 00:54 AM on 1 April 2016

Six burning questions for climate science to answer post-Paris

Nice to see someone else on Skepsci who's aware of the large potential of agricultural/pasture soils to sequester large enough amounts of carbon quickly enough to counterbalance our current emissions, which holds out the hope of actually reducing atmospheric C levels.

It would require changing most agriculture away from the big chemical input methods we have today. Most people, when hearing about this for the first time, usually expect that crop yields would fall but, on balance, it seems crop yields can even improve.

-

Tom Curtis at 00:24 AM on 1 April 2016

Dangerous global warming will happen sooner than thought – study

chriskoz @28, I will respond to the greater substance of your comment later. Just now I want to flag my dislike of the term "tragedy of the commons" in its current usage. That goes back to the first, and genuine, tragedy of the commons, also known as the enclosure movement. In essence, at the end of the Middle Ages, land holdings were based on a system of enfiefdom, wherein a tenant would hold land from their lord based on a requirement for certain duties. The duties varied. For peasants they could include mandatory service on their lord's land, serving in their lord's militia on demand, payment of a small percentage of their own corn, and payment of a fee on inheritance of the rights. For that they typically had possession of a small amount of arable land, the right to live in the village, to protection by their lord, and the right to graze livestock on the commons and/or fallow land, and the right to forage (but not hunt) in the commons. The key point was that the system of responsibility was mutual, and in particular, the lord could no more refuse the relationship than could the peasant, or the king refuse the equivalent relationship to the lord - at least in principle.

In practice, of course, customary rights tend to be enforceable for those with power, and not for those who are weak. As a result, when it was discovered that more money could be had by grazing sheep on the land, many lords started to enclose their land, either just the commons or all of the land, for sheep. This was in breach of customary law, and later in breach of several acts of parliament, but no effective action was taken. The tragedy here was not that the commons were being overused. It was that a very large number of the relatively poor and powerless were rendered destitute so that the rich and powerful could gain more wealth by seizing the commons to their exclusive use.

In principle and in practice, this was no different to the English seizure of Australian land under the doctrine of terra nullius, or equivalent denial of customary land rights in Kalimantan and West Papua by the Indonesians.

In current usage, the term "tragedy of the commons" is used to justify the seizure of customary rights of access to land and/or fisheries and giving them as simple property rights to the wealthy. It is, in fact, a modern enclosure movement. Nothing more, and nothing less. And as their rhetorical justification for that seizure, they choose as their flag a misdescription of what actually happened to common land in Europe all those centuries ago, and what the actual tragedy of the commons in fact was.

The term is Orwellian.

-

bozzza at 23:35 PM on 31 March 2016

Dangerous global warming will happen sooner than thought – study

@ 27, your comment seems conflicted.

You say taxes would be better than regulation but then you go on to say that systemic change is required.

-

chriskoz at 21:36 PM on 31 March 2016

Dangerous global warming will happen sooner than thought – study

Tom Curtis,

Your acceptance of a free market with no negative externalities within the set of sovereign nations as the best available playground for a sustainable economy can easily be challenged as inadequate with respect to the climate change problem.

The issue is that CO2 pollution is the tragedy of the commons not just within the economies of each of the sovereign nations. If it were, the externality of CO2 pollution could be fixed by known mechanisms such as an emission tax/trading scheme or regulations. However, CO2 pollution is a truly global tragedy of the commons problem, in the sense that it goes beyond the realm of all sovereign economies. Therefore, the mechanisms cited above cannot fix it. It is described by some as the big TOC where sovereign nations are the actual players. IMO, you must ultimately resolve such a TOC problem at this level to succeed.

Assuming the so called "global economy" is the playground here, it is far more difficult to fix the CO2 emission externalities while preserving the sovereignty of the players. The same applies to other TOC types of issues that you consider, e.g. the problem of ocean overfishing. The question is not just that mechanisms suitable in a global economy do not exist. In fact, global carbon tax ideas have been circulating for some time, e.g. Hansen's idea of a ubiquitous tax and dividends at all levels: at the source point (i.e. mining), import points and finally burning. But that idea is unimplementable without a breach of players' sovereignty. For starters, how are you going to police it to ensure the enforcement?

-

RedBaron at 21:17 PM on 31 March 2016

A methane mystery: Scientists probe unanswered questions about methane and climate change

Tom,

Let's say you are right, and we have more total ruminants now. (I am not convinced of that but not worth arguing) That is a good thing if the animals are part of the grazer/grassland biome which is a net sink. The only reason it is a problem is due to removing those animals from the biome and putting them in CAFOs instead.

What we have now is a loss of ecosystem services over a vast area of land, because a cropfield most certainly does not function the same in regards to methane oxidation as a grazer/grassland biome. In fact at least one of the sources I quoted above found it functioned at only 20% the effectiveness of an adjacent grazed area. Think of all the cropland in the world producing grains and operating at only 20% efficiency in methane oxidation!

It is not unusual for agriculture to actually produce more food than a natural ecosystem. It is managed after all. But grasslands can be managed as well. There is no need to overproduce grains, far more than we could possibly eat, and then feed them to livestock, just so we can count that productivity twice. More important to AGW mitigation is to bypass all the "middle steps" and go straight from grassland to animal so we don't lose that ecosystem service.

-

BBHY at 21:13 PM on 31 March 2016

New survey finds a growing climate consensus among meteorologists

We had what I would consider a very mild winter. Temperatures even in late December were in the 70's, and in late February/early March we had more warm spells, even touching 80 degrees in some places.

But in January we had one big snowstorm, and somehow that was reason enough for folks on social media to claim that we had a record cold winter. It wasn't even that cold during the snowstorm. That is really some extreme spin they are putting on.

-

barry1487 at 17:57 PM on 31 March 2016

Global Warming Basics: What Has Changed?

Here is an eyeball test of whether or not there has been a pause since 2002.

First line is the trend 1970 to December 2001.

Second line is the trend 2002 to December 2015.

Doesn't look like much of a pause to me.

How about if we include January and February of 2016?

Doesn't look like a pause since 2002.

Now, that's just the 'eyeball' analysis. It's totally insufficient. But if you are not interested in doing it properly, billev, if you're just going to go with what your eyes see, then you have to agree that there's been no pause since 2002, right?

Here's the link to that web page, too, so you can check for yourself.

-

RedBaron at 17:15 PM on 31 March 2016

Six burning questions for climate science to answer post-Paris

Obviously reducing emissions is one way to help. But Nick is correct. Regenerative agriculture is the solution. Most of the schemes mentioned might help, but they are nowhere near large enough to actually solve the problem. Agriculture is easily large enough. Need methane oxidised? Methanotrophs. Need carbon sequestered? Soil carbon actually increases food yields. Water shortages? Soil carbon actually holds water, resisting the effects of drought. Floods? Same. Soil carbon increases infiltration rates, mitigating the effects of floods. Is the soil sink large enough? Absolutely. Larger than all the carbon in the atmosphere. We might actually be forced to slow down a bit in our efforts just so we don't actually pull too much carbon out of the atmosphere and accidentally trigger a glaciation event. But we need to get started soon. We have worldwide such massive soil degradation that in about 50-60 years agriculture will fail worldwide. Once that happens all is lost. With no agriculture as a base both civilization fails and any chance at using agriculture to mitigate AGW is lost as well. Then we are stuck with the much slower natural biomes to do the work, and they are not up to the task as they are also highly degraded. Mass Extinction event in my opinion.

-

barry1487 at 16:50 PM on 31 March 2016

Global Warming Basics: What Has Changed?

billev,

No one expects a long-term warming trend to follow a straight line year after year. There are long and short-term spikes, dips, flat periods etc. If someone was trying to pull the wool over your eyes, then those temperature graphs from NASA and other groups would look like a straight line.

I don't know why you expect the warming from CO2 to be represented as a straight line going from cooler in the past to hotter. I don't know why you think the variation that we see means something important about CO2 warming. As we all agree, there are other short term and long term factors that influence temperature. That's why we see the variation, the spikes and dips and flattish periods. The long term signal doesn't dominate at short time scales. It is, however, more persistent. We're going to see more warmth in the future, with spikes and dips and flattish periods. The signal is clear.

For most of your other points, the list of links in KR's post answers them well.

I tried to explain why no one can claim a pause, warming or cooling from short data sets (like since 2002). I can only link you back to the explanation.

http://www.skepticalscience.com/gw-basics-what-has-changed.html#116608

When you say 'pause', you are instantly in the domain of trend analysis. You need at least a basic understanding of that to make any kind of claim, or to have an understanding of the claim you are making. Eyeballing a graph isn't good enough.

But if you are only going to use your eyeball, then I showed you the results since 2002 for a bunch of graphs, all but one of which showed a warming trend. The line went from lower temps in 2002, to higher temps at the end. A naive reading is that this is a warming, not a flat trend. If you are going to stick with a naive reading, an eyeball assessment, then you must conclude that there is no pause since 2002?

I even linked you to the application where you can check for yourself. It's very easy to use.

http://www.ysbl.york.ac.uk/~cowtan/applets/trend/trend.html

If you want NASA's trend, then click on GISTEMP (GISS is the acronym for the department of NASA that does the temperature records), type 2002 in the 'start date' box and click 'calculate'. Then look at the trend you get.

Tell me what you see for yourself. A pause or a warming trend?
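For readers who would rather see the idea than click through an applet, here is a minimal Python sketch of what "trend analysis" means in this context: fit a least-squares line and look at the uncertainty on the slope. The data are synthetic, with a made-up steady trend plus random noise; they are not GISTEMP or any real record, and the trend and noise values are assumptions chosen only for illustration. This is a plain ordinary least-squares fit, not necessarily the method the linked application uses.

```python
import math
import random


def linear_trend(years, temps):
    """Ordinary least-squares slope and its standard error (no autocorrelation correction)."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps))
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x
    residual_ss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    std_err = math.sqrt(residual_ss / (n - 2) / sxx)
    return slope, std_err


# Synthetic annual anomalies: hypothetical steady trend plus noise. Not real data.
random.seed(0)
TRUE_TREND = 0.017  # degrees C per year (assumed for illustration)
NOISE_SD = 0.1      # year-to-year variability in degrees C (assumed for illustration)
years = list(range(1970, 2016))
temps = [TRUE_TREND * (y - 1970) + random.gauss(0.0, NOISE_SD) for y in years]

# Compare the full record with the short window starting in 2002.
for start in (1970, 2002):
    xs = [y for y in years if y >= start]
    ys = [t for y, t in zip(years, temps) if y >= start]
    slope, err = linear_trend(xs, ys)
    print(f"{start}-2015: {slope * 10:+.3f} +/- {2 * err * 10:.3f} C/decade (2 sigma)")
```

The underlying trend in the synthetic series never changes, yet the uncertainty on the short window comes out several times wider than on the full record. Real temperature series are also autocorrelated, which this sketch ignores and which widens the uncertainty further.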

-

bozzza at 15:42 PM on 31 March 2016

Why is 2016 smashing heat records?

The bottom line according to Hansen is that surface temperatures are not necessarily the best indicator!

So what is then? According to Hansen it is the total energy in versus out budget: which includes various unknowns.

So, where do we go from here?

It's risk management 101 and rather than change billions of dollars of plant the big swingers are going to play pause button politics until they can get the jump on the affirmed data. Oh noes.. what if the new guy starts with a well placed bet!

Divestment wins...

-

Tom Curtis at 13:53 PM on 31 March 2016

Global Warming Basics: What Has Changed?

billev @42:

"The NOAA temperature charts clearly show me that while the Earth is warming, the warming is not continuous. If this is not the case then the charts are misleading so why are they provided to the public? There is a determined attempt to argue that there has been no pause in continuous warming in this century even though the chart shows there to be."

Nobody here has argued the warming is continuous. What has been argued is that your choice of breakpoints for long term trends are arbitrary, that there is no justification for seeing them as a repeating pattern, and that there is no breakpoint in 2002.

What the charts show after 2002 is a continuing positive trend that is not statistically significant only due to the small duration of the period. Further, the slope is lower than that of the trend of the preceding 30 years only because of strong La Ninas in 2008, and 2011/12. You argue that unusual peaks should not be factored into determining trends, but insist on including those in your trend calculation while refusing to acknowledge they are the impact of a short term effect.

What is worse, since this has been clearly pointed out to you, you have introduced no new evidence, made your claims vaguer and simply repeated the claims with no attempt at justification. Your argument has reduced to the repetition of a slogan.

You have similarly studiously ignored the refutation of your claim that CO2 has no effect @22 above. Once again, the graph shows the IR spectrum at the top of the atmosphere, plotted so that equal areas under the graph represent equal amounts of total energy transmitted. The large trough due to CO2 therefore implies a large reduction in energy transmitted to space due to CO2. That in turn requires that the surface of the Earth be warmer to drive greater energy transmission away from the CO2 trough to compensate, and bring the total energy transmission up to levels that match input of solar energy.

That is a very simple, very direct proof of the impact of CO2 on climate. It is based both on direct observations, and on models based on direct laboratory observations of the radiative properties of gases. In this instance, theory matches observations to an astonishing degree. Your commentary on the topic since has shown that you are determined to avoid evidence you do not like. If you are going to simply avoid evidence that challenges your views (as clearly you are) it will be much more convenient for both you and us if you do it elsewhere.

-

denisaf at 10:19 AM on 31 March 2016

Six burning questions for climate science to answer post-Paris

The tipping point was reached decades ago so irreversible rapid climate change and ocean acidification and warming is under way. The most mitigation that can possibly be done is to institute a number of measures to reduce the rate of emissions and so slow down the rate of increase in atmospheric and ocean temperature. Suggesting that this temperature rise can be limited is erroneous. There should be more focus on measures to cope with the consequences, such as sea level rise and storm surges.

-

Nick Palmer at 09:30 AM on 31 March 2016

Six burning questions for climate science to answer post-Paris

Regenerative agriculture holds the promise of being way more effective than biofuels with carbon sequestration.

https://en.wikipedia.org/wiki/Regenerative_agriculture

-

Dcrickett at 09:01 AM on 31 March 2016

Dangerous global warming will happen sooner than thought – study

Certain CO₂ abatement policies — such as the Carbon Tax — are clearly more effective than others — such as massive regulation. I think 'most anybody prefers the Invisible Hand of Adam Smith over the Heavy Hand of a Soviet Five-Year Dictum. And regardless of the currently apparent popularity of certain US and other wuzbe Cæsars, a real turnaround in attitudes is at hand. The problem appears to be that certain people have attained unjustifiable wealth and power and are ready to use unjustifiable means to maintain their unjustifiable holds on the aforementioned unjustifiables.

The best option is to try to change the system while change is possible. Otherwise we will hear the people singing the songs of angry men as they get out their torches, tumbrels and Remington 870’s, which will not have happy outcomes.

-

Tom Curtis at 08:58 AM on 31 March 2016

A methane mystery: Scientists probe unanswered questions about methane and climate change

RedBaron @18:

"In my opinion methane is a problem primarily because of CAFO's. It is not a problem in a properly managed grassland/savanna biome. After all those biomes supported many millions and millions of grazers who were extirpated. The methane levels before they were extirpated were actually lower than now!"

You have this backwards. The current megafaunal biomass is approximately 7 times that prior to human induced mass extinctions. The biomass of human livestock alone is 4.5 times that of all megafaunal biomass prior to human induced mass extinctions. See Barnosky 2008:

So, quite aside from the fact that not all grazers produce methane in the quantities of cattle, the amount of cattle now far exceeds the quantity of wild grazers they have displaced. Even the estimated 60 million American Bison pre-1800 have been replaced by 90 million cattle in the US alone, a further 12 million in Canada, (plus 500,000 Bison).

-

RedBaron at 06:17 AM on 31 March 2016

A methane mystery: Scientists probe unanswered questions about methane and climate change

In my opinion methane is a problem primarily because of CAFO's. It is not a problem in a properly managed grassland/savanna biome. After all those biomes supported many millions and millions of grazers who were extirpated. The methane levels before they were extirpated were actually lower than now! According to the following studies those biomes actually reduce atmospheric methane due to the action of Methanotrophic microorganisms that use methane as their only source of energy and carbon. Even more carbon being pumped into the soil! Nitrogen too, as they are also free living nitrogen fixers.

http://blogs.uoregon.edu/gregr/files/2013/07/grasslandscooling-nhslkh.pdf

http://www.ncbi.nlm.nih.gov/pmc/articles/PMC239458/pdf/600609.pdf

LINK

http://www.ncbi.nlm.nih.gov/pubmed/11706799

http://journal.frontiersin.org/article/10.3389/fmicb.2013.00225/full

http://www.sciencedirect.com/science/article/pii/0038071794903131

Grasslands and their soils can be considered sinks for atmospheric CO2, CH4, and water vapor, and their Cenozoic evolution a contribution to long-term global climatic cooling.

The subsurface location of methanotrophs means that energy requirements for maintenance and growth are obtained from CH4 concentrations that are lower than atmospheric

Upland (i.e., well-drained, oxic) soils are a net sink for atmospheric methane; as methane diffuses from the atmosphere into these soils, methane consuming (i.e., methanotrophic) bacteria oxidize it

Nevertheless, no CH4 was released when soil surface CH4 fluxes were measured simultaneously. The results thus demonstrate the high CH4 oxidation potential of the thin aerobic topsoil horizon in a non-aquatic ecosystem.

Of all the CH4 sources and sinks, the biotic sink strength is the most responsive to variation in human activities

The CH4 uptake rate was only 20% of that in the woodland in an adjacent area that had been uncultivated for the same period but kept as rough grassland by the annual removal of trees and shrubs and, since 1960, grazed during the summer by sheep. It is suggested that the continuous input of urea through animal excreta was mainly responsible for this difference. Another undisturbed woodland area with an acidic soil reaction (pH 4.1) did not oxidize any CH4.

Moderator Response:[RH] Shortened link that was breaking page format.

-

Global Warming Basics: What Has Changed?

billev - Your last comment is really just a Gish Gallop, a collection of slogans, anecdotes, and nonsense. I suggest reading:

- How do we know more CO2 is causing warming (empirical evidence)

- Explaining how the water vapor greenhouse effect works, also Richard Alley on the CO2 'Control Knob'

- CO2 is not the only driver of climate (why warming isn't monotonic wrt CO2)

- On Statistical Significance and Confidence (why we're certain that AGW hasn't stopped, despite short term variations)

- Argument from incredulity fallacy (disbelieving the effect of increased CO2 because of the ratio to non-IR active gases)

- Greenhouse effect basics (why your hot feet are really irrelevant to what's going on at the top of the atmosphere)

Seriously, your post is nothing but a bag of sloganeering nonsense, the errors of which have already largely been pointed out to you.

-

MA Rodger at 02:28 AM on 31 March 2016Global Warming Basics: What Has Changed?

billev @42.

I will take just one of the questions you pose and provide an answer, in exchange for an answer from you.

If we stand on the hot sand of a beach the bottoms of our feet are most uncomfortable but the tops of our feet, only an inch from the sand, are not. If the heat retention power of CO2 is enough to drive the air temperature on Earth why does it appear so ineffectual at preventing such significant heat loss so close to the source?

When you stand on that hot sand, the reason the blood above the soles of your feet does not freeze in your veins is because there is an insulating atmosphere above you preventing the low temperatures of outer space from sucking the heat from your body. That atmosphere above you stretches eight miles up to the stratosphere and up beyond that as well. Over that eight miles the temperature drops about 70ºC, less than 10ºC per mile, a rate of temperature reduction not noticeable over a few feet. It is the accumulative effect over the whole height of the atmosphere that does the job. So most of that insulating air is well over a mile away. At such a distance, why would it reflect back the heat from hot sand and toast the top of your foot at the same time as the bottom? Simply, it would not, because it is almost entirely far too far away to catch anything significant of the heat emitted by the sand and return it to the top of your foot.
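The rate quoted above can be worked through explicitly. The following is a rough sketch, assuming the 70ºC drop is spread evenly over the eight miles (a simplification made here for illustration, not a claim from the comment); the variable names are illustrative.

```python
# Figures from the comment above; a linear temperature drop is an assumption.
temp_drop_c = 70.0         # temperature drop from surface to stratosphere (deg C)
height_miles = 8.0         # height over which that drop occurs
inches_per_mile = 63_360   # 5,280 feet x 12 inches

drop_per_mile = temp_drop_c / height_miles
drop_per_inch = drop_per_mile / inches_per_mile

print(f"{drop_per_mile:.2f} deg C per mile")
print(f"{drop_per_inch:.6f} deg C per inch")
```

Under that assumption the drop is 8.75ºC per mile, or roughly 0.00014ºC over the inch between the sole and the top of a foot, which is far too small to feel.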

And my question to you - You say "I have previously stated that the presence of a current pause in temperature rise will probably be more evident in another ten or fifteen years, toward the end of the pause." We know day follows night, winter follows summer and that they recur at fixed intervals. What mechanism is it that makes you think that your "pauses" recur at a fixed interval?

-

billev at 00:48 AM on 31 March 2016Global Warming Basics: What Has Changed?

I have read the thread about CO2 and have read all of the comments in answer to my last comment. Nowhere have I found any measure of how effectively CO2 captures and retains the heat radiating from the surface of the Earth and solid objects on its surface. I do know that the difference between daytime and night temperatures is significantly greater in arid areas than in more humid areas, indicating that water vapor may play a role in heat retention in the hours of darkness. The NOAA temperature charts clearly show me that while the Earth is warming, the warming is not continuous. If this is not the case then the charts are misleading, so why are they provided to the public? There is a determined attempt to argue that there has been no pause in continuous warming in this century even though the chart shows there to be. I have previously stated that the presence of a current pause in temperature rise will probably be more evident in another ten or fifteen years, toward the end of the pause. Also, comparing a small amount of arsenic with a small amount of CO2 is meaningless. It also is an argument that can be taken two ways. A one inch thick steel sheet can, no doubt, stop a pistol bullet fired into it. But a sheet of steel 1/2500th of an inch thick would probably not even slow the bullet as it passed through. Finally, where is there any physical manifestation in our daily life that shows the heat retention powers of CO2? If we stand on the hot sand of a beach the bottoms of our feet are most uncomfortable but the tops of our feet, only an inch from the sand, are not. If the heat retention power of CO2 is enough to drive the air temperature on Earth why does it appear so ineffectual at preventing such significant heat loss so close to the source? Since that heat loss increases as distance from the surface increases, is there any evidence that the current level of CO2 has any measurable effect on temperature?

Moderator Response:[JH] You are now skating on the thin ice of sloganeering which is prohibited by the SkS Comments Policy.

Please note that posting comments here at SkS is a privilege, not a right. This privilege can be rescinded if the posting individual treats adherence to the Comments Policy as optional, rather than the mandatory condition of participating in this online forum.

Please take the time to review the policy and ensure future comments are in full compliance with it. Thanks for your understanding and compliance in this matter.

-

h4x354x0r at 00:25 AM on 31 March 2016CO2 measurements are suspect

Thank you for the explanation, Tom Curtis. I had to get into my wayback machine and go visit my high school physics class, but the pointer towards Henry's Law helped break my mental logjam there. The annual variability is between 6 and 7 PPM; the annual increase is now a solid 3 PPM, and of course growing. It would be nice not to find out what will happen when the annual increase overtakes annual variability.

Again, thank you!

-

Tom Curtis at 22:56 PM on 30 March 2016Dangerous global warming will happen sooner than thought – study

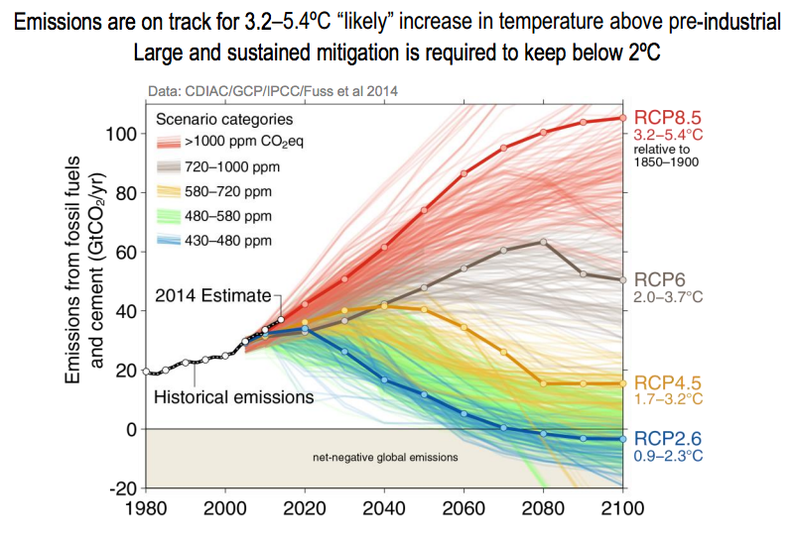

A comment by Trevor_S elsewhere has shown me I was too optimistic in my point 3 @12 above. In particular, he shows a graph from Fuss et al (2014) that shows CO2 emissions as of a 2014 estimate to be tracking RCP 8.5. Checking more recent data including LUC, I see that the comparison of level of emissions between current levels and RCP 2.6 above was (unintentionally) misleading. We are in fact doing better than RCP 8.5 in that we are plateauing emissions, but from RCP 8.5 equivalent levels, not from RCP 2.6 levels.

While this slightly reduces my optimism, it does not alter the appropriate strategy; or considerations in favour of it.

-

Tom Curtis at 22:45 PM on 30 March 2016Heat from the Earth’s interior does not control climate

If the moderators will allow it I would like to note for the benefit of pjcarson:

1) "sloganeering" is implicitly defined in the updated comments policy, which states:

"No sloganeering. Comments consisting of simple assertion of a myth already debunked by one of the main articles, and which contain no relevant counter argument or evidence from the peer reviewed literature constitutes trolling rather than genuine discussion. As such they will be deleted. If you think our debunking of one of those myths is in error, you are welcome to discuss that on the relevant thread, provided you give substantial reasons for believing the debunking is in error. It is asked that you do not clutter up threads by responding to comments that consist just of slogans."

I have added emphasis to indicate the key sentence which defines "sloganeering" (underlined), and the key feature of sloganeering that makes it mere noise in any attempt at reasonable discussion (bolded).

In registering with SkS, you agreed to abide by the comments policy when posting, and therefore should have read it.

2) By the same token, moderation complaints are also forbidden in the comments policy (the reason your most recent post was deleted).

3) For what it is worth, I am not a moderator; but was when the updated comments policy was introduced.

4) If you were to post the substance of your most recent post with actual peer reviewed numbers regarding "the change of heat due to tectonics", it would probably get past the moderators (unless you do something silly like all capitals, etc). Absent actual peer reviewed numbers, a quick look at your site shows your own calculations to be worthless - something easily discovered to be the case with regards to your comments on the greenhouse effect in substantially less than eight minutes. If you want to criticize a scientific theory, it is essential that you actually understand it first, which clearly you do not. If you want to learn, here is a good starting point.

-

Tom Curtis at 22:30 PM on 30 March 2016New survey finds a growing climate consensus among meteorologists

denisaf @9, by a similar verbal contortion we can say that (in the majority of cases of violent death) people do not kill people, rather guns kill people. However, a murderer running that argument in support of a plea of not guilty would get short shrift indeed. That is because people understand perfectly well the concept of indirect agency, and hence you have no point.

-

Tom Curtis at 22:26 PM on 30 March 2016Six burning questions for climate science to answer post-Paris

TomR @2, do a google search for biochar.

-

TomR at 22:20 PM on 30 March 2016Six burning questions for climate science to answer post-Paris

Burning biofuels for energy would hurt food production as it already is with corn ethanol, and soy and palm oil diesel. It would also add to emissions as is occurring with UK chopping down American forests to feed the huge Drax power plant with wood pellets. With wind and solar, we really don't need biofuels which also add to air pollution since only about half of the CO2 is captured.

It seems to me that simply burying dead wood and plants in manmade lakes would imitate nature, i.e., how coal and peat deposits were created. It would also appear lower cost per ton of carbon captured. Such deposits would seem much more stable than pumping gas into the earth. I haven't heard anyone explore this idea, although I have read of its reverse, i.e., logging century old forests under the waters of dammed up rivers.

-

denisaf at 20:37 PM on 30 March 2016New survey finds a growing climate consensus among meteorologists

Misleading terminology does not help in convincing people of what is happening. People are not causing climate change. It is the operation of technical systems that is producing the emissions doing the damage. People only make decisions, good and bad, about the use of technical systems.

-

radioAlex at 11:16 AM on 30 March 2016Dangerous global warming will happen sooner than thought – study

Listen to my Radio Ecoshock interview with Ben Hankamer here:

http://tinyurl.com/jguk7gy

-

Trevor_S at 09:40 AM on 30 March 2016Six burning questions for climate science to answer post-Paris

>We will not meet the targets if the world relaxes on mitigation efforts

Wait... what? We're bang on track for RCP8.5; we've not mitigated at all. 2015 was a record year for CO2 emissions, and CH4 emissions have increased significantly over the last decade or so.

-

barry1487 at 09:35 AM on 30 March 2016New survey finds a growing climate consensus among meteorologists

BBHY, there is a list of record-breaking hot/cold days per city/state since 2002 on the web.

-

barry1487 at 09:31 AM on 30 March 2016New survey finds a growing climate consensus among meteorologists

bozza,

https://www.jeffreydonenfeld.com/blog/2013/01/is-it-really-snowing-at-the-south-pole/

That's something I found after 5 seconds. No doubt you could find more technical stuff with little effort. Google is your friend.

-

Hank11198 at 08:43 AM on 30 March 2016Models are unreliable

Does anyone know where I can find a graph that shows how the climate models correlate with temperature back to around 1880? Can't find that by using google for some reason.

-

John Hartz at 08:37 AM on 30 March 2016Dangerous global warming will happen sooner than thought – study

Here's another way our current economic system should be changed...

A new relationship with our goods and materials would save resources and energy and create local jobs, explains Walter R. Stahel.

The circular economy by Walter R. Stahel, Nature, Mar 23, 2016

-

nigelj at 08:16 AM on 30 March 2016Dangerous global warming will happen sooner than thought – study

Tom Curtis

Thanks for your response, and yes it does explain things a lot better. I subscribe to basically the same views on politics, markets and economics in general. You might find the book "Freefall" by Joseph Stiglitz relevant, if you have not already read it. The book is about economics and the financial crash, but he also includes some specific discussion on climate change and market regulation.

I also 100% agree we can't wait to reform the world economy into something ideal before acting on climate change. However I'm sure Naomi Klein would also agree with this. Many things simply need to be done in parallel.

Agree also with your third world comments. It's a tough one, but I had reached the same conclusions myself.

-

bozzza at 04:59 AM on 30 March 2016How to inoculate people against Donald Trump's fact bending claims

Fossil Fuel prices aren't tanking for any logical reason: some say it's to stop America drilling for their own reserves and thus it's Geo-political rather than for any natural limiting factor!

-

bozzza at 04:31 AM on 30 March 2016New survey finds a growing climate consensus among meteorologists

Also, @ 4, did you know you can dry your par boiled chips in the freezer before their second deep fry?

-

bozzza at 04:18 AM on 30 March 2016New survey finds a growing climate consensus among meteorologists

@4, I don't want to ask in the wrong forum but can you tell me: does it not snow at the poles?

I was told it doesn't snow at the poles for the reasons you gave in the previous answer, but was wondering how far away from the poles (if at all true) this takes place and how it changes, or indeed if it is a useful indicator of climate change while we're on the subject?
