Recent Comments


Comments 36101 to 36150:

-

Tom Curtis at 19:11 PM on 9 August 2014CO2 effect is saturated

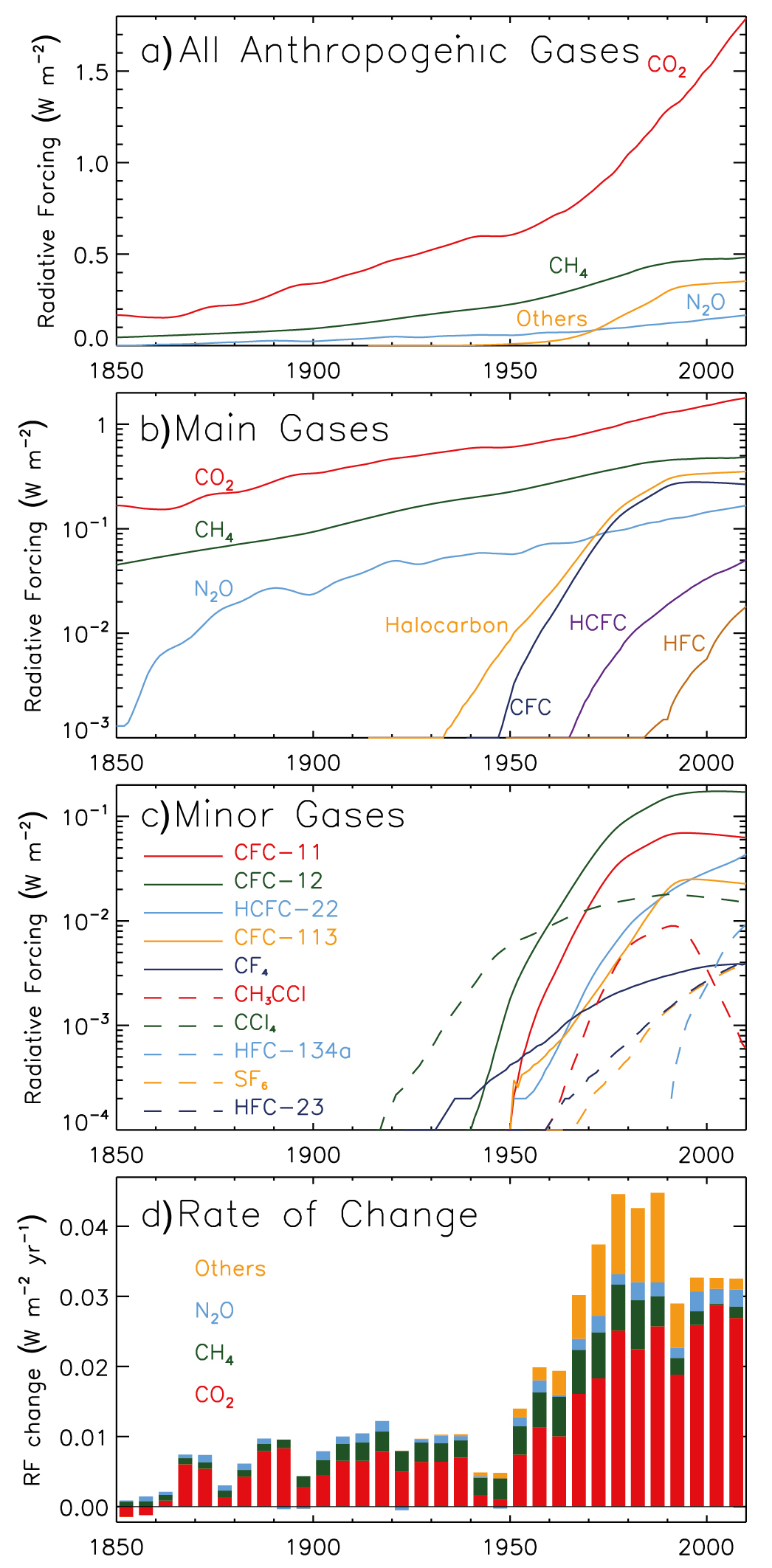

Dan Smith @279, your respondent is thoroughly confused, having mistaken a response to temperature increases (the water vapour feedback) for the initial cause of temperature increase (the radiative forcing). The theory that CO2 is saturated, ie, that increases in CO2 in the atmosphere will cause not increase in radiative forcing does, however, contradict the IPCC:

In fact the IPCC gives the formula for radiative forcing due to a change in concentration of CO2 as being:

ΔF = 5.35 x ln(C/C0),

where ΔF is the change in radiative forcing (in W/m^2), C is the atmospheric CO2 concentration in parts per million by volume (ppmv, or ppm), C0 is the CO2 concentration in the period with which you are comparing, and ln is the natural logarithm. They specify this in the supplementary material to chapter 8 (section 8.SM.3), as they have done in the two preceding reports.
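As a rough illustration of the formula above, here is a minimal Python sketch. The function name, the 280 ppm baseline and the sample concentrations are assumptions chosen for illustration; only the 5.35 coefficient and the logarithmic form come from the comment.

```python
import math


def co2_forcing(c_ppm, c0_ppm, coefficient=5.35):
    """Change in radiative forcing (W/m^2) from the simplified expression
    dF = 5.35 * ln(C / C0) quoted in the comment."""
    return coefficient * math.log(c_ppm / c0_ppm)


# Hypothetical baseline and sample concentrations (not from the comment).
baseline_ppm = 280.0
for c in (400.0, 560.0, 1120.0):
    print(f"{c:6.0f} ppm: {co2_forcing(c, baseline_ppm):.2f} W/m^2")
```

Because the expression is logarithmic, each doubling of concentration adds the same forcing (about 3.7 W/m^2 with this coefficient). The increments shrink per ppm, but they never drop to zero, which is the point being made against the saturation argument.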

Anybody who thinks CO2 is saturated does not understand the basics of the greenhouse effect. The change in CO2 concentration reduces IR radiation to space. Ignoring the stratosphere, any increase in CO2 concentration means the IR radiation to space must come from a higher, and hence cooler, level. Because the amount that a gas will radiate depends on its temperature, this means it will radiate less to space, and hence the total IR from the Earth to space will decrease. To restore radiative balance, some change in the atmosphere will need to occur. As changes in the atmosphere are driven by changes in temperature, and as increasing temperature increases radiation (and hence radiation to space), that will certainly require an increase in temperature. I have explained this in more detail here.

-

Tom Curtis at 18:29 PM on 9 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

wili @9, I am not specifically aware of the study. I have seen reports of the MIT Integrated Assessment Model, which is pessimistic relative to other models in terms of radiative forcing for a BAU scenario by 2100, climate sensitivity, and impacts. Further, I believe there is a remote risk of human extinction from global warming, primarily due to the increased risk of nuclear war in a world massively and adversely affected by global warming. Therefore I was willing to entertain ubrew's assertion as a basis of discussion, but probably should have made it clear I was supposing the existence of the study rather than confirming it.

-

wili at 17:50 PM on 9 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

Could someone provide a link to these "MIT model results" mentioned by ubrew and by Tom Curtis?

-

Dan Smith at 14:55 PM on 9 August 2014: CO2 effect is saturated

I am technically a layman in the world of climate change, but I have a decent understanding of climate change principles. I am by no means near the level of understanding of the people posting on here. But I have a quick question that I don't think would require much thought for most of you.

I was in a back and forth with someone on a comment board and he brought up the saturation argument. I then sent him the link to this article. He then said that this article contradicts the IPCC:

"CO2 has reached its reflective saturation limit, we accept that. However, rising CO2 levels cause a rise in relative atmospheric humidity and water vapor is amount the most powerful global warming forces. So CO2 causes higher humidity and that causes global warming. This is the OFFICIAL IPCC explanation that there is a supposed consensus on.

If you can’t see how your source contradicts the actual explanation the climate scientists give at IPCC and you can’t admit that you had no clue as to the actual explanation, we are done, I have no more time to waste on you."

I don't see what the contradiction is. Am I missing something? Thanks for any thoughts.

Moderator Response: [JH] You are correct. There are no contradictions between the OP and the IPCC report. The role of water vapor is explained in the SkS rebuttal article, Explaining how the water vapor greenhouse effect works.

-

michael sweet at 08:20 AM on 9 August 2014: Air pollution and climate change could mean 50% more people going hungry by 2050, new study finds

Donny,

This is a scientific board. The OP uses data to show that there is great risk that billions of people will be left undernourished by AGW. They only look out to 2050; after that it will be much worse. Your argument from ignorance has no standing. You must provide data to support your wild claims.

In fact, currently in the USA the cost of beef has gone up substantially due to AGW-related drought in the Midwest. California is losing billions of dollars from AGW-related drought damaging agriculture. Where is the new farmland going to come from, under the glaciers in Greenland?

-

Donny at 07:33 AM on 9 August 2014: Air pollution and climate change could mean 50% more people going hungry by 2050, new study finds

To suggest that more people will go hungry if the climate warms is so ridiculous it's almost funny. Globally, much more land would become viable farmland than would be lost. Not to mention CO2 is a plant's best friend. Everyone should agree that using up all the fossil fuel this planet has is a dumb idea... however, making up "consequences" is just going to hurt credibility.

Moderator Response: [PS] Please see Dai et al and CO2 is plant food to come to a rather more informed position.

-

longjohn119 at 07:15 AM on 9 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

The deep oceans aren't cooler per se; they simply hold less Heat Energy than they once did, but the Law of Conservation of Energy tells us that can only mean the Heat Energy went somewhere else... like towards the surface.

Heat is a definite term; cool, cold and hot are relative terms denoting more or less Heat Energy. Cold has no Magic Powers to destroy Heat Energy, although that seems to be a very common misconception among the Scientific Mental Midgets who deny the Reality of Global Warming because they simply aren't intelligent enough to grasp basic physics...

-

RFMarine at 01:19 AM on 9 August 2014: Air pollution and climate change could mean 50% more people going hungry by 2050, new study finds

This is why we are in a hurry, and that means the risk of using a combination of nuclear power and renewables to retire fossil fuels faster is far less than the risk of insisting on 100% renewables, which causes fossil fuels to hang around longer.

-

Falk at 20:13 PM on 8 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

After having a brief look at the paper, it seems to contain a lot of information regarding my question on the blog post of July 28th.

They write:

A total change in heat content, top to bottom, is found (discussed below) of approximately 4 × 10^22 J in 19 yr for a net heating of 0.2 ± 0.1 W m−2, smaller than some published values (e.g., Hansen et al. 2005, 0.6 ± 0.1 W m−2; Lyman et al. 2010, 0.63 ± 0.28 W m−2; or von Schuckmann and Le Traon 2011, 0.55 ± 0.1 W m−2).

Even though they find a smaller total heat content increase than the other studies, I find the summary at the beginning (first 2 pages or so) very helpful. For the rest of the publication, I would greatly appreciate a blog post summing up its contents, as my background knowledge on this is quite limited.
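For readers who want to check how a heat content change in joules relates to a heating rate in W m−2, here is a minimal Python sketch. It takes the quoted change as roughly 4 × 10^22 J over 19 years. The two surface areas are round-number assumptions, and the quote does not say which area the paper normalises by, so both are shown.

```python
SECONDS_PER_YEAR = 365.25 * 24 * 3600  # Julian year, in seconds


def mean_heating_rate(delta_heat_j, years, area_m2):
    """Average heating rate in W/m^2 for a heat content change spread over an area."""
    return delta_heat_j / (years * SECONDS_PER_YEAR) / area_m2


# Approximate areas (assumptions, not taken from the paper).
OCEAN_AREA_M2 = 3.6e14  # global ocean surface
EARTH_AREA_M2 = 5.1e14  # whole surface of the Earth

delta_heat_j = 4e22  # heat content change from the quote
years = 19

print(f"per m^2 of ocean: {mean_heating_rate(delta_heat_j, years, OCEAN_AREA_M2):.2f} W/m^2")
print(f"per m^2 of Earth: {mean_heating_rate(delta_heat_j, years, EARTH_AREA_M2):.2f} W/m^2")
```

Under these assumptions both results fall inside the quoted 0.2 ± 0.1 W m−2 range, with the ocean-area figure landing closest to the central value.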

Moderator Response: [PS] Added link to earlier question.

-

Tom Curtis at 19:45 PM on 8 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

ubrew12 @5:

1) The accusation that people know of the potentially genocidal consequences of a policy and are intentionally promoting it for that reason is offensive and should never be entertained except where you have overwhelming evidence in support of it. In this case you do not. It is mere speculation that should not be entertained by any rational or decent person.

2) Specifically with relation to the Australian, I am (or was) a long-term reader and know quite well how some of their columnists think. Some of them are eminently rational even if I frequently (in one case nearly always) disagree with them. Among these are Paul Kelly, Dennis Shanahan and Greg Sheridan. They are not the type of people who would find potential genocide in any way attractive, and they would certainly move heaven and earth to stop it if they thought it was in prospect. Some others of their commentators I also know well and more or less despise, but I cannot think of the slightest reason to think so poorly of them as to accept your suggestion.

3) The MIT model results are in any event an overestimate of the risk of extinction from global warming. That's OK. Scientific results will never be precise, particularly in an area as complex as climate science. As a result you will get models that overshoot, and models that undershoot. The thing to do is not to fixate on the results of a single model, particularly when (as with the MIT result) it is a clear outlier.

-

ubrew12 at 14:40 PM on 8 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

I got snipped for a too forceful reference to what I think Lloyd and his publisher are doing, but I'm going to persist once more in the charge in a more politic way. There is now a 10% chance AGW will cause humanity to go extinct (MIT model results), so the chance is very high AGW will, at a minimum, significantly 'thin the ranks' of humanity in the near future. The 'misinterpretation' of Climate conclusions over the last 40 years can no longer reasonably be ascribed to tribal pride or fossil influences. We must now entertain the notion that Lloyd and his publisher are knowledgeable of AGW's lethal outcomes and are hastening us there intentionally. They are comfortable doing so because they read in themselves a superior survival probability in such an attritious environment. So Lloyd did not misunderstand the deep ocean findings. He did not make a mistake. Instead, he did his part to help set a multi-generational and multi-ethnic trap whose targets are your children.

Let's at least entertain the notion that this is happening. History suggests that at times like these, by the time the targets say something, the trap has long been set.

-

MattJ at 14:25 PM on 8 August 2014: It's planetary movements

Before they changed the name to "technical analysis" to fool the unwary, hucksters used a similar "curve fitting exercise" to ensnare investors. It was called 'chartism'. But once so many economics textbooks had debunked 'chartism', they changed the name to something sounding more respectable, not to be confused with genuine financial time-series analysis.

But I see no campaign as successful as the past campaign against chartism to persuade the public against this "Jupiter/Sun Gravity Model". Forbes has jumped on this bandwagon, too. Worse yet, Scafetta got his chartism published in a supposedly "peer reviewed" journal.

I haven't done the calculations myself, but it seems to me that a quick back-of-the-envelope calculation of the force on Earth due to Jupiter and Saturn, even at its peak, would show that they can't even lift a feather, far less influence the Earth's orbit and therefore climate. Why the 'peers' of the peer reviewed journal never did this calculation is a mystery to me. It should be a cause of disgrace for them, too.

Reviewers who give a pass to garbage like this need to be outed and publicly humiliated.

-

chriskoz at 13:27 PM on 8 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

The Australian, and in particular its environment columnist Graham Lloyd, has developed quite a history of climate science misrepresentations. Some of them, e.g. the recent incorrect critique of the 97% consensus in Cook 2013 by Richard Tol, misrepresented by Lloyd, have been discussed here.

A more comprehensive list of Lloyd's biased coverage is available here. Clearly, based on that history, we need not be surprised at this latest development; further, we may expect more distortions of climate science from Mr Lloyd in the future.

But it is heartening that scientists do not ignore those incidents but fight back against the misinformation straight at its source, as Carl has done here. Another example of a scientist who "fights back" is Michael Mann, who not only writes comments/op-eds to the affected newspapers but also enters legal battles if required to stand his ground. Others should also be encouraged: their time doing it is well spent. I'm personally thankful for that: great job guys!

-

Doug10673 at 09:16 AM on 8 August 2014: Facts can convince conservatives about global warming – sometimes

I have found when dealing with deniers that talking with them helps, and I mean talking as opposed to writing back and forth in e-mails or blogs. Also, not being afraid to back them into corners helps. Leave them no room for escape, in a non-confrontational way. Most people can be got at. It also helps to anticipate what they are going to say, so that you have ready proof to show them. Know what you are talking about.

-

Rob Honeycutt at 08:35 AM on 8 August 2014: CO2 lags temperature

Sorry ecgberht, but the Earth is 4.6 billion years old, not 600M years old.

-

ecgberht at 07:30 AM on 8 August 2014: CO2 lags temperature

The earth is 600M years old. Do you show CO2 levels for earlier periods than 400K (when the levels were far higher and the earth was far warmer) anywhere on this site?

Moderator Response: [PS] Your comment tone is bordering on sloganeering, i.e. posting long-debunked myths in disguise. If you are genuinely interested in the science, then this is certainly a site that can help; for instance, see "Climate changed before" and "CO2 was higher in the past". Even a cursory read of the appropriate chapters in the IPCC WG1 report will tell you what the science is really saying as opposed to what misinformation sites might claim.

-

scaddenp at 06:03 AM on 8 August 2014: A Relentless Rise in Global Sea Level

The processes involved in sea level rise, especially ice melt, are non-linear. The models we have for sea level rise predict acceleration. This post at Realclimate links to many of the relevant papers.

"and a slower rate of growth in sea level rise". Um, I am not seeing that. Do you mean the pothole in 2011? That was La Nina moving water onto land, not any reduction in the rate of ice loss.

Acidification is tightly bound to the concentration of CO2 in the atmosphere, completely independent of surface temperature, and no, there is no reduction in that.

As for locked-in sea level rise, the sea will stop rising when the ice stops melting. If temperatures stopped rising tomorrow, then you would still get more glacier melt, since so many glaciers are out of balance with current temperature, but you would expect the rate of rise to decline. A recent paper on the West Antarctic ice sheet suggests it may be too late, with warmer ocean already doing the damage.

-

MA Rodger at 03:27 AM on 8 August 2014: Error identified in satellite record may have overestimated Antarctic sea ice expansion

BojanD @18.

I am a bit mystified by your comment that it is possible that "the error, if found, will turn to be a minor one." Surely the size of the error in question is not in doubt. Or do you think otherwise?

Beyond that, consider where you place yourself w.r.t. Eisenman & Comiso. Eisenman gives no preference for the error being within either BootstrapV1 or BootstrapV2. Thus his position could be characterised as 50:50. Comiso insists the error is within BootstrapV1 so his position could be characterised as 100:0. The neutral position between the two would thus be 75:25. Your stated position (>68%) could perhaps be considered as centered on 84:16, closer to the neutral position than the Comiso position.

Does that make sense?
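The arithmetic behind those ratios can be written out as a small Python sketch. The variable names are hypothetical. The numbers are the percentages given in the comment, each read as the probability assigned to the error lying in BootstrapV1, and ">68%" is treated as centred halfway between 68 and 100.

```python
def midpoint(a, b):
    """Halfway point between two percentages."""
    return (a + b) / 2


eisenman = 50.0  # no preference between BootstrapV1 and BootstrapV2
comiso = 100.0   # error placed entirely in BootstrapV1

neutral = midpoint(eisenman, comiso)        # the 75:25 "neutral" position
bojan_centre = midpoint(68.0, 100.0)        # ">68%" read as centred on 84:16

print(f"neutral position: {neutral:.0f}:{100 - neutral:.0f}")
print(f"stated position:  {bojan_centre:.0f}:{100 - bojan_centre:.0f}")
print(f"distance to neutral: {abs(bojan_centre - neutral):.0f} points")
print(f"distance to Comiso:  {abs(comiso - bojan_centre):.0f} points")
```

On this reading the stated position sits 9 points from the neutral position and 16 points from Comiso's, which is the sense in which it is "closer to the neutral position".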

-

michael sweet at 01:14 AM on 8 August 2014: 2014 SkS Weekly News Roundup #31B

MThompson,

Since when did "few" mean "none"? The IPCC quote you cite is not in contradiction to the OP. North east scallop aquaculture is a well-documented example of one of the few.

I think scientists are handicapped by always limiting comments to things that have been proved beyond doubt. Meanwhile, skeptics repeat the same old myths over and over until people believe them. Then some people insist that scientists need many examples; one is not enough. This single example is the tip of the iceberg; more are coming. Your insistence that scallops are not currently affected by pH change is contradicted by the facts on the ground. You need to read the background on the article you object to.

-

One Planet Only Forever at 00:39 AM on 8 August 2014: Facts can convince conservatives about global warming – sometimes

The "Communitarian"/"Individualist" split of the sample may be missing key factors that could significantly affect a person's attitude toward investigating and interpreting information regarding this issue.

More applicable differentiators would be:

- "Desiring the development of a better future for others" vs. "Desiring a better present for themselves". Communitarians can be tribal and not care about others or the future. Individualists can recognise the benefit they obtained from others who cared about the future they contributed to developing through their individual actions.

- "Accepting that something profitable or popular is justified by its popularity or profitability" vs. "Understanding that profitability is increased by the amount of unacceptable activity that can be gotten away with due to popular support, unwitting or aware, for the unacceptable activity". Again, Communitarians and Individualists could develop either attitude.

-

Tom Curtis at 00:18 AM on 8 August 2014: 2014 SkS Weekly News Roundup #31B

MThompson @7, the first rule of science is to keep an accurate empirical score. In this case, the empirical score is a few studies showing harm to organisms in situ as a result of declining pH. There are also some more studies that show that, in the presence of low pH, certain organisms have greatly reduced frequency of occurrence, even over separations of mere meters. That is, in addition to laboratory studies, field studies show that both the hypothesized fall in pH with increase in atmospheric CO2 and the harm to some marine organisms due to low pH are actually occurring now. Repeatedly quoting the IPCC out of context to suggest that there are few relevant studies, while ignoring the fact that those studies that exist support the hypothesis, is not being scientifically "pedantic". It is misleading and deceptive conduct.

-

MThompson at 23:30 PM on 7 August 2014: 2014 SkS Weekly News Roundup #31B

Perhaps once again my commentary was unclear to some. In my original comment numbered 4, I was referring to the article “Intensifying ocean acidity from carbon emissions hitting Pacific shellfish industry,” and not to all the possibilities of extreme anthropogenic global climate change in general. The IPCC quote I provided stands in plain opposition to the headline of the referenced article, unless some dramatic new evidence has come to light since the final draft of the IPCC AR5.

If I correctly understand Tom Curtis’ missives (5 & 6), I have left some readers with the impression that, since the IPCC reports that there are few examples of pH decrease beyond natural variability, my assertion is that marine life will not be harmed by increasing atmospheric CO2.

Please forgive the pedantry, but here is the scientific method applied to this situation:

1) It is observed that atmospheric CO2 levels are rising, and have been doing so for decades.

2) It is observed that reduced pH is harmful to marine organisms, both in natural and laboratory settings.

3) It is hypothesized that the increase in atmospheric CO2 will lead to decreased pH of the oceans, and that this will in turn be harmful to marine life.

Thus I restate:

"Few field observations to date demonstrate biological responses attributable to anthropogenic ocean acidification, as in many places these responses are not yet outside their natural variability and may be influenced by confounding local or regional factors."

IPCC AR5 WGII p.9

Moderator Response: [Rob P] - That's a poorly-worded paragraph in the IPCC assessment. Not as bad as the Himalayan glacier error from the previous report, but the inference is that ocean acidification needs to be outside natural variability to cause problems for marine calcifiers - which is just silly.

This is like suggesting that even though the Earth is warming, and causing heatwaves and droughts to intensify and occur more frequently, we can't attribute enhanced tree mortality in drought-affected regions to global warming, because the mean temperature has not moved outside natural variability.

I suspect this has been watered down by the political process involved in signing off the reports. Watered down to such an extent that it is nonsensical. No matter, there's plenty of emerging research being published on ocean acidification, and we'll start to get a better idea of which species are likely to survive, and which will perish.

As for your assertion that marine life will not be affected by ocean acidification, it's a nice idea, but one not supported by the scientific literature, nor present-day observations. The dissolution of the shells of pteropods around Antarctica, and in the California Current System are a case in point.

It certainly seems that corrosive seawater can be tolerated by many marine organisms, provided that the exposure is brief, but long-term exposure creates energy demands that simply cannot be met under normal conditions. In other words, as long as the calcifier can get sufficient food to power the calcification process, it can make up for the dissolution occurring outside the calcifying space. In the real world, this isn't going to happen over the long-term as the entire carbon chemistry of the ocean continues to change. Ocean acidification is like a rising tide in that it raises all boats, i.e. the energetic demand increases right throughout the life-cycle.

-

ozboy1 at 18:47 PM on 7 August 2014: A Relentless Rise in Global Sea Level

My thoughts these days go to implications. 3.3 mm per year is a global average and there are strong regional variations, or so I read. At that rate, in 10 years it's 3.3 cm and in 100 years 0.33 of a metre. In the end I wonder how much of an issue that is?
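The extrapolation above is a straight-line one, and it can be sketched in a few lines of Python. The function name is hypothetical, and holding the rate constant is an assumption made purely to reproduce the arithmetic; it is not a projection.

```python
RATE_MM_PER_YEAR = 3.3  # global average rate quoted in the comment


def linear_rise_mm(years, rate_mm_per_year=RATE_MM_PER_YEAR):
    """Sea level rise in mm if the rate stayed constant (a simplifying assumption)."""
    return rate_mm_per_year * years


for years in (10, 100):
    rise_mm = linear_rise_mm(years)
    print(f"{years:3d} years: {rise_mm / 10:.1f} cm ({rise_mm / 1000:.2f} m)")
```

A constant rate gives a lower bound of sorts if the rate rises over time, so the 0.33 m figure depends entirely on the assumption that 3.3 mm per year holds for a century.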

This leads to another question of how much future sea level rise is locked in? If we magically reduced excess CO2 output tomorrow for how much longer would sea levels rise?

My third question is that we have seen in recent years a slower rate of growth in surface air temperature (now about 5% higher than the 20th century mean) and a slower rate of growth in sea level rise. El Nino is invoked as a partial explanation for both. Do we see a similar development in the rate of change in water acidity?

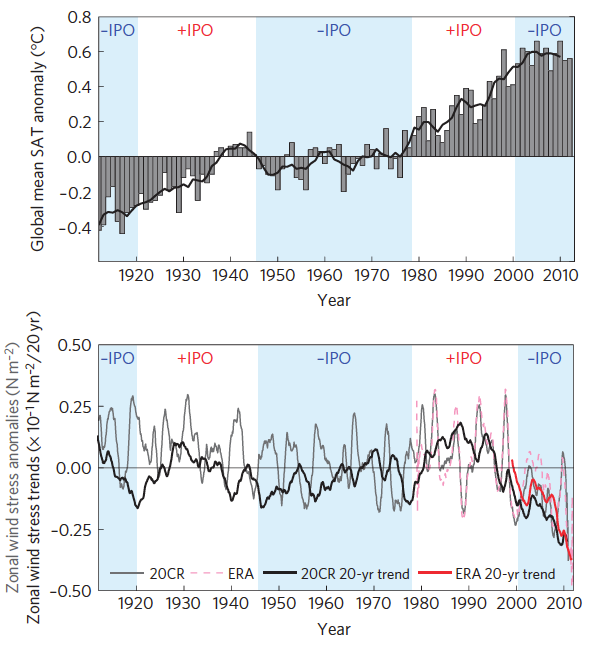

Moderator Response: [Rob P] - No, El Nino does not explain the slower warming rate in surface air temperatures in the 21st century. Stronger and more frequent La Nina events, however, are only a partial explanation - see Climate Models Show Remarkable Agreement with Recent Surface Warming for instance.

La Nina is dominant during the negative phase of the Interdecadal Pacific Oscillation [IPO]. As the spin-up of the wind-driven ocean circulation is able to mix more heat down into the ocean (mainly the Western Pacific & the subtropical ocean gyres), and draws up more cool water from below the thermocline in the eastern tropical Pacific, we get this stronger ocean warming/weaker surface warming pattern.

It so happens that the trade winds connected with the current negative IPO have been exceptionally intense, and have thus temporarily counteracted the strong greenhouse gas-forcing during this century. See England et al (2014).

-

Mikemcc at 15:59 PM on 7 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

Unfortunately it appears that there is no legislation that holds newspapers to account for the factual accuracy of what they publish, beyond the various libel laws. The worst culprits appear to be the Murdoch press (Scum, Australian, Faux News, etc.), closely followed by papers like the Daily Fail; the Telegraph does seem to have seen the light a bit recently in getting rid of the likes of Christopher Booker.

-

scaddenp at 12:37 PM on 7 August 2014: A Relentless Rise in Global Sea Level

Stardustoz - if you look to the right of this comment, you will see the heat widget. Currently heat gain is equivalent to more than 2 billion Hiroshima bombs since 1998 - so no - the heat release from bombs is insignificant compared to the extra solar energy trapped by GHG accumulations.
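To put the "2 billion Hiroshima bombs" figure into joules, here is a rough Python sketch. The 15 kt yield is the commonly cited approximate value, and the 500 Mt total for all nuclear tests is a round order-of-magnitude assumption introduced only for comparison; neither number comes from the thread or the widget.

```python
JOULES_PER_KILOTON_TNT = 4.184e12  # standard definition of a kiloton of TNT

HIROSHIMA_YIELD_KT = 15.0          # approximate yield (assumption)
BOMB_EQUIVALENTS = 2e9             # "more than 2 billion" from the comment

# Rough order-of-magnitude total yield of all nuclear tests (assumption).
ASSUMED_TOTAL_TEST_YIELD_KT = 500.0 * 1000  # 500 Mt expressed in kt

heat_gain_j = BOMB_EQUIVALENTS * HIROSHIMA_YIELD_KT * JOULES_PER_KILOTON_TNT
test_energy_j = ASSUMED_TOTAL_TEST_YIELD_KT * JOULES_PER_KILOTON_TNT

print(f"heat gain since 1998:   {heat_gain_j:.2e} J")
print(f"assumed testing energy: {test_energy_j:.2e} J")
print(f"ratio: about {heat_gain_j / test_energy_j:,.0f} to 1")
```

Even if the assumed testing total were wrong by a factor of ten, the ratio would still be in the thousands, which is consistent with the reply that bomb heat is insignificant here.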

-

Stardustoz at 11:59 AM on 7 August 2014: A Relentless Rise in Global Sea Level

First of all, I'd like to thank you all for the information you provide here on a daily basis. Second, I'd like to say that I am in no way scientific, nor do I have a degree in the sciences. However, I do have a very inquisitive mind, especially when it comes to the effects that human interactions have on the Earth. The question I wish to pose in regards to sea level rise and warmth is whether the large-scale nuclear testing that has been conducted at sea over the years has contributed, and whether such anthropogenic factors are considered in the models. Would this have a significant impact on sea temperatures and sea level rise? Again, please forgive me if this question is a silly one to ask here.

-

Bob Loblaw at 10:56 AM on 7 August 2014: A Relentless Rise in Global Sea Level

r.pauli:

Yes, warmer air is capable of holding more moisture. The relationship is roughly exponential.

At cold temperatures (say, -30C), the air holds little moisture and large changes in temperature don't change that capacity by much. You don't see much snow falling at cold temperatures, because there isn't much moisture and you can't get much out for a given decrease in temperature.

At warmer temperatures (say, +30C), the capacity is much higher, and small changes in temperature make a much bigger difference. A little bit of cooling leads to significant condensation, which leads to thick clouds and lots of potential for heavy rain.
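The contrast between -30C and +30C can be illustrated with a Magnus-type approximation for saturation vapour pressure. This is a minimal Python sketch: the specific coefficients are one commonly used set for saturation over liquid water and are an assumption here, as is the function name. Over ice at -30C the true value would be somewhat lower still.

```python
import math


def saturation_vapour_pressure_hpa(temp_c):
    """Approximate saturation vapour pressure over liquid water, in hPa.

    Magnus-type formula; the coefficients are an assumed, commonly used set.
    """
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


for temp_c in (-30.0, 30.0):
    e_s = saturation_vapour_pressure_hpa(temp_c)
    gain = saturation_vapour_pressure_hpa(temp_c + 1.0) - e_s
    print(f"{temp_c:+5.0f} C: e_s = {e_s:6.2f} hPa, +1 C adds {gain:.2f} hPa")
```

With this approximation the capacity at +30C is dozens of times larger than at -30C, and one degree of warming or cooling shifts it by a correspondingly larger absolute amount, which is why a little cooling of warm, moist air can wring out so much water.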

-

michael sweet at 10:37 AM on 7 August 2014: Error identified in satellite record may have overestimated Antarctic sea ice expansion

The NSIDC discusses this error in their current sea ice report (scroll to the end). They say their data was unaffected by the reported error. The NSIDC seems to feel that it was a small error and that other data, including their own, confirm the amount of Antarctic sea ice. Antarctic sea ice has increased over the past three years. The question is whether it is a long-term gain or just a short-term fluctuation.

-

scaddenp at 10:28 AM on 7 August 2014: A Relentless Rise in Global Sea Level

The atmosphere isn't a very large reservoir. On the other hand, moving enough water from sea to land can indeed lower sea level, as it did in 2011.

-

Falk at 09:12 AM on 7 August 2014: Nigel Lawson suggests he's not a skeptic, proceeds to deny global warming

Thank you Rodger @19 and Tom @20 for both your answers. I knew somebody would notice the 'roughly' in my doubling. I did too, but didn't know how to edit.

I indeed did not notice that it was a stacked graph. Thanks for pointing it out. And it makes sense to take the Earth's whole surface into account; I just thought that 0.44 W felt too small (with 21e22 J instead of 35e22 J even more so) and I didn't know if it was a valid estimate.

Tom Curtis @20: I really was thinking about the ~2 W/m^2 for anthropogenic causes. Thanks for the brief summary of the feedback effects; that explains the difference in my head. By the way, step three came from the mistaken thought about the Sun's incidence on the Earth's projected area, but as you pointed out the energy imbalance is mainly due to the insulating effect of greenhouse gases and not in combination with direct sunlight passing through them.

-

r.pauli at 09:00 AM on 7 August 2014: A Relentless Rise in Global Sea Level

I thought warmer air holds more moisture. Wouldn't increased atmospheric heating carry water vapor sufficient to affect sea level?

If we have deluge rainstorms that can release many inches of water, wouldn't there be some quantifiable increase of water removed from oceans?

Moderator Response: [Rob P] - The caption in Figure 2 does mention that the comparatively small increase in atmospheric water vapour is included in the calculations of Cazenave et al (2014). So this effect, albeit small so far, is accounted for.

As for your 2nd question, yes, that certainly seems likely and is something I've mentioned in previous posts - these fluctuations could increase in magnitude as the Earth grows warmer. The atmosphere, in the absence of changes in the large-scale circulations, should be able to remove and dump more water on land during La Nina-dominant (negative Interdecadal Pacific Oscillation) periods, and during La Nina events themselves.

In the last 4-5 years the magnitude of the year-to-year variation in global sea level seems to have gone up quite a few notches - which suggests that the large-scale circulations are playing a role as well.

-

longjohn119 at 06:12 AM on 7 August 2014: Harvard historian: strategy of climate science denial groups 'extremely successful'

These same Paid Political Propagandists were also involved in the denial of Acid Rain and its causes (like mercury and CO2, it came from burning coal) as well as denial of the Ozone Hole and CFCs' contribution to it.

-

BojanD at 04:54 AM on 7 August 2014: Error identified in satellite record may have overestimated Antarctic sea ice expansion

As for the mainstream result, I was of course referring to the 'corrected' data sets, so the word 'still' was indeed unfortunate. It's tough to get to probabilities, but I can do this.

In favour:

- other researchers obtained similar results

- (minor due to overlap) algorithm was already compared to another one with negative results

Not in favour:

- (minor since models don't work well for Arctic sea ice, too) models predicted the trend, but not as steep

- the change to the algorithm was made and reverted inadvertently, which is kind of weird, and was not detected by other researchers. So this kind of weakens the first bullet.

IMO it's likely (more than 68%) that current mainstream results reflect the real trend or that the error, if found, will turn out to be a minor one.

-

Paul D at 04:44 AM on 7 August 2014: Scientists lambast The Australian for misleading article on deep ocean cooling

It does seem that many newspapers today are willing to lie and fabricate 'truths' to further a political agenda when it comes to climate change.

Let's not forget that national newspapers are not interested in pure news; they all have an agenda. Every inch of page space has a 'meaning' and a message, even when an opposing view to the paper's ideology is expressed. The problem is at the top, with the agenda of the editor and owners.

In the context of democracy and politics this probably works to the advantage of our communities. But when it comes to facts and science, it clearly fails us all.

-

Donny at 03:40 AM on 7 August 2014: 2014 SkS Weekly News Roundup #30A

It is an incredible stretch to link rain storms (which may not even be related to global warming, let alone man's influence on the warming) to Chicago's failure to maintain its own infrastructure. Maybe someone should suggest spending tax dollars on things that matter... like controlling E. coli.

Moderator Response: [Rob P] - The increase in heavy downpours in a warming world has long been expected based on the Clausius-Clapeyron relation, i.e. a warmer atmosphere can hold more moisture and thus when it rains, bursts of rain tend to be heavier.

As the article points out there has been a 20% increase in these heavy downpours in the midwest USA. But this is, obviously, a global phenomenon - see Westra et al (2013) - Global Increasing Trends in Annual Maximum Daily Precipitation, who write:

"Furthermore, there is a statistically significant association with globally averaged near-surface temperature, with the median intensity of extreme precipitation changing in proportion with changes in global mean temperature at a rate of between 5.9% and 7.7% K−1, depending on the method of analysis. This ratio was robust irrespective of record length or time period considered and was not strongly biased by the uneven global coverage of precipitation data."
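The Clausius-Clapeyron scaling mentioned above can be checked with a rough calculation. The sketch below is only an illustration: it uses the Magnus approximation for saturation vapour pressure over water (an assumption made here, not something taken from the article or from Westra et al), and the function names are hypothetical. At typical surface temperatures it gives an increase of roughly 6 to 7% per kelvin, which is the same order as the rates quoted above.

```python
import math


def saturation_vapour_pressure_hpa(temp_c):
    """Approximate saturation vapour pressure over water in hPa.

    Uses the Magnus approximation (assumed here for illustration);
    temp_c is the air temperature in degrees Celsius.
    """
    return 6.1094 * math.exp(17.625 * temp_c / (temp_c + 243.04))


def fractional_increase_per_kelvin(temp_c):
    """Fractional rise in saturation vapour pressure for 1 K of warming."""
    warmer = saturation_vapour_pressure_hpa(temp_c + 1.0)
    base = saturation_vapour_pressure_hpa(temp_c)
    return warmer / base - 1.0


if __name__ == "__main__":
    for temp_c in (0.0, 15.0, 30.0):
        rate = 100.0 * fractional_increase_per_kelvin(temp_c)
        print(f"{temp_c:5.1f} C: about {rate:.1f} % more moisture capacity per K")
```

Note that this only describes how much moisture saturated air can hold; how rainfall extremes actually respond also depends on atmospheric dynamics.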

SkS will have a rebuttal to your myth (which is not uncommon) in the near-future.

-

Tom Curtis at 03:27 AM on 7 August 2014 Nigel Lawson suggests he's not a skeptic, proceeds to deny global warming

Falk @18, the Earth's surface area is the area of an oblate spheroid. The area of a disc of the same diameter as the Earth's equatorial diameter is 1.27 x 10^14 m^2, ie, approx 1/4 of the Earth's surface area. Therefore your step three, which attempts to compensate for the difference between a circular and a spherical surface, is double counting.

I am unsure as to the point of your step two. Most of the change in the energy balance is due to increased greenhouse effect reducing outward radiation, and an increase in temperature increasing outward radiation. Both of these effects occur approximately equally over the whole globe, so there is no need to determine the hypothetical case where it occurs only on one side of the globe.

I assume you are trying to reconcile the global energy imbalance (average of 0.44 W/m^2 over the last fifty years) with the change in forcing due to anthropogenic causes (around four times that). The difference is because the change in forcing is the calculated effect of changes in atmospheric composition (primarily) since 1750 on the assumption that there are no temperature changes at the Earth's surface. In fact there have been temperature changes, which have increased outgoing radiation. Therefore the expected energy balance is forcing minus λ times the temperature change, where λ is a linear factor representing the effects of feedbacks. That is calculated to be around 0.5-1 W/m^2, and calculated values lie comfortably within the error margins of observed values.
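Written as an equation, the balance described in the paragraph above might look like the following. The symbols N, F and ΔT are labels chosen here for illustration and do not appear in the comment; only λ comes from the original wording.

```latex
N \approx F - \lambda \, \Delta T
```

Here N would be the net energy imbalance at the top of the atmosphere (W/m^2), F the change in forcing since 1750 (W/m^2), ΔT the change in surface temperature (K), and λ the linear feedback factor (W/m^2 per K).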

-

MA Rodger at 03:13 AM on 7 August 2014 Error identified in satellite record may have overestimated Antarctic sea ice expansion

(1) On what do you base your view that it is a “fact that (the) mainstream result is probably still correct”? Note that using the word “still” implies it has remained correct. Also, what levels of probability do you consider to be factual (as not placing some bound on it would make the statement meaningless)?

(2) I also note that you seem to be presuming that I am signed up to the error (which I think we can agree exists) as having been fixed. On what do you base such a presumption?

You'll likely agree it best to clear this matter up before becoming embroiled in the explanation of an actual enigma with all that that may entail.

-

MA Rodger at 03:04 AM on 7 August 2014 Nigel Lawson suggests he's not a skeptic, proceeds to deny global warming

Falk @18.

Two points for you.

No 3 is not "roughly another doubling" but exactly a doubling. The area of a sphere is 4πr² and the area of a disc is the well-known πr².

Your initial 35e22J estimate appears to be based on a misinterpretation of the graph and thus too high. It is a "stacked" graph, thus the sum of each element can be directly read from the graph. So about 21e22J would be nearer the mark. In my book, the graph is a bit on the schematic side as Ocean Heat Content fell 1960-70 if you plot out the usual Levitus 0-2000m data.
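Both points can be checked with a few lines of arithmetic. This is a minimal sketch, not a definitive calculation: the mean Earth radius is an assumed round value, the 50-year duration and total surface area are the figures from Falk's comment, and the variable names are hypothetical.

```python
import math

# Assumed mean Earth radius in metres (the ratios below do not depend on it).
EARTH_RADIUS_M = 6.371e6

sphere_area = 4.0 * math.pi * EARTH_RADIUS_M ** 2   # whole surface, 4*pi*r^2
hemisphere_area = sphere_area / 2.0                  # half the surface
disc_area = math.pi * EARTH_RADIUS_M ** 2            # projected disc, pi*r^2

# Going from the half-sphere to the disc halves the area, so the
# power per square metre doubles exactly.
hemisphere_to_disc = hemisphere_area / disc_area
sphere_to_disc = sphere_area / disc_area

# Revised energy estimate read from the stacked graph (about 21e22 J),
# spread over Falk's 50 years and total surface area.
REVISED_ENERGY_J = 21e22
FIFTY_YEARS_S = 1.577e9
TOTAL_SURFACE_M2 = 5.1e14
revised_flux = REVISED_ENERGY_J / FIFTY_YEARS_S / TOTAL_SURFACE_M2

if __name__ == "__main__":
    print(f"hemisphere / disc = {hemisphere_to_disc:.3f}")
    print(f"sphere / disc     = {sphere_to_disc:.3f}")
    print(f"revised whole-surface flux = {revised_flux:.2f} W/m^2")
```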

-

ubrew12 at 02:19 AM on 7 August 2014 Scientists lambast The Australian for misleading article on deep ocean cooling

"The article by Graham Lloyd will likely leave a mis-impression"

What's with the kid-gloves? How hard is it to say Lloyd lied? His first sentence makes two claims neither of which is true. How likely is this unintentional? His paper should be sued and he should be fired. Until this happens, this 'Pied Pipering' of society will lead to its ruination. At this point, what he's doing is criminal.

(-snip-) People like Lloyd are actually encouraged by the meekness with which society responds to their outright fabrications on behalf of their power structure. Make an example of him and his publisher that truth matters, or they will happily whistle our children to the cliff and bid them jump, and see in that their own obvious superiority.

Moderator Response:[JH] Offensive personal opinion deleted.

-

Falk at 23:57 PM on 6 August 2014 Nigel Lawson suggests he's not a skeptic, proceeds to deny global warming

Hi everyone!

I am not a climate scientist but have been interested on a personal level in the research going on for some time now. I like reading (and have been for a while) the discussions on this site even though I sometimes get annoyed by the sceptics/alarmist fights.

Anyway, I finally registered because every time I see the figure posted in this blog post above I ask myself if one of the following estimates is somewhere near the 'real' thing :)

If I sum up the total energy accumulated in all three regions I get something like a 35e22 J (or W*s) increase over 50 years (1960-2010). If I just divide this by the time of 1.577e9 s (50y in seconds) and by:

1. the Earth's total surface of 5.1e14 m^2, I get a net power per square meter of 0.44 W/m^2 that is needed over 50 years to deposit the heat.

2. half the Earth's surface, as only one half is hit by the sun at a given time, I get double the power per square meter needed, with 0.87 W/m^2

3. the projected area of the Earth (aka circle of Earth's radius) with an area of 1.27e14 m^2, which is roughly another doubling to 1.75 W/m^2
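The three estimates above can be reproduced in a few lines. This is just a sketch of the arithmetic as described, using only the numbers given in this comment; the function and dictionary names are hypothetical.

```python
# Figures taken from the comment above.
ENERGY_J = 35e22          # heat accumulated 1960-2010, as read from the graph
FIFTY_YEARS_S = 1.577e9   # 50 years in seconds

AREAS_M2 = {
    "1. whole surface": 5.1e14,
    "2. half the surface": 5.1e14 / 2.0,
    "3. projected disc": 1.27e14,
}


def mean_flux_w_per_m2(energy_j, seconds, area_m2):
    """Average power per unit area needed to deposit energy_j over the period."""
    return energy_j / seconds / area_m2


fluxes = {
    name: mean_flux_w_per_m2(ENERGY_J, FIFTY_YEARS_S, area)
    for name, area in AREAS_M2.items()
}

if __name__ == "__main__":
    for name, flux in fluxes.items():
        print(f"{name}: {flux:.2f} W/m^2")
```

The replies in this thread treat the whole-surface figure as the one comparable to the global energy imbalance, since both the added greenhouse effect and the extra outgoing radiation act over the whole globe.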

If someone could just give me a quick response on whether this is complete nonsense or if one of them is a useful estimate I would appreciate it a lot. Thank you!

-

Mick9422 at 18:07 PM on 6 August 2014 A Relentless Rise in Global Sea Level

So many arguments for and against.

I have concerns, mainly due to the excessive amount of carbon now in the atmosphere compared to past low levels.

But then again, am I worried over nothing, and can the Earth's systems cope with these levels, and will all be well?

Who knows !!

One end of the argument is depicted by this cartoon . . . . .

http://cartoonmick.wordpress.com/editorial-political/#jp-carousel-891

Cheers

Mick

Moderator Response:[JH] Activated link.

-

Tom Curtis at 16:34 PM on 6 August 2014 Postma disproved the greenhouse effect

Thank you Glenn. Much appreciated. I am somewhat loath to pay twice for the same paper, as you can imagine.

-

PhilippeChantreau at 13:03 PM on 6 August 2014 Harvard historian: strategy of climate science denial groups 'extremely successful'

Matt, you'll find plenty of results by trying "NIPCC" (not NICPP). This organization is well known to SkS contributors, as is the grotesque parody of information with which they have tried to infect the public place.

-

wili at 12:01 PM on 6 August 2014 A Relentless Rise in Global Sea Level

Good presentation. But isn't the larger point that 20 years is not long enough to accurately chart anything? It's nice to be able to identify some of the factors that go into natural variation here. But really, 20 years?

Isn't it true that if you take a slightly longer time frame (and anything longer than 20 years would surely be more appropriate), that there has actually been an acceleration in the rate of global warming? 1990 or so actually seems to be something of an inflection point, with the rate twenty years before it being about half that of the rate over the following twenty years or so.

Moderator Response:[PS] Fixed link.

The length of time required to draw an inference about a trend depends on the amount of noise (variability) compared to the magnitude of the trend. 20 years is short for surface temperature but long for sea level.

[RH] Shortened link.
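The dependence of record length on noise versus trend can be sketched numerically. The example below is illustrative only: it assumes annual data with independent (white) noise and an ordinary least-squares fit, the trend and noise values are made-up unit-free numbers and not sea level or temperature data, and the function names are hypothetical. Real climate series are autocorrelated, which lengthens the record needed.

```python
import math


def trend_standard_error(noise_sd, n_years):
    """Standard error of an OLS slope for n equally spaced annual points.

    Assumes independent noise with standard deviation noise_sd;
    the sum of squared time deviations is n * (n^2 - 1) / 12.
    """
    return noise_sd * math.sqrt(12.0 / (n_years * (n_years ** 2 - 1)))


def years_to_detect(trend_per_year, noise_sd, z=2.0, max_years=500):
    """Shortest record for which the trend is at least z standard errors."""
    for n_years in range(3, max_years + 1):
        if abs(trend_per_year) >= z * trend_standard_error(noise_sd, n_years):
            return n_years
    return None  # not detectable within max_years


if __name__ == "__main__":
    # Hypothetical signal-to-noise cases: same trend, increasing noise.
    for noise_sd in (1.0, 5.0, 10.0):
        n = years_to_detect(trend_per_year=1.0, noise_sd=noise_sd)
        print(f"noise/trend ratio {noise_sd:4.1f}: about {n} years needed")
```

A series whose year-to-year noise is small relative to its trend needs only a short record, while one with large noise relative to its trend needs a much longer one.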

-

Glenn Tamblyn at 10:18 AM on 6 August 2014 Postma disproved the greenhouse effect

Tom

I have a copy of Conrath. If you want, I can email you a copy.

-

Tom Curtis at 08:09 AM on 6 August 2014 2014 SkS Weekly News Roundup #31B

Sorry, I picked up the wrong original quote. Here is the proper one:

"Few field observations to date demonstrate biological responses attributable to anthropogenic ocean acidification, as in many places these responses are not yet outside their natural variability and may be influenced by confounding local or regional factors. See also Box TS.7. Natural climate change at rates slower than current anthropogenic change has led to significant ecosystem shifts, including species emergences and extinctions, in the past millions of years."

(Bolded elided by M Thompson)

Also relevant are the two following from the Executive Summary of Chapter 6:

"Rising atmospheric CO2 over the last century and into the future not only causes ocean warming but also changes carbonate chemistry in a process termed ocean acidification (WGI, Chs. 3.8.2, 6.4.4). Impacts of ocean acidification range from changes in organismal physiology and behavior to population dynamics (medium to high confidence) and will affect marine ecosystems for centuries if emissions continue (high confidence). Laboratory and field experiments as well as field observations show a wide range of sensitivities and responses within and across organism phyla (high confidence). Most plants and microalgae respond positively to elevated CO2 levels by increasing photosynthesis and growth (high confidence). Within other organism groups, vulnerability decreases with increasing capacity to compensate for elevated internal CO2 concentration and falling pH (low to medium confidence). Among vulnerable groups sustaining fisheries, highly calcified corals, mollusks and echinoderms, are more sensitive than crustaceans (high confidence) and fishes (low confidence). Trans-generational or evolutionary adaptation has been shown in some species, reducing impacts of projected scenarios (low to medium confidence). Limits to adaptive capacity exist but remain largely unexplored. [6.3.2, CC-OA]

Few field observations conducted in the last decade demonstrate biotic responses attributable to anthropogenic ocean acidification, as in many places these responses are not yet outside their natural variability and may be influenced by confounding local or regional factors. Shell thinning in planktonic foraminifera and in Southern Ocean pteropoda has been attributed fully or in part to acidification trends (medium to high confidence). Coastward shifts in upwelling CO2-rich waters of the Northeast-Pacific cause larval oyster fatalities in aquacultures (high confidence) or shifts from mussels to fleshy algae and barnacles (medium confidence), providing an early perspective on future effects of ocean acidification. This supports insight from volcanic CO2 seeps as natural analogues that macrophytes (seaweeds and seagrasses) will outcompete calcifying organisms. During the next decades ecosystems, including cold- and warm-water coral communities, are at increasing risk of being negatively affected by ocean acidification (OA), especially as OA will be combined with rising temperature extremes (medium to high confidence, respectively). [6.1.2, 6.3.2, 6.3.5]"

My summary of the preceding post remains fair comment.

-

MattJ at 08:07 AM on 6 August 2014 Harvard historian: strategy of climate science denial groups 'extremely successful'

Yes, the disinformation campaign has been successful — if you call 'success' winning a policy determined to make the lives of your few generations of descendants absolute misery.

But it is about to get worse: I just saw an announcement on Quora of an even more shameful, bold fraud: the mysteriously and suspiciously named "NICPP", a new front group for The Heartland Institute, claiming scientific backing for many of the false criticisms of the ICPP that we have all heard before.

I also noticed that nothing comes up in the search here at Skeptical Science when I input "NICPP" or "nicpp". That ought to change pronto.

-

Tom Curtis at 07:58 AM on 6 August 2014 2014 SkS Weekly News Roundup #31B

Speaking of sticking to what is known, how about we start by quoting in context:

"A few studies provide limited evidence for adaptation in phytoplankton and mollusks. However, mass extinctions in Earth history occurred during much slower rates of change in ocean acidification, combined with other drivers, suggesting that evolutionary rates may be too slow for sensitive and long-lived species to adapt to the projected rates of future change (medium confidence)."

(Bolded sections elided by M Thompson)

Also from the summary on Ocean Acidification:

"Ocean acidification poses risks to ecosystems, especially polar ecosystems and coral reefs, associated with impacts on the physiology, behavior, and population dynamics of individual species (medium to high confidence). See Box TS.7. Highly calcified mollusks, echinoderms, and reef-building corals are more sensitive than crustaceans (high confidence) and fishes (low confidence), with potential consequences for fisheries and livelihoods (Figure TS.8B). Ocean acidification occurs in combination with other environmental changes, both globally (e.g., warming, decreasing oxygen levels) and locally (e.g., pollution, eutrophication) (high confidence). Simultaneous environmental drivers, such as warming and ocean acidification, can lead to interactive, complex, and amplified impacts for species."

(Bold in original)

So, to "stick with what is known" in M Thompson's version, you need to quote out of context, and ignore the IPCC's findings. That is not what I would call integrity.

-

Jutland at 07:55 AM on 6 August 2014 Postma disproved the greenhouse effect

Tom @85: that is a very, very helpful answer, thank you. The confusion was my own, not in any discussion with anyone else. I have been wondering where I might have got definition (2) from, and now think that I may have misinterpreted statements in texts online or in books, eg a statement like "IR photons escape the atmosphere at the tropopause" might have been misinterpreted in my mind as "the tropopause is the height at which IR photons escape." Oh well. On the other hand, I am feeling a bit chuffed (especially as a non-scientist) that I worked out that different frequency IR photons must escape the atmosphere at different altitudes.

-

MThompson at 07:19 AM on 6 August 2014 2014 SkS Weekly News Roundup #31B

Well said, DavidBird at comment #3. To maintain integrity we have to stick to what is known:

"Few field observations to date demonstrate biological responses attributable to anthropogenic ocean acidification, as in many places these responses are not yet outside their natural variability and may be influenced by confounding local or regional factors."

IPCC AR5 WGII p.9
