Comment Search Results

Search for marcott

Comments matching the search marcott:

- John F. Clauser: the latest climate science-denying physicist

Rob Honeycutt at 02:09 AM on 29 October, 2023

TWFA... "The climate would and will continue warming at this phase even if man never existed..."

Here, yet again, you literally have no clue what you're talking about but present something as if it were fact.

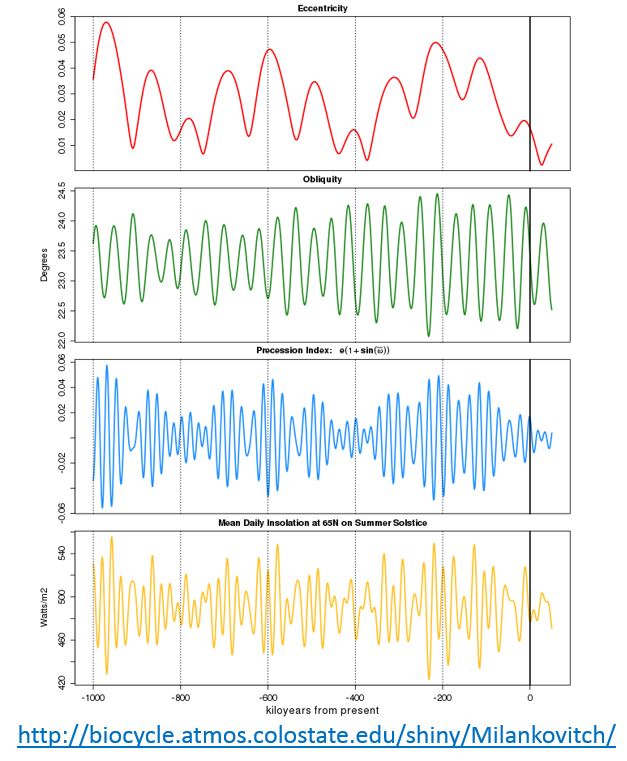

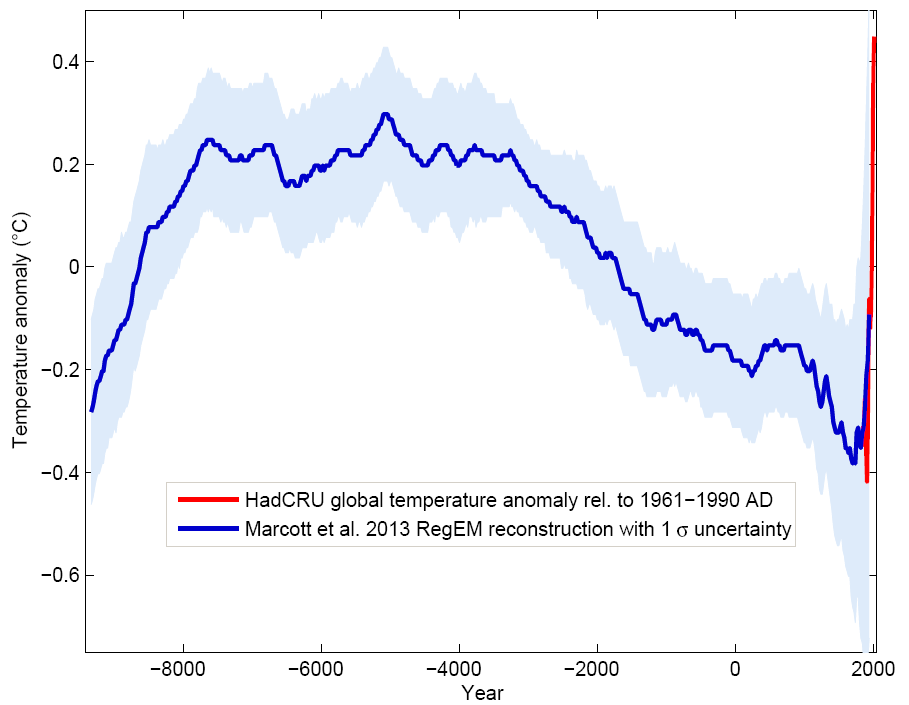

If you look at the paleo record it is very clear that the Earth was entering a cooling phase due to slow changes in orbital forcings. It's only when humans started burning fossil fuels and substantially altering surface albedo (deforestation, farming, etc) that the planet abruptly changed and entered a steep warming trend.

- Skeptical Science New Research for Week #9, 2020

nigelj at 06:30 AM on 7 March, 2020

MAR, "More directly addressing your question, the ice cores do show a small increase in CO2 levels over the last 8,000 years..."

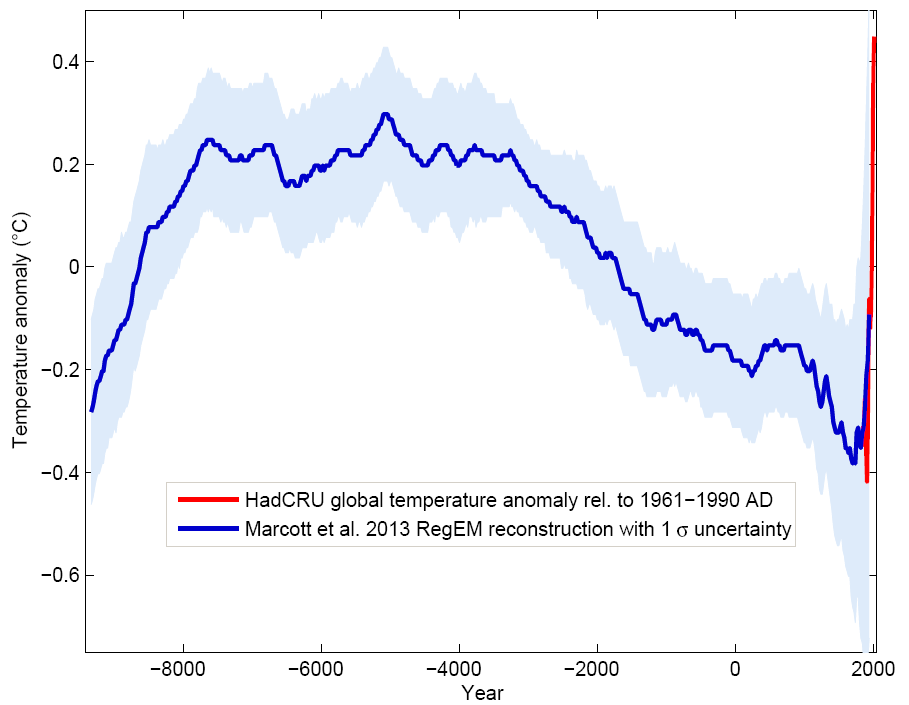

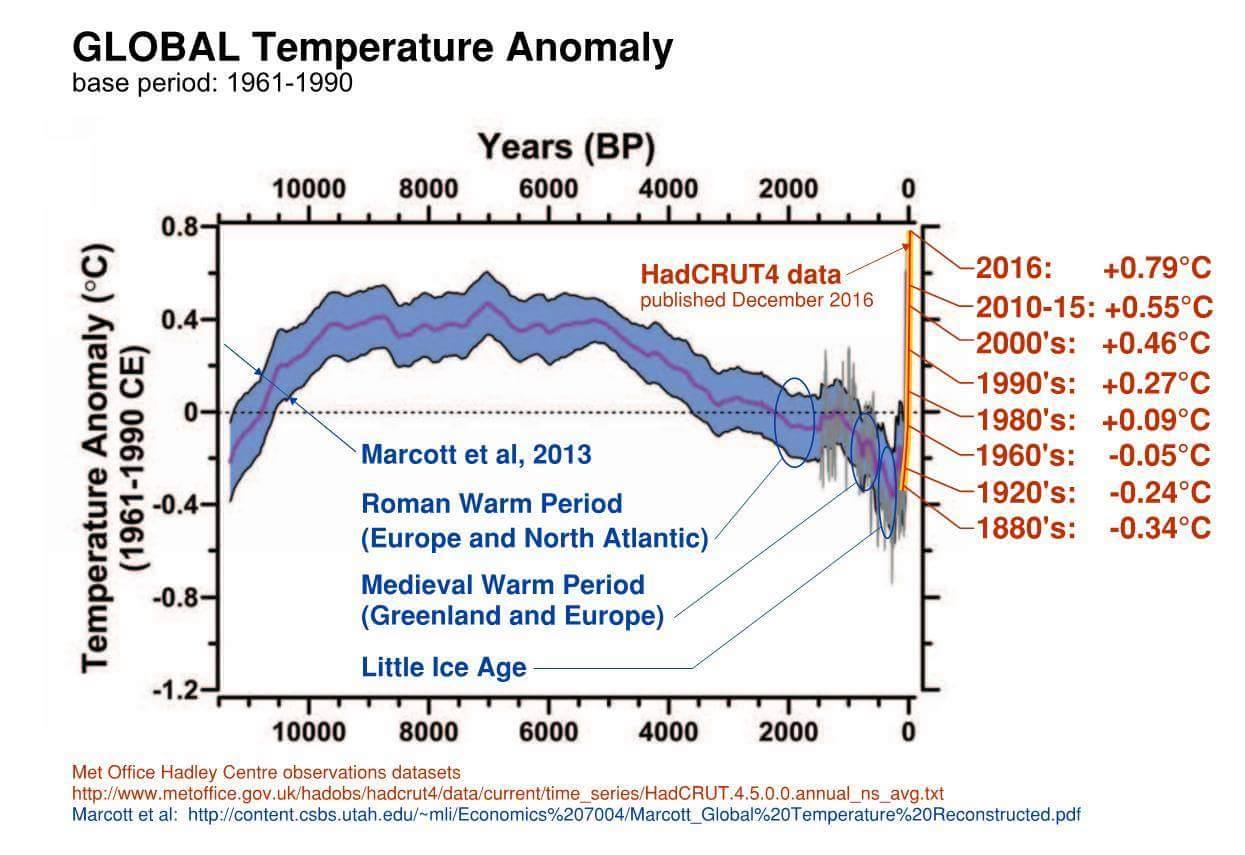

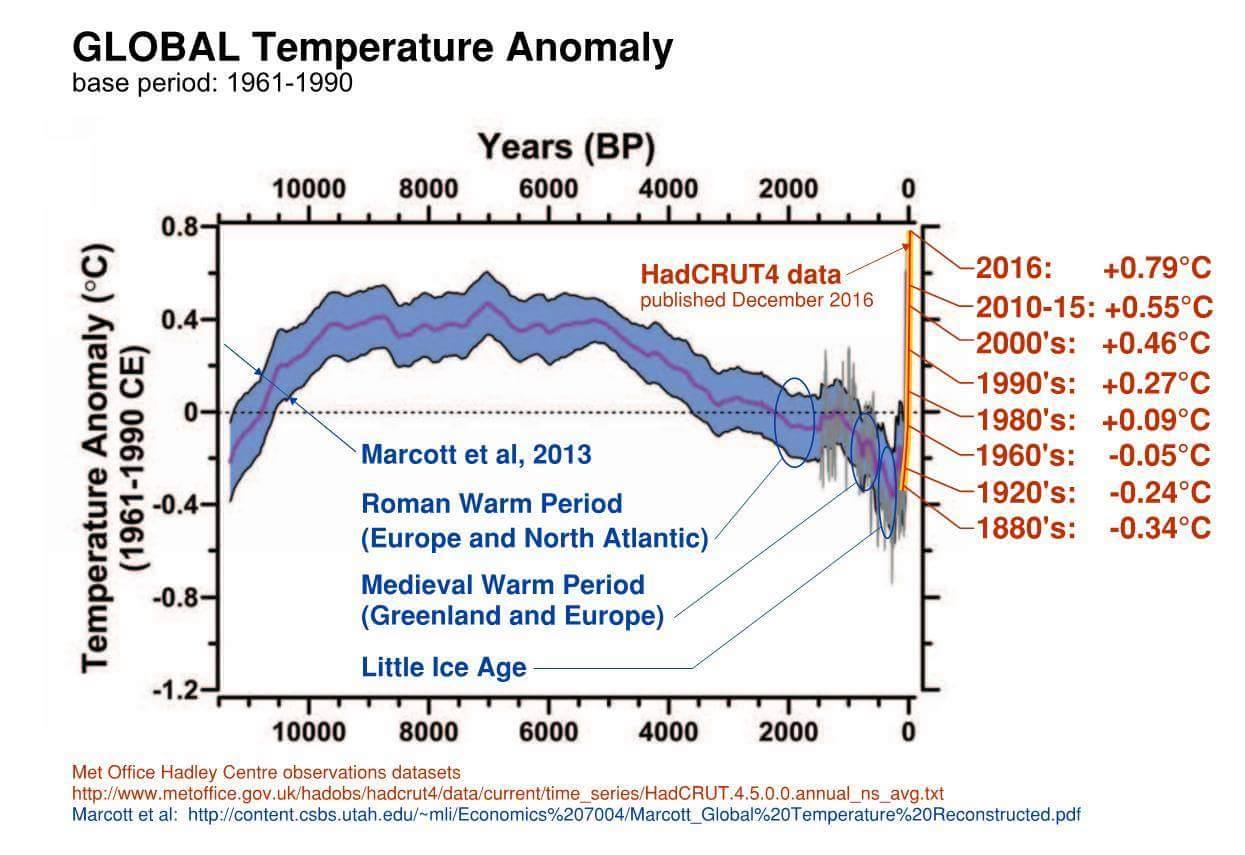

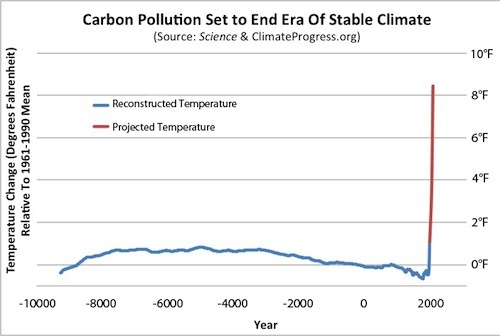

The Marcott study here shows global temperatures falling over about the last 3500 years until about 1900. So perhaps the Milankovitch cycle cancelled out the slow low-level rise of CO2 concentrations over the same period?

- Climate's changed before

MA Rodger at 19:35 PM on 23 July, 2019

TVC15 @760/761,

It is a smorgasbord of denialist assertions you present.

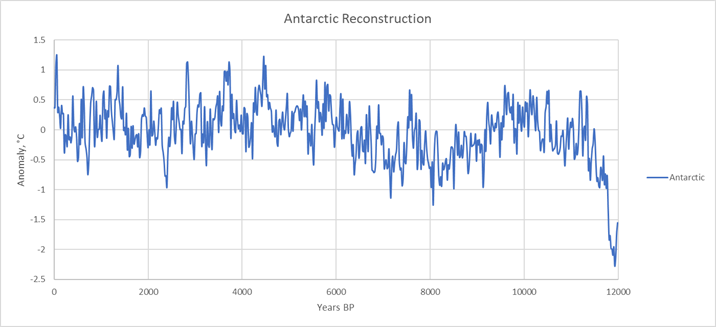

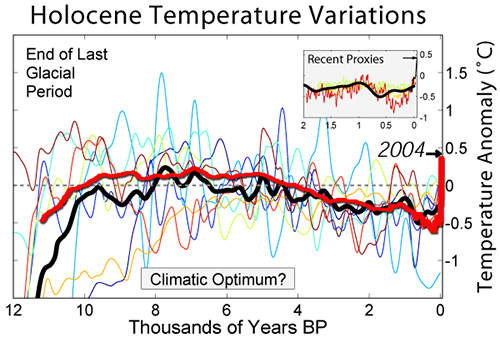

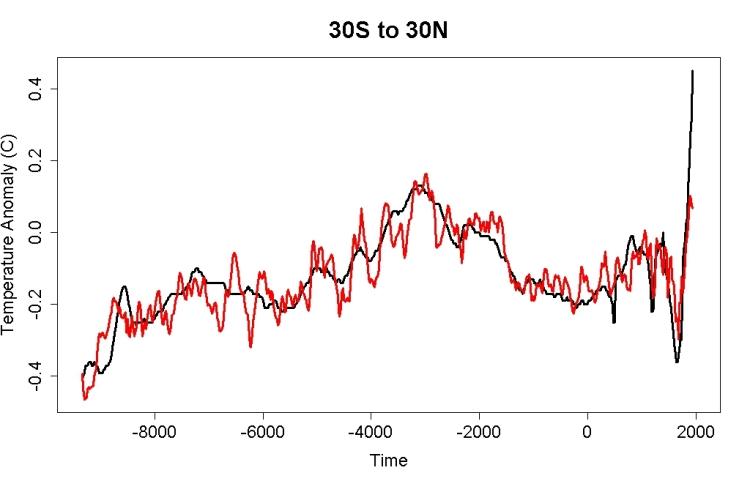

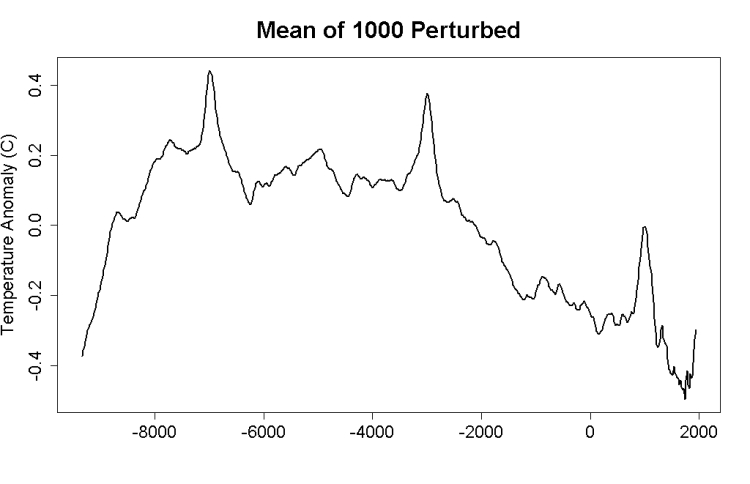

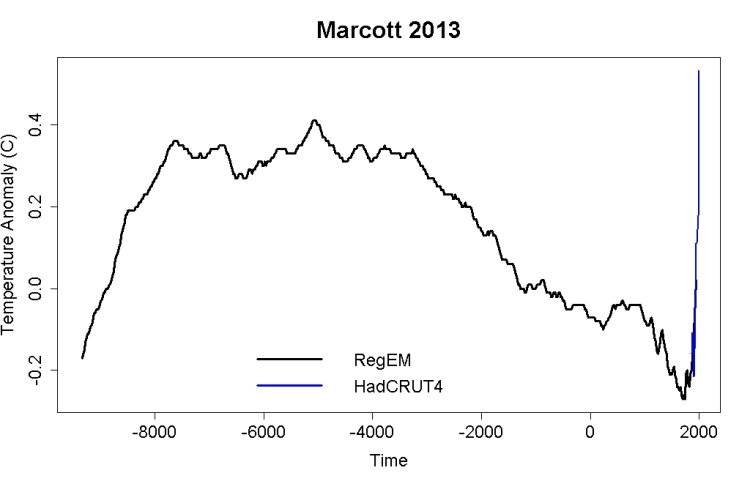

☻ The "Antarctic was warmer 1,000 years ago" assertion looks a little difficult to uphold in any way. There are reconstructions from ice core data (for instance Ciais et al (1994) fig 7) which show Antarctic temperatures over the last 10,000 years waggling about by a maximum of about a degree centigrade. (Note the Ciais et al graphic is sensibly using an 'average-over-the-last-5,000-years' as a datum.) The thermometer record (eg Berkeley Earth) shows recent warming of a similar amount (although there is a lot of variability in the warming depending where you are in Antarctica) so this evidence suggests it was colder in Antarctica 1,000 years ago. The image below is gleaned from a posting on the planet Wattsupia and appears to be based on data from Marcott et al (2013). The 1,000 years ago temperature again is shown as being colder.

☻ The interglacial sea level has been discussed before, introduced up-thread @715. It was shown that only two of the last 8 interglacials had higher SLR than today, not all eight. The SLR will mainly depend on how much of Greenland & Antarctica melts out, a process that stopped in this present interglacial 8,000 years ago, and AGW is the process that is doing that melting today.

☻ The "Earth is always warmer and wetter, never warmer and drier" assertion isn't correct. The missing word is 'atmosphere' which will be, as scaddenp @765 points out, wetter under the CC relationship. So if we have more wet in the atmosphere, will that translate firstly into more rain always falling on the earth beneath? Globally, apparently not. This NOAA graphic shows global temperature and global rainfall are not well aligned. (The graph is from here and is for precipitation over global land)

And secondly, as scaddenp @766 describes, even if rain and temperature were inexorably linked, that warmer atmosphere is demanding to be always wetter than it was when cooler and will thus be sucking more moisture right out of that very same land to re-charge its wetness.

- Climate's changed before

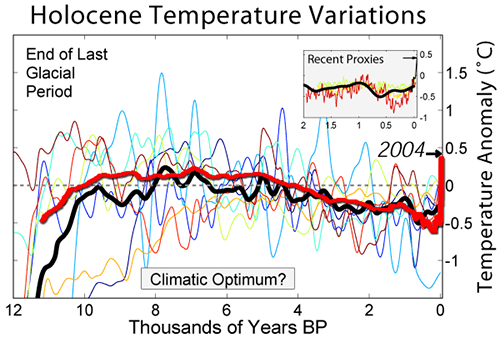

scaddenp at 06:13 AM on 4 April, 2019

TVC15 - I would say likely it is from here. The graphic itself has the source references for its data. In denier space, this usually goes with arguments that CO2 isn't related to temperature - ignoring all the other drivers of climate.

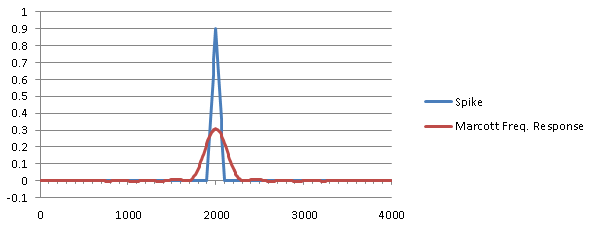

The NPR article was on the Marcott 2013 paper, extensively discussed here. Put Marcott into the search box on top right. A criticism is that the methodology may not capture high frequency temperature change. The usual denier take is to point to major spikes in the NH temperature record (eg Younger Dryas) associated with exit from glacials. There is some evidence of similar, anti-phased events in the SH record. These proxies do indeed indicate very rapid temperature change, but I am not aware of evidence for a global temperature change of that speed as opposed to regional change. The mechanism is disputed, but is associated with the end of glacial periods, so relevance to present climate is doubtful to say the least.

- Welcome to the Pliocene

Daniel Bailey at 00:31 AM on 25 August, 2018

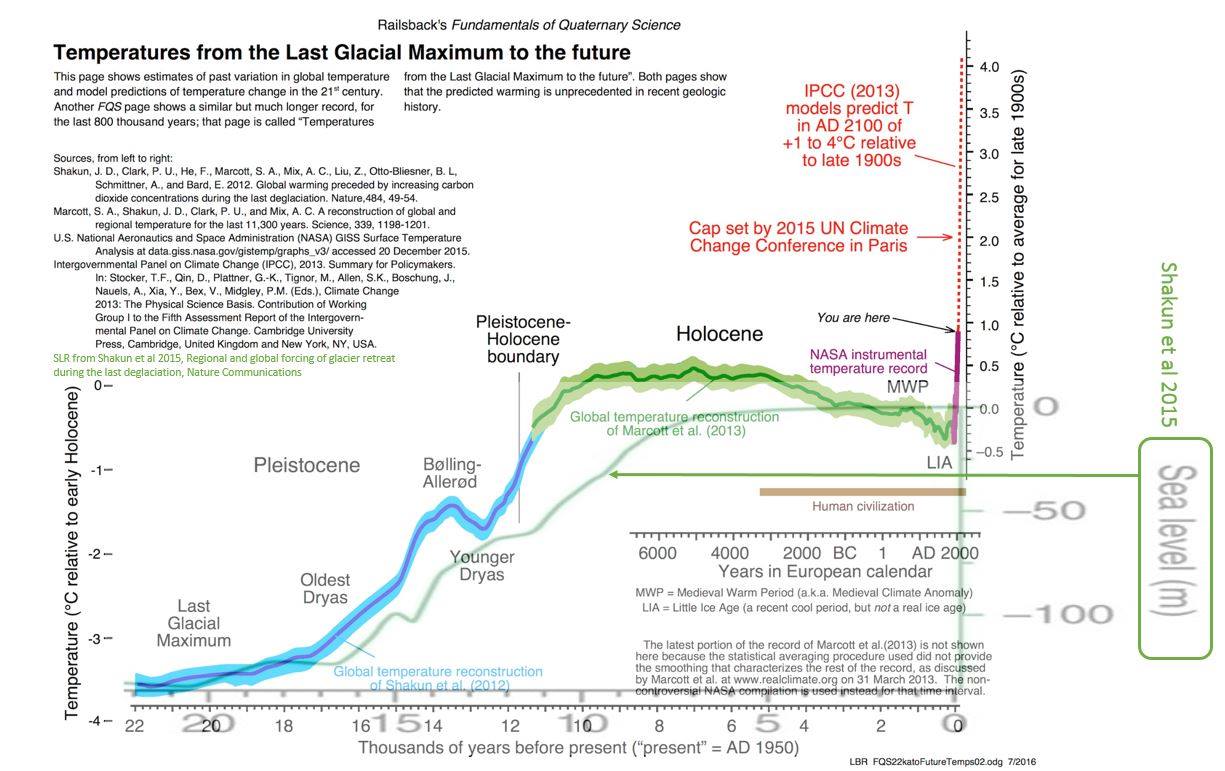

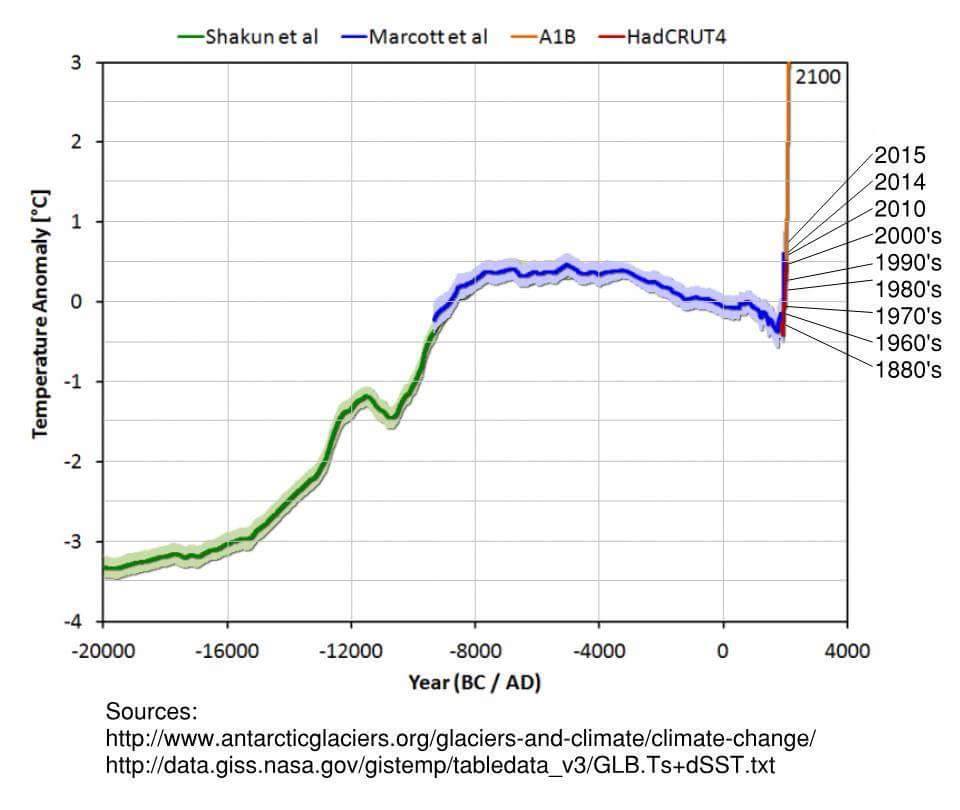

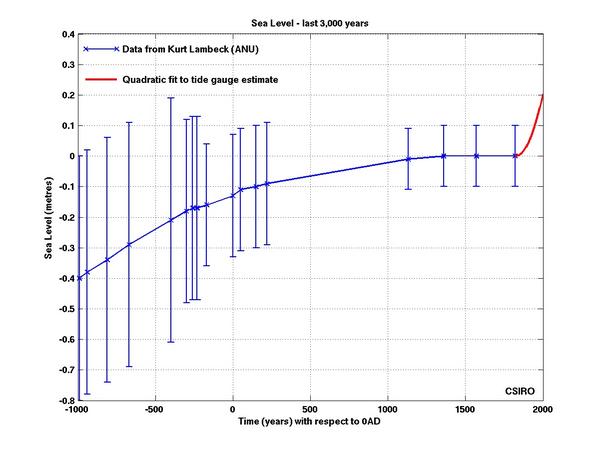

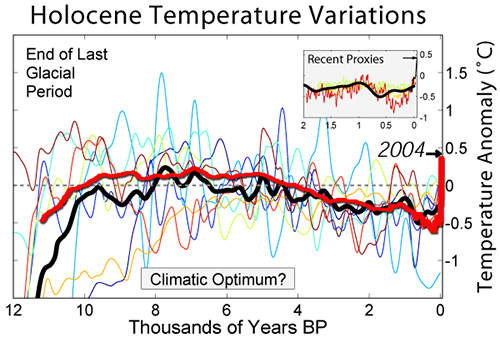

Orbital forcing peaked in the early Holocene and has declined since. Less energy went into melting ice, and into warming the oceans, slowing the rate of ice sheet mass losses and slowing the rising of sea levels.

Typically, when climate scientists try to understand some of the expected future effects of global warming and climate change, they first look to the past. And in looking to the past, we can use the example of the climate transition from the icy depths of the Last Glacial Maximum into our current Holocene Interglacial to guide us. From about 21,000 years Before Present (BP) to about 11,700 years BP, the Earth warmed about 4 degrees C and the oceans rose (with a slight lag after the onset of the warming) about 85 meters.

However, the sea level response continued to rise another 45 meters, to a total of 130 meters (from its initial level before warming began), reaching its modern level about 3,000 BP.

This means that, even after temperatures reached their maximum and leveled off, the ice sheets continued to melt for another 7,000-8,000 years until they reached an equilibrium with temperatures.

Stated another way, the ice sheet response to warming continued for 7,000-8,000 years after warming had already leveled off, with the meltwater contribution to global sea levels totaling 45 additional meters of SLR.

Which brings us to our modern era of today: over the past 100 years, global temperatures have risen about 1 degree C…with sea level response to that warming totaling about 150 mm.

Recently, accelerations in SLR and in ice sheet mass losses have been detected, which is what you’d expect to happen when the globe warms, based on our understanding of the previous history of the Earth and our understanding of the physics of climate.
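To put the figures quoted above side by side, here is a minimal sketch that turns them into average rates. It only uses the numbers stated in this comment (85 m between 21,000 and 11,700 BP, a further 45 m by about 3,000 BP, and about 150 mm over the past 100 years); the function name and the choice to average over each whole interval are assumptions for illustration, and a whole-interval average hides any faster or slower episodes inside it.

```python
def mean_rate_mm_per_year(rise_m: float, start_bp: float, end_bp: float) -> float:
    """Average sea level rise in mm/yr between two dates given in years BP."""
    return rise_m * 1000.0 / (start_bp - end_bp)


# Deglaciation: about 85 m of rise from 21,000 BP to 11,700 BP.
deglacial_rate = mean_rate_mm_per_year(85, 21_000, 11_700)

# Continued ice sheet response: a further 45 m, reaching modern level about 3,000 BP.
continued_rate = mean_rate_mm_per_year(45, 11_700, 3_000)

# Modern era: about 150 mm over the past 100 years.
modern_rate = 150 / 100

# Total rise since the Last Glacial Maximum, in metres.
total_rise_m = 85 + 45

print(f"deglacial: {deglacial_rate:.1f} mm/yr")
print(f"continued response: {continued_rate:.1f} mm/yr")
print(f"modern: {modern_rate:.1f} mm/yr")
print(f"total rise: {total_rise_m} m")
```

The point of the comparison is the long tail: on these numbers, the ice sheets kept adding several mm/yr for thousands of years after temperatures had leveled off.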

Sources for my SLR commentary:

- Shakun et al 2012 - Global warming preceded by increasing carbon dioxide concentrations during the last deglaciation

- Marcott et al 2013 - A Reconstruction of Regional and Global Temperature for the Past 11,300 Years

- Shakun et al 2015 - Regional and global forcing of glacier retreat during the last deglaciation

- Clark et al 2016 - Consequences of twenty-first-century policy for multi-millennial climate and sea-level change


- Sea level rise predictions are exaggerated

Bob Loblaw at 05:57 AM on 2 April, 2018

NorrisM:

I will try to separate three aspects of scientific study: observations, interpretations, and conclusions. Most of what I will be disagreeing with in your comments falls into the interpretation and conclusion categories.

I am not sure what gives you the idea that I am interested specifically in a lawyer's perspective - what I have been interested in seeing from you is a scientific argument that supports your position. That you tend to take a lawyerly approach to the discussion has been apparent, but I tend to see that as a bug, not a feature.

Michael Sweet has already pointed out how your argument seems to pick the low end of most available data. It has been pointed out to you in the past that this is not good risk management.

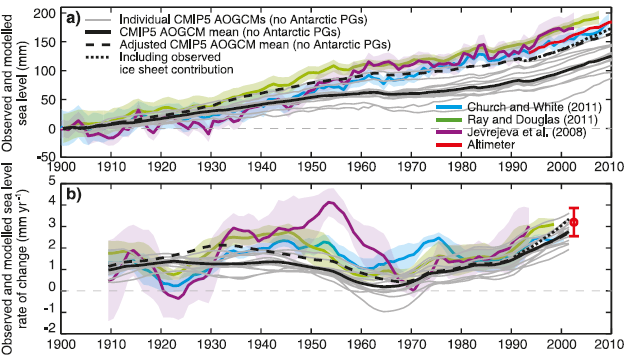

You comment on "the bump" from 1920-1950 in figure 3.14 of the Fifth Assessment. The figure shows results from three studies. The bump is particularly high in one of those studies: Jevrejeva et al. The RealClimate post comments on this, saying

"The only outlier set which shows high early rates of SLR is the Jevrejeva et al. (2008) data – and this uses a bizarre weighting scheme, as we have discussed here at Realclimate."

The RealClimate post's figure 3 provides both the sea level rise rates from the IPCC figure, and modelled values. The models tend to underestimate sea level rise, but have been improving (since previous assessment reports).

Please also note that in the IPCC report, figure 13.12, that different semi-empirical studies on sea level projections tend to give higher values if using the Jevrejeva data, and that even work by Jevrejeva gives results within the IPCC range. You need to have a scientific argument as to why you want to pay attention to the Jevrejeva bump, but discount the Jevrejeva projections. It looks like you are just choosing the answers you like.

Even if the 1920-1950 "bump" is not well explained, that is not a scientific argument as to why future projections are therefore wrong. We know a good deal less about past inputs than current, and that limits our ability to be sure of what happened historically. This has been discussed with you in the past. Uncertainty in historical sea level rise itself occurs because of the reliance on tide gauges. You allude to this in your post when you discuss the mid-ocean data that becomes available with satellite monitoring.

A lengthy discussion on models, data, etc. is hand-waved away with the paragraph:

"What this tells me is that there is a “theoretical” danger but so far we do not have any evidence of an actual retreat or the time frame over which this could occur. We cannot base our rational responses to AGW based upon theories which have not been supported with observational evidence."

This is basically a wholesale rejection of science. You basically seem to be rejecting any projections because they haven't happened yet, as there is no observational evidence. I consider this to be irrational. You may wish to reword this or provide further explanation.

In quoting p1159 of the IPCC report, you neglect to include the closing statement that says:

From 1993, all contributions can be estimated from observations; for earlier periods, a combination of models and observations is needed. Second, when both models and observations are available, they are consistent within uncertainties. These two advances give confidence in the 21st century sea level projections. The ice-sheet contributions have the potential to increase substantially due to rapid dynamical change (Sections 13.1.4.1, 13.4.3.2 and 13.4.4.2) but have been relatively small up to the present (Sections 4.4 and 13.3.3.2). Therefore, the closure of the sea level budget to date does not test the reliability of ice-sheet models in projecting future rapid dynamical change; we have only medium confidence in these models, on the basis of theoretical and empirical understanding of the relevant processes and observations of changes up to the present (13.4.3.2, 13.4.4.2).

I have chosen to bold parts of the quote.

- Your interpretation that the "bump" in the 1920-1950 period is a game-ender is not in agreement with the IPCC.

- Your opinion that historical sea-level data are independent of models ("theory") and are purely observational is not in agreement with the IPCC.

- Your interpretation that there is too much uncertainty to make projections is not in agreement with the IPCC.

- Your opinion that the only reasonable choice is to linearly extrapolate the historical trends is not in agreement with the IPCC.

You also comment about "...the average rate of 10 mm/yr during the deglaciation after the Last Glacial Maximum ...". You appear to think that this places some upper physical limit on rates of sea level rise. The rate of sea level rise is not a function of ice volume, it is a function of the rate of change of ice volume, which depends on the rate of climate change. The temperature rise projected for the remainder of the 21st century is far higher than anything that occurred at the end of the last glacial maximum.

All-in-all, you present little more than an argument from incredulity.

(Note: in lawyer-speak, I reserve the right to ask further questions regarding NorrisM's posts. This comment is limited by time available today.)

- From the eMail Bag: A Deep Dive Into Polar Ice Cores

David Kirtley at 08:54 AM on 29 January, 2018

Great comments/questions, qwertie. You said: "not a single climate scientist, including any of the contrarians (the 3%), has thought of any way that CO2 could disappear from the atmosphere as quickly as it has been added."

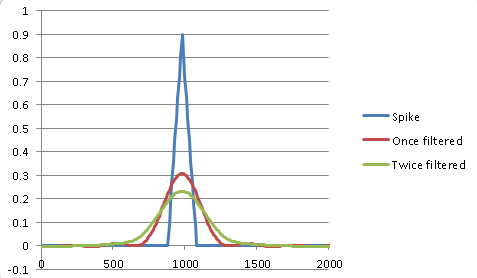

Exactly. Which is what Dr. Buizert was saying. A sudden spike in CO2 would leave a mark in the record because everything we know about the carbon cycle tells us that the spike wouldn't "quickly disappear". Also, a sudden CO2 spike would have other effects on the ice core records: they would show a spike in temperatures which are also measured in the ice cores.

Your skeptic, in your example, wants to just look at the ice core CO2 measurements in isolation, without taking into account what is known about the carbon cycle.

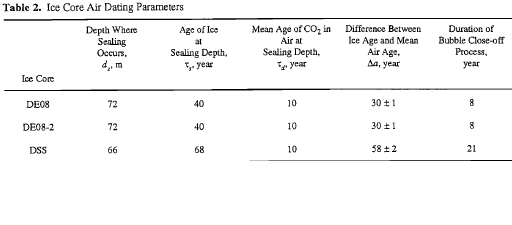

You said: "Unfortunately this article isn't clear enough to draw such a conclusion. One way it would be more clear is if it gave at least one example to demonstrate how to calculate the gas age distribution."

Finding the "age distribution" isn't a very straight-forward process. This is all rather complicated stuff and I confess not to have a complete grip on all of it...especially all of the math involved. But conceptually I think I understand it (mostly). I'll try to explain it, but you may want to check the papers yourself. I would suggest these (you can find the links to them above in my list of refs.): Etheridge et al. 1996, Trudinger et al. 1997, Marcott et al. 2014. Also this one not listed: Buizert et al 2015.

When I was corresponding with Dr. Buizert I asked him where I could find age distributions for the various ice cores. He said that they are better known for firn air but not for the older air trapped deeper and deeper in the ice core bubbles. However the Delta-ages of ice cores are easier to find (see Figure 6 in OP), hence his "short-cut" for finding the age distributions: Δ-age x 5% = age dist.

Here is how these various things are related:

ice age - gas age = Δ-age

"Ice age" is easy to find: it can be found simply by counting the layers in the ice core, like counting tree rings. And it is possible to tie this count into our calendar years by noting specific points in the core, like a layer of volcanic ash from an especially large and known volcanic eruption.

For the "gas age", we know that the air in bubbles is younger than the surrounding ice (described in the OP). But how much younger? Well that depends on the conditions (amount of snow accumulation and temperature) at the ice core location, and how long it takes for the bubbles to get "locked in" through the lock in zone. So to find the "gas age" scientists work backwards from the equation above, they start by calculating the Δ-age.

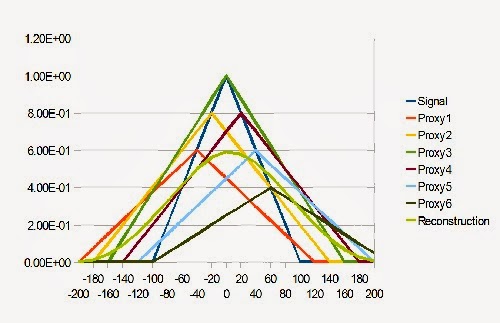

They find the Δ-age by using mathematical models using the specific firn conditions of the ice core location. With the modelled Δ-age and the known ice age, they can then use the equation to find the gas age. Here is an example of this technique, as described in the Marcott et al. 2014 paper from my ref. list:

"Gas ages were obtained by subtracting a modelled ice-age/gas-age difference (Δ-age) from the ice-age timescale. Δ-age was calculated using a dynamical firn-densification model with heat diffusion. The modelling is constrained by measurements of δ15N of N2, a proxy for past firn column thickness."

Let's say, for example, that our firn model gave us a Δ-age for the Law Dome ice core of 30 years. That means there would be a 30 year difference between the ice age and the gas age. Look at Figure 4 of the firn cross-section. The 30 year Δ-age is basically the time it takes for the top snow layer to get buried and eventually reach the close-off depth. At that depth any air in the ice is locked into bubbles and is closed off from further contact with the air in the firn above which is open to the atmosphere.

Back to Law Dome, a 30 year Δ-age is the actual value found in Etheridge et al. 1996. Here is a table from that paper:

Notice the last column: "Duration of Bubble Close-off Process". This is another way of describing the "age distribution" of the gas within the bubbles. Look again at Figure 4 of the firn cross-section. The bubble close-off process takes place in the "lock in zone". The length of time it takes for the air to become finally trapped in bubbles in the lock in zone defines the "age distribution". This is different for every ice core location, again, depending on snow accumulation, temperature, firn density, etc.

The time in the lock-in zone is roughly 10% of the time span given by the Δ-age. (But, again, this rough approximation would be different for each different ice core location.) So yes, the age distribution of the gas is directly related to the Δ-age. Hence, the quote from the Mitchell paper:

"The bubbles are known to close off over a vertical ice age range [the Lock-in Zone] that corresponds to roughly 10% of the gas age-ice age difference, which intuitively should result in a gas age distribution width corresponding to 10% of the gas age-ice age difference [the Δ-age]. However, the observed smoothing of the trapped gas record often appears to be less than would be expected from this rate of gradual bubble closure."

And my further comment: "So rather than 10%, a good rule-of-thumb, according to Dr. Christo Buizert, is to take 5% of the Δ-age to find the age distribution."

Back to Law Dome, again. Ten percent of the 30 year Δ-age would give an age distribution of only 3 years, not the 8-15 years I alluded to from Figure 5. Well, like I said, these are rough approximations.
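The relationships above can be sketched in a few lines. This is only an illustration of the two rules of thumb quoted in this comment (10% and 5% of the Δ-age) and of the rearranged equation "gas age = ice age − Δ-age"; the function names and the 1,000-year ice age are hypothetical, and a real Δ-age comes from a firn-densification model, not from this arithmetic.

```python
def gas_age(ice_age_years: float, delta_age_years: float) -> float:
    """Rearranged from: ice age - gas age = delta-age."""
    return ice_age_years - delta_age_years


def age_distribution_width(delta_age_years: float, fraction: float) -> float:
    """Rule-of-thumb width of the gas age distribution as a fraction of delta-age."""
    return delta_age_years * fraction


# Delta-age for Law Dome as quoted above from Etheridge et al. 1996.
LAW_DOME_DELTA_AGE = 30.0  # years

width_10_percent = age_distribution_width(LAW_DOME_DELTA_AGE, 0.10)
width_5_percent = age_distribution_width(LAW_DOME_DELTA_AGE, 0.05)

# Hypothetical layer whose ice was dated to 1,000 years by layer counting.
example_gas_age = gas_age(1000.0, LAW_DOME_DELTA_AGE)

print(f"10% rule: about {width_10_percent:.1f} years")
print(f"5% rule: about {width_5_percent:.1f} years")
print(f"gas age for 1,000-year-old ice: {example_gas_age:.0f} years")
```

Both rules give a width of a few years for Law Dome, which is why they should be read as ballpark figures next to the 8-15 years suggested by Figure 5.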

The main point is to simply realize that the date of the CO2 in ice core bubbles cannot be pinpointed to a single year in the past. There is an age distribution or range of years that the gas is dated to. It may be very difficult to determine the exact width of a given ice core's age distribution, i.e. the span of years in the distribution, but we can get in the right ballpark.

- Climate's changed before

michael sweet at 05:36 AM on 11 September, 2017

Norrism,

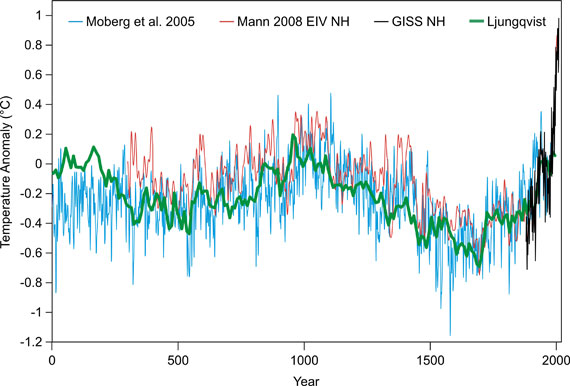

This is the graph for the Northern Hemisphere from Mann et al 2008:

There might be a more recent paper that I did not find. Mann has done the analysis with no tree ring data and it is the same as with the tree ring data.

It is usually possible to get free copies of papers if you Google them (I found this paper using Google). Apparently it is only for the Northern Hemisphere (as was the other graph I posted). More data is available for the Northern Hemisphere so Mann only did the Northern Hemisphere in 1998.

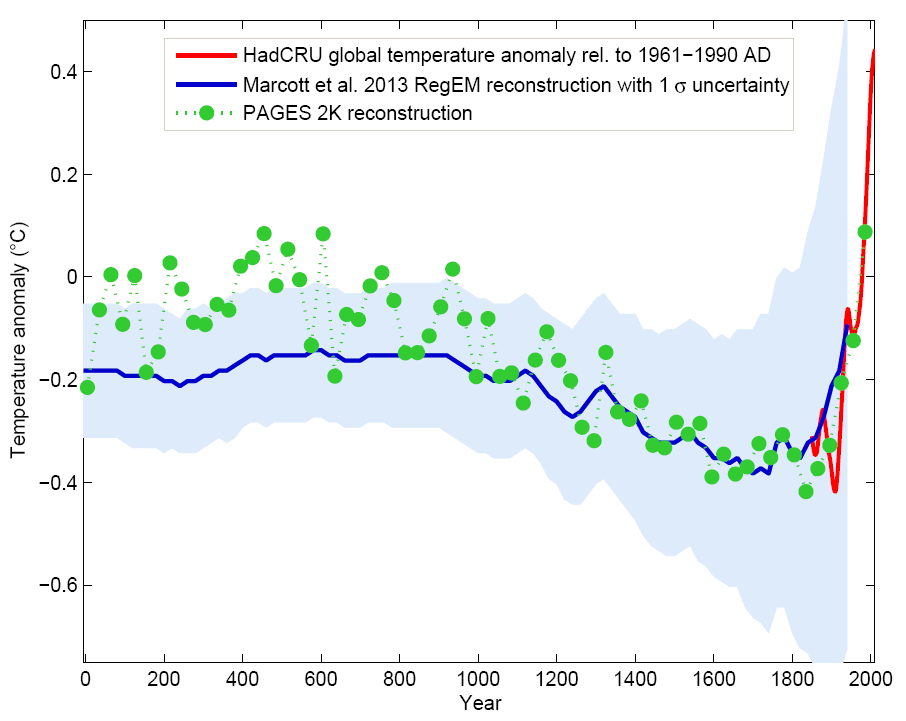

Here is a global analysis by Marcott et al (SkS article about Marcott)

Marcott is the red line. The small bump up is around 1000 years ago and is too early for the MWP. Current temperature is about 1.0 on this graph.

Keep in mind that we expect the temperature to decline after the Holocene maximum to a new ice age. This is the decline from 5000 bp to 150 bp. AGW then kicks in in earnest. AGW might have slowed the decline in temperature from 5000 bp on, from early farming releasing CO2.

I see no indication of a MWP in any of this data.

- Ivar Giaever - Nobel Winning Physicist and Climate Pseudoscientist

Daniel Bailey at 05:14 AM on 29 July, 2017

Right here:

And Here:

"It is natural for climate to change as it has for millions of years"

So many fallacies, so little time...

FYI, the Earth's climate only changes in response to warming or cooling forcings. No known natural forcing fits the fingerprints of observed warming except anthropogenic greenhouse gases.

And this gem:

"There is less than a 1-in-27 million chance that Earth's record hot streak is natural"

Lol. Let's see what else you got:

"The theory of global warming is completely debunked by this chart"

Nope. You smear the difference between a scientific hypothesis and a scientific theory.

Indeed:

Below is a generalized sequence of steps taken to establish a scientific theory:

1. Choose and define the natural phenomenon that you want to figure out and explain.

2. Collect information (data) about this phenomenon by going where the phenomenon occurs and making observations. Or, try to replicate this phenomenon by means of a test (experiment) under controlled conditions (usually in a laboratory) that eliminates interference from environmental conditions.

3. After collecting a lot of data, look for patterns in the data. Attempt to explain these patterns by making a provisional explanation, called a hypothesis.

4. Test the hypothesis by collecting more data to see if the hypothesis continues to show the assumed pattern. If the data does not support the hypothesis, it must be changed, or rejected in favor of a better one. In collecting data, one must NOT ignore data that contradicts the hypothesis in favor of only supportive data. (That is called "cherry-picking" and is commonly used by pseudo-scientists attempting to scam people unfamiliar with the scientific method. A good example of this fraud is shown by the so-called "creationists," who start out with a pre-conceived conclusion - a geologically young, 6,000 year old earth, and then cherry-pick only evidence that supports their views, while ignoring or rejecting overwhelming evidence of a much older earth.)

5. If a refined hypothesis survives all attacks on it and is the best existing explanation for a particular phenomenon, it is then elevated to the status of a theory.

6. A theory is subject to modification and even rejection if there is overwhelming evidence that disproves it and/or supports another, better theory. Therefore, a theory is not an eternal or perpetual truth.

For a good discussion of science terminology (especially for the "Evidence, not Proof" bit), see here.

FYI: Anthropogenic climate change (ACC)/anthropogenic global warming (AGW) is not a hypothesis. It is a robust theory, referred to as "settled fact" by scientists.

Per the National Academies of Science, science advisors to Congress and the Office of the Presidency since Lincoln, in their 2010 publication Advancing The Science Of Climate Change (p. 22):

"Some scientific conclusions or theories have been so thoroughly examined and tested, and supported by so many independent observations and results, that their likelihood of subsequently being found to be wrong is vanishingly small.

Such conclusions and theories are then regarded as settled facts.

This is the case for the conclusions that the Earth system is warming and that much of this warming is very likely due to human activities."

And note that the above National Academies paper is available for free download after a free registration. No purchase necessary. And the quote is from page 22.

"Settled facts"... Just rollsssss off the tongue...

Back to you. Be warned, I'm just getting warmed up.

- Climate's changed before

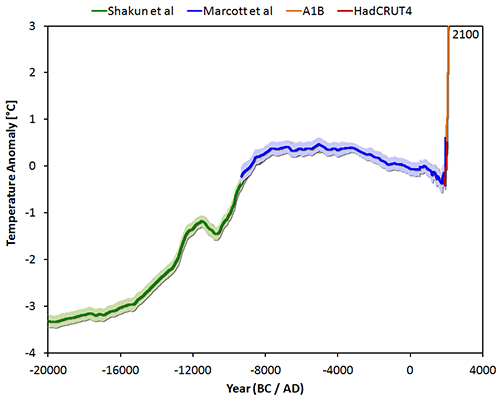

ubrew12 at 07:38 AM on 8 July, 2017

About a month ago, I took the Pages-2k graph (last 2000 years of global temperature using tree ring proxies) and roughly calculated the temperature change per century, last 20 centuries, and the standard deviation in this metric for this 2000 year period. I then took 5-century intervals from the Marcott graph (last 11,000 years, ocean sediment data), calculated the average temperature change per century (over 5 centuries), and imposed the standard deviation I'd gotten from Pages-2k to each of these to calculate my best estimate of the temperature change per century for the 100 centuries prior to Christ's birth. I then applied this same technique to the Shakun graph (last 20,000 years). However, in that case I used 10-century intervals to get the average temperature change per century and imposed the Pages-2k standard deviation upon that average to get 10 data points representing the likely variance over them.

At the end of all this activity, I had 219 data points representing the likely temperature change per century for the 220 centuries (22,000 years) before the 20th century. The average was 0.014 C/century, the standard deviation was 0.077 C/century, so the 3-sigma point is 0.24 C/century. Warming in the 20th century was 0.78 C/century. To me this proves, statistically, that modern warming is nothing like anything that has occurred in the previous 22,000 years. It's about 3 times what would be considered extremely unusual from the natural record. And warming in the last 25 years, if it continues, is about 3 times that again (2.2 C/century).

My question is: does anybody know where this kind of analysis has been performed in the Science record? I'm sure it has, and to a much greater degree. I just want to know where to find it so I can refer to it whenever somebody claims 'It's all natural'.
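The closing arithmetic in this comment can be checked with a short sketch. It takes the summary statistics exactly as stated (mean 0.014, standard deviation 0.077, 20th century 0.78, last 25 years 2.2, all in C/century) and does not reproduce the underlying 219 data points. The variable names are made up here, and reading the result as a z-score assumes the per-century rates are roughly normally distributed, which the comment does not establish.

```python
# Summary statistics as stated in the comment, in degrees C per century.
MEAN_RATE = 0.014
STD_RATE = 0.077
RATE_20TH_CENTURY = 0.78
RATE_LAST_25_YEARS = 2.2

# The "3-sigma point": mean plus three standard deviations.
three_sigma_point = MEAN_RATE + 3 * STD_RATE

# How far the 20th century sits above that threshold, as a multiple.
ratio_to_three_sigma = RATE_20TH_CENTURY / three_sigma_point

# The same comparison expressed in standard deviations from the mean.
z_score_20th_century = (RATE_20TH_CENTURY - MEAN_RATE) / STD_RATE

# Recent warming rate relative to the 20th century rate.
recent_vs_20th = RATE_LAST_25_YEARS / RATE_20TH_CENTURY

print(f"3-sigma point: {three_sigma_point:.3f} C/century")
print(f"20th century / 3-sigma point: {ratio_to_three_sigma:.1f}")
print(f"20th century z-score: {z_score_20th_century:.1f}")
print(f"last 25 years / 20th century: {recent_vs_20th:.1f}")
```

On these inputs both "about 3 times" statements hold up. Whether the inputs themselves are sound, for example reusing the Pages-2k standard deviation on smoothed Marcott and Shakun intervals, is a separate question that this check does not address.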

- Why the Republican Party's climate policy obstruction is indefensible

Daniel Bailey at 03:40 AM on 8 July, 2017

@too

Note that the next figure (Fig. 3) from your link is a complete fabrication/misrepresentation, as it uses Alley's GISP2 core data (last data point 1855), so it misses all the warming of the instrumental record.

- New research may resolve a climate ‘conundrum’ across the history of human civilization

chriskoz at 23:37 PM on 17 June, 2017

Nigelj@1,

It's always better to look at the actual published source rather than a dodgy 'skeptical' blog.

The 'conundrum' Dana is talking about likely comes from (Liu et al 2014) where they state:

A recent temperature reconstruction of global annual temperature shows Early Holocene warmth followed by a cooling trend through the Middle to Late Holocene [M13]. This global cooling is puzzling because it is opposite from the expected and simulated global warming trend due to the retreating ice sheets and rising atmospheric greenhouse gases. Our critical reexamination of this contradiction between the reconstructed cooling and the simulated warming points to potentially significant biases in both the seasonality of the proxy reconstruction and the climate sensitivity of current climate models.

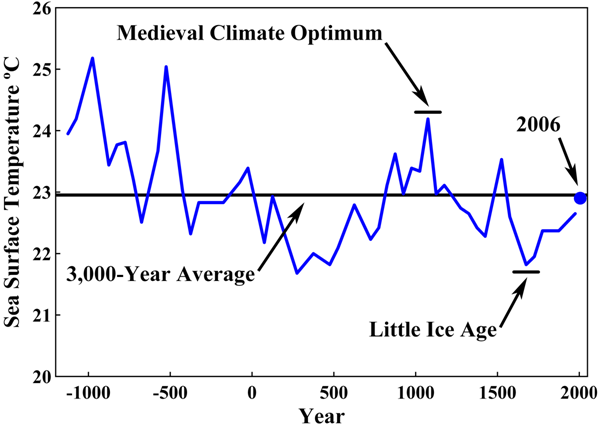

So L14 has already pointed out the possible M13 seasonal and hemispheric bias. They, however, looked at SST reconstruction biases only. Look at Figure 3 in L14: it has been known that N hemisphere models (3B) do match Marcott (3A) at least in sign.

However, note that the total cooling shown by M13 from the peak of Holocene (ca 7ka BP) to the LIA dip, is some 0.5-0.6 degC only. Not 1.4C as the 'skeptical' blog clearly exaggerated. BTW, that latter graph is hardly readable with 4 plots superposed. The obliquity plot has nothing to do with the rest of the plots because Milankovitch forcings do not have direct effect on global temperature, they only produce variations in Arctic temperatures. However 'skeptics' have superimposed and scaled the obliquity plot only to suggest to the uninformed that obliquity is in direct correlation with temperature shown by M13, maybe to justify the bogus 'neo-glacial' label there. My 'uninformed' question would be then: why is the obliquity so different from T in the very first section of it labeled 'pre-boreal' (whatever that mysterious term means)? Logical answer: because the obliquity has nothing to do with this picture and does not belong there.

- New research may resolve a climate ‘conundrum’ across the history of human civilization

nigelj at 07:40 AM on 15 June, 2017

Good article, that resolves a long standing mystery. It shows the considerable power of climate models, but this message will be lost on the sceptics.

The following graph may be useful, and was posted on RC some time ago. It covers the last 12,000 years and includes the temperature data from Marcott, plus various model temperature estimates, plus CO2 levels, all on the same graph, so the divergence is clear. Be warned, it appears to be from a sceptics blog, but does appear accurate.

- Over 31,000 scientists signed the OISM Petition Project

Tom Curtis at 10:06 AM on 26 February, 2017

Deaner @38, and Kirdee @ 37, you may be waiting a long time for a detailed rebuttal of the accompanying paper to the OISM petition. That is because the paper constitutes a Gish gallop. It is so dense with cherry picks, data taken out of context and other errors that it would take a paper just as long simply to provide links to related rebuttals. Given that all of the claims can be (and have been) rebutted on SkS in relation to other issues, the time that would be involved in tracing down all the references, and composing a rebuttal, is not sufficiently well rewarded.

To give you an idea of what I mean, I will consider just a few claims made by the paper.

The paper leads with a Sargasso Sea proxy from Keigwin (1996):

It is a real proxy, and I do not know of any problems with Keigwin (1996). What I do know (and which should be obvious) is that no proxy from a single location is a proxy of global temperature. To think it is, is as absurd as thinking that temperatures in Darwin, Australia must vary in sync with those of Boston, Massachusetts. Because temperatures in different regions do not vary in sync, when taking a global average they will regress towards the mean. Large variations will be evened out, and global mean temperature peaks (and troughs) are unlikely to coincide with peaks (and troughs) of individual regions.
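The averaging effect described here can be illustrated with a toy simulation. This is a sketch under a strong assumption: each hypothetical "region" is independent Gaussian noise around zero, with made-up sizes (20 regions, 200 time steps) and a fixed seed. Real regional temperatures are partly correlated and share common forcings, so the damping in a real multi-proxy average would be smaller than this idealized case suggests.

```python
import random
import statistics


def simulate_regions(n_regions: int = 20, n_steps: int = 200, seed: int = 0):
    """Return hypothetical regional anomaly series that vary out of sync."""
    rng = random.Random(seed)
    return [[rng.gauss(0.0, 1.0) for _ in range(n_steps)] for _ in range(n_regions)]


def global_mean(regions):
    """Average across regions at each time step."""
    return [statistics.fmean(values) for values in zip(*regions)]


def value_range(series):
    """Peak-to-trough range of a series."""
    return max(series) - min(series)


regions = simulate_regions()
global_series = global_mean(regions)

regional_ranges = [value_range(region) for region in regions]
global_range = value_range(global_series)

print(f"smallest regional range: {min(regional_ranges):.2f}")
print(f"largest regional range: {max(regional_ranges):.2f}")
print(f"range of the global mean: {global_range:.2f}")
```

In this toy setup the global mean swings less than any single region does, which is the reason a single-site record cannot stand in for a global reconstruction.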

Robinson, Robinson and Soon (hereafter RRS) will have nothing of that, and conclude from a single proxy that:

"The average temperature of the Earth has varied within a range of about 3°C during the past 3,000 years. It is currently increasing as the Earth recovers from a period that is known as the Little Ice Age, as shown in Figure 1. George Washington and his army were at Valley Forge during the coldest era in 1,500 years, but even then the temperature was only about 1° Centigrade below the 3,000-year average."

In contrast to their finding, if you look at a genuine multi-proxy reconstruction of Holocene temperatures (in this case 73 proxies from diverse regions), you see that global temperatures have varied within a 1 to 1.5 C temperature range, and that "Current global temperatures of the past decade have not yet exceeded peak interglacial values but are warmer than during ~75% of the Holocene temperature history", including, as it happens, the MWP:

RRS have created an entirely false impression by using clearly inadequate, and cherry picked, data.

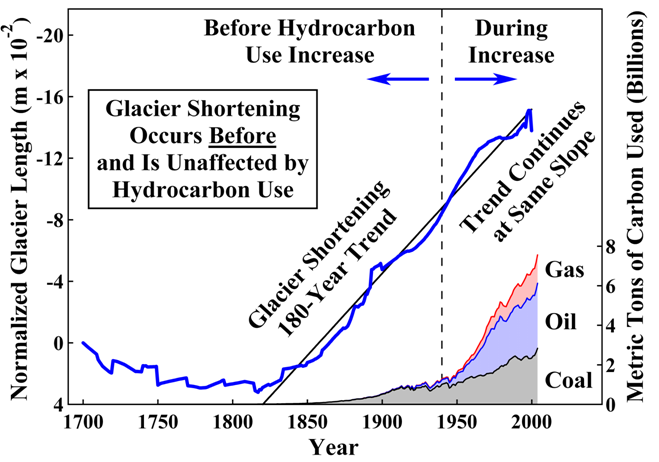

Next consider their use of Oerlemans (2005) regarding glacier length, which RRS show as follows:

For comparison, here is the actual figure (2 B) from Oerlemans (2005):

You will notice that RRS show the figure inverted. You will also notice that while the all glaciers figure (in red) jogs down towards the end, it is only the "Alps excluded" figure that jogs up at the end, as shown (once allowing for the inversion) by RRS. From that evidence, they have deliberately chosen the more restricted data, and chosen it because it better fits their narrative (because it is smoother).

What is worse, they knew of, and neglected, the fact that Oerlemans (2005) used the data to reconstruct global temperatures. The result is very different from the impression they are trying to create:

Temperatures are seen to be more or less stable from 1600, with the slight rise starting around 1850 in keeping with what has gone before. The 20th century, however, is marked by an unprecedented, rapid rise in temperature. That has led to an unprecedented and rapid retreat of glaciers.

Once again RRS create a false impression by cherry picking the data, and by forcing us to rely on an intuitive but false understanding of the relationship between glacier length and temperatures (which are modulated by slope and precipitation, factors Oerlemans takes into account but for which we have no information). Worse, they portray the data from approximately 70 glaciers (i.e., the total number of glaciers used excluding those from the Alps) as though it were the full 169 glaciers considered.

I could go on, but you will already see from my brief treatment of just two points how extensive a full treatment of RRS would be. You will also have noted the dishonest tactics used repeatedly by RRS in their paper.

- CO2 lags temperature

Tom Curtis at 00:24 AM on 22 February, 2017

Adri Norse Fire @549, your discussion is becoming increasingly wide-ranging and off topic. I am sure the moderators would appreciate your taking the various points to their most appropriate threads. In particular, where you say,

"The theory is essentially that solar observations are increasingly recording fewer sunspots and it is thought that solar cycle 25 or 26 will practically have no stains, a event that has precedents +200 years ago where especially the Maunder Minimum coincided with the colder phase of the Little Ice Age."

You should read, and take further discussion to this article.

Discussion of the MWP should be taken to one of numerous threads devoted to it, or that on Pages 2k. That includes discussion of farming in Greenland, although this thread would be more apt. Discussion of Holocene temperatures should probably be taken to a thread on Marcott et al.

- NOAA was right: we have been underestimating warming

Tom Dayton at 05:34 AM on 7 January, 2017

Echo, for global temperatures extending far earlier than the previous 2,000 years, see the post on "the wheelchair" graph.

- Climate change in 2016: the good, the bad, and the ugly

nigelj at 08:44 AM on 4 January, 2017

Michael Sweet, 1.5 degrees Celsius could well be true if you take it wider from 1750 right to this year. I personally have no argument with that.

However, regardless of exact numbers and start and end points, studies like Marcott going back over 10,000 years show just how unprecedented recent temperatures are. I remain optimistic that if the public are made aware that numerous studies keep duplicating the original hockey stick, the facts will eventually sink in.

Debates are eventually won on the facts. Even Trump is going to find that out the hard way because right now all his policies (climate change, foreign policy, and economic etc) are all based on fallacies of various kinds, and are therefore very foolish policies. They are foolish for other reasons as well.

- Oceans heating up faster now than in the past 10,000 years, says new study

rugbyguy59 at 14:11 PM on 6 December, 2016

jzk,

While the paper summary says the findings support the idea of a global MWP, this only means the data here is consistent with it. This isn't real evidence of a global anything because it is simply of one area. There are many valid studies in that list of "Studies supporting a MWP" on that other website of ill repute, which say their findings support the idea of a global MWP. However, those studies are not put together by anyone I'm aware of. When people do put all the data together (PAGES2K for example) there doesn't seem to be one. Even the MWP that seems to appear in the Northern Hemisphere studies is a pale shadow of what deniers want it to be.

When I look at the graph of OHC this study presents, it seems to be quite analogous to the same period in Marcott, although the long slow cooldown begins earlier. Granted, I'm no scientist, but that seems to me to not really support a global MWP or LIA as described by denialists. There is no sudden or pronounced warming or cooling in either period; there is just the long-term trend (Milankovitch Cycles?) plus noise.

- Tracking the 2°C Limit - November 2015

Tom Curtis at 12:24 PM on 22 January, 2016

As per the moderator's suggestion, I have responded to angusmac across three posts elsewhere. I will make one point in response here, however, because it gets to the core of why angusmac raised HCO temperatures on this thread in the first place. To recap, angusmac argues that the MWP temperature should be used as the "preindustrial temperatures" for reasons sufficiently refuted above. His reason for doing so comes down to his opinion that, "...“1.669 °C” above the 1961-1990 mean [and MWP temperatures] ... does not sound nearly as bad as 2 °C". This in turn is related to his belief that "... many parts of the world exceeded the 2 °C limit [in the HCO] without any dangerous consequences and that these temperatures occurred when CO2 was at ≈ 280 ppm". The idea is that if 1.67 C above the 1961-1990 mean is not bad, then neither is 0.75 C (2015 average, HadCRUT4) and neither is 2 C above the preindustrial average. Ergo, rebaselining the 2 C guideline is justified to make that intuitively obvious.

Marcott 2013 allows us to see how flawed that intuition is.

Specifically, Marcott adds random variability to the stack of reconstructions used in the paper to make the annual scale variability of the reconstructions match actual annual scale variability. The result is an increase of 0.13 C to the standard error. It follows that if we add 0.13 C to the standard deviation of the mean of the stack for each 20 year period in the full reconstruction, we get a good approximate measure of the potential range of annual temperatures for that 20 year period. Note that due to autocorrelation, if temperatures are low for a given year in a 20 year period (or century) in a specific member of the stack, they will not be high within that 20 year period (or, with slight qualification, within that century). But because we do not know which member of the stack most closely approximates reality, the statistical data from all stacks gives us our best approximation of the temperature range. From that in turn we can calculate an approximate probability of a particular temperature in any given year:

The chart shows the probability of a given temperature (specified by year) in any given year over the Holocene. The green line shows the probability of 1.5 C over the 1961-1990 mean. It is consistently zero. Even 2015 temperatures are shown to be relatively rare in the HCO, though 2000-2009 temperatures were commonplace.

More interestingly, we can calculate the probability of those temperatures being reached at least once, in any year over a given period. For the period 5500-9500 BP, it is a near certainty that even 2015 temperatures will have been reached at least once. There is, however, only a 2% chance that temperatures reached 1.5 C above the 1961-1990 mean at any time in the HCO. The chance that it reached 2 C above preindustrial for even a single year is negligible.
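The two probability steps described above can be sketched in a few lines. This is only an illustration of the reasoning, not the calculation behind the chart: the input numbers are hypothetical, the annual temperature is assumed to be normally distributed, and years are treated as independent, which the autocorrelation mentioned above means they are not.

```python
import math

def prob_exceeds(threshold, mean, sd):
    """P(T > threshold) for a normal distribution with the given mean and SD."""
    return 0.5 * math.erfc((threshold - mean) / (sd * math.sqrt(2.0)))

def prob_at_least_once(yearly_probs):
    """P(event occurs in at least one year), treating years as independent."""
    none = 1.0
    for p in yearly_probs:
        none *= 1.0 - p
    return 1.0 - none

# Hypothetical inputs, NOT values taken from Marcott et al:
stack_mean = 0.3      # 20-year stack mean anomaly (C, relative to 1961-1990)
stack_sd = 0.2        # spread of the stack for that 20-year period (C)
annual_noise = 0.13   # extra annual-scale variability quoted above (C)

# Probability of exceeding a 0.75 C anomaly in one year of that period.
p_year = prob_exceeds(0.75, stack_mean, stack_sd + annual_noise)
# Probability of exceeding it at least once in 4000 such years.
p_period = prob_at_least_once([p_year] * 4000)
print(f"per year: {p_year:.3f}, at least once: {p_period:.3f}")
```

Even a modest per-year probability compounds to near certainty over thousands of years, while a per-year probability close to zero stays small, which is the contrast drawn between 2015 temperatures and 1.5 C above the 1961-1990 mean.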

It can therefore be seen that angusmac's assumption that high temperatures were commonplace in the HCO is simply false. We are very nearly at the upper limit of HCO temperatures. Nor is his assumption that HCO temperatures were always beneficial justified. Indeed, given that almost all agriculture was invented between 30 degrees North and 30 degrees South (and all was invented between 40 north and 30 south), and given that temperatures in that latitude zone have actually increased since the HCO, his assumption of beneficial effect is very shaky indeed.

Finally, for completeness, the probability of 1990-2009 twenty year average temperatures of the 950-1900 preindustrial baseline is 0.6%. The probability of 1996-2015 twenty year average temperatures of the 950-1900 baseline is just 0.03%. So much for natural variability being the cause.

- Real Skepticism About the New Marcott 'Hockey Stick'

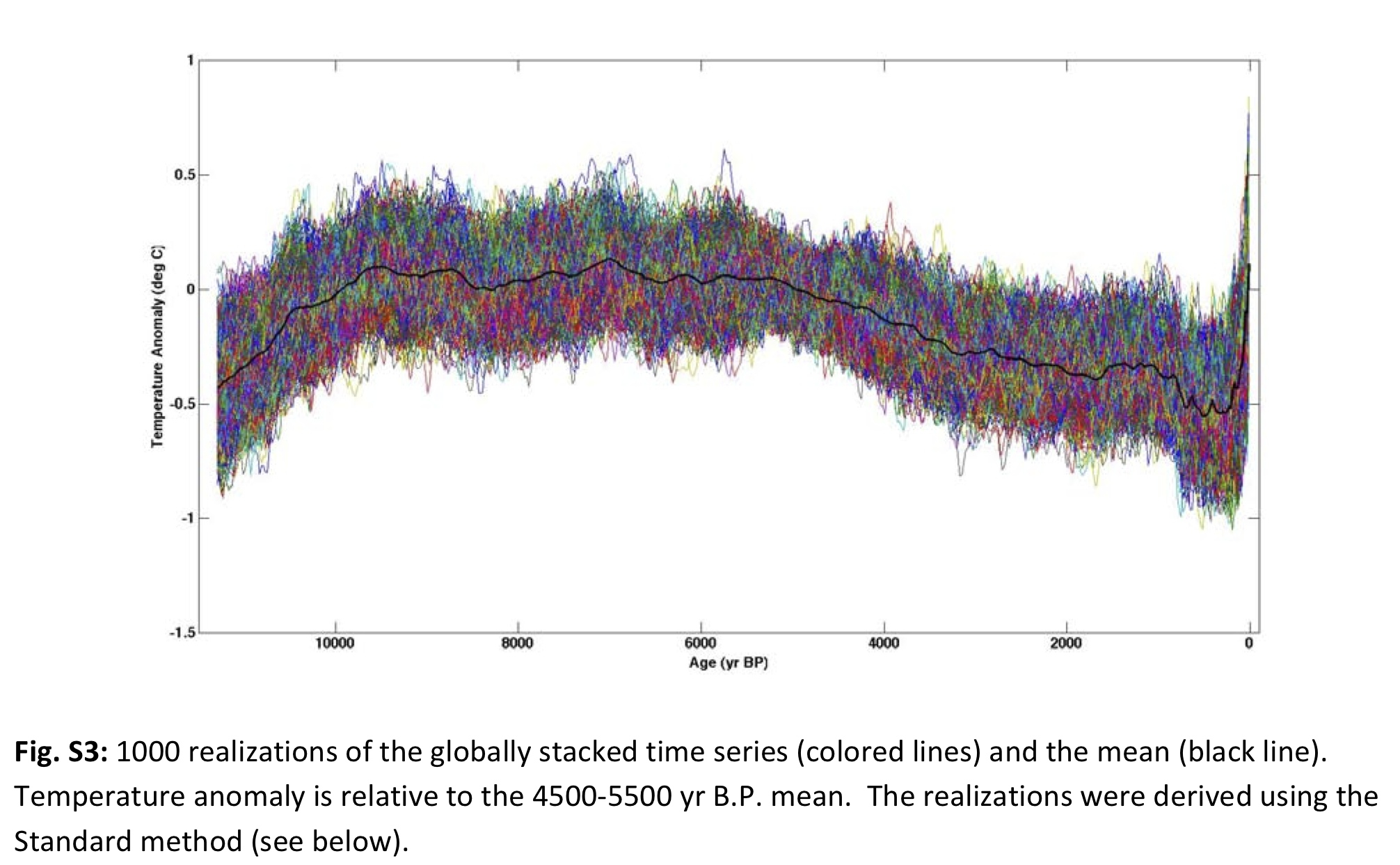

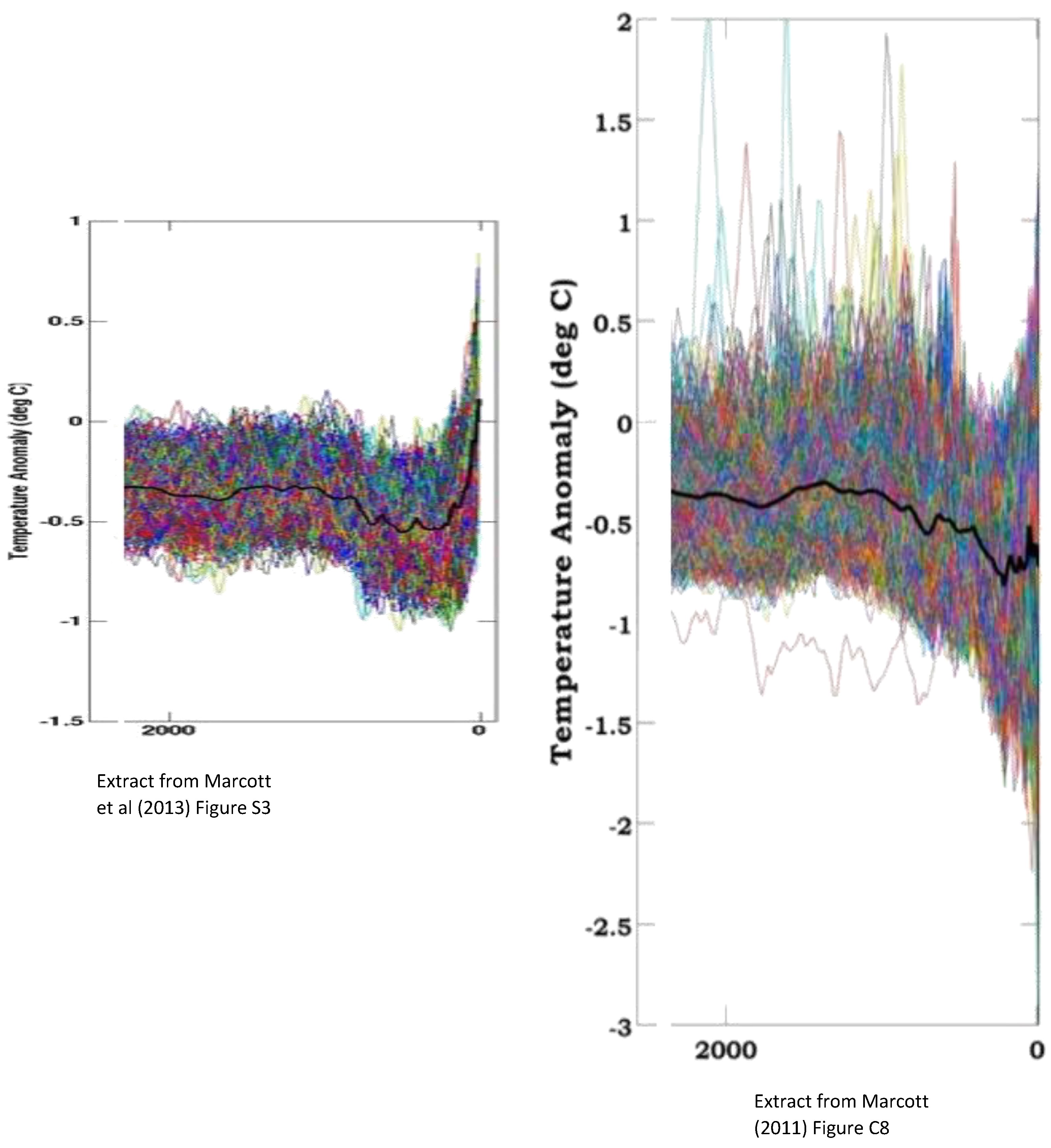

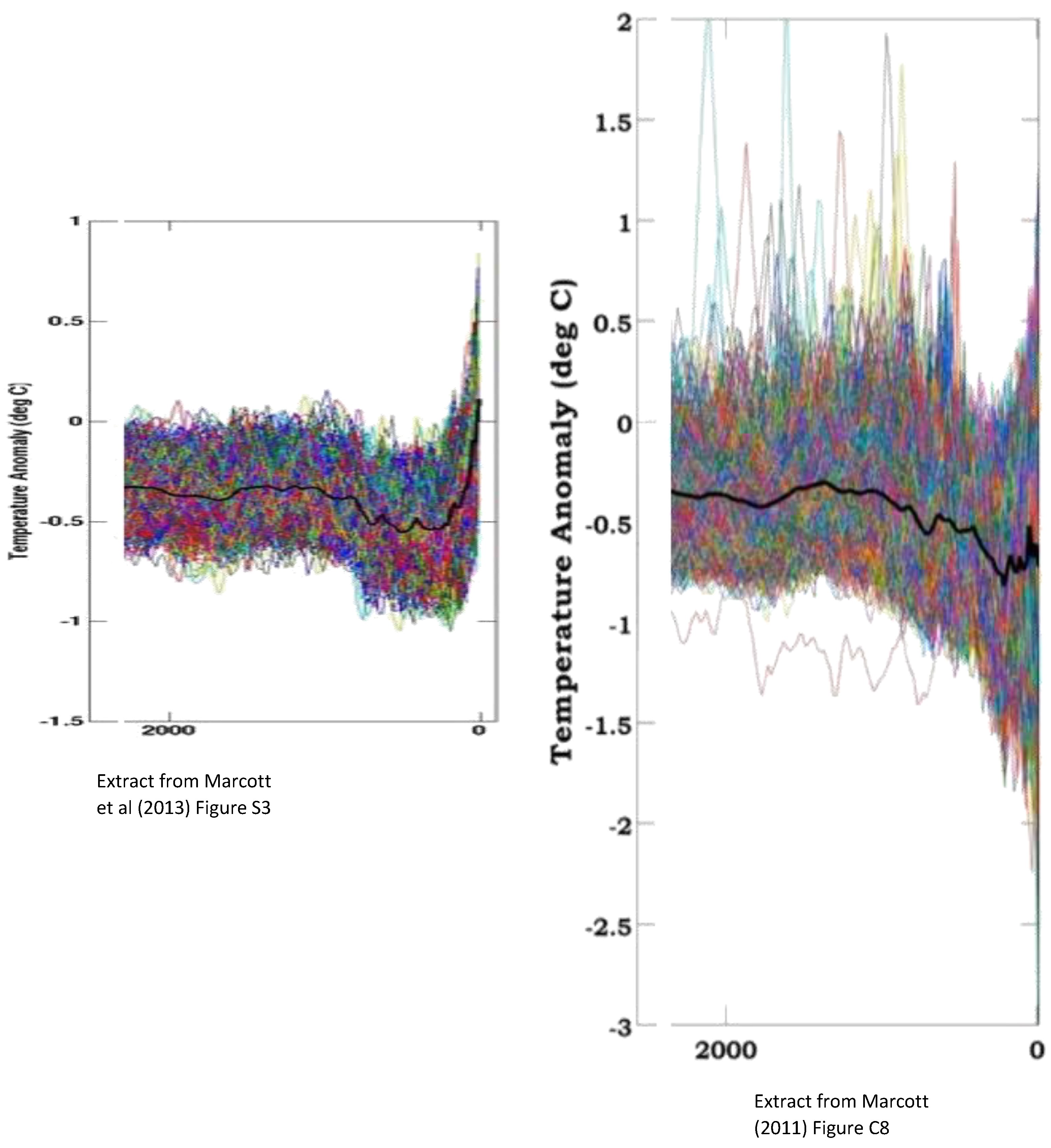

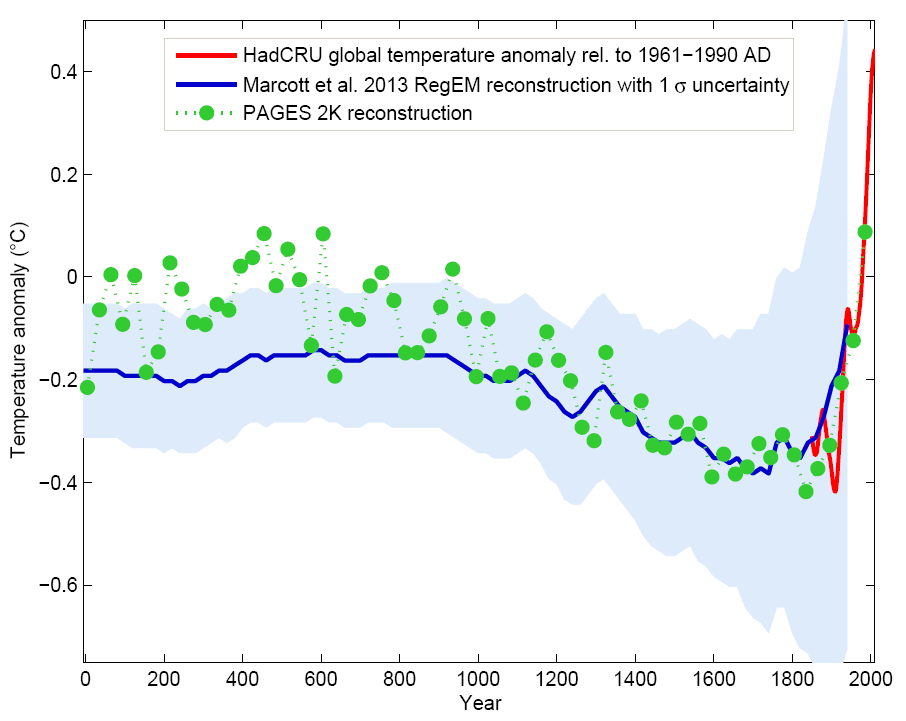

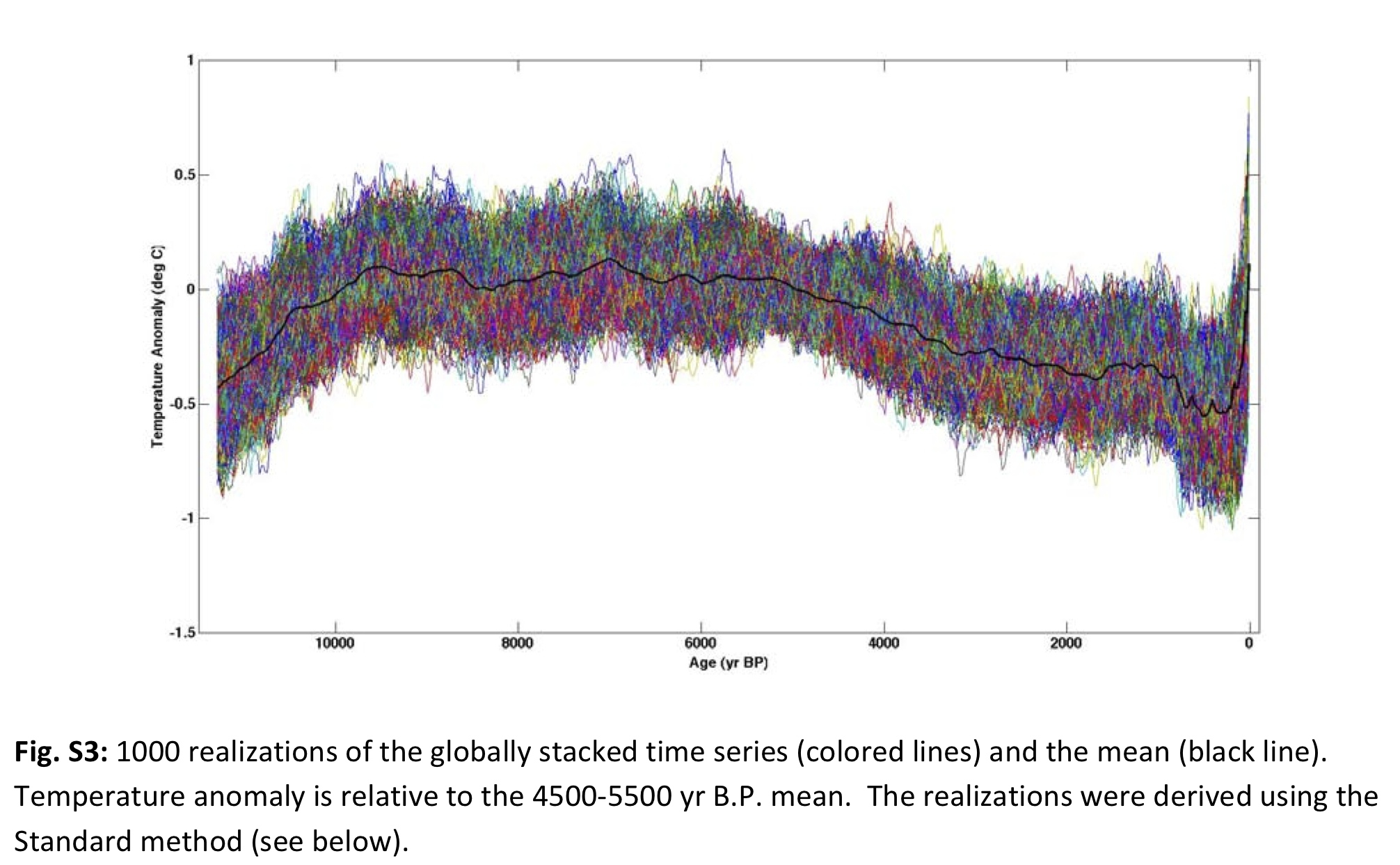

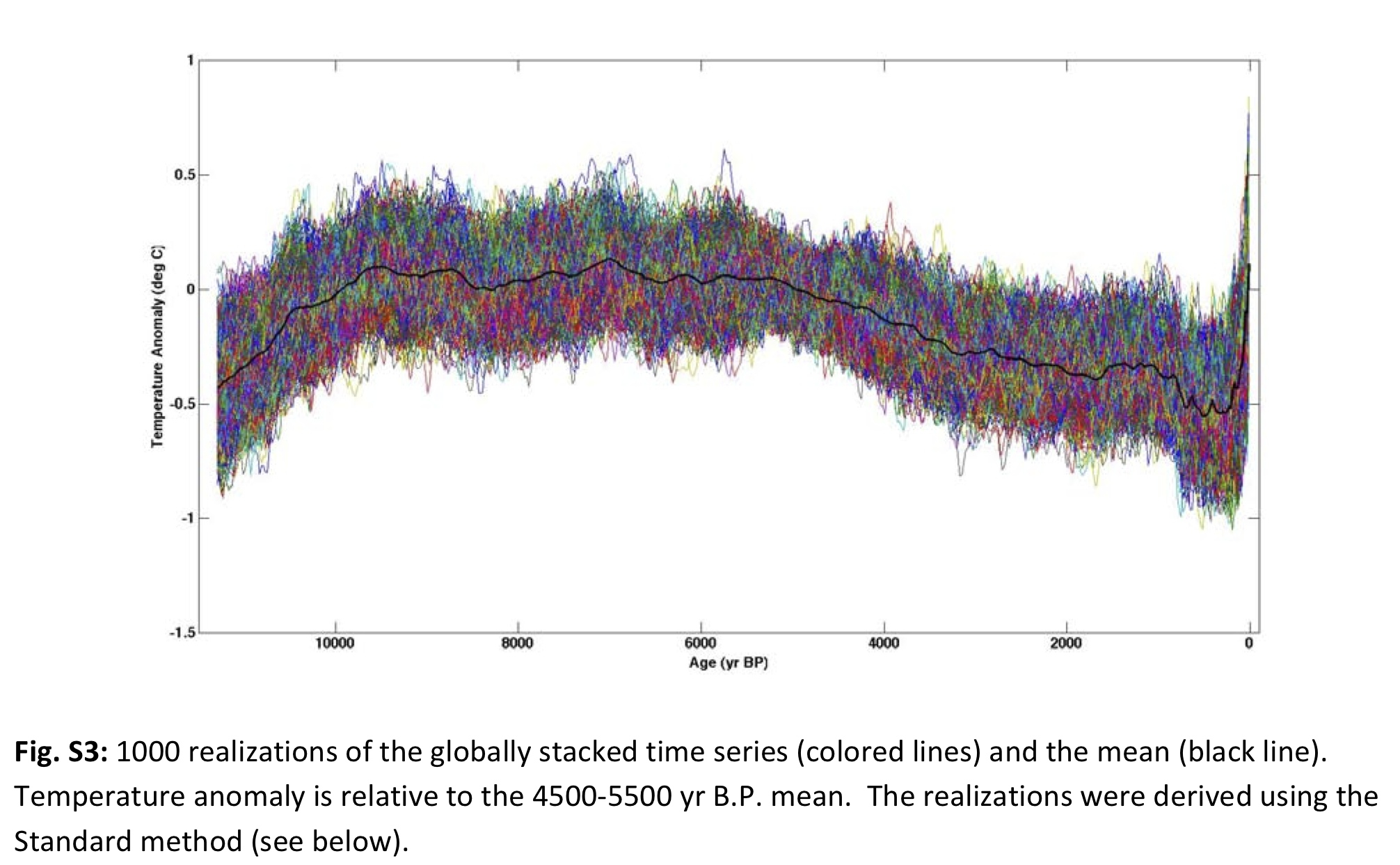

Tom Curtis at 11:33 AM on 22 January, 2016

This is my final response on this page to angusmac's comment here. In that comment, in addition to the three points addressed above, he draws attention to the fact that the 19th and 20th century spike in the Marcott standard mean of reconstructions is not robust (something pointed out by Marcott et al in the original paper). He reinforces the argument by comparing the final stages of Figure S3 to the equivalent Figure C8 from Marcott's thesis:

He writes:

"A difference of approximately 0.8 °C in the two versions of the reconstruction is presented in Figure 1 – yet they use the same proxies. Marcott et al do not address this significant difference by their “not robust” statement.

...

In light of the above discrepancies in Marcott et al (2013), I would not recommend it as a reasonable paleo reconstruction."

In the elided section, he adds the further, hypocritical criticism that Marcott's responding to blog criticisms on a blog, rather than formally through a corrigendum or explanandum published in the journal, "...casts doubt on the robustness of the last 500 years of the reconstruction and perhaps even the entire paper". The double standard in not assuming that the failure of critics to formally publish their criticisms "casts doubt on the robustness" of those criticisms is breathtaking. I will treat that "argument" with the contempt it deserves, and give it no further discussion.

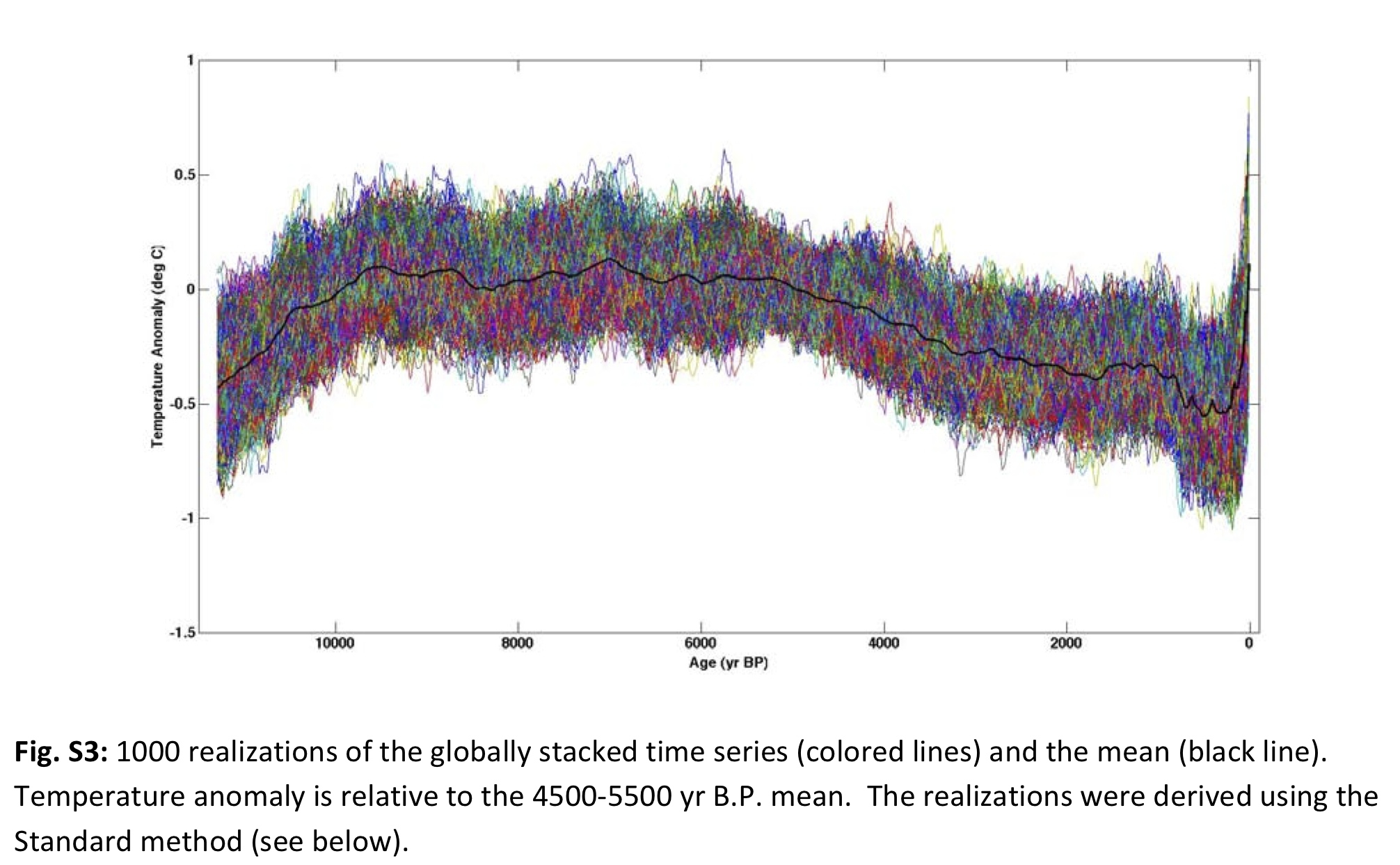

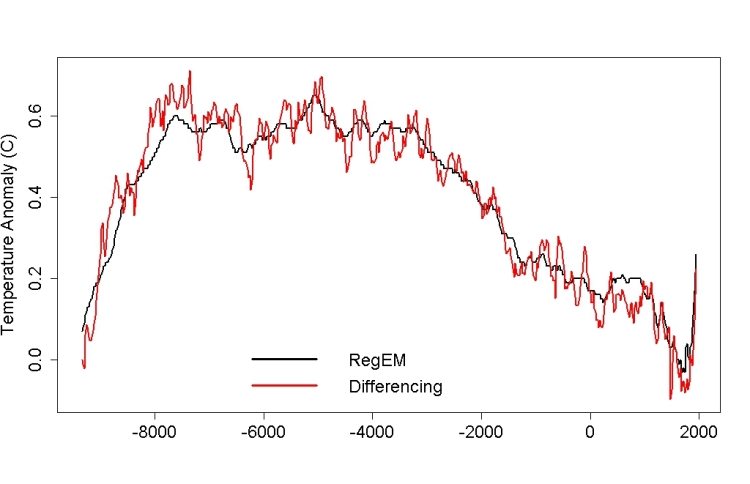

With regard to robustness, Tamino explained it first and best. It is entirely an artifact of the method of taking a simple average combined with the drop out of proxies towards the end of the record. Using other methods such as the method of difference (see Tamino's post), or the RegEM method used as an alternative method in Marcott et al, results in a much smaller, but robust, uptick occurring in the 20th century only:
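A toy example shows how proxy drop-out alone can create a spurious jump in a simple average. The sketch below uses two made-up, perfectly flat proxies and one simple reading of a difference-based approach (average the step-to-step changes of the proxies that are present, then accumulate them). It is not necessarily the exact procedure used in Tamino's post or in Marcott et al.

```python
def simple_average(proxies):
    """Average whatever proxies have data at each time step (None = missing)."""
    n_steps = len(proxies[0])
    out = []
    for t in range(n_steps):
        values = [p[t] for p in proxies if p[t] is not None]
        out.append(sum(values) / len(values))
    return out

def difference_average(proxies):
    """Average step-to-step changes, then accumulate them from the first mean."""
    result = [simple_average(proxies)[0]]
    for t in range(1, len(proxies[0])):
        # Only proxies with data at both steps contribute a change.
        steps = [p[t] - p[t - 1] for p in proxies
                 if p[t] is not None and p[t - 1] is not None]
        change = sum(steps) / len(steps) if steps else 0.0
        result.append(result[-1] + change)
    return result

# Two hypothetical flat proxies with different offsets; the cold one ends early.
warm = [1.0] * 10
cold = [-1.0] * 8 + [None] * 2
print(simple_average([warm, cold]))      # jumps from 0.0 to 1.0 when `cold` drops out
print(difference_average([warm, cold]))  # stays at 0.0 throughout
```

Neither proxy changes at all, yet the simple average shows a 1 C "uptick" at the point where the colder record ends. The difference-based version is unaffected because it only uses changes within each record.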

Comparison of simple averages to the method of difference (or the RegEM method) shows the problem has little effect anywhere else in the reconstruction, and a scarcely discernible effect in the crucial years of the Holocene Climatic Optimum:

Given that angusmac is familiar with Marcott's Q&A at Realclimate, and even with Marcott's thesis, he must be familiar with this explanation of the problem, and ergo that it makes no relevant difference to the reconstruction in the HCO. Given that, I am surprised (to say the least) that he should raise this issue.

This, of course, does not explain the difference in the terminal section of the stacks generated for the paper (S3) and the thesis (C8). What angusmac does not show is the difference between Figure C8 and Figure C9 of the thesis:

As can be seen, calibration issues in just one proxy were able to make a significant difference to the robustness of the reconstructions through the HCO. Those issues were resolved in the paper, and the proxy consequently is used in the paper without problems. While that proxy (ODP 984) terminates around 1400 AD, and therefore does not contribute to the lack of robustness of the terminal period, it is likely that similar improvements in calibration and/or terminal dates explains the difference between C9 of the thesis and S3 of the paper with regard to the final two centuries.

Comparison of C9 and S3 shows the problem to only relate to the final two centuries which are not the point of the reconstruction, and which are not used to calibrate reconstruction temperatures to modern temperatures (which is done indirectly via the Mann 2008 reconstruction of temperatures over the last 2000 years). From this it follows that there is no basis in this data to doubt the HCO reconstruction from Marcott et al.

Nor is any substantive reason advanced to show the changes in data handling with regard to calibration and possibly terminal dates between the two does not represent an improvement. As it makes no difference to the substance of the reconstruction that is sufficient answer IMO. If it does not satisfy angusmac, he can do the necessary leg work by enquiring of Marcott re all the precise differences between thesis and paper among which the full explanation must be found.

- Real Skepticism About the New Marcott 'Hockey Stick'

Tom Curtis at 10:08 AM on 22 January, 2016

Continuing my discussion of Angusmac's comment here:

Point (3) clearly misunderstands the nature of the Marcott reconstructions. (The misunderstanding is quite common among people who discuss climate science on the internet.) Specifically, while the mean of the stack of reconstructions has a resolution of approximately 300 years, the reconstructions themselves (as shown in figure S3, see Comment 101 above) have a resolution of 20 years. They are therefore quite appropriately compared to decadal temperatures. Further, in generating Figure 3 (see comment 101 above), which is the crux of Marcott et al, Marcott et al added noise to the reconstructions so that their variability matched the annually resolved Mann 2008 reconstruction. The statistical distribution is, therefore, quite appropriately compared to annual temperatures.

Given this, it is reasonable to criticize graphs that show only the stack mean vs modern temperatures. They falsely give the impression that we are experiencing temperatures never experienced before since the invention of agriculture. Rather, modern twenty year averages should be compared to the individual reconstructions in the stack, like this:

As you can see, even the 1996-2015 average was probably experienced many times (if briefly) in the Holocene, but we are pushing towards the upper temperature limit of the Holocene. More significantly, the rate of increase of temperature over the last century is certainly in the highest 5% of Holocene temperature trends, and may well be unprecedented. It is also ongoing.

More later.

- Real Skepticism About the New Marcott 'Hockey Stick'

Tom Curtis at 15:26 PM on 21 January, 2016

Angusmac criticizes the Marcott reconstruction here, saying (in part):

"Rob, I agree that the conversation has veered off course for this comment thread but I do wish to make the following comments regarding Marcott et al (2013) that are relevant to baselining preindustrial temperatures:

- Marcott et al state that their results indicate that “global mean temperature for the decade 2000–2009 [HadCRUT3] has not yet exceeded the warmest temperatures of the early Holocene (5000 to 10,000 yr B.P.).” Therefore, if we were to use their reconstruction, we would be near to the Holocene peak.

- 80% of the Marcott et al proxies are derived from marine archives and consequently would underestimate global land-ocean temperatures. Consequently, the Marcott et al results should be adjusted upwards for an apples-for-apples comparison with land-ocean temperatures, as suggested by Glenn Tamblyn@9.

- Proxies tend to have multi-decadal to centennial resolutions and should not be compared directly with annual instrumental temperatures. Kaufman et al (2013) consider this by presenting the most recent 30-year period (1983-2012) from HadCRUT4 as shown by the star in Figure 2. However, a good case could be made for using a longer period for the instrumental mean when comparing it with proxies that are of centennial resolution e.g., Marcott et al (2013)."

Point (1) should be commonplace, although due to a common misinterpretation of Marcott et al, it is not. The misinterpretation is that the bold line in the center of the 1 sigma uncertainty interval in Marcott et al is the "reconstruction", whereas it is just the mean of an ensemble of reconstructions. Marcott et al state no preference among that ensemble as to which is the "real" value, so all members of the ensemble must be given equal weight. All, or nearly all, members of the ensemble frequently lie higher than the ensemble mean, and often much higher. It follows that temperatures greater than even 2015 are far more likely than not to have occurred in the Holocene Climatic Optimum, and indeed may have occurred close to 1 in every 20 years over the period 5500 to 9500 BP.

Point (2), on the other hand, is at best ill-informed nitpicking. Ill-informed because it assumes the differential rate of heating between land and ocean, which leads to cooler oceans in a warming climate (and warmer oceans in a cooler climate), will be a significant factor over multi-centennial periods. For the Marcott mean value, the time resolution is 300 years, at which resolution the difference between land and ocean would be effectively nil. (This contrasts with the case for the much smaller resolution of reconstructions of the MWP.)

Nitpicking because while sea temperature proxies are 80% of all proxies, the sea surface is 70% of global surface area. That is, sea surface temperatures are only overrepresented by 14.3%. This evidently creates a problem for somebody prepared to overlook that sea surface temperatures were underrepresented by 45.7% in the Ljungqvist analysis that he prefers. Further, nitpicking because in Marcott et al, NH proxies are again overrepresented (as is typically the case in reconstructions). Marcott et al write:

"The Southern Hemisphere is represented by fewer data sets (n = 11) than the equatorial (n = 33) and Northern Hemisphere (n = 29) regions, providing fewer constraints on characterizing the variability in our reconstruction for this region."

Despite Marcott's phrasing, the SH extratropics is well represented, with 15.1% of proxies for 18.3% of the surface area (17.5% underrepresentation), but the NH extratropics has 39.7% of the proxies for 18.5% of the area (a massive 114.6% overrepresentation). Meanwhile tropical regions, with 45.2% of proxies for 63.7% of the area, are also underrepresented (29.1% underrepresentation).
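The over- and underrepresentation figures quoted in this comment are the ratio of proxy share to area share, minus one. A minimal sketch, using only the percentages stated above (the function name and labels are illustrative):

```python
def representation(proxy_share, area_share):
    """Percent over (+) or under (-) representation relative to area share."""
    return (proxy_share / area_share - 1.0) * 100.0

# Shares (in percent) as quoted in the comment above: (proxies, surface area).
cases = {
    "sea surface (Marcott)": (80.0, 70.0),
    "sea surface (Ljungqvist)": (38.0, 70.0),
    "SH extratropics": (15.1, 18.3),
    "NH extratropics": (39.7, 18.5),
    "tropics": (45.2, 63.7),
}
for name, (proxies, area) in cases.items():
    print(f"{name}: {representation(proxies, area):+.1f}%")
```

With these inputs the calculation reproduces the quoted values to within rounding of the input percentages.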

As can be seen below, NH temperatures rose much higher in the Holocene Climatic Optimum relative to tropical or SH temperatures:

As it turns out, the most underrepresented area relative to the proportion of the Earth's surface shows lower temperatures over the Holocene Climatic Optimum, while the area with the greatest early Holocene warmth is massively overrepresented.

These biases are far more likely to impact the final reconstruction than are the relatively minor land/sea bias pointed to by Angusmac. Further, they bias in the opposite direction to his presumption regarding the land/sea bias, and so would surely cancel the effect (at least).

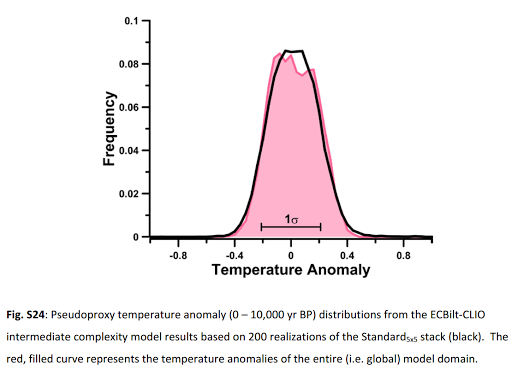

Finally, as it happens Marcott et al checked for the effect of biases against a simple model:

Clearly their methodology was sufficiently robust to eliminate all but a small part of the warm bias we would expect from looking at proxy locations alone.

I am currently short of time, and will discuss point three and further criticisms later.

- Tracking the 2°C Limit - November 2015

Tom Curtis at 21:34 PM on 18 January, 2016

angusmac @41, I am not going to respond formally to your post until it is clear that it will not be deleted for its rather egregious posting under the wrong topic. If it is so deleted, by all means repost it on the correct thread. If you feel there is some part of the discussion that absolutely belongs on this thread, you can then link to that repost and highlight briefly only what is relevant, and why it is relevant to the OP here.

I will note that it is extraordinary in science to only make criticism on weblogs (which is where you will find the criticism of Marcott et al), and to expect a more formal response by Marcott without demanding a more formal critique by the science assassins "climate auditors" represents a stunning hypocrisy.

- Tracking the 2°C Limit - November 2015

angusmac at 19:15 PM on 18 January, 2016

Rob Honeycutt@39 & Tom Curtis@32

Rob, I agree that the conversation has veered off course for this comment thread but I do wish to make the following comments regarding Marcott et al (2013) that are relevant to baselining preindustrial temperatures:

- Marcott et al state that their results indicate that “global mean temperature for the decade 2000–2009 [HadCRUT3] has not yet exceeded the warmest temperatures of the early Holocene (5000 to 10,000 yr B.P.).” Therefore, if we were to use their reconstruction, we would be near to the Holocene peak.

- 80% of the Marcott et al proxies are derived from marine archives and consequently would underestimate global land-ocean temperatures. Consequently, the Marcott et al results should be adjusted upwards for an apples-for-apples comparison with land-ocean temperatures, as suggested by Glenn Tamblyn@9.

- Proxies tend to have multi-decadal to centennial resolutions and should not be compared directly with annual instrumental temperatures. Kaufman et al (2013) consider this by presenting the most recent 30-year period (1983-2012) from HadCRUT4 as shown by the star in Figure 2. However, a good case could be made for using a longer period for the instrumental mean when comparing it with proxies that are of centennial resolution e.g., Marcott et al (2013).

Figure 2 (Kaufman et al, 2013)

Tom, I had thought that the uptick at the end of the Marcott et al reconstruction would provide a good correlation with instrumental temperatures but Marcott et al stated that their 1890-1950 warming was “probably not robust.” If it is not robust then why show it?

Further research indicated that there had been criticism of the paper, which resulted in a Q&A blog by Marcott et al in RealClimate which stated that, “the 20th century portion of our paleotemperature stack is not statistically robust, cannot be considered representative of global temperature changes, and therefore is not the basis of any of our conclusions.”

“Not robust” is an understatement when you compare Figure S3 from Marcott et al (2013) with Figure C8 in Marcott’s PhD thesis (2011). This comparison shows that there are differences in the two reconstructions in general and, in particular, the last 500 years shows completely different results. For example, there is an uptick in Figure S3 in the 2013 version and no uptick in Figure C8 in the 2011 version (see Figure 1).

Figure 1: Extracts from Figure S3 (Marcott et al, 2013) and Figure C8 (Marcott, 2011)

A difference of approximately 0.8 °C in the two versions of the reconstruction is presented in Figure 1 – yet they use the same proxies. Marcott et al do not address this significant difference by their “not robust” statement.

Regarding the criticism of their paper, it is very unusual to address such criticism in a weblog, as done by Marcott et al. It is normal scientific practice to address criticism in clarifications/corrigenda to the original paper in the pertinent journal because this allows a technical audit on the veracity of the paper. Not having done so certainly casts doubt on the robustness of the last 500 years of the reconstruction and perhaps even the entire paper.

In light of the above discrepancies in Marcott et al (2013), I would not recommend it as a reasonable paleo reconstruction.

- Tracking the 2°C Limit - November 2015

Tom Curtis at 23:06 PM on 13 January, 2016

Angusmac @29 (2), I am disappointed that you drew my attention to Ljungqvist 2011, for I had come to expect higher standards from that scientist. Instead of the standards I have expected, however, I found a shoddy paper reminiscent of Soon and Baliunas (2003) (S&B03). Specifically, like S&B03, Ljungqvist 2011 gathers data from a significant number (60) of proxies, but does not generate a temperature reconstruction from them. Rather, they are each categorized for different time periods as to whether they are more than 1 C below the preindustrial average, within 1 C of that average, more than 1 C but less than 2 C above it, or more than 2 C above the preindustrial average. The primary reasoning is then presented by a simple head count of proxies in each category over different periods, shown in Figure 3, with Figure 3a showing land based proxies, and Figure 3b showing marine proxies:

(As an aside, C3 Headlines found the above graph too confronting. They found it necessary to modify the graph by removing Fig 3b, suggesting that the thus truncated graph was "terrestial and marine temperature proxies".)

If the proxies were spatially representative, the above crude method might be suitable to draw interesting conclusions. But they are not spatially representative. Starting at the simplest level, the 70% of the Earth's surface covered by oceans is represented by just 38% (23/60) of the proxy series. As the ocean proxy series, particularly in the tropics, are cooler than the land series, this is a major distortion. Worse, the 6.7% of the Earth's surface North of 60 latitude is represented by 25% of the data (15/60 proxies). The 18.3% of the Earth's surface between 30 and 60 degrees North is represented by another 43% of the data (26/60 proxies). In the meantime the 50% of the Earth's surface between 30 North and 30 South is represented by just 23% of the data (14/60 proxies), and the 50% of the Earth's surface below the equator is represented by just 15% of the data (9/60 proxies).
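The surface-area percentages in this paragraph follow from the geometry of a sphere: the fraction of the surface between two latitudes is half the difference of their sines. A minimal sketch that compares those area fractions with the proxy counts quoted above (the band labels are illustrative):

```python
import math

def area_fraction(lat_south, lat_north):
    """Fraction of a sphere's surface between two latitudes (degrees)."""
    return (math.sin(math.radians(lat_north))
            - math.sin(math.radians(lat_south))) / 2.0

TOTAL_PROXIES = 60
# (southern latitude, northern latitude, number of proxies quoted above)
bands = {
    "north of 60N": (60, 90, 15),
    "30N to 60N": (30, 60, 26),
    "30S to 30N": (-30, 30, 14),
    "south of equator": (-90, 0, 9),
}
for name, (south, north, count) in bands.items():
    area = area_fraction(south, north) * 100.0
    share = count / TOTAL_PROXIES * 100.0
    print(f"{name}: {area:.1f}% of surface, {share:.0f}% of proxies")
```

The bands overlap (the band south of the equator includes half of the tropical band), so the rows are separate comparisons and do not sum to 100%.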

This extreme mismatch between surface area and number of proxies means no simple eyeballing of Fig 3 will give you any idea as to Global Mean Surface Temperatures in the Holocene Thermal Maximum. Further, there are substantial temperature variations between proxies in similar latitude bands, at least in the NH where that can be checked. That means in the SH, where it cannot be checked due to the extremely small number of proxies, it cannot be assumed that the 2 to 4 proxies in each latitude band are in fact representative of that latitude band at all. Put simply, knowing it was warm in NZ tells us nothing about temperatures in Australia, let alone South America or Africa. This problem is exacerbated because (as Ljungqvist notes with regard to Southern Europe) data is absent from some areas known to have been cool in the HTM.

The upshot is that the only reliable claims that can be made from this data is that it was very warm North of 60 North, and North of 30 North on land in the HTM. The data is too sparse and too poorly presented to draw any conclusions about other latitude bands and about Ocean temperatures, or Land/Ocean temperatures from 30-60 North.

Given the problems with Ljungqvist 2011 outlined above, I see no reason to prefer it to Marcott et al (2013):

More illustrative is his Figure 3:

Note that the statistical distribution of potential holocene temperatures tails out at 1.5 C above the 1961-1990 baseline, or 1.86 C above a 1880-1909 baseline. Unlike the reconstruction, the statistical distribution of realizations does not have a low resolution. Ergo, we can be confident from Marcott et al that it is extremely unlikely that the Earth has faced temperatures exceeding 2 C above the preindustrial average in the last 100 thousand years.

- Tracking the 2°C Limit - November 2015

angusmac at 18:12 PM on 13 January, 2016

Tom Curtis@24 Regarding your assertion of my “abuse of data” and being “fraudulent”, regarding the use of the Renssen et al (2012) HTM temperature anomalies, I can only assume that you are stating that I portrayed Renssen et al as global average temperatures. You are incorrect. I did not state that they were global average temperatures; I only stated that, “...many parts of the world exceeded the 2 °C limit” in my comment on Renssen et al. I fail to see anything fraudulent in this statement.

Referring to global average temperatures, I do not know why Renssen et al did not present global averages because they obviously have the data to do so. However, if you wished to obtain an early Holocene global average from Renssen et al, it is a simple matter to inspect one of their references, e.g., Ljungqvist (2011) offers the following conclusions on global temperatures:

Figure 1: Extract from Conclusions by Ljungqvist (2011) [my emphasis]

I agree with you, regarding temperatures during earlier warm periods, that it could be “…plausibly argued that in some areas of the world those conditions were very beneficial”, but I will stick to what you call my “faith” that they were beneficial to humanity overall. I will leave it to the proponents of the 2°C-is-dangerous scenario to prove that temperatures of 1 °C or “possibly even more” were harmful to humanity as a whole.

Finally, you state that I frequently cite Marcott et al but, once again, you are incorrect. I only cited Kaufman et al (2013) which shows Marcott et al as one of their temperature simulations in their diagram. The Marcott et al Climate Optimum was only mentioned once by me in angusmac@17

- Tracking the 2°C Limit - November 2015

Tom Curtis at 13:55 PM on 10 January, 2016

angusmac @21, the map you show from Renssen (2012) does not represent any specific time period. Renssen states:

"To address the questions raised in the introduction, we analyzed the monthly mean simulation results to establish for each grid cell the maximum positive temperature anomaly relative to the preindustrial mean (see example in Fig. 2). The analysis resulted in global maps of this anomaly (Fig. 3a-b), which we consider to represent peak HTM conditions in the model. It is important to note that the anomalies on this map do not represent “real” climatic conditions, as they originate from different months in the year and from different times within the last 9000 years. In addition, we also mapped the timing of this anomaly in ka BP (Fig. 4a-b) and the month of the year in which this anomaly occurred (Fig. 5). This was done for both ORBGHG and OGMELTICE. The latter experiment is used here as the standard simulation, as it contains the impact of all considered forcings."

(My emphasis)

In fact, consulting figure 5, we see that the maximum temperatures are drawn from every month except May, and, consulting figure 4a, that while most occur in the three-millennium span 6-9 kya (with approximately equal portions from each of those three millennia), some occur as recently as the last millennium.

Further, consulting Figure 2, we see that temperature trends in different seasons may be exactly opposite each other. Indeed, given the insolation history in high NH latitudes, that is exactly what we would expect. It follows that the maximum temperatures shown by Renssen are not annual averages for any year.
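To make the distinction concrete, here is a minimal sketch of why a per-cell "largest monthly anomaly at any time" statistic cannot be read as an annual mean. Everything in it is hypothetical: the seasonal amplitudes, the annual offsets and the three time slices are made-up numbers for a single grid cell, not values from Renssen's simulations.

```python
import math

def monthly_anomalies(amplitude, annual_offset):
    """Hypothetical seasonal cycle of anomalies (deg C) for one grid cell.

    Mid-year months are warmed and the opposite season cooled by `amplitude`,
    on top of a small annual-mean offset. Illustrative numbers only.
    """
    return [annual_offset + amplitude * math.cos(2 * math.pi * (m - 6) / 12)
            for m in range(12)]

# Three hypothetical time slices for the same cell (assumed, not model output).
time_slices = [
    monthly_anomalies(amplitude=2.0, annual_offset=0.3),
    monthly_anomalies(amplitude=1.5, annual_offset=0.5),
    monthly_anomalies(amplitude=0.5, annual_offset=0.1),
]

# Peak statistic: largest monthly anomaly over every month and every time slice.
peak_monthly = max(max(months) for months in time_slices)

# What a GMST-style comparison needs instead: an annual mean (here the warmest one).
annual_means = [sum(months) / len(months) for months in time_slices]
warmest_annual_mean = max(annual_means)

print(f"peak monthly anomaly: {peak_monthly:.2f} C")
print(f"warmest annual mean:  {warmest_annual_mean:.2f} C")
```

In this toy case the peak monthly anomaly is 2.3 C while the warmest annual mean is only 0.5 C, because the warm-season and cold-season anomalies largely cancel over the year.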

Given these strictures on the data, your use of it can only be called an abuse of data. If I had any confidence you knew what you were talking about, I would call it fraudulent. That is because the rise in temperatures for the 2 C guideline is a 2 C rise in the annually averaged Global Mean Surface Temperature (GMST). It is expected that:

- The increase in land temperature will be greater than the increase in sea surface temperature;

- The increase at higher latitudes, particularly higher northern latitudes, will be greater than in tropical regions; and

- The increase in winter will be greater than the increase in summer.

All these features have already been observed. The consequence is that a map of temperature maximums along the same lines as that in Renssen (2012) at a 2 C increase, even if constrained to the same year, would show significantly greater than a 2 C increase in temperature. In fact, from Marcott et al, which you frequently cite, we know the increase in annually averaged GMST in the Holocene Thermal Maximum to have been approximately 0.5 C relative to preindustrial values. Consequently, your argument @21 amounts to the claim that, because we survived temperatures 0.5 C above the preindustrial GMST, it is patently clear that temperatures 2 C above the preindustrial average will be without "any dangerous consequence".

I will further note that your assumption that the HTM was "without any dangerous consequence" is as faith based as your prior, similar assumption about the MWP. It is plausibly argued that in some areas of the world those conditions were very beneficial, as evidenced by the invention of agriculture. Agriculture, however, was invented in low latitude regions close to water (the Middle East, the Indus delta, southern China, and Central America). Those are regions with low temperature increases, even on Renssen's map. They are not the regions which you highlight.

Finally, here are model projected temperature anomalies for the 2050s (approximately 2 C warming) relative to the 1971-2000 mean:

Please note the archaic units to avoid confusion. As you can see, regionally in that situation, we will be facing temperatures as high as 10 C above the 1971-2000 average. Clearly, even if Renssen 2012 were an annually averaged map, it would be considerably cooler than what we are facing with BAU.

- Hockey stick is broken

KR at 08:58 AM on 9 November, 2015

dvaytw - Dr. Muller seems to have the view that if he has not personally done the work, personally checked the evidence, then it is in doubt. And he will thus blithely dismiss solid work, take as gospel tripe like M&M, etc. So I would take his pronouncements with large blocks of salt.

The 2006 NAS report states in its conclusions:

- It can be said with a high level of confidence that global mean surface temperature was higher during the last few decades of the 20th century than during any comparable period during the preceding four centuries. This statement is justified by the consistency of the evidence from a wide variety of geographically diverse proxies.

- Less confidence can be placed in large-scale surface temperature reconstructions for the period from A.D. 900 to 1600. Presently available proxy evidence indicates that temperatures at many, but not all, individual locations were higher during the past 25 years than during any period of comparable length since A.D. 900. The uncertainties associated with reconstructing hemispheric mean or global mean temperatures from these data increase substantially backward in time through this period and are not yet fully quantified.

- Very little confidence can be assigned to statements concerning the hemispheric mean or global mean surface temperature prior to about A.D. 900 because of sparse data coverage and because the uncertainties associated with proxy data and the methods used to analyze and combine them are larger than during more recent time periods.

So the work he signed off on indicates high confidence in the last 400 years, less confidence in the previous 600, and reasonable uncertainty about 1000 or more years ago, based on the evidence available at that time.

In the intervening decade additional proxies have been located, producing work up to and including Marcott et al 2013, which concludes that recent temperatures represent a reversal of a cooling trend that started 5000 years ago, with current temps warmer than the mean temperatures over 82% of the Holocene (going back 11,500 years).

Muller's statements regarding paleotemperature reconstructions were reasonable a decade ago, but are now sadly out of date. And his assertions about MBH/M&M simply indicate that he hasn't looked into the M&M work - it's nonsense, multiply debunked, most notably by Wahl and Ammann 2007. M&M's failure to apply PCA selection rules alone invalidates the work, let alone their many other errors and misstatements. Muller is (once more) talking through his hat.

- Climate's changed before

Tom Curtis at 10:07 AM on 31 October, 2015

A Real Skeptic would know, having examined the issues, that Mann, Bradley and Hughes 1999 (MBH99), aka the Hockey Stick, has not been debunked despite strenuous efforts by McIntyre and McKitrick. Rather, McIntyre and McKitrick have used a statistical measure that calls a straight line plus white noise a Hockey Stick to argue that random noise generates Hockey Sticks in an attempt to debunk MBH99.

This is not to say MBH99 is without flaws (many first pointed out by Mann himself in later publications). Rather, it is a reasonably accurate first attempt to generate a multi-proxy paleotemperature index with a calculated uncertainty. Because it was a first attempt, Mann, Bradley and Hughes had things to learn and made some mistakes, but the mistakes do not undermine the fundamental conclusions. This is seen by comparing MBH98 to later reconstructions that have eliminated some of those mistakes. Of those, the PAGES consortium reconstruction has used the most data and robust methods, and hence represents the best global reconstruction over recent times:

Please note the green dots (i.e. the PAGES reconstruction) all represent 30 year averages, so that the graph is not smoothed at a different rate for the recent period, yet the 20th century still stands out for the rapidity and magnitude of the temperature rise, not to mention the reversal of the long term cooling trend and the magnitude of the final value (1971-2000), which exceeds all others. There is, of course, a qualification on that final result:

"Many of the proxy records used in the reconstructions were generated some years ago and end before the year 2000. The reconstruction for North America, Asia and South America do not extend to the 21st century. The instrumental record shows that the last several decades have generally been warmer than previous decades. Therefore, the most recent 30-year period in our analysis (1971-2000) underestimates the actual temperature of the last 30 years."

(From the PAGES FAQ, my emphasis)

Finally, it is my instinctive reaction to treat "persuasive names" such as "A Real Skeptic Says" as indicating that the person choosing the name doesn't think their real skepticism will come across without their first telling us their presumed status. That instinctive reaction is rarely wrong. So rather than flagging the uncommon skepticism of the person involved, it tends to flag the opposite. Just a word to the wise.

- Skeptical Science honoured by the Committee for Skeptical Inquiry

Tom Curtis at 13:15 PM on 18 October, 2015

fletch92131 @3, for myself, I am highly skeptical that an increase in Global Mean Surface Temperature (GMST) of 1 degree C in a century is "normal". Indeed, Marcott et al (2013) show 1000 possible temperature histories given uncertainties with regard to time and temperature across a range of proxies:

In very few instances over periods of 100 years or less is there a gap of 1 degree C between the minimum and maximum value in any realization over that period - let alone the same one. From this data, any centennial temperature increase greater than 0.66 C is a very low probability event. That is, it is not normal.
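For anyone wanting to run this kind of check on an ensemble themselves, the following is only a rough sketch of one way to do it. The function names, the 20-year sample spacing and the four toy histories are my own assumptions for illustration; they are not Marcott et al's data or method.

```python
def max_window_range(series, window):
    """Largest (max - min) found in any run of `window` consecutive samples."""
    if window > len(series):
        raise ValueError("window longer than series")
    return max(
        max(series[i:i + window]) - min(series[i:i + window])
        for i in range(len(series) - window + 1)
    )

def fraction_exceeding(realizations, window, threshold):
    """Share of realizations whose largest windowed range exceeds `threshold`."""
    hits = sum(1 for r in realizations if max_window_range(r, window) > threshold)
    return hits / len(realizations)

# Hypothetical toy ensemble (deg C anomalies), NOT real reconstruction data.
# Samples are assumed to be 20 years apart, so 6 samples span 100 years.
toy_realizations = [
    [0.0, 0.1, 0.2, 0.1, 0.0, -0.1, -0.2, -0.1],
    [0.3, 0.2, 0.4, 0.5, 0.3, 0.2, 0.1, 0.0],
    [0.0, 0.4, 0.9, 1.2, 0.8, 0.3, 0.1, 0.0],  # one deliberately jumpy history
    [-0.1, 0.0, 0.1, 0.0, -0.1, -0.2, -0.1, 0.0],
]

share = fraction_exceeding(toy_realizations, window=6, threshold=1.0)
print(f"share of realizations with a >1 C range within 100 years: {share:.2f}")
```

Only the deliberately jumpy third history exceeds the 1 C threshold here, so the share is 0.25. With a real ensemble the same two functions would give the proportion of realizations containing any century-scale swing of that size.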

Suggestions to the contrary seem universally to be based on either proxies for single regions (such as GISP 2), or hemispheric or sub-hemispheric reconstructions. That is, they are not based on global temperatures at all. Often they are not even based on such misinterpreted evidence, but on mere anecdote.

Yet here you are, apparently so confident in this unsupported claim that you are prepared to use it as a foundation for a "knock down argument" against AGW.

That strongly suggests to me that you are a denier. For what characterizes deniers is not what they disagree with, but their employment of selective standards of evidence to support their claims. In short, their reliance on pseudoscience rather than science to reject scientific claims.

By all means, if you have actual evidence that global mean surface temperatures normally vary by 1 C in a century, please present it. Or alternatively, acknowledge your lack of evidence in support of your key premise, and withdraw your argument as unfounded. But if you are unwilling to do either, then you merely demonstrate that the term "denier" applied to you is no insult, but mere description.

- CO2 measurements are suspect

Tom Curtis at 01:11 AM on 26 June, 2015

APT @78, Kohler et al reference Marcott et al 2014 as being a high resolution ice core CO2 concentration record. Marcott et al in turn say:

"The West Antarctic Ice Sheet Divide ice core (WDC) (79.467° S, 112.085° W, 1,766 m above sea level) was drilled to a depth of 3,405 m in 2011 and spans the past ~68 kyr. At present, the site has a mean annual snow accumulation of 22 cm ice equivalent per year and a surface temperature of −30 °C. Annual layer counting to 2,800 m depth (~30 kyr ago) provides a very accurate timescale for comparison with data from other archives. The difference in age (Δage) between the ice and the gas trapped within it, which is critical for developing a gas-age chronology, is 205 ± 10 yr at present and was 525 ± 100 yr at the last glacial maximum (LGM) (Extended Data Fig. 1). Given the high accumulation at the site, minimal smoothing due to gas transport and gradual occlusion, and precise chronological constraints, WDC is the best Antarctic analogue to central Greenlandic deep ice cores, with a substantially better-dated gas chronology during the glacial period, and is able to resolve atmospheric CO2 at sub-centennial resolution."

(My emphasis)

What that means in practice is seen by considering Figure 3, where temporal resolution is indicated to be +/- 20 to 40 years at various time intervals:

Even at +/- 40 years, that is too good a time resolution to not have captured Steinthorsdottir's peak in CO2 at the Younger Dryas if it in fact existed. More importantly, the +/- 1 ppm resolution of CO2 concentration at all ages shows the fluctuations in CO2 content to not be measurement error. They are fluctuations in the CO2 concentration in the ice. That is significant because the sharp variations in CO2 concentration shown are inconsistent with the record being more heavily smoothed than shown. Smoothing through diffusion will reduce peaks, fill troughs, and turn "cliffs" into slopes. If the peaks, troughs and cliffs persist in the ice, the CO2 has not significantly diffused after the firn has closed.

In fact, it is definitely below par for Steinthorsdottir to simply wave her hand at possible high diffusion rates as an "explanation" of the discrepancy between ice core and stomatal records. If diffusion is a problem, she ought to be able to create (and ought to have created) a smoothed model of the stomatal record that reproduces the ice core record. Marcott et al did exactly that when comparing the higher resolution West Antarctic Ice Sheet Divide data (WDC) with the lower resolution EPICA Dome C data (EDC) in extended data figure 5:

"a, The red line is the Green’s function (smoothing function) produced by a firn model using an assumed EDC accumulation rate of 0.015 m yr−1 and a temperature of 209 K. b, CO2 data from WDC (dots) and EDC (dots) plotted against artificially smoothed CO2 data from WDC using the EDC firn smoothing function (red line in both plots). WDC data have been systematically lowered by 4 p.p.m. for direct comparison with EDC."

Given this, it appears to me that the stomata data Steinthorsdottir uses is an inaccurate proxy of CO2 concentration in the Younger Dryas.
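The kind of smoothing test described above can be sketched in a few lines. This is only an illustration under stated assumptions: the triangular kernel is a hypothetical stand-in for a firn smoothing function (it is not the EDC Green's function from Marcott et al), and the CO2 series is invented to contain one short spike and one "cliff".

```python
def normalized_kernel(weights):
    """Scale weights so they sum to 1, which preserves the series mean."""
    total = sum(weights)
    return [w / total for w in weights]

def smooth(series, kernel):
    """Apply a symmetric, odd-length kernel, holding end values at the edges."""
    half = len(kernel) // 2
    padded = [series[0]] * half + list(series) + [series[-1]] * half
    return [
        sum(k * padded[i + j] for j, k in enumerate(kernel))
        for i in range(len(series))
    ]

# Hypothetical smoothing function; an assumption, not a real firn model output.
kernel = normalized_kernel([1, 2, 3, 4, 3, 2, 1])

# Invented high-resolution CO2 record (ppm): baseline, a 2-sample spike, then a cliff.
high_res = [240.0] * 10 + [255.0] * 2 + [240.0] * 10 + [260.0] * 10

smoothed = smooth(high_res, kernel)

raw_peak = max(high_res[8:14])        # height of the spike before smoothing
smoothed_peak = max(smoothed[8:14])   # height of the same spike after smoothing
cliff_value = smoothed[22]            # first sample after the cliff, now on a slope

print(f"spike: {raw_peak:.1f} ppm raw, {smoothed_peak:.2f} ppm smoothed")
print(f"first post-cliff sample after smoothing: {cliff_value:.1f} ppm")
```

The spike is cut from 255 ppm to roughly 246.6 ppm and the step from 240 to 260 ppm becomes a ramp, which is the behaviour described above: peaks reduced, cliffs turned into slopes. If a proxy record smoothed this way cannot reproduce the ice core record, diffusion alone does not explain the discrepancy.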

- CO2 measurements are suspect

Daniel Bailey at 01:30 AM on 24 June, 2015

It's not. Temperature measurements began in 1659. Stations were added throughout the centuries since then, becoming a truly global network beginning in 1880. Multiple proxy records extend that record literally millions of years into the past.

http://www.ncdc.noaa.gov/paleo/globalwarming/instrumental.html

http://www.ncdc.noaa.gov/oa/climate/research/1998/anomalies/anomalies.html

http://www.ncdc.noaa.gov/ghcnm/v3.php

http://www.ncdc.noaa.gov/data-access/paleoclimatology-data/datasets

http://www.realclimate.org/index.php/data-sources/

http://en.wikipedia.org/wiki/Instrumental_temperature_record

Multiproxy reconstructions are now commonplace. For example, per the PAGES 2k reconstruction, current global surface temperatures are hotter than at ANY time in the past 1,400 years, and while the Medieval Warm Period and Little Ice Age are clearly visible events in that reconstruction, they were not globally synchronized events.

http://www.skepticalscience.com/pages2k-confirms-hockey-stick.html

From the peak temps and CO2 at the height of the Holocene Climatic Optimum some 7,000 years ago, temps and CO2 went into a long, slow decline, until about 100 years ago. Global temperatures dropped about 0.8 degrees C.

Over the past 100 years we have entirely erased that 5,000+ years of natural cooling (Marcott et al 2013), with global temperatures rising a full degree C: