Recent Comments

Comments 1651 to 1700:

-

michael sweet at 05:39 AM on 4 May 2024
Skeptical Science News: The Rebuttal Update Project

Ichinitz:

The problem with George Will's argument is that he states the cost of only one side of the equation and then concludes that it will be cheaper to just go on using fossil fuels. The current fossil fuel industry is about 10% of global GDP. If a renewable system costs only 2%, then it will be much cheaper than the existing system. Many scientific papers (for example, Jacobson et al.) show that it will be much cheaper to switch to a completely renewable energy system.

I think your suggestion that you write a rebuttal to the myth "it's bad but it is cheaper than renewables" is a good one. The deniers make this type of absurd claim all the time.

-

John Mason at 01:30 AM on 4 May 2024
CO2 is just a trace gas

By JJones1960's reckoning I mean!

-

John Mason at 01:29 AM on 4 May 2024
CO2 is just a trace gas

Re. #55 -

One man may unleash a catastrophic shooting. On 9-11, at least 19 men were involved. Compared to the global population of around 8 billion, that's a tiny percentage so by your reckoning they must have been harmless.

-

lchinitz at 00:05 AM on 4 May 2024
Skeptical Science News: The Rebuttal Update Project

Hi all,

In a previous thread I tried to convince people that this site needed to address a specific topic. I don't think I managed to do that, but I'd like to try again here.

The topic title would be something like "The Cure is Worse than the Disease." The argument to be addressed is that advocates of changes to address GW do not ever address the negative effects those changes would have, especially on less affluent people who could not pay more for gas to get to work, energy to heat their homes, food that is more expensive due to transportation costs, etc.

The response would need to include those negative effects in a cost/benefit analysis, and yet still (likely) conclude that changes need to be made.

I'm raising this again because I just saw this same argument made, again, by George Will in the WaPo, quoting an article in the WSJ. A few clips:

Will article:

https://www.washingtonpost.com/opinions/2024/05/01/rising-threat-nuclear-war-annihilation/

"A recent peer-reviewed study of scientific estimates concludes that the average annual cost of what the excitable U.N. secretary general calls “global boiling” might reach 2 percent of global gross domestic product by 2100."

WSJ article:

https://www.wsj.com/articles/follow-the-science-leads-to-ruin-climate-environment-policy-3f427c05

This is behind a paywall, but the first paragraph sort of lays out the argument. "More than one million people die in traffic accidents globally each year. Overnight, governments could solve this entirely man-made problem by reducing speed limits everywhere to 3 miles an hour, but we’d laugh any politician who suggested it out of office. It would be absurd to focus solely on lives saved if the cost would be economic and societal destruction. Yet politicians widely employ the same one-sided reasoning in the name of fighting climate change. It’s simply a matter, they say, of “following the science.”"

So the basic argument here is that climate change is not a big enough threat to warrant the cures being proposed, and that any reasonable analysis would show that to be the case. Apparently those cures lead to "societal destruction."

I think we should rebut that. To be clear, this is different from the "It's not bad" rebuttal. That one says "yes it is bad." What is needed here is an analysis that says (1) yes there will be pain involved in making changes to address GW, but (2) that pain is justified by the badness that will result from not making those changes.

If I am able to get anyone to agree that this makes sense, I'd like to work on it with anyone else interested.

-

Bob Loblaw at 22:48 PM on 3 May 2024
CO2 is just a trace gas

jjones1960 @ 54:

After two months, the best you can come up with is an empty assertion that CO2 is a trace gas? On a post/thread that is devoted to demonstrating why that is such a bogus argument?

Well, let's review the calculation that you provide or reference, in support of your claim that CO2 "cannot trap a significant amount of heat anyway."

...oh, wait. You did not actually provide any calculation. Unfortunately for you, the people that have done the calculation come up with a different conclusion.

I miss the days when contrarians/deniers could actually put together a reasonable argument (however wrong) that presented some actual analysis (however wrong) that supported their positions. These days, it seems more and more that contrarians commenting here have nothing to say that goes beyond a 240-character slogan.

-

Eclectic at 22:29 PM on 3 May 2024
CO2 is just a trace gas

JJones @54 & prior :-

Your "trace" argument sounds a very useful one . . . useful in all sorts of situations ~ such as by the Flat-Earthers who will say: "The Earth must be Flat because [JJones] can only see a trace of surface curvature, from wherever he stands."

Or do I detect a trace of leg-pulling by you?

~ If so, then Congratulations [plus a trace of irony] .

-

JJones1960 at 17:58 PM on 3 May 2024
CO2 is just a trace gas

Bob Loblaw @ 51:

“CO2 is not "colourless" when it comes to infrared radiation. Just because JJones1960 can't see it doesn't mean it doesn't happen.”

The point that you miss is that CO2 is a trace gas, therefore cannot trap a significant amount of heat anyway.

OPOF @52:

Your quote:

“Tropospheric ozone (O3) is the third most important anthropogenic greenhouse gas after carbon dioxide (CO2) and methane (CH4).”

The point you miss is that ozone traps heat in relation to CO2 and methane as the ‘third most important greenhouse gas’ but that is IN RELATION to those gases. My point is that those gases don’t and can’t trap a significant amount of heat because they are in trace amounts, therefore neither would ozone.

-

wilddouglascounty at 01:10 AM on 2 May 2024
Pinning down climate change's role in extreme weather

Yes, you're correct in pointing out the multiple causal factors in a system that contribute to the performance of a system, whether it be in a marathon or the climate. This can and does contribute to distortion and manipulation by those who want to detract.

My point is that attribution should be a conversation about a variable, i.e. carbon emissions, not the measuring tool, i.e. climate change.

-

Bob Loblaw at 00:06 AM on 2 May 2024
Pinning down climate change's role in extreme weather

Wild:

In the steroids analogy, one can always say "it's not the steroids, it's the training". But the use of steroids allows for faster recovery from the training, which allows more training, more strength, more endurance, etc. The path of causality is not direct. It's not simply "steroids", it's "steroids via this path..."

I think this is similar to what you are saying about climate - the root cause is not "climate change", it's fossil fuel use, which leads to X, Y, and Z.

Causality is a whole can of worms that has many nuances. Wikipedia's article seems to be quite reasonable. People arguing against climate science's position on fossil fuel-driven climate change often distort those nuances. That's an old strategy, used in the tobacco wars, the fight to reject evolution, etc.

-

wilddouglascounty at 23:13 PM on 1 May 2024
Pinning down climate change's role in extreme weather

Yes, you are precisely correct, Bob (and calling me Wild is just fine!). The point I am making in my analogy is that the "steroid" in the climate change dynamic is not climate change; it is fossil fuel use, or more generally all human activities that contribute to increased carbon emissions, which are overwhelming the ability of the system's sinks to absorb them fast enough to keep the system in equilibrium. It's the carbon-emitting activities that cause heat retention, which results in increasingly extreme weather events, which causes climate change. Saying that climate change CAUSED the extreme weather muddies the understanding of what triggered what and what to do about it.

Hope this helps! Attribution studies should be pointing the finger at increased carbon emissions, not climate change, at the steroids, not the changing averages, that's all.

-

BaerbelW at 18:23 PM on 1 May 2024
Tree-rings diverge from temperature after 1960

Please note: a new basic version of this rebuttal was published on May 1, 2024 and includes an "at a glance" section at the top. To learn more about these updates and how you can help with evaluating their effectiveness, please check out the accompanying blog post @ https://sks.to/at-a-glance

-

Eclectic at 15:40 PM on 1 May 2024
Welcome to Skeptical Science

Brtipton @123 :

Bob, you are correct. As you know, roughly 83% of society's energy use is coming from fossil fuels. And total energy use is continuing to increase. And it is unhelpful & misleading, when "renewable" wind & solar gets reported not as actual production, but as the potential maximum production (the real production being about 70% lower, on average, than the so-called "installed capacity").

However, the biggest need is for more technological advancement of the renewables sector (and especially in the economics of batteries). Maybe in 15-20 years, the picture will look much brighter. And maybe there will be progress in crop-waste fermentation to produce liquid hydrocarbon fuel for airplanes & other uses where the (doubtless expensive) liquid fuels will still be an attractive choice.

Carbon Tax (plus "dividend" repayment to citizens generally) would be helpful ~ if political opposition can be toned down. But technological advancement is the big requirement, for now. There is political opposition to more-than-slight subsidies to private corporations . . . but surely there is scope for re-directing "research money" into both private and non-profit research ~ so long as it can avoid being labelled as "a subsidy". Wording is important, in these things.

With the best will in the world, it will all take time.

-

brtipton at 10:41 AM on 1 May 2024
Welcome to Skeptical Science

I spent a large part of my career investigating, exposing and debunking scientific and engineering boondoggles or fraud within US DOD.

The SCIENCE behind climate change is about as well done as humanly possible. I have found zero politically motivated exaggeration of the situation on the part of the climate scientists. If anything, many reports have been watered down somewhat on the positive side.

Unfortunately, the opposite is true on the climate SOLUTIONS side of the coin. While all of the statements I can find are legally and scientifically accurate, they are highly misleading, creating a false sense of progress.

This became painfully evident during the 2022 meeting of the World Climate Coalition's conference on finance, when the ONE climatologist who spoke correctly pointed out that ALL efforts to date have had no measurable effect on reducing atmospheric CO2 levels. In fact, the rise in atmospheric CO2 levels is accelerating. The MC followed up with "well, that's unfortunate. Let's move on to the good news." That session was then not published on the conference website.

Examples:

US Energy Information Administration (EIA) data states that about 2/3 of planned new generating capacity is green (correct). They omit that new generating capacity is 1.2% of US total consumption, and 2/3 of 1.2% is 0.8% PER YEAR for US conversion from fossil fuels to renewables.

The same source correctly states that about 25% of US sustainable energy comes from wind, but obscures that only 11% of total consumption is sustainable. This results in installed wind accounting for 4% of US total consumption. Note: That is INSTALLED wind, not ACTIVELY operating wind. A casual drive or fly by usually shows a large percentage of wind turbines are inactive. I have been unable to find data documenting the actual operating levels.

An article in the UK Guardian, about a year ago, reported that the first UK offshore wind turbine was operating. Based on their reported number and size of turbines, the entire installation, when completed, would generate about 1.9% of UK total consumption.
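
The arithmetic in the first two examples can be checked with a short sketch. It uses only the percentages quoted above and treats them as exact, which is an assumption; the variable and function names are illustrative, not EIA terminology. With these inputs, 25% of 11% comes out nearer 2.75% than 4%, so the 4% figure presumably rests on somewhat different inputs than the rounded ones quoted.

```python
# Figures quoted in the comment above, treated as exact (an assumption).
new_capacity_share = 0.012   # new generating capacity as a share of total US consumption
green_fraction = 2 / 3       # share of that new capacity that is green
sustainable_share = 0.11     # sustainable share of total US consumption
wind_fraction = 0.25         # wind's share of sustainable energy


def share_of_total(part_of_subset: float, subset_of_total: float) -> float:
    """Return the share of the total taken by a part of a subset."""
    return part_of_subset * subset_of_total


green_conversion_per_year = share_of_total(green_fraction, new_capacity_share)
wind_share_of_total = share_of_total(wind_fraction, sustainable_share)

print(f"Green conversion per year: {green_conversion_per_year:.1%}")
print(f"Wind share of total consumption: {wind_share_of_total:.2%}")
```

Swapping in unrounded source figures would show how sensitive each headline percentage is to the inputs.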

This LinkedIn article digs further into the state of affairs on "solutions": https://www.linkedin.com/pulse/when-does-megwatt-114-watts-bob-tipton-asdfc/?trackingId=8eOLtQUcTwizRZ8AN%2Fe4Pg%3D%3D

All of these are observations and attempts to discover core facts and are in need of skeptical review. As a skeptical reviewer, I welcome this.

There is an engineering adage: you cannot control what you cannot sense. If our leaders do not know the true state of affairs, it is not possible for them to make effective decisions. It's not enough to put laser focus on the accuracy of the risk reports from the climatologists while ignoring the exaggerated capabilities of the solutions we are staking our success on.

In my OPINION, the tools we have are not adequate to win this battle. There are few to no effective efforts to develop new tools. The vast majority of our best and brightest minds are bogged down adding more volume to a case which is already well proven. Further documentation of our impending mass extinction is a poor use of strategic resources.

The true battlefield we are on is one of COST to the consumer and TAXATION of the taxpayers. Until we have solutions where the green way is the cheap way, we will be pushing a boulder up a mountain. When we achieve that point, progress will be rapid and viral.

Bob Tipton

Cofounder [Howard] Hughes Skunkworks

-

Charlie_Brown at 02:32 AM on 1 May 2024
Simon Clark: The climate lies you'll hear this year

Martin Watson @ 5,

Bob Loblaw and Eclectic provide good explanations. To add to them, look up Kirchhoff’s Law for radiant energy: Absorptance = Emittance when at thermal equilibrium. Understanding this concept will go a long way toward helping understand the mechanism of global warming. Combined with the atmospheric temperature profile, it is key as to why global warming is a result of increasing CO2 and CH4 in the cold upper atmosphere. It explains why absorption in the lower atmosphere does not prevent radiant energy in the 14-16 micron range from being transferred to the upper atmosphere. Consider a 3-step process: 1) absorb a photon, 2) collisions bring adjacent molecules to the same temperature, 3) emit a photon. It might seem like a pass-through of photons, but think of it as conservation of energy, not conservation of photons. Thus, absorption and emission are functions of temperature. The atmospheric temperature profile is controlled by several factors including adiabatic expansion, condensation, convection, and concentration of greenhouse gases. When these factors are not changing, the temperature profile is fixed. Temperature controls radiant energy. The temperature changes only when something upsets the energy balance and steady state equilibrium temperature, like increasing greenhouse gas concentrations.

-

Eclectic at 00:49 AM on 1 May 2024
Simon Clark: The climate lies you'll hear this year

Martin Watson @11 and prior :

thanks for that info ~ and yes, as Bob Loblaw says, the NoTricksZone website is indeed pretty much a complete waste of time.

Note the names Pierre Gosselin and Kenneth Richard attached to the "NTZ" article you linked to. These two names have a long history of showing a shameless disregard of truth & probity, and they appear to have no hesitation in trotting out a pile of misleading half-truths ~ year in, year out. Or quarter-truths. Or worse.

NoTricksZone may have the occasional worthwhile article ~ but I have never yet come across one there (admittedly I haven't bothered to make an extensive search of that website). NoTricksZone is the sort of website which you might use to kill some time reading . . . if it's a wet weekend . . . and you absolutely, absolutely , have exhausted every other avenue of mental entertainment.

-

Bob Loblaw at 00:19 AM on 1 May 2024
Simon Clark: The climate lies you'll hear this year

Martin:

To see an example of the sort of "tricks" used by NoTricksZone, you can read this pair of old posts on the 1970s global cooling myth:

https://skepticalscience.com/70s-cooling-myth-tricks-part-I.html

https://skepticalscience.com/70s-cooling-myth-tricks-part-II.html

-

Bob Loblaw at 00:05 AM on 1 May 2024
Pinning down climate change's role in extreme weather

Wild (may I call you Wild?):

...but if you look at that same runner's "best times of the year", and over a few years you see that "best time" is also dropping at a rate similar to the average time, then can you not link that "best time" decrease to steroid use?

Over a year, in multiple marathons, you will have other factors affecting the time. The course. Weather (temperatures, winds). Fatigue. Seasonal factors such as training schedules. When you take all that into account, and after 10 years of steroid use the runner suddenly has a "best time" that he could not have come close to before he started steroids (and his worst time of the year is better than his "best time" from 10 years ago), then isn't it safe to say he probably would not have set that "best time" without steroids?

I think the article is quite clear about this distinction. Climate scientists do usually get this right - although media articles often don't do so well.

-

Martin Watson at 00:05 AM on 1 May 2024
Simon Clark: The climate lies you'll hear this year

Thanks, Bob Loblaw

-

Bob Loblaw at 23:55 PM on 30 April 2024
Simon Clark: The climate lies you'll hear this year

Martin Watson @ 8:

As a general rule, you can assume that any paper that is linked to at NoTricksZone will not say what NoTricksZone thinks it says. That site is pretty much a complete waste of time.

-

Bob Loblaw at 23:50 PM on 30 April 2024
Simon Clark: The climate lies you'll hear this year

Martin Watson @ 5:

From a quick reading, there is nothing wrong with the information presented in the link you provide. It looks like an accurate discussion of what happens to the energy contained in an IR photon when it is absorbed by a greenhouse gas (CO2 or otherwise). That energy is almost always lost to other molecules (including non-greenhouse gases such as oxygen and nitrogen), and this leads to the heating of the atmosphere in general.

The article you link to also goes on to explain how higher temperatures in the atmosphere lead to more collisions with CO2 molecules (or other greenhouse gases), which will increase the rate at which they emit IR photons. And it explains how those are emitted in all directions, and how this leads to the greenhouse effect.

Just because very few absorbed photons lead directly to an immediate photon emission by CO2 does not mean that the energy is lost forever and the energy is not eventually emitted as a photon. The complete 100% of the absorbed photon energy is added to the atmosphere, and it continues to remain in the atmosphere until it is eventually emitted out to space or absorbed at the surface.

Eli Rabett's blog has an excellent discussion of this same factor.

In other words, that article is an accurate description of exactly the process by which greenhouse gases such as CO2 lead to warming of the atmosphere. It provides nothing that represents a refutation of modern climate science (the past 100+ years). The article does not mean what the people are claiming it means.

If you are in a debate with someone making this argument, perhaps you can try asking them "what happens to the other 99.998% of the energy?" Or perhaps ask them "why are you referring to an article that accurately describes the greenhouse effect and how it causes warming, as if it refutes it?"
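
The 99.998% figure follows directly from the claim under discussion (2 direct re-emissions per 100,000 absorptions). A minimal sketch of that bookkeeping, using only those two numbers; the function name is hypothetical and the absorbed energy is normalised to one unit purely for illustration:

```python
def split_absorbed_energy(direct_emitters: int, absorbers: int) -> tuple[float, float]:
    """Split absorbed photon energy into direct re-emission and collisional transfer."""
    direct = direct_emitters / absorbers
    collisional = 1.0 - direct
    return direct, collisional


# Numbers taken from the claim being discussed, not from any measurement here.
direct, collisional = split_absorbed_energy(2, 100_000)

print(f"Re-emitted directly by CO2: {direct:.3%}")
print(f"Passed to N2/O2 by collision: {collisional:.3%}")

# The two shares account for all of the absorbed energy.
assert abs(direct + collisional - 1.0) < 1e-12
```

The sketch only restates the accounting: the share that is not re-emitted directly is handed to the surrounding air, which is the heating described above.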

-

Martin Watson at 23:43 PM on 30 April 2024
Simon Clark: The climate lies you'll hear this year

Hi Eclectic

Thanks for the reply.

Yes, it was another website making the claim that this research meant the effect of CO2 was infinitesimally small. I then tracked down the geoexpro as the original source of the info. I have to confess I didn't understand it!

This was the website where I first read it:

-

Eclectic at 23:30 PM on 30 April 2024
Simon Clark: The climate lies you'll hear this year

Martin Watson @5 ,

the absorption and re-emission of IR-photons by CO2 molecules is discussed in "Most Used Climate Myths" Number 74 ~ check the top left of (every) page on the SkepticalScience site. [Click on View All Arguments]

The energized CO2 molecules then immediately transfer energy (kinetic) to neighbouring molecules (being mostly N2 and O2). Much the same thing happens with other GreenHouse Gas molecules, e.g. water molecules etc.

And N2 and O2 molecules transfer energy by impact to their neighbours ~ including to CO2 as well. All these impacts happening at a rate of billions per second.

Therefore, even though the IR-photon emission "percentage" is ultra-low for a particular molecule of CO2 or other GHGas . . . the billions of impacts produce an emission of a sea of photons per cubic millimeter of air.

Also, the geoexpro article you link to, goes into all this in a more detailed way.

Martin, I did not see that article make a suggestion that CO2 had an "infinitesimally small" global warming effect. Have I missed something ~ or were you confusing your memory with some other article elsewhere on the internet? It would be interesting to examine who or what was making the claim that CO2 (or H2O or other GHGasses) was inert . . . and was making a claim that GreenHouse-type global warming does not exist. Because such a claim goes against all the evidence gathered during the last 100+ years of investigation by physicists.

-

wilddouglascounty at 23:29 PM on 30 April 2024
Pinning down climate change's role in extreme weather

Not to belabor it too much, but the relationship between climate change, the causes of climate change, and extreme weather is the same relationship as exists between a marathon runner's average running time, his use of steroids, and his best running time.

Suppose a marathon runner's average time has been dropping since he began taking steroids, from 3 hours to 2 hours 50 minutes, and he runs the next marathon in 2 hours 35 minutes, or 15 minutes faster than his average. The real attribution of this change goes to his continuing steroid use, so it seems a bit wonky to attribute the fast run to his changing average.

This is what we are doing when we say that climate change CAUSED an extreme weather event. I think scientists need to be very clear when talking about causality, because linking the changing profiles of weather events to the changing average, or the changing climate, is confusing the causes with the measurements, which is not as clear as linking it to the increased amount of greenhouse gases in the atmosphere and oceans caused by human activities, with fossil fuel use near the top. This also clarifies the difference between the nature of the current changes in the climate we are experiencing and past climate fluctuations caused by other changes in our climate system: Milankovitch cycles, volcanism, etc.

-

Bob Loblaw at 23:27 PM on 30 April 2024
Simon Clark: The climate lies you'll hear this year

nigel @ 4:

I have to admit that I did not read the link to Bendell's web page before commenting on the portions you posted. Reading the link, I still don't like the way he refers to "elites" and such. What the heck is an "elite"? Although wiktionary includes a definition of "Someone who is among the best at a certain task", it also includes a definition of "A special group or social class of people which have a superior intellectual, social or economic status as the elite of society".

In the context of climate, economic, and social debates, the latter definition is probably closer to what is intended - but it also becomes a dog-whistle for "those uppity people that are trying to control us". The wiktionary definition uses the word "superior" - but that implies some sort of measurable scale by which the ability or status can be determined. If we consider "the elite" as people that have earned that status through demonstrated ability, then it's not a pejorative. In dog-whistle politics, the term "elite" has the implied meaning that the individual or group is unjustified in asserting any sort of superior position.

In that context, Bendell probably has some sort of point to make - but I think he has lost the battle by accepting the framing of the contrarians. The vagueness of the term "elite" works to their advantage - we may not know who "the elite" are, but we know they are Bad™. There is an innate resentment of that vague group, and framing the debate that way feeds the anger (to the advantage of the contrarians).

It used to be an argument of "but that's communism", and we now see "woke" being used in the same fashion. Apply a term everyone "knows" is Bad, and avoid actually making a concrete argument. It's not a new approach.

In reading Bendell's full post and his About page, I also see that he is not a climate scientist - and that some of his comments about contrarian arguments (e.g. climate models) demonstrate a lack of understanding of why some of those contrarian arguments are wrong.

-

Martin Watson at 22:31 PM on 30 April 2024
Simon Clark: The climate lies you'll hear this year

I am really hoping that somebody will be able to debunk the following claim in a way I can understand, or point me to an article which already does that. Yesterday, I was Googling about climate change and I came across a claim about CO2 and photons. Basically, it was saying that out of every 100,000 CO2 molecules which absorb a reflected IR photon from the surface, only 2 will actually re-emit that photon. Instead the other 99,998 molecules will bump into a molecule of nitrogen or oxygen. And the claim was this means the contribution of CO2 to global warming was infinitesimally small. It seems to be referencing this article. Thanks.

geoexpro.com/recent-advances-in-climate-change-research-part-ix-how-carbon-dioxide-emits-ir-photons/

-

nigelj at 05:26 AM on 29 April 2024
Simon Clark: The climate lies you'll hear this year

Bob Loblaw.

I agree entirely that the first paragraph was not well written. I almost didn't post it, but I felt it was needed for context, and cutting bits out of it is tedious work. However, it looks like he's just having a moan about mainstream media by suggesting The Guardian is far from perfect and also that its readers are allegedly unaware of the views of the current contrarians (which I would dispute). If you even just quickly scan the link, it's clear the writer is a bit of a media sceptic himself and also a bit suspicious of elites.

This is why I highlighted material in italics in the second paragraph, which seemed to summarise the main point of his article: the anti-elite and anti-globalisation agenda and how that's a convenient excuse to do nothing about climate change. I think you seem to have got distracted by the other stuff.

-

Bob Loblaw at 03:53 AM on 29 April 2024
Simon Clark: The climate lies you'll hear this year

nigel:

Reading that quote from Jem Bendell in comment #1, I can't tell if the first paragraph represents an argument against standard climate science, or an argument against the contrarian/denial viewpoint. It's not very well worded. In the second paragraph, it seems more clear that he thinks the contrarian viewpoint is not well supported.

When he talks about "the elites" and people being in the Guardian/BBC/CNN "bubble" and "the whole agenda on climate change", it certainly looks like he accepted some rather bogus arguments against climate science. Personally, I don't accept climate science because of what I read in newspapers, web pages, or media outlets - I accept climate science from having learned it in university classes, teaching it in university, and reading the scientific literature and reports summarizing that literature such as the IPCC.

That final sentence in paragraph one finally reassures me that Jem Bendell has not drunk the contrarian koolaid, so the second paragraph is more palatable.

The unfortunate reality is that expecting the general public to become more scientifically-literate is a tough row to hoe. The tier-1 contrarians, who know they are peddling lies, know that emotional and bogus rhetoric will convince a lot of people. There is a variant of the old saying:

You can fool some of the people all of the time, and all of the people some of the time...

...and that's enough.

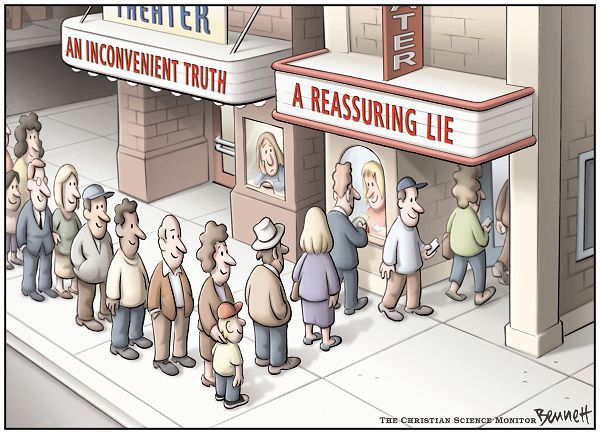

There is also the old Christian Science Monitor cartoon:

SkS used to have a web page that highlighted contradictions in "contrarian" viewpoints. Things such as "the temperature record is unreliable" while using the same temperature record to claim "no warming since 1998 2016". (The SkS page was taken down because it was too much work to maintain. There is an archived version here.)

The contrarian "science" is full of such contradictions. The ability to believe multiple contradictory viewpoints at the same time is a feature of compartmentalization.

-

nigelj at 06:12 AM on 28 April 2024
Simon Clark: The climate lies you'll hear this year

Sorry the link got messed up. Corrected link:

jembendell.com/2023/10/10/responding-to-the-new-wave-of-climate-scepticism/

Moderator Response:[BL] If you do not include the "https:" part of the link when you create it, it looks like the SkS web code assumes it is supposed to be an SkS link.

-

nigelj at 05:54 AM on 28 April 2024
Simon Clark: The climate lies you'll hear this year

Some commentary on a recent form of climate scepticism that I thought was interesting:

Prof Jem Bendell: When my book Breaking Together came out in May, some of my climate activist friends were surprised that I gave significant attention to rebutting scepticism on the existence of manmade climate change. I also surprised some of my colleagues at COP27 a year ago, when I gave a short talk on the rise of a new form of scepticism. That new form is couched in the important desire to resist oppression from greedy, hypocritical and unaccountable elites. I think the surprise of some that we still need to respond to climate scepticism reflects the bubble that many people working on environmental issues exist within. That’s a bubble of Western middle classes who believe they are well-informed, ethical and have some agency, despite relying on the Guardian, BBC or CNN for much of their news. Outside that bubble, there has been a rise in the belief that authorities and media misrepresent science to protect and profit themselves, while controlling the general public. That was primarily because of the experience of the pronouncements and policies during the early years of the pandemic. When people who are understandably resistant to that Covid orthodoxy have discovered the way elites have been using concern about climate change to enrich themselves, such as through the carbon credits scam, many have become suspicious of the whole agenda on climate change. Those of us who know some of the science on climate, and pay attention to recent temperatures and impacts, can feel incredulous at such scepticism. My green colleagues ask me: “How can someone deny what’s changing right before their very eyes?”

My correspondence with people expressing a new type of freedom-defending climate scepticism has led me to conclude that something else is needed than simply correcting their views with clear logic and evidence. My answers to the questions, which you can read below, may not have been perfect (he presents a list of climate myths and correct information similar to skepticalscience.com) . But the responses from sceptical people have sometimes seemed irrational. For instance, one type of response is an inconsistent switching between epistemologies (the fancy word for describing our view of how we come to know things about the world). That inconsistency involves sometimes claiming to reject all scholarship as untrustworthy instead to trust only firsthand experience. It is inconsistent because they ignore lots of firsthand experience contrary to their view, while also reaching for second hand and poorly referenced or debunked scholarship (often in the form of a blog or video clip) that might seem to support their view. Another irrational approach is the repetition of a claim that has already been debunked, which is the intellectual equivalent of raising one’s voice. One example is sending a blog or a video that repeats previously debunked claims. Another approach is to switch topic on to values and principles, while repeating false binaries given to them by the media. Specifically, that is the binary that climate change can’t be real because globalist elites are profiting from the issue and trying to control us. Instead, both the former and latter can be true at the same time (yep, quite elementary logic). Finally, the most widespread and pernicious irrationality is to regard these discussions as just one topic, and then choose criticism of the globalists as being the most important response, rather than understanding the situation of the natural environment and responding to it in a better way. 
That happens when people think “after all this debate, I’m not sure about climate change but I’m certain about resisting the globalists, so I’ll focus on that.” If one’s motivation for inquiring into public affairs is to feel like a moral agentic person and experience a burst of energy from belonging to the good guys in a fight, then such a conclusion is seductive. That is especially because it requires no painful recognition of the ecological tragedy, no sacrifices, no risk taking, no changing of lifestyles, and no complicated participation in community projects. It also generates easy likes on social media from people similarly addicted to narratives that avoid difficult self-reflection and change. Unfortunately, the result of this irrationality is people don’t begin to prepare emotionally and practically for what has already started unfolding around them.

wijembendell.com/2023/10/10/responding-to-the-new-wave-of-climate-scepticism

(I don't entirely agree with the writer's own tendency towards criticism of globalism and elites, of the mainstream authorities' motives, and of Covid lockdown policies, but I thought he makes some good points on other issues, as above.)

Moderator Response:[BL] Link fixed.

-

Bob Loblaw at 05:28 AM on 26 April 2024Climate - the Movie: a hot mess of (c)old myths!

The similarities of climate and Covid (or other) denial psychology are indeed of interest, and sort of loosely on-topic, but let's try not to get into detailed Covid discussions.

For the psychology aspect, I have several times referred to the web site The Authoritarians. There is a long ebook there, plus a few other blog posts as follow-ups. It discusses the learnings of a social psychology prof that spent a career looking at what he called "authoritarian followers". That is to say, he was more focused on the psychology and behaviour of the people that follow authoritarians - not the authority figures themselves (although that is also discussed).

Sadly the most recent note on that web page is the announcement that the author - Bob Altemeyer - had passed away. Eclectic's note that Dan Kahneman had passed away was something I had read about in my local paper.

As for the sequence of "denial" thought patterns, it has been long discussed that the sequence often goes along these lines:

- It's not happening

- It's not us

- It's not bad

- It's too late, and why didn't you warn us?

Philippe's comment about "a matter of when, not if" regarding Covid extends to my experience. As a grade school student, I learned that the Spanish Flu had killed more people than the first world war, and there was concern it could happen again. Any post-Covid cries of "we never expected this" seemed rather hollow - given I learned about it in grade school.

In a similar vein, on a weather-related topic, I remember watching a cable news channel reporter interviewing a local police official on the US east coast, a few days before a hurricane was expected to hit. (This was in the early days of TV sending reporters into the middle of disasters before they happened.)

- The reporter asked "How long have you been preparing for this hurricane?"

- The official said "25 years."

- He continued by pointing out that they lived in an area prone to hurricanes. You don't wait until one is in the forecast before you decide how to deal with it. You know that you are eventually going to need those plans.

We can see previous commenters on this thread that firmly believe that action is not warranted until after harm has happened.

-

Eclectic at 04:14 AM on 26 April 2024Climate - the Movie: a hot mess of (c)old myths!

It would be interesting to see an intensive study of the overlap in mentality of Climate-Deniers and Anti-Vaxxers and Flat-Earthers. All three groups show a rigid delusional thinking, which has (thus far) proved to be impervious to rational discussion/education.

But delusions & other irrationality of thinking would be a hard topic to study well ~ and possibly it would be somewhat unethical to spend the effort & money in studying such intransigent minds.

# Note : The prominent psychologist, Prof. Daniel Kahneman (author of "Thinking, Fast and Slow") is known for his studies of the "kinks" in the human mind. His death at age 90, was announced last month.

-

Philippe Chantreau at 02:39 AM on 26 April 2024Climate - the Movie: a hot mess of (c)old myths!

Indeed, it would be inappropriate to launch the C subject here, although the conspiratorial thinking aspect is shared with many other efforts of organized denial found in other subjects. I personally have very little patience for it, after working in the ICU throughout a crisis that anyone who ever had a microbiology class knew was not a matter of if but a matter of when. It may be the only one I see in my lifetime but certainly won't be the last.

-

transposer85 at 20:53 PM on 25 April 2024Climate - the Movie: a hot mess of (c)old myths!

One Planet Only Forever @27

I am with you on this issue. I am currently trying to present such points in the best way to inspire deniers to change on a channel where somebody posted this film which garnered some positive resonance. As an environmentalist, vegan activist who studied ecology and human ecology in which, in 1992 we delved into the idea of man-made climate change at a time where it was generally seen as a laughing stock, I wanted to chime in with some relevant points:

The channel I am referring to is actually more Covid centred. I find myself in the fairly unusual position of being very pro consensus science in the field of environment, whereas I find medical science is riddled with corruption, mistruths and effective lobbyism which came to a head with Covid together with coercion at a level where it is entirely rational to assume plausibility of at least some conspiratorial aspects. Plenty of evidence is there.

I am mentioning this partly because you touched on the topic but more importantly because I believe it is important to understand the psychology of climate deniers in a more nuanced manner, i.e. in more depth. Unfortunately, as far as I understand it, many people with legitimate concerns regarding Covid measures, associated discrimination and other significant costs to society got drawn into certain (predictably) politically motivated groups and, for whatever reason (emotional triggers, legitimate lack of trust in the system for some aspects, lack of time for reviewing the details (a big one), etc.), have just fallen for climate denial propaganda.

This is at least one part of the spectrum of the climate denial "group" which theoretically could be won back to rationality and trust in the areas of science where trust is actually deserved.

It may be that more emotional people are attracted to these traps. The more we can refine our understanding of the psychology of transformation, the better chance we have of inspiring rationality and deserved trust. Unfortunately, simply presenting facts normally doesn't do it very well. So we have to get creative, as scientific mindsets who are characteristically not so emotional on average. It's very important for people to sense they have been heard and taken seriously, even if we strongly disagree with their perceptions and belief systems.

In short, we need to apply kindness and compassion even when we feel like throwing that out of the window and slamming people for being ignorant et cetera. An invitation to follow the money could be useful, as you pointed out. Funding and wages in science vary a great deal depending on the type of science. Environmental science is probably, on average, at the lowest end of financial return, compared with "science" that largely comes straight out of industries wishing to make profits on their products.

I'm not going to hammer out my evidence for the C topic because my comment will most likely be deleted. I am new here so don't know how this works yet but if you really are interested I will (somewhat begrudgingly) spend even more of my free time to lay it out in a private message ;) otherwise we may have to just agree to disagree.

Moderator Response:[BL] Welcome to Skeptical Science.

Yes, let's please try to avoid any lengthy discussion of the C-word here (where C = Covid, not Climate). The comments sections here are intended for discussion of the topics presented in each Original Post. It is not a general forum for discussion of whatever pleases people.

As a new user, the Comments Policy is your guide. (It is also the guide for the moderators.)

Violations of the comments policy will see a gradual sequence of escalating actions by moderators:

- Gentle reminders of the policy

- More vehement warnings

- "warning snips", where portions of a comment will be crossed through, but still visible.

- Deletions of portions of comments

- Deletions of entire comments

- Blocking of accounts.

It usually takes a while to reach the final stage. Rapid ascent (descent?) through the process requires really obnoxious behaviour. If you read all the comments on this thread, you can see the moderation comments (green box beneath the comment) that have been added previously. One individual chose to stop commenting here and go elsewhere to complain, rather than follow the simple advice to take the discussion to another thread where it would be on topic - but that was his choice to stop, not ours.

-

MA Rodger at 19:42 PM on 25 April 2024At a glance - Is the CO2 effect saturated?

Eclectic @14,

You say the work of these jokers Kubicki, Kopczyński & Młyńczak failed the WUWT test, being too bonkers even for Anthony Willard Watts to cope-with. I would say Watts has happily promoted work just as bonkers in the past.

And as you say, there is no WUWT coverage of this Kubicki et al 2024 paper although Google shows it is mentioned once in one of the comment threads, as is an earlier paper from the same jokers. Indeed, there are two such earlier papers from 2020 and 2022. Thankfully, these are relatively brief and thus they easily expose the main error these jokers are promoting.

In Kubicki et al (2020) they kick-off by misusing the Schwarzschild equation. The error they employ even gets a mention within this Wiki-ref which says:-

At equilibrium, dIλ = 0 even when the density of the GHG (n) increases. This has led some to falsely believe that Schwarzschild's equation predicts no radiative forcing at wavelengths where absorption is "saturated".
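For reference, the form of Schwarzschild's equation that the quoted passage refers to can be written as below. This is a sketch in the usual notation, which is an assumption here since the thread does not spell it out: $I_\lambda$ is the spectral radiance along the path, $B_\lambda(T)$ the Planck function at the local temperature, $n$ the absorber density, $\sigma_\lambda$ its absorption cross-section and $ds$ a path increment.

```latex
dI_\lambda = n\,\sigma_\lambda\,\bigl[B_\lambda(T) - I_\lambda\bigr]\,ds
```

Setting $dI_\lambda = 0$ only says that $I_\lambda = B_\lambda(T)$ at that point. Since $T$ changes with altitude, the radiance that finally leaves for space still depends on where along the path the last emission happens, which is why "saturated" does not have to mean "no further forcing".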

They then measure the radiation from the Moon through a chamber either filled with air or with CO2 and show there is no difference and thus, as their misuse of Schwarzschild suggests, that the Earth's CO2 is "saturated." In preparing for this grand experiment, they research the thermal properties of the Moon as an IR source and thus tell us:-

The moon. The temperature of its surface varies a lot, but for the part illuminated by the Sun, according to encyclopaedic information, it may slightly exceed 1100ºC.

This well demonstrates that these jokers are on a different planet to us, as it is well known our Moon only manages 120ºC under the equatorial noon-day sun.
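As a rough sanity check on that figure, here is a back-of-envelope radiative-equilibrium estimate for the sunlit lunar surface. It is only a sketch: the solar constant, albedo and emissivity values are round-number assumptions of mine, not values taken from the paper or from this thread, and the function name is purely illustrative.

```python
# Back-of-envelope estimate of the Moon's subsolar surface temperature,
# assuming the surface simply re-radiates the sunlight it absorbs.
SIGMA = 5.670374419e-8    # Stefan-Boltzmann constant, W m^-2 K^-4
SOLAR_CONSTANT = 1361.0   # W m^-2 at 1 AU (assumed round value)
ALBEDO = 0.12             # assumed lunar albedo
EMISSIVITY = 0.95         # assumed infrared emissivity of the regolith


def subsolar_temperature_c(solar=SOLAR_CONSTANT, albedo=ALBEDO,
                           emissivity=EMISSIVITY):
    """Equilibrium temperature (deg C) of a surface facing the Sun directly."""
    absorbed = solar * (1.0 - albedo)                      # W m^-2 absorbed
    temp_k = (absorbed / (emissivity * SIGMA)) ** 0.25     # Stefan-Boltzmann
    return temp_k - 273.15


if __name__ == "__main__":
    print(f"Estimated subsolar temperature: {subsolar_temperature_c():.0f} C")
```

With these assumed inputs the estimate lands a little above 100ºC, in the same ballpark as the commonly quoted 120ºC and nowhere near 1100ºC.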

-

Eclectic at 01:29 AM on 25 April 2024At a glance - Is the CO2 effect saturated?

Thanks for that, MA Rodger @13.

Possibly - just possibly - the ultimate Thumbs-Down on the Kubicki et al. paper . . . is that it has not been trumpeted at WUWT website (which usually trumpets any crackpot paper which seems "anti-mainstream" science). And that's despite many of the WUWT denizens regularly/continually asserting that the CO2-GreenHouse Effect was now irrelevant (because "saturated") or was always non-valid anyway.

Now perhaps I have failed to remember "Kubicki" being a Nine-Day Wonder at WUWT ~ or perhaps I failed to notice "Kubicki" among the mountainous garbage-pile accumulating at WUWT. But as a final check, I used the WUWT Search Function . . . and turned up Nothing.

Something of Contrarian pretensions would need to be pretty bad, not to get 15-minutes of fame at WUWT. But maybe I speak too soon?

-

MA Rodger at 00:15 AM on 25 April 2024At a glance - Is the CO2 effect saturated?

The paper Kubicki et al (2024) 'Climatic consequences of the process of saturation of radiation absorption in gases' is utter garbage from start to finish. When something is so bad, it is a big job setting straight the error-on-error presented.

As an exemplar of the level of nonsense, consider the opening paragraph, sentence by sentence.

Due to the overlap of the absorption spectra of certain atmospheric gases and vapours with a portion of the thermal radiation spectrum from the Earth's surface, these gases absorb the mentioned radiation.

I'd assume this is saying that the atmosphere contains gases (or "vapours" if you are pre-Victorian) which absorb certain IR wavelengths emitted by the Earth's surface. Calling this "overlap" is very odd.

This leads to an increase in their temperature and the re-emission of radiation in all directions, including towards the Earth.

The absorption of IR does lead to "an increase in their temperature" but the emission from atmospheric gases is determined by their temperature. Absorbed IR only very rarely results in a re-emission of IR (and if it does, the IR energy does not cause an "increase in their temperature").

As a result, with an increase in the concentration of the radiation-absorbing gas, the temperature of the Earth's surface rises.

This is not how the greenhouse effect works. For wavelengths longer than the limit for its temperature defined by 'black body' physics (for the Earth, about 4 microns), the planet emits IR across the entire spectrum. The level of emission depends on the temperature of the point of emission which for wavelengths where greenhouse gases operate is not the surface but up in the atmosphere. For IR in the 15 micron band, CO2 will result in emissions to space from up in the atmosphere where it is colder and thus where emissions are less. If adding CO2 moves the height of emission up into a colder altitude, emissions will fall and the Earth then has to heat up to regain thermal equilibrium.

Due to the observed continuous increase in the average temperature of the Earth and the simultaneous increase in the concentration of carbon dioxide in the atmosphere, it has been recognized that the increase in atmospheric carbon dioxide concentration associated with human activity may be the cause of climate warming.

This was perhaps true before the 1950s but the absorption/emission of IR by various gases was identified and measured when the USAF began to develop IR air-to-air missiles. The warming-effect of a doubling of CO2 (a radiative forcing of +3.7Wm^-2) has been established for decades.
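For anyone wanting to see where a number like +3.7Wm^-2 comes from, the widely used simplified logarithmic fit for CO2 forcing reproduces it. The sketch below assumes that fit with a coefficient of 5.35 Wm^-2 and a 280 ppm baseline; neither value is stated in this thread, and the function name is just illustrative.

```python
import math


def co2_forcing(c_ppm, c0_ppm=280.0, alpha=5.35):
    """Approximate CO2 radiative forcing in W m^-2.

    Uses the simplified logarithmic fit alpha * ln(C / C0); alpha = 5.35
    and the 280 ppm baseline are assumptions, not values from the thread.
    """
    return alpha * math.log(c_ppm / c0_ppm)


if __name__ == "__main__":
    # A doubling from the assumed 280 ppm baseline to 560 ppm.
    print(f"Forcing for doubled CO2: {co2_forcing(560.0):.2f} W m^-2")
```

Because the fit is logarithmic, each doubling adds roughly the same forcing, so the effect of extra CO2 diminishes per ppm but does not drop to zero.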

So just like debating science with nextdoor's cat, taking heed of the witterings of Messrs Kubicki, Kopczyński & Młyńczak is a big big waste of time.

-

Theo Simon at 14:34 PM on 24 April 2024At a glance - Is the CO2 effect saturated?

Thanks Bob - and all of you - for taking the time to answer me. I'm just one of many lay people trying to raise the awareness, and we don't always have sufficient science training to be sure of our ground, so it's very helpful when you engage, without too much impatience! Thanks for all you are doing.

-

Bob Loblaw at 23:43 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

Theo:

Taking a quick look at that paper, I see it refers to Angstrom's work in 1900 to support their "saturation" argument. This is already discussed in the Advanced tab of the detailed "Is the CO2 effect saturated?" post that this at-a-glance introduces. Short version - we've learned a few things since Angstrom wrote his paper in 1900.

Searching the recent paper for "saturation", it seems that they are using the typical fake skeptic approach that applies the Beer-Lambert law (which is exponential in nature, and a standard part of radiation transfer theory) to the atmosphere as a whole. That is - they look at whether or not IR radiation can make it through the atmosphere in a single pass.

To nobody's surprise, this turns out to not be the case - IR radiation in the bands absorbed by CO2 rarely makes it directly from the earth's surface to space. The energy in the photons needs to go through a series of absorption/re-emission cycles as it gradually works its way up through the atmosphere. When these processes are included in the calculations, it turns out that this particular flavour of the "saturation" argument falls flat on its face, and adding more CO2 (compared to our current levels) does indeed have an effect.
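The difference between the single-pass view and the layer-by-layer view can be sketched with a toy calculation. Everything below is an illustrative assumption rather than anything from the paper or the rebuttal: a single wavelength (15 microns), a troposphere-only column with a constant lapse rate, an absorber that thins exponentially with height, and made-up total optical depths. The function names are hypothetical.

```python
import math


def planck_15um(temp_k):
    """Planck spectral radiance at 15 microns (W m^-2 sr^-1 per metre)."""
    h, c, k = 6.62607015e-34, 2.99792458e8, 1.380649e-23
    lam = 15e-6
    return (2 * h * c**2 / lam**5) / math.expm1(h * c / (lam * k * temp_k))


def single_pass_transmission(total_optical_depth):
    """Beer-Lambert: fraction of surface radiation escaping with no re-emission."""
    return math.exp(-total_optical_depth)


def outgoing_radiance(total_optical_depth, layers=200, top_km=11.0,
                      surface_k=288.0, lapse_k_per_km=6.5,
                      scale_height_km=8.0):
    """Radiance leaving the top of a layered column.

    Each layer absorbs part of what arrives from below and emits at its
    own (colder) temperature.
    """
    dz = top_km / layers
    mids = [(i + 0.5) * dz for i in range(layers)]
    weights = [math.exp(-z / scale_height_km) for z in mids]
    norm = sum(weights)
    radiance = planck_15um(surface_k)          # start with surface emission
    for z, w in zip(mids, weights):
        d_tau = total_optical_depth * w / norm  # this layer's optical depth
        trans = math.exp(-d_tau)
        layer_temp = surface_k - lapse_k_per_km * z
        radiance = radiance * trans + planck_15um(layer_temp) * (1.0 - trans)
    return radiance


if __name__ == "__main__":
    for tau in (5.0, 10.0, 20.0):
        print(tau, single_pass_transmission(tau), outgoing_radiance(tau))
```

In this toy column the single-pass transmission is already negligible at an optical depth of 10, yet doubling the absorber still lowers the radiance escaping from the top, because the escaping photons come from higher, colder layers. A real calculation needs the full spectrum and a realistic temperature profile, so treat this as an illustration of the mechanism only.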

Executive Summary: the authors of that paper have no idea how the greenhouse effect works, as Eclectic has stated.

Read the full rebuttal here for more discussion - and the details of the Beer-Lambert Law are also discussed in this SkS blog post.

Elsevier is usually considered a reputable publisher, but they screwed up on this one. The rapid passage from "received" to "accepted" is indeed a red flag. The journal - Applications in Engineering Science - is clearly an off-topic journal for this paper. On the page I link to, it mentions "time to first decision" as 42 days, and "review time" of 94 days. If you click on "View all insights", you get to this page that also gives "Submission to acceptance" as 77 days, and "acceptance to publication" as five days. The seven days for this paper (from "received" to "accepted") is, shall we say, a bit shorter than usual?

It is worth noting that several other papers in the same issue also have very short times between "received" and "accepted". Of the four I looked at, none of them had any indication that the authors were asked to revise anything, which is rather unusual. Someone at that journal is in a rush.

(If you click on "What do these dates mean?", below the title/author section of the web page for the paper, it specifically states that "received" is the date of the original submission, and they will say "revised" if a more recent version is submitted - e.g. after review.)

-

Eclectic at 23:18 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

Addendum:

Theo Simon @7 and earlier :-

I should have mentioned that, if you still have a nagging doubt and wonder if Kubicki et al. have really made an astounding breakthrough ~ then the easy course for you is to put your feet up and relax and observe for 6 or 7 months.

Because in October or November this year (2024) the Nobel Committee will maybe be announcing Kubicki & colleagues have won the Nobel Prize in Physics. If that were to happen . . . then all over the world, the enjoyers of scrambled eggs would celebrate a collapse in the price of their favorite egg-dish . . . as a million physicists wipe all the egg off their own faces. And the name Kubicki will rival the fame of Einstein ! (Excuse my flippancy.)

-

Eclectic at 20:30 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

Theo Simon @7 :

Theo, please don't waste your time (or anyone's) in going through the details of that Kubicki paper.

It is just like when you encounter someone's submitted "paper" showing (with an avalanche of complex mathematics & diagrams) why his design for a Perpetual Motion Machine is certain to work beautifully, as soon as he gets finance to build it. You yourself know that there must be one or more errors buried away in the midst of his presentation ~ but why would you bother to search? Life is too short and precious, to spend time dissecting some crackpot ideas which have already been proven to be fundamentally flawed. Proven and re-proven to be garbage.

NoTricksZone is a cynical recycler of nonsenses, to get clicks & profits ~ and happily recycles any garbage which the climate-science denialists themselves keep recycling at intervals. But I admit to some interest in the psychology of how & why some people with science degrees, even professorships - usually Emeritus types - manage to delude themselves in various ways. Yes, they are often misreported . . . and yet they seem mostly motivated by rather extremist political views and are handicapped by their own Motivated Reasoning.

-

John Mason at 19:28 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

Denialist "Gotcha" papers pop up most years, gain a lot of traction in those circles and then decay away to hardly anything, then that's it until the next one comes along!

-

Theo Simon at 19:05 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

Thanks, I did notice that it was taking a position, which I took as a red flag. But I struggled to understand if it was making new claims regarding saturation, based on new experimental evidence, or if the explanations on this page essentially cover it already.

I'd be very interested to know how valid this paper's arguments are and if they have been rebuffed, or found wanting in peer review, as I think this line of attack will be a very popular one in the coming months. I've already met it from numerous trolls elsewhere who currently think it's their "gotcha!"

-

Eclectic at 17:38 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

Theo Simon @4 :

As John Mason says @5 , there are certainly some Red Flags attached to that Kubicki paper ~ including its citations of papers by Harde; by Humlum; and by Idso . . . those prominent luminati of the Alternate Universe.

Theo, to save your reading time in future ~ whenever you see a "gotcha" article in NoTricksZone .com , claiming that the mainstream science (of anything) is quite wrong . . . then there's a roughly 99% probability that the article is a load of taurine excrement [abbreviation = BS ].

Reading the cited [Kubicki] article's Abstract quickly demonstrates that the authors have simply failed to understand the basic physics of the atmosphere & GreenHouse Effect [abbreviation = GHE ]. And this first impression gets confirmed by reading the article's Conclusions, which are comprised of an excessive amount of word salad and bizarro politics.

Kubicki et al. seem to have discovered ideas that have been well & truly debunked . . . many decades ago. If only the authors had troubled to have their "novel" ideas reviewed by experts, before presenting their paper to the world ! They could have saved themselves so much embarrassment, as well as saving dollars.

-

John Mason at 16:53 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

re - # 4: I've just taken a look at that paper. The reason we didn't mention it was that it came out very recently.

This however is part of the conclusion:

"However, the intention of the authors of this article is not to encourage anyone to degrade the natural environment. Coal and petroleum are valuable chemical resources, and due to their finite reserves, they should be utilized sparingly to ensure they last for future generations. Furthermore, intensive coal mining directly contributes to environmental degradation (land drainage, landscape alteration, tectonic movements). It should also be considered that frequently used outdated heating systems burning coal and outdated internal combustion engines fueled by petroleum products emit many toxic substances (which have nothing to do with CO2). Therefore, it seems that efforts towards renewable energy sources should be intensified, but unsubstantiated arguments, especially those that hinder economic development, should not be used for this purpose."

In scientific literature, a conclusion should be about the work that was done, and not an arm-waving diatribe! The Introduction likewise gives its first 400 plus words over to arm-wavy waffle about the IPCC. I'm surprised it got beyond peer review on that basis. Indeed, its submission/acceptance dates (Received 4 December 2023, Accepted 11 December 2023) suggest it never was reviewed. In most cases a period of months divides those two dates because the peer review process is quite slow. These are all warning signs that 'something is up' with this item.
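The gap between those two dates is easy to check. A minimal sketch using only the dates quoted above (the variable names are mine):

```python
from datetime import date

# Dates as quoted from the paper's web page.
received = date(2023, 12, 4)
accepted = date(2023, 12, 11)

# Days between original submission and acceptance.
days_in_review = (accepted - received).days

if __name__ == "__main__":
    print(f"Received-to-accepted: {days_in_review} days")
```

One week from submission to acceptance leaves very little room for reviewers to be found, to read the manuscript and to report back.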

-

Theo Simon at 15:46 PM on 23 April 2024At a glance - Is the CO2 effect saturated?

I am not science trained but trying to understand. This rebuttal doesn't mention the alleged evidence presented in the paper "Climatic consequences of the process of saturation of radiation absorption in gases" by Kubicki and others - or does it? The current denialism talking point is that additional CO2 has now been shown to have no additional warming effect, and claims new proofs of this:

https://notrickszone.com/2024/04/23/3-physicists-use-experimental-evidence-to-show-co2s-capacity-to-absorb-radiation-has-saturated/

-

sailrick at 14:25 PM on 23 April 20242024 SkS Weekly Climate Change & Global Warming News Roundup #16

@ 1. Nigelj

Absurd indeed. The IEA has said that the clean energy transition would save the world $71 trillion by 2050. The cost would be $44 trillion, but there would be $115 trillion in fuel savings.
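The arithmetic behind that net figure, using only the numbers quoted above (in trillions of US dollars; the variable names are illustrative):

```python
# Figures as quoted in this comment, in trillions of US dollars.
transition_cost = 44
fuel_savings = 115

# Net saving is what remains of the fuel savings after paying for the transition.
net_saving = fuel_savings - transition_cost

if __name__ == "__main__":
    print(f"Net saving by 2050: ${net_saving} trillion")
```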

So it's worth doing even without climate change costs.

-

nigelj at 06:56 AM on 22 April 20242024 SkS Weekly Climate Change & Global Warming News Roundup #16

Regarding the story of the week: "Wednesday's study from the Potsdam Institute for Climate Impact Research (PIK), which is backed by the German government, stands out for the severity of its findings. It calculates climate change will shave 17% off the global economy's GDP by the middle of the century." Now compare this with the DICE economic model (William Nordhaus): "The updated results imply a 1.6% GDP-equivalent loss at 3 °C warming over preindustrial temperatures, up from 1.2% in the review for DICE-2016."

The time frames of the two studies look approximately the same. 3 degrees warming would be about mid century. The difference in estimated damages between reducing GDP by 17% compared to 1.6% looks absolutely huge.

You can't reconcile this easily. This has me puzzled so I'm hoping I haven't misinterpreted something. However the DICE model has been heavily criticised by several experts as seriously underestimating the costs of climate change and being an absurd study in its handling of risk assessments.

-

BaerbelW at 20:45 PM on 21 April 2024Scientists tried to 'hide the decline' in global temperature

Please note: the basic version of this rebuttal was updated on April 21, 2024 and now includes an "at a glance" section at the top. To learn more about these updates and how you can help with evaluating their effectiveness, please check out the accompanying blog post @ https://sks.to/at-a-glance

-

John Mason at 16:13 PM on 21 April 2024Climate's changed before

Re - #899: there's a post in the publication-queue regarding Bolling-Allerod events - not sure as to publication-date, but the points made by Eclectic at #900 are relevant. They are regional in their extent.

-

Eclectic at 11:22 AM on 21 April 2024Climate's changed before

Spooky @899 , you should not really be surprised ~ since the OP article is referring to Global temperature changes.

Not to the local rapid changes in the boreal icesheet region (e.g. Denmark, Greenland, Alaska : during the last glacial age) as shown in the Bolling-Allerod warming and in the briefer Dansgaard-Oeschger events. Those local northern regions are affected by "sudden" changes in local oceanic currents ~ both smaller & larger (e.g. the AMOC). But that has little effect on the global scale, except when it involves a massive event like the melting of the Laurentide Ice Sheet (i.e. the Younger Dryas).

In India, the Indian Monsoons (to which you allude) show much fluctuation resulting from very small alterations in local temperatures & winds (winds which may bring more oxygen18-rich water) . . . even in the absence of a 30-year climate change.

For global temperature changes, there need to be global-scale changes in albedo / insolation / particulates / or greenhouse gases.
