Comment Search Results

Search for temperature adjustment

Comments matching the search temperature adjustment:

- Climate Adam: Is Global Warming Speeding Up?

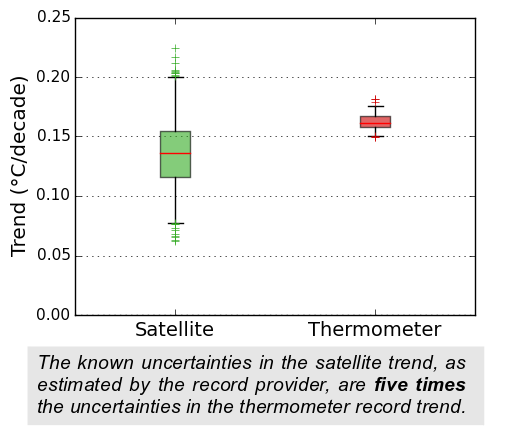

MA Rodger at 17:19 PM on 12 April, 2024

ubrew12,

Tamino subsequently posted an OP titled 'Accelerations' which features this NOAA adjusted data (the last two graphics) showing a pair of break-points in the rate of warming, 1976 & 2013, with the pre-2013 rate being quoted as +0.165ºC/decade and the post-2013 rate measuring a rather dramatic +0.4ºC/decade. But that said, there will be very big 'error bars' on that last value. Additionally, Tamino's adjustments did result in the 2023 temperature being increased (by +0.02ºC) which, given that the cause of the "absolutely gobsmackingly bananas" 2023 temperatures remains unresolved, may be very wrong.

- Is Nuclear Energy the Answer?

scaddenp at 14:26 PM on 15 August, 2023

I know next to nothing about nuclear reactors but I know coal-fired power stations well. When less power is needed, less coal is fed into the furnace (along with many other adjustments, especially to air flow and feedwater), so steam output is reduced. I would assume a nuclear plant similarly reduces output by slowing the nuclear reaction. To me, a partial shutdown means stopping one or more generation units, not reducing steam output.

All steam plants have to reject heat back into the environment to convert steam back to water, usually by cooling towers. High summer temperatures play havoc with this, especially if there are restrictions on the temperature of cooling water going back into rivers. This usually means it is easier (and more efficient) to generate at night. If the plant is close to the limit, then you have to reduce power as the day warms up.

- How big is the “carbon fertilization effect”?

daveburton at 15:36 PM on 13 July, 2023

Rob wrote elsewhere, "greening is now turning into 'browning.' ... fertilization [has now been] overwhelmed by other effects... In other words, the greening has now stopped," and here, "You were making the claim that natural sinks were removing more of our emissions, and that is not the case by any stretch of the imagination."

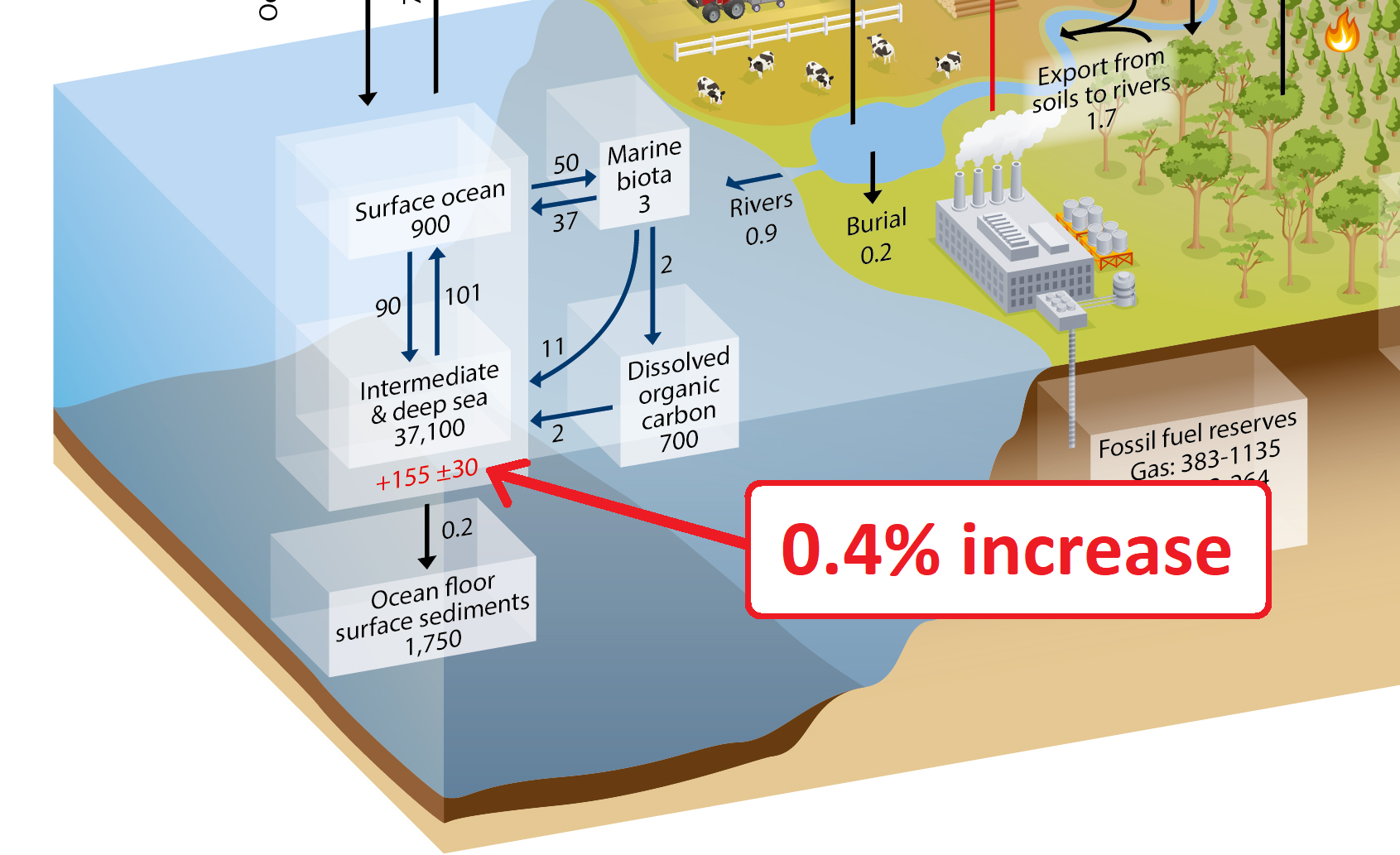

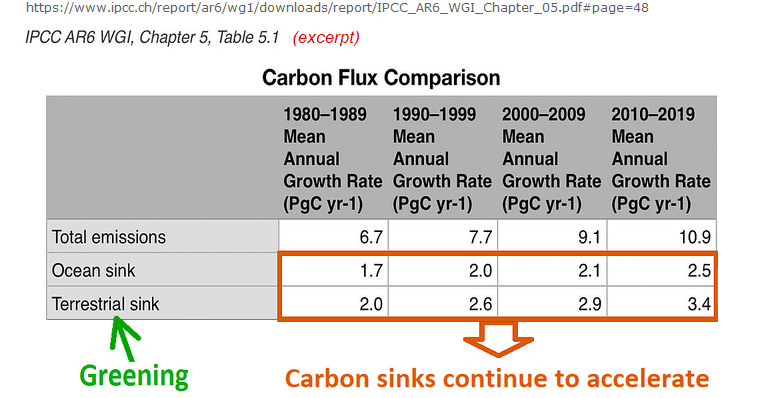

Here's AR6 WG1 Table 5.1, which shows how natural CO2 removals are accelerating:

https://www.ipcc.ch/report/ar6/wg1/downloads/report/IPCC_AR6_WGI_Chapter_05.pdf#page=48

Here it is with the relevant bits highlighted:

https://sealevel.info/AR6_WG1_Table_5.1.png

Or, more concisely:

https://sealevel.info/AR6_WG1_Table_5.1_annot1_partial_carbon_flux_comparison_760x398.png

(Note: 1 PgC = 0.46962 ppmv = 3.66419 Gt CO2.)
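The unit note above can be turned into a small conversion helper. This is only a sketch that uses the two factors exactly as quoted in the note; the function names are made up for illustration, and the 11 PgC/year example value is the emissions figure quoted further down in this comment.

```python
# Conversion factors exactly as quoted in the note above.
PPMV_PER_PGC = 0.46962    # ppmv of atmospheric CO2 per PgC
GTCO2_PER_PGC = 3.66419   # Gt of CO2 per PgC (ratio of molar masses of CO2 and C)


def pgc_to_ppmv(pgc):
    """Atmospheric CO2 change (ppmv) if this much carbon stayed in the air."""
    return pgc * PPMV_PER_PGC


def ppmv_to_pgc(ppmv):
    """Inverse of pgc_to_ppmv."""
    return ppmv / PPMV_PER_PGC


def pgc_to_gtco2(pgc):
    """Mass of CO2 (Gt) containing this much carbon (PgC)."""
    return pgc * GTCO2_PER_PGC


example_pgc = 11.0  # PgC/year, the emissions figure quoted later in the comment
print(f"{example_pgc} PgC = {pgc_to_gtco2(example_pgc):.2f} Gt CO2")
print(f"{example_pgc} PgC = {pgc_to_ppmv(example_pgc):.2f} ppmv equivalent")
```

The ppmv figure is the concentration change that would result only if all of that carbon remained in the atmosphere.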

As you can see, as atmospheric CO2 levels have risen, the natural CO2 removal rate has sharply accelerated. (That's a strong negative/stabilizing climate feedback.)

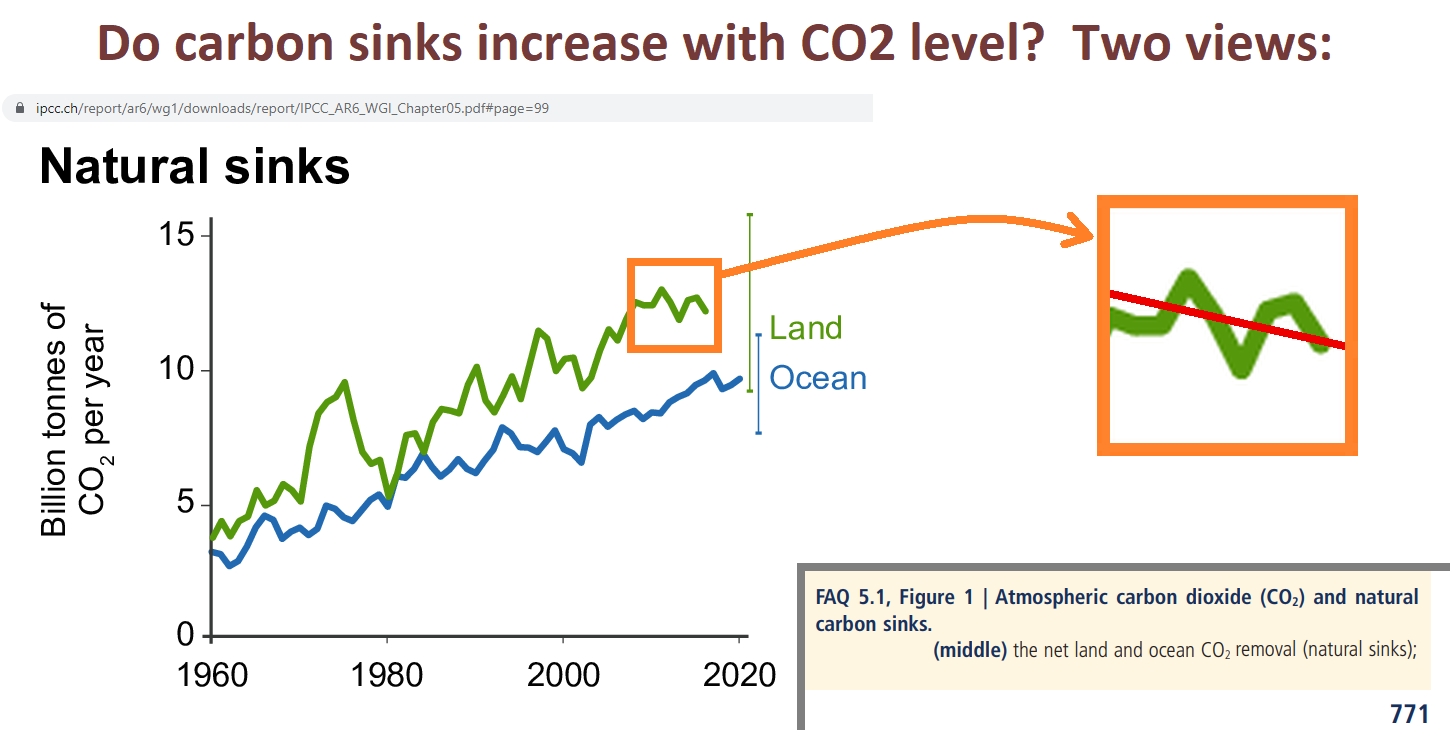

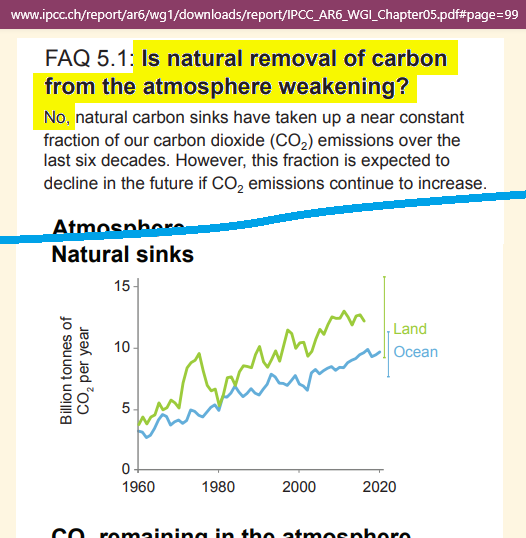

AR6 FAQ 5.1 also shows how both terrestrial and marine carbon sinks have accelerated, here:

https://www.ipcc.ch/report/ar6/wg1/downloads/report/IPCC_AR6_WGI_Chapter05.pdf#page=99

Here's the key graph; I added the orange box, to highlight the (small) portion of the graph which supports your contention that, "greening is now turning into 'browning.' ... fertilization [has now been] overwhelmed by other effects... In other words, the greening has now stopped."

https://sealevel.info/AR6_FAQ_5p1_Fig_1b_final2.png

Here's the caption, explicitly saying that natural removal of carbon from the atmosphere is NOT weakening:

The authors did PREDICT a "decline" in the FUTURE, "if" emissions "continue to increase." But it hasn't happened yet.

What's more, the "decline" which they predicted was NOT for the rate of natural CO2 removals by greening and marine sinks, anyhow. Rather, if you read it carefully, you'll see that that hypothetical decline was predicted for the ratio of natural removals to emissions.

What's more, their prediction is conditional, depending on what happens with future emissions ("if CO2 emissions continue to increase").

Well, predictions are cheap. My prediction is that natural removals of CO2 from the atmosphere will continue to accelerate, for as long as CO2 levels rise.

The "fraction" which they predict might decline, someday, doesn't represent anything physical, anyhow. (It is one minus the equally unphysical "airborne fraction.") Our emission rate is currently about twice the natural removal rate, so if emissions were halved, the removal "fraction" would be 100%, and the atmospheric CO2 level would plateau. If emissions were cut by more than half then the removal "fraction" would be more than 100%, and the CO2 level would be falling.
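The arithmetic in the paragraph above can be written out as a minimal sketch. It takes "about twice" literally (removals set to half of the 11 PgC/year emissions figure quoted elsewhere in the comment) and holds removals fixed while emissions change, which is the commenter's premise rather than an established result; the function names are illustrative only.

```python
def removal_fraction(emissions, removals):
    """Natural removals expressed as a fraction of emissions."""
    return removals / emissions


def net_atmospheric_change(emissions, removals):
    """Net carbon added to the atmosphere per year (negative means falling)."""
    return emissions - removals


emissions_now = 11.0                 # PgC/year, figure quoted in the comment
removals_now = emissions_now / 2.0   # assumption: "about twice" taken literally

for label, scale in [("current", 1.0), ("halved", 0.5), ("cut by 60%", 0.4)]:
    e = emissions_now * scale
    # Assumption: removals stay at today's rate while emissions change.
    frac = removal_fraction(e, removals_now)
    net = net_atmospheric_change(e, removals_now)
    print(f"{label:>10}: fraction = {frac:.0%}, net change = {net:+.2f} PgC/year")
```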

I wrote elsewhere, "This recent study quantifies the effect for several major crops. Their results are toward the high end, but their qualitative conclusion is consistent with many, many other studies. They reported, "We consistently find a large CO2 fertilization effect: a 1 ppm increase in CO2 equates to a 0.4%, 0.6%, 1% yield increase for corn, soybeans, and wheat, respectively.""

If you recall that mankind has raised the average atmospheric CO2 level by 140 ppmv, you'll recognize that those crop yield improvements are enormous!

Rob replied, "If you actually read more than just the abstract of that study you find this on page 3: 'Complicating matters further, a decline in the global carbon fertilization effect over time has been documented, likely attributable to changes in nutrient and water availability (Wang et al. 2020).'"

Rob, I already addressed Wang et al (2020), but you might not have seen it, because the mods deemed it off-topic and deleted it. Here's what I wrote:

Rob, it's possible that your confusion on the greening/browning point was due to a widely publicized paper, with an unfortunately misleading title:

Wang et al (2020), "Recent global decline of CO2 fertilization effects on vegetation photosynthesis." Science, 11 Dec 2020, Vol 370, Issue 6522, pp. 1295-1300, doi:10.1126/science.abb7772

Many people were misled by it. You can be forgiven for thinking, based on that title, that greening due to CO2 fertilization had peaked, and is now declining.

But that's not what it meant. What it actually meant was that the rate at which plants remove CO2 from the atmosphere has continued to accelerate, but that its recent acceleration was less than expected. (You can't glean that fact from the abstract; would you like me to email you a copy of the paper?)

What's more, if you read the "Comment on" papers responding to Wang, you'll learn that even that conclusion was dubious:

Sang et al (2021), "Comment on 'Recent global decline of CO2 fertilization effects on vegetation photosynthesis'." Science 373, eabg4420. doi:10.1126/science.abg4420

Frankenberg et al (2021), "Comment on 'Recent global decline of CO2 fertilization effects on vegetation photosynthesis'." Science 373, eabg2947. doi:10.1126/science.abg2947

Agronomists have studied every important crop, and they all benefit from elevated CO2, and experiments show that the benefits continue to increase as CO2 levels rise to far above what we could ever hope to reach outdoors. Perhaps surprisingly, even the most important C4 crops, corn (maize) and sugarcane, benefit dramatically from additional CO2. C3 plants (including most crops, and all carbon-sequestering trees) benefit even more.

Rob also quoted the study saying, "While CO2 enrichment experiments have generated important insights into the physiological channels of the fertilization effect and its environmental interactions, they are limited in the extent to which they reflect real-world growing conditions in commercial farms across a large geographic scale."

That's a reference to the well-known fact that Free-Air CO2 Enrichment (FACE) studies are less accurate than greenhouse and OTC (open-top chamber) studies, because in FACE studies wind fluctuations unavoidably cause unnaturally rapid variations in CO2 levels. So FACE studies consistently underestimate the benefits of elevated CO2. Here's a paper about that:

Bunce, J.A. (2012). Responses of cotton and wheat photosynthesis and growth to cyclic variation in carbon dioxide concentration. Photosynthetica 50, 395–400. doi:10.1007/s11099-012-0041-7

The issue is also explained by Prof. George Hendrey, here:

"Plant responses to CO2 enrichment: Much of what is known about global ecosystem responses to future increases in atmospheric CO2 has been gained through Free-Air CO2 Enrichment (FACE) experiments of my design. All FACE experiments exhibit rapid variations in CO2 concentrations on the order of seconds to minutes. I have shown that long-term photosynthesis can be reduced as a consequence of this variability. Because of this, all FACE experiments tend to underestimate ecosystem net primary production (NPP) associated with a presumed increased concentration of CO2."

Rob wrote, "It does seem that you're claiming CO2 uptake falls with increasing temperature."

That is correct for uptake by water. Or, rather, it would be correct, were it not for the fact that the small reduction in CO2 uptake due to the temperature dependence of Henry's Law is dwarfed by the large increase in CO2 uptake due to the increase in pCO2.

Rob wrote, "But it's unclear to me how you think this plays into the conclusion that CO2 levels would 'quickly normalize' over the course of 35 years" and also, "You also claimed CO2 concentrations would quickly come down (normalize) once we stop emitting it. This is also not correct unless you're using 'normalize' to mean 'stabilize at a new higher level'."

Perhaps you've confused me with someone else. I said nothing about CO2 levels "normalizing."

I did point out that the effective half-life for additional CO2 which we add to the atmosphere is only about 35 years. I wrote:

The commonly heard claim that "the change in CO2 concentration will persist for centuries and millennia to come" is based on the "long tail" of a hypothetical CO2 concentration decay curve, for a scenario in which anthropogenic CO2 emissions go to zero, CO2 level drops toward 300 ppmv, and carbon begins slowly migrating back out of the deep oceans and terrestrial biosphere into the atmosphere. It's true in the sense that if CO2 emissions were to cease, it would be millennia before the CO2 level would drop below 300 ppmv. But the first half-life for the modeled CO2 level decay curve is only about 35 years, corresponding to an e-folding "adjustment time" of about fifty years. That's the "effective atmospheric lifetime" of our current CO2 emissions.
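The link between the 35-year half-life and the roughly fifty-year e-folding time quoted above is just a factor of ln 2. A minimal sketch, assuming a single exponential decay (which, as the paragraph itself notes, describes only the first part of the curve and not the long tail):

```python
import math


def efolding_time(half_life):
    """E-folding time of an exponential decay with the given half-life."""
    return half_life / math.log(2)


def remaining_fraction(elapsed, half_life):
    """Fraction of the initial excess left after `elapsed` time units."""
    return 0.5 ** (elapsed / half_life)


half_life_years = 35.0  # value quoted in the paragraph above
tau = efolding_time(half_life_years)
print(f"e-folding time: {tau:.1f} years")
print(f"fraction left after one e-folding time: {remaining_fraction(tau, half_life_years):.3f}")
```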

Rob wrote, "Dave... The fundamental fact that you disputed is that oceans take up about half of our emissions."

That reflects two points of confusion, Rob.

In the first place, our emissions are currently around 11 PgC/year (per the GCP). The oceans remove CO2 from the atmosphere at a current rate of a little over 2.5 PgC/year. That's only about 1/4 of the rate of our emissions, not half.

More fundamentally, the oceans are not removing some fixed fraction of our emissions. None of the natural CO2 removal processes do. All of them remove CO2 from the bulk atmosphere, at rates which largely depend on the atmospheric CO2 concentration, not on our emission rate. If we halved our CO2 emission rate, natural CO2 removals would continue at their current rate.

Because human CO2 emissions are currently faster than natural CO2 removals, we've increased the atmospheric CO2 level by about 50% (140 ppmv), but we've increased the amount of carbon in the oceans by less than 0.5%, as you can see in AR5 WG1 Fig. 6-1.

Sorry, this got kind of long. I hope I addressed all your concerns.

- The Dynamics of The Green Plate Effect

Bob Loblaw at 10:58 AM on 30 June, 2023

The bucket analogy does relate to the greenhouse effect in terms of reducing the rate of loss, which requires an adjustment of the bucket level. The reason the bucket reaches a new equilibrium is that as the water level rises, the pressure increases (a linear function of the height of water above the hole), and that increased pressure succeeds in forcing enough water through the smaller hole. We need to remember that there is a pressure term that drives the flow.

The increased pressure in the bucket is an analogy to the increased surface temperature creating a larger temperature difference between the surface and the ubiquitous 255K emitting IR to space in the greenhouse effect.
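The bucket analogy can be sketched numerically. The comment only says that pressure rises linearly with water height; the sketch below additionally assumes Torricelli's law for flow through the hole (outflow speed proportional to the square root of the height), and the inflow, hole sizes and discharge coefficient are hypothetical values picked for illustration.

```python
import math

G = 9.81  # gravitational acceleration, m/s^2


def outflow(height, hole_area, discharge_coeff=0.6):
    """Volume flow out of the hole (m^3/s), assuming Torricelli's law."""
    return discharge_coeff * hole_area * math.sqrt(2.0 * G * height)


def equilibrium_level(inflow, hole_area, discharge_coeff=0.6):
    """Water height (m) at which outflow through the hole equals inflow."""
    speed = inflow / (discharge_coeff * hole_area)
    return speed ** 2 / (2.0 * G)


inflow = 1.0e-4      # m^3/s, hypothetical tap
big_hole = 1.0e-4    # m^2, hypothetical
small_hole = 0.5e-4  # m^2, the "reduced rate of loss" case

for name, area in [("original hole", big_hole), ("smaller hole", small_hole)]:
    h = equilibrium_level(inflow, area)
    print(f"{name}: equilibrium level = {h:.3f} m, outflow there = {outflow(h, area):.2e} m^3/s")
```

In both cases the outflow at equilibrium equals the inflow; only the water level needed to drive it differs.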

On the other hand, the Green Plate effect is intended as a specific counterargument to the "cold object can't cause a warm object to heat up" myth. It does not need any reference to the greenhouse effect at all to demonstrate that this "cold object/warm object" myth about the 2nd law is wrong.

If the "cold object/warm object violates 2nd law" argument were correct, then the argument would have to show an error in the Green Plate scenario. If any hard-core denier wants to continue with that argument here, they are going to have to do it without any reference to the greenhouse effect. If they can't "disprove" the Green Plate effect, then there is no way that they will be able to apply the [lack of] logic to the more complex greenhouse effect.
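For readers who want numbers, here is a minimal sketch of a two-plate energy balance of the kind the Green Plate scenario describes. The setup is an assumption, not taken from this comment: two large parallel blackbody plates in vacuum, with the blue plate absorbing a fixed 400 W/m2 and each plate radiating from both faces.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m-2 K-4


def temperature_from_flux(flux):
    """Blackbody temperature (K) that emits `flux` (W/m2) from one face."""
    return (flux / SIGMA) ** 0.25


absorbed = 400.0  # W/m2 absorbed by the blue plate (assumed value)

# Blue plate alone: absorbed = 2 * E_blue (it radiates from both faces).
blue_alone = temperature_from_flux(absorbed / 2.0)

# With a green plate behind it, both plates in steady state:
#   blue : absorbed + E_green = 2 * E_blue
#   green: E_blue = 2 * E_green
# Solving gives E_blue = 2/3 * absorbed and E_green = 1/3 * absorbed.
blue_with_green = temperature_from_flux(2.0 * absorbed / 3.0)
green = temperature_from_flux(absorbed / 3.0)

print(f"blue plate alone:      {blue_alone:.1f} K")
print(f"blue plate with green: {blue_with_green:.1f} K")
print(f"green plate:           {green:.1f} K")
```

The green plate is colder than the blue plate in this sketch, yet the blue plate ends up warmer with it than without it, and the total leaving the pair still equals the 400 W/m2 absorbed.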

- 2023 SkS Weekly Climate Change & Global Warming News Roundup #19

nigelj at 06:21 AM on 15 May, 2023

Regarding: "Climate scientists first laughed at a ‘bizarre’ campaign against the BoM – then came the harassment by Graham Readfearn, Guardian, May 7th 2023" (where the Australian Bureau of Meteorology was, in essence, falsely accused of introducing a warm bias into the temperature records).

New Zealand had a similar campaign against climate scientists as follows:

Case against NIWA (Summary)

On 5 July 2010, The New Zealand Climate Science Education Trust (NZCSET), associated with the New Zealand Climate Science Coalition, filed a legal case against the National Institute of Water and Atmospheric Research (NIWA) claiming that the organisation had used a methodology to adjust historic temperature data that was not in line with received scientific opinion.[53] The Coalition lodged papers with the High Court asking the court to rule that the official temperatures record of NIWA were invalid. The Coalition later claimed that the "1degC warming during the 20th century was based on adjustments taken by Niwa from a 1981 student thesis by then student Jim Salinger...[and]...the Salinger thesis was subjective and untested and meteorologists more senior to Salinger did not consider the temperature data should be adjusted."[54] The case was dismissed, with the judgement concluding that the "plaintiff does not succeed on any of its challenges to the three decisions of NIWA in the issue. The application for judicial review is dismissed and judgment entered for the defendant."[55] On 11 November 2013, the Court of Appeal of New Zealand dismissed an appeal by the Trust against the award of costs to NIWA.[56][57][58] NIWA Chief Executive John Morgan said the organisation was pleased with the outcome, stating that there had been no evidence presented that might call the integrity of NIWA scientists into question.[59]

There was concern in 2014 that the New Zealand Climate Science Education Trust had not paid the amount of $89,000 to NIWA as ordered by the High Court, and this was a cost to be borne by the taxpayers of New Zealand. Trustee Bryan Leyland, when asked about its assets, said: "To my knowledge, there is no money. We spent a large amount of money on the court case, there were some expensive legal technicalities...[and that]...funding had come from a number of sources, which are confidential".[60] Shortly after that, the New Zealand Climate Science Education Trust (NZCSET) was put into formal liquidation.[61] On 23 January 2014, Salinger stated that this "marked the end of a four-year epic saga of secretly-funded climate denial, harassment of scientists and tying-up of valuable government resources in New Zealand."[62] He also explained the background to the issue around the Seven-station New Zealand temperature series (7SS)[63] and how he felt this had been misrepresented by the Trust.[62]

https://en.wikipedia.org/wiki/Jim_Salinger

(My comments) I recall that during the case NIWA's methodology was also peer reviewed by an independent climate organisation in Australia, which endorsed the methods used. Another issue I recall is that the judge dismissed the climate denialists' expert witnesses because they were not qualified to give evidence on climate science. Details in this article:

hot-topic.co.nz/cranks-lose-court-case-against-nz-temperature-record-niwa-awarded-costs/

More details and link to the full ruling.

www.sciencemediacentre.co.nz/2012/09/07/niwa-climate-record-court-decision-experts-respond/

www.nzherald.co.nz/nz/sceptics-lose-fight-against-niwa-temperature-data/WJJJVHPQLYM5XP6QO3KWST463E/

- CO2 effect is saturated

Bob Loblaw at 00:58 AM on 21 November, 2022

Charlie_Brown:

One minor clarification. You say "Radiant energy intensity as a function of wavelength depends only upon the composition and temperature of the emitting source."

Yes, this is correct for radiant energy emitted locally, but when it comes to measuring radiant energy at a point, you get both the locally-emitted energy plus any energy at that wavelength that was emitted elsewhere and has been transmitted through the atmosphere to that point - i.e., it has not been absorbed by the intervening atmosphere. At some wavelengths, where atmospheric absorption is large, it will be mostly locally-emitted. At wavelengths where atmospheric absorption is small, it will be mostly transmitted from elsewhere.

The complication that you refer to in terms of what is seen at any particular height in the atmosphere is that it includes both components (local emission plus transmission). From measurements of radiant energy alone you cannot know how much is from each. For that, you need models that incorporate temperature, all gases and their emission spectra, etc.
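The two components can be illustrated with a single isothermal layer. This is a rough sketch, not a radiative transfer model: it assumes Kirchhoff's law (emissivity equals one minus transmittance), a 220 K layer viewing a 288 K blackbody source, and two hypothetical transmittance values standing in for a strongly and a weakly absorbing wavelength.

```python
import math

H = 6.62607015e-34   # Planck constant, J s
C = 2.99792458e8     # speed of light, m/s
K_B = 1.380649e-23   # Boltzmann constant, J/K


def planck_radiance(wavelength_m, temp_k):
    """Blackbody spectral radiance, W m-2 sr-1 m-1."""
    x = H * C / (wavelength_m * K_B * temp_k)
    return 2.0 * H * C ** 2 / wavelength_m ** 5 / math.expm1(x)


def radiance_leaving_layer(wavelength_m, layer_temp_k, transmittance, incoming):
    """Return (locally emitted, transmitted) radiance for one isothermal layer."""
    local = (1.0 - transmittance) * planck_radiance(wavelength_m, layer_temp_k)
    transmitted = transmittance * incoming
    return local, transmitted


def local_share(wavelength_m, transmittance, layer_temp_k=220.0, source_temp_k=288.0):
    """Fraction of the radiance leaving the layer that was emitted locally."""
    incoming = planck_radiance(wavelength_m, source_temp_k)
    local, transmitted = radiance_leaving_layer(
        wavelength_m, layer_temp_k, transmittance, incoming
    )
    return local / (local + transmitted)


# Hypothetical transmittances: nearly opaque at 15 um, nearly clear at 10 um.
print(f"15 um, transmittance 0.05: local share = {local_share(15e-6, 0.05):.2f}")
print(f"10 um, transmittance 0.95: local share = {local_share(10e-6, 0.95):.2f}")
```

A detector sees only the sum of the two terms, which is why the split cannot be recovered from the radiance measurement alone.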

And, as you state, models such as MODTRAN will do that for you - but they are not energy balance models. You need to specify the temperature profile (and cloud profile, and gases) and then you can get the profile of radiative energy (upward and downward fluxes, absorption and emission rates).

If that radiative energy transfer does not balance (local absorption and emission are not equal), then locally you will have either heating or cooling. At that point, you can iteratively warm or cool that layer (and all other layers), recalculate the temperature profile, recalculate the radiative transfer, etc until you find a temperature profile that is at equilibrium.
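The warm-or-cool-until-balanced loop described above can be sketched with a toy model. This is not MODTRAN and not the Manabe model: it assumes a stack of layers that are fully black in the infrared and transparent to sunlight, 240 W/m2 of sunlight absorbed at the surface, and a simple relaxation step whose size is an arbitrary choice.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m-2 K-4


def net_heating(temps, absorbed_solar):
    """Net radiative heating (W/m2) of each level; temps[0] is the surface."""
    emit = [SIGMA * t ** 4 for t in temps]
    last = len(temps) - 1
    net = []
    for i, e in enumerate(emit):
        from_below = emit[i - 1] if i > 0 else absorbed_solar
        from_above = emit[i + 1] if i < last else 0.0
        emitted = e if i == 0 else 2.0 * e  # layers radiate up and down
        net.append(from_below + from_above - emitted)
    return net


def relax_to_equilibrium(n_layers, absorbed_solar=240.0, step=0.02,
                         tol=1e-6, max_iter=200_000):
    """Nudge every level toward balance until all net heating is below tol."""
    temps = [250.0] * (n_layers + 1)  # arbitrary isothermal first guess
    for _ in range(max_iter):
        net = net_heating(temps, absorbed_solar)
        if max(abs(q) for q in net) < tol:
            break
        temps = [t + step * q for t, q in zip(temps, net)]
    return temps


profile = relax_to_equilibrium(n_layers=2)
for level, temp in enumerate(profile):
    name = "surface" if level == 0 else f"layer {level}"
    print(f"{name}: {temp:.1f} K")
```

In this toy setup the loop settles on the analytic answer, where level i emits (n_layers + 1 - i) times the absorbed sunlight from each face. The result is a very warm surface and a steep temperature drop with height, with no convection included.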

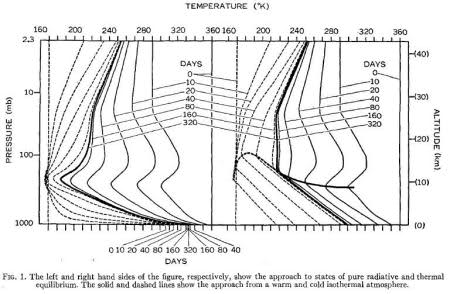

And people have done this. Classic early references are from roughly 60 years ago (and have been linked to earlier in this long comments thread).

If you only consider radiative transfer, the atmosphere would stabilize at a much steeper temperature gradient than exists. If you adjust for this (Manabe et al's "convective adjustment") you get a very good fit to actual global mean temperature. Figure 1 from Manabe and Strickler shows these two scenarios clearly, as well as the iterative process of radiative calculations, determining heating/cooling, radiative calculations, etc:

An interesting paper appeared in BAMS earlier this month, looking at the historical importance of this early work by Manabe.

Certain stubbornly-ignorant self-proclaimed experts that have repeatedly invaded this thread seem to lose sight of the fact that the atmosphere also emits radiation in the wavelengths that are strongly-absorbed. It's easy to deny that the Greenhouse Effect exists if you deny that CO2 is a strong emitter as well as a strong absorber.

- 2022 SkS Weekly Climate Change & Global Warming News Roundup #45

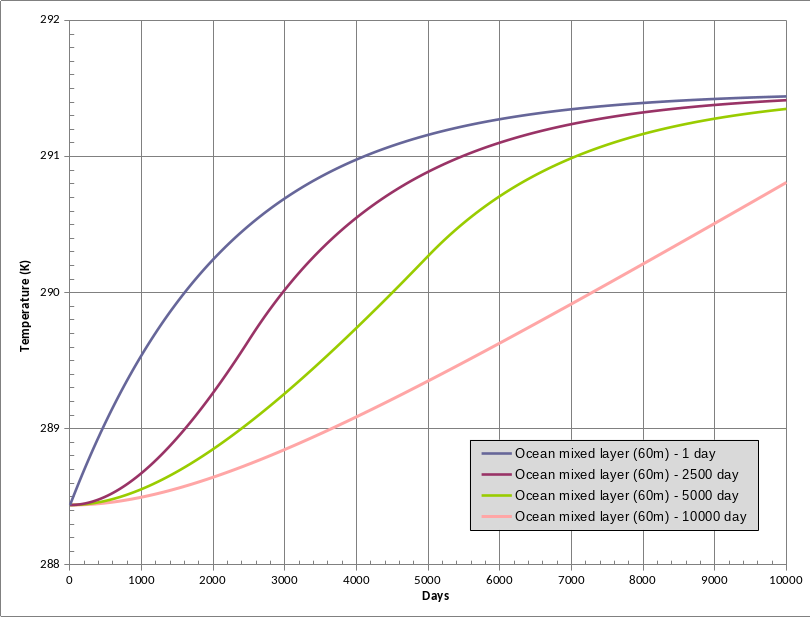

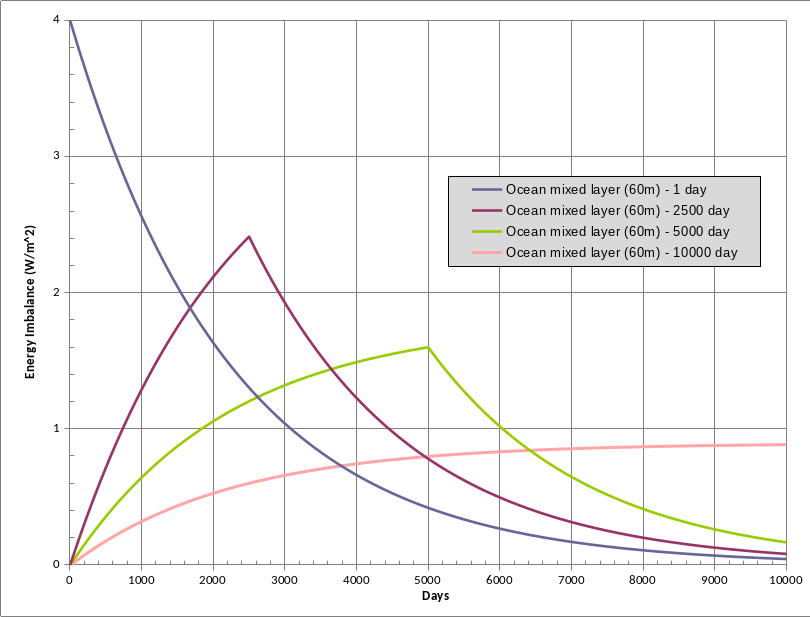

Bob Loblaw at 12:54 PM on 15 November, 2022

Now, to expand a little on that zero-d model from comment # 21. I mentioned that a gradual increase in radiative forcing will be different from an instantaneous step change of 4 W/m2. We can look at that using the same model. We will do three new simulations, to add to the Ocean Mixed Layer one from comment #21, making four simulations:

- The original instantaneous 4 W/m2 step change.

- A scenario where we gradually increase the radiative forcing over 2500 days.

- A scenario where we increase it over 5000 days

- A scenario where we increase it over 10000 days

In each case, we keep the same heat capacity (ocean 60m depth - middle line from comment #21). We also keep the same final radiative forcing: 4 W/m2 at the end of the "ramping up" period (1, 2500, 5000, or 10000 days).

Here is the temperature evolution:

...and here is the radiative imbalance:

Note that the temperature evolution over the 10,000 day period is quite different if we spread the forcing over a longer period. In the fourth line, when full radiative forcing is not reached until day 10,000, we still have a ways to go before reaching equilibrium. The system has responded fully to the forcing that was added 30 years ago, but not to the recent forcing.

You will note that the imbalance graph only reaches 4 W/m2 for the 1-day (instantaneous) step change. That is the difference between the radiative forcing and the radiative imbalance - they are not the same thing.

- The forcing is an input, and is always expressed relative to the pre-change conditions (day 0).

- The imbalance is the net difference between the forcing and any adjustments in the outgoing radiation related to how the system has heated up. Since we do not reach 4W/m2 forcing until day 2500 (or 5000, or 10000), the system has had a chance to adjust to the forcing that happened before that day.

In the 5000-day ramp-up, we reach 4W/m2 of forcing on day 5000, but the system has already achieved about 2.4W/m2 of adjustment, leaving only 1.6 W/m2 imbalance.

Again, the temperature evolution over time is different. You need to consider this when interpreting the temperature evolution with respect to time lags, forcing etc.
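The four scenarios can be approximated with a very small zero-dimensional sketch. The model from comment #21 is not reproduced here, so the details are assumptions: a linear feedback term, round values for water density and specific heat, and a feedback strength of 1.3 W/m2/K chosen so that the 5000-day ramp lands near the 1.6 W/m2 imbalance quoted above.

```python
SECONDS_PER_DAY = 86400.0
# 60 m of ocean; density 1000 kg/m3 and 4184 J/kg/K are assumed round values.
HEAT_CAPACITY = 60.0 * 1000.0 * 4184.0  # J m-2 K-1
FEEDBACK = 1.3                          # W m-2 K-1, assumed (see note above)


def forcing(day, ramp_days, final_forcing=4.0):
    """Forcing (W/m2) on a given day for a linear ramp ending at ramp_days."""
    return final_forcing * min(day / ramp_days, 1.0)


def simulate(ramp_days, n_days=10000, final_forcing=4.0):
    """Daily temperature anomaly (K) and radiative imbalance (W/m2)."""
    temp = 0.0
    temps, imbalances = [], []
    for day in range(1, n_days + 1):
        f = forcing(day, ramp_days, final_forcing)
        # Forward-Euler step with a one-day time step.
        temp += (f - FEEDBACK * temp) * SECONDS_PER_DAY / HEAT_CAPACITY
        temps.append(temp)
        imbalances.append(f - FEEDBACK * temp)
    return temps, imbalances


for ramp in (1, 2500, 5000, 10000):
    temps, imbalances = simulate(ramp)
    print(f"ramp {ramp:>5} days: imbalance at end of ramp = {imbalances[ramp - 1]:.2f} W/m2, "
          f"warming at day 10000 = {temps[-1]:.2f} K")
```

Only the instantaneous case starts with an imbalance close to the full 4 W/m2; in the ramped cases the system has already warmed part of the way by the time the forcing reaches its final value.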

- No, a cherry-picked analysis doesn’t demonstrate that we’re not in a climate crisis

Bob Loblaw at 06:13 AM on 9 October, 2022

Eric:

From the first paragraph of the link to the COOP web page you provide (emphasis added):

COOP data usually consist of daily maximum and minimum temperatures, snowfall, snow depth, and 24-hour precipitation totals.

Next question:

How did your analysis determine 1-hour and 6-hour totals from that data?

Hint: the COOP network involves manual reading of data. Temperature from a max/min thermometer (once per day), and precipitation total from a rain gauge that sits and collects rainfall for 24 hours, and is emptied manually and the quantity measured (once per day).

Side note: this is the network that requires the time of day adjustment for temperature trends.

- 2nd law of thermodynamics contradicts greenhouse theory

grindupBaker at 03:06 AM on 15 July, 2022

The underlying heat-adjustment effect works like this:

---------

"GREENHOUSE EFFECT", TRYING TO WARM IF THE QUANTITY INCREASES

- The "greenhouse effect" in Earth's troposphere operates like this: Some of the "LWR" aka "infrared" radiation heading up gets absorbed into cloud above instead of going to space so that's the "heat trapping" effect of a cloud. The top portion of the cloud radiates up some of the LWR radiation that's manufactured inside the cloud but it's less amount than the LWR that was absorbed into the bottom of the cloud because the cloud top is colder than below the cloud and colder things radiate less than warmer things. That is PRECISELY the "greenhouse effect" in Earth's troposphere. It's the "greenhouse effect" of liquid "water" and solid "ice" in that example. You can see that "greenhouse effect" of liquid "water" and solid "ice" for all the various places on Earth from CERES satellite instrument at https://www.youtube.com/watch?v=kE1VBCt8GLc at 7:50. It's the pink one labelled "Longwave....26.2 w / m**2" so cloud globally has a "greenhouse effect" of 26.2 w / m**2.

- Solids in the troposphere have the exact same effect as the "cloud greenhouse effect" above for the exact same reason.

- Infrared-active gases in the troposphere (H2O gas, CO2, CH4, N2O, O3, CFCs) have the exact same effect as the "cloud greenhouse effect" above for the exact same reason. Non infrared-active gases in the troposphere (N2, O2, Ar) have no "greenhouse effect" because their molecule is too simple to get the vibrational kinetic energy by absorbing a photon of LWR radiation or by collision. The "greenhouse effect" really is that simple, and it's utterly 100% certain.

---------

SUNSHINE REFLECTION EFFECT, TRYING TO COOL IF THE QUANTITY INCREASES

- Clouds (liquid "water" and solid "ice") absorb & reflect some sunlight and the "reflect" part has an attempt-to-cool effect, which has nothing whatsoever to do with the "greenhouse effect". You can see that "sunlight reflection attempt-to-cool effect" of liquid "water" and solid "ice" for all the various places on Earth from CERES satellite instrument at https://www.youtube.com/watch?v=kE1VBCt8GLc at 7:50. It's the blue one labelled "Shortwave....-47.3 w / m**2" so cloud globally has a sunshine reflection effect of 47.3 w / m**2.

- Solids in the troposphere absorb & reflect some sunlight and the "reflect" part has an attempt-to-cool effect, which has nothing whatsoever to do with the "greenhouse effect".

- Infrared-active gases in the troposphere (H2O gas, CO2, CH4, N2O, O3, CFCs) do not absorb or reflect any sunlight (minor note: except a tiny portion in the high-frequency ultraviolet where O2 & O3 has absorbed most of it already in the stratosphere above the troposphere).

---------

NET EFFECT OF THE 2 ENTIRELY-DIFFERENT EFFECTS DESCRIBED ABOVE

- The net result of the 2 entirely-different "cloud" effects is that clouds have a net cooling effect of 21.1 w / m**2 as seen in the blue-hues pictorial at left on screen at either of my 2 GooglesTubes links above.

- The net result for solids in the troposphere is a net cooling effect because the change in this effect by humans is the "global dimming" atmospheric aerosols air pollution effect and that's a cooling effect (separate from its cloud change effect).

- The net result for infrared-active gases in the troposphere (H2O gas, CO2, CH4, N2O, O3, CFCs) is a warming effect because their 2nd effect above is negligible, essentially zero.
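The net cloud figure follows directly from the two CERES values quoted above. A trivial check, using only those two numbers and the sign convention that positive means warming:

```python
# Values as quoted in the comment (W/m2); positive = warming, negative = cooling.
cloud_longwave_effect = 26.2     # "greenhouse effect" of cloud
cloud_shortwave_effect = -47.3   # sunlight reflection by cloud

net_cloud_effect = cloud_longwave_effect + cloud_shortwave_effect
print(f"net cloud effect: {net_cloud_effect:.1f} W/m2")
```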

---------

Cartoons or text that describe a "greenhouse effect" in which photons from the surface are absorbed by infrared-active gas molecules and then are re-emitted with 50% of it going down and warming the surface are incorrect because they do not include a tropospheric temperature lapse rate which is an absolute requirement. Explanations of the "greenhouse effect" which include phrases like "the radiation from the surface does not directly heat the atmosphere" are incorrect because there are simple laboratory experiments which prove that infrared radiation does indeed heat the CO2 infrared-active gas and its surroundings (which means, of course, that molecular vibrational kinetic energy is converted on collision to molecular translational kinetic energy before it happened to "thermally relax" and emit a photon and thus no photon was "re-emitted" in that case).

++++++++++

Cloudy winter nights don't cool as much as clear-sky winter nights. It is PRECISELY the "greenhouse effect" in Earth's troposphere which causes that.

- It's albedo

Bob Loblaw at 12:52 PM on 9 September, 2021

Coolmaster:

Little of your most recent comment has passed moderation. In what little remains, you double-down on your claim of a strong cooling effect for clouds. Let's examine some actual science.

Note that in comment 70, although I said that the diagrams you provided in comment 69 were "a useful expansion", I also noted that "summary diagrams are summary diagrams - not detailed models."

First, you claimed in #71 that 1% increase in evaporation will lead to a 1% increase in clouds, and you have repeatedly claimed that increasing cloud has a cooling effect. You also said "I look forward to your criticism and assessment", so let's see if you really mean that.

We will start with the consequences of an increase in evaporation, and we'll limit it to the land surface you have talked about (although it doesn't really make any difference to what I will present). What happens when we manipulate surface conditions to increase evaporation?

- Atmospheric water vapour will increase above that surface.

- The atmosphere will probably move that water vapour away from the surface, either vertically (convective mixing) or horizontally (advection due to wind).

- If conditions are suitable, that extra water vapour may rise to the point where it condenses to form cloud, but this is not always the case. If it does form cloud, the location may be local, but it is more likely to be a long way away.

- As a consequence of increasing evaporation, the location where the evaporation occurs will also see less thermal energy transfer to the atmosphere, so temperatures are also affected. As a result, we see changes in both temperature and humidity, and these changes will be carried downwind.

- Downwind, the changes in temperature and humidity will affect the energy fluxes in those other locations - possibly suppressing evaporation (because the overlying air is now cooler and more humid).

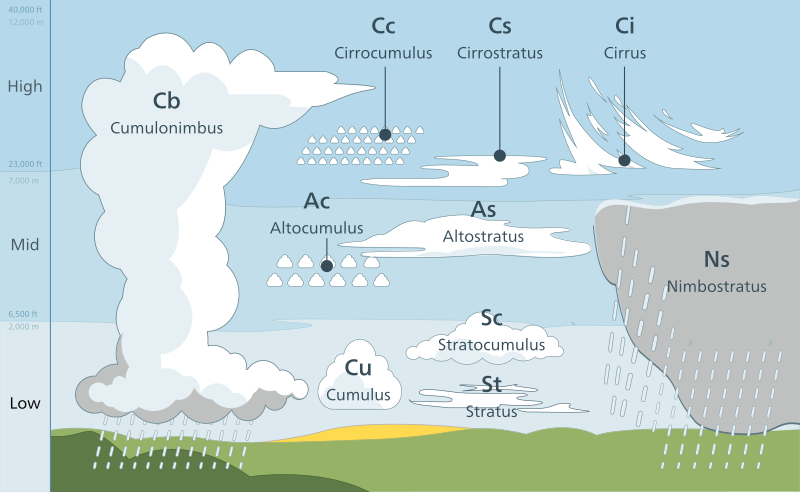

Now, if the additional water vapour forms cloud, we have to ask "what kind of cloud?". That depends on where and how the lifting of the air occurred which led to cooling and cloud formation. Cloud types vary a lot. Wikipedia has a nice discussion, and gives us this nice diagram:

So, will this "extra" humidity cause more cloud? Maybe. Maybe not. Maybe it will lead to a different cloud type. Maybe it will lead to a similar cloud type, but at a different altitude. All of this will affect how radiation fluxes will be affected.

Coolmaster's argument then depends on claims that cloud cover will increase, and that the diagrams he has provided show the radiative flux changes. Let us consider some of the possible radiative changes.

- A change in horizontal extent - but no change in any other cloud characteristics - will affect the ratios between clear sky and cloudy sky. This is easy to estimate.

- We may not have the same cloud type, though. Different cloud types have different radiative properties. High clouds tend to be thin, transparent, and let a lot of solar radiation through. They also may not behave as blackbodies for IR radiation.

- Low clouds are much less transparent. For IR radiation, two properties are important: cloud top temperature controls the IR emitted upward, while cloud base temperature controls the downward flux. Change the vertical temperature profile, or change the bottom or top heights of the clouds, and you change the IR radiation fluxes. This is not determined by cloud area.
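The first and third points in the list above can be put into rough numbers. The following is only a minimal sketch: the cloud-top temperatures and the clear-sky and cloudy-sky flux values are illustrative assumptions, not values from any of the diagrams discussed, and the cloud is treated as a blackbody (which, as noted above, may not hold for thin high cloud).

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4


def area_weighted_flux(cloud_fraction, clear_sky_flux, cloudy_sky_flux):
    """Mix clear-sky and cloudy-sky fluxes by horizontal cloud fraction.

    This only captures a change in horizontal extent; it assumes every
    other cloud property stays the same.
    """
    if not 0.0 <= cloud_fraction <= 1.0:
        raise ValueError("cloud_fraction must be between 0 and 1")
    return (1.0 - cloud_fraction) * clear_sky_flux + cloud_fraction * cloudy_sky_flux


def blackbody_emission(temperature_k):
    """IR flux (W m^-2) emitted by a blackbody at the given temperature."""
    return SIGMA * temperature_k ** 4


# Hypothetical cloud-top temperatures: a warm low cloud top and a cold high one.
low_cloud_top_flux = blackbody_emission(280.0)
high_cloud_top_flux = blackbody_emission(220.0)

# Hypothetical upward IR fluxes (W m^-2) for clear and cloudy columns.
mixed_flux = area_weighted_flux(0.5, clear_sky_flux=200.0, cloudy_sky_flux=100.0)
```

Even in this toy version, the same cloud area gives a very different upward IR flux depending on the cloud-top temperature, which is why cloud area alone does not determine the radiative effect.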

None of these details are covered in the diagrams or discussion presented by coolmaster. I will repeat what I said before: summary diagrams are summary diagrams - not detailed models.

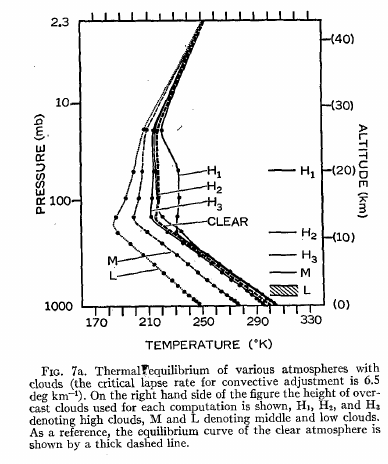

Can we find models that do include these sorts of effects? Yes. I will dig back into two early climate change papers that were key developments in their day. They covered basics that more recent papers do not repeat, so they provide useful diagrams.

The first is Manabe and Strickler, 1964, JAS 21(4), Thermal Equilibrium of the Atmosphere with a Convective Adjustment.

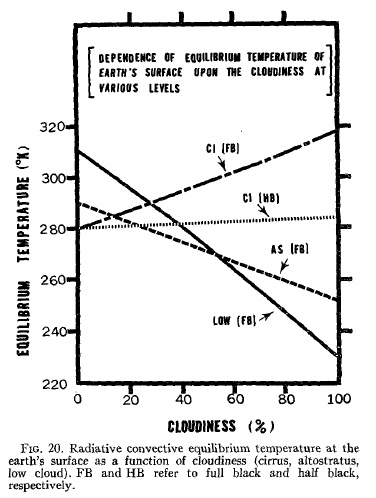

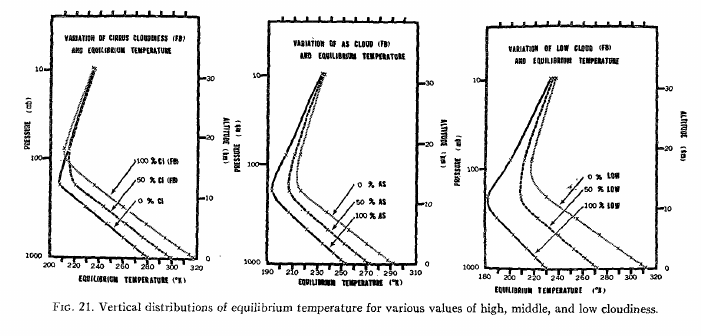

Their figure 7a shows model results that cover different cloud assumptions:

Note that cloud type and height both have significant effects on the modelled radiative equilibrium. (Follow the link to the paper if you need more context.)

The 1964 paper was followed by another in 1967: Manabe and Wetherald, 1967, JAS 24(3) Thermal Equilibrium of the Atmosphere with a Given Distribution of Relative Humidity

They give two figures of interest: 20 and 21:

Again, follow the link to the paper for context (and perhaps larger views of the graphs).

These two figures show responses to changes in cloud amounts, for several different cloud types in their model.

- In figure 20, low and middle cloud have negative slopes (temperature as a function of cloud amount), while high cloud has a positive slope. Increasing high cloud has a warming effect.

- In figure 21, we see three diagrams of equilibrium temperature, for the same three cloud types. Each diagram shows the results for three different cloud amounts (0, 50, and 100%). The diagram on the left is for high cloud, and we see warmer tropospheric temperatures for higher cloud amounts. This is the opposite for middle and low cloud, where increasing cloud amount causes cooling.

So, we can see that climate science has known for over 60 years that different cloud types and heights have significant differences in their role in radiation transfer. The papers I have cited used a one-dimensional radiative-convective model, which is simple by modern standards. Current three-dimensional general circulation models incorporate even more vertical cloud processes, and add the horizontal dimensions that include the horizontal transport of water vapour I mentioned at the start of this comment. They generate cloud internally, based on physics, rather than assuming specific distributions - but the key message is the same:

Cloud amount, cloud type, cloud height, horizontal distribution - all are important in properly assessing the radiative effect of clouds.

Coolmaster's diagrams are nice pictures that help illustrate a few aspects of the complexity of clouds and atmospheric radiation transfer, but they are totally unsuited to the sort of predictive analysis he is trying to perform.

- The New Climate War by Michael E. Mann - our reviews

Nick Palmer at 09:33 AM on 23 June, 2021

Just in case you lot are still resisting the idea that the politics relating to climate science have become extremely polarised - in my view to the point where ideologues of both the left and right think it justified to exaggerate/minimise the scientific truths/uncertainties to sway the democratically voting public one way or the other - here's a video blog by alt-right hero and part of the original Climategate team who publicised the emails, James Delingpole, basically saying that 'the left' have infiltrated and corrupted the science for the purpose of using political deception to seize power for themselves.

https://www.youtube.com/watch?v=866yHuh1RYM

Deconstruct or follow up Delingpole's rhetoric elsewhere and you will find a helluva lot of intelligent articulate people who believe that the public's environmental consciences are being exploited by closet socialist forces to deceive them, using 'fear porn', into voting for policies which they otherwise wouldn't consider voting for, in a dark strategy to bring in some form of latter day Marxism. They insinuate this has got its tentacles into climate science which they assert has led to the reality of the science, as presented to the public, being twisted by them for political ends. It's absolutely not just Greenpeace, as I already said, who've 'gone red' to the point where it has 'noble cause' corrupted their presentations of environmental matters and, crucially, the narrow choice of solutions they favour - those which would enable and bring on that 'great reset' of civilisation that they want to see. It's much, much bigger than that.

I think we are seeing a resurgence and a recrystallisation of those who got convinced by Utopianist politics of the left and free market thinkers of the right taught at University - Marxist-Leninism, Ayn Rand, Adam Smith etc. Most of those students eventually 'grew up' and mellowed in time, leaving only a small cadre of incorrigible extremists but who are now, as the situation is becoming increasingly polarised politically, revisiting their former ideologies. In essence 'woking' up. I submit that the real battle we are seeing played out in the arena of climate matters is not between science and denialism of science - those are only the proxies used to manipulate the public. The true battle is between the increasingly polarised and increasingly extreme and deceitful proponents of the various far left and right ideologies and their re-energised followers.

It is now almost an article of faith, so accepted has it become, amongst many top climate scientists and commentators, that 'denialism' is really NOT motivated by stupidity or a greedy desire to keep on making as much money as possible but is rather a strong resistance to the solutions that they fear are just 'chess moves' to bring about the great Red 'reset' they think the 'opposition' are secretly motivated by.

Here's an excellent article by famous climate scientist Katharine Hayhoe identifying those who are 'solutions averse' as being a major factor in denialism. It touches on the 'watermelon' aspect. You can turn a blind eye to what I am saying if you want, but in that case you should also attack Hayhoe too - but don't expect many to applaud you...

https://theecologist.org/2019/may/20/moving-past-climate-denial

Also try this: https://www.thecut.com/2014/11/solution-aversion-can-explain-climate-skeptics.html

https://today.duke.edu/2014/11/solutionaversion

I think some people who fight climate science denialism still have the naive idea that just endlessly quoting the science to them, and Skepticalscience's F.L.I.C.C logical fallacies, will make denialists fall apart. I too used to think that if one would just keep hammering away, eventually they would give up. Anyone who tries this will find that it actually does not work well at all. Take on some of the smarter ones and you will rapidly find that you are, at least in the eyes of the watching/reading/listening public, who are the only audience it's worthwhile spending any time trying to correct, outgunned scientifically and rhetorically. That's why I don't these days much use the actual nitty-gritty science as a club with which to demolish them because the smarter ones will always have a superficially plausible, to the audience at least, comeback which looks convincing TO THE AUDIENCE. Arguing the science accurately can often lose the argument, as many scientists found when they attempted to debate such notorious, yet rhetorically brilliant sceptic/deniers such as Lord Monckton.

I haven't finished trying to clarify things for you all but right back at the beginning, in post#18, I fairly covered what I was trying to suggest is a more realistic interpretation of the truth than the activist's simplistic 'Evil Exxon Knew' propaganda one. In short, most of you seem to believe, and are arguing as if, the science was rock solid back then and that it said any global warming would certainly lead to bad things. This is utterly wrong, and to argue as if it was true is just deceitful. As I have said, and many significant figures in the field will confirm, I've been fighting denialism for a very long time so when denialists present some paper or piece of text extracted from a longer document as 'proof' of something, I always try and read the original, usually finding out that they have twisted the meaning, cherry picked inappropriate sentences or failed to understand it and thereby jumped to fallacious conclusions - similarly I read the letters and extracts that Greenpeace used and, frankly, either they were trying deliberately to mislead or they didn't understand the language properly and jumped to their prejudiced conclusions and then made all the insinuations that we are familiar with and that nobody else seems be questioning much, if at all. The idea that Exxon always knew that anthropogenic climate change was real (which they, of course, did) AND that they always knew that the results of that would be really bad and so they conspired to cover that bad future up is false and is the basis of the wilful misreading and deceitful interpretation of the cherry picked phrases, excerpts and documents that has created a vastly worse than deserved public perception of how the fossil fuel corporations acted. Always remember that, at least ideally, people (and corporations) should be presumed innocent until proven beyond reasonable doubt to be guilty. Greenpeace/Oreskes polemics are not such proof. 
Their insinuations of the guilt of Big Oil is just a mirror image of how the Climategate hackers insinuated guilt into the words of the top climate scientists.

Here's a clip from my post#18

NAP: "When activists try to bad mouth Exxon et al they speak from a 'post facto' appreciation of the science, as if today's relatively strong climate science existed back when the documents highlighted in 'Exxon knew' were created. Let me explain what I think is another interpretation other than Greenpeace/Oreskes'/Supran's narratives suggesting 'Exxon knew' that climate change was going to be bad because their scientists told them so as far back as the 70s and 80s. Let me first present Stephen Schneider's famous quote from 1988 (the whole quote, not the edited one used by denialists).

S.S. "On the one hand, as scientists we are ethically bound to the scientific method, in effect promising to tell the truth, the whole truth, and nothing but – which means that we must include all doubts, the caveats, the ifs, ands and buts. On the other hand, we are not just scientists but human beings as well. And like most people we’d like to see the world a better place, which in this context translates into our working to reduce the risk of potentially disastrous climate change. To do that we need to get some broad based support, to capture the public’s imagination. That, of course, means getting loads of media coverage. So we have to offer up scary scenarios, make simplified, dramatic statements, and make little mention of any doubts we might have. This “double ethical bind” we frequently find ourselves in cannot be solved by any formula. Each of us has to decide what the right balance is between being effective and being honest. I hope that means being both.""

Stephen Schneider, as a climate scientist, was about 'as good as it gets' and he said that in 1988. Bear in mind that a lot of the initial framing to prejudice readers that 'Exxon knew' used was based on documents from considerably longer ago, so what are the activists who eagerly allowed themselves to be swept up in it until no-one questioned it turning a blind eye to? It's that the computer models of the time were extremely crude because computer technology back then was just not powerful enough to divide Earth up into enough finite element 'blocks' of small enough size to make model projections of much validity, in particular projections of how much, how fast and how bad or how good... Our ideas of the feedback effects of clouds and aerosols back then were extremely rudimentary and there were widely differing scientific opinions as to the magnitude or even the direction of the feedback. The scientific voices we see in Exxon Knew tend to be those who were suggesting there was a lot more certainty of outcome than there actually was. That their version has been eventually shown to be mostly correct by a further 40 years of science in no way means they were right to espouse such certainty back then - just lucky. As I pointed out before, even as late as the very recent CMIP6 models, we are still refining this aspect - and still finding surprises. To insinuate that the science has always been as fairly rock solid as it is today is just a wilful rewriting of history. Try reading Spencer Weart's comprehensive history of the development of climate science for a more objective view of the way things developed...

ExxonMobil spokesperson Allan Jeffers told Scientific American in 2015. “The thing that shocks me the most is that we’ve been saying this for years, that we have been involved in climate research. These guys (Inside Climate News) go down and pull some documents that we made available publicly in the archives and portray them as some kind of bombshell whistle-blower exposé because of the loaded language and the selective use of materials.”

Look at the phrases and excerpts that were used in both Greenpeace's 'Exxon Knew' and 'Inside Climate News's' exposés. You will find they actually are very cherry picked and relatively few in number considering the huge volumes of company documents that were analysed. Does that remind you of anything else? Because it should. The Climategate hackers trawled through mountains of emails - over ten years worth - to cherry pick apparently juicy phrases and ended up with just a few headline phrases, a sample of which follow. Now, as most of us now know, there are almost certainly innocent and valid explanations of each of these phrases, and independent investigations in due course vindicated the scientists. Reading them, and some of the other somewhat less apparently salacious extracts that got less publicity, and comparing them with the 'presented as a smoking gun' extracts from Greenpeace/Oreskes/Supran etc I have to say, on the face of it, the Climategate cherry picks look more evidential of serious misdeeds than the 'Exxon Knew' excerpts. Except we are confident that the Climategate hackers badly misrepresented the emails by insinuating shady motives where none were. Why should we not consider that those nominally on the side of the science did not do the same? Surely readers here are not so naive as to believe that everyone on 'our side' is pure as the driven snow and all those on the 'other side' are evil black hats?

Here's a 'top eight'

1) Phil Jones: “I’ve just completed Mike’s [Mann] Nature trick of adding in the real temps to each series for the last 20 years (i.e. from 1981 onwards) and from 1961 for Keith’s [Briffa] to hide the decline.”

2) “Well, I have my own article on where the heck is global warming…. The fact is that we can’t account for the lack of warming at the moment and it is a travesty that we can’t.” [Kevin Trenberth, 2009]

3) “I know there is pressure to present a nice tidy story as regards ‘apparent unprecedented warming in a thousand years or more in the proxy data’ but in reality the situation is not quite so simple.” [Keith Briffa]

4) “Mike [Mann], can you delete any e-mails you may have had with Keith [Briffa] re AR4? Keith will do likewise…. Can you also e-mail Gene and get him to do the same? I don’t have his e-mail address…. We will be getting Caspar to do likewise.” [Phil Jones, May 29, 2008]

5) “Also we have applied a completely artificial adjustment to the data after 1960, so they look closer to observed temperatures than the tree-ring data actually were….” [Tim Osborn, Climatic Research Unit, December 20, 2006]

6) “I can’t see either of these papers being in the next IPCC report. Kevin [Trenberth] and I will keep them out somehow, even if we have to redefine what the peer-review literature is!” [Phil Jones, July 8, 2004]

7) “You might want to check with the IPCC Bureau. I’ve been told that IPCC is above national FOI Acts. One way to cover yourself and all those working in AR5 [the upcoming IPCC Fifth Assessment Report] would be to delete all e-mails at the end of the process. Hard to do, as not everybody will remember it.” [Phil Jones, May 12, 2009]

8) “If you look at the attached plot you will see that the land also shows the 1940s warming blip (as I’m sure you know). So, if we could reduce the ocean blip by, say 0.15 deg C, then this would be significant for the global mean—but we’d still have to explain the land blip….” [Tom Wigley, University Corporation for Atmospheric Research, to Phil Jones, September 28, 2008]

Please at least consider the possibility that Greenpeace, who have been deceiving the public about the toxicity and carcinogenicity of this, that and the other for decades (ask me how if you want to see how blatant their deceit or delusion is... showing this is actually very quick and easy to do) were, in a very similar way, and motivated by their underlying ideology, deliberately (or delusionally) misrepresenting innocent phrases to blacken names excessively too.

- Why does land warm up faster than the oceans?

Bob Loblaw at 00:08 AM on 9 February, 2021

AerosGreen:

Regarding ocean temperature gradients, I think you are missing the aspect that ocean circulation at depth is also driven by salinity differences (which cause density differences).

On an annual basis, land temperature cycles only influence the top 10m or so. The ocean mixed layer depth (mixed by surface winds - i.e. interacting more closely with the atmosphere) is more like 60-100m. So increased heat capacity plus much more volume.

Time constant for the mixed ocean layer is decades.

For deeper oceans, we're talking hundreds of years for circulation patterns to run their course - so adjustment to surface changes is very slow.
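The "increased heat capacity plus much more volume" point can be sketched with a back-of-envelope calculation of heat capacity per unit surface area. This is only illustrative: the volumetric heat capacities below are assumed round numbers (soil values in particular vary a lot with moisture and composition), and the depths are the ones mentioned in the comment.

```python
# Assumed volumetric heat capacities, J m^-3 K^-1 (approximate round numbers).
SOIL_VOLUMETRIC_HEAT_CAPACITY = 2.0e6      # assumption; varies with soil type and moisture
SEAWATER_VOLUMETRIC_HEAT_CAPACITY = 4.1e6  # roughly 1025 kg m^-3 times 3990 J kg^-1 K^-1


def areal_heat_capacity(volumetric_heat_capacity, depth_m):
    """Heat needed to warm a column of the given depth by 1 K, per square metre."""
    return volumetric_heat_capacity * depth_m


land = areal_heat_capacity(SOIL_VOLUMETRIC_HEAT_CAPACITY, 10.0)
ocean_shallow = areal_heat_capacity(SEAWATER_VOLUMETRIC_HEAT_CAPACITY, 60.0)
ocean_deep = areal_heat_capacity(SEAWATER_VOLUMETRIC_HEAT_CAPACITY, 100.0)

# How many times more heat the ocean mixed layer absorbs per degree of warming.
ratio_low = ocean_shallow / land
ratio_high = ocean_deep / land
```

Under these assumptions the mixed layer takes on the order of ten to twenty times more energy per square metre to warm by one degree than the seasonally active land layer, which is consistent with the much slower ocean response described above.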

- More CO2 in the atmosphere hurts key plants and crops more than it helps

One Planet Only Forever at 03:26 AM on 25 December, 2020

ubrew12,

Tragically many people only look for a 'personal positive' to allow them to dismiss or out-weigh any other information about the negatives of something they want to benefit from. That happens regarding lots of issues. It is self-interest encouraged to become harmful selfishness by competition for status, especially when harmful misleading cheaters can get away with winning.

The latest Human Development Report (2020) presents the improved understanding of the need to consider the value of the natural environment (non-renewable resources as well as renewable biodiversity) when evaluating 'development progress'. The majority of HDR 2020 concerns climate change impacts, including impacts on biodiversity due to climate change. Understanding that evaluation of human development sustainability needs to consider 'these externalities to human-centric considerations' is only now potentially becoming more of 'the Norm'. That more holistic evaluation is only starting to be 'more of the norm' shows how harmfully biased the 'norms of the richest people and the supposedly most advanced nations with the most influence on how things are investigated and perceived' have become.

An important addition to understanding in HDR 2020 is the modification of the Human Development Index to reduce the evaluated level of 'measures of human progress' by accounting for CO2 Emissions per capita and Material Footprint per capita (including counting impacts that happen in other nations but are imported - imported impacts of production that happen in another nation). That simple Common Sense adjustment punts many of the 'supposedly most advanced nations' many levels down the ranks (Canada drops from 16th to 56th, USA drops from 17th to 67th, Australia drops from 8th to 80th, New Zealand rises from 14th to 8th).

It is important to understand the fuller story like the presentation in the HDR 2020. That can help argue against many possible claims of 'positive results from climate change (or any other harmful activity)'.

I try to make the point that it is incorrect to believe that a 'positive benefit' can be justified by comparing the 'positive' with any 'negatives' and deciding that the 'positives' out-weigh the 'negatives' (that evaluation is really only valid for things like medical interventions where the person 'potentially harmed' is the person 'potentially helped'. Much more consideration is involved to justify comparing 'positives for Some People' with 'negatives for Others' - positives for desperately poor have to out-weigh concerns for reduced wealth of the wealthiest that still has the wealthiest as the wealthiest).

I also try to point out that a 'positives for some' vs. 'negatives for Others' comparison is hard to make 'fairly' because current day people, especially higher status people, are likely to have biased perceptions of "Their Positives" vs. "Future Negatives". And there is the constant problem in current day populations of people being biased about "Their Group's Benefits" vs. "Harm Done to Others" (which is the same problem as the current day vs. future when future people are considered to be Others).

Also, restricting the evaluations to what currently counts in Human Economic Activity is a very harmful way to evaluate things. What is not yet known about the harm being done by human activities 'argued to be progress' can be very tragic for the future of humanity.

An example of how a biased person could interpret the HDR 2020 content would be focusing on the bits of 'positives'. One of those 'bits of positive' in the report is the reference to evaluations that indicate that global warming by 2100 will likely reduce the number of extreme temperature days in the Rich Northern nations. The measure is simply the expected yearly change of number of extremely hot or cold days without any consideration of the magnitude of the temperature change (if there are fewer extra extreme hot days than the reduction of the number of extreme cold days the measure is a 'drop in extreme days'). That 'Positive' perception misses the fact that the same report indicates the number of extreme days in a year will be increasing in most of the rest of the nations. And it dismisses any consideration of all the other harmful consequences of unsustainable development pursuing 'improvements' that erroneously can consider harmful over-development of conditions for the richest today to be 'worth it' because the evaluated improvements by and for the richest exceed the perceptions by the richest of the 'lack of improvement' for the future generations or for the less-rich portions of the population (those Others).

- 2020 SkS Weekly Climate Change & Global Warming News Roundup #25

Eclectic at 20:06 PM on 22 June, 2020

Lawrie @9 , Slarty Bartfast maintains that there is no global warming of any significance at a statistical level or at a physical planetary level. So to him, albedo is irrelevant.

Being more than 24 hours since his last posting, it seems unlikely that Slarty will return to attempt rebuttal of criticisms of his many positions. But we can hope he will return, to give a grand explication of his apparent errors and inconsistencies.

In order to save the valuable time of SkS readers, I have looked further into Slarty's blog of May / June 2020 , and I have pulled out some points of interest. Slarty's statistical/mathematical skills are (IMO) far exceeding his climate science knowledge . . . and somehow I am reminded of the very emeritus & climatically-challenged Ivar Giaever !

I have taken some care not to misrepresent or quote-mine Slarty. And please note that Slarty, in his blog, describes himself as: physicist / socialist / environmentalist.

1. Sea level rise cannot be more than slight , because there is no CO2-AGW or CO2-led Greenhouse effect. And so our coastal cities have zero danger of submersion.

2. What little CO2-greenhouse effect is present now, is produced by CO2 reflecting IR back to the planetary surface.

3. Weather stations fail to give valid planetary data because they are far too few, and (just as importantly) they are not evenly spaced.

4. "temperature records just aren't long enough ... to discern a definite trend ... you need at least 50 years."

5. "[land ice] In Antarctica (and Greenland) this is virtually all at altitude (above 1000 m) where the mean temperature is below -20 C, and the mean monthly temperature NEVER gets above zero, even in summer. Consequently, the likelihood of any of this ice melting is negligible."

6. AGW forcing does not supply enough heat to melt ice at the poles [he seems to include the Arctic, too].

7. The Arctic is not warming. [Presumably news to those alarmist Inuit who live there.]

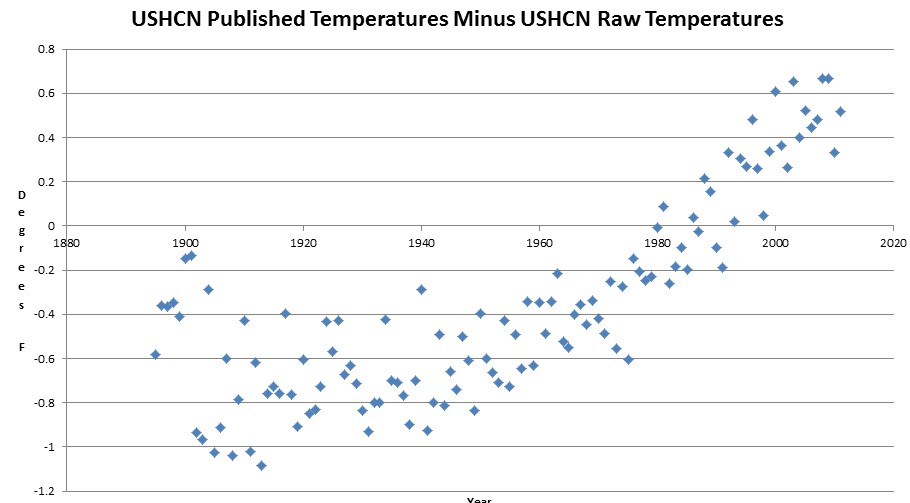

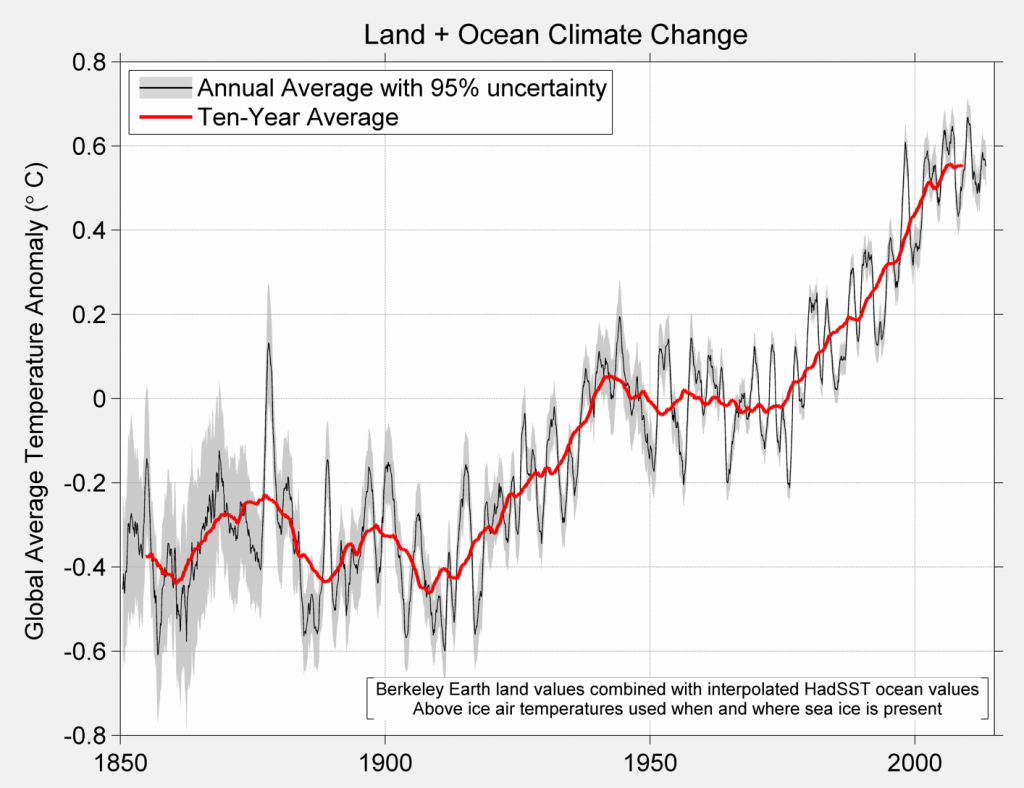

8. Berkeley Earth Study repeats the sins of Hadley/ NOAA / etc but in a more transparent way ~ and BEST generates a falsely-positive warming trend through its misuse of Breakpoint Adjustments (rather than using raw data).

9. Slarty's oceanic thermal expansion calculations are wrong [as pointed out by MA Rodger].

And there's more !

- YouTube's Climate Denial Problem

nigelj at 11:15 AM on 6 April, 2020

dudo39 @8

Your comments are mostly misguided. Sorry about that, you will get over it.

We already know and accept water vapour is a greenhouse gas, but you have to be able to explain why it has increased in the atmosphere in recent decades, and the IPCC has determined this is because of the CO2 forcing causing evaporation. The proven underlying thing driving the warming is CO2, with water vapour as a feedback. We know the spectral properties of the water molecule, so we know how much warming this water vapour causes in comparison to the CO2 molecule.

The one area of doubt is the effect of clouds, but most published research finds they have a slightly positive warming effect overall or are neutral. They cannot be sharply negative or there would be no warming.

You do not need one million argo floats to sufficiently sample ocean temperatures. And ocean temperature trends are broadly similar to atmospheric and land based trends which you would expect so this provides evidence there are more than enough argo floats, and that 'drift' is not a significant issue.

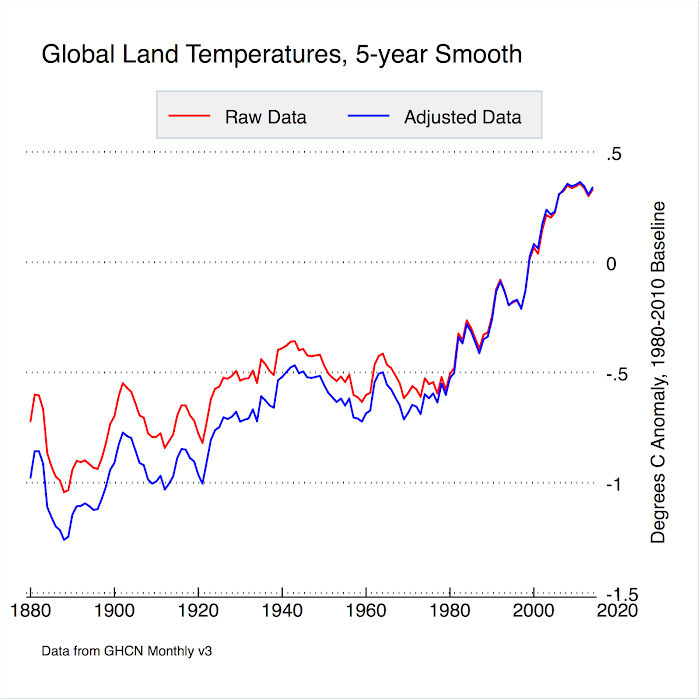

The issue with weather stations in northern Russia obviously has little significance for global temperatures, and you provide no link to back up your assertions about Russia. The urban heat island effect is taken into consideration and temperatures are adjusted downwards where it's an issue. And research has determined it's not a huge issue anyway. Regarding temperature adjustments, read this article.

Since you are so concerned about facts, the global temperature dataset as a whole has been adjusted down because of known issues with ship and buoy measurements. This is the reality, and is the complete opposite of the false denialist claims that global temperatures have been adjusted upwards. Read this article.

Now go away and spread your useless, badly informed doubt somewhere else preferably in a hole in the ground.

- I had an intense conversation at work today.

nigelj at 12:59 PM on 15 January, 2020

TomJanson @24, the point of the thread is Tamino's article at the top, which points out how these fires are different from the 1970's and how they are being influenced by warming.

Your comments are disgraceful. People have died, the fires are very much in urban areas, billions of animals have died. People won't forget that in a hurry.

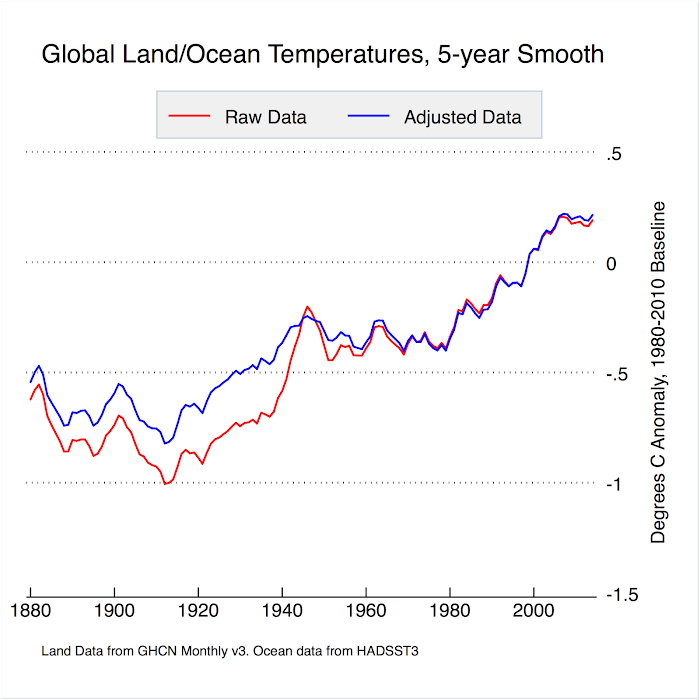

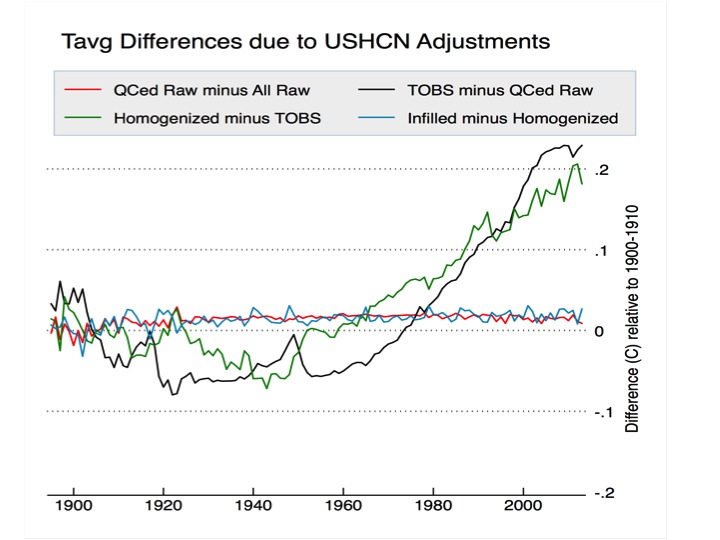

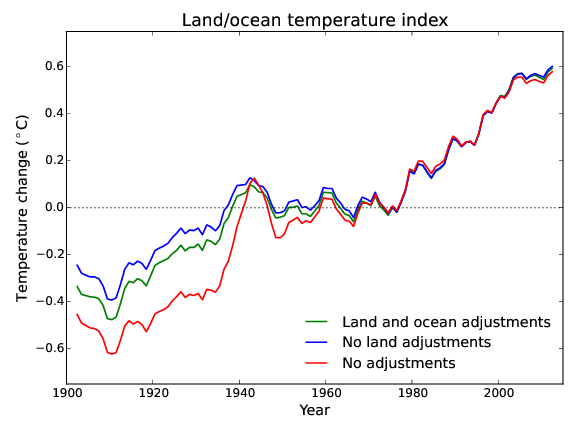

Your claims of temperature adjustments are sloganeering. But for the record the key global adjustments, done for proper reasons, adjust global temperatures down as below. So this doesn't look like much of a conspiracy to exaggerate warming, now does it?

www.carbonbrief.org/explainer-how-data-adjustments-affect-global-temperature-records

As you can see from the graph down the page, most adjustments for the global record are in the early part of last century, and relate to problems with ocean measurements. The difference between raw and adjusted data since the 1980s is insignificant.

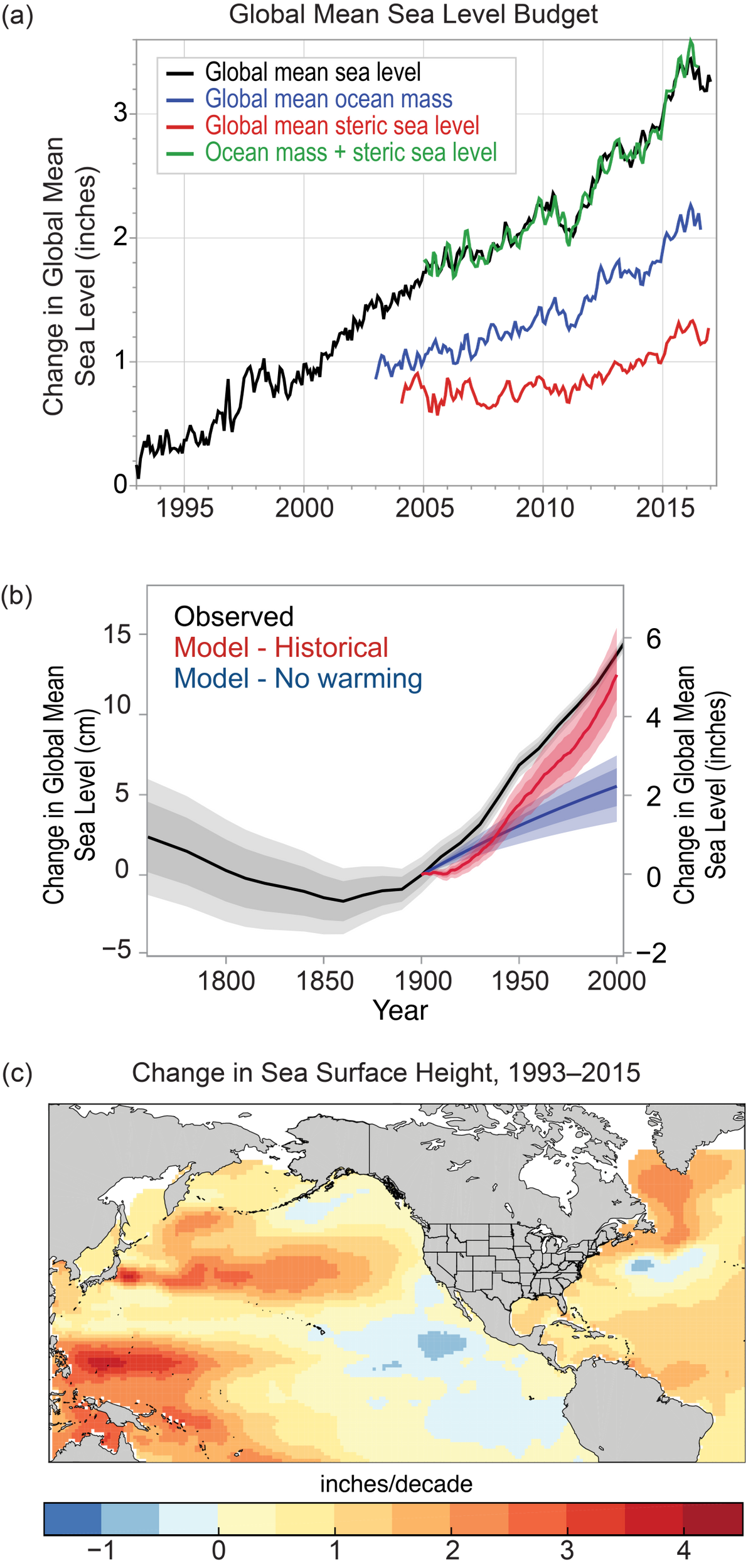

- Sea level rise is exaggerated

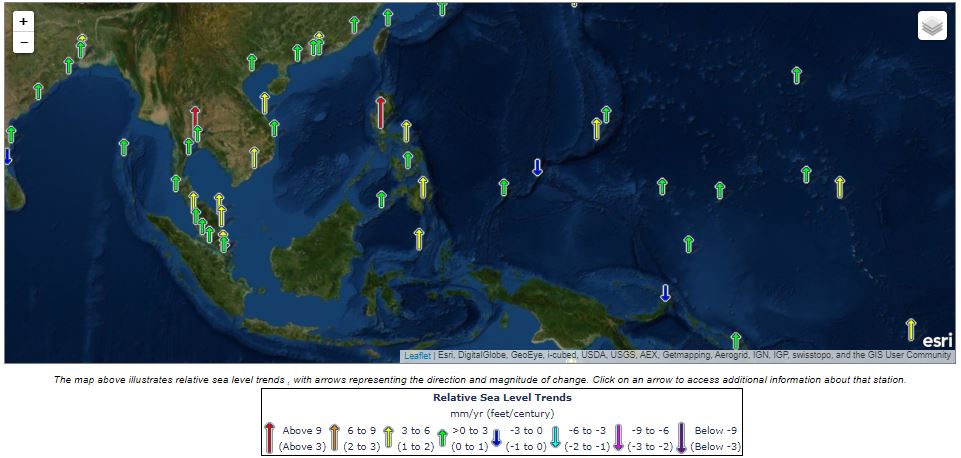

Daniel Bailey at 09:28 AM on 1 December, 2019

Not sure what your definition of "uniformly even" is. Did you expect them to be so?

Firstly, global sea level rise is a global average and the surface of the oceans is anything but level (the surface of the oceans follows the gravitic shape of the Earth and is also subject to solar, lunar, sloshing and siphoning effects and oceanic oscillations, etc, all of which need to be controlled for).

From the NCA4, global average sea level has risen by about 7–8 inches since 1900, with almost half (about 3 inches) of that rise occurring since 1993:

From NOAA STAR NESDIS:

"Only altimetry measurements between 66°S and 66°N have been processed. An inverted barometer has been applied to the time series. The estimates of sea level rise do not include glacial isostatic adjustment effects on the geoid, which are modeled to be +0.2 to +0.5 mm/year when globally averaged."

Regional SLR graphics are also available from NOAA STAR NESDIS, here.

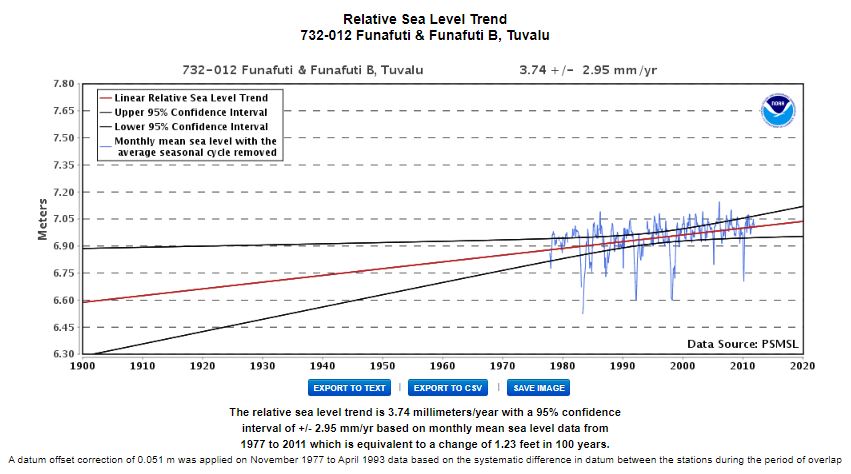

This is a screenshot of NOAA's tide gauge map for the Western Pacific (NOAA color-codes the relative changes in sea levels to make it easier to internalize):

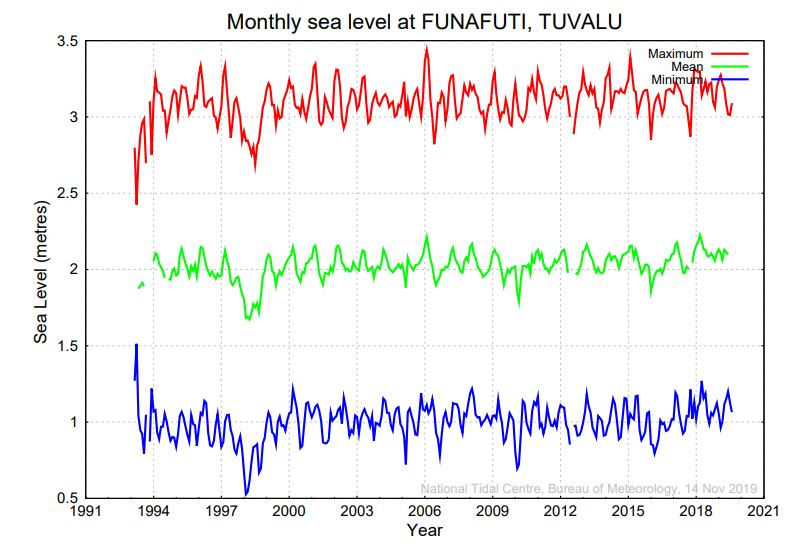

Clicking on the Funafuti, Tuvalu tide gauge station we see that sea levels are rising by 3.74 mm/yr (above the global average) there, with a time series starting around 1978 and ending about 2011:

However, the time series used by your BOM link for Funafuti (1993-2019) is shorter and the BOM also does not apply a linear trend line to it like NOAA does:

Feel free to make further comparisons, but comparing a set of graphics with no trend lines vs those with trend lines is no comparison at all.
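For anyone who wants to put a trend line on a tide gauge series themselves, here is a minimal sketch of an ordinary least-squares linear fit. The series below is synthetic and purely hypothetical (an assumed 3.0 mm/yr trend plus an assumed 40 mm seasonal cycle, sampled monthly); it is not the Funafuti record, and `linear_trend` is just an illustrative helper name, not anything NOAA or the BOM uses.

```python
import math


def linear_trend(times, values):
    """Ordinary least-squares fit of values against times.

    Returns (slope, intercept). With times in years and values in mm,
    the slope is in mm per year.
    """
    n = len(times)
    if n < 2 or n != len(values):
        raise ValueError("need two or more points and equal-length inputs")
    mean_t = sum(times) / n
    mean_v = sum(values) / n
    sxx = sum((t - mean_t) ** 2 for t in times)
    sxy = sum((t - mean_t) * (v - mean_v) for t, v in zip(times, values))
    slope = sxy / sxx
    intercept = mean_v - slope * mean_t
    return slope, intercept


# Synthetic monthly series, 26 years long (hypothetical numbers).
times = [1993 + month / 12 for month in range(26 * 12)]
levels = [3.0 * (t - 1993) + 40.0 * math.sin(2 * math.pi * t) for t in times]

slope, intercept = linear_trend(times, levels)
```

The fitted slope comes out close to the trend built into the synthetic data even though the seasonal swings are much larger than one year's rise, which is the reason a fitted line is more informative than eyeballing a raw plot. The start and end dates of the series still matter, so trends from different periods should not be compared as if they were the same thing.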

From the recent IPCC Special Report 2019 - Ocean and Cryosphere in a Changing Climate - Summary for Policy Makers, September 25, 2019 release (SROCC 2019), the portions on sea level rise:

Observed Physical Changes

A3. Global mean sea level (GMSL) is rising, with acceleration in recent decades due to increasing rates of ice loss from the Greenland and Antarctic ice sheets (very high confidence), as well as continued glacier mass loss and ocean thermal expansion. Increases in tropical cyclone winds and rainfall, and increases in extreme waves, combined with relative sea level rise, exacerbate extreme sea level events and coastal hazards (high confidence).

A3.1 Total GMSL rise for 1902–2015 is 0.16 m (likely range 0.12–0.21 m). The rate of GMSL rise for 2006–2015 of 3.6 mm yr–1 (3.1–4.1 mm yr–1, very likely range), is unprecedented over the last century (high confidence), and about 2.5 times the rate for 1901–1990 of 1.4 mm yr–1 (0.8– 2.0 mm yr–1, very likely range). The sum of ice sheet and glacier contributions over the period 2006–2015 is the dominant source of sea level rise (1.8 mm yr–1, very likely range 1.7–1.9 mm yr–1), exceeding the effect of thermal expansion of ocean water (1.4 mm yr–1, very likely range 1.1–1.7 mm yr–1) (very high confidence). The dominant cause of global mean sea level rise since 1970 is anthropogenic forcing (high confidence).

A3.2 Sea-level rise has accelerated (extremely likely) due to the combined increased ice loss from the Greenland and Antarctic ice sheets (very high confidence). Mass loss from the Antarctic ice sheet over the period 2007–2016 tripled relative to 1997–2006. For Greenland, mass loss doubled over the same period (likely, medium confidence).

A3.3 Acceleration of ice flow and retreat in Antarctica, which has the potential to lead to sea-level rise of several metres within a few centuries, is observed in the Amundsen Sea Embayment of West Antarctica and in Wilkes Land, East Antarctica (very high confidence). These changes may be the onset of an irreversible (recovery time scale is hundreds to thousands of years) ice sheet instability. Uncertainty related to the onset of ice sheet instability arises from limited observations, inadequate model representation of ice sheet processes, and limited understanding of the complex interactions between the atmosphere, ocean and the ice sheet.

A3.4 Sea-level rise is not globally uniform and varies regionally. Regional differences, within ±30% of the global mean sea-level rise, result from land ice loss and variations in ocean warming and circulation. Differences from the global mean can be greater in areas of rapid vertical land movement including from local human activities (e.g. extraction of groundwater). (high confidence)

A3.5 Extreme wave heights, which contribute to extreme sea level events, coastal erosion and flooding, have increased in the Southern and North Atlantic Oceans by around 1.0 cm yr–1 and 0.8 cm yr–1 over the period 1985–2018 (medium confidence). Sea ice loss in the Arctic has also increased wave heights over the period 1992–2014 (medium confidence).

A3.6 Anthropogenic climate change has increased observed precipitation (medium confidence), winds (low confidence), and extreme sea level events (high confidence) associated with some tropical cyclones, which has increased intensity of multiple extreme events and associated cascading impacts (high confidence). Anthropogenic climate change may have contributed to a poleward migration of maximum tropical cyclone intensity in the western North Pacific in recent decades related to anthropogenically-forced tropical expansion (low confidence). There is emerging evidence for an increase in annual global proportion of Category 4 or 5 tropical cyclones in recent decades (low confidence).

B3. Sea level continues to rise at an increasing rate. Extreme sea level events that are historically rare (once per century in the recent past) are projected to occur frequently (at least once per year) at many locations by 2050 in all RCP scenarios, especially in tropical regions (high confidence). The increasing frequency of high water levels can have severe impacts in many locations depending on exposure (high confidence). Sea level rise is projected to continue beyond 2100 in all RCP scenarios. For a high emissions scenario (RCP8.5), projections of global sea level rise by 2100 are greater than in AR5 due to a larger contribution from the Antarctic Ice Sheet (medium confidence). In coming centuries under RCP8.5, sea level rise is projected to exceed rates of several centimetres per year resulting in multi-metre rise (medium confidence), while for RCP2.6 sea level rise is projected to be limited to around 1m in 2300 (low confidence). Extreme sea levels and coastal hazards will be exacerbated by projected increases in tropical cyclone intensity and precipitation (high confidence). Projected changes in waves and tides vary locally in whether they amplify or ameliorate these hazards (medium confidence).

B3.1 The global mean sea level (GMSL) rise under RCP2.6 is projected to be 0.39 m (0.26–0.53 m, likely range) for the period 2081–2100, and 0.43 m (0.29–0.59 m, likely range) in 2100 with respect to 1986–2005. For RCP8.5, the corresponding GMSL rise is 0.71 m (0.51–0.92 m, likely range) for 2081–2100 and 0.84 m (0.61–1.10 m, likely range) in 2100. Mean sea level rise projections are higher by 0.1 m compared to AR5 under RCP8.5 in 2100, and the likely range extends beyond 1 m in 2100 due to a larger projected ice loss from the Antarctic Ice Sheet (medium confidence). The uncertainty at the end of the century is mainly determined by the ice sheets, especially in Antarctica.

B3.2 Sea level projections show regional differences around GMSL. Processes not driven by recent climate change, such as local subsidence caused by natural processes and human activities, are important to relative sea level changes at the coast (high confidence). While the relative importance of climate-driven sea level rise is projected to increase over time, local processes need to be considered for projections and impacts of sea level (high confidence).

Projected Changes and Risks

B3.3 The rate of global mean sea level rise is projected to reach 15 mm yr–1 (10–20 mm yr–1, likely range) under RCP8.5 in 2100, and to exceed several centimetres per year in the 22nd century. Under RCP2.6, the rate is projected to reach 4 mm yr–1 (2–6 mm yr–1, likely range) in 2100. Model studies indicate multi-meter rise in sea level by 2300 (2.3–5.4 m for RCP8.5 and 0.6–1.07 m under RCP2.6) (low confidence), indicating the importance of reduced emissions for limiting sea level rise. Processes controlling the timing of future ice-shelf loss and the extent of ice sheet instabilities could increase Antarctica’s contribution to sea level rise to values substantially higher than the likely range on century and longer time-scales (low confidence). Considering the consequences of sea level rise that a collapse of parts of the Antarctic Ice Sheet entails, this high impact risk merits attention.

B3.4 Global mean sea level rise will cause the frequency of extreme sea level events at most locations to increase. Local sea levels that historically occurred once per century (historical centennial events) are projected to occur at least annually at most locations by 2100 under all RCP scenarios (high confidence). Many low-lying megacities and small islands (including SIDS) are projected to experience historical centennial events at least annually by 2050 under RCP2.6, RCP4.5 and RCP8.5. The year when the historical centennial event becomes an annual event in the mid-latitudes occurs soonest in RCP8.5, next in RCP4.5 and latest in RCP2.6. The increasing frequency of high water levels can have severe impacts in many locations depending on the level of exposure (high confidence).

B3.5 Significant wave heights (the average height from trough to crest of the highest one-third of waves) are projected to increase across the Southern Ocean and tropical eastern Pacific (high confidence) and Baltic Sea (medium confidence) and decrease over the North Atlantic and Mediterranean Sea under RCP8.5 (high confidence). Coastal tidal amplitudes and patterns are projected to change due to sea level rise and coastal adaptation measures (very likely). Projected changes in waves arising from changes in weather patterns, and changes in tides due to sea level rise, can locally enhance or ameliorate coastal hazards (medium confidence).

B3.6 The average intensity of tropical cyclones, the proportion of Category 4 and 5 tropical cyclones and the associated average precipitation rates are projected to increase for a 2°C global temperature rise above any baseline period (medium confidence). Rising mean sea levels will contribute to higher extreme sea levels associated with tropical cyclones (very high confidence). Coastal hazards will be exacerbated by an increase in the average intensity, magnitude of storm surge and precipitation rates of tropical cyclones. There are greater increases projected under RCP8.5 than under RCP2.6 from around mid-century to 2100 (medium confidence). There is low confidence in changes in the future frequency of tropical cyclones at the global scale.

Challenges

C3. Coastal communities face challenging choices in crafting context-specific and integrated responses to sea level rise that balance costs, benefits and trade-offs of available options and that can be adjusted over time (high confidence). All types of options, including protection, accommodation, ecosystem-based adaptation, coastal advance and retreat, wherever possible, can play important roles in such integrated responses (high confidence).

C3.1 The higher the sea levels rise, the more challenging is coastal protection, mainly due to economic, financial and social barriers rather than due to technical limits (high confidence). In the coming decades, reducing local drivers of exposure and vulnerability such as coastal urbanization and human-induced subsidence constitute effective responses (high confidence). Where space is limited, and the value of exposed assets is high (e.g., in cities), hard protection (e.g., dikes) is likely to be a cost-efficient response option during the 21st century taking into account the specifics of the context (high confidence), but resource-limited areas may not be able to afford such investments. Where space is available, ecosystem-based adaptation can reduce coastal risk and provide multiple other benefits such as carbon storage, improved water quality, biodiversity conservation and livelihood support (medium confidence).

C3.2 Some coastal accommodation measures, such as early warning systems and flood-proofing of buildings, are often both low cost and highly cost-efficient under current sea levels (high confidence). Under projected sea level rise and increase in coastal hazards some of these measures become less effective unless combined with other measures (high confidence). All types of options, including protection, accommodation, ecosystem-based adaptation, coastal advance and planned relocation, if alternative localities are available, can play important roles in such integrated responses (high confidence). Where the community affected is small, or in the aftermath of a disaster, reducing risk by coastal planned relocations is worth considering if safe alternative localities are available. Such planned relocation can be socially, culturally, financially and politically constrained (very high confidence).

C3.3 Responses to sea-level rise and associated risk reduction present society with profound governance challenges, resulting from the uncertainty about the magnitude and rate of future sea level rise, vexing trade-offs between societal goals (e.g., safety, conservation, economic development, intra- and inter-generational equity), limited resources, and conflicting interests and values among diverse stakeholders (high confidence). These challenges can be eased using locally appropriate combinations of decision analysis, land-use planning, public participation, diverse knowledge systems and conflict resolution approaches that are adjusted over time as circumstances change (high confidence).

C3.4 Despite the large uncertainties about the magnitude and rate of post 2050 sea level rise, many coastal decisions with time horizons of decades to over a century are being made now (e.g., critical infrastructure, coastal protection works, city planning) and can be improved by taking relative sea-level rise into account, favouring flexible responses (i.e., those that can be adapted over time) supported by monitoring systems for early warning signals, periodically adjusting decisions (i.e., adaptive decision making), using robust decision-making approaches, expert judgement, scenario-building, and multiple knowledge systems (high confidence). The sea level rise range that needs to be considered for planning and implementing coastal responses depends on the risk tolerance of stakeholders. Stakeholders with higher risk tolerance (e.g., those planning for investments that can be very easily adapted to unforeseen conditions) often prefer to use the likely range of projections, while stakeholders with a lower risk tolerance (e.g., those deciding on critical infrastructure) also consider global and local mean sea level above the upper end of the likely range (globally 1.1 m under RCP8.5 by 2100) and from methods characterised by lower confidence such as from expert elicitation.

To sum:

1. Global sea levels continue to rise, with the rise itself accelerating (due to an acceleration in land-based ice sheet mass losses). This will continue far beyond the lifespans of anyone now alive.

2. Beware of the eyecrometer. It will deceive you, if you allow it to.
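The acceleration in point 1 can be checked directly from the central estimates quoted in A3.1 rather than judged by eye. A small sketch follows; the variable names are made up for illustration, and only the central values are used, so the quoted uncertainty ranges are ignored.

```python
# Central estimates quoted in SROCC A3.1, in mm per year.
rate_1901_1990 = 1.4
rate_2006_2015 = 3.6

# Ratio of the recent rate to the 20th-century rate ("about 2.5 times").
ratio = rate_2006_2015 / rate_1901_1990

# Contributions to the 2006-2015 rate, also from A3.1.
ice_sheets_and_glaciers = 1.8
thermal_expansion = 1.4

print(f"Recent rate is {ratio:.2f} times the 1901-1990 rate")
print(f"Land ice exceeds thermal expansion: {ice_sheets_and_glaciers > thermal_expansion}")
```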

SLR Components, from Cazenave et al 2018

- Climate Scientist reacts to Donald Trump's climate comments

MA Rodger at 06:17 AM on 26 November, 2019

prove we are smart @21,

The muppet in the video simply combines a number of weak or fallacious arguments to support his grand "there is no AGW" delusion.

The first bit of it is feeding off this weblog at denialist site http://joannenova.com.au. There are genuine reasons for adjusting temperature data but the usual nonsense from denialists is that such adjustments are fake, or at least they are fake when the raw data is more favourable to their delusions.

The Mayor of Glen Innes featured in the denialist video says nothing about what data is used to establish AGW. I'm sure if the number of +40ºC daily maximums was how to measure AGW, we would have debunked that particular denialist argument many times before.

The Glen Innes Annual Max data for the period 1907-2012 doesn't show any significant warming trend, although when combined with the Annual Min data, the Annual Average data 1907-2012 does. And over the period 1975-2012 the Average data is running at +0.15ºC/decade although the noise reduces the statistical significance (+/- 0.12ºC/decade at 2sd). The Annual Max also shows a reasonable warming trend but the noise makes it statistically insignificant at 2sd: +0.12ºC/decade (+/- 0.21ºC/decade).
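The "significant at 2sd" test used above amounts to asking whether the trend is larger than twice its standard error. A minimal sketch of that check, assuming a plain least-squares fit with independent residuals (real temperature series are autocorrelated, which widens the uncertainty); `trend_with_2sd` and `is_significant` are hypothetical helper names, not anything from the BoM tooling.

```python
import math


def trend_with_2sd(years, temps):
    """Least-squares trend and its 2-sigma uncertainty, both per decade."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(temps) / n
    sxx = sum((x - mean_x) ** 2 for x in years)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, temps)) / sxx
    intercept = mean_y - slope * mean_x
    # Residual sum of squares around the fitted line.
    rss = sum((y - (intercept + slope * x)) ** 2 for x, y in zip(years, temps))
    # Standard error of the slope, assuming uncorrelated residuals.
    std_err = math.sqrt(rss / (n - 2) / sxx)
    return slope * 10, 2 * std_err * 10


def is_significant(trend, two_sd):
    """True when the 2-sigma interval around the trend excludes zero."""
    return abs(trend) > two_sd


# The two 1975-2012 figures quoted above, in degrees C per decade.
print(is_significant(0.15, 0.12))  # Annual Average: True
print(is_significant(0.12, 0.21))  # Annual Max: False
```

Both series warm at a similar central rate; the difference in significance comes from the larger scatter in the Annual Max data.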

And the various reports of cold winters are not incompatible with AGW although it is wise not to listen to other swivel-eyed climate deniers unless you are happy broadcasting fake news. So the blather about a cold winter ahead for the UK is nought but blather. "Claims that the UK is set to face the chillest winter in a century and even a white Christmas have been dismissed by the Met Office."