Arguments

Arguments

Software

Software

Resources

Comments

Resources

Comments

The Consensus Project

The Consensus Project

Translations

Translations

About

Support

About

Support

Latest Posts

- What are the causes of recent record-high global temperatures?

- Fact brief - Are toxic heavy metals from solar panels posing a threat to human health?

- Emergence vs Detection & Attribution

- 2025 SkS Weekly Climate Change & Global Warming News Roundup #50

- Skeptical Science New Research for Week #50 2025

- The rest of the world is lapping the U.S. in the EV race

- Fact brief - Are electromagnetic fields from solar farms harmful to human health?

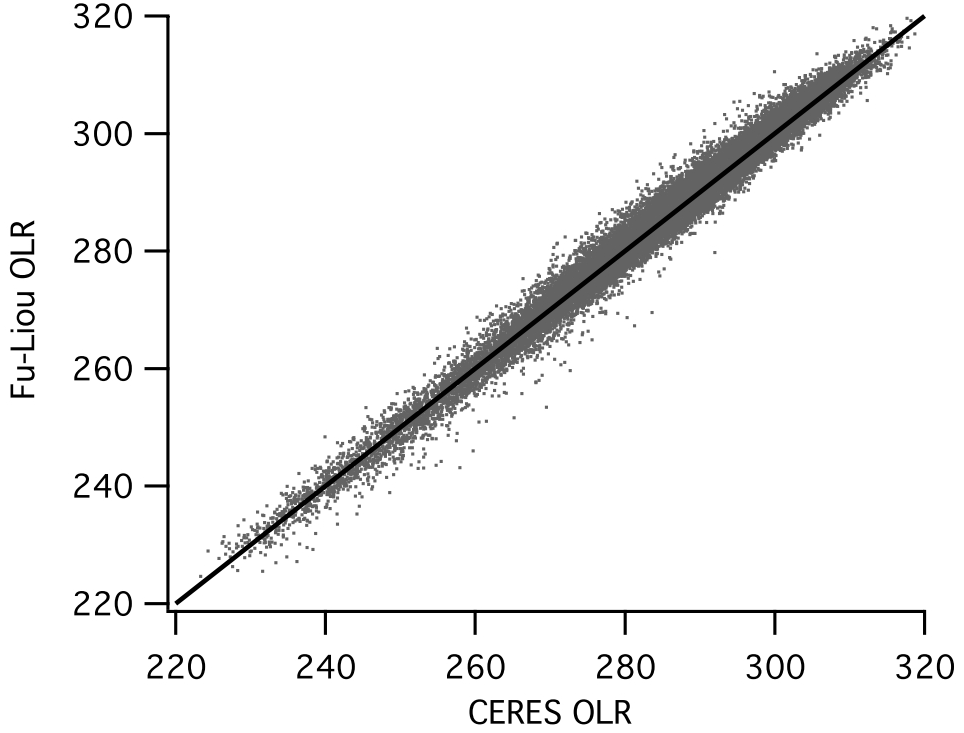

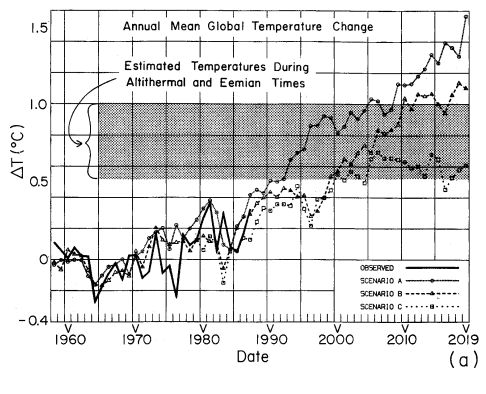

- Comparing climate models with observations

- 2025 SkS Weekly Climate Change & Global Warming News Roundup #49

- Skeptical Science New Research for Week #49 2025

- Climate Adam & Dr Gilbz - Paris Climate Agreement At 10: Did It Do Anything?

- Fact brief - Does the recent slowdown in Arctic sea-ice extent loss disprove human-caused warming?

- Why the chemtrail conspiracy theory lingers and grows – and why Tucker Carlson is talking about it

- 2025 SkS Weekly Climate Change & Global Warming News Roundup #48

- Skeptical Science New Research for Week #48 2025

- Consensus machines

- Just have a Think - How an African energy revolution could save ALL of us.

- A girl’s grades drop every summer. There’s an alarming explanation.

- 2025 SkS Weekly Climate Change & Global Warming News Roundup #47

- Fact brief - Are changes in solar activity causing climate change?

- Skeptical Science New Research for Week #47 2025

- Exploring newly released estimates of current policy warming

- Climate Adam - Why the Climate Crisis is a Health Crisis

- Super pollutants are trendy, but we should be careful how we use them

- 2025 SkS Weekly Climate Change & Global Warming News Roundup #46

- Skeptical Science New Research for Week #46 2025

- On the Gates climate memo

- Climate Adam - Climate Scientist responds to Bill Gates

- Five ways Joe Rogan misleads listeners about climate change

- 2025 SkS Weekly Climate Change & Global Warming News Roundup #45

Comment Search Results

Search for climate model accuracy

Comments matching the search climate model accuracy:

- At a glance - Is the CO2 effect saturated?

Charlie_Brown at 09:11 AM on 4 January, 2025

The paper by Kubicki, Kopczyński, and Młyńczak, “Climatic consequence of the process of saturation of radiation absorption in gases,” Applications in Engineering Science, Vol. 17, March 2024 has been retracted by Elsevier. “After review by additional expert referees, the Editor-in-Chief has lost confidence in the validity of the paper and has decided to retract.”

www.sciencedirect.com/science/article/pii/S2666496823000456

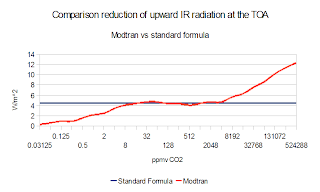

Not explained in the retraction, but according to my interpretation in addition to the previous posts, Kubicki, et al., describe the emitted intensity for one monochromatic transmittance line for methane at 3.39 microns. However, when they describe absorptance for CO2, the description changes from a single line to a spectrum. They do not integrate the intensity of single lines for all lines in the full spectrum, which is the straightforward approach used in atmospheric radiation models and climate models. Rigorous models use line-by-line calculations while simple models utilize narrow bands for calculation efficiency with minimal loss of accuracy. Instead, Kubicki, et al., introduce a definition of “saturation mass” that reaches 95% of maximum value of absorptance for a large band for an unspecified wavelength range. They support their concept by describing experiments for a detected value at the end of a tube. This experimental design does not account for re-radiation in any direction apart from a straight line.

- Climate - the Movie: a hot mess of (c)old myths!

scaddenp at 06:38 AM on 3 April, 2024

Two dog. The OHC content data in red comes from the Argo array. You can find a reasonable description here. The old pentadecadal data is ship-based and has much bigger error bars. I can't immediately find the paper that determined the accuracy of the Argo data, but if you are interested I am sure I can dig it out.

On interannual and to some extent the decadal scales, variations in surface temperature are strongly influenced by ocean-atmosphere heat exchange, but I think you would agree that the increasing OHC rules that out as cause of global warming?

"I did also read that the warming effect of CO2 decreases as its concentration increases so the warming is expected to reduce over time. Is there any truth in that?"

Sort of - there is a logarithmic relationship. If the radiation increase from 200 to 400 ppm is, say, 4 W/m2, then you have to increase CO2 from 400 to 800 ppm to get 8 W/m2. However, that doesn't translate directly into "warming" because of feedbacks. Water vapour is a powerful greenhouse gas and its concentration in the atmosphere is directly related to temperature. Also, as temperature rises, albedo from ice decreases so less radiation is reflected back. Worse, over century-level scales, all that ocean heat reduces the ability of the ocean to absorb CO2. From memory, half of emissions are currently being absorbed there. Hot enough and the oceans de-gas. These are the calculations which have to go into those climate models.
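The doubling arithmetic in the paragraph above can be sketched with the widely used simplified fit ΔF ≈ 5.35 · ln(C/C0) W/m2. The coefficient 5.35 and the function name `co2_forcing` are assumptions brought in for illustration; they are not from the comment, whose "4 W/m2" is a round figure (the fit gives roughly 3.7 W/m2 per doubling). The sketch covers forcing only, not the feedbacks mentioned above.

```python
import math

# Assumption: simplified CO2 forcing fit, dF = ALPHA * ln(C / C0), in W/m^2.
ALPHA = 5.35


def co2_forcing(c_ppm, c0_ppm):
    """Radiative forcing (W/m^2) from changing CO2 from c0_ppm to c_ppm."""
    if c_ppm <= 0 or c0_ppm <= 0:
        raise ValueError("concentrations must be positive")
    return ALPHA * math.log(c_ppm / c0_ppm)


first_doubling = co2_forcing(400, 200)   # 200 -> 400 ppm
second_doubling = co2_forcing(800, 400)  # 400 -> 800 ppm
total = co2_forcing(800, 200)            # 200 -> 800 ppm

# Each doubling adds the same forcing, so two doublings give twice one doubling.
print(f"200->400 ppm: {first_doubling:.2f} W/m^2")
print(f"400->800 ppm: {second_doubling:.2f} W/m^2")
print(f"200->800 ppm: {total:.2f} W/m^2")
```

Under this fit, going from 400 to 800 ppm adds as much forcing as going from 200 to 400 ppm, which is the point the comment makes.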

Which brings us to natural sources. Geothermal heat and waste heat are insignificant so would you agree that the only natural source of that extra heat would be the sun? Now impact of sun on temperature has multiple components that climate models take into account. These are:

1/ variations in energy emitted from the sun.

2/ screening by aerosols (natural or manmade). Important in 20th century variations you see.

3/ changes in albedo (especially ice and high cloud)

4/ The concentration of greenhouse gases in the atmosphere.

Now climate scientists would say that changes to all of those can account for all past natural climate change using known physics. They would also say with very high confidence that 1/ to 3/ are not a significant part of current climate change (you can see the exact amount for each calculated in the IPCC report). Why are they confident? If you were a climate scientist investigating those factors, what would you want to measure to investigate their effects? Seriously, think about that and how you might do such investigations.

Is it possible there is something we don't understand at play? Of course, but there is no evidence for other factors. You can explain past and present climate change with known figures, so trying to invoke the unknown seems to be clutching at straws.

- Climate - the Movie: a hot mess of (c)old myths!

diff01 at 19:38 PM on 1 April, 2024

Will changing one small ingredient (0.04%) of the earth's greenhouse gases (CO2) arrest global warming (if that is what is happening)?

If the scientists(?) believe this to be the case, how will it be regulated to adjust the climate to maintain an average that is not too hot or cold?

If all anti-carbon emitting policies were implemented, what says the climate will not be too cool?

The other, obvious hole in the argument for drastic economic change in the name of cooling the planet, is that the sun is not factored into the equation (by the way, I am all for increasing efficiency and reducing waste). How will the climate be regulated (say changing one greenhouse gas does the trick) if the sun's intensity changes (sun spots), the reduction in carbon emission works, and it cools too much?

Another question I have is about other factors, such as the recent eruption at Hunga Tonga. Apparently water vapor increased by 10% in the stratosphere.

Won't that affect the climate? How do the 'models' account for nature not doing what the computers predict?

There are a myriad of other questions. I haven't watched the movie yet, but will, with interest.

When I searched for the movie, this website popped up right under the movie heading.

It's always interesting to hear from the 'true believers'.

The whole thing is a sham of biblical proportions. You need just a modicum of reasoned thought to tell you so.

Just had a quick look at your response regarding 'the sun'.

You say the 'irradiation level' has been measured with accuracy for the last 40 years, and shown little variation.

The sun has been influencing weather on earth for 4 and a half billion years. What about the earth's orbit, and its distance from the sun?

- Water vapor is the most powerful greenhouse gas

Charlie_Brown at 07:47 AM on 16 June, 2023

Actually, I think that Vidar2032 @383 is correct. When he/she says GHGs emit at a fixed temperature, I believe he/she means at the temperature of the atmosphere as fixed by the atmospheric temperature profile. The 1976 U.S. Std Atmosphere for the tropopause, where CO2 emits to space, is close to 220K, while the emitting layer of H2O vapor in the troposphere is about 240-270 K. When he/she says that the effect of increased concentration is to broaden the band, that also is correct when considering that increasing concentration strengthens weak absorption lines. Look at the Figure in Bob Loblaw @7 in his linked thread to Beer’s Law above, which Bob kindly produced for me at that time. The weak absorption lines on the wings get stronger as concentration increases. There is sufficient path length in the tropopause to bring most of the absorption lines for the CO2 band between 14-16 microns close to 1.0, which means that the emittance is close to 1.0. Stacking the strong absorption lines in the middle of the band, which means increasing the path length and bringing an emittance of close to 1.0 even closer to 1.0, is not how increasing CO2 increases the emittance. Note that increasing emittance means more energy is emitted from a colder temperature which has less intensity than the energy emitted from a lower altitude at a warmer temperature. This is in accordance with the Planck black body distribution curves that Bob presents. The difference between a black body and a gas is that a black body absorbs/emits at all wavelengths while gases absorb/emit only at wavelengths specific to their molecular structure. What would be interesting, if only I could post my own Figure, would be the HITRAN absorption lines for CO2 at conditions of the tropopause and H2O for the troposphere.

Meanwhile, Vidar’s question is an excellent opportunity to use the Univ of Chicago link to MODTRAN Infrared Light in the Atmosphere. Choose the 1976 U.S. Std Atmosphere. All one has to do is increase the water vapor scalar to 1.07 to show a 7% increase, then adjust the temperature offset until the original value is matched. It turns out to be about 0.25 C. Better, to see if 7 % is about right, set CO2 to 280, CH4 to 0.7, and Freon to 0 to get pre-industrial conditions. Save the run to background. Then change CO2 to 415, CH4 to 1.8, and Freon to 1.0 to get current conditions, adjust the temperature offset to match the starting value, and choose holding fixed relative humidity. The raw model output shows that it changes the water vapor by about 6%, and the temperature offset is about 1.0 C. It's a very good approximation, but be careful not to place too high of an expectation on the accuracy and precision of this model. Realize that it is designed to be an educational tool with high computational speed and limited flexibility that provides good results, but better models exist for professional use.

- Skeptical Science New Research for Week #50 2022

peterklein at 07:12 AM on 16 December, 2022

I mostly became aware of the climate and global warming issue about the time that Al Gore began beating the drum (even while he continued to fly globally in his private jet). Since then, I've read about climate change and climate modeling from many sources, including ones taking the position that ‘it is not a question if it is a big-time issue, but what to do about it now, ASAP?’.

In the past few weeks, it appeared to me there has been a lot of articles, issued reports, and federal government activity, including recently approved legislation, related to this topic. While it obviously has been one of the major global topics for the past 3+ decades, the amount of public domain ‘heightened activity’ seems (to me) to come in waves every 4-6 months. That said, I decided to write on the topic based on what I learned and observed over time from articles, research reports, and TV/newspaper interviews.

There clearly are folks, associations, formal and informal groups, and even governments on both sides of the topic (issue). I also have seen over the decades how the need for and the flow of money sometimes (many times?) taints the results of what appear to be ‘expert-driven and expert-executed’ quantitative research. For example, in medical research some of the top 5% of researchers have been found altering their data and conclusions because of the source of their research funding, peer ‘industry’ pressure and/or pressure from senior academic administrators.

Many climate and weather-related articles state that 95+% of researchers agree on major climate changes; however (at least to me) many appear to disagree on the short-medium-longer term implications and timeframes.

What I conclude (as of now)

1. This is a very complex subject about which few experts have been correct.

2. We are learning more and more every day about this subject, and most of what we learn suggests that what we thought we knew isn't really correct or at least as perfectly accurate as many believe.

3. The U.S. alone cannot solve whatever problem exists. If we want to do something constructive, build lots of nuclear power plants ASAP (more on that to follow)!

4. Any rapid reduction in the use of fossil fuels will devastate many economies, especially those like China, India, Africa and most of Asia. Interestingly, the U.S. can probably survive a 3 or 4% reduction in carbon footprint annually over the next 15 years better than almost any country in the world, but this requires the aforementioned construction of multiple nuclear electrical generating facilities. In the rest of the world, especially the developing world, their economies will crash, and famine would ensue; not a pretty picture.

5. I am NOT a reflexive “climate denier” but rather a real-time skeptic that humans will be rendered into bacon crisps sometime in the next 50, 100 or 500+ years!

6. One reason I'm not nearly as concerned as others is my belief in the concept of ‘progress’. Look at what we accomplished as a society over the last century, over the last 50, 10, 5 and 3 years (e.g., Moore’s Law is the observation that the number of transistors on integrated circuits doubles about every two years!). It is easy to conclude that we will develop better storage batteries and better, more efficient electrical grids that will reduce our carbon footprint. I'm not so sure about China, India and the developing world!

7. So, don't put me down as a climate denier even though I do not believe that the climate is rapidly deteriorating or will rapidly deteriorate as a result of CO2 upload. Part of my calm on this subject is because I have read a lot about the ‘coefficient of correlation’ of CO2 and global warming, and I really don't think it's that high. I won't be around to know if I was right in being relaxed on this subject, but then I have more important things to worry about (including whether the NY Yankees can beat Houston in the ALCS playoffs, assuming they meet!).

My Net/Net (As of Now!)

I am not a researcher or a scientist, and I recognize I know far less than all there is to know on this very complex topic, and I am not a ‘climate change denier’… but, after also reading a lot of material over the years from ‘the other side’ on this topic, I conclude it is monumentally blown out of proportion relative to those claiming: ‘the sky is falling and fast’!

• Read or skim the book by Steven Koonin: Unsettled: What Climate Science Tells Us, What It Doesn't, and Why It Matters /April 27, 2021; https://www.amazon.com/Unsettled-Climate-Science-Doesnt-Matters/dp/1950665798

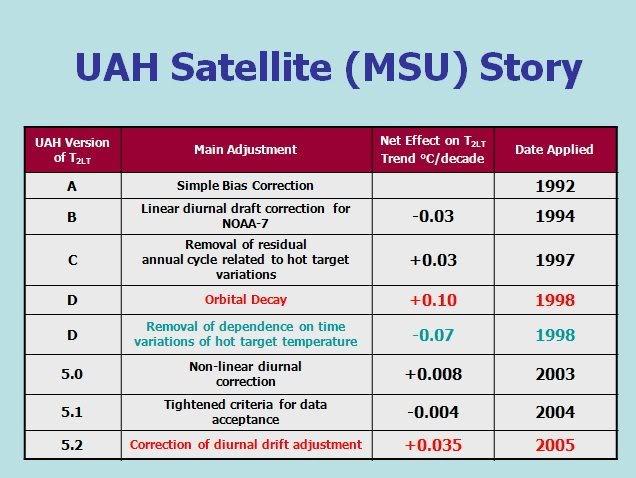

• Google ‘satellite measures of temperature’; also, very revealing… see one attachment as an example.

• Look at what is happening in the Netherlands and Sri Lanka! Adherence to UN and ESG mandates are starving countries; and it appears Canada is about to go over the edge!

• None of the climate models are accurate for a whole range of reasons; the most accurate oddly enough is the Russian model but that one is even wrong by orders of magnitude!

• My absolute favorite fact is that based on data from our own governmental observation satellites: the oceans have been rising over the last 15 years at the astonishing rate of 1/8th of an inch annually; and my elementary mathematics suggests that if this rate continues, the sea will rise by an inch sometime around 2030 and by a foot in the year 2118… so, no need to buy a lifeboat if you live in Miami, Manhattan, Boston, Los Angeles, or San Francisco!

• Attached is a recent article and a Research Report summary.

Probably the most damning is the Research Report comparison of the climate model predictions from 2000, pointing to 2020 versus the actual increase in temperature that has taken place in that timeframe (Pages 9-13). It's tough going and I suggest you just read the yellow areas on Page 9 (the Abstract and Introduction, very short) and the 2 Conclusions on Page 12. But the point is someone is going to the trouble to actually analyze this data on global warming coefficients!

My Observations and Thinking

In the 1970s Time Magazine ran a cover story about our entering a new Ice Age. Sometime in the early 1990s, I recall a climate scientist sounding the first warning about global warming and the potentially disastrous consequences. He specifically predicted high temperatures and massive floods in the early 2000’s. Of course, that did not occur; however, others picked up on his concern and began to drive it forward, with Al Gore being one of the primary voices of climate concern. He often cited the work in the 1990’s of a climate scientist at Penn State University who predicted a rapid increase in temperature, supposedly occurring in 2010 and, of course, this also did not occur.

Nonetheless many scientists from various disciplines also began to warn about global warming starting in the early 2000’s. It was this growing body of ‘scientific’ concern that stimulated Al Gore's concern and his subsequent movie. It would be useful for you to go back to that and review the apocalyptic pronouncements from that time; most of which predicted dire consequences, high temperatures, massive flooding, etc. which were to occur in 10 or 12 years, certainly by 2020. None of this even closely occurred to the extent they predicted.

That said, I was still generally aware of the calamities predicted by a large and diverse body of global researchers and scientists, even though their specific predictions did not take place in the time frame or to the extent that they predicted. As a result, I became a ‘very casual student’ of climate modeling.

Over the past 15 years climate modeling has become a popular practice in universities, think-tanks and governmental organizations around the globe. Similar to medical and other research (e.g., think-tanks, etc.) I recognized that some of the work may have been driven by folks looking for grants and money to keep them and their staff busy.

A climate model is basically a multivariate model in which the dependent variable is global temperature. All of these models try to identify the independent variables which drive change in global temperature. These independent variables range from parts per million of carbon dioxide in the atmosphere to sunspot activity, the distance of the earth from the sun, ocean temperatures, cloud cover, etc. The challenge of a multivariate model is first to identify all of the various independent variables affecting the climate and then to estimate the percent contribution to global warming made by a change in any of these independent variables. For example, what would be the coefficient of correlation for an increase in carbon dioxide parts per million to global warming?

You might find that an interesting cocktail party question to ask your friends “what is the coefficient of correlation between the increase in carbon dioxide parts per million and the effect on global warming?” I would be shocked if any of them even understood what you were saying and flabbergasted if they could give you an intelligent answer! There are dozens of these climate models. You might be surprised that none of them has been particularly accurate if we go back 12 years to 2010, for example, and look at the prediction that the models made for global warming in ten years, by 2020, and how accurate any given model would be.

An enterprising scientist did go back and collected the predictions from a score of climate models and found that a model by scientists from Moscow University was actually closer to being accurate than any of the other models. But the point is none were accurate! They all were wrong on the high side, dramatically over predicting the actual temperature in 2020. Part of the problem was that in several of those years, there was no increase in the global temperature at all. This caused great consternation among global warming believers and the scientific community!

A particularly interesting metric relates to the rise in the level of the ocean. Several different departments in the U.S. government actually measure this important number. You might be surprised to know, as stated earlier, that over the past 15 or so years the oceans have risen at the dramatic rate of 1/8th of an inch annually. This means that if the oceans continued to rise at that rate, we would see a rise of an inch in about 8 years, sometime around 2030, and a rise of a foot sometime around the year 2118. I suspect Barack Obama had seen this data and that's why he was comfortable in buying an oceanfront estate on Martha's Vineyard when his presidency ended!
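The extrapolation in the paragraph above is plain linear arithmetic, sketched here under the comment's own premises: a constant rate of 1/8 inch per year and a 2022 starting year (the year the comment was posted). The function name `year_reached` is hypothetical, and the constant rate is the comment's assumption, not a measured result.

```python
from fractions import Fraction

# Assumptions taken from the comment: a constant rise of 1/8 inch per year,
# counted from 2022 (the year the comment was posted).
RATE_IN_PER_YEAR = Fraction(1, 8)
START_YEAR = 2022


def year_reached(rise_inches):
    """Year in which the given rise (inches) is reached at the constant rate."""
    years_needed = Fraction(rise_inches) / RATE_IN_PER_YEAR
    return START_YEAR + years_needed


print(year_reached(1))   # one inch
print(year_reached(12))  # one foot
```

The result depends entirely on the rate staying fixed; any change in the rate over time changes both dates.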

The ‘Milankovitch Theory’ (named after the Serbian astrophysicist Milutin Milankovitch, who proposed that the seasonal and latitudinal variations of solar radiation hitting the earth in different places and at different times have the greatest impact on earth's changing climate patterns) states that as the earth proceeds on its orbit, and as the axis shifts, the earth warms and cools depending on where it is relative to the sun over 100,000-year and 40,000-year cycles. Milankovitch cycles are involved in long-term changes to Earth's climate as the cycles operate over timescales of tens of thousands or hundreds of thousands of years.

So, consider this: we did not suddenly get a lot more CO2 in the atmosphere this year than we had in 2019 (or other years!), but maybe the planet has shifted slightly as the Milankovitch Theory states, and is now a little closer to the sun, which is why we have the massive drought. Nothing man has done would suddenly make the drought so severe, but a shift in the axis or orbit bringing the planet a bit closer to the sun would. It just seems logical to me. NASA publicly says that the theory is accurate, so it seems that is the real cause; but the press and politicians will claim it is all man caused! You can shut down all oil production and junk all the vehicles, and it will not matter per the Theory! Before the mid-1800’s there were no factories or cars, but the earth cooled and warmed, glaciers formed and melted, and droughts and massive floods happened. The public is up against the education industrial complex of immense corruption!

In the various and universally wrong ‘climate models’, one of the ‘independent’ variables is similar to the Milankovitch Theory. Unfortunately, it is not to the advantage of the climate cabal to admit this or more importantly give it the importance it probably deserves.

People who are concerned about the climate often cite an ‘increase in forest fires, hurricanes, heat waves, etc. as proof of global warming’. And many climate deniers point out that most forest fires are proven to be caused by careless humans tossing cigarettes into a pile of leaves or leaving their campfire unattended, and that there has been a dramatic decrease globally in deaths caused by various climate factors. I often read from climate alarmists (journalists, politicians, friends, etc.) what I believe are ‘knee-jerk’ responses, since they are not supported by meaningful and relevant data/facts; see typical comments below:

• “The skeptical climate change deniers remind me of the doctors hired by the tobacco industry to refute the charges by the lung cancer physicians that tobacco smoke causes lung cancer. The planet is experiencing unprecedented extreme climate events: droughts, fires, floods etc. and the once in 500-year catastrophic climate event seems to be happening every other year. Slow motion disasters are very difficult to deal with politically. When a 200-mph hurricane hits the east coast and causes a trillion dollars in losses then will deal with it and then climate deniers will throw in the towel!”

These above comments may be right, but to date the forecasts on timing implications across all the models are wrong! It just ‘may be’ in 3, 10 or 50 years… or in 500-5000+ before the ‘sky is falling’ devastating events directly linked to climate occur. If some of the forecasts, models were even close to accuracy to date I would feel differently.

I do not deny there are climate related changes; I just don’t see any evidence their impact is anywhere near the professional researchers’ forecasts/models on their impact, or that they are ‘off the charts’ different from what has happened in the past 100-1000+ years.

But a larger question is “suppose various anthropogenic actions (e.g., chiefly environmental pollution and pollutants originating in human activity like anthropogenic emissions of sulfur dioxide) are causing global warming?”. What are they, who is doing it, and what do we do about it? The first thing one must do is recognize that this is a global problem and that therefore the actions of any one country have an effect on the overall climate depending upon its population and actions. Many in the United States focus intensely upon reducing carbon emissions in the U.S. when of course the U.S. is only 5% of the world population. We are however responsible for a disproportionate part of the global carbon footprint; we contribute about 12%. The good news is that the U.S. has dramatically reduced its share of the global carbon footprint over the past 20 years and has done so while dramatically increasing our GDP (up until the 1st Half of 2022).

Many factors have contributed to the relative reduction of the U.S. carbon footprint. Chief among these are much more efficient automobiles and the switch from coal-driven electric generation plants to those driven by natural gas, a much cleaner fossil fuel.

While the U.S. is reducing its carbon footprint more than any other country in the world, China has dramatically increased its carbon footprint and now contributes about 30% of the carbon expelled into the atmosphere. China is also building 100 coal-fired plants!

Additional facts, verified by multiple sources including SNOPES, the U.S. government, engineering firms, etc.:

• No big signatories to the Paris Accord are now complying; the U.S. is out-performing all of them.

• EU is building 28 new coal plants; Germany gets 40% of its power from 84 coal plants; Turkey is building 93 new coal plants, India 446, South Korea 26, Japan 45, China has 2363 coal plants and is building 1174 new ones; the U.S. has 15 and is building no new ones and will close about 15 coal plants.

• Real cost example: Windmills need power plants run on gas for backup; building one windmill needs 1100 tons of concrete & rebar, 370 tons of steel, 1000 lbs of mined minerals (e.g., rare earths, iron and copper) + very long transmission lines (lots of copper & rubber covering for those) + many transmission towers… rare earths come from the Uighur areas of China (who use slave labor), cobalt comes from places using child labor and use lots of oil to run required rock crushers... all to build one windmill! One windmill also has a back-up, inefficient, partially running, gas-powered generating plant to keep the grid functioning! To make enough power to really matter, we need millions of acres of land & water, filled with windmills which consume habitats & generate light distortions and some noise, which can create health issues for humans and animals living near a windmill (this leaves out thousands of dead eagles and other birds).

• So, if we want to decrease the carbon footprint on the assumption that this is what is driving the rise in the sea levels (see POV that sea levels are not rising at: www.tiktok.com/t/ZTRChoNTg) and any increase in global temperature, we need to figure out how to convince China, India and the rest of the world from fouling the air with fossil fuels. In fact, if the U.S. wanted to dramatically reduce its own carbon footprint, we would immediately begin building 30 new nuclear electrical generating plants around the country! France produces about 85% of its electrical power from its nuclear-driven generators. Separately, but related, do your own homework on fossil fuels (e.g., oil) versus electric; especially on the big-time move to electric and hybrid vehicles. Engineering analyses show you need to drive an electric car about 22 years (a hybrid car about 15-18 years) to breakeven on the savings versus the cost involved in using fossil fuels needed to manufacture, distribute and maintain an electric car! Also, see page 14 on the availability inside the U.S. of oil to offset what the U.S. purchases from the middle east and elsewhere, without building the Keystone pipeline from Canada.

Two 4-5-minute videos* on the climate change/CO2/new green deal issue, in my opinion, should be required viewing in every high school and college; minimally because they provide perspective and data on the ‘other’ side of the issue while the public gets bombarded almost daily by the ‘sky is falling now or soon’ side on climate change!

* https://www.prageru.com/video/is-there-really-a-climate-emergency and

https://www.prageru.com/video/climate-change-whats-so-alarming

- Models are unreliable

One Planet Only Forever at 14:26 PM on 5 December, 2022

This is new information related to the video by Spencer mentioned by EddieEvans @1312 and comments about it since then.

Roy Spencer has a November 19th 2022 blog posting titled "Canadian Summer Urban Heat Island Effects: Some Results in Alberta".

In the conclusion Spencer says:

"The issue is important because rational energy policy should be based upon reality, not perception. To the extent that global warming estimates are exaggerated, so will be energy policy decisions. As it is, there is evidence (e.g. here) that the climate models used to guide policy produce more warming than observed, especially in the summer when excess heat is of concern. If that observed warming is even less than being reported, then the climate models become increasingly irrelevant to energy policy decisions."

That is very similar to the wording by Spencer included in the comment by MA Rodger @1316. It appears to be Spencer's "New Trick" - seeking any bits of data evaluation to make-up a claim about model inaccuracy that is then claimed to mean that "Energy Policy" should be less aggressively ending fossil fuel use.

And the introduction of this blog post by Spencer makes it pretty clear he has made this line of investigation, evaluation and claim-making regarding "Energy Policy" his new focus.

- Models are unreliable

Bob Loblaw at 05:02 AM on 5 September, 2022

JohnCalvinNYU:

I'm really not sure just what definition of "accurate" you are using. If you are expecting it to be "perfect", then prepare to be disappointed. Science (and life in general) does not produce perfect results. Any scientific prediction, projection, estimate, etc. comes with some sort of range for the expected results - either implicitly, or explicitly.

You will often see this expressed as an indication of the "level of confidence" in a result. (This applies to any analysis, not just models.) In the most recent IPCC Summary for Policymakers, they state that they use the following terms (footnote 4, page 4):

Each finding is grounded in an evaluation of underlying evidence and agreement. A level of confidence is expressed using five qualifiers: very low, low, medium, high and very high, and typeset in italics, for example, medium confidence. The following terms have been used to indicate the assessed likelihood of an outcome or result: virtually certain 99–100% probability; very likely 90–100%; likely 66–100%; about as likely as not 33–66%; unlikely 0–33%; very unlikely 0–10%; and exceptionally unlikely 0–1%. Additional terms (extremely likely 95–100%; more likely than not >50–100%; and extremely unlikely 0–5%) are also used when appropriate. Assessed likelihood is typeset in italics, for example, very likely. This is consistent with AR5. In this Report, unless stated otherwise, square brackets [x to y] are used to provide the assessed very likely range, or 90% interval.
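As a rough illustration of how the likelihood terms quoted above nest inside one another, here is a minimal Python sketch. The terms and ranges are copied from the quoted footnote; the dictionary layout and the helper name `terms_for` are assumptions made for this example, not anything the IPCC publishes.

```python
# Likelihood terms and probability ranges as quoted from the IPCC footnote above.
# The dictionary layout and the helper name `terms_for` are illustrative assumptions.
LIKELIHOOD = {
    "virtually certain": (0.99, 1.00),
    "extremely likely": (0.95, 1.00),
    "very likely": (0.90, 1.00),
    "likely": (0.66, 1.00),
    "more likely than not": (0.50, 1.00),  # quoted as ">50-100%"; bound treated as inclusive here
    "about as likely as not": (0.33, 0.66),
    "unlikely": (0.00, 0.33),
    "very unlikely": (0.00, 0.10),
    "extremely unlikely": (0.00, 0.05),
    "exceptionally unlikely": (0.00, 0.01),
}


def terms_for(probability):
    """Return every term whose quoted range contains the given probability."""
    return [term for term, (low, high) in LIKELIHOOD.items()
            if low <= probability <= high]


print(terms_for(0.97))
```

Because the ranges overlap, a single probability usually matches several terms at once; the narrowest matching term is the most informative one.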

So, the logical answer to your question of why models are constantly being updated or improved is so that we can increase the accuracy of the models and increase our confidence in the results. Since nothing is perfect, there is always room for improvement - even if the current accuracy is good enough for a specific practical purpose.

Models also have a huge number of different outputs - temperature, precipitation, winds, pressure - basically if it is measured as "weather" then you can analyze the model output in the same way that you can analyze weather. A model can be very accurate for some outputs, and less accurate for others. It can be very accurate for some regions, and less accurate for others. It can be very accurate for some periods of geological time, and less accurate for others. The things it is accurate for can be used to guide policy, while the things we have less confidence in we may want to hedge our bets on.

Saying "none of the climate catastrophes predicted in the last 50 years" is such a vague claim. If you want to be at all convincing in your claim, you are going to have to actually provide specific examples of what predictions you are talking about, and provide links to accurate analyses that show these predictions to be in error. Climate models have long track records of accurate predictions.

Here at SkS, you can use the search box (upper left) to search for "lessons from past climate predictions" and find quite a few posts here that look at a variety of specific predictions. (Spoiler alert: you'll find a few posts in there that show some pretty inaccurate predictions from some of the key "contrarians" you might be a fan of.)

As for Lomborg: very little he says is accurate. Or if it is accurate, it omits other important variables to such an extent that his conclusions are inaccurate. I have no idea where I would find the article of his that you mention, and no desire to spend time trying to find it. If that is your source of your "none of the climate catastrophes" claim, then I repeat: you need to provide specific examples and something better than a link to a Lomborg opinion piece.

There have been reviews, etc. posted here of previous efforts by Lomborg, such as:

https://skepticalscience.com/open-letter-to-wsj-scientist-response-to-misleading-lomborg.html

https://skepticalscience.com/lomborg-WSJ-debunk-CSRRT.html

https://skepticalscience.com/lomborg-detailed-citation-analysis.html

...and Lomborg has a page over at DesmogBlog.

In short, you're going to have to do a lot better if you expect to make a convincing argument.

- Climate's changed before

HK at 04:47 AM on 29 January, 2022

How valid is the claim, in refuting man-made climate change, that the Earth's climate has changed before?

Your honor, I can’t be convicted of murder.

You see, people have been dying since before I was born.

So death is a natural thing, not caused by me. And probably nothing to worry about.

Plus, you can’t prove that I killed that guy.

Sure, I stabbed him, but all you can prove is that this caused localized cell death at the micro scale, not that it caused him to die.

People survive stabbings all the time, in fact, stabbing a scalpel in people is often healthy, doctors do it all the time!

I only stabbed 0.4% of his body, so it can’t be an issue, my stab is barely 3% of his body cavities.

And the models are so unreliable.

Doctors can’t even predict with 100% accuracy if someone will survive a surgery or not, how can they claim to suddenly know that this particular stab was bad?

Science has been wrong before!

So you see, I can’t be convicted of murder.

Now stop trying to push this radical anti-stabbing agenda.

- Models are unreliable

Bob Loblaw at 00:54 AM on 7 July, 2021

MAR:

This all hinges on what is meant by "calibration", and whether or not the parameters in a model are arbitrary.

Wiktionary defines "calibrate" as "To check or adjust by comparison with a standard." When discussing climate models, this implies that there is some adjustable parameter (or seven) or input that can be varied at will to create a desired output.

There are many problems with this argument [that climate models are "calibrated" to create a result]:

- What are we calibrating for? A global 3-d climate model has thousands (if not millions) of outputs. Global mean surface temperature is one simple statistical summary of model output, but the model has temperatures that vary spatially (in 3-d) and temporally. It also has precipitation, humidity, wind speed, pressure, cloud cover, surface evaporation rates, etc. There are seasonal patterns, and patterns over longer periods of time such as El Nino. All of these are inter-related, and they cannot be "calibrated" independently. Analyzing the output of a GCM is as complex as analyzing weather observations to determine climate.

- How many input parameters are devoid of physical meaning and can be changed arbitrarily? The more physically based the model is, the fewer arbitrary parameters there are. You can't simply decide that fresh snow will have an albedo of 0.4, or open water will evaporate at 30% of the potential evapotranspiration rate, just because it makes one output look better. So much of the input information is highly constrained by the need to use realistic values. All these have uncertainties, and part of the modelling process is to look at the effect of those uncertainties, but the value to use can be determined independently through measurement. It is not a case of choosing whatever you want.

So, robnyc987's claim that you can achieve 100% accuracy by "calibrating" a small set of parameters is bunkum. If climate models are so easy to "calibrate", then why do they show variations depending on whose model it is? Or depending on what the initial conditions are? That variability amongst models and model runs indicates uncertainty in the parameters, physics, and independent measurements of input variables - not "calibration".

Perhaps robnyc987 will return to provide more explanation of his claim, but I somehow doubt it.

- How much has nuclear testing contributed to global warming?

boston745 at 18:02 PM on 27 October, 2020

You failed to address a single observation I've made. You completely dismiss qualified scientists who disagree with the mainstream. And you put your faith in climate models that do not factor in things like clouds or the weakening magnetosphere. I'm sorry but it doesn't seem like you're after honest dialog.

Atmospheric scientists have learned a great deal in the past many decades about how clouds form and move in Earth's atmospheric circulation. Investigators now realize that traditional computer models of global climate have taken a rather simple view of clouds and their effects, partly because detailed global descriptions of clouds have been lacking, and partly because in the past the focus has been on short-term regional weather prediction rather than on long-term global climate prediction. To address today's concerns, we need to accumulate and analyze more and better data to improve our understanding of cloud processes and to increase the accuracy of our weather and climate models.

- The Trump EPA is vastly underestimating the cost of carbon dioxide pollution to society, new research finds

Postkey at 19:37 PM on 3 August, 2020

“If the predictions of Nordhaus’s Damage Function were true, then everyone—including Climate Change Believers (CCBs)—should just relax. An 8.5 percent fall in GDP is twice as bad as the “Great Recession”, as Americans call the 2008 crisis, which reduced real GDP by 4.2% peak to trough. But that happened in just under two years, so the annual decline in GDP was a very noticeable 2%. The 8.5% decline that Nordhaus predicts from a 6 degree increase in average global temperature (here CCDs will have to pretend that AGW is real) would take 130 years if nothing were done to attenuate Climate Change, according to Nordhaus’s model (see Figure 1). Spread over more than a century, that 8.5% fall would mean a decline in GDP growth of less than 0.1% per year. At the accuracy with which change in GDP is measured, that’s little better than rounding error. We should all just sit back and enjoy the extra warmth. . . .

In this post, Keen delves into DICE (“Dynamic Integrated model of Climate and the Economy”)—the mathematical model underpinning Nordhaus’ work and the flaws in Nordhaus’ methodologies.”
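The arithmetic in the quoted passage can be checked with a short Python sketch. The 8.5%, 130-year and 4.2% figures are taken from the quote; the variable names, and the choice to show both a simple average and a compound-rate version, are assumptions made for this illustration rather than anything from Keen or Nordhaus.

```python
# Figures taken from the quoted passage; variable names are illustrative.
total_decline = 0.085   # 8.5% fall in GDP attributed to Nordhaus's damage function
years = 130             # period over which the passage says the fall would occur

# Simple average: spread the total decline evenly across the period.
linear_rate = total_decline / years

# Compound equivalent: the constant annual rate r with (1 - r) ** years == 1 - total_decline.
compound_rate = 1 - (1 - total_decline) ** (1 / years)

# Great Recession comparison from the same passage: 4.2% over roughly two years.
recession_rate = 0.042 / 2

print(f"linear:    {linear_rate:.4%} per year")
print(f"compound:  {compound_rate:.4%} per year")
print(f"recession: {recession_rate:.1%} per year")
```

Either way of averaging gives an annual figure below 0.1%, which is the point the passage makes before going on to criticise the damage function itself.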

- Models are unreliable

ClimateDemon at 08:12 AM on 6 July, 2020

Tom Dayton @1224 and 1244

Thanks for your responses, and please understand that I am not trying to tear down the general integrity or accuracy of the current models. I do have serious concerns, however, as to how the climate science community is applying these models to conclude that humans are well on the way to toasting the entire earth with their CO2 emissions. Over the last 5-10 years, the only analysis I have been able to find that at least indirectly blames humans for the warming trend during the last two decades of the 20th century is the CO2 control-knob theory as explained in the Lacis et al. paper. I did find several different authors, including John Cook, but they all said pretty much the same thing.

Now, in your statement 1224, you claimed that this was not the only model and paper that predicts the CO2 control knob and AGW. So, what I need to know is what models and papers are out there that do predict AGW, and specifically who or what the AGW community (including politicians as well as scientists) is referring to when they make sweeping claims such as "scientists say that humans are causing global warming".

- Models are unreliable

scaddenp at 08:26 AM on 2 July, 2020

Deplore_This: Not exactly sure what you mean by "climate temperature models referenced by the IPCC" but I assume you mean the GCMs used by CMIP to predict future climate (of which temperature is but one variable that can be extracted). If this is the case, then note that these are the best means we have available to predict future climate, but by themselves say nothing about the validity of anthropogenic climate change. They could be completely wrong due to some fundamental algorithmic error which would affect their ability to infer the future, but say nothing about the accuracy of the physics of anthropogenic climate change.

The science does depend on other models (but not necessarily computer models), especially the radiative properties of gases and the Radiative Transfer Equations in particular. These have real-world applications and the detailed work was initially done by USAF because laser-guided bombs depend on them.

There are rather more direct ways of checking validity of science (eg empirical evidence). You can also directly measure the increase in surface irradiation. I rather suspect that you would agree that an increase in surface irradiation because the sun increased its output would warm the planet. The GHE can do that too.

- Climate sensitivity is low

RedBaron at 06:52 AM on 14 May, 2020

@381 Deplore This. Everyone has seen this argument. It was a well known published "merchant of doubt" argument full of logical fallacies and false premises designed to mislead people like you... which unfortunately seems to have worked so far. However, if you are honestly seeking a University level course, I suggest you change your google search terms to "statistical modeling" and/or "statistical modeling of climate change" and you will find a whole lot of universities in the world can help you.

And yes sensitivity is a factor in all of them. In some as a constant and in a few papers there are calls for sensitivity as a variable to fine tune accuracy.

However, the statement, "The theory is based upon modeling climate sensitivity to CO2" is false. This is called a false premise fallacy.

Actually global warming is based on empirical evidence.

- The never-ending RCP8.5 debate

nigelj at 07:35 AM on 12 January, 2020

Nick Palmer @53, yes we see at least some issues the same way, and I respect your views as well.

I looked at the video, and read Barlow's comment and your comment, and your debate between yourselves.

Overall I find your views the most credible. That's the short answer.

I don't see that you were being overly insulting. You called him a fanatic just to get his attention, perhaps borderline insulting. Having got his attention, you could have been clever and then said sorry didn't mean to be too abrasive :)

But Barlow made some good points as well. Like a lot of issues the truth looks like it may be somewhere in the middle between you guys, on some of this at least.

The thing is Barlow is an ecologist and I've noticed these sorts of people catastrophise about climate change a lot, probably to be expected as they fall in love with nature a bit. I actually respect that, but the risk is they lose objectivity and Barlow has.

Barlow is confused about the state of the science way back then. The state of the science in the 1970's and 1980's was definitely too uncertain for us to conclude we were warming the climate and should do something. The AGW signal was only confirmed in the early 1990's and even then it was not clear what the hell we should do. We had to have some real world evidence of some actual warming like this, plus detection of AGW, to confirm the theories.

But by the mid 1990s it was very clear we had a problem, and that it was serious enough to justify robust mitigation, and that we had some good mitigation options.

It's absurd of Barlow to say models in the 1970s were accurate, so action should have been taken back then. We only know they were accurate with the passing of time since then.

Regarding Barlow claiming the risks were downplayed for decades, he's claiming virtually a cover-up. This is a thorny issue. I don't really think they have been, on the whole. We just didn't know enough back then. It's not like the link between smoking and cancer which was quite compelling at even an early stage, so using scare tactics did make some sense.

However I do think the IPCC reports "lowball" some things a bit in recent years as I've mentioned. Whether this is political pressure or scientists being conservative is an interesting question.

Maybe I sit a little bit between you and Barlow on the whole thing. But my bottom line is if scientists put scary scenarios in front of the public, and they should, these scenarios need some pretty good evidential basis. They cannot just be speculation full of endless "what ifs".

Regarding the Australian bushfires. I don't think Climate Adam was hyping things. They definitely look very concerning. Yes, more area was burned in the past, but this latest fire season has just started. It's not unreasonable to suspect we are heading towards an absolute record setter, and climate change is a factor in it (which you did mention).

Of course your area calcs look robust to me and it was useful to mention those.

This is a tough one for me. I've sometimes done the same sort of thing as you. The hyper alarmists have sometimes made wild, exaggerated hand waving claims on various things and I have criticised their views and been labelled a luke warmer as a result which is so frustrating.

However in these posts I always mention that I think climate change is deadly serious and why, to try and get across that I'm not minimising the problem, but that we just need accuracy. I also make a point of posting alarmist science where I think it does actually have a robust basis.

Sorry for a rather nuanced reply but I'm just being honest. Hope it helps a bit.

- The five corrupt pillars of climate change denial

nigelj at 06:00 AM on 25 December, 2019

blub @1

"The models are not robust at all between about 2000-2015, but have been recalibrated because of the heating hiatus during this time. This is far from settled science, but only a handful of "real" climatologists not self proclaimed climate scientists even understand climate modeling correctly."

Adding a few things. Climate models can never be 100% robust over short time frames of about 15 years, because these timeframes are modulated by ocean variability, and this does not follow a completely regular cycle. For blub's information, you can't ever accurately predict something that is partly random. Climate models are intended to model long-term trends of 30 years and more, and do this well. Scientists are aware of natural variability and the very first IPCC reports stated there would be flat periods within a longer term warming trend. The slowdown after 1998 was such a flat period.
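The difference between 15-year and 30-year windows described above can be sketched with a toy series in Python. This uses synthetic numbers, not real temperature data: the trend, amplitude and period are arbitrary assumptions, and a perfectly regular sine wave is a simplification, since real ocean variability is irregular.

```python
import math

# Synthetic series, not real data: a steady warming trend plus a regular
# oscillation standing in for ocean variability. All numbers are assumptions.
TREND = 0.02      # degrees per year
AMPLITUDE = 0.15  # size of the oscillation, in degrees
PERIOD = 7        # years per oscillation


def temperature(year):
    return TREND * year + AMPLITUDE * math.sin(2 * math.pi * year / PERIOD)


def fitted_slope(values):
    """Ordinary least-squares slope of values against 0, 1, 2, ..."""
    n = len(values)
    mean_x = (n - 1) / 2
    mean_y = sum(values) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in enumerate(values))
    den = sum((x - mean_x) ** 2 for x in range(n))
    return num / den


def slope_range(window, total_years=90):
    """Smallest and largest fitted trend over every window of the given length."""
    series = [temperature(y) for y in range(total_years)]
    slopes = [fitted_slope(series[s:s + window])
              for s in range(total_years - window + 1)]
    return min(slopes), max(slopes)


for window in (15, 30):
    low, high = slope_range(window)
    print(f"{window}-year windows: trend between {low:.3f} and {high:.3f} per year")
```

In this toy setup the 15-year trends scatter much more widely around the underlying 0.02 per year than the 30-year trends do, even though the underlying trend never changes.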

Blub claims models have been recalibrated, but provides no evidence of this.

Blub's claims about a handful of so called real climate scientists are totally unsubstantiated arm waving.

Regarding the rest of his screed on natural variability. Cherrypicking a couple of scientific papers does not demonstrate anything. Nothing is provided to show there has been wide acceptance of these specific papers, and they do not falsify any of the models.

Models do reproduce ocean cycles, although not perfectly. However models have proven to have good accuracy at predicting multiple trends including temperatures here and here. Clearly although ocean cycles are not perfectly understood, their effects are overwhelmed by CO2.

- Climate denier scientists think these 5 arguments will persuade EU and UN leaders

Postkey at 08:17 AM on 14 September, 2019

If?

“If the predictions of Nordhaus’s Damage Function were true, then everyone—including Climate Change Believers (CCBs)—should just relax. An 8.5 percent fall in GDP is twice as bad as the “Great Recession”, as Americans call the 2008 crisis, which reduced real GDP by 4.2% peak to trough. But that happened in just under two years, so the annual decline in GDP was a very noticeable 2%. The 8.5% decline that Nordhaus predicts from a 6 degree increase in average global temperature (here CCDs will have to pretend that AGW is real) would take 130 years if nothing were done to attenuate Climate Change, according to Nordhaus’s model (see Figure 1). Spread over more than a century, that 8.5% fall would mean a decline in GDP growth of less than 0.1% per year. At the accuracy with which change in GDP is measured, that’s little better than rounding error. We should all just sit back and enjoy the extra warmth.”

- The human fingerprint in the daily cycle

scaddenp at 07:13 AM on 19 July, 2019

Schmidt is merely building on a long line of other papers and the paper is effectively a "quick and dirty" for the purposes of informing public discussion. From abstract:

"Much of the interest in these values is however due to an implicit assumption that these contributions are directly relevant for the question of climate sensitivity."

i.e. it doesn't have a lot of relevance to the practise of climate science. Actual model codes integrate over all gases and all absorption bands simultaneously. They reproduce observations of the radiation spectra with exquisite accuracy. Climate depends on how the system plays as a whole and the individual contributions of the gases in any particular atmospheric composition are of little practical interest.

- CO2 effect is saturated

jjworld at 15:09 PM on 1 July, 2019

I re-read my post to make sure I didn't misstate anything and you've proven one of my points regarding proponents being less reasonable. In your third sentence of a two post response you call my comments ignorant and worthless. So you didn't even bother to follow my statements to the conclusion. That makes you unreasonable.

My initial response was to the statement from michael sweet that readers should not "waste" time trying to "understand" the science. Seriously? What about that premise is not directly on topic with this or any thread? And what part of my critique about using the scientific method are you calling ignorant and worthless?

My second portion is precise and accurate if you were willing to process it before calling my efforts ignorant and worthless. The math can be accurate and weak. Those adjectives are not mutually exclusive. Taking time to explain the language to you is far more off topic than my original post. For example, if f(x) = ax + bx and both a and b are assumptions derived from estimates, the math is potentially correct but definitely weak. Before you attack someone who is trying to learn and instruct, perhaps you should be more REASONABLE and consider the position first. My advice is to research, be skeptical and prove each point. I was responding to another poster's advice to ignore the science and trust a group of people that are still learning and evolving their positions as much as any other research group. I don't need any examples to recommend that readers not follow bad advice.
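To make the f(x) = ax + bx example above concrete, here is a minimal Python sketch of how ranges on the two estimated coefficients carry through to the result. The coefficient ranges are hypothetical values chosen only for illustration; they do not come from the comment, the IPCC, or any dataset.

```python
# Hypothetical ranges for the two estimated coefficients; none of these
# numbers come from the comment or from any climate dataset.
A_RANGE = (0.8, 1.2)
B_RANGE = (1.5, 2.5)


def f(x, a, b):
    return a * x + b * x


def f_bounds(x, a_range=A_RANGE, b_range=B_RANGE):
    """Lowest and highest f(x) over the coefficient ranges, for x >= 0."""
    low = f(x, a_range[0], b_range[0])
    high = f(x, a_range[1], b_range[1])
    return low, high


low, high = f_bounds(10)
print(f"f(10) lies between {low:.1f} and {high:.1f}")
```

The formula itself is exact; the width of the output range depends entirely on how well a and b are pinned down.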

This post relates to saturation. The argument in this post is that saturation is not the issue since heat is being transferred to CO2 by convection. I'm not making up the topic, the 11 pages of comments brought up the topic as it relates to saturation. I'm directly on the topic if you cared enough to process my comments.

Since there is no mention of convection in the IPCC summary, I had to try and explain some of the details from the supporting references, all of which are already mentioned in this and other posts. So, I don't need to re-reference material here. I tried to be pithy since the post was getting long and you picked apart the abbreviated supporting points while leaving the premise untouched.

Thus far, this response is completely off topic since I'm having to defend my language which you chose to attack rather than consider. In an effort to get back on topic and honor the spirit of this site, I'll summarize as follows:

This topic suggests that the ONLY reason that the saturation argument doesn't hold is because of CO2 heat transfer via convection. For reference, read the post and the comments. I'm not arguing the math or the physics related to CO2 convection. I'm familiar with the calculations in a controlled environment. And despite your incorrect statement, the last several decades DO NOT correlate temperature and CO2. CO2 has steadily increased while the temperature spiked, leveled, and spiked again. Convection does not exhibit this pattern and cannot explain the temperature changes. In order for the convection argument to trump the saturation argument, the convection would need to involve something OTHER THAN CO2. Since the anthropogenic portion of CO2 in the atmosphere is less than 20ppm, there is no way CO2 can explain the temperature. I'm not arguing about the totality of climate change. I'm simply stating the physical limitations with the argument that CO2 convection can adequately explain the storage of IR heat in the atmosphere. The theory (and it is still a theory since the scientific method has not proven it), is that other GHGs are also contributing to convective heating. That discussion is off-topic for this post. But the IPCC math makes huge assumptions regarding the contribution of CO2 in the convective process. Are you suggesting that isn't true? And since the anthropogenic portion of CO2 is extremely small it cannot explain the totality of the temperature anomaly. The IPCC reports do cover this topic, but it goes beyond this thread's subject.

In summary, the convection story does NOT invalidate the saturation argument. This is the statement I was trying to make and encourage readers to research the topic and NOT trust the IPCC report just because one poster recommended it. Convection only explains the heat differential with one or more of the following:

1) Non CO2 GHGs are contributing more heat via convection than CO2. If this is true, then what are the ratios of those GHGs? The question remains unanswered without using a model to guesstimate the ratios. I'm a mathematician and don't support guesstimates as scientific. (the non CO2 GHG question is beyond the scope of this thread)

2) More CO2 is coming from natural sources or indirectly caused by temperature change (thawing tundra, etc). If this is true, the CO2 is coming from temperature changes and not causing temperature changes. (the CO2 is leading or following temperature change is beyond the scope of this thread)

Regardless, the IPCC reports support my statements. My conclusion is simple. Since the source of the heat cannot be explained without assumptions on CO2 sources that cannot yet be proven, the math is unable to predict future temperatures with any reasonable accuracy. From a mathematical standpoint, a range of 1.5C to 4.5C is not reasonable accuracy. In the lower range, we have little change to worry about. In the upper range, we have dramatic regional climate changes. The math has a 50% error range and cannot be relied upon to divert trillions of dollars.

Other than my last sentence, I don't think many would disagree with any of my assertions. This thread is titled "Is the CO2 effect saturated?". The response to the question is YES, CO2 is saturated as it relates to radiant heat, but convection provides the difference.

To the readers, research the IPCC reports in detail since the IPCC summary does NOT mention convection. Convective heat transfer is a linear temperature model unless pressure or concentrations change. The temperature changes do not follow the path of a convection heat transfer based on the current CO2 concentrations without additional interference. There is plenty of support for this statement on this site.

Consider this article:

https://www.pnas.org/content/111/30/10943

In order to explain the current temperature trajectory, assumptions have to be made for time of year, location, and concentration. Those variables create the huge range of error in the climate models that predict 1.5C to 4.5C over a doubling of CO2. In other words, the models that predict these temperature changes still need dramatic improvements since we simply do not know how to treat all these variables under so many different conditions.

I say again, the math is not necessarily flawed, but it is weak. It relies on huge assumptions that no applied mathematician would support since each assumption adds more room for error. In other words, we don't know! CO2 does appear to be saturated for radiated IR and trapped convection doesn't explain the temperature anomaly.

But I'm "ignorant" and my comments are "worthless" according to the "reasonable" Philippe Chantreau. If only we had a scientific method to help us formulate, test, and MODIFY our hypotheses.

- Models are unreliable

AFT17170 at 07:38 AM on 31 December, 2018

As I've stated in other posts, I am a non-scientist layman. I've gone through thousands of comments on this site and several articles on RealClimate. I just got done reading the article and comments over there on "30 years after Hansen’s testimony" here

Based on everything I've read so far, this is what I've internalized (please correct me as needed) — all climate models are obviously dependent upon the assumed inputs of both man-driven forcings and natural forcings, which the models use in physics-based simulations of the resulting outputs. Such models do not pretend to have intradecadal accuracy, rather the target is skill in projecting 30 year trends. Hansen was obviously required to guess those forcings, which he incorporated into 3 different scenarios. His man-driven forcings included not only CO2, but also N2O, CH4 and CFC. His CO2 forcings, in retrospect, were "pretty close" for Scenario B but he overshot on the others because humans actually tackled those other emissions. Gavin at RealClimate took a stab at adjusting Hansen's Scenario B and concluded that the adjusted results indicated a quite skillful model.

So my (perhaps dumb) question is — why not re-run the actual models with the actual man-made forcings that happened in those 3 decades, to see exactly how close the projections got for Scenario B? It seems like they might be "pretty darn close" and bolster the cause?

- Climate's changed before

Eclectic at 19:24 PM on 3 November, 2018

Waterguy13 @ #614 ,

What source do you base your comment on? The earlier mainstream climate models have done a fairly good job with their projections during the past 30 years or so. They can be criticized for minor inaccuracy, in that they A) somewhat overestimated the tropical mid-tropospheric "hot spot" , and B) underestimated arctic warming, and C) underestimated sea level rise.

But on the whole, they have done quite well. In comparison, Dr Lindzen's model has done appallingly badly [he predicted cooling!] . . . and Lindzen still has difficulty acknowledging the reality of the actual ongoing global warming.

Waterguy13 , you very much need to explain your strange comment.

- Climate impacts

nigelj at 06:50 AM on 2 November, 2018

Economics does not have the tools to make reliable long term predictions. Its history of prediction is poor, gdp estimates even a couple of years ahead lack accuracy, and economists never predicted the 2008 financial crash, or any crash really. This is because economics assumes people behave in simplistic ways when they don't, and because economists take a narrow view of climate costs. This is not to say their work is useless of course, but it suggests a risk that climate costs will more likely be underestimates, and that we need to be wary.

Economics measures things in terms of profits and gdp growth. Very little attention is given to measuring happiness or human well being. The mines will keep extracting minerals even in a heatwave, to an extent and at a cost, so gdp output might march on, but it's a miserable thing to live with heatwaves especially in countries that are already hot. Evidence suggests heatwaves may make parts of the world uninhabitable.

What projections are the economic models based on? The IPCC predict a worst case scenario of 10 degrees by 2100 if we go on burning fossil fuels. Economics has to consider worst case scenarios. Have they considered this, because my reading is they don't.

You don't even need an economic analysis to know worst case scenarios of 10 degrees will cost significantly.

How do you price climate tipping points? Its hard to even evaluate climate outcomes from those other than to say all the evidence suggests they will be mostly negative.

You have species loss potentially on a huge scale in worst case scenarios. How do you price this? A study I saw threw a rather arbitrary and small sum of money at this issue, but clearly many people consider loss of species a serious issue. Perhaps its an emotional thing, but this is not unimportant, and the natural world supplies approximately 50% of our pharmaceutical drugs.

Have they considered the costs of climate refugees? Causes would include heatwaves, crop losses, and loss of coastline, just for starters. Look at the problem we have right now with political refugees, and you can triple that. It's not just the economic cost either, it's the anxiety and tension.

Then there's the potential of refugees leading to global conflict. Of course economists aren't bothered by wars, because GDP typically increases, but the rest of us might be bothered.

Economics is a useful tool, but a very crude tool for evaluating the climate problem, and imho it almost certainly underestimates the impacts.

- Human CO2 is a tiny % of CO2 emissions

lonegull at 02:19 AM on 15 October, 2018

CO2 is .04% of the atmosphere, and humans account for 3.5% of that, which means man-made CO2 comes out to .0014%. CO2 lags temperature change in ice core samples by 800 years. Yet it is believed that man can emit that .0014% of CO2 for a mere 150 years and cause a 1.5+ degree change in climate temperature.

The weather cannot be predicted beyond several days with any accuracy, due to the complexity of the atmosphere. But we are told to believe that climate modeling predictions 50+ years into the future are scientific fact.

- Models are unreliable

michael sweet at 08:54 AM on 22 April, 2018

Your "luke warmers" used to argue that there was no warming. They changed their hats when it became impossible to continue with their past lies. They continue to lie to the public about the changes expected from warming. Look at the briefs submitted to the court by deniers in the case of young people suing the government. You cannot concede the possibility of sea level rise contained in the US Climate Change report which is described by its author as "very conservative".

The real difference between warmists and luke warmers is that the luke warmers are deliberately lying to the public about the dangers we face.

The models can be falsified in myriad ways. You are just making excuses. The problem is that you are listening to oil company lobbyists and not scientists. There is much more than temperature modeled. We can compare the models to ocean heat, atmospheric humidity, rainfall patterns, drought predictions, extreme storm predictions, floods, temperature changes in different areas, river flow, and many more. All these data points give us evidence of the accuracy of the models.

We already see the stronger storms, drought, flooding and sea level rise. You want to wait and see if it really gets as much worse as scientists have projected? You realize that it will continue to get worse after 2100 in any case? You want to wait until civilization collapses before you take any action?

The fact that Arrhenius in 1896 made projections that are still in the range of what is expected tells us that scientists are close to the mark. How long do you need to wait? It has already been 120 years, why would we need to wait another 30? James Hansen testified to Congress in 1989, 30 years ago. Fossil fuel interests used exactly your argument 30 years ago. Now that the future has arrived we see that Hansen's projections were very accurate, and you say we need to wait another 50 years? Does that make sense?

You are making excuses so that you can make money while everyone younger than you will suffer. 10 years ago scientists did not use the term catastrophic global warming, and the deniers (luke warmers do not exist) used that term to insult scientists. Today scientists warn of catastrophic damages and deniers say it will not be too bad. The consequences have gotten so much worse in the past ten years that it is no longer extreme for scientists to warn of catastrophe.

Scientists have worked for 150 years to develop the knowledge to project the future climate. You do not like the projections because it means you will make less money. You say we will just have to wait and see if it is really that bad. There is a consensus that warming over 2C could threaten the collapse of civilization and you say we should wait and see what happens at 4C? That is insane.

You produce no peer-reviewed papers to support your absurd claims. You dismiss Stern, Hansen and Jacobson with a handwave. You have only the opinion of a lawyer who invests heavily in fossil fuels. You ignore the evidence you are presented. Why do you waste our time here when you do not care about the evidence?

Arguing that you do not understand the consequences of material that you refuse to read is not rational.

Please do not insult us here again with your comparisons of "luke warmers" and scientists.

- Consensus on consensus

robert test at 07:45 AM on 21 January, 2018

A few thoughts about the importance of consensus.

1. William – it seems to me that your comment is self-contradictory. You say

a. Consensus has little to do with science.

b. Evidence is essential to science.

c. We should base our arguments on the evidence.

The use of the term ‘we’ indicates consensus concerning the evidence. Without this consensus there is no we who, you say, are to base arguments on the evidence.

The essence of science is the process of correction. As experiments are performed or as more data are gathered by a community of scientists, our understanding of physical processes increases, our instruments are improved and our measurements and our theories (i.e., models) become more precise.

Science is a communal enterprise. If you fail to get colleagues in your field to understand your experiments and theories, you are failing as a scientist. If your colleagues, assuming they have reputations as capable experimentalists, are unable to replicate your findings, you are failing as a scientist. It doesn’t mean that, in the end, their judgments will not be revised. It does mean, however, that the judgments of the scientific community (i.e., the consensus judgments of that community) are important to the process of the scientific enterprise.

A certain measure of disagreement within the scientific community is sometimes helpful. Not all consensus or agreement is important. But deviate too far from the consensus views of this community (reject the importance of things such as measurements, experiments, data, and the use of mathematics) and your ability to interact with the scientific community will come to an end. Science could not exist as an enterprise without shared views of the value of evidence, data, and instrumentation.

BBHY –

I am with you here. Evidence is only convincing if it is understood. I am not a scientist. I cannot claim to understand much at all beyond the introductory sentences of a science journal article.

I am no more capable of looking at the evidence for warming and arguing that this evidence is sufficient warrant to show that humans are causing warming than I can look at my x-rays and other medical evidence and claim that I need xyz surgery. I leave it to the scientific and medical experts to come to their conclusions. I would be a fool to disagree with the consensus of the scientific or the medical community.

William –

I agree with you on your views about rationality, mental short-cuts, biases etc. But the problem runs deeper. It affects scientists too. You say our scientific consensus is soundly based. How do we ever know this? There is no simple instrument that registers positively when consensus is soundly based.

How would we verify the accuracy of such an instrument?

Right now, the scientific consensus on AGW is meeting very little opposition from credible sources. All objections to the consensus are coming from opponents based on their political and economic interests. None of the objections are coming from credible scientific sources.

So, I would argue that we think that our scientific consensus is soundly based because we have a consensus concerning how to conduct scientific inquiry – we agree on the use of data, the use of various instruments to collect that data, the use of various mathematical methods to evaluate that data, and we agree on the importance of open inquiry. Based on this consensus concerning how the scientific enterprise is to be conducted, we can form a meta-consensus about the well-founded basis of climate science.

It's not quite like turtles all the way down, but it is turtles down a bit further than you suggest.

My point is no more than that consensus is important, and perhaps more important than it's been treated in the comments.

- 2018 SkS Weekly Climate Change & Global Warming Digest #1

rkrolph at 04:21 AM on 8 January, 2018

There was an article a couple of days ago by someone named Brandon Morse. Sorry I don't have a link. The title was "New Study Shows Alarmist Climate Data Based Off Faulty Science...Sorry Bill Nye"

It discussed a study by geoscientist Jeff Severinghaus at Scripps Institution of Oceanography that claimed a new way to measure ocean heat content by measuring gases trapped in ice cores. The article claims that the study shows oceans have warmed much less than previously thought, which puts all the alarmist climate models in doubt. It also quotes a scientist named William Happer, who criticizes the alarmist climate models' accuracy. The article seems full of denier-type talk, as it makes continuous jabs at Al Gore and Bill Nye as being non-scientists, but doesn't bother to provide any real climate scientist's take on the study.

But I am just wondering if anyone has any information on the validity of the study itself.

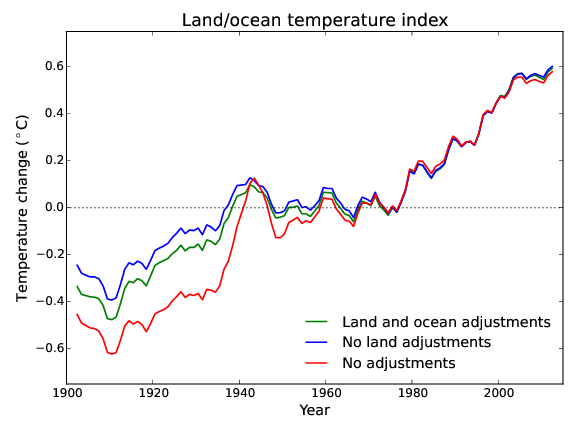

- Temp record is unreliable

randman at 08:42 AM on 25 September, 2017

Tom, this is the paper by Hansen with 288 Kelvin as the mean. I think you've already seen the press comments by Hansen and Jones in 1988 of 59 degrees F and "roughly 59 degrees" respectively, right?

LINK

Obviously regardless of looking at anomalies, there is a reason they believed the mean was 59 degrees. The fact climatologists like to look at anomalies does not change that, does it? Not seeing your point.

On a wider note, this appears to be a pattern. 15 degrees was later adjusted down to 14 degrees, which had the effect of making the then present temps appear warmer, whether correctly so or not.

More recently, we've seen satellite data that showed no sea level rise to speak of "adjusted", perhaps correctly so or not, to now show sea level rise.

http://www.nature.com/news/satellite-snafu-masked-true-sea-level-rise-for-decades-1.22312

Prior to that we saw the posited warming hiatus changed by some, with changes that included lowering the past means, among other things. One climatologist, Judith Curry, has somewhat famously complained about this. Some of her comments are here:

"This short paper in Science is not adequate to explain and explore the very large changes that have been made to the NOAA data set," she wrote. "The global surface temperature data sets are clearly a moving target. So while I'm sure this latest analysis from NOAA will be regarded as politically useful for the Obama Administration, I don't regard it as a particularly useful contribution to our scientific understanding of what is going on."

http://news.nationalgeographic.com/2015/06/150604-hiatus-climate-warming-temperature-denier-NOAA/

https://judithcurry.com/2015/07/09/recent-hiatus-caused-by-decadal-shift-in-indo-pacific-heating-2/

As I understand it, Curry was a proponent of AGW and perhaps still is in some respect, but has had problems with the way the data has been adjusted and the accuracy of the models among other things.

She's not the only scientist who raises these questions. So it's not just laymen like myself who wonder why there appears to be a pattern of data that does not line up with predictions simply being "adjusted." These adjustments are not just one-off things either, but a fairly consistent feature here.

- Climate scientists just debunked deniers' favorite argument

nigelj at 08:38 AM on 29 June, 2017

The models diverge from reality after about 2005, but only slightly. This is short term, so it is most likely short-term natural variation. As you say, it's not climate sensitivity and could be volcanic activity etc.

I would add that natural variation like ENSO or PDO cycles could be difficult to incorporate into models with 100% accuracy, as it's not perfectly regular, so you cannot read much into a divergence of temperatures over relatively short terms of up to about 25 years.

In contrast, sea level rise is slightly ahead of model estimates. Nothing from Christie on this. Again, there's so much going on that it's hard to make completely 100% accurate predictions, but outcomes can exceed predictions as well.

Do we do nothing on climate change because we don't as yet have 100% accuracy? It's like saying let's not treat this very sick patient, because we don't 100% understand how the body works, and can't 100% accurately predict outcomes of surgery or drugs. We would obviously treat the patient.

- Explainer: Dealing with the ‘loss and damage’ caused by climate change

nigelj at 11:15 AM on 21 June, 2017

WatrWise @11, your post is a very long list of rhetorical questions. I find such long lists of questions to be by definition content free, and extremely irritating, reminding me of aggressive lawyers, so it just gets my back up, and is not conducive to open and useful discussion.

One or two perceptive rhetorical questions can clarify, but your list of over 10 is just ridiculous. We are not your students, Mr / Ms WatrWise.

It's especially frustrating because I know a simple Google search would answer many of your questions, so why didn't you just do that first?

And most of your questions are off topic.

One point is worth comment because it demonstrates what I'm getting at. You say "Over 120 years and science cannot accurately predict climate change, even with evolving technology; why?"

If you had done a simple Google search, or taken a more relaxed approach to your writing, instead of trying to intimidate people with long lists, you would know that some climate influences are known to be chaotic or variable (like El Niño cycles). Models will therefore probably never be 100% accurate, no matter what technology is available; it is the same issue as predicting the outcomes of illness. But climate models have shown useful and reasonable levels of accuracy.