How reliable are climate models?

What the science says...


Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.


Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, one way or another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.
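The simplest kind of climate model sits at the "few equations" end of that range. Here is a sketch of a zero-dimensional energy balance model, which balances absorbed sunlight against emitted infrared using the Stefan-Boltzmann law. The solar constant, albedo and emissivity are assumed round numbers for illustration, not values taken from this article.

```python
# Zero-dimensional energy balance model: one equation, no geography, no weather.
# Assumed illustrative inputs, not taken from the article:
SOLAR_CONSTANT = 1361.0      # W/m^2, sunlight arriving at Earth's distance
ALBEDO = 0.30                # fraction of sunlight reflected back to space
SIGMA = 5.670374419e-8       # Stefan-Boltzmann constant, W/(m^2 K^4)


def equilibrium_temperature(solar=SOLAR_CONSTANT, albedo=ALBEDO, emissivity=1.0):
    """Temperature (K) at which emitted infrared balances absorbed sunlight."""
    # Earth intercepts sunlight on a disc but radiates from a whole sphere,
    # whose area is four times larger, hence the division by 4.
    absorbed = solar * (1.0 - albedo) / 4.0
    # Solve absorbed = emissivity * SIGMA * T**4 for T.
    return (absorbed / (emissivity * SIGMA)) ** 0.25


print(f"blackbody Earth: {equilibrium_temperature():.1f} K")
# An effective emissivity below 1 is a crude stand-in for the greenhouse effect.
print(f"emissivity 0.61: {equilibrium_temperature(emissivity=0.61):.1f} K")
```

The blackbody case comes out near 255 K. This toy has no oceans, clouds or circulation, and that missing complexity is what full climate models add.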

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Weather forecast models rarely extend even a fortnight ahead. Big difference. Climate trends deal with things such as temperature or sea-level changes over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system's heading.
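A minimal sketch with synthetic data shows why trends matter. A hypothetical steady warming of 0.02 degrees per year is buried under year-to-year noise much larger than the annual rise, and an ordinary least-squares fit still recovers the underlying trend. The numbers are invented for illustration and are not observations.

```python
import random


def linear_trend(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    covariance = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    variance = sum((x - mean_x) ** 2 for x in xs)
    return covariance / variance


# Synthetic, hypothetical data: a steady 0.02 degrees/year rise buried in
# year-to-year "weather" noise that is far larger than any single year's rise.
random.seed(42)
years = list(range(1981, 2021))
temps = [0.02 * (year - 1981) + random.gauss(0.0, 0.15) for year in years]

largest_jump = max(abs(b - a) for a, b in zip(temps, temps[1:]))
trend = linear_trend(years, temps)
print(f"largest single-year jump: {largest_jump:.2f} degrees")
print(f"fitted trend: {trend * 10:.2f} degrees per decade (true value 0.20)")
```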

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then either because we made observations or because evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test. We can therefore expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.


Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.
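The 30-year averaging can be sketched as a running mean. The series below is hypothetical: a flat climate with one extreme year, which shows how little a single event shifts a 30-year average.

```python
def running_mean(values, window=30):
    """Mean of every consecutive `window`-length stretch of `values`."""
    if not 0 < window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    total = sum(values[:window])
    means = [total / window]
    for i in range(window, len(values)):
        # Slide the window one step: add the newest value, drop the oldest.
        total += values[i] - values[i - window]
        means.append(total / window)
    return means


# Hypothetical annual means: a flat 14.0 degrees with one extreme year at 17.0.
annual = [14.0] * 60
annual[45] = 17.0
climate = running_mean(annual)
print(f"single-year spike: {max(annual) - 14.0:.1f} degrees")
print(f"effect on 30-year mean: {max(climate) - 14.0:.2f} degrees")
```

The three-degree spike shifts the 30-year mean by only 0.1 degrees (3/30).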

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.
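Here is a minimal sketch of how a hindcast might be scored, using hypothetical anomaly values. The root-mean-square error (RMSE) measures how far the hindcast is from what was observed. A mean-squared-error skill score then compares that error with a naive reference that assumes nothing changes after the starting year. The numbers are invented, and real evaluations use many variables, regions and periods.

```python
import math


def rmse(predicted, observed):
    """Root-mean-square difference between two equal-length series."""
    squared = [(p - o) ** 2 for p, o in zip(predicted, observed)]
    return math.sqrt(sum(squared) / len(squared))


def skill_score(predicted, observed, reference):
    """MSE skill score: 1 is perfect, 0 is no better than the reference, below 0 is worse."""
    return 1.0 - rmse(predicted, observed) ** 2 / rmse(reference, observed) ** 2


# Hypothetical temperature anomalies (degrees) over six periods.
observed = [0.00, 0.10, 0.05, 0.20, 0.25, 0.30]
hindcast = [0.00, 0.06, 0.12, 0.18, 0.24, 0.30]
no_change = [observed[0]] * len(observed)   # naive baseline: the start year persists

print(f"hindcast RMSE: {rmse(hindcast, observed):.3f}")
print(f"skill vs. no-change baseline: {skill_score(hindcast, observed, no_change):.2f}")
```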

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate to explain temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.
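In hindcasts like these, CO2 enters through its radiative forcing. A widely used simplified expression (Myhre et al. 1998, adopted in the IPCC Third Assessment Report) is ΔF = 5.35 ln(C/C0) W/m². The sketch below evaluates it. The sensitivity parameter that turns forcing into equilibrium warming is an assumed illustrative value, not a result from this article.

```python
import math


def co2_forcing(concentration_ppm, baseline_ppm=280.0):
    """Simplified CO2 radiative forcing in W/m^2: 5.35 * ln(C / C0)."""
    return 5.35 * math.log(concentration_ppm / baseline_ppm)


# Assumed sensitivity parameter (K per W/m^2), chosen only for illustration.
SENSITIVITY = 0.8

for ppm in (280.0, 420.0, 560.0):
    forcing = co2_forcing(ppm)
    print(f"{ppm:5.0f} ppm: forcing {forcing:4.2f} W/m^2, "
          f"equilibrium warming ~{SENSITIVITY * forcing:.1f} K")
```

Because the dependence is logarithmic, each doubling of CO2 adds the same forcing, about 3.7 W/m².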

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.
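The Pinatubo test can be mimicked, very crudely, with a one-box energy balance model, C dT/dt = F(t) − λT. Here C is an effective heat capacity and λ a feedback parameter. Both values, and the shape of the volcanic forcing pulse, are assumptions chosen for illustration. Real models are driven by observed aerosol data and resolve far more physics.

```python
import math


def one_box_response(forcing, years, dt=0.01, heat_capacity=8.0, feedback=1.3):
    """Integrate C dT/dt = F(t) - feedback*T with forward Euler.

    heat_capacity is in W yr m^-2 K^-1 and feedback in W m^-2 K^-1 (assumed values).
    Returns lists of times (years) and temperature anomalies (K).
    """
    steps = int(round(years / dt))
    t, temp = 0.0, 0.0
    times, temps = [t], [temp]
    for _ in range(steps):
        temp += dt * (forcing(t) - feedback * temp) / heat_capacity
        t += dt
        times.append(t)
        temps.append(temp)
    return times, temps


def volcanic_forcing(t):
    """Hypothetical aerosol pulse: -3 W/m^2 at eruption, decaying with a 1-year e-folding time."""
    return -3.0 * math.exp(-t / 1.0)


times, temps = one_box_response(volcanic_forcing, years=6.0)
coldest = min(range(len(temps)), key=temps.__getitem__)
print(f"peak cooling {temps[coldest]:.2f} K about {times[coldest]:.1f} years after the eruption")
```

The cooling peaks a couple of years after the pulse and then slowly recovers, because the forcing fades faster than the system can respond.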

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."

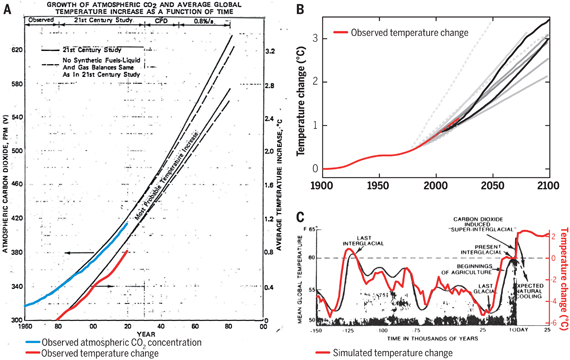
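One simplified way to decide whether a projection is "indistinguishable" from what occurred is sketched below. A projected trend counts as consistent if it falls inside the observed trend's approximate 95% interval (±1.96 standard errors). Hausfather et al. used a more careful procedure, including a comparison that accounts for mismatches between projected and actual forcings. This sketch also ignores autocorrelation, which would widen the interval. The "observations" are synthetic and the two projected trends are hypothetical.

```python
import math
import random


def trend_and_standard_error(xs, ys):
    """OLS slope and its standard error (assumes independent residuals)."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sxx
    intercept = mean_y - slope * mean_x
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    standard_error = math.sqrt(sum(r * r for r in residuals) / (n - 2) / sxx)
    return slope, standard_error


def is_consistent(projected_trend, observed_trend, observed_se, z=1.96):
    """True if the projected trend lies inside the observed trend's ~95% interval."""
    return abs(projected_trend - observed_trend) <= z * observed_se


# Hypothetical "observations": synthetic data, not a real temperature record.
random.seed(7)
years = list(range(1980, 2020))
obs = [0.018 * (y - 1980) + random.gauss(0.0, 0.1) for y in years]
obs_trend, obs_se = trend_and_standard_error(years, obs)

for name, projected in (("projection A", 0.019), ("projection B", 0.035)):
    verdict = "consistent" if is_consistent(projected, obs_trend, obs_se) else "inconsistent"
    print(f"{name}: {projected:.3f}/yr vs observed {obs_trend:.4f} +/- {1.96 * obs_se:.4f} -> {verdict}")
```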

Talking of empirical evidence, you may be surprised to know that the huge fossil fuel corporation Exxon's own scientists knew all about climate change, all along. A recent study of their own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We published a blog post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worthy of part-quotation:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

Arguments


Not if they bothered to read the whole paper instead of just quote mining. How about this quote, then:

"For example, the forced trends in models are modulated up and down by simulated sequences of ENSO events, which are not expected to coincide with the observed sequence of such events."

What the modellers firmly state is that the models have no skill at decadal-level prediction. They do not predict the timing of ENSO events, and trends are modified by this long La Niña/neutral period.

A better question is to ask what skill models do have. If you don't trust models, then you must instead rely on simpler means to guide your policy. The verification of AGW does not depend on models, so by what means would you guess the effect? The models, despite their flaws, remain the very best means we have of predicting long-term changes to the climate.

This comment is a continuation of a conversation that started on the Falsifiability thread; this continuation is more appropriate on this Models Are Unreliable thread.

PanicBusiness: I can say that evaluating GCMs' temperature projections requires evaluating the GCMs' hindcasts rather than forecasts, when the hindcast execution differs from forecast execution only in the hindcast having the actual values of forcings--at least solar forcing, greenhouse gases (natural and artificial), and aerosols (natural and artificial). That is because GCMs' value in "predicting" temperature does not include predicting those forcings. Instead, GCMs are tools for predicting temperature given specific trajectories of forcings. Modelers run GCMs separate times for separate scenarios of forcings. GCMs are valuable if they "sufficiently" accurately predict temperature for a given scenario, when "sufficient" means that scenario is useful for some purpose such as one input in policy decisions.

My other requirement for evaluating GCMs is that even within an accurate scenario of forcings, that the short-term noise be ignored. Perhaps the most important known source of that noise is ENSO. ENSO causes short term increases in warming and short term decreases in warming, but overall balances out to a net zero change, meaning it is noise on top of the long term temperature trend signal. You can do that by comparing the observed temperature trend to the range of the model run result trends rather than to the trend that is the mean of the individual runs. In GCM trend charts sometimes those individual model runs are shown as skinny lines, as in the "AR4 Models" graph in the "Further Reading" green box below the original post. (Unfortunately, in many graphs those skinny lines are replaced by a block of gray, which easily can be misinterpreted to mean a genuine probabilistic confidence interval around the mean trend.) Actual temperature is expected to not follow the mean trend line! Actual temperature is expected instead to be jagged like any one of those skinny model run lines. The GCMs do a good job of predicting that ENSO events occur and that they average out to zero, but a poor job at predicting when they occur. The mismatches in timing across model runs get averaged out by the model run ensemble mean, leading easily to the misinterpretation that the models project a trend without that jaggedness.
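The comparison described above can be sketched with a synthetic ensemble: compute the trend of each individual run, and check an observed trend against the spread of those run trends rather than against the ensemble-mean line. Each hypothetical run is a forced linear trend plus random ENSO-like noise. All numbers are assumptions for illustration. The sketch uses Python's statistics.quantiles (Python 3.8+).

```python
import random
import statistics


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    mean_x = statistics.mean(xs)
    mean_y = statistics.mean(ys)
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def synthetic_run(years, forced_trend, noise_sd, rng):
    """Hypothetical model run: forced linear warming plus random ENSO-like noise."""
    return [forced_trend * (y - years[0]) + rng.gauss(0.0, noise_sd) for y in years]


rng = random.Random(1)
years = list(range(1998, 2013))   # a short 15-year window, where noise matters most
runs = [synthetic_run(years, 0.02, 0.12, rng) for _ in range(100)]

run_trends = [ols_slope(years, run) for run in runs]
cuts = statistics.quantiles(run_trends, n=20)   # 19 cut points: 5%, 10%, ..., 95%
low, high = cuts[0], cuts[-1]

# The ensemble mean averages away the mistimed "ENSO" wiggles of individual runs.
ensemble_mean = [statistics.mean(values) for values in zip(*runs)]
wiggle_single = statistics.stdev([b - a for a, b in zip(runs[0], runs[0][1:])])
wiggle_mean = statistics.stdev([b - a for a, b in zip(ensemble_mean, ensemble_mean[1:])])

observed_trend = 0.012   # hypothetical observed trend, e.g. after a run of La Nina years
print(f"5-95% range of run trends: {low:.4f} to {high:.4f} per year")
print(f"year-to-year wiggle: single run {wiggle_single:.3f}, ensemble mean {wiggle_mean:.3f}")
print("observed trend inside model range" if low <= observed_trend <= high
      else "observed trend outside model range")
```

The single run is jagged and the ensemble mean is smooth, which is why the observed record should be compared with the spread of runs.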

Another way to see past ENSO and to match observed forcings is to statistically adjust the GCMs' projections for those factors. That approach has been taken for observations rather than models by Foster and Rahmstorf. That approach just now has been taken for model projections by Gavin Schmidt, Drew Shindell, and Kostas Tsigaridis--paywalled, but one of their figures has been posted by HotWhopper. Doing so shows that observations are well within the range of model runs.
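Here is a minimal sketch of that kind of statistical adjustment, in the spirit of Foster and Rahmstorf but much simplified. It regresses temperature on time plus an ENSO index, then subtracts the fitted ENSO contribution. The data are synthetic. The published analysis also included volcanic aerosols and solar activity, with time lags, which this sketch omits.

```python
import numpy as np

# Synthetic, hypothetical data: warming trend + ENSO influence + other noise.
rng = np.random.default_rng(0)
years = np.arange(1979, 2019, dtype=float)
enso_index = rng.normal(0.0, 1.0, years.size)          # stand-in for an ENSO index
temps = 0.018 * (years - years[0]) + 0.1 * enso_index + rng.normal(0.0, 0.05, years.size)

# Multiple linear regression: temps ~ intercept + trend * time + b * ENSO.
design = np.column_stack([np.ones_like(years), years - years[0], enso_index])
coefs, *_ = np.linalg.lstsq(design, temps, rcond=None)
intercept, trend, enso_coef = coefs

# "Adjusted" series: remove the fitted ENSO contribution, leaving trend plus residual noise.
adjusted = temps - enso_coef * enso_index

print(f"estimated trend: {trend * 10:.3f} per decade; ENSO coefficient: {enso_coef:.3f}")
```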

PanicBusiness, you wrote on another thread:

"The CAGW community" specifically disclaims the ability to predict temperatures for five year and even ten year spans. That's weather, not climate. So you've set up a strawman if you mean you want predictions from now for the next five years.

If instead you mean that in five years the trend over the previous 30 years (25 years ago from now, plus 5 years into the future) will be below the GCMs' projections, then first you will need to verify that result after having used the models to hindcast using the actual forcings during that period (Sun, aerosols, and greenhouse gases), or at least will have to statistically adjust the model projections to accommodate the actual forcings, as was done by Schmidt, Shindell, and Tsigaridis. To be thorough you should remove ENSO as well, though over a 30-year period it should average out to about zero. When I say 30 years, I mean really 30 years. Focusing on the last five years of a 30-year period is just looking at weather, so if the projections were within range for 29 years and in the 30th year dipped below the 90% range, you can't yell about that 30th year as if that is climate.

If after doing all that, five years from now the 30-year trend is below the 90% range of the model projections, then I would say that the projections were too high and that policies depending on those projections need to be modified. But the modifications of policy would not be to assume the models are "falsified" in the sense that they are useless. Instead, policies would need to be reworked to suit projections that are lower than those original projections, but lower only as much as indicated by the difference from the observations.

PanicBusiness, you wrote:

It seems you have not done much research. The IPCC has produced its AR5 report, containing those projections. One thing you absolutely must take into account is that the projections depend on assumptions about forcings such as greenhouse gas emissions, aerosols from volcanoes and humans, and solar intensity. Those are uncertain. We can't model each one of the infinite scenarios of forcings, so the IPCC defined a few scenarios that span the range of reasonable expectations. GP Wayne has written an excellent explanation of the greenhouse gas emission aspects of those scenarios. How well the models project temperature depends in large part on how well the real-world forcings match each of the scenarios. Even the best-matching scenario will not assume exactly the same forcings as happened in the real world. That is not an excuse; it is as unavoidable a problem as the traffic conditions that will actually occur during your drive to work, compared with the scenarios of possible traffic conditions you considered beforehand to decide when to leave for work.

The projections themselves are in the AR5. Projections from 2016 to 2050 are in the Near Term chapter; see figure 11.9. Projections beyond that are in the Long Term chapter.

Obviously not in the format I wanted, and it is very hard to infer actual confidence intervals, as highlighted in 12.2.3:

But it does provide some predictions. The problem remains that it will still be very hard to publicly demonstrate the strengths and weaknesses of this report. The reason is that it is nearly impossible to recreate the predictions for a quantitatively defined scenario. Later it says explicitly that I will not get what I wanted. (But it is great that they acknowledge the need to have it.)

But I may not need it after all. If there is no significant warming in the next 10 years, it will cast serious doubt on CAGW scenarios.

Since the science never refers to "CAGW", perhaps you had better define it for us? And of course you are going to go on record as changing your mind if there is seriously significant warming in the next 5 years? (I think there is a high likelihood of El Niño in that period.) Or are you firmly of the opinion that the surface temperature record has nothing to do with ENSO modes?

But this picture of the models compared to actual temperatures appears to support the view that the AGW threat is less imminent than AGWists used to think. If there is no significant warming in the next few years, it suggests that high sensitivity AGW supporters are either extremely unlucky (in the sense that an implausible scenario happens) or wrong.

As seen in IPCC AR5


"If" is a lovely word, isn't it?

PB: "But I may not need it after all If there will be no significant warming in the next 10 years it will cast serious doubt on CAGW scenarios."

Yes, and if we find out that aliens have been manipulating our instruments, that will make a big difference as well. Perhaps you'll agree that such a scenario is unlikely. Upon what basis do you imply that "no significant warming" is likely? What model are you using, and is it "falsifiable" as you define it?

PanicBusiness... Curious if there was a reason you omitted the lower panel of the figure.

Also wondering why you would link to a tinypic without citing the actual location of the source material. This is located in AR5, Chapter 11, Fig 11.25.

What you fail to grasp is that there are a number of things that could be wrong with this. Models could be running hot. Surface temperature readings could be reading low (poor polar coverage). More heat may be going into the deep oceans than anticipated. There may be an undercounting of volcanic activity. There may be an undercounting of industrial aerosols.

What we're likely to find is that it is some combination of these things. The problem for you is that none of these would invalidate the models, since models are just a function of their inputs.

Ultimately, what doesn't change is the fact that we have a high level of scientific understanding regarding man-made greenhouse gases. The change in radiative forcing from GHGs relative to natural radiative forcing is large. That gives scientists a high level of confidence that we are warming the planet in a very serious way, regardless of how the models may perform on a short-term basis.

Interesting. Here's the passage right next to Fig 11.25.

PanicBusiness @682.

To truly get a handle on what you are on about, what would you consider defines "High sensitivity AGW supporters "?

And regarding the part of AR5 Figure 11.25 that you pasted in the thread above: is this not what you have requested? A projection based on current climate science that you can compare to your own particular view that it is "very likely that in the coming five years there will be no significant warming or there will even be significant cooling."? Indeed, if you examine Figure 11.25 you will find it is projecting a global temperature rise of 0.13°C to 0.5°C/decade averaged over the next two decades.

And regarding your comment that "High sensitivity AGW supporters are either extremely unlucky(in a sense that an implausible scenario happens) or wrong.": what you describe as an "implausible scenario" is presently explainable by the recent run of negative ENSO conditions. The underlying global temperature rise remains ~0.2°C/decade, which does not as of today indicate any "unlucky" 'hiatus' unless it is an accelerating rise in temperature that is being projected.

Of course, climate science is expecting such an acceleration, that being evident in AR5 Figure 11.25. However, talk of acceleration may not be very helpful for somebody still grappling with the concept of average global surface temperatures getting higher with time. Where many have difficulty when they reflect on global climate is the vast size of the system under examination. It functions on a different timescale from the one we humans are used to, so it will not give definitive answers on the basis of 5 or 10 years of data.

All: PanicBusiness has been banned from further posting on the SkS website. The person behind the PanicBusiness screen name is the same person who was behind the Elephant In The Room screen name. Sock puppetry is strictly prohibited by the SkS Comments Policy. Persons engaging in sock puppetry automatically lose their posting privileges.

Can someone give me some information concerning the paper by John C. Fyfe and Nathan P. Gillett, which claims that global warming over the past 20 years is significantly less than that calculated from 117 simulations of the climate?

I asked this question because I posted the following in our newspaper.

The letter writer wrote: " Patrick Moore, co-founder of Greenpeace, the international n February, he spoke before Congress about the futility of relying on computer models to predict the future."

I wrote, "But computer models predicted our current state of warming more than 30 years ago when winter temperatures were consistently sub zero around the nation. They predicted sea level rise. They identify trends not year to year predictions."

https://www.skepticalscience.com/climate-models.htm

This is a reply I received.

"But computer models predicted our current state of warming more than 30 years ago..."

No, they haven't. Please read:

“Recent observed and simulated warming” by John C. Fyfe & Nathan P. Gillett published in Nature Climate Change 4, 150–151 (2014) doi:10.1038/nclimate2111 Published online 26 February 2014"

I tried to find some discussion here, at RealClimate and at Tamino's blog, and didn't have any success.

(Rob P) - The climate model simulations in CMIP5 do indeed show greater warming than is observed. But the simulations use projected forcings from either 2000 or 2005 onwards, rather than observed forcings. The animation below shows what happens when the models are based on what actually happened to the climate system - well, our best estimate so far anyway.

See this post: Climate Models Show Remarkable Agreement with Recent Surface Warming on Schmidt et al (2014).

Re Stranger at 02:28 AM on 30 April, 2014

Try this. Not very conclusive, but it has some preliminary hints.

Thanks Alexandre, but I was hoping for something more that I could sink my teeth into.

Just a different scientific perspective on modeling from another arena...

Semiconductor device physics is used to model the behavior of transistors, which is key to driving all of the small-scale electronics that run today's devices. These are complicated models that are relied on by designers (like myself) to create working circuits. If the models are wrong, then some or all of the millions of transistors and other devices on the chips don't work. This is modeling. The reason it works is that not only does it predict past and future behavior, but the underlying science for why the models are accurate is understood in extreme detail.

As a scientist, what is missing for me in climate modeling is the actual scientific understanding of how climate works. Understanding this is a tall order; you would think that some humility would exist in the climate modeling community due to their understandable ignorance about something as complicated as the climate of a planet over long periods.

(-snip-) Climatology is a relatively young endeavor and a worthwhile one but it has a long way to go to be considered settled and understood.

[Dikran Marsupial] Inflammatory tone snipped; suggesting that the climate modelling community lacks humility is also sailing close to the wind. In my experience, climatologists are only too happy to discuss the shortcomings of the models; for example, see the last paragraph of this RealClimate article. Please read the comments policy and abide by it. As you are new to SkS, I have snipped your post rather than simply deleting it; however, moderation is an onerous task, and this will not generally be the case in future.

marisman @692... You're making several common mistakes regarding climate modeling and climate science.

First, climate science is not young. It dates back nearly 150 years. It's a well developed field with well in excess of 100,000 published research papers, and 30,000+ active climate researchers today. The fundamental physics is very much settled science. There are uncertainties but those are generally constrained through other areas of research. For instance, we have high uncertainty on cloud responses to changes in surface temperature, but we also have paleodata that shows us how the planet has responded in the past to changes in forcing, thus we can assume the planet will behave at least fairly similarly today.

Second, it sounds to me like you're making huge overreaching generalizations about climate modeling when you don't even have a basic understanding of how climate modeling differs from your own field. Climate modeling is a boundary-conditions problem rather than an initial-conditions problem. Climate modelers do not pretend they are creating a perfect model of how weather will progress over time, leading to climate. Rather, climate models test responses to changes in forcing and project (rather than predict) what might occur over many decades. They do not pretend to predict climatic changes from year to year or even on decadal scales.

Third, you make an error in stating that modelers don't have a scientific understanding of how climate works. The link below is to Dr Gavin Schmidt discussing how models are created. Maybe instead of making such sweeping claims about a field of science where you have little understanding, you can take a moment to start to understand what climate modelers actually do.

Dr Gavin Schmidt, TED Talk
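The boundary-value versus initial-value distinction drawn in the comment above can be illustrated with the classic Lorenz (1963) system. This is a three-variable toy model of convection, not a climate model. Two runs started a billionth apart soon follow completely different trajectories, so their "weather" is unpredictable in detail. Their long-run average of z, the "climate" of the toy system, still comes out nearly the same. The parameters are the textbook values σ = 10, ρ = 28, β = 8/3. The step size and run length are assumptions.

```python
def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Time derivative of the Lorenz-63 system with its classic parameters."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state one step with the classical fourth-order Runge-Kutta method."""
    def shifted(base, slope, h):
        return tuple(b + h * s for b, s in zip(base, slope))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def z_history(initial, steps=100_000, dt=0.01, spinup=1_000):
    """Integrate from `initial` and return z after discarding a spin-up period."""
    state, zs = initial, []
    for i in range(steps):
        state = rk4_step(state, dt)
        if i >= spinup:
            zs.append(state[2])
    return zs


# Two runs whose starting points differ by one part in a billion.
run_a = z_history((1.0, 1.0, 1.0))
run_b = z_history((1.0, 1.0, 1.0 + 1e-9))

max_gap = max(abs(a - b) for a, b in zip(run_a, run_b))
mean_a, mean_b = sum(run_a) / len(run_a), sum(run_b) / len(run_b)
print(f"largest instantaneous difference in z: {max_gap:.1f}")
print(f"long-run mean z: {mean_a:.2f} vs {mean_b:.2f}")
```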

Listening to Dr. Gavin Schmidt speak, I notice he spends a fair amount of time talking about how complex the problem is. I agree. That is my point entirely. While I don't study climatology, I do understand what you do. I also understand computer modeling. It matters little whether you are modeling semiconductor physics, planetary motion, human intelligence, or the Earth's climate. Many of the same principles and limitations apply.

I believe a better solution to the modeling problem is to stop making it the all-encompassing model that Dr. Schmidt argues in favor of. He says the problem cannot be broken down to smaller scales - "it's the whole or it's nothing". I could not disagree more. The hard work of proper modeling is exactly to break the problem down into small increments that can be modeled on a small scale, proven to work, and then incorporated into a larger working model.

Scientists didn't succeed in semiconductor physics by first trying to model artificial intelligence using individual transistors. They began by modeling one transistor very well and understanding it thoroughly. Thus, assumptions and simplifications that were of necessity made moving forward through increasing complexity were made with a thorough understanding of the limitations.

Let me suggest, then, as an outsider, that you do exactly what Dr. Schmidt says can't be done. Create a model of weather with proper boundary conditions on a small geographical scale.

It seems like a good place to start is a 100 km² slice. That would give similar scale-up factors (7-8 orders of magnitude) to the largest semiconductor devices today. Create a basic model for the weather patterns of this small square.

Hone that model. Make it work. Show that it does. Understand the order of effects, so that you then have the opportunity to use any number of mathematical techniques to attach those models, with their boundary conditions, side by side, with increasing complexity and growing area and necessarily greater simplification, yet losing little accuracy.

That is exactly the way that successful complex models have been built in other fields. I think the current approach tries to short-cut the process by jumping to the big problem of modeling over decades too soon. If you have links to those who might be attacking this small-scale modeling project, I'd like to have that resource. It would interest me greatly.

marisman - So, you would prefer a complete ab initio approach from the ground up, extrapolating from basic physics (do your semiconductor models go down to the level of quarks, since those affect electrical charge and mass at the molecular level?). This rapidly scales to the ridiculous.

At each scale level you still need to validate the behavior of that model scale against observations - meaning that when looking at global models you will still be dealing with effectively black box parameterizations at scales below what you can computationally afford.

Which is exactly what gets done in any computational fluid dynamics (CFD) or finite element analysis (FEA): once you get below the computational scale, you use parameterizations. These are techniques with a proven track record despite sub-element black boxes.
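One example of such a parameterization is a bulk aerodynamic formula for turbulent surface heat exchange. The eddies involved are far too small to resolve on a model grid, so they are replaced by an empirical exchange coefficient C_H, a tuned "black box" constant. The values below are typical textbook magnitudes assumed for illustration, not taken from any particular GCM.

```python
# Assumed typical values, for illustration only.
AIR_DENSITY = 1.2            # kg/m^3, near-surface air
SPECIFIC_HEAT_AIR = 1004.0   # J/(kg K), dry air at constant pressure
EXCHANGE_COEFF = 1.2e-3      # dimensionless bulk transfer coefficient (empirically tuned)


def sensible_heat_flux(wind_speed, surface_temp, air_temp,
                       density=AIR_DENSITY, cp=SPECIFIC_HEAT_AIR, c_h=EXCHANGE_COEFF):
    """Bulk formula H = rho * cp * C_H * U * (Ts - Ta), in W/m^2 (positive = surface to air)."""
    return density * cp * c_h * wind_speed * (surface_temp - air_temp)


# A grid cell with a 5 m/s wind and a surface 2 K warmer than the air above it.
print(f"{sensible_heat_flux(5.0, 290.0, 288.0):.1f} W/m^2")
```

The grid cell only "sees" the resolved wind and temperatures. Everything about the unresolved turbulence is folded into C_H, which has to be validated against observations.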

Granted, GCMs don't do as well at the regional level, let alone the microscale of local weather. But as pointed out before, they are boundary-value models, not initial-value models, and energy-bounded chaotic behavior, from ENSO to detailed cloud formation, means that there will be variability around the boundary conditions. That variability, while it cannot be exactly predicted in trajectory, is not the output goal of GCMs. Rather, they are intended to explore mean climate under forcing changes (~30-year running behavior).

Note that coupled GCMs do use sub-models such as atmosphere, ocean, ice, land use, etc., individually validated and exchanging fluxes to form the model as a whole.
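The idea of sub-models coupled by exchanging fluxes can be shown in miniature with a two-layer energy balance model, in which an upper layer and a deep ocean pass heat between them. This is a common textbook simplification, not how a GCM coupler actually works. All parameter values are assumptions chosen for illustration, including the 3.7 W/m² step forcing (roughly a CO2 doubling).

```python
def two_layer_response(forcing=3.7, years=200.0, dt=0.05,
                       c_upper=8.0, c_deep=100.0, feedback=1.3, exchange=0.7):
    """Forward-Euler integration of a two-box energy balance model.

    Upper box (atmosphere + ocean mixed layer):
        c_upper dT/dt  = F - feedback*T - exchange*(T - Td)
    Deep-ocean box:
        c_deep  dTd/dt = exchange*(T - Td)
    The exchange term is the heat flux passed between the two sub-models.
    Returns a list of (year, upper temperature, deep temperature) tuples.
    """
    t_upper = t_deep = 0.0
    history = []
    for step in range(int(round(years / dt))):
        flux_down = exchange * (t_upper - t_deep)        # W/m^2 into the deep ocean
        t_upper += dt * (forcing - feedback * t_upper - flux_down) / c_upper
        t_deep += dt * flux_down / c_deep
        history.append(((step + 1) * dt, t_upper, t_deep))
    return history


history = two_layer_response()
for year, upper, deep in (history[399], history[-1]):
    print(f"year {year:5.1f}: upper {upper:.2f} K, deep {deep:.2f} K")
```

The same flux leaves one box and enters the other. This conservation across the interface is the property real coupled models must also enforce between their components.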

Saying that models are mathematical representations of interactions in the climate is far too simple a statement. As I understand it, they basically numerically integrate the Navier-Stokes equations (NSE). While the NSE are quite complete, integrating them is no simple task. Grid size, time steps, and most importantly boundary and starting conditions have a big effect on the model's results. Also, there are many constants, linear and nonlinear, that may only be described in a limited or approximate way, or omitted.
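The sensitivity to grid spacing and time step mentioned above shows up even in the simplest discretized transport equation. This sketch uses first-order upwind advection on a periodic 1-D grid, a textbook scheme rather than anything taken from a climate model. It is stable only when the Courant number c·Δt/Δx is at most 1, which is the Courant-Friedrichs-Lewy (CFL) condition for this scheme. Above that, the numerical solution blows up. The pulse shape and grid size are arbitrary choices.

```python
def upwind_advection(field, courant, steps):
    """First-order upwind scheme for du/dt + c du/dx = 0 (c > 0) on a periodic grid.

    courant = c * dt / dx, the fraction of a grid cell the flow crosses per time step.
    """
    u = list(field)
    n = len(u)
    for _ in range(steps):
        # u[i - 1] wraps around to u[-1] at i = 0, giving periodic boundaries.
        u = [u[i] - courant * (u[i] - u[i - 1]) for i in range(n)]
    return u


# A square pulse of height 1 on a 50-cell periodic grid.
pulse = [1.0 if 10 <= i < 20 else 0.0 for i in range(50)]

stable = upwind_advection(pulse, courant=0.5, steps=100)
unstable = upwind_advection(pulse, courant=1.5, steps=100)
print(f"Courant 0.5: max {max(stable):.3f}, total {sum(stable):.3f}")
print(f"Courant 1.5: max |u| {max(abs(v) for v in unstable):.3e}")
```

With Courant number 0.5 the pulse smears out but stays bounded and conserves its total. With 1.5 the same equation, on the same grid, explodes.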

Hindcasting is of course a practical method of checking all the assumptions, but it does not guarantee results. It is entirely possible that thousands of papers can be written all using limited and poor models for constants and making bad assumptions, each only referring to the work of another researcher. For example, people numerically integrate equations that describe reinforced concrete. They describe cracks as a softening and ignore aggregate interlock. Engineers forget when the limits of these assumptions are reached; normally they just add more steel to be safe.

It has also been all over the news that temperatures have not risen in the last 15 years. I realize the oceans are storing heat, and there are trade winds, and that this post started years ago; nevertheless, average temperatures are not rising. Also, early models could not predict trade winds! What, they don't have oceans either? We have waited now 20 years and the models are wrong. So it seems that although they were tested with hindcasting on data that showed increasing temperatures, the models could not predict the temperatures staying constant.

(Rob P) - The news, in general, is hardly a reliable source of information. Some news media organizations seem little concerned with things such as facts.

Yes, the rate of surface warming has been slower in the last 16-17 years, but it has warmed in all datasets apart from the RSS satellite data - see the SkS Trend calculator on the left-hand side of the page.

As for hindcasts see: Climate Models Show Remarkable Agreement with Recent Surface Warming.

Reduced integration techniques are a good example of how researchers can BS themselves for years, just to get papers published (which I will explain). So the analytical solution is a series of sines and cosines. One tries to approximate the solution using a third-order polynomial, obviously in small segments to reduce the error. They use three Gauss points (? I don't remember) for each axis to integrate their equations. Their results are crap. So they just remove a Gauss point and say it has certain advantages. Another researcher removes another Gauss point and says it has some advantages and some problems. They create words like 'shear locking' or 'zero energy mode' - how about 'wrong solution'? This goes on for 20 years and thousands of papers. Meanwhile the pile of papers you have to learn gets higher, and these methods are put into commercial programs. The next generation of people have fanciful intellectual musings on what a 'zero-energy' mode is, because the pile of papers is so full of crap, and the fact that it's the 'wrong solution' is lost.

Although, to be complete, these solutions do get tested and conditioned to work reasonably well under normal circumstances, i.e. hindcasting. However, this is the mathematical equivalent of rigging it with duct tape. Certainly for highly nonlinear equations like the NSE, this is not good for extrapolation.
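The reduced-integration issue described in the two paragraphs above can be made concrete with Gauss-Legendre quadrature. In this standard rule, n points integrate any polynomial of degree up to 2n − 1 exactly on [−1, 1]. This sketch uses NumPy's numpy.polynomial.legendre.leggauss, and the integrands are arbitrary examples, not taken from any finite-element code. Removing points loses exactness. With a single point, the nonzero quantity ∫x² dx is reported as zero, which is loosely analogous to a "zero-energy" mode.

```python
import numpy as np


def gauss_legendre(f, n_points):
    """Integrate f over [-1, 1] with an n-point Gauss-Legendre rule."""
    nodes, weights = np.polynomial.legendre.leggauss(n_points)
    return float(np.sum(weights * f(nodes)))


def quartic(x):
    return x ** 4           # exact integral over [-1, 1] is 2/5


def square(x):
    return x ** 2           # exact integral over [-1, 1] is 2/3


for n in (3, 2, 1):
    print(f"{n}-point rule: x^4 -> {gauss_legendre(quartic, n):.4f} (exact 0.4000), "
          f"x^2 -> {gauss_legendre(square, n):.4f} (exact 0.6667)")
```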

Razo, if you find the models failing, what practical conclusions do you draw from it? In other words, so what? Let's imagine that the sign of the alleged failure was in the other direction. Would you still make the comment?

So a question: if we had had today's GCMs 30 years ago, would we be having the same conversation? How would it be different?