How reliable are climate models?

What the science says...


Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.


Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, one way or another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Weather-forecast models rarely extend to even a fortnight ahead. Big difference. Climate trends deal with changes in things such as temperature or sea level over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system's heading.

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then either because we made observations or because evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test. We can therefore reasonably expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.


Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.
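To illustrate why averaging over about 30 years smooths out rare extremes, here is a minimal Python sketch on synthetic data. The 0.02 K/yr warming rate, the noise level and the single +1 K spike are made-up assumptions, not observations. The point is only that one extreme year barely shifts a century-scale least-squares trend or the 30-year means.

```python
import random
import statistics

def linear_trend(years, values):
    """Ordinary least-squares slope (units per year)."""
    n = len(years)
    mean_x = sum(years) / n
    mean_y = sum(values) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(years, values))
    sxx = sum((x - mean_x) ** 2 for x in years)
    return sxy / sxx

def thirty_year_means(years, values, window=30):
    """Mean of each consecutive, non-overlapping block of `window` years."""
    return [statistics.mean(values[i:i + window])
            for i in range(0, len(values) - window + 1, window)]

# Synthetic "annual temperatures": an assumed 0.02 K/yr trend plus random weather noise.
random.seed(0)
years = list(range(1901, 2021))  # 120 years
temps = [0.02 * (y - 1901) + random.gauss(0.0, 0.15) for y in years]

# Add one hypothetical extreme year (+1.0 K) to see how much it moves the statistics.
spiked = list(temps)
spiked[years.index(1998)] += 1.0

print(f"trend without spike: {linear_trend(years, temps):.4f} K/yr")
print(f"trend with spike:    {linear_trend(years, spiked):.4f} K/yr")
print("30-year means:", [round(m, 2) for m in thirty_year_means(years, spiked)])
```

In this set-up, the spike changes the fitted trend by only a few ten-thousandths of a degree per year.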

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate in explaining temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.
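To make the "with and without CO2" logic concrete, here is a toy zero-dimensional energy-balance model in Python. It is nothing like a real climate model. The heat capacity, feedback parameter, smooth CO2 path and 11-year "natural" cycle are all illustrative assumptions. The only formula taken from the literature is the widely used simplified CO2 forcing, 5.35 × ln(C/C0) W/m² (Myhre et al. 1998). The sketch shows the mechanics of running the same model twice with different forcings. A real hindcast test compares such runs against the observed record, which this sketch does not include.

```python
import math

# Toy parameters: assumptions for illustration, not values from any real model.
HEAT_CAPACITY = 8.0        # W yr m^-2 K^-1, roughly an ocean mixed layer
FEEDBACK = 1.2             # W m^-2 K^-1, climate feedback parameter
CO2_PREINDUSTRIAL = 280.0  # ppm

def co2_ppm(year):
    """Illustrative smooth CO2 path (not the observed record): 280 ppm in 1850, 410 ppm in 2020."""
    frac = max(0.0, (year - 1850) / 170.0)
    return CO2_PREINDUSTRIAL + 130.0 * frac ** 3

def co2_forcing(year):
    """Simplified CO2 forcing, 5.35 * ln(C / C0) W m^-2 (Myhre et al. 1998)."""
    return 5.35 * math.log(co2_ppm(year) / CO2_PREINDUSTRIAL)

def natural_forcing(year):
    """Hypothetical natural forcing: a small zero-mean 11-year cycle."""
    return 0.2 * math.sin(2 * math.pi * (year - 1850) / 11.0)

def run_ebm(years, include_co2):
    """Integrate C dT/dt = F - lambda * T with forward Euler, 1-year steps."""
    temp, out = 0.0, []
    for year in years:
        forcing = natural_forcing(year)
        if include_co2:
            forcing += co2_forcing(year)
        temp += (forcing - FEEDBACK * temp) / HEAT_CAPACITY
        out.append(temp)
    return out

years = list(range(1850, 2021))
with_co2 = run_ebm(years, include_co2=True)
natural_only = run_ebm(years, include_co2=False)
print(f"2020 anomaly, all forcings:  {with_co2[-1]:.2f} K")
print(f"2020 anomaly, natural only: {natural_only[-1]:.2f} K")
```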

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.
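The Pinatubo test works because a large eruption is a short, sharp negative forcing whose effect can be compared with what follows. Below is a toy Python sketch of that idea using the same kind of simple energy-balance equation. The peak aerosol forcing of -3 W/m², its one-year e-folding time, and the heat capacity and feedback values are all assumptions chosen for illustration. The resulting numbers depend entirely on them and are not a reproduction of the published Pinatubo forecasts.

```python
import math

HEAT_CAPACITY = 8.0  # W yr m^-2 K^-1 (assumed)
FEEDBACK = 1.2       # W m^-2 K^-1 (assumed)
DT = 0.1             # time step, years

def volcanic_forcing(t):
    """Hypothetical aerosol forcing: -3 W m^-2 at eruption, decaying with a 1-year e-folding time."""
    return -3.0 * math.exp(-t) if t >= 0 else 0.0

def pulse_response(n_years=6):
    """Temperature anomaly after the eruption (forward Euler), as (time, temp) pairs."""
    temp, series = 0.0, []
    for i in range(int(n_years / DT)):
        t = i * DT
        temp += DT * (volcanic_forcing(t) - FEEDBACK * temp) / HEAT_CAPACITY
        series.append((t, temp))
    return series

series = pulse_response()
t_min, temp_min = min(series, key=lambda p: p[1])
print(f"peak cooling {temp_min:.2f} K about {t_min:.1f} years after the eruption")
```

The qualitative shape is the part that carries over to the real test: cooling peaks a year or two after the eruption, then recovers as the aerosols clear.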

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."

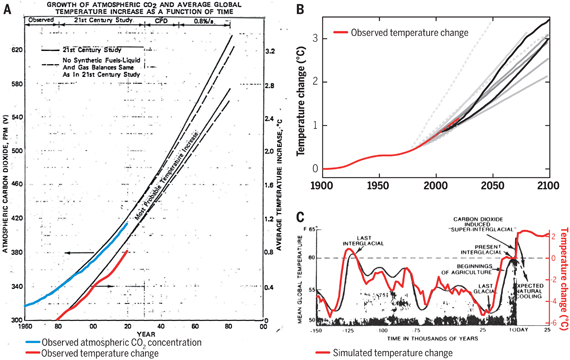
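As a rough illustration of what "indistinguishable" can mean in practice, here is a simplified Python sketch. It treats a projection and the observations as consistent when the 95% confidence interval of the difference between their linear trends includes zero. This is an assumption for illustration, not necessarily the exact procedure used by Hausfather et al. 2019. It also ignores autocorrelation in the residuals, which makes the standard errors too small for real temperature data.

```python
import math

def ols_trend_with_se(xs, ys):
    """Least-squares slope and its standard error (assumes independent residuals)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    resid = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    se = math.sqrt(sum(r * r for r in resid) / (n - 2) / sxx)
    return slope, se

def indistinguishable(trend_a, se_a, trend_b, se_b, z=1.96):
    """True if the ~95% interval of the trend difference includes zero."""
    return abs(trend_a - trend_b) <= z * math.hypot(se_a, se_b)

# Hypothetical trends in K/decade with standard errors, not values from the paper.
print(indistinguishable(0.20, 0.03, 0.18, 0.02))
print(indistinguishable(0.30, 0.02, 0.18, 0.02))
```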

Talking of empirical evidence, you may be surprised to know that the huge fossil fuel corporation Exxon's own scientists knew all about climate change, all along. A recent study of their own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We had a blog-post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worthy of part-quotation:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

Arguments


http://www.telegraph.co.uk/finance/economics/11633745/Fossil-industry-faces-a-perfect-political-and-technological-storm.html#comment-2051149797

[RH] Your post should have been deleted as a "link only" post, which is against the SkS posting policy. Since others have already jumped in and explained the material, we'll let this one stand.

If you wish to continue posting please review the comments policy.

Postkey:

In 1896 Arrhenius reviewed the basic calculations for AGW and closely estimated the amount of warming we would see by today. He also predicted it would warm more at night than during the day, more in winter than in summer, more in the Northern Hemisphere, more in the Arctic and more over land than over sea. How could Sagan have made a mistake that affected Arrhenius 60 years earlier?

If Sagan had actually made a mistake, the contributor's argument implies that the scientists who work for Exxon, BP, and Shell are too stupid to recognize that mistake and correct it. Obviously, these companies have scientists who review all the AGW data and would correct errors that hurt their story. Do you really think that Exxon cannot find any scientists who could expose a simple mistake? The IPCC report is approved by all the countries in the world. The summary is approved line by line. Exxon, Saudi Arabia and other fossil fuel interests have lawyers there for the entire discussion.

Postkey @925

The section you quote is essentially a conspiracy theory: that errors in physics originally made by Sagan have been continually suppressed.

It is worth noting that there have, in the past, been a number of scientific papers published that have challenged or questioned the accepted model of climate change; these include papers by Richard Lindzen, Christy and Spencer, Murray Salby and Gerlich and Tscheuschner. This provides us with evidence of the absence of a conspiracy: the scientific community is perfectly willing to publish a variety of views on climate change, even if further examination shows these papers to be wrong, unlikely or implausible.

Thus, had Sagan actually made "4 basic mistakes", and had these been hushed up "in the Cold War Space race", there is no way that these mistakes would not have found their way into the scientific literature today. The fame of any scientist able to disprove today's consensus on climate change would be immense (if only for the amount of physics they would actually have to overturn in order to do so).

A brief look at the online biographies of Carl Sagan and James Hansen shows almost no intersection; Sagan was an advisor to the NASA space program in the 1970s, whilst Hansen was employed at GISS (which is a division of NASA, but not the one Sagan was advising).


It is worth noting that Sagan is perhaps an easy target; as a science communicator and educator it is often necessary to simplify the science (it is for that reason, for example, that grossly inaccurate "pictures" of the atom persist today for educational purposes). Thus his public pronouncements may have been less rigorous. But as Michael Sweet mentions above, the development of climate science does not spring from Sagan.

Postkey, how shall I say this? The quote you gave is a pile of idiotic nonsense. The radiative physics of the greenhouse effect does not violate the laws of thermodynamics. It predicts how much infrared radiation must reach the surface, and that can be measured. It has been measured and is the subject of numerous science papers. It is measured in real time at a variety of locations. It has been measured in the Arctic during the winter, which precludes any IR source other than the atmosphere. The person you quote may not be an engineer, because engineers normally know better. Saying one "comes from engineering" is rather vague.

There is no perpetual motion machine in the atmosphere, those who try to argue such idiocy do not understand the physics. SkS has entire threads about the subject. Search the site.

The conspiracy theory invoking Sagan's name is complete bollocks, as he never had anything to do with the climate part of NASA. Without more specifics, it is not possible to answer about Sagan's alleged "mistakes." Considering how incompetent that Telegraph commenter seems to be, the "mistakes" accusations are likely based on incomprehension of physics. I would caution you that trying to engage someone like this will likely be a complete waste of time. You can see indications of that in the 2nd law thread on SkS.

Thanks, for all of your replies.

These are the 4 basic 'mistakes'!

1. To assume surface exitance, a potential energy flux in a vacuum to a radiation sink at 0 deg K, is a real energy flux.

2. To misuse Mie theory to claim that clouds forward scatter when in reality the light becomes diffused.

3. To claim black body surface IR causes a planet's Lapse Rate temperature gradient, when it is caused by gravity.

4. To completely cock up aerosol optical physics by assuming van der Hulst's lumped parameterisation indicated a real physical process. In reality, there are two optical processes and the sign of the Aerosol Indirect Effect is reversed. It is in fact the real AGW and explains Milankovitch amplification at the end of ice ages.

Point 4 has messed up Astrophysics as well.

Postkey @931, you should always clearly indicate when words are not yours by using quotation marks. In particular, it is very bad form to quote a block of text from somebody else (as you did from point 1 onwards) without indicating that it comes from somebody else and providing the source in a convenient manner (such as a link). For everybody else: from point 1 onwards, PostKey is quoting Alec M from the discussion he previously linked to.

With regard to Alec M's allegations, although Carl Sagan did a lot of work on Venus' climate, Mars' climate, the climate of the early Earth, and the potential effect of volcanism and nuclear weapons on Earth's climate, he did not publish significantly on the greenhouse effect on Earth. The fundamental theory of the greenhouse effect as currently understood was worked out by Manabe and Strickler in 1964. As can be seen in Fig 1 of Manabe and Strickler, they clearly distinguish between lapse rates induced by radiation and those induced by gravity (that being the point of the paper), a fundamental feature of all climate models since. So Alec M's "mistake 3" is pure bunk. By claiming it as a mistake he demonstrates either complete dishonesty or complete ignorance of the history of climate physics.

With regard to "mistake 2", one of the features of climate models is that introducing a diffusing element, such as SO2 or clouds, will cool the region below the element and warm the region above it. That warming above the diffusing layer would be impossible if clouds were treated as forward scattering only. So again, Alec M is revealed as a liar or completely uninformed.

The surface exitance (aka black body radiation) was and is measured in the real world with instruments that are very substantially warmer than absolute zero. Initially it was measured as the radiation emitted from cavities, using instruments at or near room temperature. As it was measured with such warm instruments, and the fundamental formulas were worked out from such measurements, it is patently false that the surface exitance is a "potential energy flux in a vacuum to a radiation sink at 0 deg K". Indeed, the only thing a radiation sink at 0 deg K would introduce is a complete absence of external radiation, so that the net radiation equals the surface exitance. As climate models account for downwelling radiation at the surface in addition to upwelling radiation, no mistake is being made, and Alec M is again revealed as a fraud.
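The distinction between exitance (what a surface emits) and net radiative loss (emission minus what it absorbs from its surroundings) can be sketched with the Stefan-Boltzmann law. This is a minimal Python illustration, assuming the textbook case of a small grey surface inside large surroundings. The 288 K surface and 255 K surroundings are illustrative choices, not measurements. Exitance is the same in every case; only the incoming term changes, and with a 0 K sink the net loss simply equals the exitance.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4

def exitance(temp_k, emissivity=1.0):
    """Radiant exitance: power emitted per unit area, emissivity * sigma * T^4."""
    return emissivity * SIGMA * temp_k ** 4

def net_loss(temp_k, surroundings_k, emissivity=1.0):
    """Net radiative loss of a small grey surface inside large surroundings.

    Emission minus absorbed incoming radiation: eps * sigma * (T^4 - T_surr^4).
    The emitted exitance does not depend on the surroundings; only the
    absorbed incoming term does.
    """
    return exitance(temp_k, emissivity) - emissivity * SIGMA * surroundings_k ** 4

surface = 288.0  # K, an assumed surface temperature
print(f"exitance at {surface} K: {exitance(surface):.1f} W/m^2")
print(f"net loss to a 0 K sink: {net_loss(surface, 0.0):.1f} W/m^2")
print(f"net loss to 255 K surroundings: {net_loss(surface, 255.0):.1f} W/m^2")
```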

With regard to his fourth point, I do not know enough to comment in detail. Even so, the name gives it away. A parametrization is a formula used to approximate real physical processes that occur at scales too small for the model to resolve. As such it may lump together a number of physical processes, and no assumption is made that it does not. Parametrizations are examined in great detail for accuracy in the scientific literature. So neither Sagan nor any other climate scientist will have made the mistake of assuming a parametrization is a real physical process. More importantly, unlike Alec M's unreferenced, unexplained claim, the parametrization he rejects has a long history of theoretical and empirical justification.

Alec M claims "My PhD was in Applied Physics and I was top of year in a World Top 10 Institution." If he had done any PhD not simply purchased on the internet, he would know scientists are expected to back their claims with published research. He would also know they are expected to properly cite the opinions of those they attempt to use as authorities, or to rebut. His chosen method of "publishing" in comments at the Telegraph, without any citations, links or other means to support his claims, shows his opinions are based on rejecting scientific standards. They are in fact a tacit acknowledgement that if his opinions were examined with the same scientific rigour Sagan examined his with, they would fail the test. Knowing he will be unable to convince scientists, he instead attempts to convince the scientifically uninformed. His only use of science in so doing is to use obscure scientific terms to give credence to his unsupported claims. Until such time as he both shows the computer code from GCMs which purportedly makes the mistakes he claims, and further shows the empirical evidence that it is a mistake, the proper response to such clowns is laughter.

Michael, Postkey,

Yes in my reply @928, I suggested that to disprove Climate science, you would need to overturn or reject a large proportion of well established physics; it seems Alec M has had to resort to trying to do just that.

"The Climate Alchemists from 1989 have imagined a spurious bidirectional photon diffusion argument for which there has never been experimental proof."

This comment (somewhat idiosyncratically phrased) is incorrect: photon emission is omnidirectional in gases (due to the fact that molecules in gases are, by the very nature of gases, free to rotate), and this is sufficient to account for downwelling radiation, which has itself been measured. However, "experimental proof" can also be gained by looking at a domestic light bulb. It would seem that when it comes to evidence, AlecM is confusing "looking but not finding" with "not bothering to look".

The commenter AlecM over at the Torygraph is certainly a blowhard and well out of control. The full post he wrote that Phil @935 quotes from bears reproducing in full, as we get the names of the physicists he blames for the perversion of science. We also get the name of the scientist he rated as the top US cloud physicist. If GL Stephens did uncover a fatal flaw in climatology in 2010 and had been unable to publish it, it is not as though he has had problems publishing other works since then.

Houghton's figure 2.5 plots black body radiation against atmospheric temperature/height (unfortunately the actual page is missing from this Google preview), but it's probably the IR-induced convection that the blowhard is saying ensures the GH effect is tiny.

And an optical pyrometer? Isn't that a thermometer?

[JH] Please resist the temptation to repost the pseudo-science poppycock being posted on the comment threads of other websites.

MA Rodger @934, Houghton's Fig 2.5 is shown in the Google preview of the 3rd edition of his work. I assume it is the same as that shown in the first edition, given that figs 2.4 and 2.6 are unchanged between the two editions. In addition to plotting the radiative equilibrium temperature, Houghton also plots the convective equilibrium temperature (or an approximation with a lapse rate of -6 C per km). If Alec M thinks that plot "showed why there can be no Enhanced GHE", he merely demonstrates he has no understanding of atmospheric physics (as if we needed further proof of that). Consulting the 3rd edition, published in 2002 (i.e. one year after the Third Assessment Report), also neatly demonstrates that Houghton saw no contradiction between the physics he continued to teach essentially unchanged after he joined the IPCC and the physics he had taught beforehand.

The preview of the first edition is also interesting in that it contains, on page 10, Houghton's explanation of why climate scientists often (though not in GCMs) treat IR radiation from an atmospheric layer as consisting of an upward and a downward flux, rather than as radiating in all directions. The reasoning is simple. As Alec M himself points out, "Standard Physics predicts net unidirectional radiant flux from the vector sum of Irradiances at a plane". But if you have radiation from a sphere with equal temperatures at all points, then any given point above the surface of the sphere will receive equal radiative flux arriving at φ degrees from the vertical from all downward directions, for all φ. Thus, for a given φ, the flux arriving at that angle comes equally from every point on a circle on the sphere centred directly beneath the point. If you sum the vectors of all those fluxes, only the vertical components do not cancel out. As this applies to all φ, it follows that the integral of all the fluxes from the surface, at any point above the surface, is a net flux with a vertical component only. Similar reasoning applies for any point below the surface (assuming it is a transparent region). Because of this, the radiation from a given level of the atmosphere can be treated, for simplicity, as consisting of vertical components only.
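The vector-cancellation argument can be checked numerically. This Python sketch assumes the idealised case described above, in which the same radiance arrives from every direction of a hemisphere; the value 100 W m^-2 sr^-1 is hypothetical. It sums radiance times direction over the hemisphere with a simple midpoint rule. The two horizontal components cancel to rounding error, leaving only a vertical component, which comes out at π times the radiance.

```python
import math

def hemisphere_vector_flux(radiance, n_theta=200, n_phi=400):
    """Vector sum of radiance * direction over a hemisphere (midpoint rule).

    Assumes uniform radiance (W m^-2 sr^-1) from every direction in the
    hemisphere. theta is measured from the vertical, phi is the azimuth.
    """
    d_theta = (math.pi / 2) / n_theta
    d_phi = (2 * math.pi) / n_phi
    fx = fy = fz = 0.0
    for i in range(n_theta):
        theta = (i + 0.5) * d_theta
        # radiance times the solid-angle element dOmega = sin(theta) dtheta dphi
        weight = radiance * math.sin(theta) * d_theta * d_phi
        for j in range(n_phi):
            phi = (j + 0.5) * d_phi
            fx += weight * math.sin(theta) * math.cos(phi)  # horizontal
            fy += weight * math.sin(theta) * math.sin(phi)  # horizontal
            fz += weight * math.cos(theta)                  # vertical
    return fx, fy, fz

fx, fy, fz = hemisphere_vector_flux(radiance=100.0)  # hypothetical radiance
print(f"horizontal: {fx:.2e}, {fy:.2e}  vertical: {fz:.3f}  (pi * I = {math.pi * 100:.3f})")
```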

This simplifying assumption does not hold if large temperature differences exist between different regions of the surface. Uniform temperature is not always the case in the atmosphere, but it is often approximately so, which makes the simplifying assumption a good approximation. Even so, it is not used in GCMs, and so it is not a necessary assumption for the theory of the greenhouse effect. (Note: any time we express black body radiation in terms of W/m^2 rather than W/m^2/steradian, we are making this simplifying assumption.)
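The step from W/m^2/steradian to W/m^2 can be written out for the same idealisation of uniform radiance I over a hemisphere, with θ measured from the vertical and φ the azimuth. Only the vertical (cos θ) component survives:

$$
F = \int_{0}^{2\pi}\!\int_{0}^{\pi/2} I \cos\theta \,\sin\theta \; d\theta \, d\phi = 2\pi I \cdot \tfrac{1}{2} = \pi I
$$

For a black body the radiance is I = σT⁴/π, so this gives the familiar F = σT⁴.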

So, it turns out that one of the biggest problems Alec M has with climate science is a simplifying assumption that is not necessary for the science, is explained in a book he purports to have read, and, as it happens, follows reasoning first developed (in relation to gravity) by that well-known alchemist, Isaac Newton.

Finally, as a note for PostKey, your most recent comment has been deleted by the moderators. That may only be because you are in effect allowing Alec M to comment here by proxy whilst ignoring the SkS comments policy, although I can think of a number of other provisions of the comments policy you are also violating by just posting full quotes. If you want help understanding where Alec M is in error, quote only the relevant text. Explain what you do not understand about the quoted material, and make sure you post on the appropriate thread. The last may take a bit of reading to find the appropriate thread, but that same reading may well answer your question. I and several other commenters here are always glad to help people who are seeking understanding, but we have no interest in carrying on a discussion by proxy with a conspiracy theorist and pseudoscientist such as Alec M.

[JH] Postkey would do well to follow your advice. If he does not, his/her future posts are likely to be deleted.

Wow. This AlecM guy is a hoot! I love this comment the best...

"PS when I used the old term Emittance instead of Exitance, Wikipedia was altered to remove Emittance! This showed the disinformation process in action. We are being conned!"

It's illuminating in terms of his state of mind.

[JH] Further discussion of comments posted by AlecM on another website will be deleted for being "off-topic".

There appears to be no acknowledgement here of the difficulties raised by Edward N Lorenz, MIT. E.g., a 2011 Royal Society paper on uncertainty in weather and climate prediction: “The richness of the El Nino behaviour, decade by decade and century by century, testifies to the fundamentally chaotic nature of the system that we are attempting to predict. It challenges the way in which we evaluate models and emphasizes the importance of continuing to focus on observing and understanding processes and phenomena in the climate system.”

[TD] Enter the word chaos in the Search field at the top left of this page. Also read The Difference Between Weather and Climate. Note that many posts have Basic, Intermediate, and Advanced tabbed panes.

NanooGeek,

Can you please link the paper you are citing? A quick google of Edward N Lorenz from MIT indicates that he died in 2008. It is very unlikely that he published your quote in 2011. His last paper was published in 2008 and I see nothing in his CV that resembles your citation. His CV shows nothing published by the Royal Society after 1990.

michael sweet @938.

It seems the quote comes from Slingo & Palmer (2011), 'Uncertainty in weather and climate prediction', which addresses the legacy of EN Lorenz's work.

The paper seems to reinforce what modellers already say: "models have no skill at decadal level prediction". While models appear to capture ENSO behaviors, there is no way they can predict it. If you compare models to observations over short time frames (<30 y), they won't match well. However, climate is about the long-term averages of weather, and there the models do quite well.

"While models appear to capture ENSO behaviors, there is no way they can predict it. "

We may be getting close to doing just that at the Azimuth Project forum — http://forum.azimuthproject.org/discussion/1608/enso-revisit#latest

Others are making progress as well [1].

[1] H. Astudillo, R. Abarca-del-Rio, and F. Borotto, Long-term non-linear predictability of ENSO events over the 20th century, arXiv preprint arXiv:1506.04066, 2015.

I'm looking for a good graphic showing the surface temperature model projections with the latest observed temperatures added. Does anyone know of one?

dvaytw: Climate Lab Book has a comparison that is updated frequently.

Thank you, Tom. I would like to ask a question about this. A guy is giving me crap that:

On the graph, it shows a cut-off between historical and RCP's at 2005 (I assume "historical" means post-predictions?) However, I looked up CMIP5 and their site says

This is confusing to me. So these projections are from around 2011? Why does the chart say 2005? And how do these projections compare with earlier models? Also, the guy in the argument is claiming:

As far as I can tell, this isn't true... but I can't find a nice graph comparing all five ARs' projections... the best I can come up with is the first four (and that one clearly shows SAR as lower than the other three, so already he's wrong on that).

Any help here?

Others can probably give you a more detailed answer, but models predict the outcomes from given forcings. Predicting future forcings is uncertain, so it is hardly unreasonable to update a model run done in 2005 with the actual forcings up to 2015 to see how it fared. That is a very different thing from tuning a model to reproduce a particular time series, which would of course be of little value.

When doing obs/model comparisons, the interesting question is how well the model performed given the actual forcings, rather than how well researchers predicted when volcanoes would erupt or how much CO2 humans would produce.

dvaytw, I'll expand scaddenp's answer: Models are fed actual ("historical") values of anthropogenic and natural forcings up through some date that the modelers decide has reliable forcing data. The actual running of the models can be years after that cutoff date. The vertical line you see in model projection graphs demarcates that cutoff date. For dates beyond that cutoff, the modelers feed the models estimates of future forcings. Although those are "predictions" of those future forcings, the modelers rarely are very confident of those "predictions," because those modelers are not in the business of predicting forcings. Indeed, those models themselves are not predicting forcings; these models take forcings as inputs.
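The cutoff described above amounts to splicing two input series. Here is a minimal Python sketch of that bookkeeping. The function name `build_forcing` and all forcing values are hypothetical, invented purely for illustration. Actual values are used up to the cutoff year and scenario values after it. Moving the cutoff later swaps guessed inputs for actual ones, while the model that consumes them is unchanged.

```python
from typing import Dict, List, Tuple

def build_forcing(historical: Dict[int, float], scenario: Dict[int, float],
                  cutoff_year: int) -> List[Tuple[int, float, str]]:
    """Splice model input forcing: actual values up to cutoff_year, scenario values after.

    Raises KeyError if historical data is missing for a year <= cutoff_year,
    or scenario data is missing for a year after it.
    """
    series = []
    for year in sorted(set(historical) | set(scenario)):
        if year <= cutoff_year:
            series.append((year, historical[year], "historical"))
        else:
            series.append((year, scenario[year], "scenario"))
    return series

# Hypothetical net forcing values in W/m^2, invented for illustration only.
historical = {2004: 1.80, 2005: 1.85, 2006: 1.88, 2007: 1.86}
scenario = {2006: 1.92, 2007: 1.97}

original_run = build_forcing(historical, scenario, cutoff_year=2005)
updated_run = build_forcing(historical, scenario, cutoff_year=2007)
for row in original_run:
    print(row)
```

Because only the inputs differ between `original_run` and `updated_run`, comparing the corresponding model outputs tests the model itself rather than anyone's guess about future emissions or eruptions.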

An ensemble of model runs such as CMIP5 generally uses the same forcings in all the model runs. See, for example, the CMIP5 instructions to the modelers. Differences across model run outputs therefore are due to different constructions of the different models, and tweaks across runs within the same model. (CMIP5 has more than one run of each of the models.) The goal is replication in the sense of seeing whether the fundamental characteristics of the outputs are robust to what should be minor differences in approaches. See AR5 WG1, Chapter 11, Box 11.1 (pp. 959-961) for more explanation. See Figure 11.25 (p. 1011) for detailed graphs of only the CMIP5 projections.

Modelers almost never rerun old models with new actual (historical) forcing data, because too much time, money, and labor are required to run the models. Instead they run their latest, presumably improved, version of their model. But several authors have made statistical adjustments to model results to approximate the effect of rerunning those models with actual forcings.

Dana wrote a post with separate sections for the different reports' projections, but it is three years old so does not show the recent upswing in temperature.

I know there are graphs combining all the IPCC reports' different projections, but I can't find one at this moment. Somebody else must know where one is.

Ed Hawkins has posted a good article on how choice of baseline matters, including a neat animation.

Hi,

I was wondering if anyone could help me here. I've been inundated by this chart and others like it from my skeptic friends. It compares computer models to observed temperature only using UAH and RSS. Obviously it's cherry-picking since there are other temperature sets but does anyone have a chart similar to this that shows all the major data sets?

[TD] Resized image.

spunkinator99: Among other problems with that graph, it is baselined improperly so that the UAH and RSS lines begin near the model mean and diverge over time. Spencer used the same deceptive tactic in later constructing a graph of 90 model runs, as Sou explains at Hotwhopper. Tom Curtis pointed out why 1983 was such an obvious choice for Spencer's distortion.
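To see how the baseline choice alone can shift one curve relative to another, here is a small Python sketch with hypothetical data. It is not a reconstruction of Spencer's graph. The "model" and "observations" share exactly the same 0.02 K/yr trend, but the observations carry a 4-year wiggle that happens to peak in 1983. Aligning both on that single year makes the observations look about 0.15 K low throughout. Using a 30-year baseline makes the apparent gap nearly vanish.

```python
import math
import statistics

def anomalies(values, years, base_start, base_end):
    """Anomalies relative to the mean over base_start..base_end (inclusive)."""
    base = [v for v, y in zip(values, years) if base_start <= y <= base_end]
    ref = statistics.mean(base)
    return [v - ref for v in values]

def mean_gap(model, obs):
    """Average of (model - obs) over the whole record."""
    return statistics.mean([m - o for m, o in zip(model, obs)])

years = list(range(1979, 2015))
# Hypothetical series: identical 0.02 K/yr trends, but the "observations" carry
# a 4-year wiggle that happens to peak in 1983.
model = [0.02 * (y - 1979) for y in years]
obs = [m + 0.15 * math.cos(2 * math.pi * (y - 1983) / 4) for m, y in zip(model, years)]

single = mean_gap(anomalies(model, years, 1983, 1983), anomalies(obs, years, 1983, 1983))
multi = mean_gap(anomalies(model, years, 1981, 2010), anomalies(obs, years, 1981, 2010))
print(f"apparent model-minus-obs gap, 1983-only baseline: {single:.3f} K")
print(f"apparent model-minus-obs gap, 1981-2010 baseline: {multi:.3f} K")
```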

Ed Hawkins at Climate Lab Book updates his comparison graph frequently. John Abraham's recent article's graph is bigger and so easier to read, and shows the earlier (CMIP3) model runs as well as CMIP5.

None of those shows the correct model lines, because those model lines were for surface air temperature, whereas over ice-free ocean the observations are of sea surface temperature. The correct model lines are shown in an SkS post.

spunkinator99, see also the post countering the myth that satellites show no warming.