How reliable are climate models?

What the science says...


Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.

Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, one way or another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Weather forecast-models rarely extend to even a fortnight ahead. Big difference. Climate trends deal with things such as temperature or sea-level changes, over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system's heading.

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then, either because we made observations or because evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test. We can therefore expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.


Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.
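The smoothing effect of a 30-year average can be shown with a minimal Python sketch. The series below is synthetic and purely an assumption for illustration (a steady warming trend plus random year-to-year noise); it is not observational data, and the names `rolling_mean` and `year_to_year_spread` are hypothetical helpers.

```python
import random
import statistics

def rolling_mean(values, window):
    """Return the mean of each consecutive `window`-length slice of `values`."""
    return [
        sum(values[i:i + window]) / window
        for i in range(len(values) - window + 1)
    ]

def year_to_year_spread(series):
    """Standard deviation of the change from one value to the next."""
    steps = [b - a for a, b in zip(series, series[1:])]
    return statistics.pstdev(steps)

# Synthetic data (an assumption, NOT observations): a 0.02 degC/year
# trend plus random "weather" noise with a 0.3 degC standard deviation.
random.seed(42)
years = list(range(1900, 2021))
annual = [0.02 * (y - 1900) + random.gauss(0, 0.3) for y in years]

# 30-year averages: each value summarises three decades of "weather".
smoothed = rolling_mean(annual, window=30)

raw_spread = year_to_year_spread(annual)
smooth_spread = year_to_year_spread(smoothed)
print(f"year-to-year spread, annual values: {raw_spread:.3f} degC")
print(f"year-to-year spread, 30-year means: {smooth_spread:.3f} degC")
```

In this toy series the individual years jump around, while the 30-year means change slowly and in one direction, which is the sense in which a trend tells you which way the system is heading.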

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate in explaining temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.
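The shape of that hindcast comparison can be sketched in a few lines of Python. Everything here is an assumption made for illustration: the "observations" are synthetic and are deliberately built to contain a CO2 term, so the outcome is guaranteed and proves nothing about the real climate. The sketch only shows how a hindcast with and without a forcing is scored against a record; real hindcasts use physical models and measured data.

```python
import math
import random

def rmse(simulated, observed):
    """Root-mean-square error between two equal-length series."""
    return math.sqrt(
        sum((s - o) ** 2 for s, o in zip(simulated, observed)) / len(observed)
    )

random.seed(0)
years = list(range(1900, 2021))

# Hypothetical temperature responses in degC, invented for this sketch.
natural = [0.1 * math.sin(2 * math.pi * (y - 1900) / 11) for y in years]
co2 = [0.00008 * (y - 1900) ** 2 for y in years]

# Synthetic "observations": both responses plus measurement noise.
observed = [n + c + random.gauss(0, 0.05) for n, c in zip(natural, co2)]

# Two hindcasts: one driven by natural forcings only, one with CO2 added.
hindcast_natural_only = natural
hindcast_with_co2 = [n + c for n, c in zip(natural, co2)]

err_natural = rmse(hindcast_natural_only, observed)
err_with_co2 = rmse(hindcast_with_co2, observed)
print(f"RMSE, natural forcings only: {err_natural:.2f} degC")
print(f"RMSE, natural + CO2:         {err_with_co2:.2f} degC")
```

The design point is that the model is scored against a record it is not allowed to change: a forcing is judged necessary when leaving it out produces a hindcast that drifts away from what was observed.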

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."

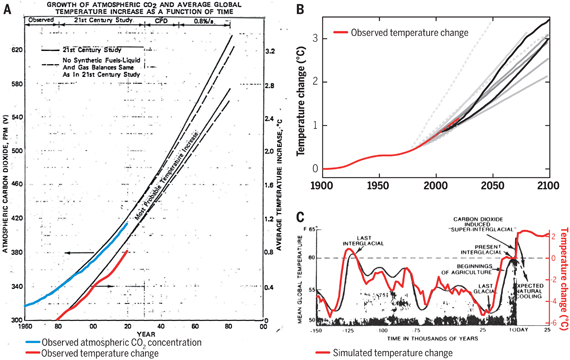

Talking of empirical evidence, you may be surprised to know that scientists at the huge fossil-fuel corporation Exxon knew all about climate change all along. A recent study of their own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We had a blog-post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worthy of part-quotation:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

Arguments


The whole point of current focus on climate models is to inform policy with regard to emission strategies. That is under human control, not something you "forecast". We are only peripherally interested in effects on climate from variations in natural forcing because a/ they are either short term or small, and b/ there is nothing we can do about them. We can however control emissions and we really do want to know whether models will accurately predict the future under various emission scenarios. For evaluating an older model, you can simply rerun it with actual forcings (without changing the model code) and compare to what we actually got.

The hard bit in communication is getting the public to understand the difference between a physical model and a statistical model in terms of value in hindcasting.

KR and scaddenp: I understand the role of the RCP scenarios, and that controlling emissions is key - I am not suggesting that either be abandoned.

However, many people don't distinguish between uncertainty in the climate models and uncertainty in the forecasts of forcings - and this is exploited by deniers ("the IPCC forecasts are flawed/too vague/too complex"). In addition, because cycles in some forcings cause a stepwise rise in temperature, deniers are able to pretend that temperature has stopped rising (a pause, or even a permanent pause). Climate scientists explain all this in advance and at the time (and produce The Escalator graph), but many of the public are not convinced (or are misled), and this delays reduction in emissions.

I merely proposed (@974) an extra tool (that complements The Escalator) to remove variations in the forcings from forecasts of temperature, and enable climate scientists to say that the models they produced 10 or 20 or 30 years ago have accurately forecast temperatures in advance given the forcings that subsequently actually occurred.

Would it be possible to go further and calculate what temperatures the FAR model predicted for 1990-2016 given the forcings that actually occurred each year, and the SAR model for 1995-2016, the TAR model for 2001-2016, AR4 for 2007-2016, and AR5 for 2013-2016? Perhaps it's not possible to do this - but if it were, it would contribute greatly to answering the question posed by the title of this thread - "How reliable are climate models?" (It might be a good student research project.) And doing it again each year in the future would keep on showing that the models are accurate. Would it be possible to do this?

Rerunning the actual climate models is not a trivial process. Serious computer time for version 4 and 5 models. Hassles with code for pre-CMIP days. However, outputs can be scaled for actual forcings. The "Lessons from past climate predictions" series does this. E.g., for the FAR models, see http://www.skepticalscience.com/lessons-from-past-climate-predictions-ipcc-far.html

However, I am very doubtful about the possibility of changing the minds of the wilfully ignorant. It seems to an outsider that, in the USA in particular, climate denial is part of right-wing political identity.

scaddenp: thanks for taking the trouble to reply, and for the very useful link.

Despite the difficulties, it would be great to have a Skeptical Science resource page that was kept up to date with previous IPCC models (or even just two or three of them) and their forecasts (given the forcings that actually occurred), which would show that the models are accurate. I accept that's very easy for me to suggest, but a lot of work for someone else to do.

I agree about the wilfully ignorant, but surely we need to keep trying. It's one of the important functions of Skeptical Science. Most deniers don't read Skeptical Science, but it's a very useful resource for people trying to persuade the deniers and (more realistically) the undecided. Climate scientists will win in the end - because they're right. Let's hope they win soon enough to prevent catastrophe.

I just had a quick look at Mann 2015, where this all started, and at the CMIP5 website. According to the website, the runs were originally done in 2011. The CMIP5 graph in Mann is the model ensemble but run with updated forcings. To my mind, this is indeed the correct way to evaluate the predictive power of a model, though the internal variability makes the difference from 2011 to 2015 insignificant. The continued predictive accuracy of even primitive models like Manabe and Wetherald, and FAR, suggests to me that climate models are a very long way ahead of reading entrails as a means of predicting future climate.

I wonder if SkS should publish a big list of the performance of the alternative "skeptic" models like David Evans, Scafetta, "the Stadium wave" and other cycle-fitting exercises for comparison.

Frank Shann has, in my opinion, made a sound argument for improving the way SkS and others communicate the science of climate change. His central point is buttressed by a just-published editorial in Nature Climate Change, Reading science.

The tease-line of the editorial is:

Scientists are often accused of poorly communicating their findings, but improving scientific literacy is everyone's responsibility.

John Hartz @981, Frank Shann's premise of (not argument for) the need for effective communication is valid (and well known, and acknowledged). His belief that using only archetypal meanings represents effective communication is just false, something he conceals from himself by not asking the crucial question - what is misunderstood as a result of the use of the word 'predict' by Dana? The answer is nothing - something his survey does not address and he does not address in counter argument.

In contrast, his preferred word, 'describe', would introduce genuine misunderstanding, and as it happens is also not an archetypal use of the term.

Your link supports his premise - i.e., that effective communication is important - but has no bearing on his argument about whether a particular example of communication was more or less effective than alternatives. In fact, it has only one bearing on the topic. Specifically, he is a scientist, as were (in all probability) the colleagues whom he surveyed. From your link we learn that scientists are poor judges of effective communication. That is, the linked article undermines his claim to relevant expertise on this issue.

I was prepared to allow his courteous granting of himself the last word @967 to stand, but not if you are going to step in and misrepresent the discussion in his favour.

Tom Curtis: I have neither the time nor inclination to get into a protracted discussion about the details of Frank Shann's posts. Having said that, I believe that the word "simulate" would have been a better choice than "predict" in Dana's OP. "Predict" connotes to the average person the foretelling of something that is likely to happen in the future.

Does anyone know where I can find a graph that shows how the climate models correlate with temperature back to around 1880? Can't find that by using google for some reason.

Should we all be jumping for joy?

Climate Sensitivity Paper Hints Models Overestimate Warming

Ps- never mind. Comment withdrawn:

Bates’ Embarrassment: Sad and Sloppy Climate Sensitivity Study

dvaytw, more details on how the Bates paper is ridiculous are at ATTP.

By far the most common global warming skeptic argument on the Skeptical Science iPhone app is the contention that the models are unreliable (www.skepticalscience.com/iphone_results.php).

It would be helpful to prominently display one or more graphs of predictions made in advance by the models (not hindcasts) with superimposed subsequent actual changes in temperature.

On the Skeptical Science "Most Used Climate Myths" graphic, would it be helpful to rank the myths in the same order as the iPhone app results?

" A sign that you're spending too much time in model land is if you start to use the word 'prediction' to refer to how you model past data ... It is very easy to overfit a model, thinking you have captured the signal when you've just described the noise. Sticking to the simple, commonsense definition of prediction as something that applies strictly to a future event may reduce the risk of these errors." Nate Silver. The Signal and the Noise. New York, Penguin, 2015:452.

And sticking to the simple, commonsense definition of prediction as something that applies strictly to a future event will also reduce the risk of being misunderstood by the general public.

FrankShann, the Nate Silver article is missing an important point. If I construct a model and fix its parameters and then observe the eruption of Mt Pinatubo, the eruption is still in the future from the perspective of the model, as the parameters of the model had already been fixed. This is true even if I don't generate the model runs until afterwards. In this case, the model cannot possibly be overfitting the response to Pinatubo (as the parameters were already fixed), and hence Silver's criticism is not relevant. Similarly, the CMIP projects archive model projections (understanding the difference between a projection and a prediction is much more important), so if we compare what the models say about climate after they were archived, that is still a prediction.

So we could say that "the model would have predicted the response to Pinatubo had we known in advance the details of the eruption", but this is a bit verbose, and most people are happy just to view it as being a prediction from the perspective of the model.

Essentially overfitting is only a concern if you have tuned the model in some way on the observations you are "predicting" (this does happen in statistics/machine learning, I even wrote a paper describing a common, but not widely understood, way in which it can creep in, and what to do about it).

Just to add, what Nate Silver is really talking about is "in sample" predictions and "out of sample" predictions. It is an "out of sample" prediction if the thing you were predicting is not part of the data used to calibrate the model. If I calibrate the model on the data from 1950-present and use it to predict what happened from 1900-1949, then this is still a prediction from a statistical perspective, even though it is predicting something that occurred before the calibration data. The key point is that you can't overfit "out of sample" data.

HTH
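A minimal Python sketch of the in-sample versus out-of-sample distinction follows. The series is synthetic (an assumed linear trend plus noise, not a temperature record), the "model" is just a straight-line fit standing in for any statistical model, and `fit_line` and `mean_abs_error` are hypothetical helpers written for this illustration.

```python
import random

def fit_line(xs, ys):
    """Ordinary least-squares slope and intercept for y = slope * x + intercept."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    slope = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys)) / sum(
        (x - mean_x) ** 2 for x in xs
    )
    return slope, mean_y - slope * mean_x

def mean_abs_error(slope, intercept, xs, ys):
    """Average absolute gap between the fitted line and the data."""
    return sum(abs(slope * x + intercept - y) for x, y in zip(xs, ys)) / len(xs)

# Synthetic series (an assumption, NOT observations).
random.seed(1)
years = list(range(1900, 2021))
values = [0.01 * (y - 1900) + random.gauss(0, 0.1) for y in years]

# Calibrate on 1950 onwards; hold out 1900-1949 entirely.
calib = [(y, v) for y, v in zip(years, values) if y >= 1950]
held_out = [(y, v) for y, v in zip(years, values) if y < 1950]

slope, intercept = fit_line(*zip(*calib))

in_sample_error = mean_abs_error(slope, intercept, *zip(*calib))
out_of_sample_error = mean_abs_error(slope, intercept, *zip(*held_out))
print(f"in-sample error (1950-2020):     {in_sample_error:.3f}")
print(f"out-of-sample error (1900-1949): {out_of_sample_error:.3f}")
```

The held-out years precede the calibration period, yet the fit never saw them, so the second error is a genuine test of the model rather than a measure of how well it was tuned.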

Models are always wrong. What matters is if they are skillful.

Emerging patterns of climate change

As you can see, in order to be skillful you must take what you have learned, apply it to the improving models, adjust them, and then run them again to see how they match up to unknown future events. Those future events, once known and giving you more knowledge, can then give you the needed information to again improve your models...which will also be wrong, but hopefully better. This continues as long as you are learning: because of skillful models, they will keep improving.

So this idea that you can't go back and see if the new improvements fit old data (hindcasts) is categorically false. Of course you need to go back and match it up to old data. That's how you determine if the new adjustments to the model are skillful.

In other words it's a process. It will never be perfect, but it should gradually trend towards the models becoming better over time.

Another example of "predicting the past" would be a geologist searching for gold. She might come up with a new hypothesis ("model") of how gold deposits are related to other geological features, and a prediction from that hypothesis is that gold would be found in a place nobody had ever thought to look before.

When you do dig there, and find gold, the fact that the gold has always been there (it was placed there in the distant past) does not mean that the hypothesis was not making a successful prediction. The hypothesis was not developed with knowledge of that particular gold deposit. Predictions from the hypothesis do not have to be "gold will eventually form here in the future" - it's still a prediction if you say "dig there, and I think you'll find pre-existing gold".

Concerning Nate Silver's 'The Signal and the Noise' book cited @989, I note Michael Mann has a number of serious criticisms of its coverage of climate modelling (although Mann does admit that his use of five-hundred-and-thirty-eight quoted as being 'The Number of Things Nate Silver Gets Wrong About Climate Change' in his title is “poetic license”).

@RedBaron #992

In many cases, the "old data" is also being refined.

In addition, the scientific and technical teams engaged in the care and feeding of Global Climate Models are highly skilled and dedicated professionals. In other words, they know their stuff better than do any of us.

John,

I have no doubt you are right, John. I have every respect for the mathematicians doing the models. They are remarkably close considering the difficulty of the task, and getting better as time goes on. I was simply addressing the claim that there was somehow some conspiracy or something where hindcasts were somehow wrong. They aren't, as long as the reason for them is to check the skillfulness of models as you improve them. Hindcasts are a valuable tool, used properly.

I repeat this due to many typographical errors:

It seems that many models give more or less accurate long term predictions of average temperature rises in the sea, land and atmosphere as well as ice and snow coverage. The major contributors are supposedly atmospheric levels of CO2 and water vapor and possibly methane and other gases, all assumed to induce positive feedback effects with some (presumably individual) delays. The effects of volcanic eruptions, cloud coverage, ocean currents, sun spots, wobbling/perturbation of the Earth's axis, distance from the sun, etc., may or may not be inputs or possibly outputs from some of these models.

Being a layman, all this suggests to me a complexity that makes it difficult for any model to simulate yearly variations in any historically known output of interest based on all (or almost all) historically known (measured) inputs. To me this suggests that a sufficiently sophisticated neural network could be trained to accurately simulate any climate effects (one or more chosen outputs) and causes (using the effects of several assumed driving forces as inputs). If such a model was implemented and sufficiently well trained (millions or billions of runs) it might reveal some surprising relationships that could explain some known short term anomalies, or it might even reverse some theories about cause and effect (e.g. by trying to exchange certain inputs and outputs and studying the results). If sufficiently accurate, such a model might even convince the sceptics.

All this is just a lead-up to my question: Has any such neural network already been implemented or contemplated, and if so what are the capabilities?

jchoelgaard, the main influence on year to year temperature is the ENSO cycle, which appears to be chaotic, and neural networks are no better than any other curve-fitting in predicting such processes. You have a limited number of inputs (measured values) and you know that imperfect measurement and subscale unknowns will invalidate the prediction eventually.

The situation is analogous to predicting mid summer day temperature 3 months out. You can state with great confidence that the temperature will likely be higher than on mid winter's day, because (like with increased GHG), there is more incoming radiation. However, exactly what that temperature will be is dependent on weather - an inherently chaotic process. Applying the ANN approach to climate modelling would face the same formidable challenges as applying it to a long term weather forecast.

You can however make a pretty good punt at what the long term average mid summer day temperature will be and climate model predictions are like that.

Question: Is a potential reason for three record hot years in a row the fact that aerosol production is peaking?

jchoelgaard @997.

The answer is evidently 'yes' as Knutti et al (2002) demonstrates. However, the literature seems to show neural network models being used mostly to analyse particular aspects of climate rather than modelling the global climate as a whole.

But as scaddenp @998 says, the main cause of historical global temperature fluctuations is ENSO. Add in a few other factors and a model can be built to fit certain historical data and, having been thus 'curve-fitted', used to calculate an output that can be tested against other 'non-curve-fitted' historical data, as this recent Tamino post demonstrates.