How reliable are climate models?

What the science says...

Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.

Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, one way or another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can't tell you what the temperature will be on a specific day; that's weather forecasting. Weather forecast models rarely extend even a fortnight ahead. Big difference. Climate trends deal with things such as temperature or sea-level changes over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system is heading.

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then either because we made observations or because evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test, and we can reasonably expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.

Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.
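To see why averaging over about 30 years smooths out single extreme events, here is a minimal Python sketch using made-up annual anomalies: a steady warming trend plus one freak year. The helper `running_mean` and all the numbers are hypothetical, chosen only for illustration.

```python
def running_mean(values, window=30):
    """Mean of each complete `window`-year block, sliding one year at a time."""
    if window > len(values):
        raise ValueError("series is shorter than the averaging window")
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]

# Hypothetical annual anomalies (deg C): a steady 0.02 deg C/yr trend ...
years = list(range(1950, 2020))
trend_only = [0.02 * (year - 1950) for year in years]
# ... plus one freak year sitting 3.0 deg C above the trend line.
anomalies = list(trend_only)
anomalies[years.index(1985)] += 3.0

climate = running_mean(anomalies)
baseline = running_mean(trend_only)
# Largest shift the freak year causes in any 30-year mean: 3.0 / 30 = 0.1 deg C.
largest_shift = max(c - b for c, b in zip(climate, baseline))
print(f"{largest_shift:.2f}")  # 0.10
```

Because each 30-year mean contains the freak year at most once, a 3.0 deg C spike moves it by only 0.1 deg C, while the underlying trend shows up in every window.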

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.
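Scoring a hindcast can be sketched in a few lines. This is a minimal illustration, assuming we already have a model's simulated annual temperatures and the observed record for the same past years. In practice the model run is driven only by past forcings, never by the temperatures it is being checked against. `hindcast_score` and the sample data are hypothetical.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x, mean_y = sum(xs) / n, sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    return sxy / sxx

def hindcast_score(years, observed, simulated):
    """Return (RMSE, simulated minus observed trend per decade) over a past period."""
    if not len(years) == len(observed) == len(simulated):
        raise ValueError("all series must cover the same years")
    rmse = (sum((s - o) ** 2 for s, o in zip(simulated, observed)) / len(years)) ** 0.5
    trend_gap = 10 * (ols_slope(years, simulated) - ols_slope(years, observed))
    return rmse, trend_gap

# Hypothetical data: "observations" with year-to-year wiggles, and a smooth model run.
years = list(range(1970, 2001))
observed = [0.018 * (y - 1970) + (0.1 if y % 2 else -0.1) for y in years]
simulated = [0.020 * (y - 1970) for y in years]
rmse, trend_gap = hindcast_score(years, observed, simulated)
print(f"RMSE {rmse:.3f} deg C, trend gap {trend_gap:+.3f} deg C/decade")
```

The RMSE says how closely individual years match; the trend gap says whether the model gets the direction and rate of change right, which is what climate models are built for.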

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate to explain temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."
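One simple way to ask whether a projected warming trend is "indistinguishable" from the observed one is to check whether the two trends differ by less than their combined uncertainty. The sketch below assumes independent, normally distributed trend errors and ordinary least-squares trends that ignore autocorrelation (which understates the uncertainty for real temperature series). It is an illustration, not necessarily the exact procedure Hausfather et al. used; the function names and numbers are hypothetical.

```python
import math

def ols_trend_with_se(xs, ys):
    """Least-squares slope and its standard error (assumes uncorrelated residuals)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    variance = sum(r * r for r in residuals) / (n - 2)
    return slope, math.sqrt(variance / sxx)

def trends_consistent(trend_a, se_a, trend_b, se_b, z=1.96):
    """True if the trends differ by no more than z combined standard errors."""
    return abs(trend_a - trend_b) <= z * math.sqrt(se_a ** 2 + se_b ** 2)

# Hypothetical trends in deg C per decade, each with a 1-sigma standard error.
observed = (0.18, 0.02)
print(trends_consistent(0.20, 0.03, *observed))  # True: close to observations
print(trends_consistent(0.35, 0.03, *observed))  # False: running far too hot
```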

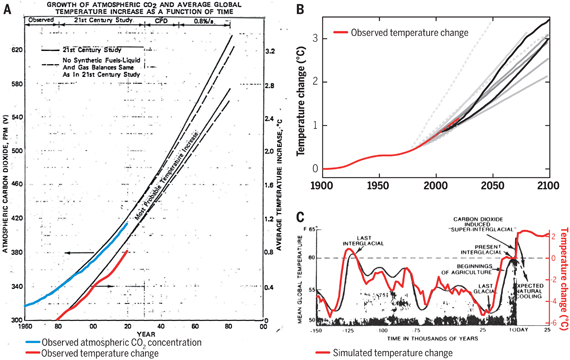

Talking of empirical evidence, you may be surprised to learn that the fossil fuel giant Exxon's own scientists knew all about climate change all along. A recent study of the company's own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We published a blog post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worth quoting in part:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

Arguments

One Planet Only Forever @1325,

Spencer is not the first to waste his time searching for that mythical archipelago known as The Urban Heat Islands. Of course these explorers are not trying to show such islands exist (they do) but to show the rate of AGW is being exaggerated because of these islands. That's where their myth-making kicks off.

This particular blog of Spencer's is a bit odd on a number of counts. He tells us he is correlating 'temperature' against 'level of urbanisation' using temperature data of his own derivation and paired urban/rural sites, all restricted to summer months. Yet the data shown in his Fig 1 seem to show temperatures mainly for a set of pretty-much fixed levels of ΔUrbanisation (no more than 5% ΔUrbanisation over a 10-mile square area), not for significantly changing levels of urbanisation. The data showing these urban records warming faster under AGW than nearby rural stations, and thus the actual variation in warming between his paired rural/urban stations, are not presented.

And note his "sanity check" appears to confirm that "homogenization" provides entirely expected results, so why is he using his own temperature derivations?

And I'm also not sure his analysis isn't hiding some embarrassing findings. According to the GISTEMP station data, the urban Calgary Int Airport & rural Red Deer have a lot less summer warming than the urban Edmonton Int Airport & rural Cold Lake. The data doesn't cover the full period, but it is rural Cold Lake that shows the most warming of the four.

MA Roger @1326,

Thank you for adding technical details to my 'general evaluation' that Spencer is not developing and sharing genuine improvements of awareness and understanding.

The following part of the Conclusion that I quoted @1325 is a very bizarre thing to be stated by someone supposedly knowledgeable and trying to help others better understand what is going on.

"As it is, there is evidence (e.g. here) the climate models used to guide policy produce more warming than observed, especially in the summer when excess heat is of concern. If that observed warming is even less than being reported, then the climate models become increasingly irrelevant to energy policy decisions."

Spencer is silent about aspects of the models that underestimate the warming (those aspects probably deserve as much, and potentially more, consideration). His narrow focus actually makes his analysis 'irrelevant to energy policy decisions'.

Although Spencer presents his analysis as a reason to reduce the effort to end fossil fuel use, the point remains that understanding the overall global warming and its resulting global consequences is what Policy Development by any government (at any level) requires.

I have work experience with the development of large complex projects. Planning is done at various stages to establish an expected duration and cost to complete the project. The resulting plans often trigger game-playing by project proponents, especially if the plan indicates that completion will be too late or cost too much for the project to be pursued any further. The game-players try to focus on what they believe are long duration aspects that should be able to be done quicker or higher cost items that should be able to be less expensive. What people like me do, to be helpful to all parties involved, is agree with the narrow-focused project proponents as long as they agree to put the extra effort into re-evaluating every aspect of the project plan, especially the items that could take much longer or cost more than estimated.

People like Spencer are like those project proponents. They seek out and focus on the bits they think help justify their preferred belief rather than increasing their overall understanding to make a more knowledgeable decision.

Discovered inaccuracies in the models may be important to correct. But for Energy Policy development the most important consideration is the overall understanding of the model results, not a focus on selected bits of model results.

That is one weird analysis presented by Spencer. I read the blog post OPOF pointed to, and the one linked in it that points to an earlier similar analysis.

I really cannot figure out what he has done. Figure 1 refers to "2-Station Temp Diff.", but there is no indication of how many stations are included in the dataset, or exactly how he paired them up. Is each station only paired to one other station, or is there a point generated for each "station 2" that is within a certain distance? He talks about a 21x21km area centered on each station - but he also mentioned a 150km distance limit in pairing stations. Not at all clear.

There are also some really wonky statements. He talks about "operational hourly (or 3-hourly) observations made to support aviation at airports" and claims "...better instrumentation and maintenance for aviation safety support." He clearly has no understanding of the history of weather observations in Canada. Aviation weather historically was collected by Transport Canada (a federal government department), and indeed the Meteorological Service of Canada (as it is now known) was part of that department before the creation of Environment Canada in the early 1970s. Even though MSC was then in a different department, it still looked after the installation, calibration, and maintenance of the "Transport Canada" aviation weather instrumentation. This even continued (under contract) for a good number of years after the air services were moved out into the newly-created private corporation Nav Canada in 1996. The standards and instrumentation at aviation weather stations were no different from those at any other station operated by MSC. Now, Nav Canada buys and maintains its own instruments, and MSC has ended up going back to many of these locations to install its own instrumentation because the Nav Canada "aviation" requirements do not include long-term climate monitoring. (Nav Canada data still funnels into the MSC systems, though.)

What has changed over time is levels of automation. Originally, human observers recorded data and sent it into central collection points. Now, nearly all observations are made by fully-automated systems. A variety of automatic station types have existed over the decades, and there have been changes in instrumentation.

As for the 3-hour observing frequency? Not an aviation requirement - but rather the standard synoptic reporting interval used by the World Meteorological Organization.

So, reading Spencer's analysis raises large numbers of questions:

Without this information, it is very difficult to check the validity of the comparisons he is making. Figure 1 has a lot of points - but later in the post he mentions only having four stations in "SE Alberta" (Edmonton, Red Deer, Calgary, and Cold Lake). Does figure 1 include many "within 150km" stations paired to each individual station in the list? Does this mean that an area containing, say, 5 stations yields 5×4/2 = 10 "pairs"? That would be one way of getting a lot of points - but they would not be independent. We are left guessing.
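The non-independence problem is easy to see with a quick count. Assuming (Spencer does not say) that every station is paired with every other station within range, n stations give n(n-1)/2 pairs, and each station turns up in n-1 of them. The station labels below are placeholders.

```python
from itertools import combinations
from math import comb

stations = ["A", "B", "C", "D", "E"]  # placeholder station IDs within pairing range
pairs = list(combinations(stations, 2))

# Each station appears in several pairs, so the pair differences are not independent.
appearances = {s: sum(s in pair for pair in pairs) for s in stations}

print(len(pairs), comb(len(stations), 2))  # 10 10
print(appearances)
```

Any quirk at one station (a sensor change, a site move) therefore shows up in four of the ten points at once.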

Spencer links to this data source for weather data. I managed to search for stations in "Alberta", and found 177 stations active on January 1, 2021. The list contains six stations with "Edmonton" in the name. Which one is Spencer's "Edmonton" is important. It is almost certainly the International airport south of the city (often jokingly called "Leduc International Airport" because of its distance from the city proper), but the downtown Municipal airport is also on the NOAA/NCEI list. The difference in urbanization is huge - the Leduc one has some industrial areas to the east, but as you can see on this Google Earth image, it is largely surrounded by rural land. The downtown airport would be a much better "urban" location. The Leduc location only has "urbanization" to the east - downwind of the predominant west-east wind and weather system movements.

My number of 177 Alberta stations is an overestimate, as the NOAA/NCEI web page treats "Alberta" as a rectangular block that catches part of SE British Columbia. I also only grabbed recently-active stations - the number available over time changes quite a bit. The lack of clarity from Spencer about station selection is disturbing.

As MAR has pointed out, Spencer's four "SE Alberta" stations of Edmonton, Red Deer, Calgary, and Cold Lake make for an odd mix. The first three are all in a 300km N-S line in the middle of the province. Edmonton is 250km from the mountains; Red Deer about 125km, and Calgary about 65km. (For a while, I had an office window in Calgary where I could look out at the snow-capped mountains.) Cold Lake is about 250km NE of Edmonton, near the Saskatchewan border. The differences in climate are strong. Spencer dismisses these factors as unimportant.

Spencer's Table 1 (cities across Canada) suffers from the same problems: not clearly identifying exactly which station he is examining. At least here he says "Edmonton Intl. Arpt".

He does not clearly explain his method of urban de-trending. I followed his link to the earlier blog post that gives more information, but it is not all that helpful. As far as I can tell, he's used figure 4 in that blog post to determine a "temperature difference vs urbanization difference" relationship and then used that linear slope to "correct" trends at individual stations based on the urbanization coefficients he obtained from a European Landsat analysis for the three years he used in his analysis (1990, 2000, and 2014). Figure 4 is a shotgun blast, and he provides no justification for assuming that an urbanization change from 0 to 10% has the same effect as a change from 80 to 90%. (Such an assumption appears to be implicit in his methodology.) In fact, many studies of the urban heat island effect have shown log-linear relationships between UHI and population or other indicators (e.g., Oke, 1973). Spencer's figure 4 in that second blog post is also for "United States east of 95W". He offers no justification as to why that analysis (with all its weaknesses) would be applicable in a very different climate zone such as the Canadian prairies (Alberta).
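The difference between the two assumptions can be sketched numerically. This is a minimal illustration with made-up coefficients: Oke's relationship was with population, so applying a log form to an urbanisation fraction here is purely illustrative, and `linear_uhi`, `log_uhi` and the `floor` value are hypothetical.

```python
import math

def linear_uhi(urban_fraction, slope=1.0):
    """Urban warming under a linear assumption: every 10% of urbanisation counts equally."""
    return slope * urban_fraction

def log_uhi(urban_fraction, coeff=1.0, floor=0.01):
    """Log-linear alternative; `floor` stands in for a nearly rural site to avoid log(0)."""
    return coeff * math.log10(max(urban_fraction, floor))

for start, end in [(0.0, 0.1), (0.8, 0.9)]:
    linear_change = linear_uhi(end) - linear_uhi(start)
    log_change = log_uhi(end) - log_uhi(start)
    print(f"{start:.0%} -> {end:.0%}: linear {linear_change:.3f}, log {log_change:.3f}")
```

Under the linear form both changes count the same; under the log form the first 10% of urbanisation counts roughly twenty times as much as the step from 80% to 90%. A single linear slope applied to every station would mis-correct one end of the range or the other.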

His list of 10 Canadian cities also has some wildly different climate zones in it.

Spencer should be embarrassed by this sort of analysis, but I doubt he cares. He has the "result" he wants.

Bob Loblaw @1328,

Thanks for providing even more evidence and reasons to doubt what Spencer produces and the claims he makes up.

I share the understanding that Spencer does not care about how flawed his analysis is.

I would add that it appears to be highly likely that, long ago, he predetermined that his focus would be on finding ways to claim that less aggressive leadership action should be taken to end fossil fuel use. Spencer's motivating requirement appears to be having the analysis 'results' fit that narrative.

Can anyone comment (in terms that an intelligent but relatively novice reader might understand) on Nic Lewis's 'rebuttal' (specifically his 3 claims and conclusion) of Steven Sherwood et al.'s findings on effective sensitivity and its uncertainty?

Sherwood: https://agupubs.onlinelibrary.wiley.com/doi/full/10.1029/2019RG000678

Lewis: https://link.springer.com/article/10.1007/s00382-022-06468-x

[BL] Links activated...

...but, you have asked the same question on another thread, and have already received an answer there.

Please do not post the same information on multiple threads. Pick one, and stick to it, so all responses can be seen together. New comments are always visible - regardless of the thread - from the Comments link immediately under the masthead.

Anyone reading this comment in the "Models are unreliable" thread should follow the link to the comment on the "How sensitive is our climate?" thread and respond there.

Please note: the basic version of this rebuttal was updated on May 26, 2023 and now includes an "at a glance" section at the top. To learn more about these updates and how you can help with evaluating their effectiveness, please check out the accompanying blog post @ https://sks.to/at-a-glance

Thanks - the Skeptical Science Team.

If a hypothesis should be considered proven it must stand up against falsification attempts. If you take a climate model as an example, and remove all CO2 dependency, and adjust all other model parameters, and you can train the model to fit historical data AND it still makes a decent prediction of future climate, it is then hard to use that model to claim that CO2 is what drives global warming. I have done a little bit of searching but not found any such falsification attempt.

For example, since early human civilisation about a third of the world's forests have been cut down and farmland has been drained. This inevitably makes the soil drier and you get fewer daytime cumulus clouds. These clouds reflect sunlight, but disappear at night allowing long-wave CO2-radiation to escape. Most of this deforestation and drainage has happened in sync with CO2 emissions since the beginning of the industrial revolution. If you can adjust/train the climate model by tuning all other model parameters (I'm sure there are hundreds in a climate model) relating to deforestation, soil moisture, evapotranspiration, cloud formation, land use, etc., and the model 1) follows the observed climate and 2) makes decent predictions into the future (relative to the training window), then the hypothesis that CO2 is what drives climate change can't be claimed to be true, based on that model.

I have done a bit of model fitting on systems way less complex than the global climate. If the model contains more than, say, five model parameters that need to be tuned to make the model fit historical data, you really start chasing your own tail. The problem becomes "ill-conditioned" and several combinations of model parameters can give a good fit and make decent predictions. In such a situation, you can choose to eliminate some parameters or variables, or impose some known or suspected correlation or causality between them, to simplify the model. A model should be kept as simple as possible.
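The ill-conditioning described here is easy to reproduce for a purely statistical fit. This minimal NumPy sketch (synthetic data, hypothetical variable names) uses two almost identical predictors, so very different coefficient combinations fit nearly equally well. As later replies in this thread point out, physics-based climate models are not built this way, so this illustrates the statistical problem only.

```python
import numpy as np

rng = np.random.default_rng(0)
t = np.linspace(0.0, 1.0, 50)
x1 = t
x2 = t + 1e-3 * rng.standard_normal(t.size)      # almost an exact copy of x1
X = np.column_stack([x1, x2])
y = x1 + x2 + 0.01 * rng.standard_normal(t.size)  # generated with coefficients 1 and 1

coef, *_ = np.linalg.lstsq(X, y, rcond=None)
rss_best = float(np.sum((X @ coef - y) ** 2))

# Shift weight from one predictor to the other: a very different parameter set.
alt = coef + np.array([2.0, -2.0])
rss_alt = float(np.sum((X @ alt - y) ** 2))

print(f"condition number: {np.linalg.cond(X):.0f}")
print(f"best-fit coefficients {coef.round(2)}, RSS {rss_best:.5f}")
print(f"alternative coefficients {alt.round(2)}, RSS {rss_alt:.5f}")
```

The large condition number is the numerical signature of the problem: the data cannot tell the two parameters apart, only their sum.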

A climate model probably contains hundreds of model parameters. Can you adjust them so that you get a good fit with historical data, and good predictive capability at a significantly lower, or even completely excluded CO2-dependency?

[PS] Please have a decent read of the IPCC summaries of climate modelling, especially the earlier reports 3 and 4. Climate models are physics-based models, not statistical models. You can't "fit" parameters like you do in a statistical model. Parameterization of variables is extremely limited and not tuned to, say, global temperature. The old FAQ on climate models at https://www.realclimate.org/index.php/archives/2008/11/faq-on-climate-models/ is very useful in this. Models do include the land-use change that you mention.

As to falsification, climate science makes a very large number of robust predictions like ocean heat content, changes to outgoing and incoming IR, response to volcanic eruptions etc. Observations that differ from these would indeed falsify the models (though not necessarily the science).

You can still download a cut-down version of the GISS model that will run on a desktop: https://edgcm.columbia.edu/ Try it yourself. Good luck getting it to simulate climate with CO2 dependency set to zero.

Syme_Minitrue @ 1332

That is an incorrect way to prove the null hypothesis or to demonstrate falsification. Climate models are not simple empirical models. They contain a mix of fundamental principles, including the laws of physics, as well as tunable parameters for uncertain factors. One cannot simply remove the radiative effect of CO2, which follows from physics, tune the model to an unconstrained set of empirical variables, and then, if it can be made to fit, conclude that the laws of physics are invalid.

Syme_Minitrue @ 1332:

Your comment contains several misunderstandings of how models are developed and tested, and how science is evaluated.

To begin, you start with the phrase "If a hypothesis should be considered proven..." Hypotheses are not proven: they are supported by empirical evidence (or not). And there is lots and lots of empirical evidence that climate models get a lot of things right. They are not "claimed to be true" (another phrase you use), but the role of CO2 in recent warming is strongly supported.

In your second and third paragraphs, you present a number of "alternative explanations" that you think need to be considered. Rest assured that none of what you present is unknown to climate science, and these possible explanations have been considered. Some of them do have effects, but none provide an explanation for recent warming.

In your discussion of "parameters", you largely confuse the characteristics of purely-statistical models with the characteristics of models that are largely based on physics. For example, if you were to consider Newton's law of gravity, and wanted to use it to model the gravitational pull between two planets, you might think there are four "parameters" involved: the mass of planet A, the mass of planet B, the distance between them, and the gravitational constant. None of the four are "tunable parameters", though. Each of the four is a physical property that can be determined independently. You can't change the mass of planet A that you used in calculating the gravitational pull with planet B, and say that planet A has a different mass when calculating the attraction with planet C.
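The Earth-Moon case makes the point concrete. In the minimal Python sketch below, every input is an independently measured quantity (standard approximate values), so there is nothing to "tune"; the function name is just for illustration.

```python
G = 6.674e-11                  # gravitational constant, m^3 kg^-1 s^-2
M_EARTH = 5.972e24             # kg
M_MOON = 7.342e22              # kg
EARTH_MOON_DISTANCE = 3.844e8  # mean centre-to-centre distance, m

def gravitational_force(m1, m2, r, g=G):
    """Newton's law of gravitation: F = G * m1 * m2 / r**2, in newtons."""
    return g * m1 * m2 / r ** 2

force = gravitational_force(M_EARTH, M_MOON, EARTH_MOON_DISTANCE)
print(f"{force:.3e} N")  # about 1.98e20 N
```

Changing the Moon's mass to improve agreement in this one calculation would break every other calculation that uses the same mass, which is exactly why physical constants are not free fitting knobs.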

Likewise, many of the values used in climate model equations have independently-determined values (with error bars). Solar irradiance does not change on Tuesday because it fits better - it only changes when our measurements of solar irradiance show it is changing, or (for historical data prior to direct measurement) some other factor has changed that we know is a reliable proxy indicator for past solar irradiance. We can't make forests appear and disappear on an annual basis to "fit" the model. We can't say vegetation transpires this week and not next to "fit" the model (although we can say transpiration varies according to known factors that affect it, such as temperature, leaf area, soil moisture, etc.)

And climate models, like real climate, involve a lot of interconnected variables. "Tuning" in a non-physical way to fit one output variable (e.g. temperature) will also affect other output variables (e.g. precipitation). You can't just stick in whatever number you want - you need to stick with known values (which will have uncertainty) and work within the known measured ranges.

Climate models do have "parameterizations" that represent statistical fits for some processes - especially at the sub-grid scale. But again, these need to be physically reasonable. And they are often based on and compared to more physically-based models that include finer detail (and have evidence to support them). This is often done for computational efficiency - full climate models contain too much to be able to include "my back yard" level of detail.

You conclude with the question "Can you adjust them so that you get a good fit with historical data, and good predictive capability at a significantly lower, or even completely excluded CO2-dependency?" The answer to that is a resounding No. In the 2021 IPCC summary for policy makers, figure SPM1 includes a graph of models run with and without the anthropogenic factors. Here is that figure:

Note that "skeptics" publish papers from time to time purporting to explain recent temperature trends using factors other than CO2. These papers usually suffer from major weaknesses. I reviewed one of them a couple of years ago. It was a badly flawed paper. In general, the climate science community agrees that recent warming trends cannot be explained without including the role of CO2.

Syme_Minitrue @1332,

You suggest CO2 can be extracted from climate models and it would be "then hard to use that model to claim that CO2 is what drives global warming." You then add "I have done a little bit of searching but not found any such falsification attempt."

I would suggest it is your searches that are failing, as there are plenty of "such falsification attempt(s)." They do not have the resources behind them to run detailed models like those the IPCC assesses today. But back in the day the IPCC didn't have such detailed models, yet still found CO2 driving climate change.

These 'attempts' do find support in some quarters, and if they had the slightest merit they would drive additional research. But they have all, so far, proved delusional, usually the work of a known bunch of climate deniers with nothing better to do.

Such clownish work, or perhaps clownish presentation of work, has been getting grander but less frequent through the years. An exemplar is perhaps Soon et al (2023) 'The Detection and Attribution of Northern Hemisphere Land Surface Warming (1850–2018) in Terms of Human and Natural Factors: Challenges of Inadequate Data'. Within the long list of authors I note Harde, Humlum, Legates, Moore and Scafetta who are all well known for these sorts of papers usually published in journals of little repute. If such work was onto something, it would be followed up by further work. That is how science is supposed to work.

Instead all we see is the same old stuff recycled again and again by the same old authors and being shown to be wrong again and again.

A further follow-up to Syme_Minitrue's post @ 1332, where (s)he finishes with the statement:

Let's say we wanted to run a climate model over the historical period (the last century) in a manner that "excluded CO2-dependency". How on earth (pun intended) would we do that, with a physically-based climate model?

In comment 1334, I linked to a review I did of a paper that claimed to be able to fit recent temperature trends with a model that showed a small CO2 effect. I said it was badly flawed.

Physically-based models in climate science generally get "fit" by trying to get the physics right.
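A toy calculation shows why "excluding CO2 dependency" is not a free knob in a physically-based model. In this minimal one-box energy-balance sketch, CO2 enters only through its radiative forcing, using the widely used simplified expression 5.35 ln(C/C0) W m^-2 from Myhre et al. (1998). The sensitivity parameter, heat capacity and CO2 path are illustrative assumptions, and other forcings (aerosols, solar, volcanic) are left out; real climate models compute radiative transfer explicitly rather than use this formula.

```python
import math

CO2_PREINDUSTRIAL = 280.0  # ppm
LAMBDA = 0.8               # assumed sensitivity parameter, K per (W m^-2)
HEAT_CAPACITY = 8.0        # assumed effective heat capacity, W yr m^-2 K^-1

def co2_forcing(ppm):
    """Simplified CO2 radiative forcing, 5.35 * ln(C / C0), in W m^-2."""
    return 5.35 * math.log(ppm / CO2_PREINDUSTRIAL)

def hypothetical_co2(year):
    """Illustrative smooth rise from 280 ppm (1850) to 410 ppm (2020); not observed data."""
    frac = (year - 1850) / 170
    return CO2_PREINDUSTRIAL + 130.0 * frac ** 3

def run_toy_model(co2_path, start=1850, end=2020):
    """One-box energy balance, C dT/dt = F - T / lambda, stepped yearly (forward Euler)."""
    temp = 0.0
    for year in range(start, end):
        forcing = co2_forcing(co2_path(year))
        temp += (forcing - temp / LAMBDA) / HEAT_CAPACITY
    return temp

with_co2 = run_toy_model(hypothetical_co2)
without_co2 = run_toy_model(lambda year: CO2_PREINDUSTRIAL)  # concentration held fixed
print(f"with CO2 rise: {with_co2:.2f} K, CO2 held at 280 ppm: {without_co2:.2f} K")
```

Holding CO2 at its pre-industrial value removes the forcing, and the toy model then produces no warming at all. In a model built on physics, "excluding" CO2 means switching off its radiative effect, not re-fitting some other parameter.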

Syme_Minitrue says: "and you can train the model to fit historical data "

This is a link to a unified model that gets the physics right. The data is fit according to a forcing with parameters that correspond to measured values, and cross-validated against test regions with a unique fingerprint:

https://geoenergymath.com/2024/11/10/lunar-torque-controls-all/

The residual can then be evaluated for a climate change trend. Ideally, this is the way that climate change needs to be estimated. All conflating factors should be individually discriminated before the measure of interest can be isolated. That's the way it's done in other quantitative disciplines.
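A generic version of that idea (not the linked author's model) can be sketched with ordinary least squares: fit the trend and a known factor together, remove the fitted factor, and check the trend of what remains. The cyclic "factor", its period and all the numbers below are synthetic and hypothetical.

```python
import numpy as np

rng = np.random.default_rng(1)
years = np.arange(1950, 2021, dtype=float)
t = years - years.mean()                         # centred time, in years
factor = np.sin(2 * np.pi * years / 11.0)        # hypothetical known cyclic driver
true_trend = 0.02                                # hypothetical, deg C per year
series = true_trend * t + 0.15 * factor + 0.05 * rng.standard_normal(years.size)

# Fit intercept, trend and factor together so the factor cannot absorb the trend.
design = np.column_stack([np.ones_like(t), t, factor])
(intercept, trend, factor_coef), *_ = np.linalg.lstsq(design, series, rcond=None)

# Remove the fitted factor, then evaluate the trend of what remains.
residual = series - factor_coef * factor
residual_trend = np.polyfit(t, residual, 1)[0]
print(f"fitted trend {trend:.4f}, residual trend {residual_trend:.4f} deg C/yr")
```

Fitting the factor and the trend jointly matters: a factor estimated on its own can soak up part of the trend if the two are correlated. With the joint fit, the trend of the residual equals the fitted trend coefficient.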