How reliable are climate models?

What the science says...

Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.

Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, one way and another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Weather forecast-models rarely extend to even a fortnight ahead. Big difference. Climate trends deal with things such as temperature or sea-level changes, over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system's heading.

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then either because we made observations or since evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test. We can therefore expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.


Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate to explain temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."

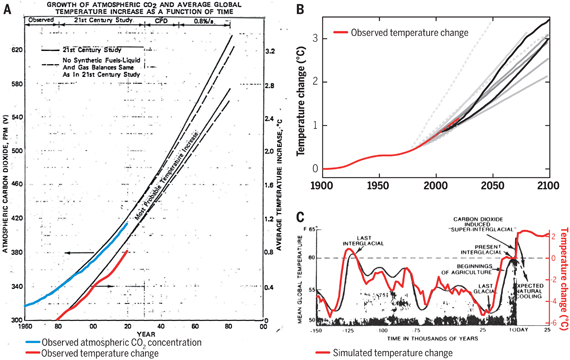

Talking of empirical evidence, you may be surprised to know that scientists at the fossil fuel giant Exxon knew all about climate change all along. A recent study of their own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We had a blog-post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worthy of part-quotation:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

I'm still not sure what "increasing importance of precipitation data" means in the context of general circulation modeling. Is it not important enough right now? Why should it become more important?

Well, to the extent that certain specific models can be improved I think you'll see a decrease in public misunderstanding and "denialism", and an increased acceptance of the science.

protagorias @802:

First, your claim is false. There has been a remarkable and continuous improvement of the models over time. Going back to Hansen 88, models did not even include aerosols. By the TAR, they universally did, but did not show ENSO-like fluctuations. By the AR4, some did, but others did not. Now, with AR5, they all do. None of this has had any noticeable effect on public non-acceptance of climate science - which is not driven by the science, but by political ideology.

Second, no scientist anywhere should alter how they do science to "increase acceptance of the science". Doing so is just modifying science to suit political ends by a different name. Indeed, that is exactly what you are after, a fact given away by your desire "that certain specific models" be "improved", rather than that all of them be improved by standard methods. By "improved" you mean no more than adjusted to give the results that you want.

Tom Curtis,

So what is the purpose of the Coupled Model Intercomparison Project as mentioned in #800, if all the models are homogenized as you appear to suggest? According to CMIP5, one of the goals is "determining why similarly forced models produce a range of responses". Clearly, not all models are equally valid in their predictions. I'm not suggesting that they don't all improve over time, but merely that certain improvements may more accurately reflect appropriate environmental factors. For example, heat in the oceans may be an appropriate factor.

Furthermore, what is the purpose of discussing consensus itself as a specific factor if not to encourage acceptance of the facts, if non-acceptance (which I agree is motivated by ideology) - leads to unhelpful inaction on the part of the public?

protagorias

"Well, to the extent that certain specific models can be improved I think you'll see a decrease in public misunderstanding and "denialism", and an increased acceptance of the science."

With respect, I think that is utterly naive. Most misunderstanding and denialism has diddly-squat to do with the minutiae of the accuracy of specific models. 99.999% of the Earth's population have no idea how 'the models' differ.

Glenn Tamblyn

You may be right.

Be that as it may, I will continue to maintain that increased accuracy in measurement is one of the primary drivers of model improvement.

[PS] Then maybe you would like to provide evidence to support that assertion.

Only one quick example

International Journal of Climatology Vol 25, Issue 15 High Resolution Climate Surfaces for Global Land Areas

[PS] Except that it is not. What is discussed is not input into any GCM.

Yet that is precisely what is relevant. Why else would climate modellers be asking for better software from which to better model climate change, if they didn't have, or at least recognize, the need for better input?

Please note this recent article in the Insurance Journal. Climate Change Modelling on Cusp of Paradigm Shift

Protagorias, I suggest you actually read the articles you link to. That insurance one is about insurance models. It has nothing to do with GCMs.

DSL,

You're right. I hadn't even noticed that! I tend to make wild leaps sometimes; I apologize for that.

protagorias @various, of course climate modellers want to make their models more accurate. The problem is that you and they have different conceptions as to what is involved. There are several key issues on this.

First, short-term variations in climate are chaotic. This is best illustrated by the essentially random pattern of ENSO fluctuations - one that means that though a climate model may model such fluctuations, the probability that it models the timing and strengths of particular El Niños and La Niñas is minimal. Consequently, accuracy in a climate model does not mean exactly mimicking the year-to-year variation in temperature. Strictly, it means that the statistics of multiple runs of the model match the statistics of multiple runs of the Earth climate system. Unfortunately, the universe has not been generous enough to give us multiple runs of the Earth climate system. We have to settle for just one, which may be statistically unusual relative to a hypothetical multi-system mean. That means in turn that altering a model to better fit a trend, particularly a short-term trend, may in fact make it less accurate. The problem is accentuated in that for most models we only have a very few runs (and for none do we have sufficient runs to properly quantify ensemble means for that model). Therefore the model run you are altering may also be statistically unusual. Indeed, raw statistics suggests that the Earth's realized climate history must be statistically unusual in some way relative to a hypothetical system ensemble mean (but hopefully not too much), and the same holds for any realized run of a given model relative to its hypothetical model mean.

Given this situation, the way you make models better is to compare the Earth's realized climate history to the multi-model ensemble mean, but assume only that the realized history is close to statistically normal. You do not sweat small differences, because small differences are as likely to be statistical aberrations as model errors. Instead you progressively improve the match between model physics and real-world physics, and map which features of models lead to which differences with reality, so that as you get more data you get a better idea of what needs changing.
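The point about ensemble statistics can be made concrete with a toy calculation. The sketch below is not a climate model: it assumes a fixed warming trend plus independent random year-to-year noise as a crude stand-in for chaotic variability, and every number in it (`TRUE_TREND`, `NOISE_SD`, `N_RUNS`, `N_YEARS`) is an illustrative assumption rather than a value from any real model. It fits a trend to each synthetic run and compares how widely the fitted 10-year and 30-year trends scatter across the ensemble.

```python
import random
import statistics

# All values below are illustrative assumptions, not taken from any real model.
TRUE_TREND = 0.02   # assumed forced trend, degrees per year
NOISE_SD = 0.15     # assumed year-to-year variability, degrees
N_RUNS = 200        # number of synthetic "model runs"
N_YEARS = 30        # length of each run, years


def ols_slope(values):
    """Ordinary least-squares slope of values against 0, 1, 2, ..."""
    n = len(values)
    t_mean = (n - 1) / 2
    v_mean = sum(values) / n
    num = sum((t - t_mean) * (v - v_mean) for t, v in enumerate(values))
    den = sum((t - t_mean) ** 2 for t in range(n))
    return num / den


def simulate_run(rng):
    """One synthetic run: the same forced trend plus its own random noise."""
    return [TRUE_TREND * year + rng.gauss(0.0, NOISE_SD) for year in range(N_YEARS)]


rng = random.Random(42)  # fixed seed so the sketch is repeatable
runs = [simulate_run(rng) for _ in range(N_RUNS)]

trends_10yr = [ols_slope(run[:10]) for run in runs]
trends_30yr = [ols_slope(run) for run in runs]

spread_10yr = statistics.pstdev(trends_10yr)
spread_30yr = statistics.pstdev(trends_30yr)
ensemble_mean_trend = statistics.mean(trends_30yr)

print(f"spread of 10-year trends across runs: {spread_10yr:.4f} per year")
print(f"spread of 30-year trends across runs: {spread_30yr:.4f} per year")
print(f"ensemble-mean 30-year trend: {ensemble_mean_trend:.4f} (assumed truth {TRUE_TREND})")
```

Every run here shares exactly the same underlying trend, yet individual short-term trends differ a good deal from one run to the next. Tuning a model to match one realized short-term trend would, in this toy setting, mostly mean fitting noise.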

Ideally we would have research programs in which this was done independently for each model. That, however, would require research budgets sufficient to allow each model to be run multiple times (around 100) per year, i.e., it would require a tenfold increase in funding (or thereabouts). It would also require persuading the modellers that their best gain in accuracy would be in getting better model statistics rather than using that extra computer power to get better resolution. At the moment they think otherwise, and they are far better informed on the topic than you or I, so I would not try to dissuade them. As computer time rises with the fourth power of resolution, however, eventually the greater gain will be found with better ensemble statistics.

Finally, in this I have glossed over the other big problem climate modellers face - the climate is very complex. Most criticisms of models focus entirely on temperature, often just GMST. However, an alteration that improves predictions of temperature may make predictions of precipitation, or wind speeds, or any of a large number of other variables, worse. It then becomes unclear what is, or is not, an improvement. The solution is the same as the solution for the chaotic nature of weather. However, these two factors combined mean that one sure way to end the progressive improvement of climate models is to start chasing a close match to GMST trends in the interests of "accuracy". Such improvements will happen as a result of the current program, and are desirable - but chasing them directly means either tracking spurious short-term trends, or introducing fudges that will worsen performance in other areas.
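The trade-off in that last sentence is simple arithmetic. As a rough sketch, taking the fourth-power scaling stated above at face value (the function names are hypothetical, chosen only for this illustration):

```python
def relative_cost(resolution_factor: float, exponent: float = 4.0) -> float:
    """Compute cost multiplier for refining resolution by resolution_factor,
    assuming cost scales as resolution ** exponent (fourth power, per the comment)."""
    return resolution_factor ** exponent


def affordable_resolution_factor(compute_factor: float, exponent: float = 4.0) -> float:
    """Resolution gain that a given increase in compute can buy under the same scaling."""
    return compute_factor ** (1.0 / exponent)


print(relative_cost(2.0))                           # cost multiplier for doubling resolution
print(round(affordable_resolution_factor(10.0), 2)) # resolution gain from ten times the compute
```

Under that assumption, doubling resolution costs 16 times the computer time, while ten times the computer time buys less than a doubling of resolution. Spent instead on ensemble size at fixed resolution, the same tenfold increase buys ten times as many runs.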

Tom Curtis,

That's a lot to think about. We'll see what happens with improved computers, better instruments and measurements.

Responding to ryland's complaint (from an inappropriate thread) that models are wrong due to an error in computing insolation: Richard Telford in his blog Musings on Quantitative Paleoecology explained that it is a non-issue for global insolation (instead it is only a local issue), a non-issue for some models, a trivial issue for other models, and a small issue for a few models. In other words, what scaddenp and Kevin C already explained to ryland--but Richard has added a couple graphs.

Ask yourself what the purpose of climate models is. As I see it, the purpose is to predict the future of climate. However, the future of climate cannot be predicted because it is a nonlinear chaotic system. The idea of increased CO2 in the atmosphere causing increased temperature is a linear concept. Increasing the temperature causing sea level rise is a linear concept. In fact, increasing temperature may set off a series of events that leads you into an ice age. Can the models predict these occurrences? No, they cannot. In fact, the use of models has already proven to be ineffective for prediction of global temperatures. They are unable to deal with chaotic events such as El Niños, volcanic eruptions, etc.

In fact, we don't really know what the future will bring, and therefore it is easier to adapt to the change than to prepare for an event that is not likely to occur.

[TD] See the response to the myth "Climate is Chaotic and Cannot Be Predicted." After you read the Basic tabbed pane there, read the Intermediate tabbed pane. If you have questions or comments about chaos, please make them on that thread, not this one. Everybody who wants to respond to that particular aspect of Rhwool's comment please do so over there, not here. Other aspects of Rhwool's comment are legitimately responded to on this thread.

[TD] Rhwool, please read the original post at the top of this thread, which presents empirical evidence of the success of climate models. After you read the Basic tabbed pane, read the Intermediate one. Please restate your claims that climate models cannot predict, in specific, concrete ways that confront that empirical evidence. Vague, evidence-free, and especially evidence-contradictory, claims have no place in a scientific discussion and therefore no place on this Skeptical Science site.

I would have to say that I am quite an expert when it comes to modeling real-world phenomena. I use computers to model hydrology and for finite element analysis regularly at work. I've been doing this for 40 years. The two applications represent linear mathematics. The most important aspect of modeling is confidence in the model. Confidence is gained by checking the results against real-world applications. I get the luxury of testing this as soon as a month later, but normally within 6 months.

I know now the mathematics for the finite element analysis is rock solid. It's based on Hardy Cross's Method of Virtual Work. Basically just one equation. Hydrology is based on many equations. If someone else analyses the model it's normal to get results within 15 percent. This has been tested 1000's of times. Getting these results gives you high confidence.

Different results are due to initial conditions of the model, calculation interpretation, etc.

But alas, it's not so simple. As model complexity increases, the confidence level goes down. You get unexpected results. Small changes in the model seem to produce large changes in the results. It starts to behave nonlinearly.

The modeller has a preconceived notion of what to expect from the result. When the results are not what you think they should be, you will test this by altering the conditions. This is done in all modeling. When they ran the climate models to test against the Pinatubo volcano, I guarantee you they massaged the model for quite a few iterations to achieve this result. In reality this gives you a better understanding of how the model works.

One argument you hear is GIGO. It's my belief that this is not accurate and is a bad argument. Believe me...the modeller spends a large amount of time getting all the parameters as precise as he can to ensure the best result.

These are my reasons for believing that the climate models cannot be trusted:

1. The results of all the models indicate a wide spread. The IPCC shows predicted temperature errors in the +/- 75 percent range. This would yield a low confidence. If I got results in my work with that spread, I would trash the result and use another method. In fact it would be nearly impossible to design anything based on that result.

2. The model has not been tested against enough real-world events to judge the reliability of the model. It takes 1000s of tests to ensure model reliability.

3. The nonlinearity significantly complicates the model performance.

4. Model complexity increases errors through unexpected results.

Rhoowl... Have you actually tried engaging with researchers who are actively working on climate models?

Hydrology is basically a micro climate model....you go through very similar steps to model the system....you have to use storm data to calculate rainfall. Break them into isohyetals. Quantify drainage areas and land parameters. Calculate stage-storage-discharge relationships. Understand how the fluid mechanics affects your models.

In reality, the steps you go through in the analysis aren't any different from doing finite element analysis. Even though hydrology isn't anything like finite element analysis in theory....

I have worked mostly with other engineers..... I have a degree in civil engineering with an environmental emphasis.

Rhowl - I think you should read up on how climate models actually work, and particularly make sure you understand the difference between weather forecast models and climate models.

"When they ran the climate models to test against the Pinatubo volcano, I guarantee you they massaged the model for quite a few iterations to achieve this result."

I am at a loss to understand how you can conclude that. When Pinatubo erupted, the model prediction was made at the time (published as Hansen et al 1992). The evaluation of the model prediction was done with Hansen et al 1996 and Soden 2002. I also notice that the incredibly primitive Manabe model used by Broecker 1975 is doing pretty well.

I am not quite sure what you understand the predictions of a climate model to mean. As the modellers would happily tell you, models have no skill at sub-decadal or even decadal prediction of surface temperature. That is basically weather, not climate. In the short term, large-scale, unpredictable internal variability like ENSO dominates. They do have skill at climate prediction - i.e. 30-year trends. That said, climate sensitivity is difficult to pin down. It is most likely in the range 2-3.5 °C per doubling of CO2. We would desperately like it to be pinned down better than that, but perhaps you should look at the recent Ringberg workshop presentations to understand why this is so difficult. Nonetheless, the 2-3.5 °C range is certainly good enough to drive policy. Whatever the shortcomings of climate models, their skill is far better than reading chicken entrails etc.

Rhoowl @815, you claim that with respect to temperature, the AR5 models show an error spread of plus or minus 75%. That is completely false. The models in AR5 show a range of predicted absolute global mean surface temperature (1961-1990) from 285.7 to 288.4 K, with a mean of 286.9 K and a standard deviation of 0.6 K. The observed value is given as 287.1 K, for an error range (minimum to maximum) of -0.49 to +0.45%. You think there is a larger percentage error range, but that is only because values are stated as anomalies from the 1961-1990 mean, i.e., they eliminate most of the denominator for convenience. That is appropriate for their studies, but if you are going to run the argument that the models are so inaccurate as to be useless, you had better compare the models' actual ability to reproduce the Earth's climate, not merely the exact measure of their reproduction of minor divergences in that climate.
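The percentages quoted above can be checked directly. A minimal sketch, using only the three temperatures given in the comment (the function name `percent_error` is just a label for this illustration):

```python
# Values quoted in the comment above (absolute GMST, 1961-1990, in kelvin).
MODEL_MIN_K = 285.7
MODEL_MAX_K = 288.4
OBSERVED_K = 287.1


def percent_error(modelled_k: float, observed_k: float) -> float:
    """Relative error of a modelled absolute temperature, in percent."""
    return 100.0 * (modelled_k - observed_k) / observed_k


low = percent_error(MODEL_MIN_K, OBSERVED_K)
high = percent_error(MODEL_MAX_K, OBSERVED_K)
print(f"{low:+.2f}% to {high:+.2f}%")  # prints "-0.49% to +0.45%"
```

The same differences of about 1.4 K and 1.3 K look far larger in percentage terms when divided by an anomaly of a degree or two instead of by the absolute temperature, which is the denominator effect described above.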

http://www.ipcc.ch/publications_and_data/ar4/wg1/en/spmsspm-projections-of.html

This gives a predicted future temperature...and plus or minus therefrom...errors are in the range +/- 100% for constant, to somewhat less as you move down the chart....so 75% is a reasonable figure.

These estimates are based on their models.

scaddenp....I don't know where you see in my posts that I am comparing weather forecast models to climate models....although those two models are very similar...

As far as Pinatubo...

http://earthobservatory.nasa.gov/Features/Volcano/

This explains how they used this eruption to model aerosols and test it against real-world effects..it also went on to explain they ran several simulations..this is actually critical in determining the accuracy of the model...without real-world tests the models mean nothing..but you need many tests to ensure your model is working properly. Trouble is, the events that they can test are few and far between...it will take a very long time before they can refine the models to get accurate results..

Not sure what your other comments are about...never mentioned any of those either.

[JH] Link activated.

Rhoowl @820, so you are going to stick dogmatically to the belief that the possible range of Earth temperatures is restricted to 287 K plus or minus a couple of degrees, no matter how conditions at the surface, or astronomically, vary? Because the only way a comparison for accuracy matters, if you are determining whether the models are any good, is by comparing their predictions relative to the possible range. They are skillful if they narrow that range, and not otherwise. Given that the range of possible planetary surface temperatures is known from observation to be from around 2 to around 600 K, that shows a remarkable level of dogmatism on your part.

Rhoowl - you are comparing your hydrology models to climate models. Understanding the differences between weather and climate (initial value versus boundary value) would give you some insight into the difference. While using the Pinatubo data to improve aerosols is certainly a way to test and improve models, I am noting that modellers published an essentially correct prediction of what would happen with Pinatubo in advance.

The other comments were explaining the known issues with limits on temperature prediction (the problem of climate sensitivity), which explain some of the spread in model prediction. You claim models can't be trusted, but I am trying to point out that

a/ they can be trusted to predict various climate variables within useful limits. You can get your "1000s of tests" by looking at model versus observation on a whopping range of climate variables over various time intervals. AR4 has a lengthy chapter on model validation.

b/ they are the best tool we have to estimate future climate change. You don't need a model to tell you that adding extra radiation to a surface is going to warm it up, but you do need one to tell you by how much.

Rhoowl

"weather forecast models to climate models....although those two models are very similar...".

That is the nub of it. They aren't.

Here is a simple analogy.

I have a swimming pool in my backyard. Summer is approaching and the water level is low. So I throw the garden hose in and turn on the tap. Big pool, small hose - it will take quite a while to fill. While it is filling, my family are using the pool, getting in and out, adjusting the water level due to the displacement of their bodies. Lots of splashing, waves, the dog jumping in after a frisbee.

I could build two models. One model attempts to predict the detailed water level across the pool, all those waves and stuff. Pretty complex and it can only be done for short timescales. The other model attempts to predict the slower variation of the average height of the water. Much simpler; pool, hose, tap, flow rate, that's about it. Can't predict short term small scale variations but pretty good at predicting long term changes in averages.

The first model is an initial value problem. It takes the current state of the surface of the pool, in all its messy complexity, and attempts to project it forward for seconds, minutes at best, because over that timescale the change in total volume of water in the pool is a minor component.

The second model is a boundary value problem. It is looking at those factors that determine the boundaries within which the smaller scale phenomena play out. Essentially in this case, how much water is in the pool.

Although the two models are based on similar basic principles, the goals and methods of the models are very different. At its simplest, weather models are attempting to model the detailed distribution of energy within the climate system to determine local effects, but essentially assuming that the total pool of energy within the entire system is largely constant. Essentially, they model intra-system energy flows.

Climate models are firstly modelling how the total pool of energy for the entire system changes in size over time. Then secondly they attempt broad estimations of general intra-system distributions of energy. But they can't attempt detailed estimations of intra-system distributions, only broad characteristics.

In a simple sense, weather models model the waves, climate models model the water volume. Weather models ignore the change in water volume, climate models ignore the details of each individual wave.
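The "water volume" half of the analogy fits in a few lines. This is only a sketch of the analogy, not of any climate model: the pool dimensions, hose flow rate and splash size are hypothetical numbers, and the splashing is represented by simple random noise.

```python
import random
import statistics

# Hypothetical pool, hose and splash values, chosen only for illustration.
POOL_AREA_M2 = 32.0
HOSE_FLOW_M3_PER_H = 0.8
INITIAL_LEVEL_M = 1.0
SPLASH_AMPLITUDE_M = 0.05


def mean_level(hours: float) -> float:
    """'Climate' view: the average level follows from the water budget alone."""
    return INITIAL_LEVEL_M + HOSE_FLOW_M3_PER_H * hours / POOL_AREA_M2


def gauge_reading(hours: float, rng: random.Random) -> float:
    """'Weather' view: one reading at one spot, mean level plus an unpredictable splash."""
    splash = rng.uniform(-SPLASH_AMPLITUDE_M, SPLASH_AMPLITUDE_M)
    return mean_level(hours) + splash


rng = random.Random(0)  # fixed seed so the sketch is repeatable
readings = [gauge_reading(10.0, rng) for _ in range(1000)]
average_reading = statistics.mean(readings)

print(f"budget-based mean level after 10 h: {mean_level(10.0):.3f} m")
print(f"average of 1000 splashy readings:   {average_reading:.3f} m")
```

No single reading can be predicted, because each one depends on a splash, but the average of many readings sits close to the level that the hose and pool size alone determine. That average is what the second kind of model is after.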

Rhoowl

"Hydrology is basically a micro climate Model....you go through very similar steps to model the system....you have to use storm data to calculate rainfall"

Nope. It's a micro weather model!

Your hydro model is trying to model how a system responds to a set of external inputs. The best analogy with climate models would be if you were trying to predict what the storm data will be. Modelling the micro detail of behaviour from given inputs is different from modelling what the inputs will be AND broad general behaviour in response to that.