Recent Comments


Comments 1401 to 1450:

-

Bob Loblaw at 04:45 AM on 21 June 2024 | Climate - the Movie: a hot mess of (c)old myths!

janolsen @ 114:

(yawn)

Yep. The same collection of "usual suspects". Soon. Tol. Lomborg. Koonin. Those names pop up in the first few pages.

Didn't read any further.

Let us know if you find an argument that has not previously been debunked. Don't forget to follow the links in the table in the OP if the "argument" you find convincing relates to one of those topics. You'll be expected to provide an argument as to why what you are pointing to represents something new - and not just a re-assertion of the myth listed on the relevant SkS page.

-

John Mason at 03:28 AM on 21 June 2024 | Climate - the Movie: a hot mess of (c)old myths!

Re: janolsen #114:

I said in the original post:

"This same old carnival troupe is wheeled out time and again to spread doubt about climate science. Why? Because that's what they are good at doing, with decades of combined experience under their belts."

Your link makes the same point but in greater detail.

-

janolsen at 01:53 AM on 21 June 2024 | Climate - the Movie: a hot mess of (c)old myths!

One of the themes in the movie seems to be that CO2 levels and temperatures were higher before humans were around, i.e. when other animals roamed the Earth... They also seem to claim that temperatures have risen shortly before CO2 levels rise, rather than as a direct result of CO2 levels (though CO2 undoubtedly has a greenhouse effect).

Here is the "opposing side's" documentation for the statements made in the movie:

https://andymaypetrophysicist.com/2024/03/26/annotated-bibliography-for-climate-the-movie/

-

nigelj at 06:48 AM on 19 June 2024 | At a glance - Was 1934 the hottest year on record?

The following study, published on ResearchGate, explores the reasons why the 1930s were so hot and dry in the USA:

"Extraordinary heat during the 1930s US Dust Bowl and associated large-scale conditions. Unusually hot summer conditions occurred during the 1930s over the central United States and undoubtedly contributed to the severity of the Dust Bowl drought. We investigate local and large-scale conditions in association with the extraordinary heat and drought events, making use of novel datasets of observed climate extremes and climate reanalysis covering the past century. We show that the unprecedented summer heat during the Dust Bowl years was likely exacerbated by land-surface feedbacks associated with springtime precipitation deficits. The reanalysis results indicate that these deficits were associated with the coincidence of anomalously warm North Atlantic and Northeast Pacific surface waters and a shift in atmospheric pressure patterns leading to reduced flow of moist air into the central US. Thus, the combination of springtime ocean temperatures and atmospheric flow anomalies, leading to reduced precipitation, also holds potential for enhanced predictability of summer heat events. The results suggest that hot drought, more severe than experienced during the most recent 2011 and 2012 heat waves, is to be expected when ocean temperature anomalies like those observed in the 1930s occur in a world that has seen significant mean warming." (emphasis mine)

-

Bob Loblaw at 08:19 AM on 18 June 2024 | Of red flags and warning signs in comments on social media

OPOF:

Responding to one other point you make.

One individual may not hold those contradictory opinions (although many do), but, as you say, when contrarians collect their arguments from others and present them at their "conferences", publish them in their reports, or testify to Congress, they often don't care that there are contradictions. All they need to do is convince The Powers That Be that there must be a pony somewhere in that pile of [self-moderation]...

-

Bob Loblaw at 08:13 AM on 18 June 2024 | Of red flags and warning signs in comments on social media

OPOF:

Your closing sentence - "...when evidence and reasoning do not limit the realm of what is 'believable'." - sums up the non-scientific aspect of the majority of the contrarian arguments.

Scientists disagree about many things. The scientific process for dealing with that is:

- Acknowledge that the difference exists.

- Discuss the differences (conversation, via journal papers, etc.) and come up with a clear understanding of the nature and root cause of the differences.

- This usually includes an effort to recognize where there are not differences - finding common ground that is generally accepted as being well-supported by evidence.

- Determine what sort of evidence is lacking to determine which of the competing explanations is more likely to be correct.

- The differences of opinion are usually in the form of interpretation of the evidence, or speculation. Things that are not directly measured.

- Determine a method (experimental, observational) where the needed evidence can be obtained.

- This needs to be evidence where one hypothesis says one thing will happen, while the other says something different will happen. You know: the differences.

- If the competing hypotheses all predict exactly the same outcomes for all situations, then the hypotheses are not different. They may use different words, but that's not enough to make them "different".

- Collect the needed evidence.

- Be prepared to drop your belief if the evidence goes against it.

Principles such as parsimony or Occam's razor apply when competing explanations can explain all the current evidence equally well. The principle says that it is more reasonable to use the explanation with fewer assumptions. It's a preference, not a Golden Rule. And when additional evidence comes along that can distinguish among the various explanations, the evidence will win the argument.

-

One Planet Only Forever at 07:36 AM on 18 June 2024 | Of red flags and warning signs in comments on social media

Bob Loblaw,

Thank you for the additional information.

The BBC article I referred to in my comment @12 also includes the following:

"The Intergovernmental Panel on Climate Change (IPCC) says Africa is “one of the lowest contributors to greenhouse gas emissions causing climate change”.

However, it is also “one of the most vulnerable continents” to climate change and its effects - including more intense and frequent heatwaves, prolonged droughts, and devastating floods.

Despite all this, Mr Machogu continues to insist “there is no climate crisis”."

To be fair Mr Machogu does not appear to also claim that 'climate change impact reduction actions redistribute wealth from rich to poor'. He appears to only claim that the actions keep Africans poor.

The problem is the way that contradictory beliefs seem to get gathered up into a collective of harmful misunderstandings. The contradicting claims about rich and poor both exist unchallenged in the denial gathering.

Political players with a penchant for benefiting from understandably harmful misunderstandings do not appear to care about contradictions between the misunderstandings inside their big tent, or under their large umbrella, of harmful misunderstandings. Winning any way that can be gotten away with appears to be 'Their Primary Interest'. And an essentially infinite number of 'contradictions' can be produced when evidence and reasoning do not limit the realm of what is 'believable'.

-

Bob Loblaw at 06:08 AM on 18 June 2024 | Of red flags and warning signs in comments on social media

OPOF:

Yes, it's amazing how so many of these zombie myths keep coming back in slightly altered form.

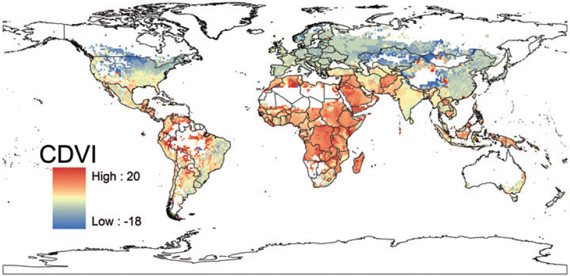

The OP does include a link to SkS's list of common myths (https://sks.to/arguments), and lo and behold we find "CO2 limits will hurt the poor" at #67. In that rebuttal, there is a map of the Climate Demography Vulnerability Index (CDVI) that shows much of Africa as being highly vulnerable. (Go to the link above to see details on the source.)

...but you also raise another important "red flag" not specifically mentioned in the OP here: logical inconsistencies in the arguments being made. It takes a significant level of psychological compartmentalization to be able to hold strongly contradicting beliefs at the same time. As you state, how can action make poor countries richer and poorer at the same time?

SkS used to have an online list of contradictory "contrarian" viewpoints, but it became too difficult for our limited number of volunteers to keep up-to-date. Too many contradictions, I suppose.

-

One Planet Only Forever at 02:27 AM on 18 June 2024 | Of red flags and warning signs in comments on social media

I just read the following BBC article that presents a ‘red flag’ twist on the Agenda 21 point raised by nigelj @1 and expanded on by Nick Palmer @2.

BBC News: How a Kenyan farmer became a champion of climate change denial

I have seen many comments dismissing the undeniably required rapid significant correction of developed activity and related perceptions of status by claiming that ‘Agenda 21 types of actions’ are ‘unjustified wealth redistribution from rich to poor people’ (unjustly based on the unjustified beliefs that people perceived to be richer deserve to be richer and people who are poorer deserve to be poorer).

This ‘red flag twist’ is basically that actions to correct the developed harmful ways of living ‘harm the poor’. It is often simplistically claimed that ‘putting a price on carbon’ should not be done because it hurts the poor. Of course, parallel actions to help the poor, like rebating collected carbon fees with more going to poorer people than to richer people, are required to limit the harm done to the less fortunate by the undeniably helpful action of making it more expensive to be harmful.

The following quote from the BBC reporting is the twist made by a ‘social media popular African farmer (Mr Machogu)’:

“On social media, he has repeatedly posted unfounded claims that man-made climate change is not only a “scam” or a “hoax”, but also a ploy by Western nations to “keep Africa poor”.”

So the climate change actions can be unjustifiably accused of ‘keeping the poor poor’ as well as ‘redistributing from the rich to the poor’.

Of course, anyone who cares to learn about important matters like Agenda 21 will understand the injustice of demanding restrictions on harmful actions by ‘poorer farmers’ without ‘wealth redistribution from those who are richer’ that effectively improves the lives of poorer farmers, especially the poorest, in parallel with richer people dramatically reducing how harmful their developed ways of living are (even if they believe that such harm reduction by them combined with having to help the least fortunate makes them poorer relative to the poorest).

The BBC article also includes the following statement directly related to a ‘red flag’ already identified in the OP:

““Climate change is mostly natural. A warmer climate is good for life,” Mr Machogu wrongly claimed in a tweet posted in February, along with the hashtag #ClimateScam (which he has used hundreds of times).”

-

BaerbelW at 19:36 PM on 16 June 2024 | It warmed before 1940 when CO2 was low

Please note: the basic version of this rebuttal was updated on June 16, 2024 and now includes an "at a glance" section at the top. To learn more about these updates and how you can help with evaluating their effectiveness, please check out the accompanying blog post @ https://sks.to/at-a-glance

-

nigelj at 08:54 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

Interesting that TWFA cites Paul Ehrlich as an example of a clever contrarian. Perhaps TWFA doesn't realise that Ehrlich's predictions of mass famine by the 1970s due to overpopulation have clearly been proven spectacularly wrong. And it is unlikely there would be mass famine in the future, given fertility rates have fallen so much (fortunately).

Sure, sometimes contrarians are proven right, but putting your faith in them is very risky, especially with the climate contrarians, who have been debunked over and over again.

-

One Planet Only Forever at 07:14 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

nigelj @1 is correct that the mention of 'Agenda 21' should be a red flag that the comment is presenting a misunderstanding.

The following is a link directly to UN Agenda 21. It was published in 1992.

Learning about the reality (the truth) of Agenda 21 is contrary to the developed interests of many people. The developed interests of those people are understandably a serious problem negatively impacting humanity and democracy now and into the future.

-

One Planet Only Forever at 06:54 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

In addition to the justified criticisms of TWFA’s comment @4, in particular Charlie-Brown’s @8 concern regarding the significant number of people who have allowed themselves to be uninformed or misinformed, NPR recently reported “A major disinformation research team's future is uncertain after political attacks”.

The Republican Party radicalized by Fossil Fuel Interests is not the only party interested in misleading people and keeping the misled from becoming better informed. But they certainly are a major part of the problem. And, like other ‘successful’ misleaders, they mislead on many matters, not just climate science.

I recently made a related comment (see here on the SkS shared item Fossil fuels are shredding our democracy). Misleading political and economic game players are a massive threat to the future of humanity and efforts to improve democracy.

People in competition for perceptions of status and hoping to maximize ‘their freedom to enjoy believing and doing whatever they please’ can easily be tempted to misunderstand how harmful and unhelpful their misunderstanding and related unacceptable actions actually are ... including actions like absurdly believing that ‘understanding that burning fossil fuels produces significant negative climate change impacts’ can be simplistically dismissed by claiming it is the belief that ‘humans can control the climate’.

-

Charlie_Brown at 02:27 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

TWFA @ 4

I worry about the 42% of adult Americans who either do not believe or do not know that global warming is caused by human activities (ref: Yale Climate Opinion Maps 2023). I believe that they have been influenced by persistent disinformation that continues to be initiated by the 1-3% of scientists who undermine the science. As voters, they influence political leaders and policy. Many policy makers have not been convinced that action is needed to reduce greenhouse gas emissions. See, for example, Mike Johnson Town Hall.

That global warming is caused by greenhouse gas emissions is solid, fundamental science, including: 1) conservation of energy (the 1st law of thermodynamics), 2) basic atmospheric physics, and 3) laws of radiant energy transfer (Stefan-Boltzmann Law, Planck Distribution Law, Kirchhoff’s Law, and Beer-Lambert Law). Global warming theory is based on these fundamentals and supported by a massive amount of evidence and cross-checks. All of the disinformation that I have seen is: 1) not supported by evidence, 2) a misrepresentation of global warming theory, and/or 3) inconsistent with the laws of science listed above.

-

michael sweet at 01:32 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

TWFA:

Have you noticed that there is a record heat dome over the USA today? Or that south Florida had record floods yesterday? Why would we wait 20 years to decide to take action when the climate has already dramatically changed exactly as the 97% of scientists predicted?

The longer we wait to take action the worse the damage will be.

Renewable energy is cheaper than fossil energy today and will save trillions of dollars.

-

Bob Loblaw at 01:31 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

TWFA @ 4:

Oh, my. What red flags does your comment trigger?

- Questions the 97% consensus. Suggests waiting a couple of decades to see what happens. Claims action will take "hundreds of trillions". As the OP says, "wording typical for the 'discourses of climate delay'".

- Uses phrases "conclusions are correct and infallible" and "your perfection of science and purity of motive" [emphasis added], which attempts to make the consensus look like an absolute claim (even though the OP says "the facts are at least more than settled enough to base our decisions on" [again, emphasis added]).

- Says "...convinced the right people..." and "...your theory of climate control..." [emphasis added], showing conspiratorial thinking (in group, out group).

- Says "...that anything said in question or to the contrary is "disinformation"...", in spite of the fact that the OP uses phrases such as "...in most likelihood be wrong or misleading...", "...might also be an indication...", "...It doesn't necessarily mean that everything written is wrong, but it nonetheless serves as a warning flag...", and "...might need to be read with a suitably large grain of salt."

All in one short paragraph. An impressive feat, to pack so many warning signals into such a short comment. Were you intending to provide us with an example of the type of comment that is probably safe to ignore? Or maybe you're just doing a Poe? Or maybe you are just so self-unaware that you don't see this in yourself?

-

John Mason at 00:46 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

@TWFA #4:

Just WHO is, "going to make everybody else spend hundreds of trillions testing your theory of climate control"???

-

TWFA at 00:18 AM on 16 June 2024 | Of red flags and warning signs in comments on social media

I don't know why you worry about all this chatter if you know your science and conclusions are correct and infallible, that anything said in question or to the contrary is "disinformation". You've already convinced the right people of your perfection of science and purity of motive and they are going to make everybody else spend hundreds of trillions testing your theory of climate control, so stop worrying about it, in a couple decades we'll see if the 97% were right or some Copernicus or Ehrlich among the 3% hawking "disinformation" was instead.

-

Nick Palmer at 20:54 PM on 15 June 2024 | Of red flags and warning signs in comments on social media

Just to clarify, both of those quotes have been 'quote mined' and cherry-picked, by manipulative people, from much longer texts which, if read in their entirety, put a very different meaning on the text.

-

Nick Palmer at 20:47 PM on 15 June 2024 | Of red flags and warning signs in comments on social media

I find that 'it's all a plan to enable the evil Agenda 21' has mutated in the last couple of years into 'it's all a plan to enable the evil WEF (World Economic Forum) plan for totalitarian global control'. This is usually expressed as a neo-McCarthyist fear that Johnny Foreigner is planning to take away Americans' freedoms (and guns...). They'll often refer to 'super-wealthy globalists' like George Soros and Bill Gates, cite Mencken's 'hobgoblins' quote, and go on to quote the 'Club of Rome':

"In searching for a new enemy to unite us, we came up with the idea that pollution, the threat of global warming, water shortages, famine and the like would fit the bill. In their totality and in their interactions these phenomena do constitute a common threat with demands the solidarity of all peoples. But in designating them as the enemy, we fall into the trap about which we have already warned namely mistaking systems for causes. All these dangers are caused by human intervention and it is only through changed attitudes and behaviour that they can be overcome. The real enemy, then, is humanity itself."

"The First Global Revolution", A Report by the Council of the Club of Rome, by Alexander King and Bertrand Schneider, 1991

Another favourite quote is by Ottmar Edenhofer: "But one must say clearly that we redistribute de facto the world's wealth by climate policy. Obviously, the owners of coal and oil will not be enthusiastic about this. One has to free oneself from the illusion that international climate policy is environmental policy. This has almost nothing to do with environmental policy anymore."

Ottmar Edenhofer

'UN IPCC official'

-

nigelj at 08:36 AM on 15 June 2024 | Of red flags and warning signs in comments on social media

This is an example of warning sign I've seen a few times: "Climate science and mitigation is just part of Agenda 21."

This is allegedly a plan for the United Nations to exert totalitarian control over the world and implement evil policies. Some states in the USA (Republican states) have banned its use.

In fact, Agenda 21 is a set of voluntary, practical, sensible, generally publicly accepted guidelines to help solve a range of environmental, economic and social problems and to promote sustainable development, ideally to be worked out and implemented locally rather than by central government. This link gives a summary:

-

One Planet Only Forever at 11:37 AM on 14 June 2024 | Fossil fuels are shredding our democracy

Thank you for continuing to point to and share excellent examples of the increased awareness and improved understanding that needs to become the norm for the vast majority of global humanity in order for humanity to have a decent improving future.

A focus on pursuing individual interests can lead to conflicting interests escalating into ‘uncivil conflicts’. A mechanism to limit the harm of such damaging developments, perhaps the most effective mechanism, is for all actions to be governed by the pursuit of learning to be less harmful and more helpful to Others. There are many books and research reports supporting this understanding regarding climate science and many other important matters that ‘people can and should learn about’.

Specifically regarding democracy, “How Democracies Die”, by Steven Levitsky and Daniel Ziblatt, is a robust evidence-based presentation focused on ‘norms that are essential to democracy’. The norms include:

- accepting that all competitors for leadership are legitimate (which is only possible if all competitors have a passion for learning to be less harmful and more helpful to Others, no misleaders allowed)

- limiting the use of systemic powers like ‘legal or government’ actions (such powers only used to limit the harm done by people, especially misleaders, who have interests that conflict with learning to be less harmful and more helpful to Others).

If more powerful people respected those norms then less harm would have happened to date, the current day would be better, and it would be less challenging to end the harms being done to the future of humanity and develop lasting improvements like truly better energy production and less unnecessary energy demand.

I have an improved understanding to offer regarding the opening statement. Instead of “...waiting for the day when renewable energy would become cheaper than fossil fuels”, people concerned about the climate change impacts of developed human activity should have been demanding that the negative impacts of fossil fuels and any of the alternatives be learned about and be neutralized (and reversed) regardless of the cost. A fundamental requirement is ensuring that people’s ‘free choices’ are harmless to Others. And more costly energy would bring about a very important correction – reduction of unnecessary energy use.

The cheapest renewable energy will likely be the most harmful and least sustainable option. That is what the competitive marketplace (economic and political) can be expected to produce and promote if the objective is simply ‘Renewable energy that is cheaper than fossil fuels’. The marketplace can also be expected to produce efforts that ‘increase the harm done by fossil fuels to maximize the benefits obtained by people who want to benefit from fossil fuel use’. Also, even the best renewable energy production will have some negative impacts.

The governing norm required to sustain any system, including a democracy or business organization, can be understood to be: Passionate pursuit of learning to be less harmful and more helpful to Others. Anyone with interests that conflict with that governing norm is likely to harm the future of the system, including democracy or other systems of ‘individual freedoms’.

Without ‘respectful tolerance of justifiable differences and leadership passion for correction of misunderstandings’ competition for perceptions of status relative to Others will likely produce conflicting interests with damaging results. Poorly governed or misled competition will degenerate into harmful escalating efforts to obtain and maintain unjustified perceptions of status.

Competition for perceptions of status can produce very beneficial results. But it can undeniably also produce very damaging results, including the shredding of democracy.

-

Bob Loblaw at 23:26 PM on 13 June 2024 | On Hens, Eggs, Temperature and CO2

Since we are using cartoons to represent Koutsoyiannis et al's thought process, today's XKCD cartoon seems appropriate. (As usual with XKCD, if you follow the link to the original web page, you can hover over the cartoon to get additional insight.)

-

Bob Loblaw at 08:42 AM on 12 June 2024 | CO2 effect is saturated

Jim: I have not had time to read that paper in detail yet, but I can provide a bit of background.

The paper mentions:

AIRS is a grating spectrometer that measures spectral radiances in 2378 channels across the 2,169–2,673 cm−1, 1,217–1,613 cm−1, and 649–1,136 cm−1 bands and has calibration and stability performance since 2002.

In order to get spectral data from a light source, the light source needs to be split into different wavelengths.

- One way to do this is with optical filters - but you need one filter for each wavelength, so it is hard to get a lot of wavelengths this way.

- The second method is to spread the light out by wavelength, like a prism does. You don't use prisms in such instruments though - you use either a narrow slit, or a diffraction grating. AIRS seems to use the latter.

- Once the light is spread out, you need to measure it. One way is to have a single sensor move along the spectrum, but this is slow and mechanically complex (but highly accurate). The modern way is to put a diode array into the system, and each diode in the array falls at a different wavelength. That's what AIRS seems to use.

The raw diode array output needs to be translated to spectral irradiance, and the spectral resolution is limited by the number of individual diodes in the array.

The Raghuraman et al paper then increases the resolution using radiance models as an interpolation/enhancement method, and uses CERES broadband (not spectral) data to help limit the model results.

In short, it's not a particularly simple process. Not surprising that it has taken time for someone to do it.
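The band limits quoted from the paper are given in wavenumbers (cm⁻¹). As a rough orientation aid, here is a small Python sketch that converts them to wavelengths with λ[µm] = 10⁴ / ν[cm⁻¹] and computes an average channel spacing. That last step assumes, purely hypothetically, that the 2378 channels are spread evenly across the three bands; real AIRS channels are not evenly spaced, so treat it only as an order-of-magnitude figure.

```python
# Band limits quoted from the paper, in wavenumbers (cm^-1).
BANDS_CM1 = [(2169, 2673), (1217, 1613), (649, 1136)]
N_CHANNELS = 2378


def wavenumber_to_micron(nu_cm1: float) -> float:
    """Convert a wavenumber in cm^-1 to a wavelength in micrometres (1 cm = 1e4 um)."""
    return 1e4 / nu_cm1


# Higher wavenumber means shorter wavelength, so the band edges swap order.
band_microns = [
    (wavenumber_to_micron(hi), wavenumber_to_micron(lo)) for lo, hi in BANDS_CM1
]
for (lo, hi), (short_um, long_um) in zip(BANDS_CM1, band_microns):
    print(f"{lo}-{hi} cm^-1  ->  {short_um:.2f}-{long_um:.2f} um")

# Crude average spacing, assuming (hypothetically) evenly spread channels.
total_width_cm1 = sum(hi - lo for lo, hi in BANDS_CM1)
mean_spacing_cm1 = total_width_cm1 / N_CHANNELS
print(f"total coverage {total_width_cm1} cm^-1, ~{mean_spacing_cm1:.2f} cm^-1 per channel")
```

The 649 cm⁻¹ edge works out to about 15.4 µm, so the longwave band spans the main CO2 absorption band centred near 15 µm (about 667 cm⁻¹).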

-

Jim Hunt at 19:45 PM on 11 June 2024 | CO2 effect is saturated

Thanks for the heads up re Raghuraman et al. 2023 in the new "basic" section.

Having looked at CERES data in the past I now find myself wondering why (apparently) nobody has previously thought of using AIRS data to "provide measurements of Earth's emitted thermal heat at fine-scale wavelengths".

Am I missing something? If so, what?!

-

Bob Loblaw at 22:58 PM on 10 June 2024 | Fact Brief - Is the ocean acidifying?

Eddie:

Also keep in mind that the logarithmic/exponential nature of pH values means that any decrease of 0.1 in pH units means a 26% increase in acidity from the previous value - 8.2 to 8.1, 9.6 to 9.5, 10.9 to 10.8, etc. The ratio between the two concentrations is always $10^{0.1} \approx 1.26$.
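A minimal Python check of that point: because [H⁺] = 10^(−pH), the concentration ratio for any 0.1 drop in pH is 10^0.1, whatever the starting value. The function name is just an illustrative choice.

```python
def acidity_ratio(ph_before: float, ph_after: float) -> float:
    """Ratio of hydrogen-ion concentration after vs. before, using [H+] = 10**(-pH)."""
    return 10 ** (-ph_after) / 10 ** (-ph_before)


pairs = [(8.2, 8.1), (9.6, 9.5), (10.9, 10.8)]
for before, after in pairs:
    # Every 0.1 drop gives the same ratio, 10**0.1 (about 1.259).
    print(f"pH {before} -> {after}: [H+] ratio = {acidity_ratio(before, after):.3f}")
```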

-

EddieEvans at 05:54 AM on 10 June 2024 | Fact Brief - Is the ocean acidifying?

Both my wife and I thank you.

-

Bob Loblaw at 05:30 AM on 10 June 2024 | Fact Brief - Is the ocean acidifying?

Eddie:

Remember that pH is a logarithmic scale. This is explained in the links at the bottom of the post.

To get the actual concentration of H+ ions, you calculate $10^{-\mathrm{pH}}$.

- $10^{-8.1} = 7.943 \times 10^{-9}$

- $10^{-8.2} = 6.310 \times 10^{-9}$

The ratio between those two numbers is 1.26. So from that calculation, pH 8.1 has a 26% higher H+ ion concentration than pH 8.2.

If you want to calculate the other way, to see what pH corresponds to an H+ concentration 30% higher than at pH 8.2, we do $1.3 \times 6.31 \times 10^{-9} = 8.2 \times 10^{-9}$, and $-\log_{10}(8.2 \times 10^{-9}) = 8.086$.

In round numbers, pH 8.1 is about 30% more acidic than pH 8.2. At a guess, the source of the original 30% figure is either rounding, or has slightly more precise measurements of the change in pH.
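Both directions of that calculation can be sketched in a few lines of Python. The helper names are illustrative; the only physics used is the definition [H⁺] = 10^(−pH) and its inverse pH = −log10([H⁺]).

```python
import math


def h_ion_concentration(ph: float) -> float:
    """Hydrogen-ion concentration (mol/L) from pH: [H+] = 10**(-pH)."""
    return 10 ** (-ph)


def ph_from_concentration(h: float) -> float:
    """Inverse of the above: pH = -log10([H+])."""
    return -math.log10(h)


# Forward: how much does [H+] rise going from pH 8.2 to 8.1?
increase_pct = (h_ion_concentration(8.1) / h_ion_concentration(8.2) - 1) * 100
print(f"pH 8.2 -> 8.1: {increase_pct:.1f}% more H+")  # 25.9% more H+

# Inverse: which pH has 30% more H+ than pH 8.2?
ph_plus_30 = ph_from_concentration(1.3 * h_ion_concentration(8.2))
print(f"30% more H+ than pH 8.2 -> pH {ph_plus_30:.3f}")  # pH 8.086
```

The gap between 26% and 30% is a pH difference of roughly 0.114 versus 0.1, which is why rounding of the pH values matters.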

-

EddieEvans at 03:56 AM on 10 June 2024 | Fact Brief - Is the ocean acidifying?

"Since the Industrial Revolution, ocean pH has declined from 8.2 to 8.1 — a 30% increase in acidity."

I'm not quite clear on the "30% increase in acidity." I understand how acidity increases as pH drops. I don't know what to do with the numbers 8.2 to 8.1 in the context of a 0.1 change creating a 30% acidity increase.

Where do I go astray?

Thanks to anyone who cares to help me out on this.

-

Bob Loblaw at 23:05 PM on 9 June 2024 | 1934 - hottest year on record

One aspect of hot records versus cold records is that on a warming planet we expect to see more high-temperature records set than low-temperature ones.

Anecdotal information for sure, but I saw recently that Las Vegas has not set a daily cold temperature record for 25 years. It sure has set a lot of record daily highs since then.

Even Fox News has reported on this (first Google hit).

https://www.foxweather.com/weather-news/25-years-las-vegas-low-temperature-record
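To illustrate the expectation behind that first sentence, here is a toy Python simulation on synthetic data only (not Las Vegas or any real station). Each yearly value is assumed to be a linear trend plus independent Gaussian noise, and we count how often a new record high or record low is set. Without a trend, both counts average the harmonic number 1 + 1/2 + ... + 1/n; with a warming trend, record highs pull ahead. The trend size, the noise model and the function names are all assumptions made for this sketch; real temperature series are autocorrelated and messier.

```python
import random


def count_records(series):
    """Count record highs and record lows; the first value counts as both."""
    highs = lows = 1
    highest = lowest = series[0]
    for x in series[1:]:
        if x > highest:
            highs, highest = highs + 1, x
        if x < lowest:
            lows, lowest = lows + 1, x
    return highs, lows


def mean_record_counts(n_years, trend, n_sims=1000, seed=1):
    """Average record counts over synthetic series: trend * year + Gaussian noise (sd 1)."""
    rng = random.Random(seed)
    total_highs = total_lows = 0
    for _ in range(n_sims):
        series = [trend * year + rng.gauss(0, 1) for year in range(n_years)]
        highs, lows = count_records(series)
        total_highs += highs
        total_lows += lows
    return total_highs / n_sims, total_lows / n_sims


# No trend: highs and lows both average about 1 + 1/2 + ... + 1/100.
print(mean_record_counts(100, trend=0.0))
# Hypothetical warming of 0.03 noise standard deviations per year: highs outnumber lows.
print(mean_record_counts(100, trend=0.03))
```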

-

BaerbelW at 19:53 PM on 9 June 2024 | 1934 - hottest year on record

Please note: the basic version of this rebuttal was updated on June 9, 2024 and now includes an "at a glance" section at the top. To learn more about these updates and how you can help with evaluating their effectiveness, please check out the accompanying blog post @ https://sks.to/at-a-glance

-

David Kirtley at 09:41 AM on 5 June 2024 | On Hens, Eggs, Temperature and CO2

Bob, @7:

Ha-ha, yes, good catch, re. Foghorn's quantum-state dilemma (as it were) and Koutsoyiannis et al's refusal to look at the bleedingly obvious climate science found in Foghorn's Feed Box.

-

Bob Loblaw at 05:37 AM on 4 June 2024 | On Hens, Eggs, Temperature and CO2

RE: my comment 10:

Now, if Koutsoyiannis et al want to claim that ENSO effects on temperature are irrelevant - i.e., that it does not matter if the temperature variation is due to ENSO, volcanoes, or fairy dust, etc. - then they can try to make that claim. But then they are breaking the chain of causality.

Causation has to start somewhere, and their "unidirectional, potentially causal link with T as the cause..." is basically ignoring any previous cause. By ignoring anything else, they fail to consider the possibility that both T and CO2 are responding to something else (hello, ENSO!). And, of course, they ignore the possibility of feedbacks, where two or more factors affect each other - i.e., the world is not unidirectional.

-

Bob Loblaw at 04:24 AM on 4 June 2024 | On Hens, Eggs, Temperature and CO2

As MAR points out in comment 9, Koutsoyiannis et al ignore ENSO as a possible factor in their analysis.

Is ENSO a factor in global temperatures? Yes. Tamino has had several blog posts on the matter, where he has covered the results of a paper he co-authored in 2011, with updates. The original paper (Foster and Rahmstorf, 2011) looked at the evolution of temperatures from 1979-2010, and determined that much of the short-term variation is explained by ENSO and volcanic activity. After accounting for ENSO and volcanic activity, a much clearer warming signal is evident.
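For readers curious what "accounting for ENSO" means in practice, the Python sketch below shows the basic idea on synthetic data: regress temperature on time plus an ENSO index by ordinary least squares, then subtract the fitted ENSO term. It is a simplification under stated assumptions, not Foster and Rahmstorf's or Tamino's code. The published analysis also included volcanic and solar terms and allowed for time lags, whereas here the ENSO index is a made-up AR(1) series and every coefficient is hypothetical.

```python
import random
import statistics


def solve_linear(a, b):
    """Solve a @ x = b for a small square system (Gaussian elimination, partial pivoting)."""
    n = len(b)
    m = [list(row) + [b[i]] for i, row in enumerate(a)]  # augmented matrix
    for col in range(n):
        pivot = max(range(col, n), key=lambda r: abs(m[r][col]))
        m[col], m[pivot] = m[pivot], m[col]
        for r in range(col + 1, n):
            factor = m[r][col] / m[col][col]
            for c in range(col, n + 1):
                m[r][c] -= factor * m[col][c]
    x = [0.0] * n
    for r in range(n - 1, -1, -1):
        tail = sum(m[r][c] * x[c] for c in range(r + 1, n))
        x[r] = (m[r][n] - tail) / m[r][r]
    return x


def ols(columns, y):
    """Least-squares coefficients via the normal equations (X^T X) beta = X^T y."""
    xtx = [[sum(p * q for p, q in zip(ci, cj)) for cj in columns] for ci in columns]
    xty = [sum(p * q for p, q in zip(ci, y)) for ci in columns]
    return solve_linear(xtx, xty)


rng = random.Random(42)
n = 480                                   # 40 years of hypothetical monthly data
years = [i / 12 for i in range(n)]
enso = [0.0]
for _ in range(n - 1):                    # AR(1) stand-in for an ENSO index
    enso.append(0.9 * enso[-1] + rng.gauss(0, 0.4))

TRUE_TREND, TRUE_ENSO = 0.018, 0.1        # deg C per year, deg C per index unit (hypothetical)
temp = [TRUE_TREND * t + TRUE_ENSO * e + rng.gauss(0, 0.08) for t, e in zip(years, enso)]

intercept, trend, enso_coef = ols([[1.0] * n, years, enso], temp)
adjusted = [T - enso_coef * e for T, e in zip(temp, enso)]  # ENSO-adjusted series

# Scatter about the fitted trend line, before and after removing the ENSO term.
line = [intercept + trend * t for t in years]
raw_spread = statistics.pstdev([T - L for T, L in zip(temp, line)])
adj_spread = statistics.pstdev([A - L for A, L in zip(adjusted, line)])
print(f"trend {trend:.4f} C/yr, ENSO coef {enso_coef:.3f}")
print(f"scatter about trend: raw {raw_spread:.3f} C, ENSO-adjusted {adj_spread:.3f} C")
```

The trend survives the adjustment; what shrinks is the short-term scatter around it, which is the "much clearer warming signal" described above.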

Tamino recently updated this analysis, with modified methodology and covering a longer time span (1950-2023). [Figures not reproduced here: the temperature series before and after the adjustment.]

Now remember: Koutsoyiannis et al used differenced/detrended data in their analysis, which means that they have removed any long-term trend and fitted their analysis to short-term variations. If you remove the short-term effects due to ENSO, Koutsoyiannis et al will have a temperature signal with a lot less variation. That means they have a lot less ΔT to "cause" CO2 changes. Their physics-free "causality" gets stretched even thinner (if this is possible with an analysis that is already broken).
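For anyone who wants to see the effect described above, here is a toy Python sketch with purely synthetic numbers. It is not Tamino's method (Foster and Rahmstorf fit lagged ENSO, volcanic and solar terms together); it just assumes a hypothetical ENSO-like AR(1) index that adds about 0.1 °C per unit to a slowly warming series, differences both series, and subtracts the fitted ENSO part. The names `enso`, `temp` and `residual` are hypothetical.

```python
import random

def diff(xs):
    """First difference (the Δ operator): x[t] - x[t-1]."""
    return [b - a for a, b in zip(xs, xs[1:])]

def mean(xs):
    return sum(xs) / len(xs)

def variance(xs):
    m = mean(xs)
    return sum((x - m) ** 2 for x in xs) / len(xs)

def ols_slope(x, y):
    """Least-squares slope of y regressed on x (intercept included)."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    return sxy / sxx

rng = random.Random(42)
n = 600  # hypothetical: 50 years of monthly values

# Hypothetical ENSO-like index: an AR(1) process (synthetic, not observed data)
enso = [0.0]
for _ in range(n - 1):
    enso.append(0.9 * enso[-1] + rng.gauss(0, 0.45))

# Synthetic temperature anomaly: slow trend + ENSO influence + small "weather" noise
temp = [0.0015 * t + 0.1 * e + rng.gauss(0, 0.02) for t, e in enumerate(enso)]

# Difference both series, then remove the fitted ENSO part from ΔT
d_temp, d_enso = diff(temp), diff(enso)
beta = ols_slope(d_enso, d_temp)
residual = [dt - beta * de for dt, de in zip(d_temp, d_enso)]

print(f"fitted ENSO coefficient: {beta:.3f}")
print(f"variance of ΔT with ENSO:    {variance(d_temp):.6f}")
print(f"variance of ΔT without ENSO: {variance(residual):.6f}")
```

In this toy, most of the variance in ΔT comes from the ENSO term, so once it is removed there is much less ΔT left to "cause" anything.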

-

Eclectic at 04:23 AM on 4 June 2024Is Nuclear Energy the Answer?

Michael Sweet @363 :

Thank you for the IEEFA report. Incidentally, I had just read a December 2023 interview of Mycle Schneider by Diaz-Maurin (editor of the Bulletin of the Atomic Scientists).

Too long to give a thorough summary, but in short: the Small Modular Reactor programs are "going nowhere fast". Costs are now worse than for conventional large fission reactors (which are also in deep trouble on cost and time). Schneider touched on SMR security problems as well.

-

MA Rodger at 01:41 AM on 4 June 2024On Hens, Eggs, Temperature and CO2

The most powerful message of the paper Koutsoyiannis et al (2023) 'On Hens, Eggs, Temperatures and CO2: Causal Links in Earth’s Atmosphere' is their "Graphical Abstract" which is reproduced in the OP above as Figure 1.

They are not the first to try and use these data to suggest increasing CO2 is not warming the planet. And likely there will be other fools who attempt the same in the future.

So what does their "Graphical Abstract" show?

The graphic below is Fig 2 of Humlum et al (2012), which insists this same data shows that CO2 lags temperature and not the other way round.

[Figure: Fig 2 of Humlum et al (2012)]

The data that is missing is the ENSO cycle which precedes both the T and the CO2 wobbles and thus drives global temperature wobbles and, by shifting rainfall patterns, drives CO2 wobbles. To suggest (as Koutsoyiannis et al do) that such "analyses suggests a unidirectional, potentially causal link with T as the cause and [CO2] as the effect" is simply childish nonsense.
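A toy Python sketch of the common-driver problem, using made-up lags: a hypothetical ENSO-like index drives temperature after 3 months and the CO2 growth rate after 6 months, and neither series affects the other. The lagged correlation still peaks with temperature "leading" CO2 growth by 3 months, which is exactly the pattern a purely statistical test would read as T causing CO2. All series, lags and names here are hypothetical.

```python
import math
import random

def mean(xs):
    return sum(xs) / len(xs)

def corr(x, y):
    """Pearson correlation coefficient of two equal-length series."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def lagged_corr(x, y, k):
    """Correlation of x[t] with y[t + k]; a positive k means x 'leads' y."""
    if k >= 0:
        return corr(x[:len(x) - k], y[k:])
    return corr(x[-k:], y[:len(y) + k])

rng = random.Random(1)
n = 1200  # hypothetical: 100 years of monthly values

# Hypothetical ENSO-like AR(1) index with 6 extra months at the start,
# so enso[t + 6] is the index value in month t.
enso = [0.0]
for _ in range(n + 6 - 1):
    enso.append(0.6 * enso[-1] + rng.gauss(0, 1))

# ENSO drives temperature 3 months later and the CO2 growth rate 6 months later.
# There is deliberately NO link between temperature and CO2 in this toy.
temp = [0.1 * enso[t + 3] + rng.gauss(0, 0.05) for t in range(n)]
co2_growth = [0.2 * enso[t] + rng.gauss(0, 0.1) for t in range(n)]

scores = {k: lagged_corr(temp, co2_growth, k) for k in range(-6, 13)}
best_lag = max(scores, key=scores.get)
print(f"temperature 'leads' CO2 growth by {best_lag} months (r = {scores[best_lag]:.2f})")
```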

-

Bob Loblaw at 00:42 AM on 4 June 2024On Hens, Eggs, Temperature and CO2

David @ 6:

Yes, the point you make is discussed in the PubPeer comments on the earlier paper: the CO2 and T variations over the glacial cycles imply that a huge temperature increase would be needed over the last century to cause the observed rise in CO2.

The earlier paper used the UAH temperature record that only covers very recent times (since 1979). The new paper also looks at temperature data starting in 1948 - but temperature data from re-analysis, not actual observations.

If their statistical technique is robust, then they should come up with the same result from the glacial/interglacial cycles of temperature and CO2...

...but their methodology is devoted to looking at the short-term variation, not the long-term trends. Our knowledge from the glacial/interglacial periods has much lower time resolution. Different time scales, different processes, different feedbacks, different causes. That does not fit with their narrative of "The One True Cause".

A purely statistical method like that of Koutsoyiannis et al cannot identify "cause" when the system has multiple paths and feedbacks operating at different time scales.

-

Bob Loblaw at 00:29 AM on 4 June 2024On Hens, Eggs, Temperature and CO2

David @ 5:

Yes, that wording of "commonly assumed" in the Koutsoyiannis paper is rather telling. Either they are unaware of the carbon cycle and climate science work that has gone into the understanding of the relationship between CO2 and global temperature, or they are using a rhetorical trick to wave away an entire scientific discipline as if it is an "assumption".

That Looney Tunes clip has one more snippet that I think applies to Koutsoyiannis et al: at the end Foghorn Leghorn says "No, I'd better not look". I think that Koutsoyiannis et al did that with respect to learning about the science of the carbon cycle: "No, I'd better not look".

-

michael sweet at 08:11 AM on 3 June 2024Is Nuclear Energy the Answer?

An organization called Institute for Energy Economics and Financial Analysis (IEEFA) just released a report on small modular reactors. The title of the report is "Small Modular Reactors: Still too expensive, too slow and too risky". It says that small nuclear reactors will not be able to contribute significantly to the energy transition and the money spent on them is being wasted.

Key Findings:

1) Small modular reactors still look to be too expensive, too slow to build, and too risky to play a significant role in transitioning from fossil fuels in the coming 10-15 years.

2) Investment in SMRs will take resources away from carbon-free and lower-cost renewable technologies that are available today and can push the transition from fossil fuels forward significantly in the coming 10 years.

3) Experience with operating and proposed SMRs shows that the reactors will continue to cost far more and take much longer to build than promised by proponents.

4) Regulators, utilities, investors and government officials should embrace the reality that renewables, not SMRs, are the near-term solution to the energy transition.

Follow the link above to read the full report. The IEEFA is apparently a left-leaning think tank that opposes nuclear power.

-

David Kirtley at 06:25 AM on 3 June 2024On Hens, Eggs, Temperature and CO2

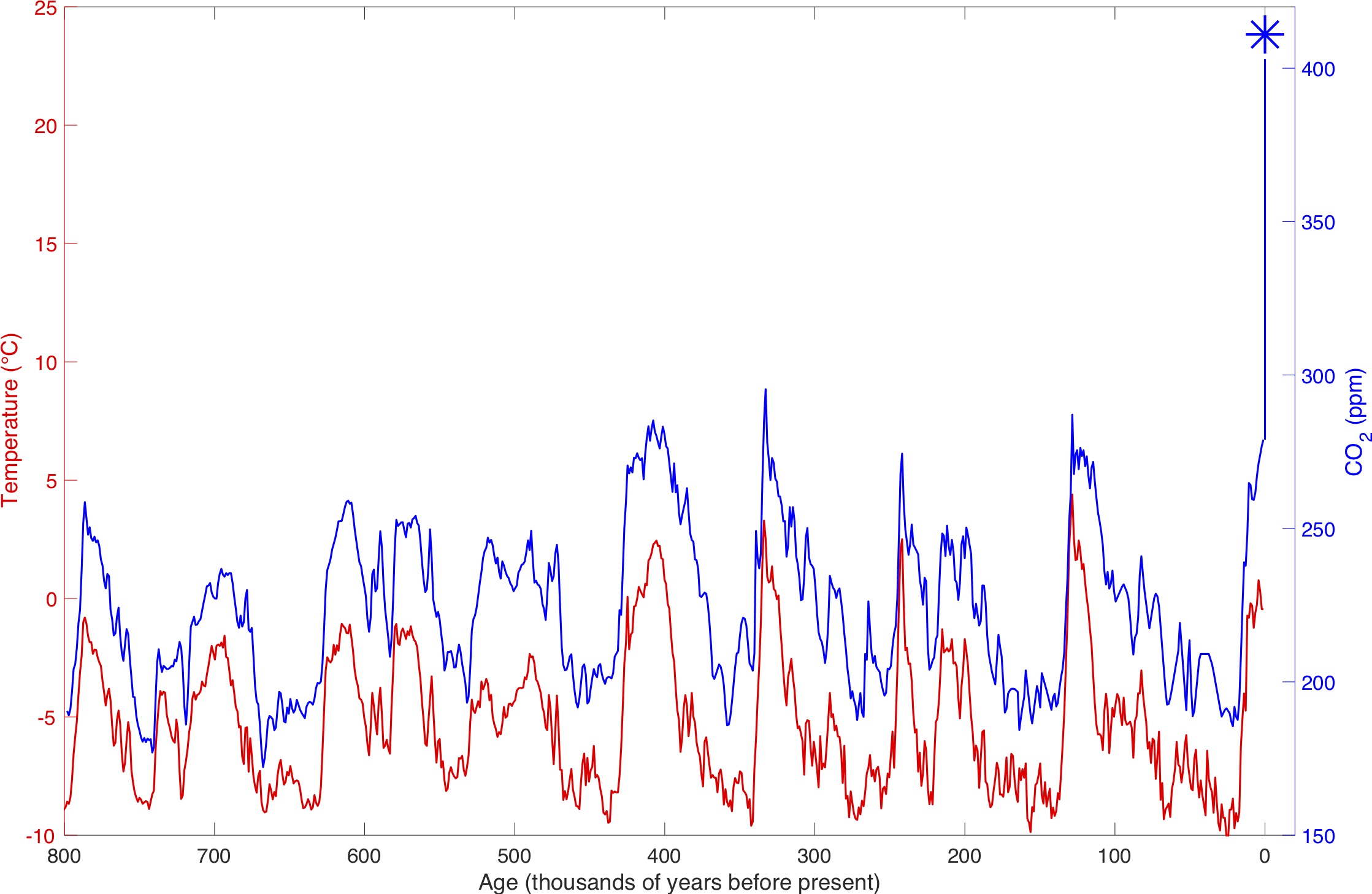

An easy way to test whether today's atmospheric temp inc. are causing today's rise in CO2 levels might be to look at this chart of data from the EPICA ice core:

From this SkS rebuttal: https://skepticalscience.com/co2-lags-temperature-intermediate.htm

Yes indeed, some of the CO2 increase during glacial-interglacial cycles was caused by a temperature increase first. Koutsoyiannis et al. would have us believe that the current huge increase in CO2 in the atmosphere is also caused by a temperature increase first.

Where is this huge increase in Temp?
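The question above can be put as a back-of-envelope calculation. This Python sketch uses rough round numbers that are assumptions, not values read off the EPICA chart: about 100 ppm of CO2 change for roughly 5 °C of global warming across a glacial cycle, and a rise from about 280 ppm to about 420 ppm today. It ignores the very different time scales involved, so treat it as an order-of-magnitude check only.

```python
# Rough, round-number assumptions (not read off the EPICA chart)
GLACIAL_CO2_PPM = 180.0
INTERGLACIAL_CO2_PPM = 280.0
GLACIAL_WARMING_C = 5.0      # assumed global-mean change; estimates span roughly 4-7 °C

PREINDUSTRIAL_CO2_PPM = 280.0
RECENT_CO2_PPM = 420.0       # approximate present-day level
OBSERVED_WARMING_C = 1.2     # approximate warming since pre-industrial times

# If temperature were driving CO2 the way it did across glacial cycles...
ppm_per_degree = (INTERGLACIAL_CO2_PPM - GLACIAL_CO2_PPM) / GLACIAL_WARMING_C
# ...this much warming would be needed to explain the modern CO2 rise
needed = (RECENT_CO2_PPM - PREINDUSTRIAL_CO2_PPM) / ppm_per_degree

print(f"glacial-cycle ratio: {ppm_per_degree:.0f} ppm per °C")
print(f"warming needed to explain the modern rise: {needed:.1f} °C")
print(f"warming actually observed: about {OBSERVED_WARMING_C} °C")
```

On those assumptions, temperature-driven CO2 release at glacial-cycle rates would need several degrees of warming to account for the modern rise, against roughly 1.2 °C observed.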

-

David Kirtley at 05:52 AM on 3 June 2024On Hens, Eggs, Temperature and CO2

From the Koutsoyiannis et al. abstract: "According to the commonly assumed causality link, increased [CO2] causes a rise in T. However, recent developments cast doubts on this assumption by showing that this relationship is of the hen-or-egg type, or even unidirectional but opposite in direction to the commonly assumed one."

"Commonly assumed"? I don't think so. The link between CO2 and Temp is shown by a wealth of actual physical evidence. There is no question that Temp increases can cause CO2 increases and that the opposite ("commonly assumed causality link") relationship is also true: CO2 inc. can cause Temp inc. Koutsoyiannis et al. seem to be saying that their study proves that the causality relationship can only be "unidirectional": Temp inc cause CO2 increases.

It is all very strange. They seem to be trying to answer questions about climate science which have very solid answers already from different lines of evidence. Is their statistical magic a new line of evidence which overturns the vast majority of climate science? I doubt it.

But, since we're on the subject of hens and eggs, this paper reminds me of an old Looney Tunes cartoon starring the rooster Foghorn Leghorn and the highly intelligent little chick, Egghead, Jr. Foghorn is babysitting little Egghead and they decide to play hide and seek. Egghead starts counting while Foghorn hides in a large "Feed Box". When Egghead finishes counting, he gets out a slide rule and a pencil and paper and runs some calculations to try to locate Foghorn. He grabs a shovel and starts digging a hole nowhere near the Feed Box. With a final tug on the shovel handle, Foghorn pops out of the hole, flabbergasted.

Foghorn Leghorn-Hide and Seek: https://www.youtube.com/watch?v=jMyD3TSXyUc

I think Koutsoyiannis et al. think they are like Egghead, Jr and have come up with some magical statistics which override our physical reality. Maybe their calculations would work in Looney Tunes land. But they don't work in the climate system we are familiar with.

-

BaerbelW at 19:02 PM on 2 June 2024Positive feedback means runaway warming

Please note: the basic version of this rebuttal was updated on June 2, 2024 and now includes an "at a glance" section at the top. To learn more about these updates and how you can help with evaluating their effectiveness, please check out the accompanying blog post @ https://sks.to/at-a-glance

-

Bob Loblaw at 02:02 AM on 2 June 2024On Hens, Eggs, Temperature and CO2

To continue, one nice new example that appears in this blog post is the accelerator versus the brake analogy. The OP does a nice job of describing the natural carbon cycle, pointing out that the natural portions of the cycle include both emissions and removals - adding to and subtracting from atmospheric CO2 storage.

Koutsoyiannis et al basically assume that if there are changes in atmospheric CO2, they must be linked to something that changed emissions. As the OP points out, the correlation Koutsoyiannis et al see (in the short-term detrended data set they massaged) is more likely related to changes in natural removals.

Once again, Koutsoyiannis et al do not realize the limitations of their methodology, ignore a well-known physical process in the carbon cycle (rates of natural atmospheric CO2 removal), and attribute their correlation to the wrong thing. The right thing isn't in their model (statistical method) or thought-space (mental model), so they don't see it.

The OP's bathtub analogy is useful to see this. The diagram (figure 4) looks at the long-term rise in bathtub level (CO2 rise), but it is easy to do a thought experiment on how we could introduce short-term variability into the water level. There are three ways:

- Short-term variability in the natural emissions (faucet on the left).

- Short-term variability in the human emissions (faucet on the right).

- Short-term variability in the natural sinks (drain pipe on the lower right).
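The bathtub can also be written as a few lines of Python. This is a toy one-box model with hypothetical parameter values (stock, gross natural flux and sink response are all made up for illustration), not the model behind figure 4 of the OP. Each of the three sources of short-term variability listed above maps onto one argument of the hypothetical `simulate` function.

```python
import random

def simulate(years, human_emissions, source_noise_sd=0.0, sink_noise_sd=0.0, seed=0):
    """Toy one-box "bathtub" for atmospheric carbon, in GtC.

    Inflow:  natural emissions (left faucet) + human emissions (right faucet).
    Outflow: natural sinks (drain), which remove more as the level rises
             above its pre-industrial equilibrium.
    All parameter values are hypothetical, chosen only for illustration.
    """
    rng = random.Random(seed)
    stock_pre = 590.0   # hypothetical pre-industrial atmospheric carbon, GtC
    natural_in = 200.0  # hypothetical gross natural emissions, GtC/yr
    k = 0.02            # hypothetical extra uptake per GtC of excess, 1/yr
    stock = stock_pre
    levels = [stock]
    for year in range(years):
        inflow = natural_in + rng.gauss(0, source_noise_sd) + human_emissions(year)
        outflow = natural_in + k * (stock - stock_pre) + rng.gauss(0, sink_noise_sd)
        stock += inflow - outflow
        levels.append(stock)
    return levels

# Same random wobbles in both runs; only the right-hand faucet differs
natural_only = simulate(100, lambda year: 0.0, source_noise_sd=2.0, sink_noise_sd=2.0)
with_humans = simulate(100, lambda year: 0.1 * year, source_noise_sd=2.0, sink_noise_sd=2.0)

print(f"natural only: {natural_only[-1]:.0f} GtC after 100 years")
print(f"with humans:  {with_humans[-1]:.0f} GtC after 100 years")
```

Setting `source_noise_sd`, giving `human_emissions` year-to-year ups and downs, or setting `sink_noise_sd` adds short-term wobbles to the level; in this toy only the human faucet produces the long-term rise.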

The bathtub analogy is similar to the water tank analogy that is used in this SkS post on the greenhouse effect. The primary analogy in that post is a blanket, but the level of water in a water tank appears further down the page.

In short, the Koutsoyiannis et al paper ignores known physics, fails to incorporate known physics in their methodology, and comes to incorrect conclusions because the correct conclusion involves factors that were eliminated from their analysis from the beginning.

-

Bob Loblaw at 01:31 AM on 2 June 2024On Hens, Eggs, Temperature and CO2

Yes, this blog post does a really good job of outlining the correct scientific background on atmospheric CO2 rise, and pointing out the glaring error that Koutsoyiannis et al have made.

The recent paper is a rehash of an earlier Koutsoyiannis paper that is alluded to but not specifically linked in the OP and comments. The OP does subtly link to a rebuttal publication of that earlier work (link repeated here... You'll have to verify you're not a robot to get to the paper). As Dikran has pointed out, the authors appear to have doggedly refused to accept their error.

Both the current Koutsoyiannis et al paper and the earlier one have threads over at PubPeer:

- The current paper only has one short comment.

- ...but the earlier paper has more extensive comments, including more detail on the criticisms Dikran mentions here in comment 1.

...and as Dikran mentions, this basic error is an old one, being repeated again and again in the contrarian literature on the subject. Two Skeptical Science blog posts from 11 and 12 years ago discuss this and similar errors. Plus ça change...

The blog And Then There's Physics also posted a blog on the earlier papers.

The importance of the differencing scheme used by Koutsoyiannis et al cannot be overstated. I hate to inject that dreaded word "Calculus" into the discussion, but if you'll bear with me for a moment I can explain. Taking differences (a form of detrending) is the discrete cousin of that dreaded Calculus process called differentiation - taking the derivative. This tells you the rate of change at any point in time - but it does not tell you how much CO2 accumulates over time. To get accumulation over time, you need to sum those changes over time - in Calculus-speak, you need to integrate.

The catch is, as Dikran points out, that taking differences has eliminated any constant factor - in Calculus-speak, the derivative of a constant is zero. And when you turn around and do the integration to look at how CO2 accumulates over time (basically, undo the differentiation), you need to remember to add the constant back in. Koutsoyiannis et al fail to do this, and then draw the erroneous conclusion that the constant is not a factor. Their method made it disappear, and they can't see it as a result. David Copperfield did not actually make that airplane disappear - he just applied a method that hid it from the sight of the audience. (Of course, David Copperfield knows the airplane did not disappear, and is just trying to entertain the audience. In contrast, it appears that Koutsoyiannis et al are fooling themselves.)
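Here is the integration point as a toy Python sketch with a made-up CO2 series (a steady 2 ppm/yr rise plus a small wobble). Differencing turns the steady rise into a constant average rate; a correlation on differenced data ignores that constant, and summing the remaining wobbles back up without it recovers no long-term rise at all. The names `integrate`, `wobble_only` and so on are hypothetical.

```python
import math

def diff(xs):
    """The Δ operator: change between successive samples."""
    return [b - a for a, b in zip(xs, xs[1:])]

def integrate(deltas, start, constant_rate=0.0):
    """Discrete integration: add the changes back up, starting from `start`.

    `constant_rate` is the constant that differencing hid; it is added back
    at every step.
    """
    levels = [start]
    for d in deltas:
        levels.append(levels[-1] + d + constant_rate)
    return levels

years = 60
# Synthetic CO2 in ppm: steady 2 ppm/yr rise plus a small wobble (made-up numbers)
co2 = [315.0 + 2.0 * t + 0.5 * math.sin(t) for t in range(years + 1)]

d_co2 = diff(co2)
mean_rate = sum(d_co2) / len(d_co2)           # the constant left behind by differencing
wobble_only = [d - mean_rate for d in d_co2]  # what a correlation effectively sees

with_constant = integrate(wobble_only, co2[0], constant_rate=mean_rate)
without_constant = integrate(wobble_only, co2[0])

print(f"actual rise:           {co2[-1] - co2[0]:.1f} ppm")
print(f"rebuilt with constant: {with_constant[-1] - co2[0]:.1f} ppm")
print(f"rebuilt without it:    {without_constant[-1] - co2[0]:.1f} ppm")
```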

At least introducing Calculus to the discussion gives me a chance to mention one of my two math jokes. (Yes, I know. "Math joke" is an oxymoron. Don't ask me to tell you the one about Noah and the snakes.)

Two mathematicians are in a bar, arguing about the general math knowledge of the masses. They end up deciding to settle the issue by seeing if the waitress can answer a math question. While mathematician A is in the bathroom, mathematician B corners the waitress and tells her that when his friend asks her a question, she should answer "one half X squared". A little later, when the waitress returns to the table, A asks her "what is the integral of X?". She answers as instructed, and mathematician A sheepishly pays off the bet and admits that B was right. As the waitress walks away, she is heard to mutter "pair of idiots. It's one-half X squared, plus a constant".

-

Dikran Marsupial at 23:44 PM on 31 May 2024On Hens, Eggs, Temperature and CO2

The earlier paper by Koutsoyiannis et al. includes this quotation:

"Deep doubts, deep wisdom; shallow doubts, shallow wisdom" – Chinese proverb

which seems somewhat ironic at this point.

-

Dikran Marsupial at 23:41 PM on 31 May 2024On Hens, Eggs, Temperature and CO2

Good article!

Koutsoyiannis et al. have made essentially the same mathematical blunder that Murray Salby did ten years ago (and he was far from being the first), which I covered here:

https://skepticalscience.com/salby_correlation_conundrum.html

Correlations are insensitive to constant offsets in the two signals on which they are computed. The differencing operator, Δ, which gives the difference between successive samples, converts the long-term linear trend in the signal to an additive constant. So as soon as you use Δ on both signals, the correlation can tell you precisely nothing about the long-term trends.
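This point is easy to check numerically. In this Python sketch (synthetic series, hypothetical names), two CO2 series share exactly the same short-term wobble, but one has a strong linear trend and the other has none. After applying Δ to both, the correlation with temperature is the same for the two, and adding a constant offset changes nothing either.

```python
import math

def mean(xs):
    return sum(xs) / len(xs)

def corr(x, y):
    """Pearson correlation: both series are centred first, so constant offsets drop out."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)

def diff(xs):
    """The Δ operator: difference between successive samples."""
    return [b - a for a, b in zip(xs, xs[1:])]

steps = range(50)
wobble_t = [math.sin(0.7 * t) for t in steps]
wobble_c = [math.sin(0.7 * (t - 1)) for t in steps]  # same wobble, one step later

# Hypothetical series: identical wobbles, very different long-term behaviour
temp = [0.02 * t + w for t, w in zip(steps, wobble_t)]
co2_rising = [2.0 * t + w for t, w in zip(steps, wobble_c)]  # strong linear trend
co2_flat = list(wobble_c)                                    # no trend at all

r_rising = corr(diff(temp), diff(co2_rising))
r_flat = corr(diff(temp), diff(co2_flat))
r_offset = corr([d + 100.0 for d in diff(temp)], diff(co2_flat))

print(f"r with rising CO2: {r_rising:.6f}")
print(f"r with flat CO2:   {r_flat:.6f}")
print(f"r with offset:     {r_offset:.6f}")
```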

When the earlier work was published in Proceedings of the Royal Society A, I communicated this error to both the authors of the paper and the editor of the journal. The response was, shall we say "underwhelming".

The communication (June 2022) included the observation that atmospheric CO2 levels are rising more slowly than the rate of fossil fuel emissions, which shows that the natural environment is a net carbon sink, and therefore the rise cannot be due to a change in the carbon cycle resulting from an increase in temperature. It is "disappointing" that the authors have published a similar claim again (submission received 17 March 2023) when they had already been made aware that their claim is directly refuted by reliable observations.

Moderator Response:[BL] Link activated.
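The mass-balance argument in the comment above needs only arithmetic. This Python sketch uses round numbers of roughly recent magnitude that are assumptions, not exact figures (about 2.4 ppm/yr of atmospheric growth, about 10 GtC/yr of fossil emissions, and about 2.12 GtC per ppm), and it leaves out land-use emissions, which would only make the inferred natural sink larger. The function name is hypothetical.

```python
GTC_PER_PPM = 2.12  # approximate conversion between ppm of CO2 and GtC in the atmosphere

def net_natural_flux(atmos_growth_ppm_per_yr, fossil_emissions_gtc_per_yr):
    """Mass balance: atmospheric change = fossil emissions + net natural flux.

    A negative result means nature is removing more CO2 than it emits (a net sink).
    """
    atmos_growth_gtc = atmos_growth_ppm_per_yr * GTC_PER_PPM
    return atmos_growth_gtc - fossil_emissions_gtc_per_yr

# Illustrative round numbers of roughly recent magnitude (not exact measurements)
flux = net_natural_flux(2.4, 10.0)
print(f"net natural flux: {flux:.1f} GtC/yr")
```

As long as the atmosphere gains less carbon than fossil fuels add, the result is negative: the natural world is taking carbon out, not putting extra in.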

-

michael sweet at 02:45 AM on 30 May 20242024 SkS Weekly Climate Change & Global Warming News Roundup #21

There was an article today in The Guardian about the health benefits from currently installed renewable energy. The original study is here.

Installing wind and solar from 2019 to 2022 resulted in reductions of SO2 and nitrogen oxides (NOx) in the USA. Both these chemicals cause respiratory damage. They found that the reduction of pollution resulted in $249 billion in health and climate benefits. I note that these benefits will continue for the foreseeable future and will be much greater than the cost of installing the renewable energy. In addition, the renewable energy is cheaper than fossil energy.

Jeremiah Johnson, a climate and energy professor at North Carolina State University who was not involved in the study, said:

The public “is often focused on the challenges we face” when it comes to ecological damage, he said. “But it is also important to recognize when something is working.”

It would be interesting to see what David-acct thinks about these massive savings from installing renewable energy.

-

BaerbelW at 02:38 AM on 29 May 2024Mastering FLICC - A Cranky Uncle themed quiz

OPOF @2

Thanks!

felixscout @3

Thanks for the feedback! The link is actually correct as it's the summary page for all of the cartoons regardless of language. They show up sorted alphabetically in blocks: German (DE), English (EN) and Dutch (NL), so there's unfortunately some scrolling involved.

-

felixscout at 21:11 PM on 28 May 2024Mastering FLICC - A Cranky Uncle themed quiz

Thank you for this.

The one issue I discovered was that, when going through the answers after submitting the quiz, the link to the correct Cranky Uncle cartoon went to the German version.

-

One Planet Only Forever at 02:05 AM on 28 May 2024Mastering FLICC - A Cranky Uncle themed quiz

BaerbelW,

I think that the 'standard' response that is provided to a wrong choice was a good response. A person taking the quiz to learn would be inclined to review the FLICC information and try again.
