How reliable are climate models?

What the science says...

Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.

Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, in one way or another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.
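At the "few equations on a spreadsheet" end of that range, a zero-dimensional energy balance model fits in a dozen lines. The Python sketch below balances absorbed sunlight against emitted infrared radiation and solves for a single global temperature. The solar constant (1361 W/m²) and albedo (0.30) are approximate textbook values, and the emissivity of 0.612 is an assumption picked purely to mimic the greenhouse effect crudely; none of these numbers come from this article.

```python
SIGMA = 5.670374419e-8  # Stefan-Boltzmann constant, W m^-2 K^-4
S0 = 1361.0             # total solar irradiance, W m^-2 (approximate)
ALBEDO = 0.30           # planetary albedo (approximate)


def equilibrium_temperature(solar=S0, albedo=ALBEDO, emissivity=1.0):
    """Global temperature (K) at which emitted infrared balances absorbed sunlight.

    absorbed = solar * (1 - albedo) / 4   (dividing by 4 spreads the intercepted
                                           beam over the whole sphere)
    emitted  = emissivity * SIGMA * T**4
    """
    absorbed = solar * (1.0 - albedo) / 4.0
    return (absorbed / (emissivity * SIGMA)) ** 0.25


if __name__ == "__main__":
    print(round(equilibrium_temperature(), 1))                  # no greenhouse effect
    print(round(equilibrium_temperature(emissivity=0.612), 1))  # assumed crude greenhouse
```

With these assumptions the first case comes out at roughly 255 K and the second at roughly 288 K. Everything a real climate model adds (oceans, winds, clouds, ice, chemistry) is missing here.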

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Weather forecast models rarely extend even a fortnight ahead. Big difference. Climate trends deal with things such as temperature or sea-level changes over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system's heading.

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then either because we made observations or because evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test, and we have good reason to expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.


Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.
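The smoothing effect of averaging can be sketched in a few lines of Python. This is a minimal illustration on made-up numbers, not real temperature data: a steady trend with one extreme year added, passed through a 30-year moving average.

```python
def running_mean(values, window=30):
    """Trailing moving average: output[k] is the mean of values[k : k + window]."""
    if not 1 <= window <= len(values):
        raise ValueError("window must be between 1 and len(values)")
    total = sum(values[:window])
    out = [total / window]
    for i in range(window, len(values)):
        # slide the window one step: add the newest value, drop the oldest
        total += values[i] - values[i - window]
        out.append(total / window)
    return out


# Synthetic, made-up series: a steady 0.02-per-year trend plus one extreme year.
years = list(range(100))
trend = [0.02 * y for y in years]
with_extreme = list(trend)
with_extreme[60] += 2.0  # a single, rare, very large anomaly

smoothed_trend = running_mean(trend)
smoothed_extreme = running_mean(with_extreme)
largest_effect = max(abs(a - b) for a, b in zip(smoothed_extreme, smoothed_trend))
print(round(largest_effect, 3))  # the 2.0 spike is diluted to 2.0 / 30
```

A 2.0-unit spike in a single year shifts each 30-year average that contains it by only 2.0/30, about 0.07, while the underlying trend passes through intact.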

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate in explaining temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.
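Hindcast testing boils down to comparing a simulated past against the observed past. A minimal Python sketch of two common checks, overall error (RMSE) and the linear trend per decade, is below. The input series are hypothetical straight lines for illustration only, and real model evaluations use far richer diagnostics than these two numbers.

```python
import math


def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    return sxy / sxx


def rmse(simulated, observed):
    """Root-mean-square difference between two equal-length series."""
    return math.sqrt(sum((s - o) ** 2 for s, o in zip(simulated, observed)) / len(observed))


def hindcast_report(years, simulated, observed):
    """Compare a simulated past against the observed past: overall error and decadal trends."""
    return {
        "rmse": rmse(simulated, observed),
        "sim_trend_per_decade": 10 * ols_slope(years, simulated),
        "obs_trend_per_decade": 10 * ols_slope(years, observed),
    }


# Hypothetical inputs for illustration only (not real model output or observations).
years = list(range(1960, 2011))
observed = [0.018 * (y - 1960) for y in years]
simulated = [0.020 * (y - 1960) for y in years]
print(hindcast_report(years, simulated, observed))
```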

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.
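The kind of experiment described above can be mimicked, very crudely, with a one-box energy balance model, C dT/dt = F(t) - λT. In this Python sketch the forcing pulse, its 1-year decay time, the heat capacity C and the feedback parameter λ are all illustrative assumptions, not the Pinatubo forcing or the values used by any published model.

```python
import math


def one_box_response(forcing, dt, heat_capacity=8.0, feedback=1.2):
    """Integrate C dT/dt = F(t) - lambda * T with forward Euler.

    forcing       : forcing anomaly per time step, W m^-2
    dt            : time step, years
    heat_capacity : C, W yr m^-2 K^-1 (roughly an ocean mixed layer; assumed)
    feedback      : lambda, W m^-2 K^-1 (assumed)
    Returns the temperature anomaly (K) at each step, starting from 0.
    """
    temps = [0.0]
    for f in forcing[:-1]:
        t = temps[-1]
        temps.append(t + dt * (f - feedback * t) / heat_capacity)
    return temps


dt = 1.0 / 12.0          # monthly steps
months = range(12 * 10)  # ten years
# Hypothetical eruption: -3 W m^-2 at the start, decaying with a 1-year e-folding time.
forcing = [-3.0 * math.exp(-m * dt / 1.0) for m in months]
temps = one_box_response(forcing, dt)

coldest = min(temps)
print(f"peak cooling {coldest:.2f} K after {temps.index(coldest) / 12:.1f} years")
print(f"anomaly after ten years {temps[-1]:.2f} K")
```

The toy model cools for a couple of years after the pulse and then recovers slowly, because the assumed ocean heat capacity delays both the cooling and the recovery. Real models represent this with far more physics.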

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."
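One simple way to ask whether a projected warming trend is "indistinguishable" from an observed one is to check whether the two trends differ by less than their combined uncertainty. The Python sketch below does that. It is a simplified stand-in, not the exact procedure of Hausfather et al. 2019, and the trend values in the example are hypothetical. The standard error here assumes independent residuals, which understates the uncertainty for real, autocorrelated temperature series.

```python
import math


def ols_trend_with_se(xs, ys):
    """OLS slope and its standard error (assumes independent residuals)."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    sxx = sum((x - mx) ** 2 for x in xs)
    slope = sum((x - mx) * (y - my) for x, y in zip(xs, ys)) / sxx
    intercept = my - slope * mx
    residuals = [y - (intercept + slope * x) for x, y in zip(xs, ys)]
    s2 = sum(r * r for r in residuals) / (n - 2)
    return slope, math.sqrt(s2 / sxx)


def trends_consistent(trend_a, se_a, trend_b, se_b, z=1.96):
    """True if the two trends differ by less than z combined standard errors."""
    return abs(trend_a - trend_b) <= z * math.sqrt(se_a ** 2 + se_b ** 2)


# Hypothetical trends in K per decade, with standard errors (illustration only).
print(trends_consistent(0.18, 0.02, 0.20, 0.02))  # small difference, overlapping errors
print(trends_consistent(0.18, 0.01, 0.30, 0.01))  # large difference, tight errors
```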

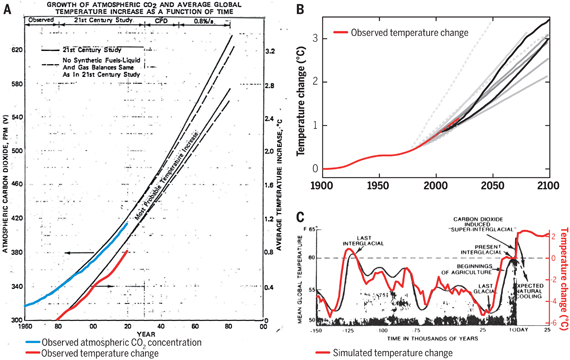

Talking of empirical evidence, you may be surprised to know that scientists at the fossil fuel giant Exxon knew all about climate change, all along. A recent study of their own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We had a blog post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worthy of part-quotation:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

Arguments

DSL, I think my argument is sufficiently objective to allow for criticism of failure in all directions? (I'm not sure what you mean.) I talk about the equations, the procedure, and give examples of approximations. I also gave examples from solid mechanics, a different field after all. Numerical integration applies to all fields. I was trying to illustrate that modelling is not as perfect as it may seem, both to experts and to the layperson. Also, that it takes decades to develop good models.

This site is for skeptics. So to your question "Let's imagine that the sign of the alleged failure was in the other direction. Would you still make the comment?": unless you disagree with my content, so what?

Razo, pointing out that modeling is "not as perfect as it may seem" is a no-brainer. Who has been saying it is? Are you suggesting that climate modeling is useless? Where is your comment going? Or is that the extent of it?

Razo: You really should do some homework before piously pontificating about climate models. The Intermediate version of the OP has a Reading List appended to it. If you read the first Spencer Weart article, you will discover that the first General Circulation Model was created in the mid-1950s. Would six decades of model development and enhancement satisfy your rather vague time criterion?

Well, models from 30 years ago have been remarkably accurate. The Manabe model used by Broecker in the landmark paper 39 years ago nailed 2010 temperatures remarkably well. However, the model is too primitive to deal with much more than energy balance, which is reasonably well understood. The inner workings of climate internal variability, regional differences, etc. are not captured at all. What exactly do you mean by the comment?

That all models are wrong is trivial. The question is, are they skillful? I.e., do they allow you to make better predictions of the future than you could without a model? How would you make a prediction for future temperatures without a model?
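Skill in this sense is usually measured against a reference forecast made without the model. A minimal Python sketch of a mean-squared-error skill score follows; the "no change" reference and all the numbers are hypothetical, chosen only to show the arithmetic.

```python
def mse(predicted, observed):
    """Mean squared error between two equal-length series."""
    return sum((p - o) ** 2 for p, o in zip(predicted, observed)) / len(observed)


def skill_score(model_pred, reference_pred, observed):
    """MSE skill score relative to a no-model reference forecast.

    1.0 = perfect, 0.0 = no better than the reference, negative = worse.
    """
    ref_error = mse(reference_pred, observed)
    if ref_error == 0:
        raise ValueError("reference forecast is already perfect; skill is undefined")
    return 1.0 - mse(model_pred, observed) / ref_error


# Hypothetical anomalies (K). The no-model reference assumes nothing changes.
observed = [0.10, 0.20, 0.30, 0.40]
no_change = [0.0, 0.0, 0.0, 0.0]
model = [0.12, 0.18, 0.31, 0.41]
print(round(skill_score(model, no_change, observed), 3))
```

A score above zero means the model beats the no-model guess; a score of 1 would be a perfect forecast.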

Recent article on this here.

If you are trying to imply that AGW is dependent on models, then please try reading the IPCC WG1 report first so we can have a more informed discussion.

I find Fig. 1 of the response curious. Figure 1a, the natural forcings, shows quite a bad match with the climate model, especially for the 1850s. Fig. 1b, the man-made forcings, shows a great match in the 1850s, which is flat around zero. The model also shows a great match around the 80s and 90s, which is probably what it is calibrated to. In 1c, the combination of the two, the match is pretty good.

Even though it's agreed that there is little effect of human activity in the 1860s, there is a significant correction in the model 1c at these dates. The poor modelling of natural forcing doesn't say much for the model. This may best describe the error of the model, say 0.2C. The choice of calibration date appears to have a large effect on the results. I don't think AR4 models 'perturbate' calibration dates.

It's hard to understand the model in such detail, but it does appear that the models are only able to predict recent warming.

Razo - these diagrams are from TAR, based on Stott et al 2000. I would agree there is an issue and I would hazard a guess the cloud-response to sulphate aerosols is exaggerated at low concentrations.

If you look at the corresponding diagram in FAQ 10.1, Fig 1 in latest report you will see that neither the CMIP3 nor CMIP5 model ensembles have this issue.

Razo,

You say "The model also shows a great match around the 80s and 90s, which is probably what it is calibrated to." This is fundamentally not how climate models work. You are coming in from another line of work and incorrectly applying your modeling methods to climate. The climate models are designed from the ground up using basic physics principles and are not "calibrated" to any period. If you wish to continue posting on an informed board, you need to do your homework and stop making baseless assertions.

You would be much better served by asking questions about how climate models work, which you obviously do not understand, than incorrectly complaining that those models do not work properly. Real Climate has several basic links on how climate models work.

Michael Sweet, I think my tone is reasonably passive to suggest I'm open to correction. I am not a denier; I present myself as an educated skeptic, and I am here to learn. When I make a mistake, people expect me to have a PhD in GCM. I think the point of calibration can be a little subtle, but it doesn't take away from my post.

I did read about AR4 models:

http://web.archive.org/web/20100322194954/http://tamino.wordpress.com/2010/01/13/models-2/

Here they say

''But there’s a sizeable spread in the model outputs as well (especially in the early 20th century, since these results are set to a 1980-2000 baseline).''

In this case the spread is very small in the 1980-2000 period. If it's just blind physics, it is improbable that the spread of the results would behave so differently in this time period.

Also, in the rebuttal above, the basic section, they say models use hindcasting. So if they test with hindcasting and it doesn't work, one tries to improve their model. This is what I call calibrating. Am I wrong on this?

I think I see the word 'calibrate' has a charged meaning, because deniers use it. Well, I didn't know that. LOL. Anyway, I presented my definition of calibrate. Please note the question mark above, indicating a question; you invited me to ask.

Razo writes "When I make a mistake, people expect me to have a PhD in GCM."

and yet on another thread, (s)he writes " I am not in the climate field, but I do have experience with numerical modelling. I simply think GCM should be able to predict trade winds and ocean warming."

where (s)he is explicitly claiming to have a background that provides a position to criticise GCMs. As it happens the criticism shows a fundamental lack of understanding of what GCMs are designed to do and what can be expected of them. Razo, you need to understand first and then criticise. Asking questions is a better way of learning than making assertions or criticism, especially when you are not very familiar with the problems. Your tone is not "reasonably passive" as the quote above demonstrates.

BTW Razo, baselining and calibration are not the same thing. Yes, the variability in the baselining period should be expected to underestimate the true variability of the model projections, even if the model physics is 100% correct.

Well, here are a few examples of the use of the word 'calibration' or synonyms in the climate change literature, found by simply googling 'calibration climate change model'. I think my use of the term and the idea are reasonably in line with the scientific community. Where am I going wrong on this? I have not read each of these exhaustively; I am only showing the use of the expression.

1) http://www.iac.ethz.ch/groups/schaer/research/reg_modeling_and_scenarios/clim_model_calibration

This is a Swiss institute for atmospheric and climate science:

''The tuning of climate models in order to match observed climatologies is a common but often concealed technique. Even in physically based global and regional climate models, some degree of model tuning is usually necessary as model parameters are often poorly confined. This project tries to develop a methodological framework allowing for an objective model tuning with a limited number of (expensive) climate model integrations.''

2) http://journals.ametsoc.org/doi/abs/10.1175/2011BAMS3110.1

American Meteorological Society

''Calibration Strategies: A Source of Additional Uncertainty in Climate Change Projections''

3) http://www.academia.edu/4210419/Can_climate_models_explain_the_recent_stagnation_in_global_warming

An article titled ''Can climate models explain the recent stagnation in global warming?''

''In principle, climate model sensitivities are calibrated by fitting the climate response to the known seasonal and latitudinal variations in solar forcing, as well as by the observed climate change to increased anthropogenic forcing over a longer period, mostly during the 20th century. It would be difficult to modify the model calibration significantly to reproduce the recent global warming slow down while still satisfying these other major constraints.''

Razo,

In the second question at Real Climate's FAQ on Global Climate Models they say:

"Are climate models just a fit to the trend in the global temperature data? No. Much of the confusion concerning this point comes from a misunderstanding stemming from the point above. Model development actually does not use the trend data in tuning (see below). Instead, modellers work to improve the climatology of the model (the fit to the average conditions), and it’s intrinsic variability (such as the frequency and amplitude of tropical variability). The resulting model is pretty much used ‘as is’ in hindcast experiments for the 20th Century." (http://www.realclimate.org/index.php/archives/2008/11/faq-on-climate-models/)

You say in reply to me: "If it's just blind physics, it is improbable that the spread of the results would behave so differently in this time period." It appears to me that you are saying that the results are too good to be based on just the physics.

I am not an expert in this area, but it seems to me that you are confusing your definitions of tuning and calibration for these models because you have not read the background material. This is very common and most of the experienced posters have seen this many times. As I understand Tamino's reference to baselining, they are aligning the data from 1980-2000 for comparison; they did not use that data to tune the models. Perhaps the alignment you referred to above is from how the data are graphed for comparison. Read the above Real Climate reference.

In your post at 712, your first and second references are to Regional Climate Models, not Global Climate models. They are not done the same way. You need to clear up in your mind what you want to discuss. Since you mentioned the "hiatus", it previously appeared that you were discussing Global Climate models.

Hans Von Storch is a respected scientist, but there are many different opinions on how well Global Climate Models are performing. Tamino states at the end of the post you linked above "The outstanding agreement holds not just for the 20th century, but into the 21st as well — putting the lie to claims that recent observations somehow “falsify” IPCC model results." (my emphasis) That was in 2010, but Tamino still feels that climate models are holding their own.

There are several posters here that are more experienced than I am. They seem to be holding back. Perhaps if you make fewer statements about the physics being too good, people will be more friendly.

You frequently make sweeping statements and confuse apples and oranges, for example your confusion of Regional and Global climate models above. This makes you appear hostile. Dikran Marsupial is very knowledgeable and can answer your questions if you pose them in a less hostile tone.

Razo, none of the examples of the use of "calibration" you provide are referring to baselining, which kind of makes my point: you are not using the term in the usual sense in climatology. There is a difference between baselining (which was the cause of the phenomenon you were describing) and calibration (a.k.a. tuning) in the sense of those three quotes. The models are not affected in any way by baselining; it is a method used in the analysis of model output and is not part of the model in any way.

Please, do yourself a favour and try and learn a bit more before making assertions or criticisms (or at least pay attention to responses to your posts, such as mine at 711).

I wasn't thinking that calibrating means curve fitting or fitting a trend.

I know there are different opinions. Some, which are not deniers, appear to agree with me.

I can appreciate baseline study is a little more complex. I think the topic of my post 706 is not affected by this.

This is what I found for what a baseline climate is for. Amongst other things, they do say it's used for calibration.

http://www.cccsn.ec.gc.ca/?page=baseline

''Baseline climate information is important for:

-characterizing the prevailing conditions under which a particular exposure unit functions and to which it must adapt;

-describing average conditions, spatial and temporal variability and anomalous events, some of which can cause significant impacts;

-calibrating and testing impact models across the current range of variability;

-identifying possible ongoing trends or cycles; and

-specifying the reference situation with which to compare future changes.''

Razo, climate modellers explicitly state that climate models have no skill at decadal-level prediction. You seem to think, from your experience as a numerical modeller, that they should be able to predict the trade winds (aka the ENSO cycle), but have you tried predicting weather beyond 5 days? Weather prediction (and ENSO prediction) are initial-value problems limited by chaos theory. Climate prediction is a boundary-value problem where internal variability is bounded by energy levels (by analogy, you might get warm days in winter, but the average temperature for a month is going to be lower in winter than in summer). Climate models are trying to predict what will happen to 30-year averages. Got a better way of doing it?
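The initial-value versus boundary-value distinction can be illustrated with the Lorenz (1963) system. In the Python sketch below, two runs start 1e-8 apart: their states soon diverge completely, yet their long-run averages of z stay close. The classic parameters (σ = 10, ρ = 28, β = 8/3) are the conventional ones; the step size, run length and starting point are arbitrary choices. This is a toy demonstration, not a climate model.

```python
def lorenz_rhs(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Lorenz (1963) equations with their classic parameter values."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """One fourth-order Runge-Kutta step."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz_rhs(state)
    k2 = lorenz_rhs(shifted(state, k1, dt / 2))
    k3 = lorenz_rhs(shifted(state, k2, dt / 2))
    k4 = lorenz_rhs(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def integrate(state, dt, n_steps):
    trajectory = [state]
    for _ in range(n_steps):
        state = rk4_step(state, dt)
        trajectory.append(state)
    return trajectory


def separation(p, q):
    return max(abs(pi - qi) for pi, qi in zip(p, q))


dt, n_steps = 0.01, 40_000                               # 400 model time units
run_a = integrate((1.0, 1.0, 1.0), dt, n_steps)
run_b = integrate((1.0, 1.0, 1.0 + 1e-8), dt, n_steps)   # tiny initial difference

early_gap = separation(run_a[500], run_b[500])                             # t = 5
late_gap = max(separation(run_a[i], run_b[i]) for i in range(3000, 5001))  # t = 30..50

spin_up = 1000                                           # discard the first 10 time units
mean_z_a = sum(s[2] for s in run_a[spin_up:]) / (len(run_a) - spin_up)
mean_z_b = sum(s[2] for s in run_b[spin_up:]) / (len(run_b) - spin_up)
print(f"gap at t=5: {early_gap:.1e}, largest gap for t=30..50: {late_gap:.1f}")
print(f"long-run mean of z: {mean_z_a:.2f} vs {mean_z_b:.2f}")
```

In this toy system the exact trajectory is unpredictable beyond a short horizon, while the long-run statistics are not; that is the sense in which a 30-year average can be predictable when the weather on a given day is not.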

They are incredibly useful tools in climate science, but if you want to evaluate the AGW hypothesis, then please do it properly rather than making uninformed stabs at things you haven't understood. Perhaps start with the IPCC chapters on the subject, where everything is referenced to the relevant published science?

Razo - Baselines are not complex in the least. Simply put, you take two series, and using a baseline period adjust them so that they have the same mean over that period -in order to see how they change relative to one another.

For example, comparing GISTEMP and HadCRUT4 without adjusting for the fact that they use different baselines:

And by setting them to a common baseline of 1980-1999:

A common baseline is really a requirement for comparing two data series.
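The adjustment KR describes takes only a few lines. In this Python sketch the two series are made-up stand-ins with the same shape but a constant 0.3 K offset, playing the role of two datasets reported against different reference periods; they are not actual GISTEMP or HadCRUT4 values.

```python
def rebaseline(years, values, start, end):
    """Shift a series so its mean over the years start..end (inclusive) is zero."""
    base = [v for y, v in zip(years, values) if start <= y <= end]
    if not base:
        raise ValueError("no data inside the baseline period")
    offset = sum(base) / len(base)
    return [v - offset for v in values]


# Two made-up anomaly series with the same shape but different reference periods,
# so one sits a constant 0.3 K above the other (illustrative numbers only).
years = list(range(1970, 2021))
series_a = [0.02 * (y - 1970) for y in years]
series_b = [0.02 * (y - 1970) + 0.3 for y in years]

a_1980_1999 = rebaseline(years, series_a, 1980, 1999)
b_1980_1999 = rebaseline(years, series_b, 1980, 1999)
print(max(abs(a - b) for a, b in zip(a_1980_1999, b_1980_1999)))  # ~0: offset removed
```

After re-baselining, the constant offset disappears and only the relative changes remain, which is what a comparison between datasets (or between models and observations) needs.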

Razo - I would point out that the reference you made here to "Can climate models explain the recent stagnation in global warming" is to an un-reviewed blog article.

The published peer-reviewed literature on global models, on the other hand, states something quite different, such as in Schmidt et al 2014. This is discussed in some detail here on SkS. Climate model projections are run with forcing projections, and that includes the CMIP3 and CMIP5 model sets discussed by the IPCC. And each model run represents a response to those projected forcings.

When (as per that paper) you incorporate observed, not projected, forcings, it is clear that the models are quite good.

Hey KR. Thank you.

I wanted to point out to Dikran Marsupial, that the point of my post 706 was that the model that includes man made forcings only seems to be reducing the large error of the natural forcing only model in the 1850s when they are combined. This is about figures 1a, 1b, and 1c in the rebuttal on the intermediate page.

So KR I have a couple questions: 1) Is the common base the measured values or the ensemble mean? 2) does it make a difference to the results if you change the baseline dates?

michael sweet,

When I am talking about calibration, I look at it more like this (slightly simplified version follows):

Computer models are based on math. Math is equations. For curve fitting or trends, one guesstimates an equation based on a graph. For more physical models, the equations are derived from basic principles. In both cases some 'calibration' is done to establish the equation's parameters. In the latter case, sometimes it's easy, like g = 9.81 m/s².

As I understand it, GCMs basically integrate the Navier-Stokes equations. These are big and complicated. They can, however, be broken up into different pieces, and a large part of the calibration can be done in parts. Some of the parameters are omitted and some are estimated using yet another equation, and maybe curve fitting.

On top of this, computers don't do math like humans. They usually break it into small steps which they perform fast. So the solution process itself is approximate.
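The effect of breaking a calculation into small steps can be seen on an equation with a known answer. This Python sketch applies the forward Euler method (chosen only because it is the simplest scheme; GCMs use more sophisticated ones) to dy/dt = -y and compares the result at t = 1 with the exact value e^-1.

```python
import math


def euler(f, y0, t_end, n_steps):
    """Forward Euler: march dy/dt = f(t, y) from t = 0 to t_end in n_steps equal steps."""
    h = t_end / n_steps
    t, y = 0.0, y0
    for _ in range(n_steps):
        y += h * f(t, y)
        t += h
    return y


exact = math.exp(-1.0)  # solution of dy/dt = -y, y(0) = 1, at t = 1
errors = {}
for n in (10, 100, 1000):
    errors[n] = abs(euler(lambda t, y: -y, 1.0, 1.0, n) - exact)
    print(n, errors[n])
```

The error shrinks roughly tenfold each time the step shrinks tenfold, so the approximation error of the solution process is controllable, at a price in computing time.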

Razo: So? That doesn't make them unreliable or unskillful. You seem to be saying "it's complex, therefore they must be wrong". Much more importantly, you don't have to rely on models to verify AGW, nor to see that we have a problem. Empirically, sensitivity is most likely between 2 and 4.5. From bottom-up reasoning, you need a large unknown feedback to get sensitivity below 2 (Planck's law gets you 1.1, Clausius-Clapeyron gives you 2, with albedo to follow). And as for models, the robust predictions from models seem to be holding up pretty well (e.g. see here).
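The back-of-envelope numbers in that comment can be reproduced with two textbook approximations: the logarithmic CO2 forcing formula ΔF = 5.35 ln(C/C0) W/m², and a Planck response of about 3.2 W/m² per kelvin. The Python sketch below uses both. The net feedback factor of 0.45 is a hypothetical value picked to show how an amplification of 1/(1 - f) turns a little over 1 K into about 2 K; it is not an estimate of any real feedback.

```python
import math


def co2_forcing(c, c0):
    """Simplified CO2 radiative forcing in W m^-2: 5.35 * ln(C / C0)."""
    return 5.35 * math.log(c / c0)


def equilibrium_warming(forcing, planck=3.2, feedback_factor=0.0):
    """No-feedback (Planck) response, amplified by 1 / (1 - f) for net feedback factor f."""
    if feedback_factor >= 1.0:
        raise ValueError("f >= 1 gives an unbounded response in this simple formula")
    return forcing / planck / (1.0 - feedback_factor)


f_2x = co2_forcing(560.0, 280.0)                                  # doubled CO2, about 3.7 W m^-2
print(round(equilibrium_warming(f_2x), 2))                        # Planck response alone
print(round(equilibrium_warming(f_2x, feedback_factor=0.45), 2))  # hypothetical f
```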

Razo,

Calibration of Global Climate Models is difficult. I understand that they are not calibrated to match the temperature trend (either for forecast or hindcast). The equations are adjusted so that measured values like cloud height and precipitation are close to climatological averages for times when they have measurements (hindcasts). The temperature trends are an emergent property, not a calibrated property. This also applies to ENSO. When the current equations are implemented, ENSO emerges from the calculations; it is not a calibrated property.
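The distinction between tuning to a climatology and letting the trend emerge can be shown with a toy zero-dimensional model. In this Python sketch the single free parameter (an effective emissivity) is tuned by bisection so that the model's mean temperature matches 288 K; the response to an added 3.7 W/m² forcing (roughly doubled CO2) is then computed without any further fitting. The solar constant, albedo and target temperature are approximate textbook values, and the model has no feedbacks, so its warming is only the Planck response.

```python
SIGMA = 5.670374419e-8                  # Stefan-Boltzmann constant, W m^-2 K^-4
ABSORBED = 1361.0 * (1.0 - 0.30) / 4.0  # absorbed sunlight, W m^-2 (approximate values)


def equilibrium_temperature(emissivity, extra_forcing=0.0):
    """Toy zero-dimensional model: emissivity * SIGMA * T**4 = ABSORBED + extra_forcing."""
    return ((ABSORBED + extra_forcing) / (emissivity * SIGMA)) ** 0.25


def tune_emissivity(target_temp, lo=0.3, hi=1.0, tol=1e-10):
    """Bisection on emissivity so the model's mean state matches target_temp.

    Temperature falls as emissivity rises, so a too-warm guess means the
    answer lies at higher emissivity.
    """
    while hi - lo > tol:
        mid = 0.5 * (lo + hi)
        if equilibrium_temperature(mid) > target_temp:
            lo = mid
        else:
            hi = mid
    return 0.5 * (lo + hi)


# Step 1: tune the one free parameter to a climatological mean only (no trend data).
eps = tune_emissivity(288.0)
# Step 2: the response to an added forcing is not tuned; it follows from the equation.
warming = equilibrium_temperature(eps, extra_forcing=3.7) - equilibrium_temperature(eps)
print(round(eps, 3), round(warming, 2))
```

Nothing about a warming trend went into the tuning step; the roughly 1.1 K response falls out of the physics already in the equation.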

Exact discussions of calibration seem excessive to me. In 1894, Arrhenius calculated from basic principles, using only a pencil, and estimated the climate sensitivity as 4.5C. This value was not calibrated or curve fitted at all; there was no data to fit to. The current range (from IPCC AR5) is 1.5-4.5C with a most likely value near 3 (IPCC does not state a most likely value). If the effect of aerosols is high, the value could be 3.5-4, almost what Arrhenius calculated without knowing about aerosol effects. If it was really difficult to model climate, how could Arrhenius have been so accurate when the stratosphere had not even been discovered yet? To support your claim that the models are not reliable, you have to address Arrhenius' projection, made 120 years ago. If it is so hard to model climate, how did Arrhenius successfully do it? Examinations of other model predictions (click on the Lessons from Past Predictions box to get a long list) compared to what has actually occurred show scientists have been generally accurate. You are arguing against success.

A brief examination of the sea level projections in the OP shows that they are too low. The IPCC has had to raise its projection for sea level rise in the last two reports and will have to significantly increase it again in the near future. Arctic sea ice collapsed decades before projections, and other effects (drought, heat waves) are worse than projected only a decade ago. Scientists did not even notice ocean acidification until the last 10 or 20 years. If your complaint is that the projections are too conservative, you may be able to support that.

Razo wrote "I can appreciate baseline study is a little more complex. I think the topic of my post 706 is not affected by this."

No, as KR pointed out, baselining is actually a pretty simple idea. It is a shame that you appear to be so resistant to the idea that you have misunderstood this and are trying so hard to avoid listening to the explanation of why the good fit is obtained during the baseline period (and why it has nothing to do with the models themselves). You will learn very little this way, as most people don't have the patience to put up with that sort of behaviour. However, ClimateExplorer allows you to experiment with the baseline period to see what difference it makes to the ensemble. Here is a CMIP3 SRESA1B ensemble with a baseline period of 1900-1930:

Here is one with a baseline period of 1930-1960:

1960-1990:

1990-2020:

I'm sure you get the picture. Now the IPCC generally use a baseline period ending close to the present day, one of the problems with that is that it reduces the variance of the ensemble runs during the last 15 years, which makes the models appear less able to explain the hiatus than they actually are.
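Why the spread narrows inside the baseline window can be reproduced with a purely synthetic ensemble. In this Python sketch each member is a random walk (a hypothetical stand-in for unforced variability, with no forcing and no physics), and the ensemble is re-baselined to different 30-year windows in turn.

```python
import random
import statistics


def make_ensemble(n_members, n_years, step_sd=0.1, seed=0):
    """Hypothetical ensemble: each member is a random walk standing in for unforced variability."""
    rng = random.Random(seed)
    ensemble = []
    for _ in range(n_members):
        level, series = 0.0, []
        for _ in range(n_years):
            level += rng.gauss(0.0, step_sd)
            series.append(level)
        ensemble.append(series)
    return ensemble


def rebaseline(series, start, end):
    """Subtract the member's own mean over indices start..end-1."""
    offset = statistics.fmean(series[start:end])
    return [v - offset for v in series]


def spread_by_year(ensemble):
    """Population standard deviation across members, year by year."""
    return [statistics.pstdev(year_values) for year_values in zip(*ensemble)]


ensemble = make_ensemble(n_members=50, n_years=150, seed=1)
for start in (0, 60, 120):
    spread = spread_by_year([rebaseline(s, start, start + 30) for s in ensemble])
    inside = statistics.fmean(spread[start:start + 30])
    print(f"baseline years {start}-{start + 29}: mean spread inside {inside:.2f}, "
          f"at first year {spread[0]:.2f}, at last year {spread[-1]:.2f}")
```

Whichever window is chosen, the members are made to agree on average inside it, so the spread is smallest there and grows away from it. Nothing about the members changed; only the reference point did.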

Now as to why the observations are currently in the tails of the distribution of model runs, well it could be that the models run too warm on average, or it could be that the models underestimate the variability due to unforced climate change, or a bit of both. We don't know at the current time, but there is a fair amount of work going on to find out (although you will only find skeptics willing to talk about the "too warm" explanation). The climate modellers I have discussed this with seem to think it is "a bit of both". Does it mean the models are not useful or skillful? No.

Razo also wrote "I wanted to point out to Dikran Marsupial, that the point of my post 706 was that the model that includes man made forcings only seems to be reducing the large error of the natural forcing only model in the 1850s when they are combined."

Well, perhaps you should have just asked the question directly. I suspect the reasons for this are twofold. Firstly, it is to a large extent the result of baselining (the baseline period for these models is 1880 to 1920): if you made the "error" of the "natural only" models smaller in the 1850s, that would make the difference in the baseline period bigger than currently shown, and hence this is prevented by the baselining procedure. The same baselining causes the "anthropogenic model" to have large "errors" from the 1930s to the 1960s. The primary cause is baselining. Now if you have a better model that includes both natural and anthropogenic forcings, you get a model that doesn't have these gross errors anywhere, because the warming over the last century and a half has had both natural and anthropogenic components. So this is no surprise.

Now it is a shame that you didn't stop to find out what baselining is and why it is used when you first saw it on Tamino's blog, rather than carry on trying to criticise the models with incorrect arguments. Please take some time to do some learning, don't assume your background means you don't have to start at the beginning (as I had to), and dial the tone back a bit.

@scaddenp, in fact the Navier-Stokes equations are absolutely non-predictable. This is what the whole deal with Lorenz is all about. In fact, we can't even integrate a simple 3-variable differential equation with any accuracy for anything but a short amount of time. Reference: http://www.worldscientific.com/doi/abs/10.1142/S0218202598000597

Now, if we assume that climate scientists are unbiased (I've been in the business; this would be a somewhat ridiculous assumption), the models would provide our BEST GUESS. But they are of absolutely NO predictive value, as anyone who has integrated PDEs where the results matter (i.e. engineering) knows.

Oh, and this inability to integrate the model forward with accuracy doesn't even touch on the fact that the model itself is an extreme approximation of the true physics. Climate models are jam-packed with ad hoc parameterizations of physical processes. Now the argument (assuming the model was perfect) is that averages are computable even if the exact state of the climate in the future is not. It's a decent argument, and in general this is an arguable stance. However, there is absolutely no mathematical proof that the average temperature as a quantity of interest is predictable via the equations of the climate system. And there likely never will be. But, again, all of this is not a criticism of climate modelling. They do the best they can. The future is uncertain nonetheless.