How reliable are climate models?

What the science says...


Models successfully reproduce temperatures since 1900 globally, by land, in the air and the ocean.


Climate Myth...

Models are unreliable

"[Models] are full of fudge factors that are fitted to the existing climate, so the models more or less agree with the observed data. But there is no reason to believe that the same fudge factors would give the right behaviour in a world with different chemistry, for example in a world with increased CO2 in the atmosphere." (Freeman Dyson)

At a glance

So, what are computer models? Computer modelling is the simulation and study of complex physical systems using mathematics and computer science. Models can be used to explore the effects of changes to any or all of the system components. Such techniques have a wide range of applications. For example, engineering makes a lot of use of computer models, from aircraft design to dam construction and everything in between. Many aspects of our modern lives depend, one way or another, on computer modelling. If you don't trust computer models but like flying, you might want to think about that.

Computer models can be as simple or as complicated as required. It depends on what part of a system you're looking at and its complexity. A simple model might consist of a few equations on a spreadsheet. Complex models, on the other hand, can run to millions of lines of code. Designing them involves intensive collaboration between multiple specialist scientists, mathematicians and top-end coders working as a team.

Modelling of the planet's climate system dates back to the late 1960s. Climate modelling involves incorporating all the equations that describe the interactions between all the components of our climate system. Climate modelling is especially maths-heavy, requiring phenomenal computer power to run vast numbers of equations at the same time.

Climate models are designed to estimate trends rather than events. For example, a fairly simple climate model can readily tell you it will be colder in winter. However, it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Weather forecast-models rarely extend to even a fortnight ahead. Big difference. Climate trends deal with things such as temperature or sea-level changes, over multiple decades. Trends are important because they eliminate or 'smooth out' single events that may be extreme but uncommon. In other words, trends tell you which way the system's heading.

All climate models must be tested to find out if they work before they are deployed. That can be done by using the past. We know what happened back then, either because we made observations or because evidence is preserved in the geological record. If a model can correctly simulate trends from a starting point somewhere in the past through to the present day, it has passed that test. We can therefore expect it to simulate what might happen in the future. And that's exactly what has happened. From early on, climate models predicted future global warming. Multiple lines of hard physical evidence now confirm the prediction was correct.

Finally, all models, weather or climate, have uncertainties associated with them. This doesn't mean scientists don't know anything - far from it. If you work in science, uncertainty is an everyday word and is to be expected. Sources of uncertainty can be identified, isolated and worked upon. As a consequence, a model's performance improves. In this way, science is a self-correcting process over time. This is quite different from climate science denial, whose practitioners speak confidently and with certainty about something they do not work on day in and day out. They don't need to fully understand the topic, since spreading confusion and doubt is their task.

Climate models are not perfect. Nothing is. But they are phenomenally useful.


Further details

Climate models are mathematical representations of the interactions between the atmosphere, oceans, land surface, ice – and the sun. This is clearly a very complex task, so models are built to estimate trends rather than events. For example, a climate model can tell you it will be cold in winter, but it can’t tell you what the temperature will be on a specific day – that’s weather forecasting. Climate trends are weather, averaged out over time - usually 30 years. Trends are important because they eliminate - or "smooth out" - single events that may be extreme, but quite rare.
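The "smoothing out" that a 30-year average performs can be illustrated with a minimal Python sketch. The temperature series here is entirely hypothetical (a steady trend plus random year-to-year noise standing in for weather); it is not output from any climate model, and the function names are illustrative only.

```python
import random

def running_mean(values, window=30):
    """Average each consecutive `window`-length slice of `values`."""
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]

def max_jump(series):
    """Largest change between one value and the next."""
    return max(abs(b - a) for a, b in zip(series, series[1:]))

# Hypothetical annual temperatures: an assumed 0.02 C/yr trend plus
# random "weather" noise. These numbers are made up for illustration.
rng = random.Random(0)
years = list(range(1900, 2021))
annual = [0.02 * (y - 1900) + rng.gauss(0.0, 0.3) for y in years]

# The 30-year running mean keeps the trend and suppresses single-year extremes.
smoothed = running_mean(annual, window=30)

print("largest year-to-year jump, raw:     ", round(max_jump(annual), 3))
print("largest year-to-year jump, smoothed:", round(max_jump(smoothed), 3))
```

In this toy series the individual years jump around a great deal, while the 30-year averages change only gradually and still rise over the period, which is the sense in which a trend tells you which way the system is heading.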

Climate models have to be tested to find out if they work. We can’t wait for 30 years to see if a model is any good or not; models are tested against the past, against what we know happened. If a model can correctly predict trends from a starting point somewhere in the past, we could expect it to predict with reasonable certainty what might happen in the future.

So all models are first tested in a process called hindcasting. The models used to predict future global warming can accurately map past climate changes. If they get the past right, there is no reason to think their predictions would be wrong. Testing models against the existing instrumental record suggested CO2 must cause global warming, because the models could not simulate what had already happened unless the extra CO2 was added to the model. All other known forcings are adequate in explaining temperature variations prior to the rise in temperature over the last thirty years, while none of them is capable of explaining the rise in the past thirty years. CO2 does explain that rise, and explains it completely without any need for additional, as yet unknown forcings.

Where models have been running for sufficient time, they have also been shown to make accurate predictions. For example, the eruption of Mt. Pinatubo allowed modellers to test the accuracy of models by feeding in the data about the eruption. The models successfully predicted the climatic response after the eruption. Models also correctly predicted other effects subsequently confirmed by observation, including greater warming in the Arctic and over land, greater warming at night, and stratospheric cooling.

The climate models, far from being melodramatic, may be conservative in the predictions they produce. Sea level rise is a good example (fig. 1).

Fig. 1: Observed sea level rise since 1970 from tide gauge data (red) and satellite measurements (blue) compared to model projections for 1990-2010 from the IPCC Third Assessment Report (grey band). (Source: The Copenhagen Diagnosis, 2009)

Here, the models have understated the problem. In reality, observed sea level is tracking at the upper range of the model projections. There are other examples of models being too conservative, rather than alarmist as some portray them. All models have limits - uncertainties - for they are modelling complex systems. However, all models improve over time, and with increasing sources of real-world information such as satellites, the output of climate models can be constantly refined to increase their power and usefulness.

Climate models have already predicted many of the phenomena for which we now have empirical evidence. A 2019 study led by Zeke Hausfather (Hausfather et al. 2019) evaluated 17 global surface temperature projections from climate models in studies published between 1970 and 2007. The authors found "14 out of the 17 model projections indistinguishable from what actually occurred."

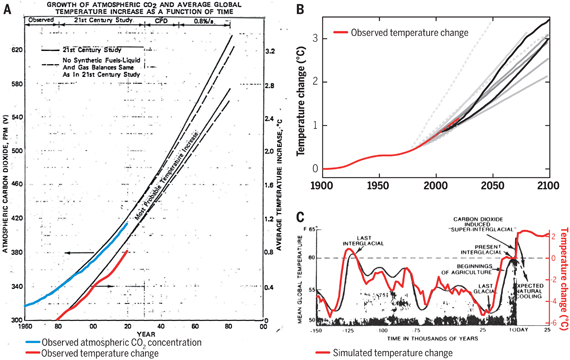

Talking of empirical evidence, you may be surprised to know that huge fossil fuels corporation Exxon's own scientists knew all about climate change, all along. A recent study of their own modelling (Supran et al. 2023 - open access) found it to be just as skillful as that developed within academia (fig. 2). We had a blog-post about this important study around the time of its publication. However, the way the corporate world's PR machine subsequently handled this information left a great deal to be desired, to put it mildly. The paper's damning final paragraph is worthy of part-quotation:

"Here, it has enabled us to conclude with precision that, decades ago, ExxonMobil understood as much about climate change as did academic and government scientists. Our analysis shows that, in private and academic circles since the late 1970s and early 1980s, ExxonMobil scientists:

(i) accurately projected and skillfully modelled global warming due to fossil fuel burning;

(ii) correctly dismissed the possibility of a coming ice age;

(iii) accurately predicted when human-caused global warming would first be detected;

(iv) reasonably estimated how much CO2 would lead to dangerous warming.

Yet, whereas academic and government scientists worked to communicate what they knew to the public, ExxonMobil worked to deny it."

Fig. 2: Historically observed temperature change (red) and atmospheric carbon dioxide concentration (blue) over time, compared against global warming projections reported by ExxonMobil scientists. (A) “Proprietary” 1982 Exxon-modeled projections. (B) Summary of projections in seven internal company memos and five peer-reviewed publications between 1977 and 2003 (gray lines). (C) A 1977 internally reported graph of the global warming “effect of CO2 on an interglacial scale.” (A) and (B) display averaged historical temperature observations, whereas the historical temperature record in (C) is a smoothed Earth system model simulation of the last 150,000 years. From Supran et al. 2023.

Updated 30th May 2024 to include Supran et al extract.

Various global temperature projections by mainstream climate scientists and models, and by climate contrarians, compared to observations by NASA GISS. Created by Dana Nuccitelli.

Last updated on 30 May 2024 by John Mason.

Arguments


"The future is uncertain nonetheless."

A statement of the exceedingly obvious. However you have not written anything that would support the contention that the future is any more uncertain than the model projections state, or that the models are not useful or basically correct.

"If we had observations of the future, we obviously would trust them more than models, but unfortunately …… observations of the future are not available at this time. (Knutson & Tuleya – 2005)."

nickels - If you feel that the climate averages cannot be predicted due to Lorenzian chaos, I suggest you discuss this on the appropriate thread. Short answer: chaotic details (weather) cannot be predicted far ahead at all, because nonlinear chaos amplifies slightly varying and uncertain starting conditions. But the averages are boundary problems, not initial value problems; they are strongly constrained by energy balances and far more amenable to projection.
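The distinction between unpredictable details and predictable averages can be illustrated with a toy chaotic system. The sketch below uses the logistic map, which is only a stand-in chosen for brevity and has nothing to do with how a GCM works; the parameter `r=3.9`, the starting values and the run length are all assumptions made for the illustration.

```python
def logistic_trajectory(x0, r=3.9, steps=100_000):
    """Iterate the logistic map x -> r * x * (1 - x), a simple chaotic system."""
    xs = [x0]
    for _ in range(steps - 1):
        xs.append(r * xs[-1] * (1.0 - xs[-1]))
    return xs

# Two runs whose starting conditions differ by one part in ten billion.
a = logistic_trajectory(0.2)
b = logistic_trajectory(0.2 + 1e-10)

# The step-by-step details ("weather") end up completely different...
max_gap = max(abs(x - y) for x, y in zip(a, b))

# ...while the long-run averages ("climate") stay close to each other.
mean_a = sum(a) / len(a)
mean_b = sum(b) / len(b)

print("largest difference between the two runs:", round(max_gap, 3))
print("long-run means:", round(mean_a, 4), round(mean_b, 4))
```

The individual values of the two runs diverge after a few dozen steps, yet their long-run statistics remain nearly identical, because those are set by the structure of the system rather than by the exact starting point.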

Steve Easterbrook has an excellent side-by-side video comparison showing global satellite imagery versus the global atmospheric component of CESM over the course of a year. Try identifying which is which, and if there are significant differences between them, without looking at the captions! Details (weather) are different, but as this model demonstrates the patterns of observations are reproduced extremely well - and that based upon large-scale integration of Navier-Stokes equations. The GCMs perform just as well regarding regional temperatures over the last century:

[Source]

Note the average temperature (your issue) reconstructions, over a 100+ year period, and how observations fall almost entirely within the model ranges.

Q.E.D., GCMs present usefully accurate representations of the climate, including regional patterns - as generated by the boundary constraints of climate energies.

---

Perhaps SkS could republish Easterbrook's post? It's an excellent visual demonstration that hand-waving claims about chaos and model inaccuracy are nonsense.

So with regards to baselines.

I can understand the need to do it to compare models, less so with perturbations of the same model. But I'm surprised that people use it when comparing models with the actual data. It's one thing to calculate and present an ensemble mean; it's quite another to offset model runs or different models to match the mean of the baseline. This becomes an arbitrary adjustment, not based on any physics. Could you explain this to me please?

Also, Dikran you say

"Now the IPCC generally use a baseline period ending close to the present day, one of the problems with that is that it reduces the variance of the ensemble runs during the last 15 years, which makes the models appear less able to explain the hiatus than they actually are."

You seem to be saying a higher variance is better. Having the hiatus within the 95% confidence interval is a good thing, but a narrower interval is better if you want to more accurately predict a number, or justify a trend.

Another thing to add, as I understand if the projected values are less than 3 times the variance, one says there is no result. If it is over 3 times one says there is a trend, and not until the ratio is 10, does one quote a value. Looking at the variability caused by changing the baseline, as well as the height of the red zones in post 723, the variance appears to be about 2.0C, the range of values is about 6.0 (from 1900 to 2100). Can one use these same rules here?

Razo wrote "But I'm surprised that people use it when comparing models with the actual data."

Models are able to predict the response to changes in forcings more accurately than they are able to estimate the absolute temperature of the Earth, hence baselining is essential in model-observation comparisons. There is also the point that the observations are not necessarily observations of exactly the same thing projected by the models (e.g. limitations in coverage etc.) and baselining helps to compensate for that to an extent.

"its quite another to offset model runs or different models to match the mean of the baseline."

This is not what is done; a constant is subtracted from each model run and set of observations independently such that it has a zero offset during the baseline period. This is a perfectly reasonable thing to do in research on climate change as it is the anomalies from a baseline in which we are primarily interested.
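A minimal Python sketch of that subtraction, assuming two hypothetical model runs that share the same trend but differ in absolute temperature. The `baseline` helper, the 1961-1990 reference period and all the numbers are illustrative assumptions, not taken from any actual model archive.

```python
def baseline(series, years, start, end):
    """Subtract the series' own mean over [start, end] from every value."""
    reference = [v for y, v in zip(years, series) if start <= y <= end]
    offset = sum(reference) / len(reference)
    return [v - offset for v in series]

years = list(range(1950, 2021))

# Two hypothetical runs: same response to forcing (0.015 C/yr),
# different absolute temperature (14.0 C versus 13.2 C in 1950).
run_a = [14.0 + 0.015 * (y - 1950) for y in years]
run_b = [13.2 + 0.015 * (y - 1950) for y in years]

# Each series is baselined independently, against its own mean.
anom_a = baseline(run_a, years, 1961, 1990)
anom_b = baseline(run_b, years, 1961, 1990)

# The absolute offset disappears; the anomalies are directly comparable.
largest_difference = max(abs(a - b) for a, b in zip(anom_a, anom_b))
print("largest difference between the anomaly series:", largest_difference)
```

Note that nothing in the model is changed: the operation is applied to the output only, which is what distinguishes it from tuning.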

"You seem to be saying a higher variance is better. "

No, I am saying that an accurate estimate of the variance (which is essentially an estimate of the variability due to unforced climate change) is better, and that the baselining process has an unfortunate side effect in artificially reducing the variance, which we ought to be aware of in making model-observation comparisons.
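The side effect can be seen in a toy ensemble. The sketch below assumes unforced variability behaves like simple red noise (an AR(1) process with coefficient 0.9), which is a simplification chosen for illustration rather than a claim about any real model; the ensemble size, run length and baseline window are likewise arbitrary.

```python
import random
from statistics import mean, pvariance

def ar1_run(rng, n_years, phi=0.9):
    """One realisation of red noise standing in for unforced variability."""
    x, out = 0.0, []
    for _ in range(n_years):
        x = phi * x + rng.gauss(0.0, 1.0)
        out.append(x)
    return out

def baselined(run, start, end):
    """Subtract the run's own mean over the index range [start, end)."""
    offset = mean(run[start:end])
    return [v - offset for v in run]

def ensemble_spread(runs, start, end):
    """Across-run variance, averaged over the index range [start, end)."""
    return mean(pvariance([r[t] for r in runs]) for t in range(start, end))

rng = random.Random(42)
# 200 hypothetical runs of 150 years, each baselined on its final 20 years.
runs = [baselined(ar1_run(rng, 150), 130, 150) for _ in range(200)]

inside = ensemble_spread(runs, 130, 150)   # within the baseline period
outside = ensemble_spread(runs, 50, 70)    # well away from it

print("ensemble variance inside baseline period: ", round(inside, 2))
print("ensemble variance outside baseline period:", round(outside, 2))
```

Forcing every run to average zero over the baseline window pinches the ensemble together there, so the spread inside the window understates the variability seen elsewhere in the same runs.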

"Having the hiatus within the 95% confidence interval is a good thing, but a narrower interval is better if you want to more accurately predict a number, or justify a trend."

No, you are fundamentally missing the point of the credible interval, which is to give an indication of the true uncertainty in the projection. It is what it is, neither broader nor narrower is "better", what you want is for it to be accurate. Artificially making them narrower as a result of baselining does not make the projection more accurate, it just makes the interval a less accurate representation of the uncertainty.

"Another thing to add, as I understand if the projected values are less than 3 times the variance, one says there is no result."

No, one might say that the observations are consistent with the model (at some level of significance), however this is not a strong comment on the skill of the model.

"If it is over 3 times one says there is a trend, and not until the ratio is 10, does one quote a value."

No, this would not indicate a "trend" simply because an impulse (e.g. the 1998 El-Nino event) could cause such a result. One would instead say that the observations were inconsistent with the models (at some level of significance). Practice varies about the way in which significance levels are quoted and I am fairly confident that most of them would have attracted the ire of Ronald Aylmer Fisher.

"Can one use thse same rules here?"

In statistics, it is a good idea to clearly state the hypothesis you want to test before conducting the test as the details of the test depend on the nature of the hypothesis. Explain what it is that you want to determine and we can discuss the nature of the statistical test.

Dikran Marsupial wrote "Models are able to predict the response to changes in forcings more accurately than they are able to estimate the absolute temperature of the Earth, hence baselining is essential in model-observation comparisons."

That's a kind of calibration. I understand the need. People here were trying to tell me that no such thing was happening, and it is just pure physics. I didn't know exactly how it was calculated, but I expected it. I know very well that "Models are able to predict the response to changes in forcings more accurately than they are able to estimate the absolute...".

"a constant is subtracted from each model run."

That's offsetting. Please don't disagree. It's practically the OED definition. Even the c in y=mx+c is sometimes called an offset.

"neither broader nor narrower is "better", what you want is for it to be accurate"

I said, narrower is better if you want to predict a number, or justify a trend. I mean this regardless of the issue of the variance in the baseline region.

Razo wrote "That's a kind of calibration."

Sorry, I have better things to do with my time than to respond to tedious pedantry used to evade discussion of the substantive points. You are just trolling now.

Just to be clear, calibration or tuning refers to changes made to the model in order for it to improve its behaviour. Baselining is a method used in analysis of the output of the model (but which does not change the model itself in any way).

"I said, narrower is better if you want to predict a number, or justify a trend. "

No, re-read what I wrote. What you are suggesting is lampooned in the famous quote "he uses statistics in the same way a drunk uses a lamp post - more for support than illumination". The variability is what it is, and a good scientist/statistician wants to have as accurate an estimate as possible and then see what conclusions can be drawn from the results.

Have the points in this video ever been addressed here?

Climate Change in 12 Minutes - The Skeptics Case

https://www.youtube.com/watch?v=vcQTyje_mpU

From my readings thus far, I agree with this evaluation of the accuracy and utility of current climate models:

Part of a speech delivered by David Victor of the University of California, San Diego, at the Scripps Institution of Oceanography as part of a seminar series titled “Global Warming Denialism: What science has to say” (Special Seminar Series, Winter Quarter, 2014):

"First, we in the scientific community need to acknowledge that the science is softer than we like to portray. The science is not “in” on climate change because we are dealing with a complex system whose full properties are, with current methods, unknowable. The science is “in” on the first steps in the analysis—historical emissions, concentrations, and brute force radiative balance—but not for the steps that actually matter for policy. Those include impacts, ease of adaptation, mitigation of emissions and such—are surrounded by error and uncertainty. I can understand why a politician says the science is settled—as Barack Obama did…in the State of the Union Address, where he said the “debate is over”—because if your mission is to create a political momentum then it helps to brand the other side as a “Flat Earth Society” (as he did last June). But in the scientific community we can’t pretend that things are more certain than they are."

Also, any comments on this paper:

Verification, Validation, and Confirmation of Numerical Models in the Earth Sciences

Naomi Oreskes,* Kristin Shrader-Frechette, Kenneth Belitz

SCIENCE * VOL. 263 * 4 FEBRUARY 1994

Abstract: Verification and validation of numerical models of natural systems is impossible. This is because natural systems are never closed and because model results are always non-unique. Models can be confirmed by the demonstration of agreement between observation and prediction, but confirmation is inherently partial. Complete confirmation is logically precluded by the fallacy of affirming the consequent and by incomplete access to natural phenomena. Models can only be evaluated in relative terms, and their predictive value is always open to question. The primary value of models is heuristic.

http://courses.washington.edu/ess408/OreskesetalModels.pdf

Winston2014, two things:

1. What does Victor's point allow you to claim? By the way, Victor doesn't address utility in the quote.

2. Oreskes' point is a no-brainer, yes? No one in the scientific community disagrees, or if they do, they do it highly selectively (hypocritically). Models fail. Are they still useful? Absolutely: you couldn't drive a car without using an intuitive model, and such models fail regularly. The relationship between climate models and policy is complex. Are models so inaccurate they're not useful? Can we wait till we get a degree of usefulness that's satisfactory to even the most "skeptical"? Suppose, for example, that global mean surface temperature rises at 0.28C per decade for the next decade. This would push the bounds of the AR4/5 CMIP3/5 model run ranges. What should policy response be ("oh crap!")? What if that was followed by a decade of 0.13C per decade warming? What should policy response be then ("it's a hoax")?

Models will drive policy; nature will drive belief.

DSL,

"Models fail. Are they still useful?"

Not for costly policies until the accuracy of their projections is confirmed. From the 12 minute skeptic video, it doesn't appear that they have been confirmed to be accurate where it counts, quite the opposite. To quote David Victor again, "The science is “in” on the first steps in the analysis—historical emissions, concentrations, and brute force radiative balance—but not for the steps that actually matter for policy."

"Models will drive policy"

Until they are proven more accurate than I have seen in my investigations thus far, I don't believe they should.

The following video leads me to believe that even if model projections are correct, it would actually be far cheaper to adapt (according to official figures) to climate change than it would be to attempt to prevent it based upon the "success" thus far of the Australian carbon tax:

The 50 to 1 Project

https://www.youtube.com/watch?v=Zw5Lda06iK0

Winston @734, the claim that the policies will be costly is itself based on models, specifically economic models. Economic models perform far worse than do climate models, so if models are not useful "... for costly policies until the accuracy of their projections is confirmed", the model based claim that the policies are costly must be rejected.

Victor, when you say it's cheaper to adapt, you're falling into an either-or fallacy. Mitigation and adaptation are the extreme ends of a range of action. Any act you engage in to reduce your carbon footprint is mitigation. Adaptation can mean anything from doing nothing and letting the market work things out to engaging government-organized and subsidized re-organization of human life to create the most efficient adaptive situation. If you act only in your immediate individual self-interest, with no concern for how your long-term individual economic and political freedom are constructed socially in complex and unpredictable ways, then your understanding of adaptation is probably the first of my definitions. If you do understand your long-term freedoms as being socially constructed, you might go for some form of the second, but if you do, you will--as Tom points out--be relying on some sort of model, intuitive or formal.

Do you think work on improving modeling should continue? Or should modeling efforts be scrapped?

Victor -> Winston — where "Victor" came from, I have no idea.

Winston2014,

Your 12min video talks about models' and climate sensitivity uncertainty. However, it cherry picks the lower "skeptic" half of ECS uncertainty only. It is silent about the upper long tail of ECS uncertainty, which goes well beyond 4.5 degrees - up to 8 degrees - although with low probability.

The cost of global warming is highly non-linear - very costly at the high end of the tail - essentially a catastrophe above 4 degC. Therefore, in order to formulate the policy response you need to convolve the probability function with the potential cost function and integrate it and compare with the cost of mitigation.

Because we can easily adapt to changes up to say 1 degC, the cost of low sensitivity is almost zero - it does not matter. What really matters is the long tail of the potential warming distribution, because its high cost - even at low probability - results in high risk, demanding a serious preventative response.
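The probability-weighted cost calculation described above can be sketched in a few lines of Python. Every number here is hypothetical: the discrete sensitivity distribution and the cubic damage function are made up purely to show the mechanics, and sensitivity is treated as the eventual warming for simplicity.

```python
# Hypothetical, illustrative distribution of warming outcomes (deg C)
# with a long upper tail. Not taken from any assessment.
warming_values = [1.5, 2.5, 3.5, 4.5, 6.0, 8.0]
probabilities = [0.10, 0.30, 0.30, 0.20, 0.08, 0.02]

def damage_cost(warming_c):
    """Toy non-linear cost: zero up to 1 C, rising steeply above it."""
    return max(0.0, warming_c - 1.0) ** 3

# Weight the cost of each outcome by its probability and sum
# (the discrete version of integrating probability times cost).
weighted = [p * damage_cost(w) for w, p in zip(warming_values, probabilities)]
expected_cost = sum(weighted)

# For comparison: the cost of the single "average" outcome.
mean_warming = sum(p * w for w, p in zip(warming_values, probabilities))
cost_at_mean = damage_cost(mean_warming)

# Share of the expected cost that comes from outcomes of 4.5 C or more.
tail_share = sum(c for w, c in zip(warming_values, weighted) if w >= 4.5) / expected_cost

print("expected cost:", round(expected_cost, 2))
print("cost at mean warming:", round(cost_at_mean, 2))
print("share of expected cost from the >= 4.5 C tail:", round(tail_share, 2))
```

With a convex cost function the expected cost exceeds the cost of the average outcome, and in this toy setup most of it comes from the low-probability upper tail. It is that expected cost which would then be compared with the cost of mitigation.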

BTW, the above risk-reward analysis is the driver of policy response. Climate models have nothing to do with it. Your statement repeated after that 12min video that "Models will drive policy" is just nonsense. Policy should be driven by our best understanding of the ECS. ECS is derived from multiple lines of evidence, e.g. paleo being one of them. The problem has nothing to do with your pathetic "Models fail. Are they still useful?" question. The answer to that question is: models' output, even if they fail, is irrelevant here.

Incidentally, concentrating on models' possible failure due to warming overestimation (as in your 12min video) while ignoring that models may also fail (more spectacularly) by underestimating other aspects of global warming (e.g. arctic ice melt), indicates cherry picking on a single aspect only that suits your agenda. If you were not biased in your objections, you would have noticed that the models' departure from observations is much higher in the case of sea ice melt than in the case of surface temps, and you would concentrate your critique on that aspect.

On the costs of mitigation, an IEA Special Report "World Energy Investment" is just out that puts the mitigation costs in the context of the $48 trillion investments required to keep the lights on under a BAUesque scenario. They suggest the additional investment required to allow a +2ºC future rather than a +4ºC BAUesque future is an extra $5 trillion on top.

"The answer to that question is: models output, even if they fail, is irrelevant here."

Not in politics and public opinion, which in the real world is what drives policy when politicians respond to the dual forces of lobbyists and the desire to project to the voting public that they're "doing something to protect us." Model projections of doom drive the public perception side. The claim that policy is primarily driven by science is, I think, terribly naive. If that were the case, the world would be a wonderfully different place.

"Your 12min video talks about models' and climate sensitivity uncertainty. However, it cherry picks the lower "skeptic" half of ECS uncertainty only. It is silent about the upper long tail of ECS uncertainty, which goes well beyond 4.5degrees - up to 8degrees - although with low probability."

But isn't climate sensitivity uncertainty what it's all about?

"Incidentally, concentrating on models' possible failure due to warming overestmation (as in your 12min video) while ignoring that models may also fail (more spectacularly) by underestimating over aspects of global warming (e.g. arctic ice melt), indicates cherry picking on a single aspect only that suits your agenda."

Exactly, and skeptics can then use that to point out that the projections themselves are likely garbage. No one has yet commented on the rather damning paper in that respect I posted a link to:

Verification, Validation, and Confirmation of Numerical Models in the Earth Sciences

Naomi Oreskes,* Kristin Shrader-Frechette, Kenneth Belitz

SCIENCE * VOL. 263 * 4 FEBRUARY 1994

Abstract: Verification and validation of numerical models of natural systems is impossible. This is because natural systems are never closed and because model results are always non-unique. Models can be confirmed by the demonstration of agreement between observation and prediction, but confirmation is inherently partial. Complete confirmation is logically precluded by the fallacy of affirming the consequent and by incomplete access to natural phenomena. Models can only be evaluated in relative terms, and their predictive value is always open to question. The primary value of models is heuristic.

http://courses.washington.edu/ess408/OreskesetalModels.pdf

Also:

Twenty-three climate models can't all be wrong...or can they?

http://link.springer.com/article/10.1007/s00382-013-1761-5

Climate Dynamics

March 2014, Volume 42, Issue 5-6, pp 1665-1670

A climate model intercomparison at the dynamics level

Karsten Steinhaeuser, Anastasios A. Tsonis

According to Steinhaeuser and Tsonis, today "there are more than two dozen different climate models which are used to make climate simulations and future climate projections." But although it has been said that "there is strength in numbers," most rational people would still like to know how well this specific set of models does at simulating what has already occurred in the way of historical climate change, before they would be ready to accept what the models predict about Earth's future climate. The two researchers thus proceed to do just that. Specifically, they examined 28 pre-industrial control runs, as well as 70 20th-century forced runs, derived from 23 different climate models, by analyzing how well the models did in hind-casting "networks for the 500 hPa, surface air temperature (SAT), sea level pressure (SLP), and precipitation for each run."

In the words of Steinhaeuser and Tsonis, the results indicate (1) "the models are in significant disagreement when it comes to their SLP, SAT, and precipitation community structure," (2) "none of the models comes close to the community structure of the actual observations," (3) "not only do the models not agree well with each other, they do not agree with reality," (4) "the models are not capable to simulate the spatial structure of the temperature, sea level pressure, and precipitation field in a reliable and consistent way," and (5) "no model or models emerge as superior."

In light of their several sad findings, the team of two suggests "maybe the time has come to correct this modeling Babel and to seek a consensus climate model by developing methods which will combine ingredients from several models or a supermodel made up of a network of different models." But with all of the models they tested proving to be incapable of replicating any of the tested aspects of past reality, even this approach would not appear to have any promise of success.

Have these important discoveries been included in models? Considering that it is believed that bacteria generated our initial oxygen atmosphere, one that metabolizes methane should be rather important when considering greenhouse gases. As climate changes, how many more stagnant, low oxygen water habitats for them will emerge?

Bacteria Show New Route to Making Oxygen

http://www.usnews.com/science/articles/2010/03/25/bacteria-show-new-route-to-making-oxygen

Excerpt:

Microbiologists have discovered bacteria that can produce oxygen by breaking down nitrite compounds, a novel metabolic trick that allows the bacteria to consume methane found in oxygen-poor sediments.

Previously, researchers knew of three other biological pathways that could produce oxygen. The newly discovered pathway opens up new possibilities for understanding how and where oxygen can be created, Ettwig and her colleagues report in the March 25 (2010) Nature.

“This is a seminal discovery,” says Ronald Oremland, a geomicrobiologist with the U.S. Geological Survey in Menlo Park, Calif., who was not involved with the work. The findings, he says, could even have implications for oxygen creation elsewhere in the solar system.

Ettwig’s team studied bacteria cultured from oxygen-poor sediment taken from canals and drainage ditches near agricultural areas in the Netherlands. The scientists found that in some cases the lab-grown organisms could consume methane — a process that requires oxygen or some other substance that can chemically accept electrons — despite the dearth of free oxygen in their environment. The team has dubbed the bacteria species Methylomirabilis oxyfera, which translates as “strange oxygen producing methane consumer.”

--------

Considering that many plants probably evolved at much higher CO2 levels than found at present, the result of this study isn't particularly surprising, but has it been included in climate models? Have the unique respiration changes with CO2 concentration been determined for every type of plant on Earth, and can the percentage of ground cover of each type be projected as climate changes?

High CO2 boosts plant respiration, potentially affecting climate and crops

http://www.eurekalert.org/pub_releases/2009-02/uoia-hcb020609.php

Excerpt:

"There's been a great deal of controversy about how plant respiration responds to elevated CO2," said U. of I. plant biology professor Andrew Leakey, who led the study. "Some summary studies suggest it will go down by 18 percent, some suggest it won't change, and some suggest it will increase as much as 11 percent."

Understanding how the respiratory pathway responds when plants are grown at elevated CO2 is key to reducing this uncertainty, Leakey said. His team used microarrays, a genomic tool that can detect changes in the activity of thousands of genes at a time, to learn which genes in the high CO2 plants were being switched on at higher or lower levels than those of the soybeans grown at current CO2 levels.

Rather than assessing plants grown in chambers in a greenhouse, as most studies have done, Leakey's team made use of the Soybean Free Air Concentration Enrichment (Soy FACE) facility at Illinois. This open-air research lab can expose a soybean field to a variety of atmospheric CO2 levels – without isolating the plants from other environmental influences, such as rainfall, sunlight and insects.

Some of the plants were exposed to atmospheric CO2 levels of 550 parts per million (ppm), the level predicted for the year 2050 if current trends continue. These were compared to plants grown at ambient CO2 levels (380 ppm).

The results were striking. At least 90 different genes coding the majority of enzymes in the cascade of chemical reactions that govern respiration were switched on (expressed) at higher levels in the soybeans grown at high CO2 levels. This explained how the plants were able to use the increased supply of sugars from stimulated photosynthesis under high CO2 conditions to produce energy, Leakey said. The rate of respiration increased 37 percent at the elevated CO2 levels.

The enhanced respiration is likely to support greater transport of sugars from leaves to other growing parts of the plant, including the seeds, Leakey said.

"The expression of over 600 genes was altered by elevated CO2 in total, which will help us to understand how the response is regulated and also hopefully produce crops that will perform better in the future," he said.

--------

I could probably spend days coming up with examples of greenhouse gas sinks that are most likely not included in current models. Unless you fully understand a process, you cannot accurately “model” it. If you understand, or think you understand, 1,000 factors about the process but there are another 1,000 factors you only partially know about, don't know about, or have incorrectly deemed unimportant in a phenomenally complex process, there is no possibility whatsoever that your projections from the model will be accurate, and the further out you go in your projections, the less accurate they will probably be.

The current climate models certainly do not integrate all the forces that create changes in the climate, and there are who knows how many more factors that have not even been recognized yet. I suspect there are a huge number of them, if my reading about the climate-relevant factors being discovered almost weekly is anything to judge by. Too little knowledge and too few data points, or proxy data points with uncertain accuracy, lead to a "Garbage in - Garbage models - Garbage out" situation.

Can I suggest that we ignore Winston's most recent post? The discovery that bacteria have a novel pathway for generating oxygen should not be incorporated into climate models unless there is a good reason to suppose that the effects of this pathway are of sufficient magnitude to significantly alter the proportions of gases in the atmosphere.

Winston has not provided this, and I suspect he cannot (which in itself would answer the question of whether they were included in the models and why). Winston's post comes across as searching for some reason, any reason, to criticize the models and is already clutching at straws. I suggest DNFTT.

"BTW, the above risk-reward analysis is the driver of policy response. Climate models have nothing to do with it. Your statement repeated after that 12min video that "Models will drive policy" is just nonsense. Policy should be driven by our best understanding of the ECS. ECS is derived from mutiple lines of evidence, e.g. paleo being one of them. The problem has nothing to do with your pathetic "Models fail. Are they still useful?""

Equilibrium Climate Sensitivity

http://clivebest.com/blog/?p=4923

Excerpt from comments:

"The calculation of climate sensitivity assumes only the forcings included in climate models and do not include any significant natural causes of climate change that could affect the warming trends."

If it's all about ECS and ECS is "determined" via the adjustment of models to track past climate data, how are models and their degree of accuracy irrelevant?

"I suggest DNFTT"

I'm not trolling. It's called playing the proper role of a skeptic which is asking honest questions. Emphasis is mine.

Here's my main point in which I am in agreement with David Victor, "The science is “in” on the first steps in the analysis—historical emissions, concentrations, and brute force radiative balance—but not for the steps that actually matter for _policy_. Those include impacts, ease of adaptation, mitigation of emissions and such—are surrounded by error and uncertainty."

"Can I suggest that we ignore Winstons most recent post. The discovery that bacteria have a novel pathway for generating oxygen should not be incorporated into climate models unless there is a good reason to suppose that the effects of this pathway are of sufficient magnitude to significantly alter the proportions of gasses in the atmosphere."

I didn't say they necessarily should be; my intent was to show the likely huge number of factors that aren't modelled that may very well be highly significant, just as were the bacteria that once generated most of the oxygen on this planet.

Winston2014:

You can propose, suppose, or, as you say, "show" as many "factors that aren't modelled that may very well be highly significant" as you like.

Unless and until you have some cites showing what they are and why they should be taken seriously, you're going to face some serious... wait for it... skepticism on this thread.

You can assert you're "playing the proper role of a skeptic" if you like. But as long as you are offering unsupported speculation about "factors" that might be affecting model accuracy, in lieu of (a) verifiable evidence of such factors' existence, (b) verifiable evidence that climatologists and climate modellers haven't already considered them, and (c) verifiable evidence that they are "highly significant", I think you'll find that your protestations of being a skeptic will get short shrift.

Put another way: there are by now 15 pages of comments on this thread alone, stretching back to 2007, of self-styled "skeptics" trying to cast doubt on or otherwise discredit climate modelling. I'm almost certain some of them have also resorted to appeals to "factors that aren't modelled that may very well be highly significant", without doing the work of demonstrating that these appeals have a basis in reality.

What have you said so far that sets you apart?

[JH] Please specify to whom your comment is directed.

What makes you think uncertainty is your friend? Suppose the real sensitivity is 4.5, or 8? You seem to be overemphasising uncertainty, seeking minority opinions to rationalize a "do nothing" predisposition. Try citing peer-reviewed science instead of distortions by deniers.

Conservatively, we know how to live in a world with slow rates of climate change and 300 ppm of CO2. 400 ppm was last seen when we didn't have ice sheets.

I agree science might change, the Flying Spaghetti Monster or the Second Coming might happen instead, but that is not the way to do policy. I doubt you would take that attitude to uncertainty in medical science if it came to treating a personal illness.

[JH] Please specify to whom your comment is directed.

Also, if you are actually a skeptic, how about trying some of that skepticism on the disinformation sites you seem to be reading.

Winston: "I didn't say they necessarily should be, my intent was to show the likely huge number of factors that aren't modelled that may very well be highly significant, just as were the bacteria that once generated most of the oxygen on this planet."

This is why you're not being taken seriously. You're comparing the potential impact of new bacterial growth sites over decades to centuries to the impact of the great oxygenation event, when a brand new type of life was introduced to the globe over a period of millions of years.

You fail to recognize the precise nature of the change taking place. Rising sea level and changing land storage of freshwater will be persistent. This is not a step change where we go from one type of land-water transitional space to another. It is a persistently-changing transitional space. Thus, adaptation in these spaces must be persistent. How many suitable habitats will be destroyed for every one created? It's inevitable that some--many--will be destroyed.

Further, what impact would additional oxygen mean for the radiative forcing equation? Note that human burning of fossil carbon has been taking oxygen out of the atmosphere for the last 150 years.

Your plant argument belongs on another page.

Winston wrote "I didn't say they necessarily should be, my intent was to show the likely huge number of factors that aren't modelled that may very well be highly significant, just as were the bacteria that once generated most of the oxygen on this planet."

That is trolling. Come back when you can think of a factor that is likely to have a non-negligible impact on climate that is not included in the model, and until then stop wasting our time by trying to discuss things that obviously aren't.

"I'm not trolling. It's called playing the proper role of a skeptic which is asking honest questions."

It isn't an honest question as you can't propose a factor that has a non-negligible impact on climate, you just posit that they exist with no support. That is not skepticism.