Are surface temperature records reliable?

What the science says...


The warming trend is the same in rural and urban areas, measured by thermometers and satellites, and by natural thermometers.


Climate Myth...

Temp record is unreliable

"We found [U.S. weather] stations located next to the exhaust fans of air conditioning units, surrounded by asphalt parking lots and roads, on blistering-hot rooftops, and near sidewalks and buildings that absorb and radiate heat. We found 68 stations located at wastewater treatment plants, where the process of waste digestion causes temperatures to be higher than in surrounding areas.

In fact, we found that 89 percent of the stations – nearly 9 of every 10 – fail to meet the National Weather Service’s own siting requirements that stations must be 30 meters (about 100 feet) or more away from an artificial heating or radiating/reflecting heat source." (Watts 2009)

At a glance

It's important to understand one thing above all: the vast majority of climate change denialism does not occur in the world of science, but on the internet. Specifically, it occurs in the blog-world, where anyone can blog or have a social media account and say whatever they want to say. And they do. We all saw plenty of that during the Covid-19 pandemic, which seemed to offer an open invitation to step up and proclaim, "I know better than all those scientists!"

A few years ago in the USA, an online project was launched with its participants taking photos of some American weather stations. The idea behind it was to draw attention to stations thought to be badly sited for the purpose of recording temperature. Their reasoning was that if temperature records from a number of U.S. sites could be discredited, then global warming could be declared a hoax. Never mind that the U.S. is a relatively small portion of the Earth's surface. And what about all the other indicators pointing firmly at warming? Huge reductions in sea ice, poleward migrations of many species, retreating glaciers, rising seas - that sort of thing. None of these things apparently mattered if part of the picture could be shown to be flawed.

But they forgot one thing. Professional climate scientists already knew a great deal about things that can cause outliers in temperature datasets. One example will suffice. When compiling temperature records, NASA's Goddard Institute for Space Studies goes to great pains to remove any possible influence from things like the urban heat island effect. That effect describes the fact that densely built-up parts of cities are likely to be a bit warmer due to all of that human activity.

How they do this is to take the urban temperature trends and compare them to the rural trends of the surrounding countryside. They then adjust the urban trend so it matches the rural trend – thereby removing that urban effect. This is not 'tampering' with data: it's a tried and tested method of removing local outliers from regional trends to get more realistic results.
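A deliberately simplified sketch can illustrate the idea of matching an urban trend to the rural trend. It assumes one urban and one rural annual series, each with a single straight-line trend, and anchors the correction at the final year. The series are synthetic and the function names are hypothetical. GISS's published procedure is more elaborate, so read this only as an illustration of the principle, not as GISS's method.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    num = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    den = sum((x - mean_x) ** 2 for x in xs)
    return num / den


def match_urban_to_rural(years, urban, rural):
    """Remove the excess urban trend so the urban series has the rural trend.

    Assumption for this sketch: the correction is anchored at the final year,
    so the most recent urban value is unchanged and earlier values are shifted.
    """
    excess = ols_slope(years, urban) - ols_slope(years, rural)
    last = years[-1]
    return [u - excess * (y - last) for y, u in zip(years, urban)]


# Synthetic example: the urban station warms faster than its rural neighbours.
years = list(range(1950, 2020))
rural = [0.015 * (y - 1950) for y in years]
urban = [0.3 + 0.025 * (y - 1950) for y in years]

adjusted = match_urban_to_rural(years, urban, rural)
print("urban trend:", round(ols_slope(years, urban), 4),
      "adjusted urban trend:", round(ols_slope(years, adjusted), 4))
```

After the adjustment, the urban series keeps its own year-to-year variations (none in this toy case) but carries the rural long-term trend.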

As this methodology was being developed, some findings were surprising at first glance. Often, excess urban warming was small in amount. Even more surprisingly, a significant number of urban trends were cooler relative to their country surroundings. But that's because weather stations are often sited in relatively cool areas within a city, such as parks.

Finally, there have been independent analyses of global temperature datasets that had very similar results to NASA's. The Berkeley Earth Surface Temperature study (BEST) is a well-known example and was carried out at the University of California, starting in 2010. The physicist who initiated that study was formerly a climate change skeptic. Not so much now!

Please use this form to provide feedback about this new "At a glance" section, which was updated on May 27, 2023 to improve its readability. Read a more technical version below or dig deeper via the tabs above!

Further details

Temperature data are essential for predicting the weather and recording climate trends. So organisations like the U.S. National Weather Service, and indeed every national weather service around the world, require temperatures to be measured as accurately as possible. To understand climate change we also need to be sure we can trust historical measurements.

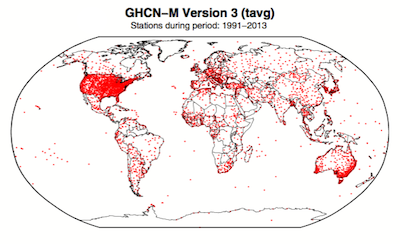

Surface temperature measurements are collected from more than 30,000 stations around the world (Rennie et al. 2014). About 7000 of these have long, consistent monthly records. As technology gets better, stations are updated with newer equipment. When equipment is updated or stations are moved, the new data are compared with the old record to make sure measurements are consistent over time.
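The comparison between old and new equipment can be roughly illustrated as follows. The sketch assumes both instruments were run side by side for a short overlap period. It estimates their mean offset and shifts the older part of the record onto the new instrument's scale. The readings and the function name are hypothetical, and operational homogenization methods are considerably more sophisticated.

```python
def overlap_offset(old_overlap, new_overlap):
    """Mean difference (new - old) over paired readings from the overlap period."""
    if len(old_overlap) != len(new_overlap) or not old_overlap:
        raise ValueError("need equal-length, non-empty paired readings")
    diffs = [n - o for o, n in zip(old_overlap, new_overlap)]
    return sum(diffs) / len(diffs)


# Hypothetical monthly means (C) recorded by both instruments during the overlap.
old_overlap = [14.2, 15.1, 16.8, 18.0, 17.5, 15.9]
new_overlap = [14.0, 14.8, 16.6, 17.7, 17.3, 15.6]

offset = overlap_offset(old_overlap, new_overlap)

# Put the older (pre-overlap) part of the record on the new instrument's scale.
old_record = [13.9, 14.5, 16.2, 17.6]
adjusted_old_record = [t + offset for t in old_record]
print(f"offset = {offset:.2f} C", [round(t, 2) for t in adjusted_old_record])
```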

Figure 1. Station locations with at least 1 month of data in the monthly Global Historical Climatology Network (GHCN-M). This set of 7280 stations is used in the global land surface databank. (Rennie et al. 2014)

In 2009 allegations were made in the blogosphere that weather stations placed in what some thought to be 'poor' locations could make the temperature record unreliable (and therefore, in certain minds, global warming would be shown to be a flawed concept). Scientists at the National Climatic Data Center took those allegations very seriously. They undertook a careful study of the possible problem and published the results in 2010. The paper, "On the reliability of the U.S. surface temperature record" (Menne et al. 2010), had an interesting conclusion. The temperatures from stations that the self-appointed critics claimed were "poorly sited" actually showed slightly cooler maximum daily temperatures compared to the average.

Around the same time, a physicist who was originally hostile to the concept of anthropogenic global warming, Dr. Richard Muller, decided to do his own temperature analysis. This proposal was loudly cheered in certain sections of the blogosphere where it was assumed the work would, wait for it, disprove global warming.

To undertake the work, Muller organized a group called Berkeley Earth to do an independent study (Berkeley Earth Surface Temperature study or BEST) of the temperature record. They specifically wanted to answer the question, “Is the temperature rise on land improperly affected by the four key biases (station quality, homogenization, urban heat island, and station selection)?” The BEST project had the goal of merging all of the world’s temperature data sets into a common data set. It was a huge challenge.

Their eventual conclusions, after much hard analytical toil, were as follows:

1) The accuracy of the land surface temperature record was confirmed;

2) The BEST study used more data than previous studies but came to essentially the same conclusion;

3) The influence of the urban stations on the global record is very small and, if present at all, is biased on the cool side.

Muller commented: “I was not expecting this, but as a scientist, I feel it is my duty to let the evidence change my mind.” On that, certain parts of the blogosphere went into a state of meltdown. The lesson to be learned from such goings on is, “be careful what you wish for”. Presuming that improving temperature records will remove or significantly lower the global warming signal is not the wisest of things to do.

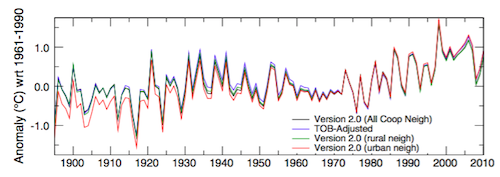

The BEST conclusions about the urban heat effect were nicely explained by our late colleague, Andy Skuce, in a post here at Skeptical Science in 2011. Figure 2 shows BEST plotted against several other major global temperature datasets. There may be some disagreement between individual datasets, especially towards the start of the record in the 19th Century, but the trends are all unequivocally the same.

Figure 2. Comparison of spatially gridded minimum temperatures for U.S. Historical Climatology Network (USHCN) data adjusted for time-of-day (TOB) only, and selected for rural or urban neighborhoods after homogenization to remove biases. (Hausfather et al. 2013)

Finally, temperatures measured on land are only one part of understanding the climate. We track many indicators of climate change to get the big picture. All indicators point to the same conclusion: the global temperature is increasing.

See also

Understanding adjustments to temperature data, Zeke Hausfather

Explainer: How data adjustments affect global temperature records, Zeke Hausfather

Time-of-observation Bias, John Hartz

Check original data

All the Berkeley Earth data and analyses are available online at http://berkeleyearth.org/data/.

Plot your own temperature trends with Kevin's calculator.

Or plot the differences with rural, urban, or selected regions with another calculator by Kevin.

NASA GISS Surface Temperature Analysis (GISSTEMP) describes how NASA handles the urban heat effect and links to current data.

NOAA Global Historical Climatology Network (GHCN) Daily. GHCN-Daily contains records from over 100,000 stations in 180 countries and territories.

Last updated on 27 May 2023 by John Mason.

Arguments


Deniers use the argument that the data are fudged to cast doubt on the rising temperatures. This article should show Zeke Hausfather's work, which shows that the raw data have MORE warming: because of adjustments over the oceans, the estimates of global warming are LESS than the raw data.

Hi, I am new to this site. I originally thought it was a deniers' site, but I recently came across you when searching for Milankovitch, and you had a pretty positive, comprehensive description of it.

My question is about temperature records, but first a bit about me. I live in AUS at -29.7 152.5 in my own 2 square km forest in the mountains. Not bragging here, but rather inspiring others to do the same and put their money where their mouth (blog) is, cause around here 1 square km of beautiful Aussie forest goes for about 50K USD. Between us, we could own half the Amazon and stop them from taking it down. I live in an area called Northern Rivers, cause water is scarce in AUS. Many big rivers here, some 1km wide, good for water, but they do flood. I have my own lake, so plenty of water all year round. I moved here from Melbourne after the big melt in the Arctic in 2007. Always been a GW "enthusiast" and this area would provide a better future, than living in a big City. Any GW tipping point will seriously affect big cities, causing food shortages and riots, a bit like Mad Max. Picked this area cause it is just South of the Gold Coast and the Rain Forests. With GW all that will be slowly moving South, I hope. OK, now for my query.

When I first moved here, most days and nights the sky was clear and the temperatures ranged 0-35 in winter and 15 to 45 in summer. But for the last few years, there has been much more cloud cover. I suppose if you warm water, you get more steam. Cloud cover during the day reduces the temperature and clouds at night act as a blanket. But a cloudy day may have a few hours of clear sky, immediately increasing the temperature.

The weather bureau in AUS (bom.gov.au) only keeps the MIN/MAX/AVR for each day, recorded at 9am. Surely that will in no way capture the changes I am seeing here. I have my own $150 weather station, but it has only been operating since 2012. Surely, since COP21, the whole world agrees that the climate is warming, so why do we keep such basic, sloppy temperature records? At the current level of cheap technology, the cost of one COP21 lunch would pay for upgrading all our weather stations. Put them in parallel, so MIN/MAX matches, and add a gadget to measure cloud cover (luminosity). So my query is really about the effects of cloud on our climate.

Slightly off-topic, but with all this talk of "hiatus", are the Milankovitch cycles now slowly eroding the warming, cause their effect is well overdue and if you turn down the heater, you will need more blankets.

Thanks for listening. (moderator prune as you see fit, but with some feedback please)

"Slightly off-topic, but with all this talk of "hiatus", are the Milankovitch cycles now slowly eroding the warming, cause their effect is well overdue and if you turn down the heater, you will need more blankets."

Milankovitch questions belong with Milankovitch, but the short answer is that the rate of change of Milankovitch forcing is 2 orders of magnitude less than the rate of change in GHG forcing, i.e. completely overwhelmed. The signature is different as well. The last time we had 400ppm of CO2, we didn't have ice ages.

Theo, I'd start here for a primer on clouds and climate change.

Please show Karl's Science paper, which puts the deniers' argument to bed.

1. The corrections to the data from the last 70 years are small and hardly noticeable on his plots.

2. Overall, the raw data shows MORE warming than the corrected data. That is correct: the corrections act to lower the warming.

Here is a link to the Karl paper: http://www.sciencemag.org/content/348/6242/1469.full

See fig.2

Empirical demonstration that adjusted historic temperature station measurements are correct, because they match the pristine reference network: Evaluating the Impact of Historical Climate Network Homogenization Using the Climate Reference Network, 2016, Hausfather, Cowtan, Menne, and Williams.

Hi y'all. I'm arguing with this guy who is claiming that AGW is junk-science. Here's one of his points:

Now, I've told him that AGW is historical science, not experimental science, so we aren't talking about "trials", and that temperature averages have uncertainties which means that they are ranges; further that we have even more uncertainties with temperature proxies. I've also said that, although neither he nor I knows how the statistics work to come up with the probability, to state that therefore it is meaningless is just an argument from ignorance.

However, he persists. In my annoyance, I'm reaching out to ask: can anyone actually explain this in sufficient detail or point me to a clear source on it, to show him how dumb his argument is?

The first thing to note is that this is based on comparing one measurement with another. No measurement is perfect, especially the measurement of the NH atmosphere over 1400 years. This results in error bars on the measurement which, depending on methodology, can be expressed in probability terms, i.e. considering the spread of all sources of error in estimating temperature, we would say the temperature at time x is Tx and can estimate 66% or 95% error bars on that measurement. The IPCC claim is that the error range on the modern 30-year temperature average is higher than the 66% error limit on past temperature (but not higher than the 95% limit).

Determining error bars is not a simple process. You would need to look in detail at the source papers to determine how it was done. If you look up Monte Carlo methods for estimating error propagation, you will see one way of doing it.
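Here is a minimal sketch of the Monte Carlo idea. Draw many random values for each uncertain input, push every draw through the calculation, and read the error bars off the spread of the results. The calibration formula T = slope × proxy + intercept and all the numbers are hypothetical and serve only to illustrate the method. Real temperature reconstructions involve many more sources of error.

```python
import random


def monte_carlo_interval(n_samples=100_000, seed=1):
    """Propagate input uncertainties through T = slope * proxy + intercept.

    All means and standard deviations are hypothetical, chosen only to
    illustrate the method.
    """
    rng = random.Random(seed)
    samples = []
    for _ in range(n_samples):
        proxy = rng.gauss(2.0, 0.1)        # uncertain proxy value
        slope = rng.gauss(0.5, 0.05)       # uncertain calibration slope
        intercept = rng.gauss(-0.8, 0.1)   # uncertain calibration intercept
        samples.append(slope * proxy + intercept)
    samples.sort()

    def percentile(p):
        return samples[int(p * (len(samples) - 1))]

    central = percentile(0.5)
    interval_66 = (percentile(0.17), percentile(0.83))
    interval_95 = (percentile(0.025), percentile(0.975))
    return central, interval_66, interval_95


central, ci66, ci95 = monte_carlo_interval()
print(f"central {central:.2f}, 66% {ci66[0]:.2f} to {ci66[1]:.2f}, "
      f"95% {ci95[0]:.2f} to {ci95[1]:.2f}")
```

The 66% range always sits inside the 95% range. A value can therefore lie outside one and inside the other, which is the situation described for the IPCC statement above.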

I suspect you are arguing against wilful ignorance however. Good luck on that.

dvaytw @358.

Your 'guy' is quoting from AR5 SPM which does refer you to Sections 2.4 & 5.3 of the full report. His quote also sits cheek-by-jowl with Figure SPM-01a, the lower panel of which does show the confidence intervals for decadal measurements. Figure 2-19 also shows these for HadCRUT & also the differences between temperature series.

However, the main reason for there being doubt as to the interval 1983-2012 being the hottest 30 years in 1,400 years is dealt with in 5.3.5.1 Recent Warming in the Context of New Reconstructions on pages 410-11 of IPCC AR5 Chapter 5, which states:

Thanks guys. Scaddenp, I looked up the Monte Carlo method, but that stuff is way over my head. The main issue for the guy you suggested is being wilfully ignorant (I agree) is that he doesn't see how a single fact about the past can be expressed as a probability. He quoted the definition of probability to me:

and asked how such an expression could fit the definition. I suggested to him that here, "probability" means something like, 'given similar amount and kinds of evidence plus measurement uncertainty, if we ran the calculation multiple times, what percentage of the time would the calculation be correct'. Is this basically right?
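That suggestion is close to the frequentist idea of an interval's coverage. If the same procedure were repeated many times, a 95% interval would contain the true value about 95% of the time. The simulation below is a sketch under simple assumptions: one hypothetical true value and Gaussian measurement error of known size. It makes no claim about how the IPCC's specific probabilities were derived, and Bayesian interpretations of probability frame the question differently.

```python
import random


def coverage(true_value=14.0, sigma=0.2, z=1.96, n_trials=20_000, seed=2):
    """Fraction of repeated experiments whose interval contains the true value.

    Each trial makes one noisy estimate (Gaussian error with known sigma) and
    builds the interval estimate +/- z * sigma. All values are hypothetical.
    """
    rng = random.Random(seed)
    hits = 0
    for _ in range(n_trials):
        estimate = rng.gauss(true_value, sigma)
        if estimate - z * sigma <= true_value <= estimate + z * sigma:
            hits += 1
    return hits / n_trials


print(f"z = 1.96:  {coverage():.3f}")
print(f"z = 0.954: {coverage(z=0.954):.3f}")
```

With these settings, the two lines should print values near 0.95 and 0.66, the two confidence levels discussed above.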

I have a question I would like to ask; I'm not sure if this is the best thread to ask it, for which I apologise, but it's something I've always wondered about and would really appreciate an answer to. I know that the HadCRU surface temperature data are biased low because they do not have coverage in the Arctic. My question is, why is this the case? Why has HadCRU never used data from the Arctic stations when the greater coverage would clearly lead to more accurate estimates?

Darkmath,

Responding to Darkmath here. Tom will give you better answers than I will.

It appears to me that you agree with me that your original post here where you apparently have a graph of the completely unadjusted data from the USA is incorrect. The data require adjusting for time of day, number of stations etc. We can agree to ignore your original graph since it has not been cleaned up at all.

I will try to respond to your new claim that you do not like the NASA/NOAA adjustments. As Tom Curtis pointed out here, BEST (funded by the Koch brothers) used your preferred method of cleaning up the data and got the same result as NASA/NOAA. I do not know why NASA/NOAA decided on their corrections, but I think it is historical. When they were making these adjustments in the 1980s, computer time was extremely limited. They corrected for one issue at a time. The BEST way is interesting, but that does not mean that the BEST system would have worked with the computers available in 1980. In any case, since it has been done both ways, it has been shown that both ways work well.

It strikes me that you have made strong claims, supported with data from denier sites. Perhaps if you tried to ask questions without making the strong claims, you would sound more reasonable. Many people here will respond to fair questions; strong claims tend to get much stronger responses.

My experience is that the denier sites you got your data from often massage the data (for example by using data that is completely uncorrected to falsely suggest it has not warmed) to support false claims. Try to keep an open mind when you look at the data.

Darkmath

Just to clarify one point, regarding time of observation. Until the introduction of electronic measurement devices, the main method for measuring surface temperatures was maximum/minimum thermometers. These thermometers measure the maximum and minimum temperatures recorded since the last time the thermometers were reset. Typically they were read every 24 hours. Importantly, they don't tell you when the maximum and minimum were reached, just what the values were.

If one reads a M/M thermometer in the evening, presumably you get the maximum and minimum temperatures for that day. However they can introduce a bias. Here is an example.

Imagine on Day 1 the maximum at 3:00 pm was 35 C and the temperature drops to 32 C at 6:00 pm. So the thermometers are read at 6:00 pm and the recorded maximum is 35 C. Then they are reset. The next day is milder. The maximum is only 28 C at 3:00 pm, dropping to 25 C at 6:00 pm when the thermometers are read again. We would expect them to read 28 C; that was the maximum on day 2.

However, the thermometer actually reports 32 C!!! This was the temperature at 6:00 pm the day before, just moments after the thermometer was reset. We have double counted day 1 and added a spurious 4 C. This situation doesn't occur when a cooler day is followed by a warmer day. And an analogous problem can occur with the minimum thermometer: if we take readings in the early morning, colder days get double counted.
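The double counting in this example can be reproduced with a small simulation. It is a sketch under stated assumptions. The hourly temperatures are linearly interpolated between hypothetical key points chosen to match the numbers above (35 C and 32 C on day 1, 28 C and 25 C on day 2). An initial reset at midnight on day 1 is also hypothetical. The maximum thermometer is assumed to register the temperature at the instant it is reset. The function names are illustrative only.

```python
# Hypothetical key points (hours since midnight on day 1, temperature in C),
# chosen to reproduce the example above.
KEY_POINTS = [(0, 22.0), (6, 20.0), (15, 35.0), (18, 32.0),
              (30, 18.0), (39, 28.0), (42, 25.0)]


def hourly_series(points):
    """Linearly interpolate the key points onto a whole-hour grid."""
    temps = {}
    for (h0, t0), (h1, t1) in zip(points, points[1:]):
        for h in range(h0, h1 + 1):
            temps[h] = t0 + (t1 - t0) * (h - h0) / (h1 - h0)
    return temps


def read_max_thermometer(temps, reset_hour, read_hour):
    """Highest temperature since the last reset, including the reset instant."""
    return max(temps[h] for h in range(reset_hour, read_hour + 1))


temps = hourly_series(KEY_POINTS)

day1_reading = read_max_thermometer(temps, 0, 18)     # read and reset at 6:00 pm, day 1
day2_reading = read_max_thermometer(temps, 18, 42)    # read at 6:00 pm, day 2
day2_true_max = max(temps[h] for h in range(24, 43))  # day 2, midnight to 6:00 pm

print("day 1 reading:", day1_reading)
print("day 2 reading:", day2_reading, "vs true day 2 maximum:", day2_true_max)
print("spurious warm bias:", day2_reading - day2_true_max)
```

The day 2 reading is the 32 C left over from the moment of the day 1 reset, not the 28 C peak that day 2 actually reached.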

The problem is not with when the thermometer was read, the problem is that we aren't sure when the temperature was read because of the time lag between the thermometer reading the temperature, and a human reading the thermometer. This is particularly an issue in the US temperature record and is referred to as the Time of Observation Bias. The 'time of observation' referred to isn't the time of measuring the temperature, it is the later time of observing the thermometer.

The real issue isn't the presence of a bias. Since all the datasets are of temperature anomalies, differences from baseline averages, any bias in readings tends to cancel out when you subtract the baseline from individual readings;

(Reading + Bias A) - (Baseline + Bias A) = (Reading - Baseline).

The issue is when biases change over time. Then the subtraction doesn't remove the time varying bias:

(Reading + Bias B) - (Baseline + Bias A) = (Reading - Baseline) + (Bias B - Bias A)

The problem in the US record is that the time of day when the M/M thermometers were read and reset changed over the 20th century. From mainly evening readings (with a warm bias) early in the century to mainly morning readings (with a cool bias) later. So this introduced a bias change that made the early 20th century look warmer in the US, and the later 20th century cooler.
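The anomaly arithmetic above, and the effect of switching from evening to morning readings, can be checked with a toy calculation. It assumes a hypothetical station whose true temperature never changes and a 1951-1980 baseline. The bias values are made up: +0.5 C for evening readings before 1950 and -0.3 C for morning readings afterwards. A fixed bias disappears from the anomalies. The shifting bias produces a spurious cooling trend even though the true temperature is flat.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def anomalies(years, observed, base_start=1951, base_end=1980):
    """Subtract the mean over the baseline period from every reading."""
    base = [t for y, t in zip(years, observed) if base_start <= y <= base_end]
    baseline = sum(base) / len(base)
    return [t - baseline for t in observed]


years = list(range(1900, 2000))
true_temp = [12.0] * len(years)  # hypothetical station with no real trend

# Case 1: the same bias (Bias A) in every reading cancels in the anomalies.
fixed = [t + 0.5 for t in true_temp]

# Case 2: warm-biased evening readings give way to cool-biased morning
# readings in 1950 (hypothetical bias values).
shifting = [t + (0.5 if y < 1950 else -0.3) for y, t in zip(years, true_temp)]

for label, series in [("fixed bias", fixed), ("shifting bias", shifting)]:
    trend = ols_slope(years, anomalies(years, series)) * 10
    print(f"{label}: {trend:+.3f} C per decade")
```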

Additionally, like any instrument, the thermometers will also have a bias in how well they actually measure temperature. But this isn't a bias that is affected by the time the reading is taken, so as a fixed bias it tends to cancel out.

So these instruments introduce biases into the temperature record that we can't simply remove by the sort of approach you suggest. In addition, since most of the temperature record is trying to measure the daily maximum and minimum and then averages them, your suggested approach, even if viable, would simply introduce a different bias - what would the average of the readings from your method actually mean?

In order to create a long term record of temperature anomalies, the key issue is how to relate different time periods together and different measurement techniques to produce a consistent historical record. Your suggested approach would produce something like 'during this period we measured an average of one set of temperatures of some sort, A, and during a later period we measured an average of a different set of temperatures of some sort, B. How do A and B relate to each other? Dunno'.

More recent electronic instruments now typically measure every hour so they aren't prone to this time of observation bias. They can have other biases, and we also then need to compensate for the difference in bias between the M/M thermometers, and the electronic instruments.

Thanks Michael for referencing the BEST data; they seem to be employing the simpler technique where any change in temperature recording is treated as an entirely new weather station. I'll look into it further.

Regarding the change in adjustments/estimates over time being due to computing power, that seems like a stretch to me. Given Moore's Law, in 30 years they could look back at the techniques employed today and say the same thing. Who knows what new insights quantum computers will yield when they come online.

Actually my real question is about the BEST data matching up with the results NASA/NOAA got using adjustments/estimates. Which iteration does that match up with: Hansen 1981, GISS 2001 or GISS 2016?

[PS] The simplest way to find out what the adjustment process was, and why it changed, is to read the papers associated with each change. GISS provides these conveniently here http://data.giss.nasa.gov/gistemp/history/ (The same couldn't be said for UAH data, which denier sites accept uncritically.)

(Also fixed graphic size. See the HTML tips in the comment policy for how to do this yourself)

[TD] You have yet to acknowledge Tom Curtis's explanation of the BEST data. Indeed, you seem in general to not be reading many of the thorough replies to you, instead Gish Galloping off to new topics when faced with concrete evidence contrary to your assertions. Please try sticking to one narrow topic per comment, actually engaging with the people responding to you, before jumping to another topic.

Thanks Glenn. Was the Min/Max thermometer used for all U.S. weather stations early in the 20th century? What percent used Min/Max? Where could I find that data?

One other thing regarding the technique I suggested where a change in measurement means the same weather station is treated as an entirely new one. That's the technique the BEST data is using and they got the same results as NASA/NOAA did with adjustments. Does that address the problems you raise with the "simpler" non-adjustment technique?

Darkmath:

I don't think it has been linked to yet, but there is also a post here at Skeptical Science titled "Of Averages and Anomalies". It is in several parts. There are links at the end of each pointing to the next post. Glenn Tamblyn is the author. Read his posts and comments carefully - they will tell you a lot.

[PS] Getting too close to the point of dog-piling here. Before anyone else responds to Darkmath, please consider carefully whether the existing responders (Tom, Michael, Glenn, Bob) have it in hand.

Darkmath

I don't know for certain, but I expect so. There was no other technology available that was cheap enough to be used in a lot of remote and often unpowered stations. Otherwise you need somebody to go out and read the thermometer many times a day to try to capture the max & min.

The US had what was known as the Cooperative Reference network. Volunteer (unpaid) amateur weather reporters, often farmers and people like that who would have a simple weather station set up on their property, take daily readings and send them off to the US weather service (or its predecessors).

Although there isn't an extensive scientific literature on the potential problems TObs can cause when using M/M thermometers, there are enough papers to show that it was known, even before consideration of climate change arose. People like the US Dept of Agriculture did/do produce local climatic data tables for farmers (number of degree days etc.) for use in determining growing seasons, planting times etc. TObs bias was identified as a possible inaccuracy in those tables. The earliest paper I have heard of discussing the possible impact of M/M thermometers on the temperature record was published in England in 1890.

As to BEST vs NOAA, yes, the BEST method does address this as well. Essentially the BEST method uses rawer (is that a real word?) data but applies statistical methods to detect any bias changes in data sets and correct for them. So NOAA target a specific bias change with a specific adjustment, while BEST have a general toolbox for finding bias changes generally.
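One way to show what statistical detection of bias changes can mean is a textbook single-breakpoint search. It is not Berkeley Earth's actual algorithm, which is considerably more elaborate. The sketch assumes a hypothetical station-minus-reference difference series, where the reference stands in for an average of neighbouring stations. Regional weather largely cancels in that difference, so a sudden step suggests a change at the station rather than a real climate signal. Once the break is found, the record can be cut there and the two pieces treated as separate stations, which is the approach discussed earlier in this thread.

```python
def segment_sse(values):
    """Sum of squared deviations from the segment mean."""
    mean = sum(values) / len(values)
    return sum((v - mean) ** 2 for v in values)


def best_single_breakpoint(diffs, min_segment=5):
    """Index that best splits `diffs` into two segments with constant means."""
    best_index, best_cost = None, float("inf")
    for k in range(min_segment, len(diffs) - min_segment + 1):
        cost = segment_sse(diffs[:k]) + segment_sse(diffs[k:])
        if cost < best_cost:
            best_index, best_cost = k, cost
    return best_index


# Hypothetical station-minus-reference differences (C): small repeating noise
# plus a -0.5 C step at index 30, e.g. from a new instrument or observing time.
noise = [0.05 * ((2 * i) % 5 - 2) for i in range(60)]
diffs = [n + (0.0 if i < 30 else -0.5) for i, n in enumerate(noise)]

k = best_single_breakpoint(diffs)
station_pieces = (diffs[:k], diffs[k:])  # treat as two separate "stations"
print("break detected at index", k)
```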

That both approaches converge on the same result is a good indicator to me that they are on the right track, and that there is no 'dubious intent' as some folks think.

DarkMath @365, as indicated elsewhere, the graph you show exaggerates the apparent difference between versions of the temperature series by not using a common baseline. A better way to show the respective differences is to use a common baseline, and to show the calculated differences. Further, as on this thread the discussion is on global temperatures, there is no basis to use Meteorological Station only data, as you appear to have done. Hence the appropriate comparison is this one, from GISTEMP:
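Putting two versions of a series on a common baseline is simple arithmetic. The sketch below assumes two hypothetical versions of the same record, each originally expressed on a different baseline, and uses 1951-1980 as the common reference period (an example choice). Rebaselining removes the apparent offset and leaves only the genuine difference in shape between the versions.

```python
def rebaseline(years, values, start=1951, end=1980):
    """Express a series as anomalies from its own mean over start..end (inclusive)."""
    ref = [v for y, v in zip(years, values) if start <= y <= end]
    if not ref:
        raise ValueError("no data in the baseline period")
    offset = sum(ref) / len(ref)
    return [v - offset for v in values]


# Two hypothetical versions of the same record, each on a different baseline.
years = list(range(1950, 2016))
version_old = [-0.20 + 0.012 * (y - 1950) for y in years]
version_new = [0.10 + 0.013 * (y - 1950) for y in years]

old_common = rebaseline(years, version_old)
new_common = rebaseline(years, version_new)

# After rebaselining, only the genuine difference between versions remains.
differences = [n - o for o, n in zip(old_common, new_common)]
print(f"raw gap in 2015: {version_new[-1] - version_old[-1]:.3f} C, "
      f"gap on a common baseline: {differences[-1]:.3f} C")
```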

Note that the Hansen (1981) and Hansen (1987) temperature series did not include ocean data, and so do not include it in this comparison either.

For comparison, here are the current GISTEMP LOTI and BEST LOTI using monthly values, with the difference (GISS-BEST) also shown:

On first appearance, all GISTEMP variants prior to 2016 are reasonable approximations of the BEST values. That may be misleading, as the major change between GISTEMP 2013 and 2016 is the change in SST data from ERSST v3b to ERSST v4. Berkeley Earth uses HadSST (version unspecified), and it is likely that the difference lies in the fact that Berkeley Earth uses an SST database that does not account for recent detailed knowledge about the proportion of measurement types (wood or canvas bucket, or intake manifold) used at different times in the past.

Regardless of that, the difference in values lies well within error, and the difference in trend from 1966 onwards amounts to just 0.01 C per decade, or about 6% of the total trend (again well within error). Ergo, any argument on climate that depends on GISS being in error and BEST being accurate (or vice versa) is not supported by the evidence. The same is also true of the NOAA and HadCRUT4 timeseries.
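The trend comparison in the previous paragraph boils down to fitting a least-squares line to each monthly series and comparing the slopes. The sketch below uses synthetic monthly anomalies with made-up trends of 0.17 and 0.16 C per decade over 1966-2015. These are NOT GISTEMP or BEST data. The example only shows how a difference of 0.01 C per decade translates into a percentage of the total trend.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys against xs."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def decadal_trend(times, values):
    """Least-squares trend in C per decade; `times` are decimal years."""
    return 10 * ols_slope(times, values)


# Synthetic monthly anomalies for 1966-2015 (600 months), not real data.
times = [1966 + (m + 0.5) / 12 for m in range(600)]
series_a = [0.017 * (t - 1966) for t in times]
series_b = [0.016 * (t - 1966) for t in times]

trend_a = decadal_trend(times, series_a)
trend_b = decadal_trend(times, series_b)
diff = trend_a - trend_b
print(f"A {trend_a:.3f}, B {trend_b:.3f}, difference {diff:.3f} C/decade "
      f"({100 * diff / trend_a:.0f}% of A's trend)")
```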

I think that Darkmath is working through the data. I think Glenn and Tom will provide better answers to his questions than I will. I think Darkmath is having difficulty responding to all the answers they get. That is dogpiling and is against the comments policy. I would like to withdraw to simplify the discussion.

As a point of interest, earlier this year Chris Burt at Weather Underground had a picture on his blog of the min/max thermometer at Death Valley, California, showing the hottest temperature ever reliably measured worldwide. (copy of photo). This thermometer is still in use. Apparently the enclosure is only opened twice a day (to read the thermometer). Min/max thermometers are still in use, even at important weather stations.

Thanks for all the responses everyone, they're very helpful. Michael is correct, I'm still working through the data. Actually I haven't even started as I was travelling today for work and only got back just now.

[RH] Just a quick reminder: When you post images you need to limit the width to 500px. That can be accomplished on the second tab of the image insertion tool. Thx!

I will also mainly let Tom and Glenn respond to DarkMath, only interjecting when I can add a little bit of information.

For the moment, I will comment on Glenn's description of the US cooperative volunteer network and comparisons between manual and automatic measurements.

I am more familiar with the Canadian networks, which have a similar history to the US one that Glenn describes. A long time ago, in a galaxy far, far away, all measurements were manual. That isn't to say that the only temperature data available were max/min, though: human observers - especially at aviation stations (read "airports") - often took much more detailed observations several times per day.

A primary focus has been what are called "synoptic observations", suitable for weather forecasting. There are four main times each day for synoptic observations - 00, 06, 12, and 18 UTC. There are also "intermediate" synoptic times at (you guessed it!) 03, 09, 15, and 21 UTC. The World Meteorological Organization tries to coordinate such readings and encourages nations to maintain specific networks of stations to support both synoptic and climatological measurements. See this WMO web page. Readings typically include temperature, humidity, precipitation, wind, perhaps snow, and sky conditions.

In Canada, the manual readings can be from their volunteer Cooperative Climate Network (CCN), where readings are typically once or twice per day and are usually restricted to temperature and precipitation, or from other manual systems that form part of the synoptic network. The observation manuals for each network can be found on this web page (MANOBS for synoptic, MANCLIM for CCN). Although the CCN is "volunteer" in terms of readings, the equipment and maintenance are provided to standards set by the Meteorological Service of Canada (MSC). MSC also brings in data from many partner stations, too - of varying sophistication.

Currently, many locations that used to depend on manual readings are now automated. Although automatic systems can read much more frequently, efforts are made to preserve functionality similar to the old manual systems. Archived readings are an average over the last minute or two of the hour; daily "averages" in the archives are still (max+min)/2. Canada also standardizes all time zones (usually) to a "climatological day" of 06-06 UTC. (Canada has 6 time zones, spanning 4.5 hours.) It is well understood that data processing methods can affect climatological analysis, and controlling these system changes is important.
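A short sketch can illustrate the (max+min)/2 convention and the 06-06 UTC climatological day. It assumes hourly readings stored as (UTC timestamp, temperature) pairs. It also assigns a reading taken exactly at 06 UTC to the day that starts then. Both choices, the function names and the temperatures are hypothetical and do not represent MSC's actual processing. With an asymmetric daily cycle, (max+min)/2 and the mean of the hourly readings can differ noticeably. That is one concrete way in which processing methods affect climatological analysis.

```python
from collections import defaultdict
from datetime import datetime, timedelta, timezone


def climatological_day(ts):
    """Label a UTC timestamp with the 06-06 UTC day it falls in (by start date)."""
    return (ts - timedelta(hours=6)).date()


def daily_summaries(readings):
    """Summarise (UTC datetime, temperature C) pairs per climatological day."""
    by_day = defaultdict(list)
    for ts, temp in readings:
        by_day[climatological_day(ts)].append(temp)
    summaries = {}
    for day, temps in sorted(by_day.items()):
        tmax, tmin = max(temps), min(temps)
        summaries[day] = {
            "max": tmax,
            "min": tmin,
            "max_min_mean": (tmax + tmin) / 2,          # archived "daily average"
            "hourly_mean": sum(temps) / len(temps),     # mean of all hourly readings
        }
    return summaries


# Hypothetical hourly temperatures starting at 06 UTC on 1 January 2023.
start = datetime(2023, 1, 1, 6, tzinfo=timezone.utc)
hourly_temps = [8, 7, 7, 6, 6, 6, 7, 9, 12, 15, 18, 19,
                18, 16, 14, 12, 11, 10, 10, 9, 9, 8, 8, 8]
readings = [(start + timedelta(hours=i), t) for i, t in enumerate(hourly_temps)]

for day, s in daily_summaries(readings).items():
    print(day, f"(max+min)/2 = {s['max_min_mean']:.2f} C,",
          f"hourly mean = {s['hourly_mean']:.2f} C")
```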

Tony Heller has created a bunch of charts like these above; they all show it was hottest in the 1930s. How do you explain that?